Exploration of the 3D World on the Internet Using Commodity Virtual Reality Devices

Abstract

1. Introduction

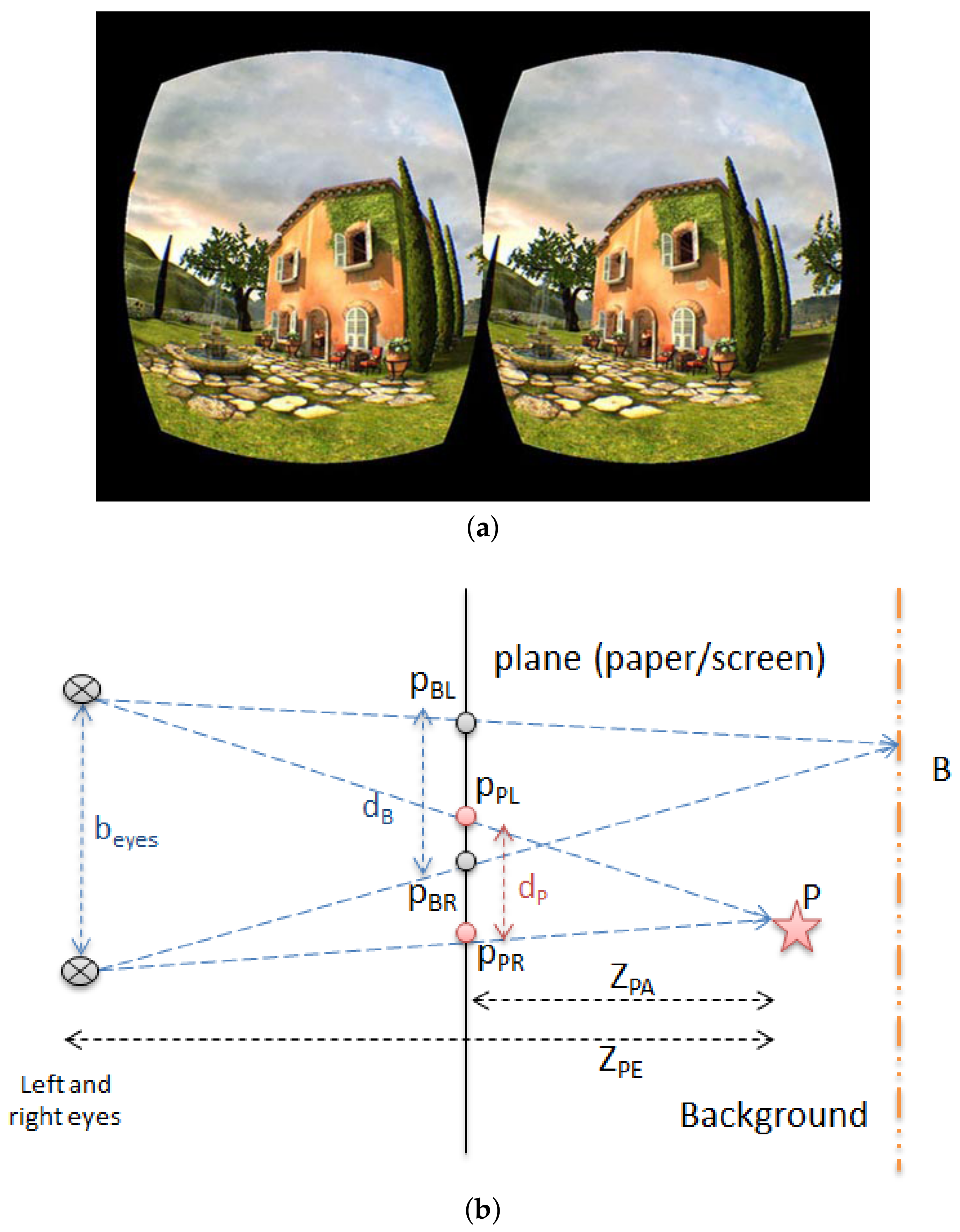

1.1. Binocular Problems with Traditional VR Kit

1.2. Limited Dataset in VR Applications

1.3. Current Goal of the Project

2. Background of Virtual Reality

2.1. VR Concept

2.2. Google Cardboard

2.3. Google Daydream

2.4. Samsung Gear VR

2.5. VR Sickness

2.6. Limited VR Contents

3. Smartphone VR Development Environment

3.1. Android IDE

3.2. Unity Engine

3.3. Unreal Engine

3.4. Unity Engine & Unreal Engine Comparison

4. Publicly Available 3D Related Datasets on the Internet

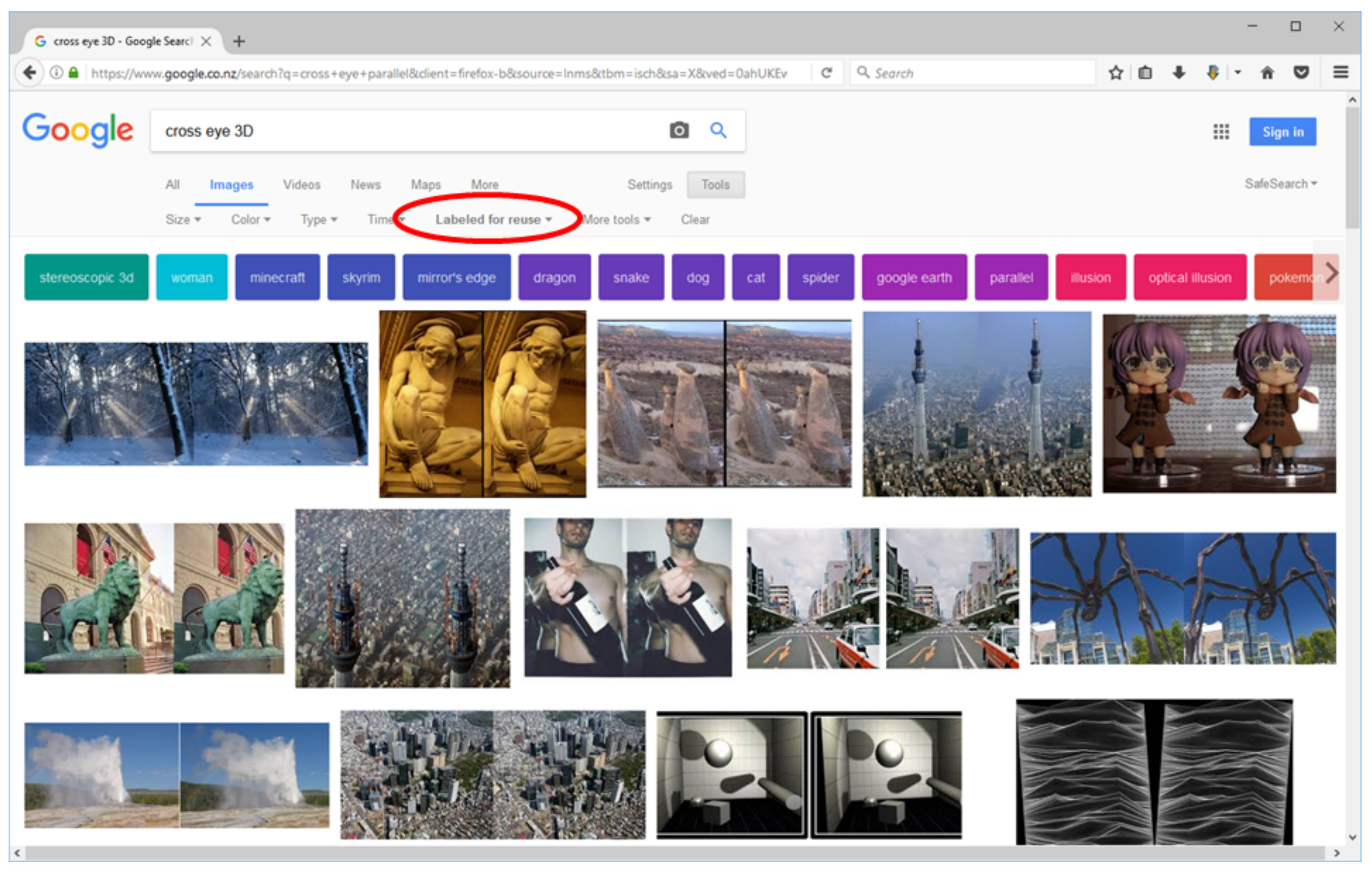

- Side by side combined stereo images (searching keyword: “side by side stereo 3D”, “cross eye 3D”, or “parallel eye 3D”).

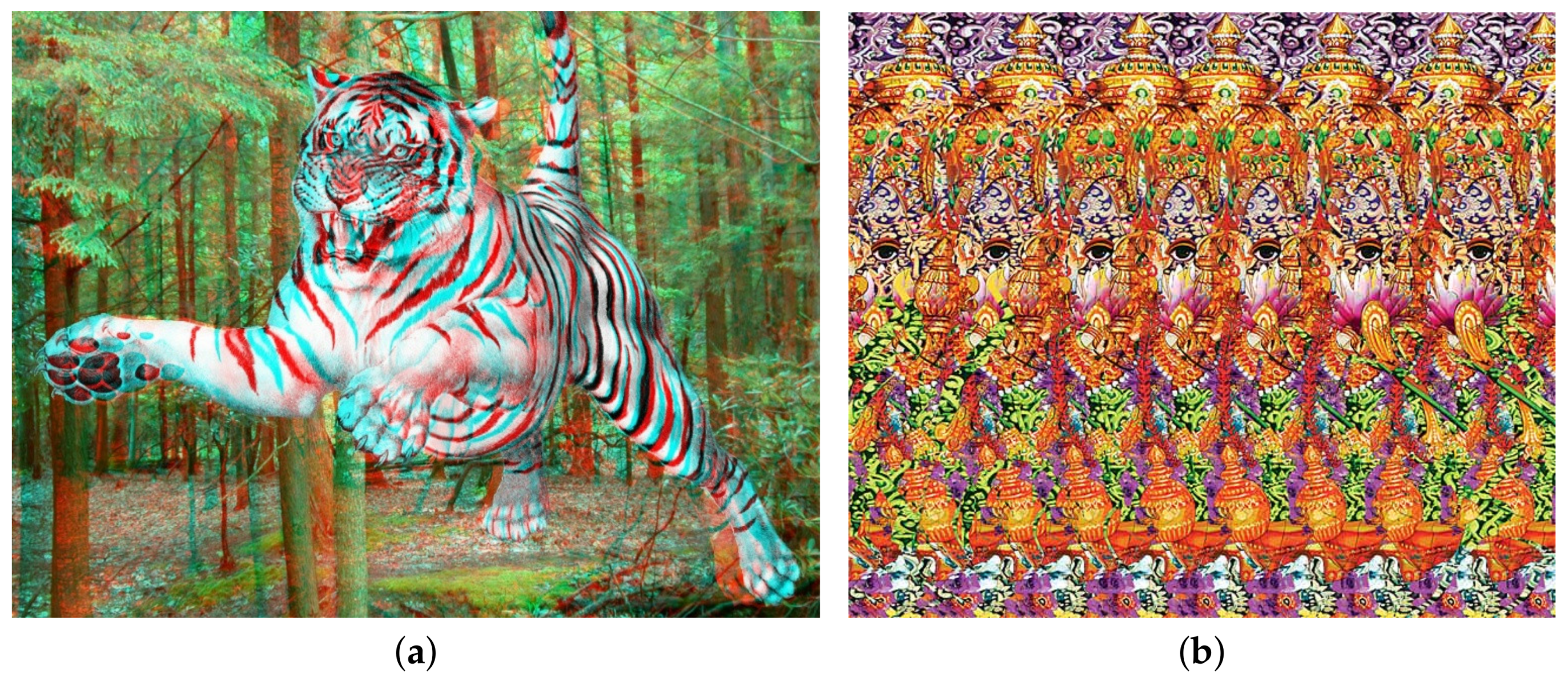

- Anaglyph images (searching keyword: “anaglyph 3D”, “red cyan 3D”, or “red blue 3D”).

- Stereogram images (searching keyword: “stereogram 3D”, “autostereogram 3D”, or “magic eye 3D”).

4.1. Side by Side Parallel- and Cross-Eyed Views

4.2. Anaglyph

4.3. Stereogram

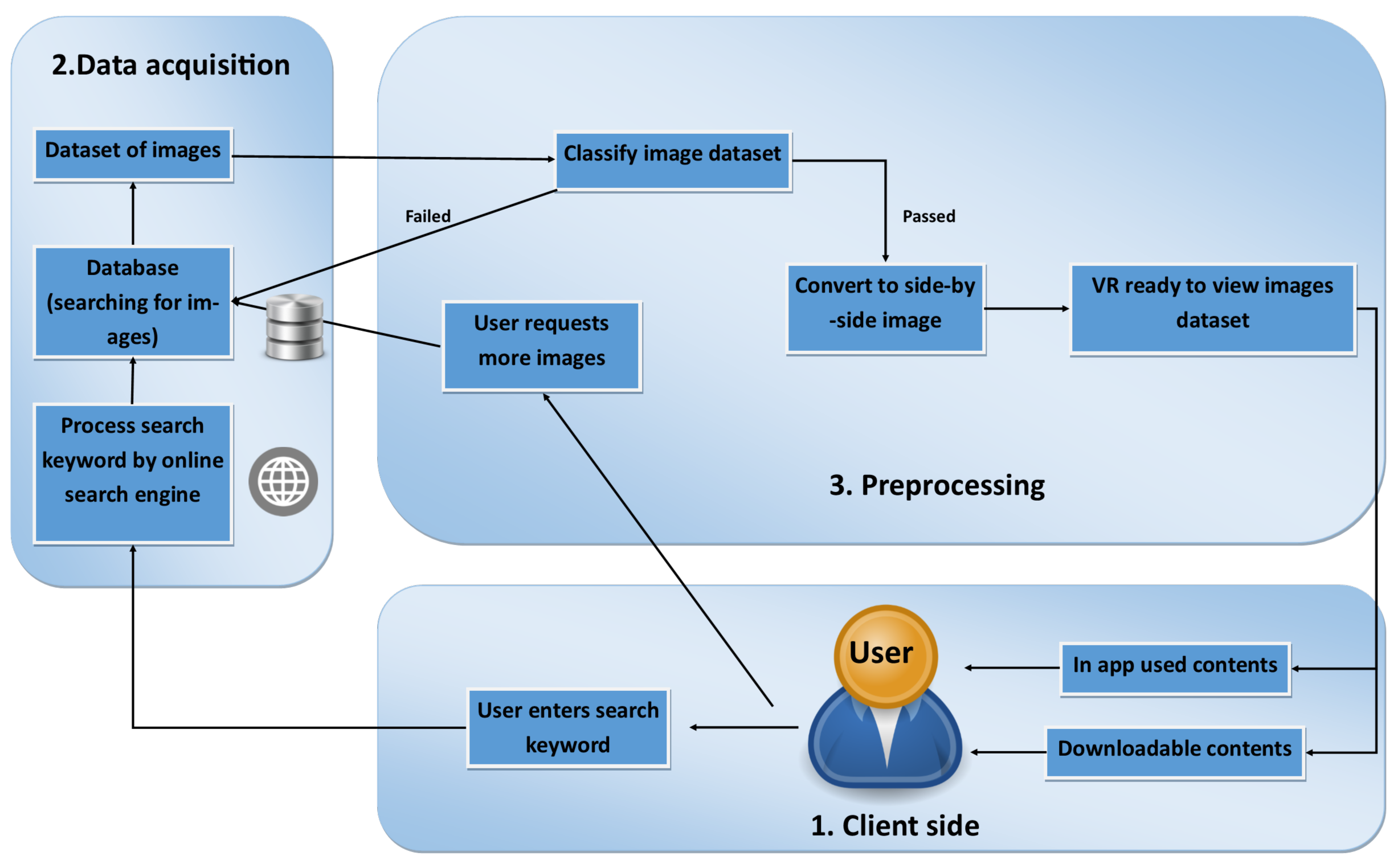

5. System Design and Implementation

5.1. Client Side Search Engine

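```html
<script type="text/javascript" src="https://www.google.com/jsapi"></script>
<script type="text/javascript">
  // google.load takes a module name and a version; the query (SEARCHING_TEXT)
  // is not passed here but to the search control once the module has loaded.
  google.load("search", "1");
</script>
```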

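```javascript
// Search phrases for the three stereo image formats of Section 4
const STEREO_PHRASES = [
  "side by side stereo 3D", "cross eye 3D", "parallel eye 3D",
  "anaglyph 3D", "red cyan 3D", "red blue 3D",
  "stereogram 3D", "autostereogram 3D", "magic eye 3D",
];

// Quote each phrase, OR them into one group, then AND the group with the
// user's EXTRA_KEYWORDS (if any) to form SEARCHING_TEXT
function buildSearchingText(extraKeywords) {
  const group = "(" + STEREO_PHRASES.map((p) => `"${p}"`).join(" OR ") + ")";
  return extraKeywords ? `${group} AND "${extraKeywords}"` : group;
}
```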

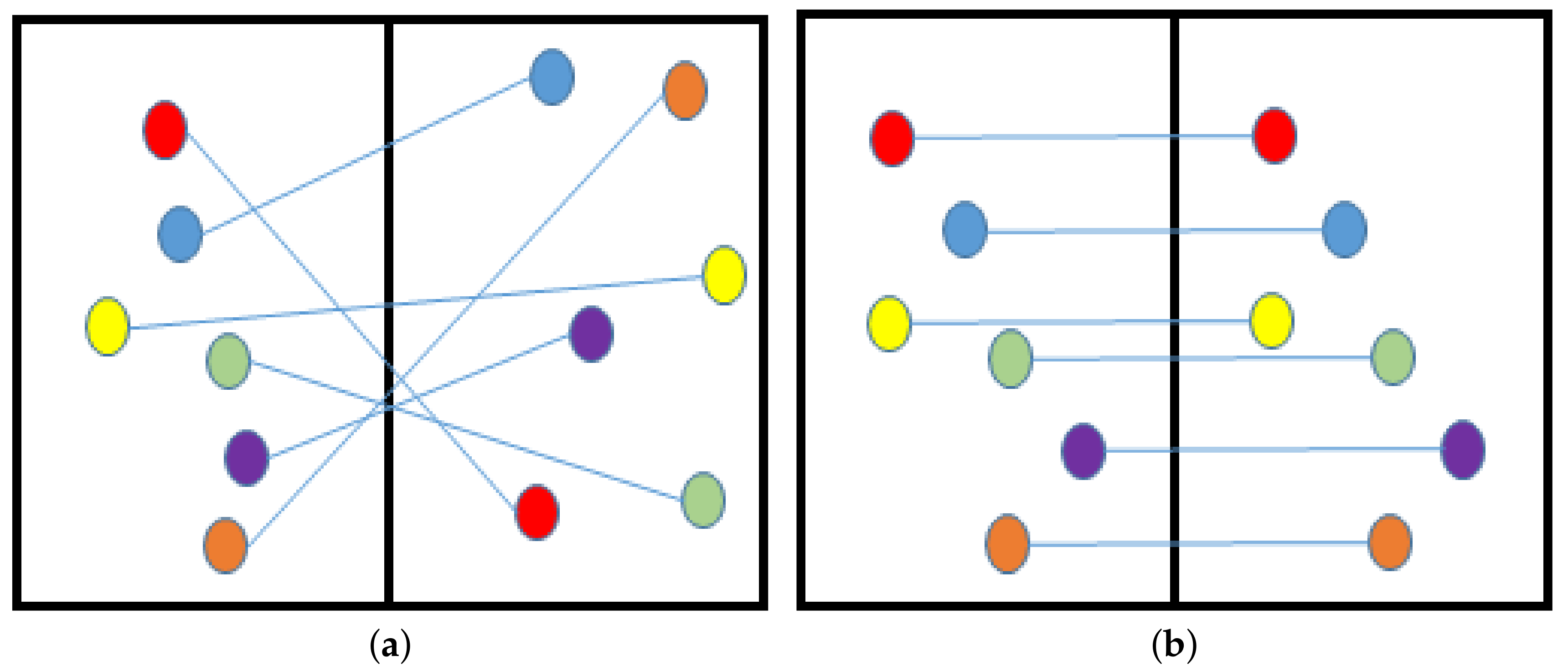

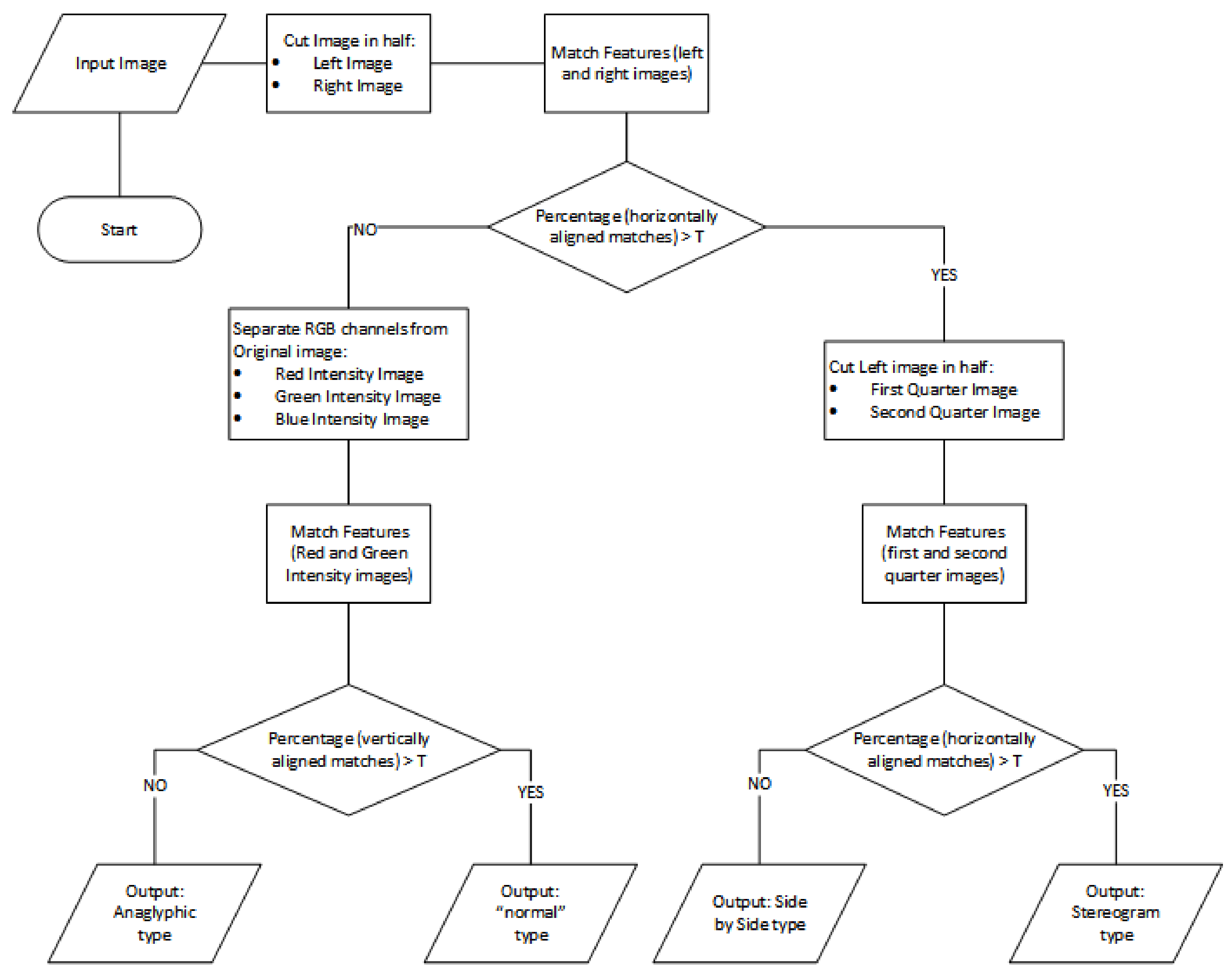

5.2. Data Acquisition and Image Type Classification

- side-by-side photos (type SBS)

- anaglyphs (type ANAG)

- stereograms (type STE)

- “normal” (type NOR)

5.2.1. KLT, SIFT, and SURF Feature Extraction for Correspondence Matching

- Laboratory-produced stereo images (Figure 7), for which the correspondences between images are known (Section 5.2.2).

- Real-life near stereo images (Figure 8), for which ground-truth correspondences are not known (Section 5.2.3).
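For the laboratory images, known correspondences make it possible to score each tracker directly. The sketch below shows one such score and is not necessarily the metric behind the result tables. It assumes a rectified pair with a ground-truth disparity map stored row-major in pixels, with 0 marking unknown disparity, so that a left-image point (x, y) should match (x - d, y) in the right image. The function name `correspondenceError` and the `{xl, yl, xr, yr}` match shape are hypothetical.

```javascript
// Hypothetical helper: score matched point pairs against a ground-truth
// disparity map (rectified pair, so the true match of (xl, yl) is (xl - d, yl)).
// disparity: row-major array of width * height values, 0 = unknown (assumption).
function correspondenceError(matches, disparity, width) {
  const errors = [];
  for (const m of matches) {
    const d = disparity[Math.round(m.yl) * width + Math.round(m.xl)];
    if (!(d > 0)) continue; // skip unknown or out-of-range disparities
    const ex = m.xl - d - m.xr; // horizontal error in pixels
    const ey = m.yl - m.yr; // vertical error (should be ~0 when rectified)
    errors.push(Math.hypot(ex, ey));
  }
  const n = errors.length;
  if (n === 0) return { count: 0, mean: NaN, std: NaN };
  const mean = errors.reduce((s, e) => s + e, 0) / n;
  const std = Math.sqrt(errors.reduce((s, e) => s + (e - mean) ** 2, 0) / n);
  return { count: n, mean, std };
}
```

The per-image mean and standard deviation can then be averaged over all image pairs of a dataset to compare KLT, SIFT, and SURF.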

5.2.2. Evaluation of KLT, SIFT, and SURF Trackers on the 2005 and 2006 Middlebury Stereo Datasets

5.2.3. Evaluation of KLT, SIFT, and SURF on Real-Life Images

5.2.4. KLT Features for Stereo Image Type Classification

- left/right half: the image is cut vertically into two halves, and features are matched between them.

- 1st/2nd quarters: the image is cut vertically into four quarters, and features are matched between the first two.

- red/green channels: the Red, Green, and Blue channels are separated from the image, and the Red and Green channels are used for feature matching.

- Small: Average distance is less than 50 pixels

- Large: Average distance is more than 200 pixels
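The three splitting strategies and the two distance labels above can be sketched as follows. This is a minimal illustration, assuming an RGBA pixel buffer in the row-major layout of a canvas `ImageData` object; the grayscale conversion with BT.601 luma weights, the use of Euclidean distance, and all helper names (`cropGray`, `channel`, `matchingPairs`, `averageDistance`, `distanceLabel`) are assumptions rather than the paper's code. The resulting image pairs would then be passed to a KLT tracker (for example OpenCV's `calcOpticalFlowPyrLK`), which is not reproduced here. The text does not say how distances between 50 and 200 pixels are labeled, so the sketch returns "Undetermined" for them.

```javascript
// Image model (assumption): { width, height, data } with data in RGBA,
// row-major order, 4 values per pixel, like a canvas ImageData object.

// Crop columns [x0, x0 + w) into a single-channel grayscale image.
function cropGray(img, x0, w) {
  const out = new Float32Array(w * img.height);
  for (let y = 0; y < img.height; y++) {
    for (let x = 0; x < w; x++) {
      const i = 4 * (y * img.width + x0 + x);
      // BT.601 luma weights (an assumption; the paper does not specify)
      out[y * w + x] = 0.299 * img.data[i] + 0.587 * img.data[i + 1] + 0.114 * img.data[i + 2];
    }
  }
  return { width: w, height: img.height, data: out };
}

// Extract one colour channel (0 = red, 1 = green, 2 = blue) as its own image.
function channel(img, c) {
  const out = new Float32Array(img.width * img.height);
  for (let p = 0; p < out.length; p++) out[p] = img.data[4 * p + c];
  return { width: img.width, height: img.height, data: out };
}

// The three image pairs between which features are matched.
function matchingPairs(img) {
  const half = Math.floor(img.width / 2);
  const quarter = Math.floor(img.width / 4);
  return {
    leftRightHalf: [cropGray(img, 0, half), cropGray(img, half, half)],
    firstSecondQuarter: [cropGray(img, 0, quarter), cropGray(img, quarter, quarter)],
    redGreenChannels: [channel(img, 0), channel(img, 1)],
  };
}

// Mean Euclidean distance over matched pairs [[x1, y1], [x2, y2]].
function averageDistance(pairs) {
  if (pairs.length === 0) return NaN;
  let sum = 0;
  for (const [[x1, y1], [x2, y2]] of pairs) sum += Math.hypot(x2 - x1, y2 - y1);
  return sum / pairs.length;
}

// Labels from the text: Small (< 50 px) and Large (> 200 px).
// "Undetermined" for values in between is a placeholder, not from the paper.
function distanceLabel(d) {
  if (d < 50) return "Small";
  if (d > 200) return "Large";
  return "Undetermined";
}
```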

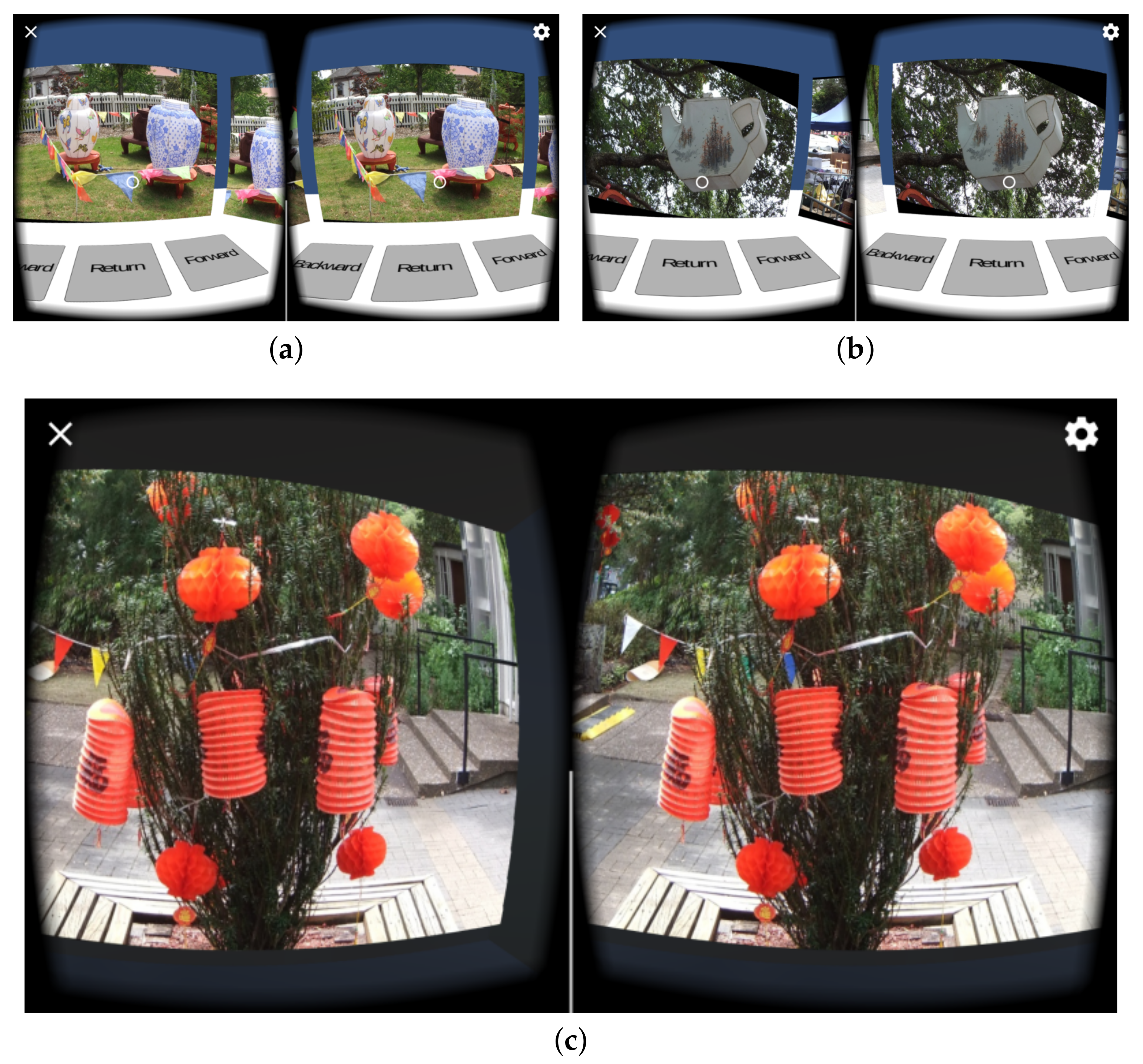

5.3. Final Results

6. Conclusions

Author Contributions

Conflicts of Interest


| Unity 5 | Unreal Engine 4 |
|---|---|
| Development feature | |
| Mainly based on C# & JavaScript | Mainly based on C++ & UnrealScript |
| Requires programming knowledge (at least C# or JavaScript) for project development | Includes the Blueprint feature, which allows developing a project with minimal coding knowledge |
| Provides a wider range of tweaks & settings for the environment and objects | Environment & object settings in Blueprint are limited; more settings require a custom-made model & platform |
| Graphics are good enough for mobile users, but limited compared with Unreal Engine | Graphics are generally better, including shadows, physics, and terrain |
| Better performance for mobile use | Better performance for PC & console |
| Supports a wide range of platforms, including mobile & web | Supports mainly PC and console |
| Development fee | |
| Community | |
| Huge user base | Moderate user base |
| Extensive asset library, including objects, scripts, animations, and tools required for a complete game, at a low fee | Small asset library; high fee of use |
| Huge database of tutorials, including videos, demos & scripts; requires less time for training | Limited database of tutorials |
| Community & tutorials suitable for all types of developers, including beginners and hobbyists | Tutorials are largely designed for designers rather than programmers |

| Method | AVG Matches | STD Matches | AVG Time (Seconds) | STD Time (Seconds) |
|---|---|---|---|---|
| KLT | 811 | 60 | 0.95 | 0.29 |
| SURF | 810 | 167 | 1.72 | 0.36 |
| SIFT | 714 | 235 | 2.04 | 0.67 |

| Method | AVG | STD | AVG | STD | AVG | STD | AVG | STD |
|---|---|---|---|---|---|---|---|---|
| KLT | 0.16 | 0.12 | 0.27 | 0.24 | 0.47 | 0.50 | 24 | 46 |
| SURF | 0.16 | 0.12 | 0.26 | 0.23 | 0.43 | 0.47 | 31 | 53 |
| SIFT | 0.13 | 0.11 | 0.17 | 0.18 | 0.21 | 0.29 | 27 | 60 |

| Image Types | Matched between | | |
|---|---|---|---|
| “Normal” & Anaglyphic | left/right half | Small | Small |
| Stereogram & Side by Side | left/right half | Large | Small |
| “Normal” | red/green channels | Large | Large |
| Anaglyphic | red/green channels | Large | Small |
| Stereogram | 1st/2nd quarters | Large | Small |
| Side by Side | 1st/2nd quarters | Small | Small |
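Read as a decision procedure, the table above suggests a two-step test: the left/right split separates {“normal”, anaglyph} from {stereogram, side-by-side}, and the red/green or quarter split then resolves each pair. The sketch below encodes that reading. The meaning of the two unlabeled Small/Large columns is not recoverable here, so they are treated only as an ordered pair of labels; `RULES`, `classify`, and the `measure` callback are hypothetical, and the step ordering is inferred from how the rows are grouped rather than stated in the text.

```javascript
// Decision rules read off the table above (type codes from Section 5.2).
// Keys are the two Small/Large outcomes, in column order, joined by a comma.
const RULES = {
  leftRightHalf: { "Small,Small": ["NOR", "ANAG"], "Large,Small": ["STE", "SBS"] },
  redGreenChannels: { "Large,Large": ["NOR"], "Large,Small": ["ANAG"] },
  firstSecondQuarter: { "Large,Small": ["STE"], "Small,Small": ["SBS"] },
};

// measure(strategy) is a hypothetical callback that runs feature matching for
// one strategy and returns its two outcome labels, e.g. ["Large", "Small"].
function classify(measure) {
  const outcome = (strategy) => RULES[strategy][measure(strategy).join(",")];
  // Step 1: the left/right split narrows the image to two candidate types.
  const candidates = outcome("leftRightHalf");
  if (!candidates) return null; // outcome not covered by the table
  // Step 2: the split that tells the two remaining candidates apart.
  const next = candidates.includes("NOR") ? "redGreenChannels" : "firstSecondQuarter";
  const result = outcome(next);
  return result && candidates.includes(result[0]) ? result[0] : null;
}
```

Only two of the three matching passes run for any one image, which keeps the per-image cost close to that of two KLT runs.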

| Image Type | Correct Detection | Incorrect Detection | Percentage |
|---|---|---|---|
| “normal” images (type NOR) | 48 | 2 | 96% |
| anaglyphs (type ANAG) | 50 | 0 | 100% |
| side-by-side photos (type SBS) | 50 | 0 | 100% |
| stereograms (type STE) | 50 | 0 | 100% |
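Pooling the four classes in the table above gives 198 correct detections out of 200 images, or 99% overall. The short helper below reproduces that arithmetic from the table's counts; the names `RESULTS` and `accuracy` are illustrative only.

```javascript
// [correct, incorrect] detections per image type, copied from the table above.
const RESULTS = { NOR: [48, 2], ANAG: [50, 0], SBS: [50, 0], STE: [50, 0] };

// Per-type and pooled detection percentages.
function accuracy(results) {
  let correct = 0;
  let total = 0;
  const perType = {};
  for (const [type, [ok, bad]] of Object.entries(results)) {
    perType[type] = (100 * ok) / (ok + bad);
    correct += ok;
    total += ok + bad;
  }
  return { perType, overall: (100 * correct) / total };
}
```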

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nguyen, M.; Tran, H.; Le, H. Exploration of the 3D World on the Internet Using Commodity Virtual Reality Devices. Multimodal Technol. Interact. 2017, 1, 15. https://doi.org/10.3390/mti1030015


Nguyen, Minh, Huy Tran, and Huy Le. 2017. "Exploration of the 3D World on the Internet Using Commodity Virtual Reality Devices" Multimodal Technologies and Interaction 1, no. 3: 15. https://doi.org/10.3390/mti1030015