Open Source and Independent Methods for Bundle Adjustment Assessment in Close-Range UAV Photogrammetry

Abstract

1. Introduction

2. Related Work

2.1. UAV

2.2. Bundle Adjustment

- Free-network bundle adjustment: The free-network approach computes the exterior orientation parameters in an arbitrary coordinate system, then applies a 3D similarity transformation to align the network with the coordinate system of the control points (the "real-world" system). In classical aerial photogrammetry, these two steps correspond to relative orientation (free-network orientation) and absolute orientation (similarity transformation).

- Block bundle adjustment: The block bundle approach estimates the 3D point coordinates, the exterior camera parameters and, optionally, the interior camera parameters simultaneously by least squares, directly in the coordinate system of the control points. This requires at least three control points, which are introduced as observations in the adjustment. Appropriate weights can be applied to these observations.
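The alignment step of the free-network approach can be illustrated with a closed-form estimate of the 3D similarity transformation (scale, rotation, translation) from corresponding points. The following is a minimal sketch that assumes an SVD-based least-squares solution (Umeyama's method). It is not necessarily the procedure used by PhotoScan, DBAT or Apero, and the function name `fit_similarity_3d` and the synthetic coordinates are hypothetical.

```python
import numpy as np


def fit_similarity_3d(src, dst):
    """Least-squares s, R, t such that dst_i ≈ s * R @ src_i + t.

    src: (n, 3) points in the arbitrary free-network system.
    dst: (n, 3) the same points in the control-point system.
    Needs at least three non-collinear points.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = src.shape[0]

    # Centre both point sets on their centroids.
    mu_src = src.mean(axis=0)
    mu_dst = dst.mean(axis=0)
    src_c = src - mu_src
    dst_c = dst - mu_dst

    # Cross-covariance between the two systems and its SVD.
    cov = dst_c.T @ src_c / n
    U, S, Vt = np.linalg.svd(cov)

    # Guard against a reflection: force det(R) = +1.
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0

    R = U @ D @ Vt
    var_src = (src_c ** 2).sum() / n
    s = np.trace(np.diag(S) @ D) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t


if __name__ == "__main__":
    # Synthetic example: four points in a free network, moved by a known
    # scale, rotation about Z and translation (all values are made up).
    rng = np.random.default_rng(0)
    free_net = rng.uniform(-10.0, 10.0, size=(4, 3))
    a = np.deg2rad(30.0)
    R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                       [np.sin(a), np.cos(a), 0.0],
                       [0.0, 0.0, 1.0]])
    control = 2.5 * free_net @ R_true.T + np.array([100.0, 200.0, 50.0])

    s, R, t = fit_similarity_3d(free_net, control)
    aligned = s * free_net @ R.T + t
    print("max alignment error:", np.abs(aligned - control).max())
```

With noise-free input the recovered parameters match the ones used to generate the data. With real control points, the differences between `aligned` and `control` are the residuals of the transformation. In the block bundle approach no such separate step exists, because the control points enter the adjustment itself.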

2.3. Software Solutions

2.3.1. Agisoft PhotoScan

2.3.2. DBAT

2.3.3. Apero

3. Data Acquisition and Research Design

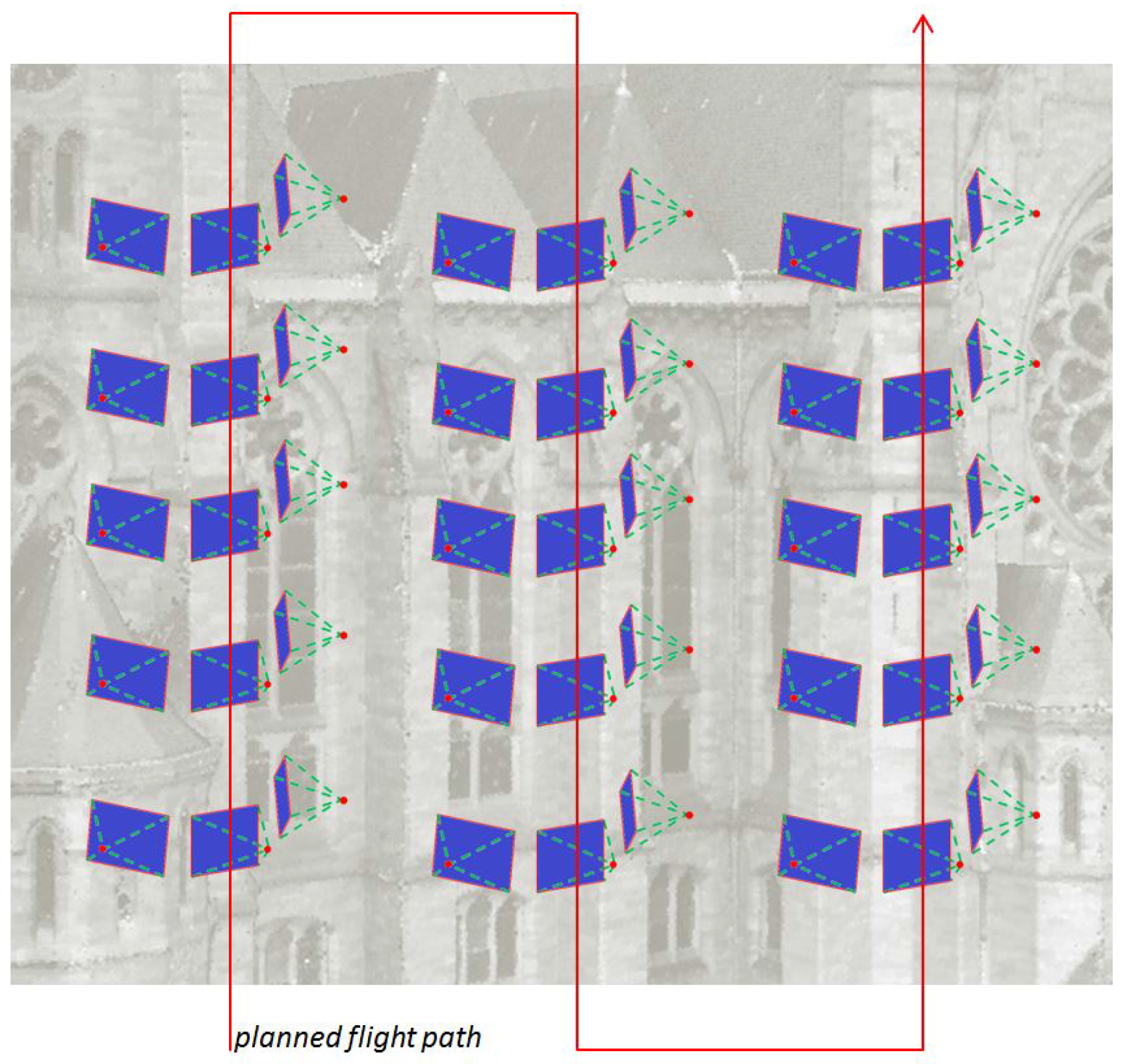

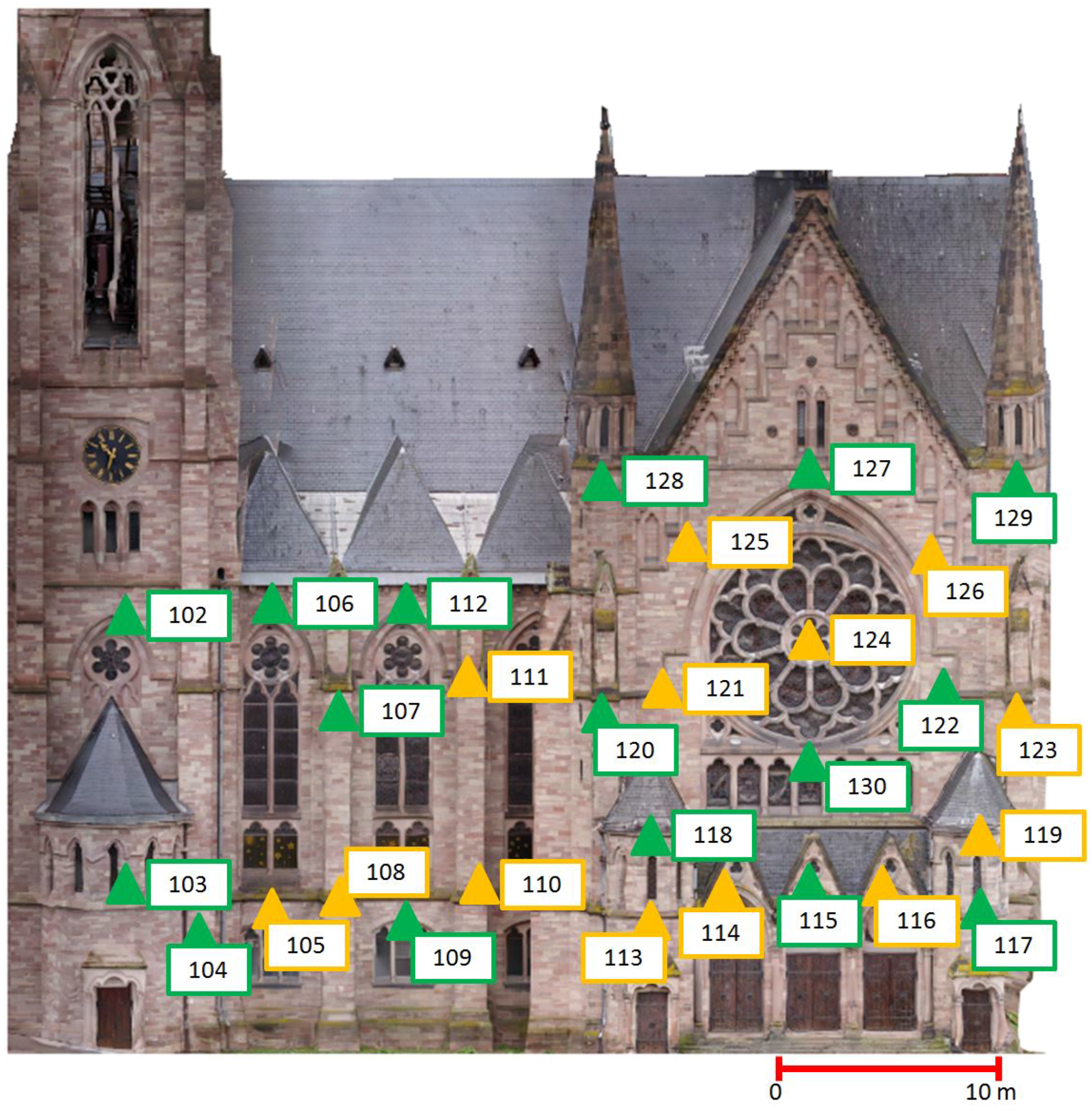

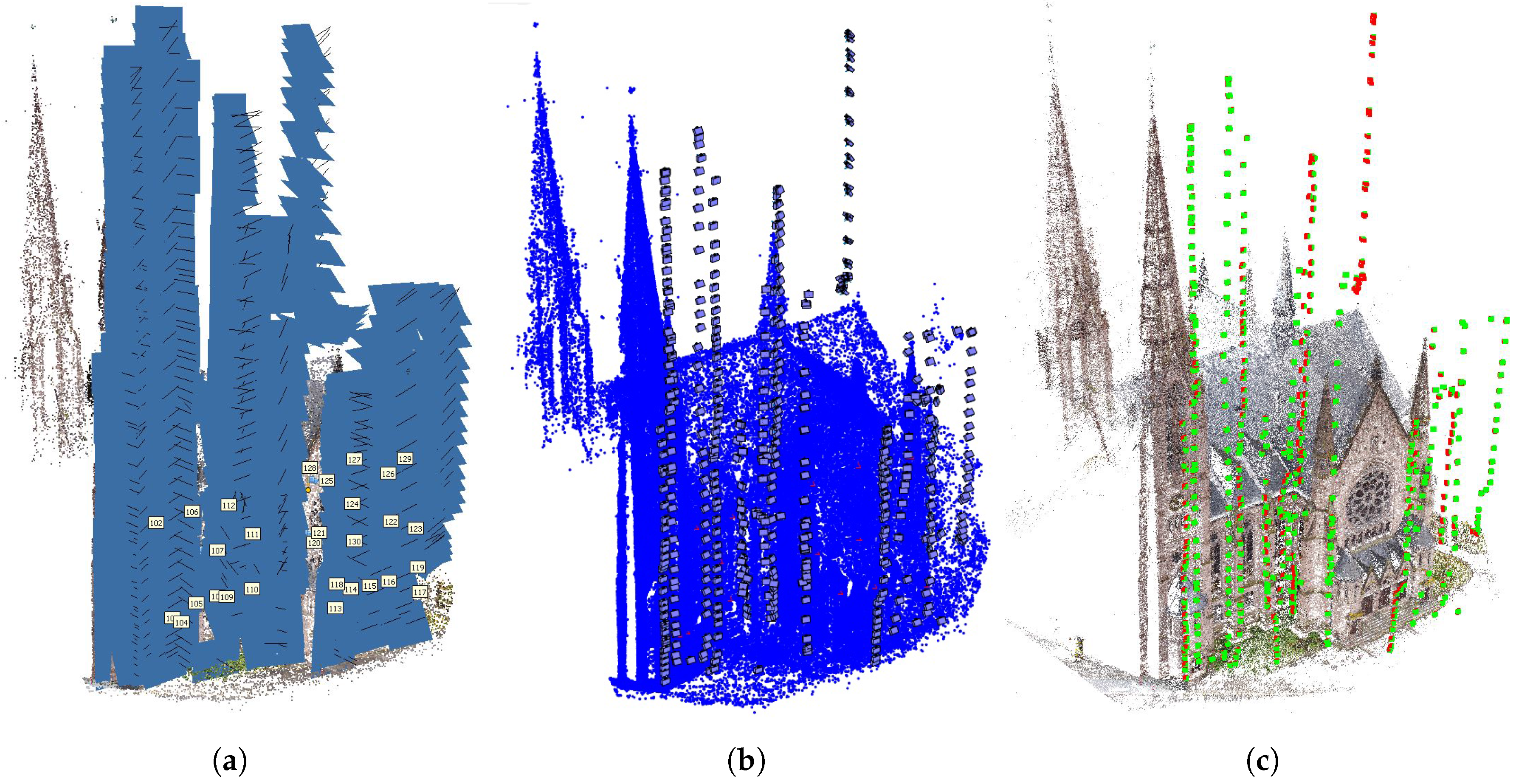

3.1. Project Planning

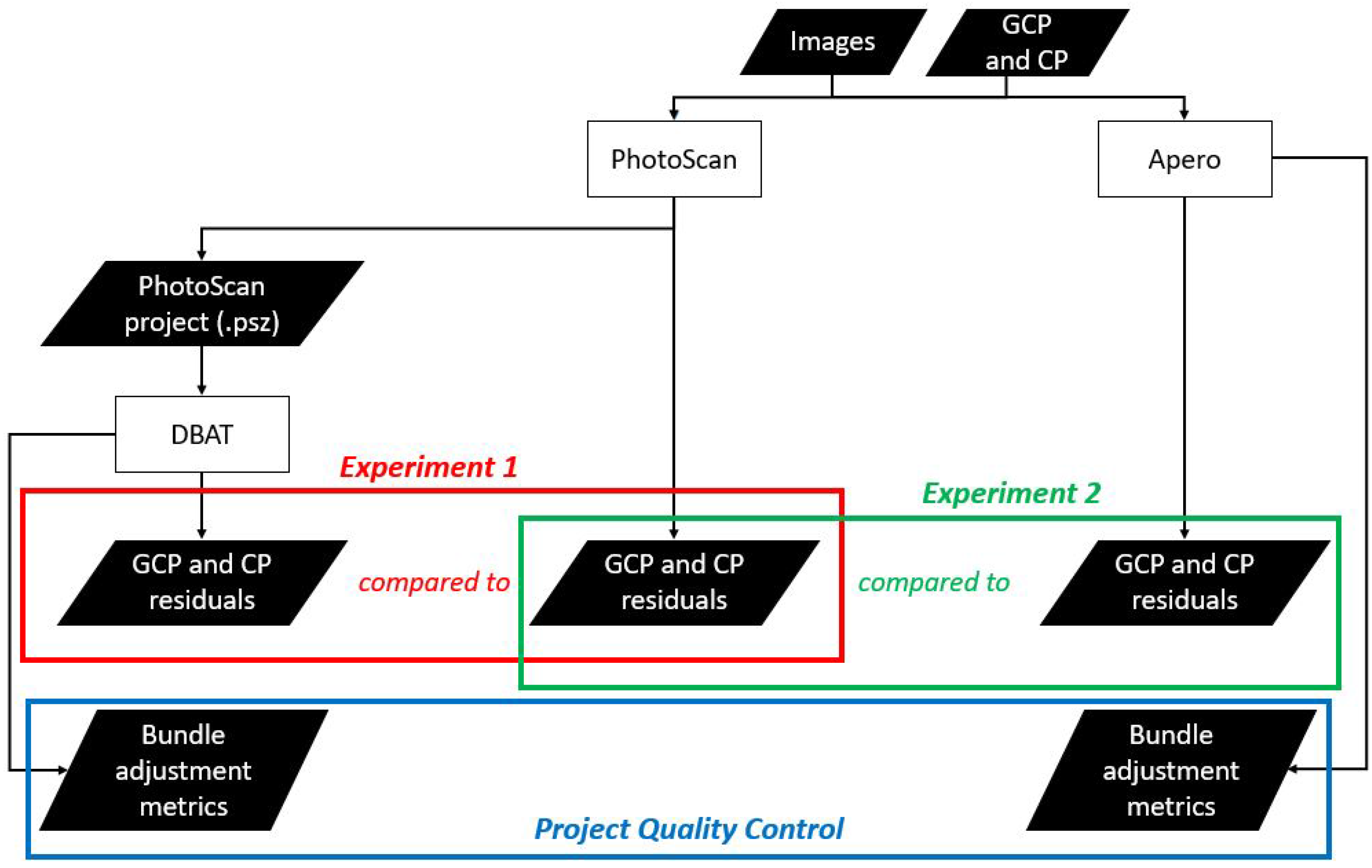

3.2. Experiments

4. Results and Discussions

4.1. Bundle Adjustment Assessment

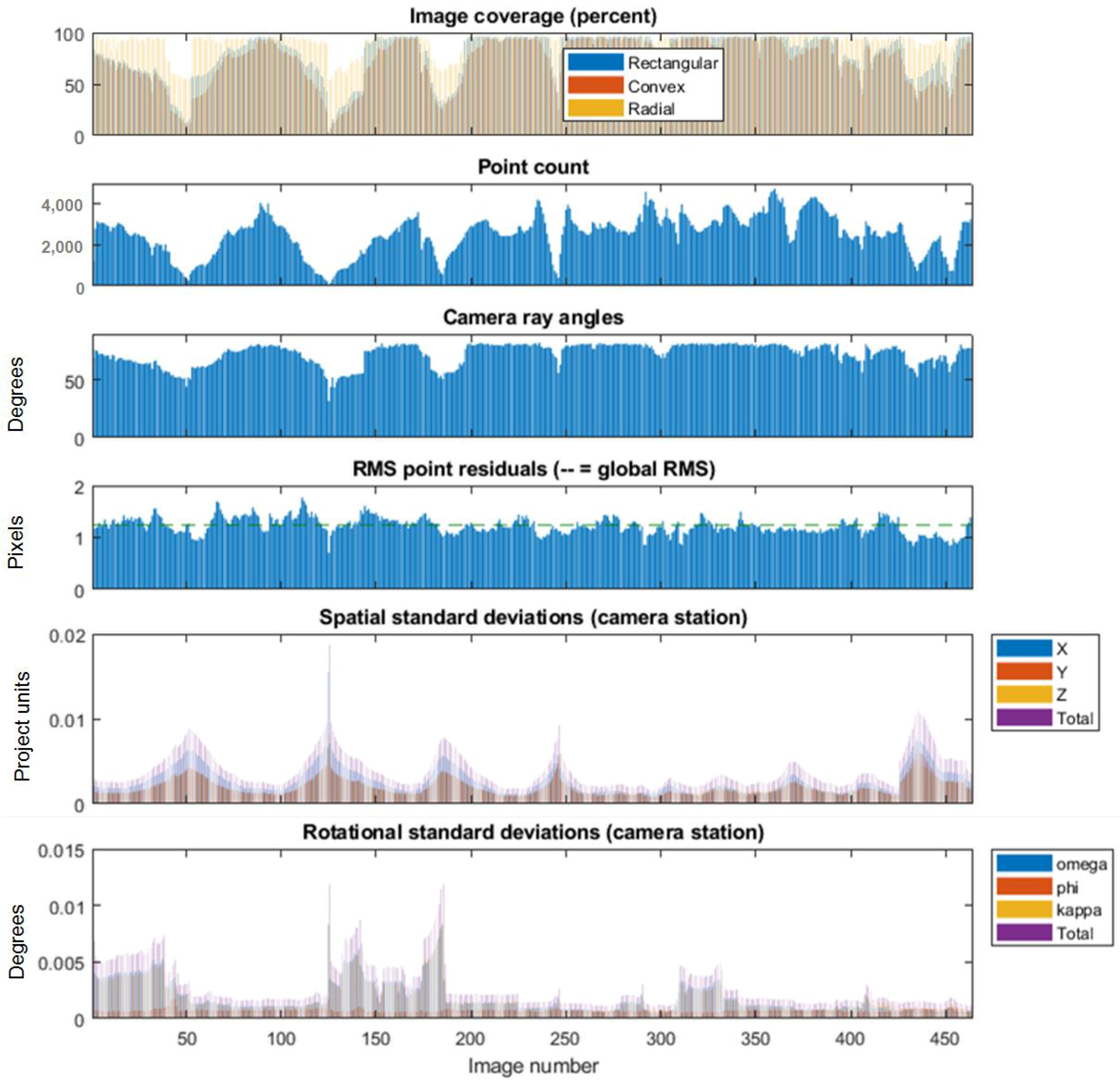

4.1.1. Experiment 1: Reprocessing of PhotoScan Using DBAT

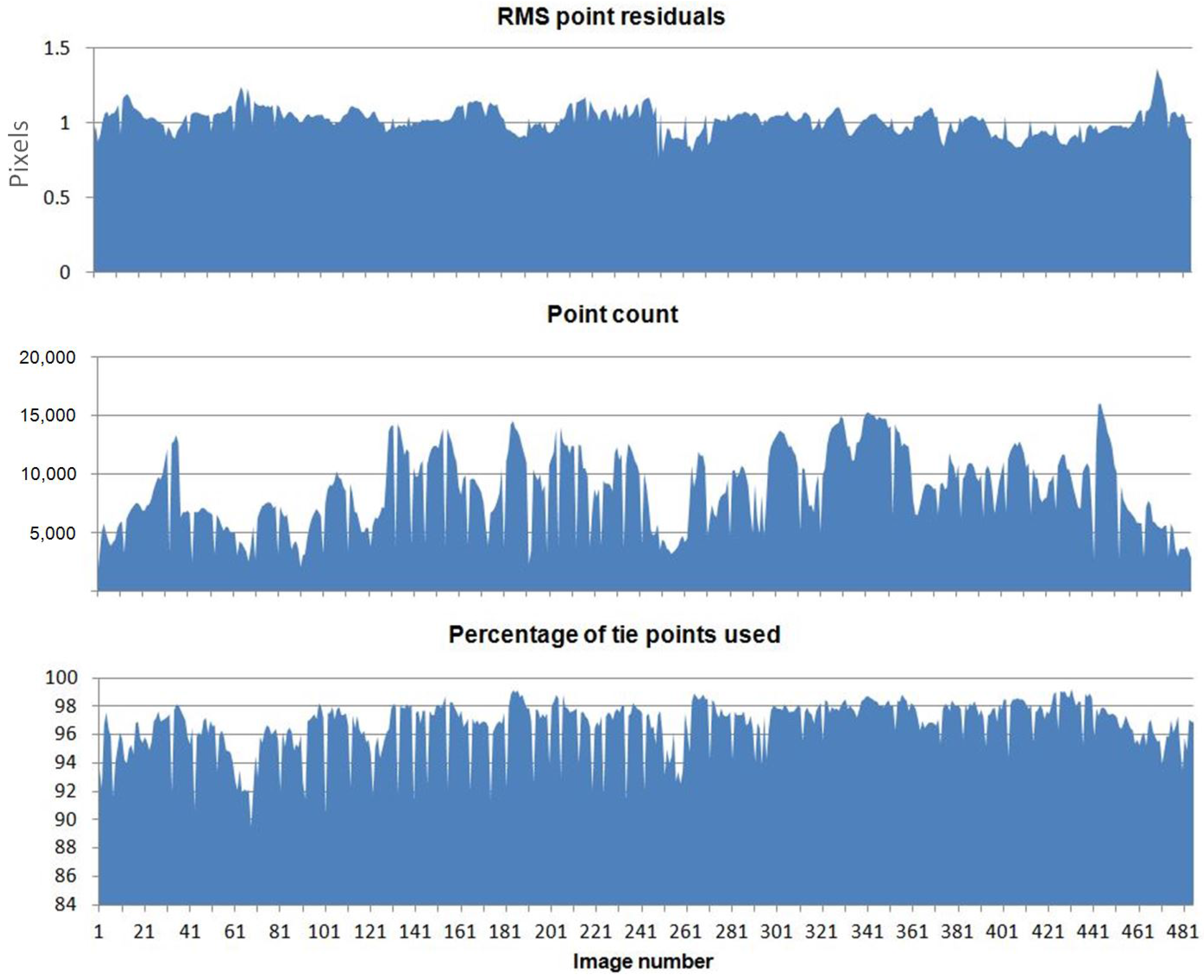

4.1.2. Experiment 2: Independent Check Using Apero

4.2. Quality Control

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


Sample Availability: The 3D model of the St-Paul church resulting from this project can be consulted through the following Sketchfab link: https://skfb.ly/6vtQT (accessed on 20 December 2017).

| Point | PhotoScan X (mm) | PhotoScan Y (mm) | PhotoScan Z (mm) | PhotoScan 3D (mm) | DBAT X (mm) | DBAT Y (mm) | DBAT Z (mm) | DBAT 3D (mm) |
|---|---|---|---|---|---|---|---|---|
| 102 | 0.9 | 0.5 | 0.3 | 1.1 | −2.0 | 0.0 | 2.0 | 2.8 |
| 103 | −1.6 | 0.0 | 3.7 | 4.0 | −1.0 | −1.0 | 5.0 | 5.2 |
| 104 | −3.5 | 0.1 | 0.0 | 3.5 | −3.0 | −1.0 | 0.0 | 3.2 |
| 106 | −3.2 | −7.4 | −3.3 | 8.7 | −5.0 | −7.0 | −2.0 | 8.8 |
| 107 | 0.5 | 1.7 | −4.2 | 4.6 | 1.0 | 2.0 | −4.0 | 4.6 |
| 109 | −1.7 | 1.9 | 3.2 | 4.1 | −1.0 | 3.0 | 3.0 | 4.4 |
| 112 | 2.8 | −4.8 | 2.9 | 6.3 | 2.0 | −4.0 | 2.0 | 4.9 |
| 115 | −0.1 | 2.1 | −1.2 | 2.5 | −1.0 | 3.0 | −1.0 | 3.3 |
| 117 | 3.3 | 4.4 | 0.7 | 5.5 | 3.0 | 6.0 | 1.0 | 6.8 |
| 118 | 2.8 | −0.4 | 0.7 | 2.9 | 3.0 | −1.0 | 0.0 | 3.2 |
| 120 | −3.1 | −4.6 | −0.8 | 5.6 | −3.0 | −5.0 | 0.0 | 5.8 |
| 122 | 0.5 | −7.0 | −1.0 | 7.1 | 1.0 | −6.0 | −2.0 | 6.4 |
| 127 | 3.6 | 5.5 | −0.3 | 6.5 | 4.0 | 4.0 | −1.0 | 5.7 |
| 128 | −3.4 | 2.9 | 0.5 | 4.5 | −4.0 | 2.0 | 0.0 | 4.5 |
| 129 | −0.1 | −5.7 | 1.5 | 5.9 | 0.0 | −5.0 | −1.0 | 5.1 |
| 130 | 0.9 | 5.3 | −3.4 | 6.4 | 1.0 | 5.0 | −4.0 | 6.5 |
| RMS | | | | 5.3 | | | | 5.3 |
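The 3D and RMS columns can be recomputed from the per-axis residuals. The following is a minimal sketch, assuming that each 3D value is the Euclidean norm of the X, Y and Z residuals and that the RMS row is the root mean square of those 3D values over all points. The tabulated numbers are consistent with this reading, but the formula is not stated in the table itself. The variable names are illustrative.

```python
import numpy as np

# PhotoScan residuals (X, Y, Z) in mm, copied from the table above.
residuals_mm = np.array([
    [0.9, 0.5, 0.3],     # 102
    [-1.6, 0.0, 3.7],    # 103
    [-3.5, 0.1, 0.0],    # 104
    [-3.2, -7.4, -3.3],  # 106
    [0.5, 1.7, -4.2],    # 107
    [-1.7, 1.9, 3.2],    # 109
    [2.8, -4.8, 2.9],    # 112
    [-0.1, 2.1, -1.2],   # 115
    [3.3, 4.4, 0.7],     # 117
    [2.8, -0.4, 0.7],    # 118
    [-3.1, -4.6, -0.8],  # 120
    [0.5, -7.0, -1.0],   # 122
    [3.6, 5.5, -0.3],    # 127
    [-3.4, 2.9, 0.5],    # 128
    [-0.1, -5.7, 1.5],   # 129
    [0.9, 5.3, -3.4],    # 130
])

# Per-point 3D residual: Euclidean norm of the three components.
norm_3d = np.linalg.norm(residuals_mm, axis=1)

# RMS of the 3D residuals over all points.
rms_3d = np.sqrt(np.mean(norm_3d ** 2))

print("3D residuals (mm):", np.round(norm_3d, 1))
print("RMS 3D (mm):", round(float(rms_3d), 1))
```

Under these assumptions the computed RMS rounds to the 5.3 mm reported for PhotoScan in the table.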

| Point | PhotoScan X (mm) | PhotoScan Y (mm) | PhotoScan Z (mm) | PhotoScan 3D (mm) | DBAT X (mm) | DBAT Y (mm) | DBAT Z (mm) | DBAT 3D (mm) |
|---|---|---|---|---|---|---|---|---|
| 105 | 6.3 | −1.7 | −3.2 | 7.3 | 7.0 | −2.0 | −4.0 | 8.3 |
| 108 | −2.7 | 1.9 | 0.7 | 3.4 | −2.0 | 1.0 | 1.0 | 2.4 |
| 110 | −1.5 | 6.5 | −6.2 | 9.1 | 0.0 | 7.0 | −6.0 | 9.2 |
| 111 | 0.8 | 2.0 | 0.1 | 2.1 | 2.0 | 1.0 | 0.0 | 2.2 |
| 113 | −0.1 | −0.5 | −2.7 | 2.7 | 2.0 | −1.0 | −3.0 | 3.7 |
| 114 | 4.7 | 3.0 | −2.5 | 6.1 | 5.0 | 3.0 | −3.0 | 6.6 |
| 116 | 2.6 | −1.3 | −1.3 | 3.2 | 4.0 | 0.0 | −1.0 | 4.1 |
| 119 | 1.4 | −0.1 | 4.1 | 4.3 | −1.0 | 2.0 | 3.0 | 3.7 |
| 121 | −0.7 | −1.4 | 3.2 | 3.5 | 0.0 | −2.0 | 3.0 | 3.6 |
| 123 | 1.0 | 3.6 | −5.9 | 7.0 | 2.0 | 2.0 | −2.0 | 3.5 |
| 124 | −0.6 | 6.7 | −0.9 | 6.7 | 0.0 | 5.0 | −2.0 | 5.4 |
| 125 | 0.1 | 9.7 | −5.7 | 11.3 | 0.0 | 9.0 | −6.0 | 10.8 |
| 126 | −1.1 | 6.1 | 3.8 | 7.3 | 1.0 | 2.0 | 3.0 | 3.7 |
| RMS | | | | 6.3 | | | | 5.8 |

| Point | PhotoScan X (mm) | PhotoScan Y (mm) | PhotoScan Z (mm) | PhotoScan 3D (mm) | Apero X (mm) | Apero Y (mm) | Apero Z (mm) | Apero 3D (mm) |
|---|---|---|---|---|---|---|---|---|
| 102 | 0.9 | 0.5 | 0.3 | 1.1 | 1.1 | 0.9 | 0.4 | 1.5 |
| 103 | −1.6 | 0.0 | 3.7 | 4.0 | 4.6 | −1.3 | −5.9 | 7.6 |
| 104 | −3.5 | 0.1 | 0.0 | 3.5 | 1.3 | −0.6 | −4.9 | 5.1 |
| 106 | −3.2 | −7.4 | −3.3 | 8.7 | 2.6 | 17.0 | 1.9 | 17.3 |
| 107 | 0.5 | 1.7 | −4.2 | 4.6 | −0.7 | −4.3 | 5.5 | 7.0 |
| 109 | −1.7 | 1.9 | 3.2 | 4.1 | 1.9 | −2.6 | −4.6 | 5.6 |
| 112 | 2.8 | −4.8 | 2.9 | 6.3 | −1.7 | 6.9 | −2.8 | 7.6 |
| 115 | −0.1 | 2.1 | −1.2 | 2.5 | −1.2 | 0.7 | 0.4 | 1.4 |
| 117 | 3.3 | 4.4 | 0.7 | 5.5 | −10.7 | −5.5 | −2.5 | 12.2 |
| 118 | 2.8 | −0.4 | 0.7 | 2.9 | −3.7 | −1.9 | −1.7 | 4.5 |
| 120 | −3.1 | −4.6 | −0.8 | 5.6 | 6.6 | 1.6 | 6.4 | 9.3 |
| 122 | 0.5 | −7.0 | −1.0 | 7.1 | −1.3 | 4.7 | 6.6 | 8.2 |
| 127 | 3.6 | 5.5 | −0.3 | 6.5 | −3.3 | −5.0 | −0.8 | 6.0 |
| 128 | −3.4 | 2.9 | 0.5 | 4.5 | 5.0 | 2.5 | −0.9 | 5.7 |
| 129 | −0.1 | −5.7 | 1.5 | 5.9 | 1.8 | 5.2 | −0.4 | 5.5 |
| 130 | 0.9 | 5.3 | −3.4 | 6.4 | −1.1 | −7.0 | 4.9 | 8.6 |
| RMS | | | | 5.3 | | | | 8.0 |

| Point | PhotoScan X (mm) | PhotoScan Y (mm) | PhotoScan Z (mm) | PhotoScan 3D (mm) | Apero X (mm) | Apero Y (mm) | Apero Z (mm) | Apero 3D (mm) |
|---|---|---|---|---|---|---|---|---|
| 105 | 6.3 | −1.7 | −3.2 | 7.3 | −4.1 | −4.3 | 4.1 | 7.2 |
| 108 | −2.7 | 1.9 | 0.7 | 3.4 | 1.8 | −3.8 | −3.1 | 5.2 |
| 110 | −1.5 | 6.5 | −6.2 | 9.1 | 0.6 | −7.2 | 2.9 | 7.8 |
| 111 | 0.8 | 2.0 | 0.1 | 2.1 | −0.6 | −0.2 | −1.3 | 1.4 |
| 113 | −0.1 | −0.5 | −2.7 | 2.7 | 0.7 | −0.5 | 4.2 | 4.3 |
| 114 | 4.7 | 3.0 | −2.5 | 6.1 | −4.3 | −2.5 | 2.3 | 5.4 |
| 116 | 2.6 | −1.3 | −1.3 | 3.2 | −8.1 | 3.6 | −2.2 | 9.1 |
| 119 | 1.4 | −0.1 | 4.1 | 4.3 | −4.9 | 4.7 | −3.5 | 7.7 |
| 121 | −0.7 | −1.4 | 3.2 | 3.5 | −1.4 | 1.2 | −6.8 | 7.1 |
| 123 | 1.0 | 3.6 | −5.9 | 7.0 | −2.5 | −5.3 | 4.3 | 7.3 |
| 124 | −0.6 | 6.7 | −0.9 | 6.7 | −0.5 | −0.6 | 1.3 | 3.7 |
| 125 | 0.1 | 9.7 | −5.7 | 11.3 | −0.1 | 4.7 | 3.2 | 5.7 |
| 126 | −1.1 | 6.1 | 3.8 | 7.3 | −5.6 | 4.4 | −2.8 | 7.7 |
| RMS | | | | 6.3 | | | | 6.4 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Murtiyoso, A.; Grussenmeyer, P.; Börlin, N.; Vandermeerschen, J.; Freville, T. Open Source and Independent Methods for Bundle Adjustment Assessment in Close-Range UAV Photogrammetry. Drones 2018, 2, 3. https://doi.org/10.3390/drones2010003
