Fusion of Multispectral Imagery and Spectrometer Data in UAV Remote Sensing

Abstract

1. Introduction

2. Methodology

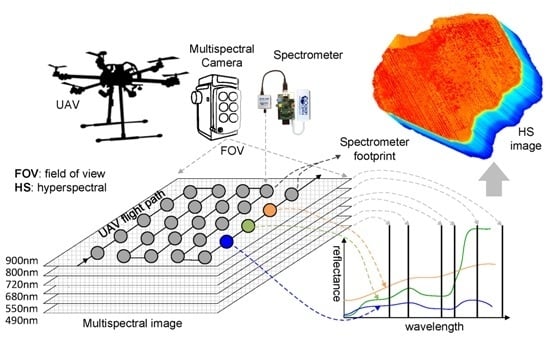

2.1. The Conceptual Framework of Data Acquisition and Fusion

2.2. Alignment of Spectrometer and Multispectral Camera Data

2.3. Fusion of Spectrometer and Multispectral Camera Data

3. Study Site, UAV System, and Flight Design

3.1. Study Site

3.2. UAV Spectrometer and Multispectral Camera Systems

4. Results: Data Alignment and Fusion to Produce Estimated Hyperspectral Imagery

4.1. The Data Alignment Procedure

4.1.1. Profile of Time Domain Alignment

4.1.2. Profile of Spatial Domain Alignment

4.1.3. Global Optimization vs. Two-Step Optimization

4.2. The Performance of Multispectral-Spectrometer Data Fusion

4.2.1. Accuracy of Data Fusion

4.2.2. Stability of Data Fusion Parameters

4.2.3. Comparison of Data Fusion Methods

4.3. Fused Hyperspectral Imagery

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Optimization Methods | Pre-Processing | Time Domain | Space Domain | Data Fusion * |
|---|---|---|---|---|
| Global | 24.3 min | 16.9 min | 8.5 min | |
| Two-step | 1.9 s | 14.6 s | | |

| Category | Method | Time (s) | ME | MAE | RMSE (10⁻³) | STD_AE | SNR | UIQI | SAM | ERGAS | DD (10⁻³) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | TSR | 0.46 | 3.63% | 16.83% | 28.947 | 0.137 | 14.97 | 0.960 | 12.37 | 22.10 | 20.751 |
| | KDR | 0.50 | 5.09% | 17.54% | 28.954 | 0.141 | 14.97 | 0.961 | 12.47 | 22.22 | 20.896 |
| PCA | PCR | 0.49 | 3.63% | 16.83% | 28.947 | 0.137 | 14.97 | 0.960 | 12.37 | 22.10 | 20.751 |
| | KDR–PLS | 0.67 | 3.57% | 16.78% | 28.953 | 0.137 | 14.97 | 0.960 | 12.37 | 22.11 | 20.746 |
| | PMP | 0.50 | 3.63% | 16.83% | 28.947 | 0.137 | 14.97 | 0.960 | 12.37 | 22.10 | 20.751 |
| | IA | 0.19 | 3.63% | 16.83% | 28.947 | 0.137 | 14.97 | 0.960 | 12.37 | 22.10 | 20.751 |
| | NIPALS | 0.68 | 4.46% | 16.69% | 29.357 | 0.139 | 14.85 | 0.959 | 12.42 | 22.51 | 21.039 |
| | DA | 124.20 | 3.19% | 17.40% | 28.906 | 0.146 | 14.99 | 0.961 | 12.43 | 22.23 | 20.785 |
| Bayesian | Gibbs | 38.67 | 2.80% | 17.41% | 28.620 | 0.142 | 15.07 | 0.962 | 12.30 | 22.02 | 20.649 |
| | EM | 1.03 | 3.25% | 17.35% | 28.895 | 0.145 | 14.99 | 0.961 | 12.42 | 22.23 | 20.773 |
| | ICM | 0.20 | 3.70% | 17.28% | 28.910 | 0.144 | 14.99 | 0.961 | 12.43 | 22.23 | 20.769 |
| Spline * | | 0.004 | −4.28% | 116.63% | 39.437 | 28.990 | 13.29 | 0.923 | 14.18 | 26.71 | 30.678 |
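
Several of the column headings above (ME, MAE, RMSE, SAM) are standard error measures for comparing an estimated spectrum against a reference spectrum. The definitions used by the authors are not given in this section, so the following is only a minimal sketch under common textbook definitions: ME as the mean signed error, MAE as the mean absolute error, RMSE as the root-mean-square error, and SAM as the mean spectral angle in degrees. The function name `fusion_errors` and the `(pixels, bands)` array layout are assumptions made for illustration. The table reports ME and MAE as percentages, which suggests a relative form that this sketch does not attempt to reproduce.

```python
import numpy as np


def fusion_errors(reference, estimate):
    """Compare two (pixels, bands) arrays with common error measures.

    Hypothetical helper: textbook definitions only, not necessarily the
    exact formulas behind the table values.
    """
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    diff = estimate - reference

    me = diff.mean()                    # mean (signed) error
    mae = np.abs(diff).mean()           # mean absolute error
    rmse = np.sqrt((diff ** 2).mean())  # root-mean-square error

    # Spectral angle per pixel: angle between the two spectra treated as
    # vectors. Assumes no spectrum is all zeros (norms must be non-zero).
    dot = (reference * estimate).sum(axis=1)
    norms = np.linalg.norm(reference, axis=1) * np.linalg.norm(estimate, axis=1)
    cos_angle = np.clip(dot / norms, -1.0, 1.0)
    sam_deg = np.degrees(np.arccos(cos_angle)).mean()

    return {"ME": me, "MAE": mae, "RMSE": rmse, "SAM_deg": sam_deg}


if __name__ == "__main__":
    # Toy two-pixel, two-band example (made-up numbers, not study data).
    ref = [[1.0, 0.0], [0.0, 1.0]]
    est = [[1.0, 0.0], [1.0, 0.0]]
    print(fusion_errors(ref, est))
```

Clipping the cosine to [-1, 1] guards against rounding errors that would otherwise make `arccos` return NaN for nearly identical spectra.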

| Site | #Image | #Training | #Test | Time (Min) | Avg R² | Δt (s) | Δx (Pixel) | Δy (Pixel) |
|---|---|---|---|---|---|---|---|---|
| Site 1 (tomato) | 128 | 107 | 21 | 40.23 | 0.946 | −0.2 | 45 | 5 |
| Site 2 (corn/soybean) | 228 | 185 | 43 | 41.25 | 0.715 | −1.2 | 85 | −20 |

| Site | Method | Time (s) | ME | MAE | RMSE (10⁻³) | STD_AE | SNR | UIQI | SAM | ERGAS | DD (10⁻³) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Site 1 | PCA_TSR | 0.46 | 3.63% | 16.83% | 28.947 | 0.137 | 14.97 | 0.9597 | 12.37 | 22.10 | 20.751 |
| | Bys_Gibbs | 38.67 | 2.80% | 17.41% | 28.620 | 0.142 | 15.07 | 0.9617 | 12.30 | 22.02 | 20.649 |
| | Spline | 0.004 | −4.28% | 116.63% | 39.437 | 28.990 | 13.29 | 0.9230 | 14.18 | 26.71 | 30.678 |
| Site 2 | PCA_TSR | 0.54 | −1.37% | 16.99% | 27.244 | 0.284 | 15.34 | 0.9651 | 13.65 | 21.01 | 15.903 |
| | Bys_Gibbs | 91.07 | −3.10% | 16.80% | 27.488 | 0.309 | 15.26 | 0.9652 | 13.83 | 20.99 | 15.586 |
| | Spline | 0.05 | −4.32% | 111.52% | 38.474 | 43.272 | 13.37 | 0.9326 | 15.14 | 26.37 | 26.987 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zeng, C.; King, D.J.; Richardson, M.; Shan, B. Fusion of Multispectral Imagery and Spectrometer Data in UAV Remote Sensing. Remote Sens. 2017, 9, 696. https://doi.org/10.3390/rs9070696