Exploring Metaphorical Transformations of a Safety Boundary Wall in Virtual Reality

Abstract

1. Introduction

2. Related Work

2.1. The Importance of Bystander Awareness

2.2. Bystander Awareness Cues in Virtual Reality

2.3. Metaphors in VR Visual Cues

2.4. Safety Boundaries in Virtual Reality

3. User Study

4. Discussion

5. Future Work: Exploring a Design Space for Interactive Safety Boundary Walls

- Dimension 1: Interactions between VR users and bystanders. We categorize these interactions into three types: interruption, coexistence, and AR interaction. Interruption refers to instances where bystanders disrupt the user’s VR experience. Coexistence refers to scenarios where bystanders and VR users share the same physical space without interfering with each other’s activities. AR interaction refers to scenarios in which bystanders use augmented reality to see a VR user’s safety boundary wall and interact with the VR user through it.

- Dimension 2: Transformations of the safety boundary wall. We classify transformations of the safety boundary wall into three main types: rigid (e.g., translation and rotation), non-rigid (e.g., fragmentation and height changes), and texture changes (e.g., color and material).
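Because the two dimensions are independent, the design space is their cross product: three interaction types times three transformation types gives nine cells, which the design-space table fills with concrete cues. The short Python sketch below encodes that structure; the enum and function names are hypothetical illustrations, not part of the authors' work.

```python
from enum import Enum
from itertools import product


class Interaction(Enum):
    """Dimension 1: interactions between VR users and bystanders."""
    INTERRUPTION = "interruption"
    COEXISTENCE = "coexistence"
    AR_INTERACTION = "AR interaction"


class Transformation(Enum):
    """Dimension 2: transformations of the safety boundary wall."""
    RIGID = "rigid"          # e.g., translation, rotation
    NON_RIGID = "non-rigid"  # e.g., fragmentation, height changes
    TEXTURE = "texture"      # e.g., color, material


def design_space() -> list[tuple[Interaction, Transformation]]:
    """Return every (interaction, transformation) cell of the design space."""
    return list(product(Interaction, Transformation))


if __name__ == "__main__":
    for interaction, transformation in design_space():
        print(f"{interaction.value} x {transformation.value}")
```

Keeping the dimensions as separate enums means a new interaction or transformation type extends the space without touching the other dimension.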

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- O’Hagan, J.; Williamson, J.R.; McGill, M.; Khamis, M. Safety, power imbalances, ethics and proxy sex: Surveying in-the-wild interactions between VR users and bystanders. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Bari, Italy, 4–8 October 2021; pp. 211–220.

- O’Hagan, J.; Khamis, M.; McGill, M.; Williamson, J.R. Exploring attitudes towards increasing user awareness of reality from within virtual reality. In Proceedings of the ACM International Conference on Interactive Media Experiences, Aveiro, Portugal, 22–24 June 2022; pp. 151–160.

- Dao, E.; Muresan, A.; Hornbæk, K.; Knibbe, J. Bad breakdowns, useful seams, and face slapping: Analysis of VR fails on YouTube. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–14.

- Do, Y.; Brudy, F.; Fitzmaurice, G.W.; Anderson, F. Vice VRsa: Balancing Bystander’s and VR user’s Privacy through Awareness Cues Inside and Outside VR. In Proceedings of the Graphics Interface 2023-Second Deadline, Victoria, BC, Canada, 30 May 2023.

- Oculus. 2023. Guardian System. Available online: https://developer.oculus.com/documentation/native/pc/dg-guardian-system (accessed on 14 May 2024).

- Valve Corporation. Chaperone. Available online: https://help.steampowered.com/en/faqs/view/30FC-2296-D4CD-58DA (accessed on 14 May 2024).

- McGill, M.; Boland, D.; Murray-Smith, R.; Brewster, S. A dose of reality: Overcoming usability challenges in VR head-mounted displays. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; pp. 2143–2152.

- O’Hagan, J.; Williamson, J.R.; Mathis, F.; Khamis, M.; McGill, M. Re-evaluating VR user awareness needs during bystander interactions. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–17.

- Ghosh, S.; Winston, L.; Panchal, N.; Kimura-Thollander, P.; Hotnog, J.; Cheong, D.; Reyes, G.; Abowd, G.D. NotifiVR: Exploring interruptions and notifications in virtual reality. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1447–1456.

- Hsieh, C.Y.; Chiang, Y.S.; Chiu, H.Y.; Chang, Y.J. Bridging the virtual and real worlds: A preliminary study of messaging notifications in virtual reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–14.

- O’Hagan, J.; Williamson, J.R. Reality aware VR headsets. In Proceedings of the 9th ACM International Symposium on Pervasive Displays, Manchester, UK, 4–5 June 2020; pp. 9–17.

- Zenner, A.; Speicher, M.; Klingner, S.; Degraen, D.; Daiber, F.; Krüger, A. Immersive notification framework: Adaptive & plausible notifications in virtual reality. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–6.

- Kudo, Y.; Tang, A.; Fujita, K.; Endo, I.; Takashima, K.; Kitamura, Y. Towards balancing VR immersion and bystander awareness. Proc. ACM Hum.-Comput. Interact. 2021, 5, 1–22.

- Von Willich, J.; Funk, M.; Müller, F.; Marky, K.; Riemann, J.; Mühlhäuser, M. You invaded my tracking space! Using augmented virtuality for spotting passersby in room-scale virtual reality. In Proceedings of the 2019 on Designing Interactive Systems Conference, San Diego, CA, USA, 23–28 June 2019; pp. 487–496.

- Gottsacker, M.; Norouzi, N.; Kim, K.; Bruder, G.; Welch, G. Diegetic representations for seamless cross-reality interruptions. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Bari, Italy, 4–8 October 2021; pp. 310–319.

- Medeiros, D.; Dos Anjos, R.; Pantidi, N.; Huang, K.; Sousa, M.; Anslow, C.; Jorge, J. Promoting reality awareness in virtual reality through proxemics. In Proceedings of the 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, 27 March–1 April 2021; pp. 21–30.

- Wang, C.H.; Chen, B.Y.; Chan, L. RealityLens: A user interface for blending customized physical world view into virtual reality. In Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology, Bend, OR, USA, 29 October–2 November 2022; pp. 1–11.

- Reed, C.N.; Strohmeier, P.; McPherson, A.P. Negotiating Experience and Communicating Information Through Abstract Metaphor. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–16.

- Mendes, D.; Caputo, F.M.; Giachetti, A.; Ferreira, A.; Jorge, J. A survey on 3D virtual object manipulation: From the desktop to immersive virtual environments. Comput. Graph. Forum 2019, 38, 21–45.

- Englmeier, D.; Sajko, W.; Butz, A. Spherical World in Miniature: Exploring the Tiny Planets Metaphor for Discrete Locomotion in Virtual Reality. In Proceedings of the 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, 27 March–1 April 2021; pp. 345–352.

- Wagner, J.; Silva, C.T.; Stuerzlinger, W.; Nedel, L. Reimagining TaxiVis through an Immersive Space-Time Cube metaphor and reflecting on potential benefits of Immersive Analytics for urban data exploration. arXiv 2024, arXiv:2402.00344.

- Pointecker, F.; Oberögger, D.; Anthes, C. Visual Metaphors for Notification into Virtual Environments. In Proceedings of the 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Sydney, Australia, 16–20 October 2023; pp. 60–64.

- How to Open and Use Windows Mixed Reality Flashlight in Windows 10. Available online: https://www.tenforums.com/tutorials/121260-open-usewindows-mixed-reality-flashlight-windows-10-a.html (accessed on 17 April 2024).

- George, C.; Tien, A.N.; Hussmann, H. Seamless, bi-directional transitions along the reality-virtuality continuum: A conceptualization and prototype exploration. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Porto de Galinhas, Brazil, 9–13 November 2020; pp. 412–424.

- Cirio, G.; Marchal, M.; Regia-Corte, T.; Lécuyer, A. The magic barrier tape: A novel metaphor for infinite navigation in virtual worlds with a restricted walking workspace. In Proceedings of the 16th ACM Symposium on Virtual Reality Software and Technology, Kyoto, Japan, 18–20 November 2009; pp. 155–162.

- Dominjon, L.; Lécuyer, A.; Burkhardt, J.M.; Andrade-Barroso, G.; Richir, S. The “bubble” technique: Interacting with large virtual environments using haptic devices with limited workspace. In Proceedings of the First Joint Eurohaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems. World Haptics Conference, Pisa, Italy, 18–20 March 2005; pp. 639–640.

- Qu, C.; Che, X.; Chang, E.; Cai, Z. Motion Prediction based Safety Boundary Study in Virtual Reality. In Proceedings of the 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Shanghai, China, 25–29 March 2023; pp. 833–834.

- Yang, K.T.; Wang, C.H.; Chan, L. ShareSpace: Facilitating shared use of the physical space by both VR head-mounted display and external users. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology, Berlin, Germany, 14 October 2018; pp. 499–509.

- Marwecki, S.; Brehm, M.; Wagner, L.; Cheng, L.P.; Mueller, F.; Baudisch, P. VirtualSpace - overloading physical space with multiple virtual reality users. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–10.

- Wu, S.; Li, J.; Sousa, M.; Grossman, T. Investigating Guardian Awareness Techniques to Promote Safety in Virtual Reality. In Proceedings of the 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), Shanghai, China, 25–29 March 2023; pp. 631–640.

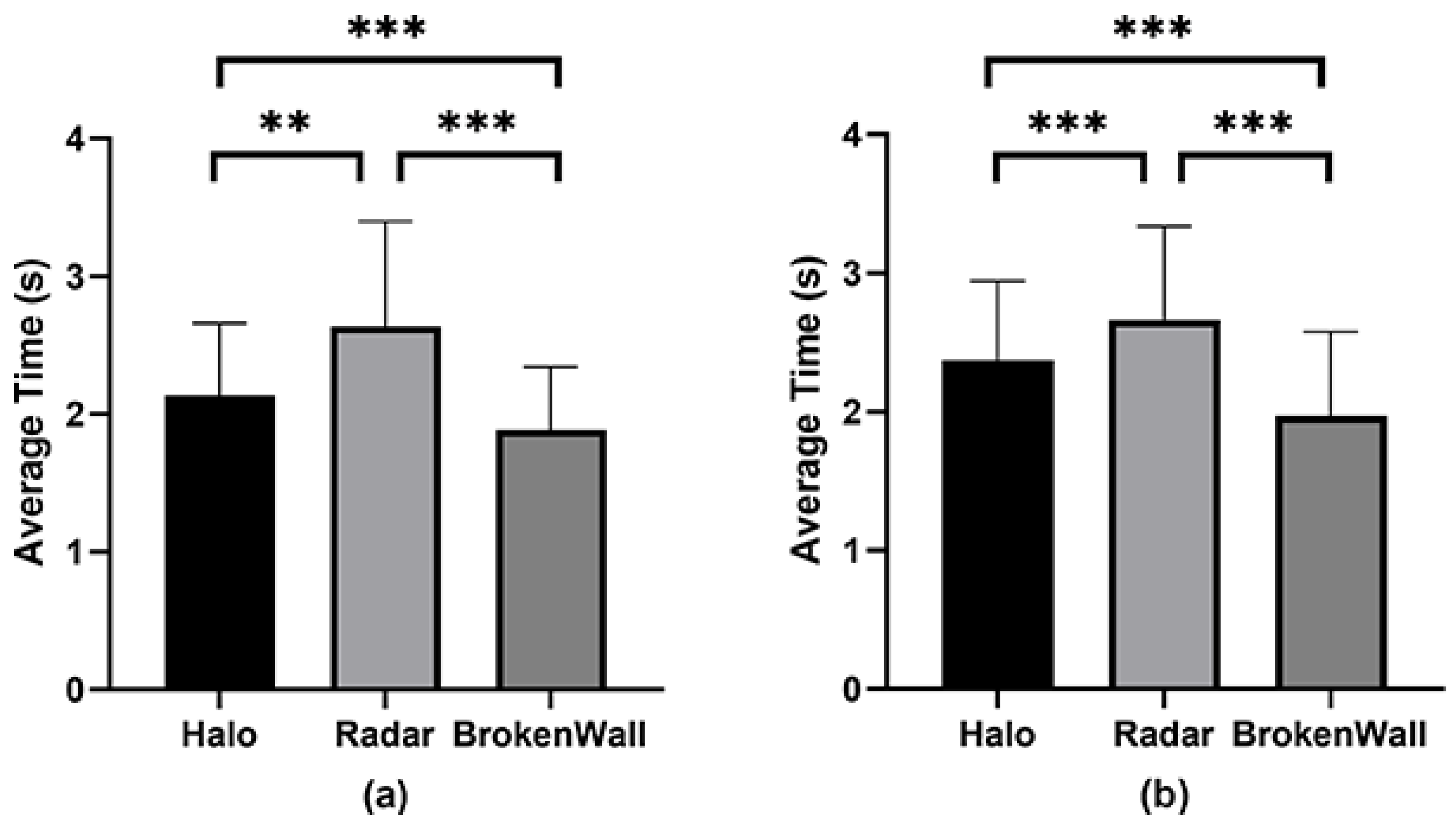

| Measure | 1 Halo | 2 Radar | 3 BrokenWall | Non-Parametric Friedman Test | Post Hoc: Wilcoxon |
|---|---|---|---|---|---|
| Naturalness | 4.9 (1.1) | 4.3 (1.0) | 5.9 (1.2) | χ²(2) = 21.0 | 1-2, 1-3, 2-3 |
| Understandability | 5.1 (1.7) | 4.6 (1.3) | 6.1 (1.0) | χ²(2) = 15.453 | 1-3, 2-3 |
| Urgency | 5.2 (1.2) | 4.0 (1.1) | 6.3 (0.8) | χ²(2) = 38.264 | 1-2, 1-3, 2-3 |
| Security | 4.3 (1.5) | 4.1 (1.0) | 6.2 (0.8) | χ²(2) = 33.811 | 1-3, 2-3 |
| Efficiency | 5.1 (1.0) | 4.1 (1.1) | 5.9 (1.1) | χ²(2) = 21.868 | 1-2, 1-3, 2-3 |
| Comfort | 4.6 (1.4) | 4.3 (1.5) | 5.9 (1.2) | χ²(2) = 19.825 | 1-3, 2-3 |
| Like | 4.9 (1.3) | 4.2 (1.1) | 6.1 (1.0) | χ²(2) = 28.752 | 1-2, 1-3, 2-3 |
| Workload | 6.8 (2.3) | 9.0 (2.5) | 5.2 (2.6) | χ²(2) = 40.492 | 1-2, 1-3, 2-3 |
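The Friedman test in the table ranks each participant's scores across the k = 3 conditions and compares the per-condition rank sums R_j using the statistic chi-square_F = 12 / (n k (k + 1)) * sum(R_j^2) - 3 n (k + 1), with k - 1 = 2 degrees of freedom, which matches the "(2)" reported for every measure. The pure-Python sketch below computes this textbook statistic on made-up ratings; it illustrates the formula and is not the authors' analysis script, and it deliberately omits the tie correction.

```python
def rank_row(values):
    """Rank one participant's scores (1 = lowest), giving tied scores their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j to the end of the run of values tied with values[order[i]].
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for m in range(i, j + 1):
            ranks[order[m]] = average_rank
        i = j + 1
    return ranks


def friedman_chi_square(data):
    """Friedman chi-square statistic, without the tie correction.

    data holds one row per participant; each row has that participant's
    score under each of the k conditions, in the same condition order.
    Returns (statistic, degrees_of_freedom).
    """
    n = len(data)
    k = len(data[0])
    rank_sums = [0.0] * k
    for row in data:
        for j, rank in enumerate(rank_row(row)):
            rank_sums[j] += rank
    statistic = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    return statistic, k - 1


# Hypothetical toy ratings (NOT the study's data): 4 participants x 3 conditions.
toy = [[5, 4, 6], [4, 3, 6], [5, 4, 7], [6, 5, 7]]
print(friedman_chi_square(toy))  # -> (8.0, 2)
```

For real analyses, SciPy's `scipy.stats.friedmanchisquare` and `scipy.stats.wilcoxon` (the latter for pairwise post hoc comparisons like those in the last column) are standard choices. SciPy's Friedman implementation applies a tie correction, which matters for Likert-type ratings with many ties, so its statistic can be larger than this sketch's; the source does not state whether its reported values were tie-corrected or how post hoc p-values were adjusted. With 2 degrees of freedom, the chi-square critical value at α = .05 is about 5.99, and every statistic in the table exceeds it.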

| Transformation of the safety boundary wall (rows) / Interaction between VR users and bystanders (columns) | Interruption | Coexistence | AR Interaction |
|---|---|---|---|
| Rigid | Rotation: The safety boundary wall rotates to notify the user of an intruder’s direction. Vibration: The safety boundary wall vibrates to notify the user of intrusion events. | Translation: The safety boundary wall shifts its position to free up some activity space for bystanders. Vibration: The safety boundary wall varies its vibration intensity to convey the magnitude of real-world noise. | Translation: Bystanders push a VR user’s safety boundary wall to make room for their own movement during spatial conflicts. |
| Non-rigid | Breaking: The safety boundary wall breaks to notify the user of intrusion events. | Height: Changes in the safety boundary wall’s height indicate the proximity of bystanders. Scale: During spatial conflicts, the safety boundary wall scales up or down to adjust the activity area [27]. (*) Deformation: During spatial conflicts, the safety boundary wall deforms to alter the activity area [25]. (*) | Deformation: Bystanders can deform the safety boundary wall by squeezing it with their hands or bodies. Breaking: When bystanders touch the safety boundary wall with their hands or bodies, the wall breaks to remind them that they are entering the VR user’s activity area. |
| Texture | Color: The safety boundary wall changes color to signal intrusion events. | Blinking: The safety boundary wall blinks to indicate that a bystander is approaching. Color: A darker color of the safety boundary wall indicates closer bystanders. Transparency: The section of the safety boundary wall nearest a bystander is displayed to indicate the bystander’s path. Texture mapping: Crack textures appear on the safety boundary wall to represent surrounding noise. | Color: The color of the safety boundary wall represents the current status of the VR user. Transparency: Changes in the transparency of the safety boundary wall indicate whether the VR user is demoing VR to bystanders. Texture mapping: Changes in the texture mapping of the safety boundary wall indicate whether the VR user is demoing VR. |
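Several cues in the design-space table reduce to a mapping from a bystander's position relative to the VR user onto wall parameters: height and darkness track proximity (Coexistence), and rotation points toward an intruder (Interruption). The Python sketch below shows one such mapping under loudly stated assumptions: the distance thresholds, height range, linear ramp, the choice that the wall grows taller as a bystander approaches, and the yaw convention are all hypothetical, since the table only says that height and color change with proximity and that rotation conveys direction.

```python
import math
from dataclasses import dataclass

# Hypothetical tuning constants; the design space does not specify any values.
NEAR_M = 0.5          # at or inside this distance the cue is at full strength
FAR_M = 3.0           # at or beyond this distance the cue is at rest
MIN_HEIGHT_M = 0.3    # wall height when no bystander is near
MAX_HEIGHT_M = 2.5    # wall height when a bystander is at NEAR_M or closer
MIN_BRIGHTNESS = 0.2  # darkest color multiplier (1.0 = base color)


@dataclass
class WallCue:
    height_m: float    # height cue (non-rigid x coexistence)
    brightness: float  # color cue, darker means closer (texture x coexistence)
    yaw_deg: float     # rotation cue toward the intruder (rigid x interruption)


def proximity(distance_m: float) -> float:
    """Linearly map distance to [0, 1], where 1 means the bystander is close."""
    t = (FAR_M - distance_m) / (FAR_M - NEAR_M)
    return max(0.0, min(1.0, t))


def wall_cue(user_xz: tuple[float, float], bystander_xz: tuple[float, float]) -> WallCue:
    """Derive wall parameters from floor-plane (x, z) positions of user and bystander."""
    dx = bystander_xz[0] - user_xz[0]
    dz = bystander_xz[1] - user_xz[1]
    p = proximity(math.hypot(dx, dz))
    height = MIN_HEIGHT_M + p * (MAX_HEIGHT_M - MIN_HEIGHT_M)
    brightness = 1.0 - p * (1.0 - MIN_BRIGHTNESS)
    # Assumed convention: yaw measured from +z toward +x (0 deg = +z, 90 deg = +x).
    yaw = math.degrees(math.atan2(dx, dz))
    return WallCue(height, brightness, yaw)


if __name__ == "__main__":
    print(wall_cue((0.0, 0.0), (1.0, 1.0)))
```

A clamped linear ramp keeps the cue at rest for distant bystanders and saturated once someone is within arm's reach; a real system might prefer a smoothed or eased curve to avoid abrupt changes that could break immersion.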

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qin, H.; Qin, Y.; Su, J.; Tian, Y. Exploring Metaphorical Transformations of a Safety Boundary Wall in Virtual Reality. Sensors 2024, 24, 3187. https://doi.org/10.3390/s24103187


Chicago/Turabian style: Qin, Haozhao, Yechang Qin, Jianchun Su, and Yang Tian. 2024. "Exploring Metaphorical Transformations of a Safety Boundary Wall in Virtual Reality" Sensors 24, no. 10: 3187. https://doi.org/10.3390/s24103187