Application of Laser Systems for Detection and Ranging in the Modern Road Transportation and Maritime Sector

Abstract

1. Introduction

- Introduction

- Lidar System

- Application of Lidar System in Road Transportation

- Application of the Lidar System in the Maritime Sector

- Challenges and Future Trends

- Conclusions

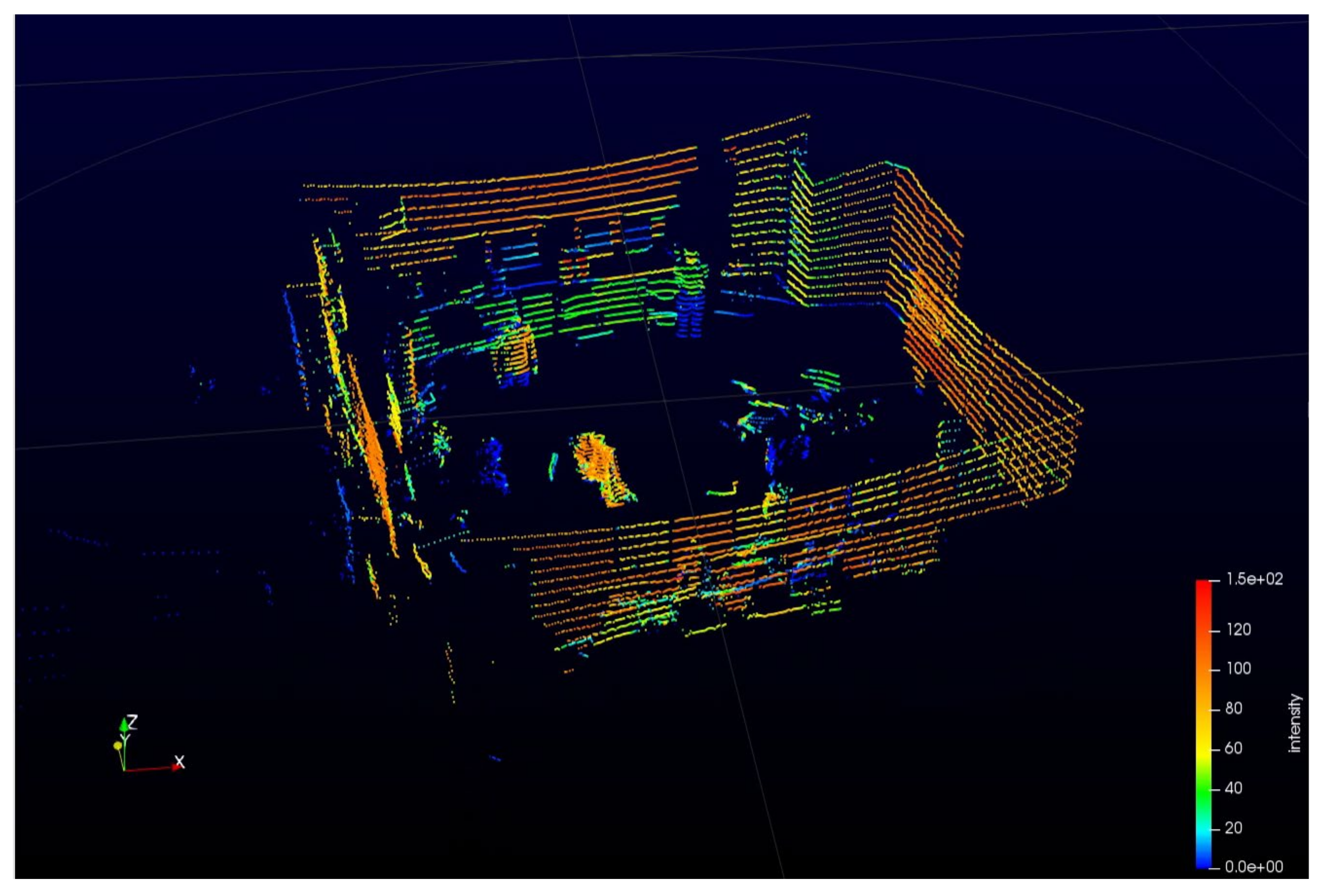

2. Lidar System

2.1. Types of Lidar Systems

2.1.1. Mechanical Lidar

2.1.2. Solid-State Lidar

2.2. Measurement Principles

2.2.1. Pulsed Lidar
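In pulsed (time-of-flight, ToF) lidar, the range follows from the round-trip travel time Δt of a short laser pulse, R = c·Δt/2. The sketch below illustrates this relation and the related limit set by the pulse repetition frequency (PRF). It assumes propagation at the vacuum speed of light, ignores the refractive index of air, and assumes only one pulse is in flight at a time; the function names are illustrative only.

```python
# Speed of light in vacuum (m/s); the small correction for air is ignored here.
C = 299_792_458.0

def pulsed_range(round_trip_time_s: float) -> float:
    """Range from the measured round-trip time of a laser pulse: R = c * t / 2."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return C * round_trip_time_s / 2.0

def max_unambiguous_range(pulse_repetition_hz: float) -> float:
    """Largest range whose echo returns before the next pulse is fired: c / (2 * PRF)."""
    return C / (2.0 * pulse_repetition_hz)

# An echo arriving 1 microsecond after emission corresponds to about 150 m.
print(round(pulsed_range(1e-6), 2))  # prints 149.9
```

The same relation shows why timing resolution matters: a 1 ns error in the measured round-trip time corresponds to roughly 15 cm of range error.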

2.2.2. AMCW Lidar
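In amplitude modulated continuous wave (AMCW) lidar, the range is obtained from the phase shift Δφ between the emitted and received modulation envelopes, R = c·Δφ/(4π·f_m), where f_m is the modulation frequency. Because the phase repeats every full cycle, a single modulation frequency can only resolve ranges up to c/(2·f_m). The following sketch assumes a single modulation frequency and an ideal phase detector; the 10 MHz value is a hypothetical example.

```python
import math

C = 299_792_458.0  # speed of light in vacuum (m/s)

def amcw_range(phase_shift_rad: float, modulation_hz: float) -> float:
    """Range from the phase shift between emitted and received modulation envelopes.

    R = c * dphi / (4 * pi * f_m); the result is only unique modulo c / (2 * f_m).
    """
    # Wrap the phase into [0, 2*pi) because a phase detector cannot count whole cycles.
    wrapped = phase_shift_rad % (2.0 * math.pi)
    return C * wrapped / (4.0 * math.pi * modulation_hz)

def amcw_ambiguity_range(modulation_hz: float) -> float:
    """Maximum unambiguous range for a single modulation frequency: c / (2 * f_m)."""
    return C / (2.0 * modulation_hz)

# With 10 MHz modulation, a quarter-cycle (pi/2) shift corresponds to about 3.75 m,
# and measured ranges repeat every ~15 m.
print(round(amcw_range(math.pi / 2, 10e6), 3), round(amcw_ambiguity_range(10e6), 3))
# prints 3.747 14.99
```

The trade-off is visible in the two functions: a higher f_m gives finer phase-to-range resolution but a shorter unambiguous range.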

2.2.3. FMCW Lidar
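In frequency modulated continuous wave (FMCW) lidar, the optical frequency is swept linearly by a bandwidth B over a chirp duration T, and mixing the echo with the local signal produces a beat frequency f_b proportional to range, R = c·f_b·T/(2B). With a triangular (up/down) chirp, the Doppler shift f_d separates from the range term, giving radial velocity v = λ·f_d/2. The sketch below assumes a perfectly linear chirp and the sign convention stated in the docstring (conventions differ between systems); the 1 GHz, 10 µs, and 1550 nm values are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)

def fmcw_range(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Range of a static target for a linear chirp: R = c * f_b * T / (2 * B)."""
    slope = bandwidth_hz / chirp_s          # chirp rate in Hz/s
    return C * beat_hz / (2.0 * slope)

def fmcw_range_velocity(f_up: float, f_down: float, bandwidth_hz: float,
                        chirp_s: float, wavelength_m: float):
    """Range and radial velocity from the beat frequencies of a triangular chirp.

    Assumed convention: an approaching target lowers the up-chirp beat and raises
    the down-chirp beat, so f_up = f_r - f_d and f_down = f_r + f_d.
    """
    f_r = (f_up + f_down) / 2.0             # range-induced beat frequency
    f_d = (f_down - f_up) / 2.0             # Doppler shift
    velocity = wavelength_m * f_d / 2.0     # positive = approaching target
    return fmcw_range(f_r, bandwidth_hz, chirp_s), velocity

# Hypothetical 1 GHz sweep over 10 us with a 1550 nm source:
r, v = fmcw_range_velocity(90e6, 110e6, 1e9, 10e-6, 1550e-9)
print(round(r, 1), round(v, 2))  # roughly 149.9 m and 7.75 m/s
```

Measuring range and velocity from the same pair of beat frequencies is one reason FMCW is attractive for automotive use, at the cost of requiring a highly linear, coherent laser source.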

2.3. Parameters
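Two simple relations link commonly quoted lidar parameters to the density of the resulting point cloud: the gap between adjacent returns at range R grows approximately as R·Δθ (arc length, with Δθ in radians), and a spinning multi-channel sensor produces roughly channels × (360°/Δθ) × rotation rate points per second. The sketch below assumes single returns and uniform horizontal sampling; the 16-channel, 0.2°, 10 Hz values are hypothetical, and sensors recording multiple returns per pulse report correspondingly more points.

```python
import math

def point_spacing(range_m: float, angular_res_deg: float) -> float:
    """Approximate gap between adjacent returns at a given range (arc length R * dtheta)."""
    return range_m * math.radians(angular_res_deg)

def points_per_second(channels: int, horizontal_res_deg: float, rotation_hz: float) -> float:
    """Single-return point rate of a spinning multi-channel lidar."""
    return channels * (360.0 / horizontal_res_deg) * rotation_hz

# Hypothetical sensor: 16 channels, 0.2 deg horizontal resolution, 10 Hz rotation.
print(round(point_spacing(50.0, 0.2), 3))   # ~0.175 m between returns at 50 m
print(points_per_second(16, 0.2, 10.0))     # ~288,000 points per second
```

The first relation explains why small or distant objects are represented by only a few points, which directly affects detection performance at long range.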

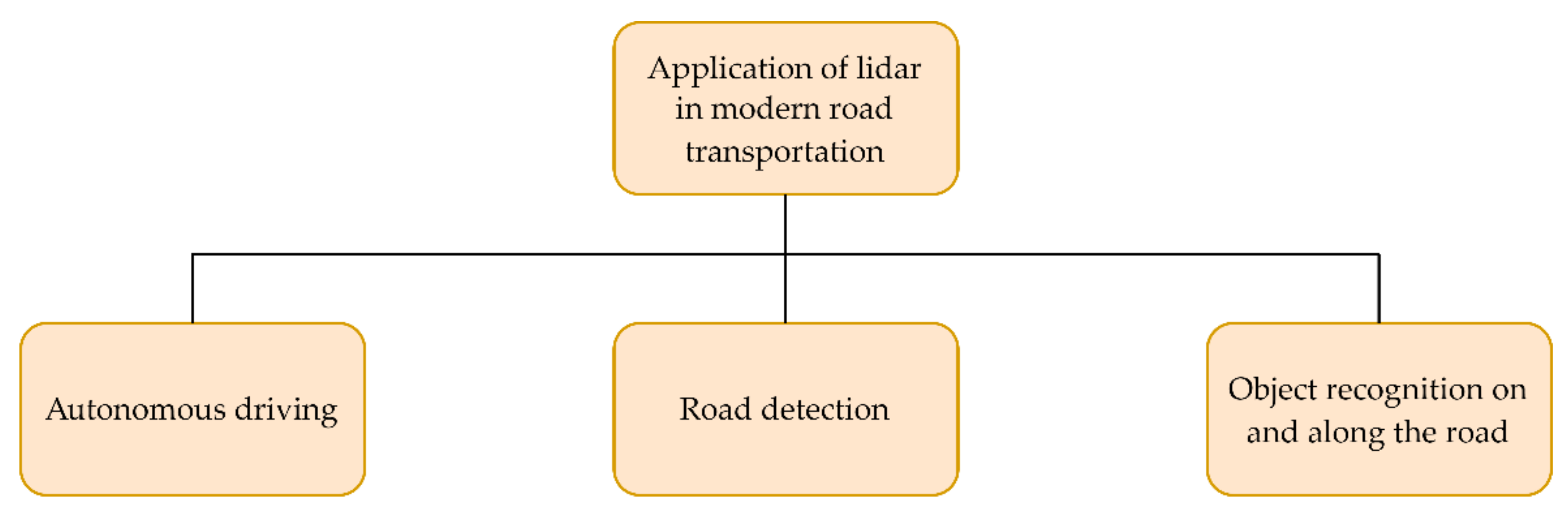

3. Application of Lidar System in Road Transportation

3.1. Application in Autonomous Driving

3.2. Road Detection
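Several road detection approaches, such as the FCN-based method in [30], first convert the 3D point cloud into a top-view (bird's-eye) grid so that the problem can be handled with 2D image-processing tools at a single scale. The sketch below shows only this rasterization step under simplifying assumptions: x points forward and y to the left of the sensor, the region of interest and 0.1 m cell size are hypothetical, and the per-cell statistics (point count and maximum height) are a simple stand-in rather than the exact encoding used in [30].

```python
from collections import defaultdict

def top_view_grid(points, x_range=(0.0, 40.0), y_range=(-10.0, 10.0), cell_m=0.1):
    """Rasterize (x, y, z) lidar points into a bird's-eye-view grid.

    Returns a dict mapping (row, col) -> (point count, max height) for occupied cells,
    a simple stand-in for the per-cell features a segmentation network might consume.
    """
    cells = defaultdict(lambda: [0, float("-inf")])
    for x, y, z in points:
        # Drop points outside the (hypothetical) region of interest in front of the vehicle.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        row = int((x - x_range[0]) // cell_m)
        col = int((y - y_range[0]) // cell_m)
        cell = cells[(row, col)]
        cell[0] += 1                 # number of points falling into this cell
        cell[1] = max(cell[1], z)    # highest point in this cell
    return {key: (count, zmax) for key, (count, zmax) in cells.items()}

# Two nearby ground returns share one cell; the point 50 m ahead is outside the grid.
grid = top_view_grid([(5.03, 0.02, -1.6), (5.06, 0.07, -1.5), (50.0, 0.0, 0.0)])
print(grid)  # {(50, 100): (2, -1.5)}
```

In a full pipeline, such grids would be stacked into multi-channel images and passed to a segmentation network that labels each cell as road or non-road.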

3.3. Object Recognition on the Road and along the Road
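Roadside object detection pipelines such as [49] group the remaining foreground points into candidate objects with density-based spatial clustering of applications with noise (DBSCAN), introduced by Ester et al. in the reference list. The sketch below is a naive O(n²) version of DBSCAN itself, not the full pipeline of [49]; the eps and min_pts values in the example are hypothetical. Practical implementations use a spatial index, for example scikit-learn's `sklearn.cluster.DBSCAN` with its `eps` and `min_samples` parameters.

```python
import math

def dbscan(points, eps, min_pts):
    """Naive O(n^2) DBSCAN. Returns one label per point; -1 marks noise."""
    n = len(points)
    labels = [None] * n                      # None = not yet visited

    def neighbors(i):
        # The eps-neighborhood includes the point itself, as in the original algorithm.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:             # not a core point; may later become a border point
            labels[i] = -1
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:              # previously marked noise: becomes a border point
                labels[j] = cluster
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:  # j is a core point: expand the cluster through it
                queue.extend(j_neighbors)
    return labels

# Two compact groups (e.g., two road users seen from above) and one isolated return.
pts = [(0, 0), (0.2, 0), (0, 0.2), (5, 5), (5.2, 5), (5, 5.2), (10, 0)]
print(dbscan(pts, eps=0.5, min_pts=3))  # [0, 0, 0, 1, 1, 1, -1]
```

DBSCAN does not require the number of objects in advance and labels sparse returns as noise, which suits lidar scenes with a varying number of road users.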

4. Application of the Lidar System in the Maritime Sector

4.1. Autonomous Navigation and Object Detection on Seas and Oceans

4.2. Monitoring Ocean Ecosystems

4.3. Mapping Coastal Areas

4.4. Other Applications

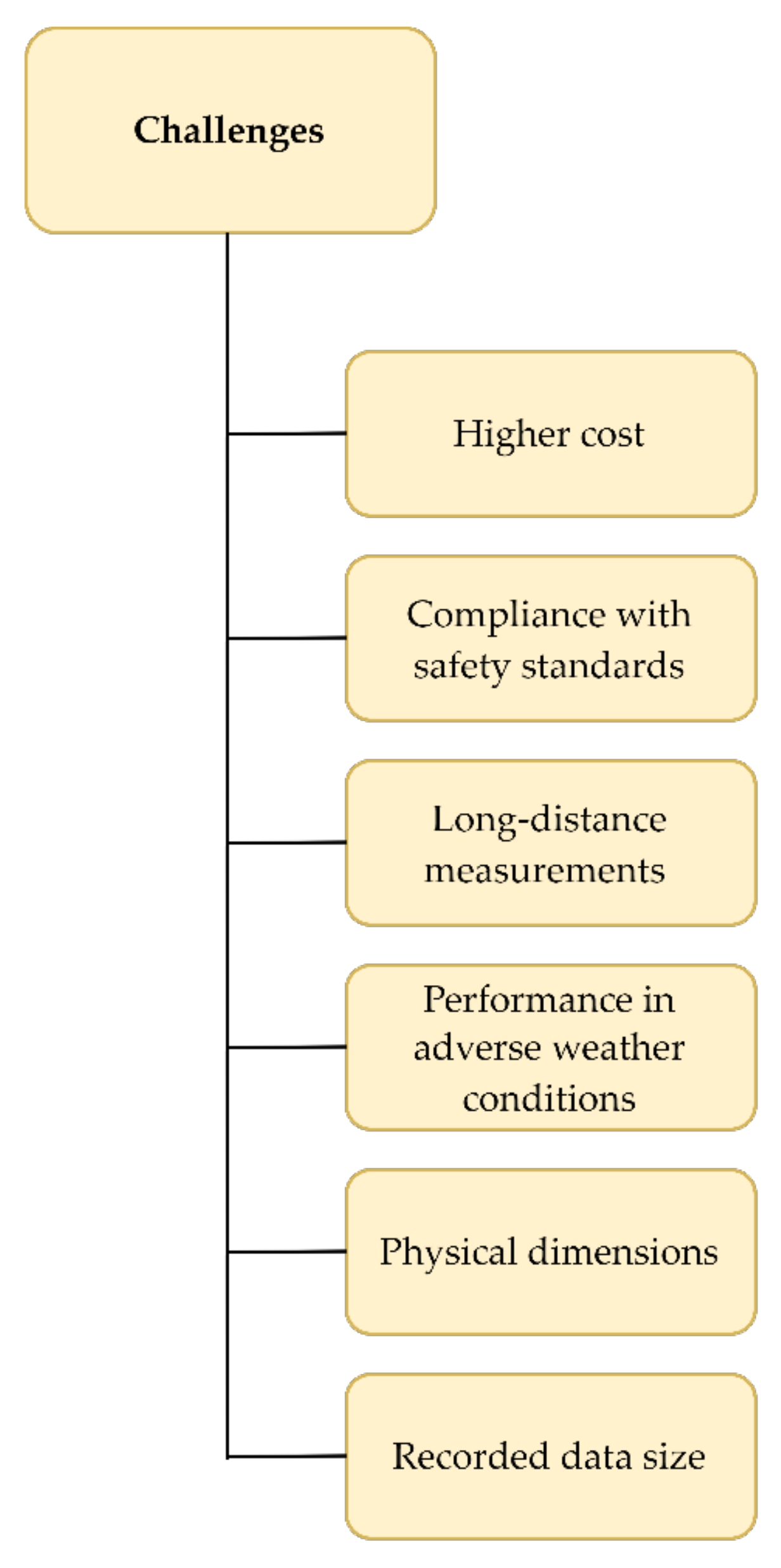

5. Challenges and Future Trends

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| 2D | two-dimensional |
| 3D | three-dimensional |
| ADAS | Advanced Driver Assistance System |
| AI | artificial intelligence |
| ALS | airborne laser scanning |
| AMCW | amplitude modulated continuous wave |
| APD | avalanche photodiode |
| BIM | building information modeling |
| BP-ANN | backpropagation artificial neural network |
| CMOS | complementary metal-oxide semiconductor |
| CNN | convolutional neural network |
| DAC | digital-to-analog converter |
| DBSCAN | density-based spatial clustering of applications with noise |
| DM-ISDNN | dual-modal instance segmentation deep neural network |
| E2E | end-to-end |
| FCN | fully convolutional neural network |
| FMCW | frequency modulated continuous wave |
| FOV | field of view |
| FRPDM | free-resolution probability distributions map |
| GIS | geographic information system |
| GMM | Gaussian mixture model |
| GNSS | Global Navigation Satellite System |
| GPS | Global Positioning System |
| GPU | graphics processing unit |
| HMM | hidden Markov model |
| IDW | inverse distance weighted |
| IMM | interacting multiple model |
| IMU | inertial measurement unit |
| KITTI | Karlsruhe Institute of Technology and Toyota Technological Institute |
| lidar | light detection and ranging |
| LoDNN | Lidar-only Deep Neural Network |
| MEMS | micro-electromechanical system |
| MLS | mobile laser scanning |
| MPE | maximum permissible exposure |
| MSTV | multiscale tensor voting |
| OPA | optical phased array |
| PMT | photomultiplier tube |
| radar | radio detection and ranging |
| RGB | red-green-blue |
| SiPM | silicon photomultiplier |
| SLAM | simultaneous localization and mapping |
| SNR | signal-to-noise ratio |
| SPAD | single-photon avalanche photodiode |
| SVM | support vector machine |
| TDC | time-to-digital converter |
| TLS | terrestrial laser scanning |
| ToF | time of flight |
| UAV | unmanned aerial vehicle |
| WND | weighted neighboring difference |
| WSMLR | weakly supervised metric learning |

References

1. Li, Y.; Ibanez-Guzman, J. Lidar for Autonomous Driving: The Principles, Challenges, and Trends for Automotive Lidar and Perception Systems. IEEE Signal Process. Mag. 2020, 37, 50–61.

2. Frost & Sullivan. LiDAR: Driving the Future of Autonomous Navigation; Frost & Sullivan: Mountain View, CA, USA, 2016; pp. 1–30.

3. Fernandez Diaz, J.C.; Carter, W.E.; Shrestha, R.L.; Glennie, C.L. Lidar Remote Sensing. In Handbook of Satellite Applications; Pelton, J.N., Madry, S., Camacho-Lara, S., Eds.; Springer: New York, NY, USA, 2013; pp. 757–808. ISBN 978-1-4419-7671-0.

4. Royo, S.; Ballesta-Garcia, M. An Overview of Lidar Imaging Systems for Autonomous Vehicles. Appl. Sci. 2019, 9, 4093.

5. Yoo, H.W.; Druml, N.; Brunner, D.; Schwarzl, C.; Thurner, T.; Hennecke, M.; Schitter, G. MEMS-Based Lidar for Autonomous Driving. Elektrotechnik Inf. 2018, 135, 408–415.

6. Stann, B.L.; Dammann, J.F.; Giza, M.M. Progress on MEMS-Scanned Ladar. In Proceedings of the Laser Radar Technology and Applications XXI, Baltimore, MD, USA, 19–20 April 2016; Society of Photo-Optical Instrumentation Engineers (SPIE): Baltimore, MD, USA, 2016; Volume 9832.

7. Khader, M.; Cherian, S. An Introduction to Automotive LIDAR; Texas Instruments Incorporated: Dallas, TX, USA, 2020.

8. McManamon, P. Field Guide to Lidar; SPIE Field Guides; Society of Photo-Optical Instrumentation Engineers (SPIE): Bellingham, WA, USA, 2015.

9. Behroozpour, B.; Sandborn, P.A.M.; Wu, M.C.; Boser, B.E. Lidar System Architectures and Circuits. IEEE Commun. Mag. 2017, 55, 135–142.

10. Jha, A.; Azcona, F.J.; Royo, S. Frequency-Modulated Optical Feedback Interferometry for Nanometric Scale Vibrometry. IEEE Photonics Technol. Lett. 2016, 28, 1217–1220.

11. Velodyne LiDAR. Velodyne LiDAR Puck; Velodyne LiDAR, Inc.: San Jose, CA, USA, 2019.

12. Velodyne LiDAR. Velodyne LiDAR Alpha Prime; Velodyne LiDAR, Inc.: San Jose, CA, USA, 2021.

13. RIEGL Laser Measurement Systems GmbH. RIEGL VUX-1HA; RIEGL Laser Measurement Systems GmbH: Horn, Austria, 2021.

14. Leica Geosystems. Leica ScanStation P50; Leica Geosystems AG: Heerbrugg, Switzerland, 2017.

15. Brnelić, A. Application of Laser Systems for Object Detection in the Modern Transportation Sector. Master’s Thesis, University of Rijeka, Faculty of Maritime Studies, Rijeka, Croatia, 2021.

16. Velodyne LiDAR. VLP-16 User Manual; Velodyne LiDAR, Inc.: San Jose, CA, USA, 2019.

17. Wang, Y.; Chen, Q.; Zhu, Q.; Liu, L.; Li, C.; Zheng, D. A Survey of Mobile Laser Scanning Applications and Key Techniques over Urban Areas. Remote Sens. 2019, 11, 1540.

18. Williams, K.; Olsen, M.J.; Roe, G.V.; Glennie, C. Synthesis of Transportation Applications of Mobile LIDAR. Remote Sens. 2013, 5, 4652–4692.

19. Vosselman, G.; Maas, H.-G. Airborne and Terrestrial Laser Scanning; Whittles Publishing: Caithness, UK, 2010.

20. Guan, H.; Li, J.; Cao, S.; Yu, Y. Use of Mobile LiDAR in Road Information Inventory: A Review. Int. J. Image Data Fusion 2016, 7, 219–242.

21. Hecht, J. Lidar for Self-Driving Cars. Opt. Photonics News 2018, 29, 26–33.

22. Gargoum, S.; El-Basyouny, K. Automated Extraction of Road Features Using LiDAR Data: A Review of LiDAR Applications in Transportation. In Proceedings of the 2017 4th International Conference on Transportation Information and Safety (ICTIS), Banff, AB, Canada, 8–10 August 2017; pp. 563–574.

23. Zou, Q.; Sun, Q.; Chen, L.; Nie, B.; Li, Q. A Comparative Analysis of LiDAR SLAM-Based Indoor Navigation for Autonomous Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6907–6921.

24. Heinzler, R.; Schindler, P.; Seekircher, J.; Ritter, W.; Stork, W. Weather Influence and Classification with Automotive Lidar Sensors. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 1527–1534.

25. Peynot, T.; Underwood, J.; Scheding, S. Towards Reliable Perception for Unmanned Ground Vehicles in Challenging Conditions. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 11–15 October 2009; pp. 1170–1176.

26. Miclea, R.-C.; Dughir, C.; Alexa, F.; Sandru, F.; Silea, I. Laser and LIDAR in a System for Visibility Distance Estimation in Fog Conditions. Sensors 2020, 20, 6322.

27. Li, Y.; Duthon, P.; Colomb, M.; Ibanez-Guzman, J. What Happens for a ToF LiDAR in Fog? IEEE Trans. Intell. Transp. Syst. 2021, 22, 6670–6681.

28. Lin, S.-L.; Wu, B.-H. Application of Kalman Filter to Improve 3D LiDAR Signals of Autonomous Vehicles in Adverse Weather. Appl. Sci. 2021, 11, 3018.

29. Wojtanowski, J.; Zygmunt, M.; Kaszczuk, M.; Mierczyk, Z.; Muzal, M. Comparison of 905 Nm and 1550 Nm Semiconductor Laser Rangefinders’ Performance Deterioration Due to Adverse Environmental Conditions. Opto-Electron. Rev. 2014, 22, 183–190.

30. Caltagirone, L.; Scheidegger, S.; Svensson, L.; Wahde, M. Fast LIDAR-Based Road Detection Using Fully Convolutional Neural Networks. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 1019–1024.

31. Wu, J.; Xu, H.; Zhao, J. Automatic Lane Identification Using the Roadside LiDAR Sensors. IEEE Intell. Transp. Syst. Mag. 2020, 12, 25–34.

32. Caltagirone, L.; Bellone, M.; Svensson, L.; Wahde, M. LIDAR–Camera Fusion for Road Detection Using Fully Convolutional Neural Networks. Robot. Auton. Syst. 2019, 111, 125–131.

33. Barazzetti, L.; Previtali, M.; Scaioni, M. Roads Detection and Parametrization in Integrated BIM-GIS Using LiDAR. Infrastructures 2020, 5, 55.

34. Chen, L.; Yang, J.; Kong, H. Lidar-Histogram for Fast Road and Obstacle Detection. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1343–1348.

35. Zhang, Y.; Wang, J.; Wang, X.; Dolan, J.M. Road-Segmentation-Based Curb Detection Method for Self-Driving via a 3D-LiDAR Sensor. IEEE Trans. Intell. Transp. Syst. 2018, 19, 3981–3991.

36. Kumar, P.; McElhinney, C.P.; Lewis, P.; McCarthy, T. An Automated Algorithm for Extracting Road Edges from Terrestrial Mobile LiDAR Data. ISPRS J. Photogramm. Remote Sens. 2013, 85, 44–55.

37. Wolcott, R.W.; Eustice, R.M. Visual Localization within LIDAR Maps for Automated Urban Driving. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 176–183.

38. de Miguel, M.Á.; García, F.; Armingol, J.M. Improved LiDAR Probabilistic Localization for Autonomous Vehicles Using GNSS. Sensors 2020, 20, 3145.

39. Ilci, V.; Toth, C. High Definition 3D Map Creation Using GNSS/IMU/LiDAR Sensor Integration to Support Autonomous Vehicle Navigation. Sensors 2020, 20, 899.

40. Hata, A.Y.; Wolf, D.F. Feature Detection for Vehicle Localization in Urban Environments Using a Multilayer LIDAR. IEEE Trans. Intell. Transp. Syst. 2016, 17, 420–429.

41. Kim, K.-W.; Jee, G.-I. Free-Resolution Probability Distributions Map-Based Precise Vehicle Localization in Urban Areas. Sensors 2020, 20, 1220.

42. Wang, H.; Wang, Z.; Lin, L.; Xu, F.; Yu, J.; Liang, H. Optimal Vehicle Pose Estimation Network Based on Time Series and Spatial Tightness with 3D LiDARs. Remote Sens. 2021, 13, 4123.

43. Xue, H.; Fu, H.; Dai, B. IMU-Aided High-Frequency Lidar Odometry for Autonomous Driving. Appl. Sci. 2019, 9, 1506.

44. Yang, B.; Fang, L.; Li, Q.; Li, J. Automated Extraction of Road Markings from Mobile Lidar Point Clouds. Photogramm. Eng. Remote Sens. 2012, 78, 331–338.

45. Guan, H.; Li, J.; Yu, Y.; Ji, Z.; Wang, C. Using Mobile LiDAR Data for Rapidly Updating Road Markings. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2457–2466.

46. Landa, J.; Prochazka, D. Automatic Road Inventory Using LiDAR. Procedia Econ. Financ. 2014, 12, 363–370.

47. Tan, M.; Wang, B.; Wu, Z.; Wang, J.; Pan, G. Weakly Supervised Metric Learning for Traffic Sign Recognition in a LIDAR-Equipped Vehicle. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1415–1427.

48. Gargoum, S.; El-Basyouny, K.; Sabbagh, J.; Froese, K. Automated Highway Sign Extraction Using Lidar Data. Transp. Res. Rec. 2017, 2643, 1–8.

49. Zhao, J.; Xu, H.; Liu, H.; Wu, J.; Zheng, Y.; Wu, D. Detection and Tracking of Pedestrians and Vehicles Using Roadside LiDAR Sensors. Transp. Res. Part C Emerg. Technol. 2019, 100, 68–87.

50. Wu, J. An Automatic Procedure for Vehicle Tracking with a Roadside LiDAR Sensor. ITE J. 2018, 88, 32–37.

51. Li, B.; Zhang, T.; Xia, T. Vehicle Detection from 3D Lidar Using Fully Convolutional Network. In Proceedings of the Robotics: Science and Systems XII, Ann Arbor, MI, USA, 18 June 2016; Volume 12.

52. Asvadi, A.; Garrote, L.; Premebida, C.; Peixoto, P.; Nunes, J.U. Multimodal Vehicle Detection: Fusing 3D-LIDAR and Color Camera Data. Pattern Recognit. Lett. 2018, 115, 20–29.

53. Schlosser, J.; Chow, C.K.; Kira, Z. Fusing LIDAR and Images for Pedestrian Detection Using Convolutional Neural Networks. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 2198–2205.

54. Gao, H.; Cheng, B.; Wang, J.; Li, K.; Zhao, J.; Li, D. Object Classification Using CNN-Based Fusion of Vision and LIDAR in Autonomous Vehicle Environment. IEEE Trans. Ind. Inform. 2018, 14, 4224–4231.

55. Nowak, T.; Ćwian, K.; Skrzypczyński, P. Real-Time Detection of Non-Stationary Objects Using Intensity Data in Automotive LiDAR SLAM. Sensors 2021, 21, 6781.

56. Geng, K.; Dong, G.; Yin, G.; Hu, J. Deep Dual-Modal Traffic Objects Instance Segmentation Method Using Camera and LIDAR Data for Autonomous Driving. Remote Sens. 2020, 12, 3274.

57. Shahian Jahromi, B.; Tulabandhula, T.; Cetin, S. Real-Time Hybrid Multi-Sensor Fusion Framework for Perception in Autonomous Vehicles. Sensors 2019, 19, 4357.

58. Kang, D.; Wong, A.; Lee, B.; Kim, J. Real-Time Semantic Segmentation of 3D Point Cloud for Autonomous Driving. Electronics 2021, 10, 1960.

59. Park, M.; Kim, H.; Park, S. A Convolutional Neural Network-Based End-to-End Self-Driving Using LiDAR and Camera Fusion: Analysis Perspectives in a Real-World Environment. Electronics 2021, 10, 2608.

60. Wang, H.; Wang, B.; Liu, B.; Meng, X.; Yang, G. Pedestrian Recognition and Tracking Using 3D LiDAR for Autonomous Vehicle. Robot. Auton. Syst. 2017, 88, 71–78.

61. Thakur, R. Scanning LIDAR in Advanced Driver Assistance Systems and Beyond: Building a Road Map for next-Generation LIDAR Technology. IEEE Consum. Electron. Mag. 2016, 5, 48–54.

62. Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140.

63. Filgueira, A.; González-Jorge, H.; Lagüela, S.; Díaz-Vilariño, L.; Arias, P. Quantifying the Influence of Rain in LiDAR Performance. Measurement 2017, 95, 143–148.

64. Fritsch, J.; Kühnl, T.; Geiger, A. A New Performance Measure and Evaluation Benchmark for Road Detection Algorithms. In Proceedings of the 16th International IEEE Conference on Intelligent Transportation Systems (ITSC 2013), The Hague, The Netherlands, 6–9 October 2013; pp. 1693–1700.

65. RIEGL Laser Measurement Systems GmbH. RIEGL VMX-450; RIEGL Laser Measurement Systems GmbH: Horn, Austria, 2015.

66. Miyajima, C.; Takeda, K. Driver-Behavior Modeling Using On-Road Driving Data: A New Application for Behavior Signal Processing. IEEE Signal Process. Mag. 2016, 33, 14–21.

67. Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27–28 October 2019; pp. 9297–9307.

68. Milioto, A.; Vizzo, I.; Behley, J.; Stachniss, C. RangeNet++: Fast and Accurate LiDAR Semantic Segmentation. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 4213–4220.

69. Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, Portland, OR, USA, 2–4 August 1996; pp. 226–231.

70. Cai, Z.; Vasconcelos, N. Cascade R-CNN: High Quality Object Detection and Instance Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1483–1498.

71. Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of Deep Learning: Concepts, CNN Architectures, Challenges, Applications, Future Directions. J. Big Data 2021, 8, 53.

72. Lopac, N.; Hržić, F.; Vuksanović, I.P.; Lerga, J. Detection of Non-Stationary GW Signals in High Noise from Cohen’s Class of Time–Frequency Representations Using Deep Learning. IEEE Access 2022, 10, 2408–2428.

73. Zhou, X.; Li, Y.; Liang, W. CNN-RNN Based Intelligent Recommendation for Online Medical Pre-Diagnosis Support. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021, 18, 912–921.

74. Alhichri, H.; Alswayed, A.S.; Bazi, Y.; Ammour, N.; Alajlan, N.A. Classification of Remote Sensing Images Using EfficientNet-B3 CNN Model with Attention. IEEE Access 2021, 9, 14078–14094.

75. Liu, H.; Jurdana, I.; Lopac, N.; Wakabayashi, N. BlueNavi: A Microservices Architecture-Styled Platform Providing Maritime Information. Sustainability 2022, 14, 2173.

76. Thombre, S.; Zhao, Z.; Ramm-Schmidt, H.; Vallet García, J.M.; Malkamäki, T.; Nikolskiy, S.; Hammarberg, T.; Nuortie, H.; Bhuiyan, H.M.Z.; Särkkä, S.; et al. Sensors and AI Techniques for Situational Awareness in Autonomous Ships: A Review. IEEE Trans. Intell. Transp. Syst. 2022, 23, 64–83.

77. Chen, C.; Li, Y. Ship Berthing Information Extraction System Using Three-Dimensional Light Detection and Ranging Data. J. Mar. Sci. Eng. 2021, 9, 747.

78. Hu, B.; Liu, X.; Jing, Q.; Lyu, H.; Yin, Y. Estimation of Berthing State of Maritime Autonomous Surface Ships Based on 3D LiDAR. Ocean. Eng. 2022, 251, 111131.

79. Perkovič, M.; Gucma, L.; Bilewski, M.; Muczynski, B.; Dimc, F.; Luin, B.; Vidmar, P.; Lorenčič, V.; Batista, M. Laser-Based Aid Systems for Berthing and Docking. J. Mar. Sci. Eng. 2020, 8, 346.

80. Jindal, M.; Jha, A.; Cenkeramaddi, L.R. Bollard Segmentation and Position Estimation from Lidar Point Cloud for Autonomous Mooring. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–9.

81. Zeng, T.; Wang, H.; Sun, X.; Li, H.; Lu, Z.; Tong, F.; Cheng, H.; Zheng, C.; Zhang, M. Dual-Channel LIDAR Searching, Positioning, Tracking and Landing System for Rotorcraft from Ships at Sea. J. Navig. 2022, 1–27.

82. Wessman, M. Object Detection Using LIDAR in Maritime Scenarios. Master’s Thesis, Abo Akademi University, Turku, Finland, 2018.

83. Thompson, D.J. Maritime Object Detection, Tracking, and Classification Using Lidar and Vision-Based Sensor Fusion. Master’s Thesis, Embry-Riddle Aeronautical University, Daytona Beach, FL, USA, 2017.

84. Thompson, D.; Coyle, E.; Brown, J. Efficient LiDAR-Based Object Segmentation and Mapping for Maritime Environments. IEEE J. Ocean. Eng. 2019, 44, 352–362.

85. Churnside, J.H. Review of Profiling Oceanographic Lidar. Opt. Eng. 2013, 53, 051405.

86. Pittman, S.J.; Costa, B.; Wedding, L.M. LiDAR Applications. In Coral Reef Remote Sensing: A Guide for Mapping, Monitoring and Management; Goodman, J.A., Purkis, S.J., Phinn, S.R., Eds.; Springer: Dordrecht, The Netherlands, 2013; pp. 145–174. ISBN 978-90-481-9292-2.

87. Hostetler, C.A.; Behrenfeld, M.J.; Hu, Y.; Hair, J.W.; Schulien, J.A. Spaceborne Lidar in the Study of Marine Systems. Annu. Rev. Mar. Sci. 2018, 10, 121–147.

88. Klemas, V. Beach Profiling and LIDAR Bathymetry: An Overview with Case Studies. J. Coast. Res. 2011, 27, 1019–1028.

89. Smeeckaert, J.; Mallet, C.; David, N.; Chehata, N.; Ferraz, A. Large-Scale Classification of Water Areas Using Airborne Topographic Lidar Data. Remote Sens. Environ. 2013, 138, 134–148.

90. Lin, Y.-C.; Cheng, Y.-T.; Zhou, T.; Ravi, R.; Hasheminasab, S.M.; Flatt, J.E.; Troy, C.; Habib, A. Evaluation of UAV LiDAR for Mapping Coastal Environments. Remote Sens. 2019, 11, 2893.

91. Ge, Z.; Shi, H.; Mei, X.; Dai, Z.; Li, D. Semi-Automatic Recognition of Marine Debris on Beaches. Sci. Rep. 2016, 6, 25759.

92. Wolken-Möhlmann, G.; Gottschall, J.; Lange, B. First Verification Test and Wake Measurement Results Using a SHIP-LIDAR System. Energy Procedia 2014, 53, 146–155.

93. Pichugina, Y.L.; Banta, R.M.; Brewer, W.A.; Sandberg, S.P.; Hardesty, R.M. Doppler Lidar–Based Wind-Profile Measurement System for Offshore Wind-Energy and Other Marine Boundary Layer Applications. J. Appl. Meteorol. Climatol. 2012, 51, 327–349.

94. Jurdana, I.; Lopac, N.; Wakabayashi, N.; Liu, H. Shipboard Data Compression Method for Sustainable Real-Time Maritime Communication in Remote Voyage Monitoring of Autonomous Ships. Sustainability 2021, 13, 8264.

95. Munim, Z.H. Autonomous Ships: A Review, Innovative Applications and Future Maritime Business Models. Supply Chain. Forum Int. J. 2019, 20, 266–279.

96. Felski, A.; Zwolak, K. The Ocean-Going Autonomous Ship—Challenges and Threats. J. Mar. Sci. Eng. 2020, 8, 41.

97. Dujmović, J.; Krljan, T.; Lopac, N.; Žuškin, S. Emphasis on Occupancy Rates in Carbon Emission Comparison for Maritime and Road Passenger Transportation Modes. J. Mar. Sci. Eng. 2022, 10, 459.

98. Lee, P.T.-W.; Kwon, O.K.; Ruan, X. Sustainability Challenges in Maritime Transport and Logistics Industry and Its Way Ahead. Sustainability 2019, 11, 1331.

99. Lopac, N.; Jurdana, I.; Lerga, J.; Wakabayashi, N. Particle-Swarm-Optimization-Enhanced Radial-Basis-Function-Kernel-Based Adaptive Filtering Applied to Maritime Data. J. Mar. Sci. Eng. 2021, 9, 439.

| Reference | Description of Application | Conclusion | |

|---|---|---|---|

| Autonomous driving | [1] | A review of the state-of-the-art lidar technologies and the associated perception algorithms for application in autonomous driving | The limitations and challenges of the lidar technology are presented, as well as the impressive results of the analyzed algorithms |

| [21] | Discussion of the lidar systems’ role in autonomous driving applications | The vital role of monitoring fixed and moving objects in traffic | |

| [22] | A review of lidar applications in automated extraction of road features and a discussion on challenges and future research | Use of lidar for various transportation applications, including on-road (road surface, lane, and road edge), roadside (traffic signs, objects), and geometric (road cross, vertical alignment, pavement condition, sight distance, vertical clearance) information extraction | |

| [23] | Simultaneous localization and mapping (SLAM)-based indoor navigation for autonomous vehicles directly based on the three-dimensional (3D) spatial information from the lidar point cloud data | A comparative analysis of different navigation methods is conducted based on extensive experiments in real environments | |

| [24] | Extensive analysis of automotive lidar performance in adverse weather conditions, such as dense fog and heavy rain | Poor perception and detection of objects during rain and fog; the proposed rain and fog classification method provides satisfactory results | |

| [25] | Testing the lidar system for outdoor unmanned ground vehicles in adverse weather conditions, including rain, dust, and smoke | Signal attenuation due to scattering, reflection, and absorption of light and the reduction of detection distance are identified | |

| [26] | Analysis of the effects of fog conditions on the lidar system for visibility distance estimation for autonomous vehicles on roads | The visibility distances obtained by lidar systems are in the same range as those obtained by human observers; the correlation between the decrease in the optical power and the decrease of the visual acuity in fog conditions is established | |

| [27] | Analysis of the performance of a time-of-flight (ToF) lidar in a fog environment for different fog densities | The relations between the ranging performance and different types of fog are investigated, and a machine learning-based model is developed to predict the minimum fog visibility that allows successful ranging | |

| [28] | Application of Kalman filter and nearby point cloud denoising to reconstruct lidar measurements from autonomous vehicles in adverse weather conditions, including rain, thick smoke, and their combination | The experiments in the 2 × 2 × 0.6 m space show an improved normal weather 3D signal reconstruction from the lidar data in adverse weather conditions, with a 10–30% improvement | |

| [29] | Analysis of the influence of adverse environmental factors on the ToF lidar detection range, considering the 905 nm and 1550 nm laser wavelengths | A significant difference in the performance of the two laser types is identified—a 905 nm laser is recommended for poor environmental conditions | |

| Road detection | [30] | Deep learning road detection based on the simple and fast fully convolutional neural networks (FCNs) using only lidar data, where a top-view representation of point cloud data is considered, thus reducing road detection to a single-scale problem | High accuracy of road segmentation in all lighting conditions accompanied by fast inference suitable for real-time applications |

| [31] | Automatic traffic lane detection method based on the roadside lidar data of the vehicle trajectories, where the proposed method consists of background filtering and road boundary identification | Two case studies confirm the method’s ability to detect the boundaries of lanes for curvy roads while not being affected by pedestrians’ presence | |

| [32] | Deep learning road detection based on the FCNs using camera and lidar data fusion | High system accuracy is achieved by the multimodal approach, in contrast to the poor detection results obtained by using only a camera | |

| [33] | Road detection based on the lidar data as input to the system integrating the building information modeling (BIM) and geographic information system (GIS) | Accurate road detection is achieved by lidar data classification, but additional manual adjustments are still required | |

| [34] | Lidar-histogram method for detecting roads and obstacles based on the linear classification of the obstacle projections with respect to the line representing the road | Promising results in urban and off-road environments, with the proposed method being suitable for real-time applications | |

| [35] | Road-segmentation-based pavement edge detection for autonomous vehicles using 3D lidar sensors | The accuracy, robustness, and fast processing time of the proposed method are demonstrated on the experimental data acquired by a self-driving car | |

| [36] | An automated algorithm based on the parametric active contour model for detecting road edges from terrestrial mobile lidar data | Tests on various road types show satisfactory results, with dependence on the algorithm parameter settings | |

| Object recognition on and along the road | [37] | Visual localization of an autonomous vehicle in the urban environment based on a 3D lidar map and a monocular camera | The possibility of using a single monocular camera for the needs of visual localization on a 3D lidar map is confirmed, achieving performance close to the state-of-the-art lidar-only vehicle localization while using a much cheaper sensor |

| [38] | Probabilistic localization of an autonomous vehicle combining lidar data with Kalman-filtered Global Navigation Satellite System (GNSS) data | Improved localization with smooth transitions between using GNSS data to using lidar and map data | |

| [39] | Generating high-definition 3D maps based on the autonomous vehicle sensor data integration, including GNSS, inertial measurement unit (IMU), and lidar | Existing autonomous vehicle sensor systems can be successfully utilized to generate high-resolution maps with a centimeter-level accuracy | |

| [40] | Vehicle localization consisting of curb detection based on ring compression analysis and least trimmed squares, road marking detection based on road segmentation, and Monte Carlo localization | Experimental tests in urban environments show high detection accuracy with lateral and longitudinal errors of less than 0.3 m | |

| [41] | Vehicle localization based on the free-resolution probability distributions map (FRPDM) using lidar data | Efficient object representation with reduced map size and good position accuracy in urban areas are achieved | |

| [42] | Optimal vehicle pose estimation based on the ensemble learning network utilizing spatial tightness and time series obtained from the lidar data | Improved pose estimation accuracy is obtained, even on curved roads | |

| [43] | Autonomous vehicle localization based on the IMU, wheel encoder, and lidar odometry | Accurate and high-frequency localization results in a diverse environment | |

| [44] | Automatic recognition of road markings from mobile lidar point clouds | Good performance in recognizing road markings; further research is needed for more complex markings and intersections | |

| [45] | Development and implementation of a strategy for automatic extraction of road markings from the mobile lidar data based on the two-dimensional (2D) georeferenced feature images, modified inverse distance weighted (IDW) interpolation, weighted neighboring difference histogram (WNDH)-based dynamic thresholding, and multiscale tensor voting (MSTV) | Experimental tests in a subtropical urban environment show more accurate and complete recognition of road markings with fewer errors | |

| [46] | Automatic detection of traffic signs, road markings, and pole-shaped objects | The experimental tests on the two-kilometer long road in an urban area show that the proposed method is suitable for detecting individual signs, while there are difficulties in distinguishing multiple signs on the same construction | |

| [47] | Recognition of traffic signs for lidar-equipped vehicles based on the latent structural support vector machine (SVM)-based weakly supervised metric learning (WSMLR) method | Experiments indicate the effectiveness and efficiency of the proposed method, both for the single-view and multi-view sign recognition | |

| [48] | Automatic highway sign extraction based on multiple filtering and clustering of mobile lidar point cloud data | Tests on three different highways show that the proposed straightforward method achieves high accuracy and can be used efficiently to create an accurate inventory of traffic signs | |

| [49] | Pedestrian and vehicle detection and tracking at intersections using roadside lidar data, density-based spatial clustering of applications with noise (DBSCAN), a backpropagation artificial neural network (BP-ANN), and a Kalman filter | Experimental tests with a 16-laser lidar show an accuracy above 95% and a detection range of about 30 m | |

| [50] | Vehicle tracking using roadside lidar data and a method consisting of background filtering, lane identification, and vehicle position and speed tracking | Satisfactory vehicle detection and speed tracking in experimental case studies, with a detection range of about 30 m, although identifying the vehicle type remains difficult | |

| [51] | Vehicle detection from Velodyne 64E 3D lidar data using a 2D FCN, where the data are transformed into 2D point maps | An end-to-end (E2E) detection method with excellent performance and potential for further improvement by adding more training data and designing deeper networks | |

| [52] | Convolutional neural network (CNN)-based multimodal vehicle detection using three data modalities from the color camera and 3D lidar (dense-depth map, reflectance map, and red-green-blue (RGB) image) | The proposed data fusion approach provides higher accuracy than the individual modalities for the Karlsruhe Institute of Technology and Toyota Technological Institute (KITTI) dataset | |

| [53] | Camera and lidar data fusion for pedestrian detection using CNNs, where lidar data features (horizontal disparity, height above ground, and angle) are fused with RGB images | The tests on the KITTI pedestrian detection dataset show that the proposed approach outperforms the one using only camera imagery | |

| [54] | CNN-based classification of objects using camera and lidar data from autonomous vehicles, where lidar point cloud data are upsampled and converted into a pixel-level depth feature map, which is then fused with the RGB images and fed to a deep CNN | Results on a public dataset support the effectiveness and efficiency of the data fusion and object classification strategies, with the proposed approach outperforming approaches that use only RGB or only depth data | |

| [55] | Real-time detection of non-stationary (moving) objects based on a CNN using intensity data in automotive lidar SLAM | Non-stationary objects can be detected using CNNs trained on 2D grayscale intensity images in a supervised or unsupervised manner, improving map consistency and localization results | |

| [56] | Target detection for autonomous vehicles in complex environments based on the dual-modal instance segmentation deep neural network (DM-ISDNN) using camera and lidar data fusion | The experimental results show the robustness and effectiveness of the proposed approach, which outperforms the competitive methods | |

| [57] | Road segmentation, obstacle detection, and vehicle tracking for autonomous vehicles based on an encoder-decoder FCN, an extended Kalman filter, and camera, lidar, and radar sensor fusion | Experimental results indicate that the proposed affordable, compact, and robust fusion system outperforms benchmark models and can be used efficiently in real time for perceiving the vehicle’s environment | |

| [58] | CNN-based real-time semantic segmentation of 3D lidar data for autonomous vehicle perception based on the projection method and the adaptive break point detector method | The proposed method is practical to implement and achieves satisfactory speed and accuracy | |

| [59] | E2E self-driving algorithm using a CNN that predicts the vehicle’s longitudinal and lateral control values from input camera images and 2D lidar point cloud data | Experimental tests in real-world complex urban environments show promising results | |

| [60] | Pedestrian recognition and tracking for autonomous vehicles using an SVM classifier and Velodyne 64 lidar data, generating alarms when pedestrians are detected on the road or close to curbs | The validity of the method was confirmed on the autonomous vehicle platform in two scenarios: with the vehicle stationary and while driving | |
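
Several roadside studies in the table above follow detected vehicles from frame to frame: [49] combines DBSCAN clustering with a Kalman filter, and [50] tracks vehicle position and speed. As a minimal sketch, assuming a constant-velocity motion model along a single lane axis, one position measurement (for example, a cluster centroid) per 10 Hz lidar frame, and noise values chosen purely for illustration rather than taken from the cited works, such a filter could look like this:

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ConstantVelocityKF:
    """1D constant-velocity Kalman filter; state = [position (m), speed (m/s)].

    All default values are illustrative assumptions, not values from [49] or [50].
    """
    dt: float = 0.1          # assumed lidar frame period (10 Hz scan rate), s
    q: float = 1.0           # assumed white-noise acceleration intensity
    r: float = 0.05 ** 2     # assumed position measurement variance (5 cm std), m^2
    x: float = 0.0           # position estimate along the lane, m
    v: float = 0.0           # speed estimate, m/s
    p00: float = 0.05 ** 2   # covariance P = [[p00, p01], [p01, p11]]
    p01: float = 0.0
    p11: float = 100.0       # speed is initially very uncertain

    def predict(self) -> None:
        """Propagate state and covariance with F = [[1, dt], [0, 1]]."""
        dt, q = self.dt, self.q
        self.x += dt * self.v
        # P <- F P F^T + Q, with Q = q * [[dt^3/3, dt^2/2], [dt^2/2, dt]]
        p00 = self.p00 + 2 * dt * self.p01 + dt * dt * self.p11 + q * dt ** 3 / 3
        p01 = self.p01 + dt * self.p11 + q * dt ** 2 / 2
        p11 = self.p11 + q * dt
        self.p00, self.p01, self.p11 = p00, p01, p11

    def update(self, z: float) -> None:
        """Correct the state with a measured position z (H = [1, 0])."""
        s = self.p00 + self.r                 # innovation variance
        k0, k1 = self.p00 / s, self.p01 / s   # Kalman gain K = P H^T / s
        innovation = z - self.x
        self.x += k0 * innovation
        self.v += k1 * innovation
        # P <- (I - K H) P, written out for the symmetric 2x2 case
        self.p11 -= k1 * self.p01             # uses the old p01, so update it first
        self.p01 *= 1 - k0
        self.p00 *= 1 - k0


def track(positions: Sequence[float], dt: float = 0.1) -> ConstantVelocityKF:
    """Run the filter over one measured position per lidar frame."""
    kf = ConstantVelocityKF(dt=dt, x=positions[0])
    for z in positions[1:]:
        kf.predict()
        kf.update(z)
    return kf


# Hypothetical vehicle moving at 15 m/s, sampled every 0.1 s without noise
kf = track([1.5 * k for k in range(50)])
print(f"estimated speed: {kf.v:.2f} m/s")
```

Writing the 2x2 covariance algebra out by hand keeps the sketch dependency-free. With real roadside data, `q` and `r` would need tuning to the sensor and traffic conditions, and a complete tracker would also have to associate clusters with existing tracks from frame to frame.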

| Application | Reference | Description of Application | Conclusion |
|---|---|---|---|
| Autonomous navigation and object detection | [76] | Lidar as part of a sensor system (absolute positioning, visual, audio, and remote sensing sensors) combined with artificial intelligence (AI) techniques for situational awareness in autonomous vessels | Several drawbacks of current lidar technology for autonomous vessels are identified, including limited laser power due to eye-safety constraints, shorter operational ranges, expensive optics, and unsuitability for harsh working environments |
| | [77] | Ship berthing information extraction from 3D lidar data using principal component analysis | Experimental validation during ro-ro ship berthing confirms the effectiveness of the proposed method for dynamic target recognition and safe ship berthing |
| | [78] | Berthing perception framework for maritime autonomous surface ships based on estimating the vessel’s berthing speed, angle, distance, and other parameters from 3D shipborne lidar data | Experiments confirm that the proposed method enables accurate berthing in real time |
| | [79] | Low-cost lidar-based ship berthing and docking system with a novel method for fusing lidar and GNSS positioning data | The usefulness of the proposed system for safe ship berthing is demonstrated experimentally during several berthing maneuvers and in comparison with a GNSS-based navigational aid system |
| | [80] | Computer-aided method for bollard segmentation and position estimation from 3D lidar point cloud data for autonomous mooring, based on 3D feature matching and mixed feature-correspondence matching algorithms | The proposed approach is validated on experimental mooring scenes with a robotic arm equipped with lidar |
| | [81] | Use of a dual-channel lidar for rotorcraft searching, positioning, tracking, and landing on a ship at sea, based on estimating the azimuth angle, the distance of the ship relative to the rotorcraft, and the ship’s course | Simulations and experimental tests confirm the effectiveness of the developed method and associated models |
| | [82] | Algorithm for detecting objects on seas and oceans using lidar data, intended for maritime vessels operating in different environmental conditions | The data points are clustered with DBSCAN, a well-established density-based clustering method, to detect objects accurately |
| | [83] | Detection, monitoring, and classification of objects on seas and oceans based on an SVM classifier and the fusion of lidar and camera data | The proposed method is highly effective, achieving an overall accuracy of 98.7% for six classes |
| | [84] | Detection, classification, and mapping of objects on seas and oceans using an unmanned surface vehicle with four multi-beam lidar sensors and polygon representation methods | A map of the environment containing the detected stationary objects can be created, with polygons accurate to 20 cm using a 10 cm occupancy grid |
| Monitoring ocean ecosystems | [85] | A review of the development of profiling oceanographic lidars | Lidar enables sea and ocean analysis and the monitoring of animal species; profiling lidars can provide quantitative profiles of the optical properties of the water column to depths of 20–30 m in coastal waters and 100 m for a blue lidar in the open ocean |
| | [86] | Application of lidar for monitoring and mapping marine coral reef ecosystems | Fish, plankton, and coral reef distribution are successfully monitored using 3D lidar data |
| | [87] | Spaceborne lidar for ocean observations | Satellite lidar is useful for observing ocean ecosystems, particularly in combination with ocean color observations |
| Mapping coastal areas | [88] | A review of lidar applications in creating shoreline and bathymetric maps | Lidar combined with the Global Positioning System (GPS) provides accurate topographic and bathymetric coastal maps with 10–15 cm vertical accuracy; the best water penetration is achieved with a blue-green laser at a wavelength of 530 nm |
| | [89] | Classification of large bodies of water using airborne laser scanning (ALS) | Automatic and efficient classification of water surfaces with an SVM classifier, with an accuracy of over 95% for most coastal areas |
| | [90] | Mapping coastal terrain using unmanned aerial vehicle (UAV) lidar | UAV lidar provides high-resolution, high-quality topographic data (5–10 cm accuracy) and outperforms UAV imagery in ground coverage, point density, and the ability to penetrate vegetation |
| | [91] | Semi-automatic coastal waste detection and recognition using 3D lidar data | Waste can be classified into plastic, paper, fabric, and metal |
| Other applications | [92] | Monitoring the dynamics of the upper ocean with a ship-based lidar, including analysis of the impact of ship motion on lidar measurements | Measurement of waves, turbulence, and the impact of wind farms on the sea |
| | [93] | Doppler lidar-based data collection for offshore wind farms | High-resolution measurement of wind speed and direction at various altitudes to support the development of offshore wind farms |
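
Reference [82] in the table above (and [49] on the road side) groups raw lidar returns into objects with DBSCAN. The following is a plain-Python sketch of the standard DBSCAN procedure applied to 3D points; the sample coordinates and the `eps` and `min_pts` values are hypothetical and are not taken from the cited studies. The neighbor search is brute force, O(n²), whereas a real-time system would normally use a spatial index such as a k-d tree.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, sensor frame

UNVISITED, NOISE = -2, -1


def region_query(points: Sequence[Point], i: int, eps: float) -> List[int]:
    """Indices of all points within distance eps of points[i], including i itself."""
    return [j for j, p in enumerate(points) if math.dist(points[i], p) <= eps]


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """Return a cluster id (0, 1, ...) per point, or NOISE (-1) for outliers."""
    labels = [UNVISITED] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] != UNVISITED:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later become a border point of some cluster
            continue
        # i is a core point: start a new cluster and expand it breadth-first
        labels[i] = cluster
        seeds = list(neighbors)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable, but not a core point
            if labels[j] != UNVISITED:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                seeds.extend(j_neighbors)  # j is also a core point: keep expanding
        cluster += 1
    return labels


# Hypothetical sea-surface returns: two floating objects and one isolated echo
returns = [
    (10.0, 0.0, 0.5), (10.2, 0.1, 0.6), (10.1, -0.1, 0.4), (10.3, 0.0, 0.5),
    (25.0, 5.0, 1.0), (25.2, 5.1, 1.2), (24.9, 4.9, 0.9), (25.1, 5.2, 1.1),
    (40.0, -8.0, 0.1),
]
print(dbscan(returns, eps=0.5, min_pts=3))  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

DBSCAN suits this task because it does not require the number of objects to be known in advance, and sparse returns such as isolated wave or spray echoes are simply labeled as noise.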

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lopac, N.; Jurdana, I.; Brnelić, A.; Krljan, T. Application of Laser Systems for Detection and Ranging in the Modern Road Transportation and Maritime Sector. Sensors 2022, 22, 5946. https://doi.org/10.3390/s22165946
