Automatic Measurement of Seed Geometric Parameters Using a Handheld Scanner

Abstract

1. Introduction

2. Materials and Methods

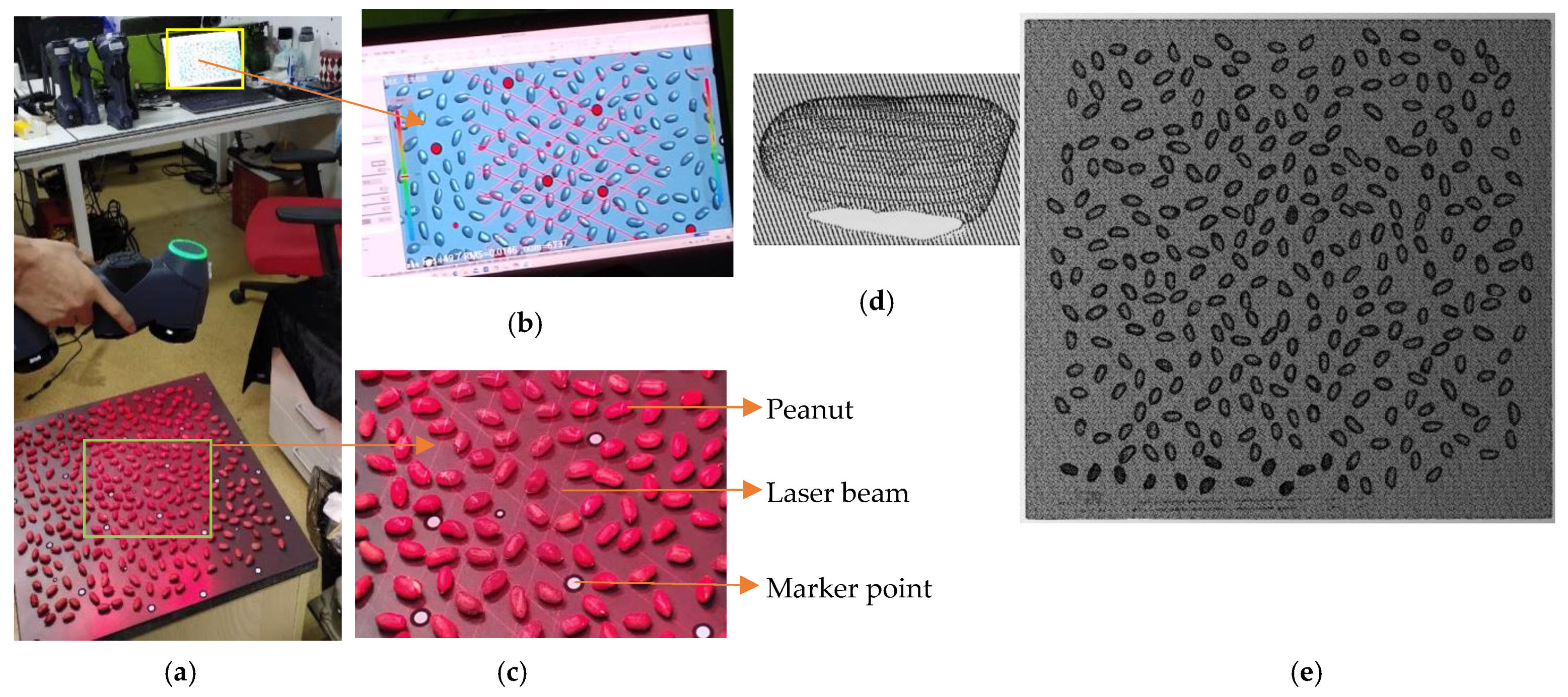

2.1. Data Acquisition

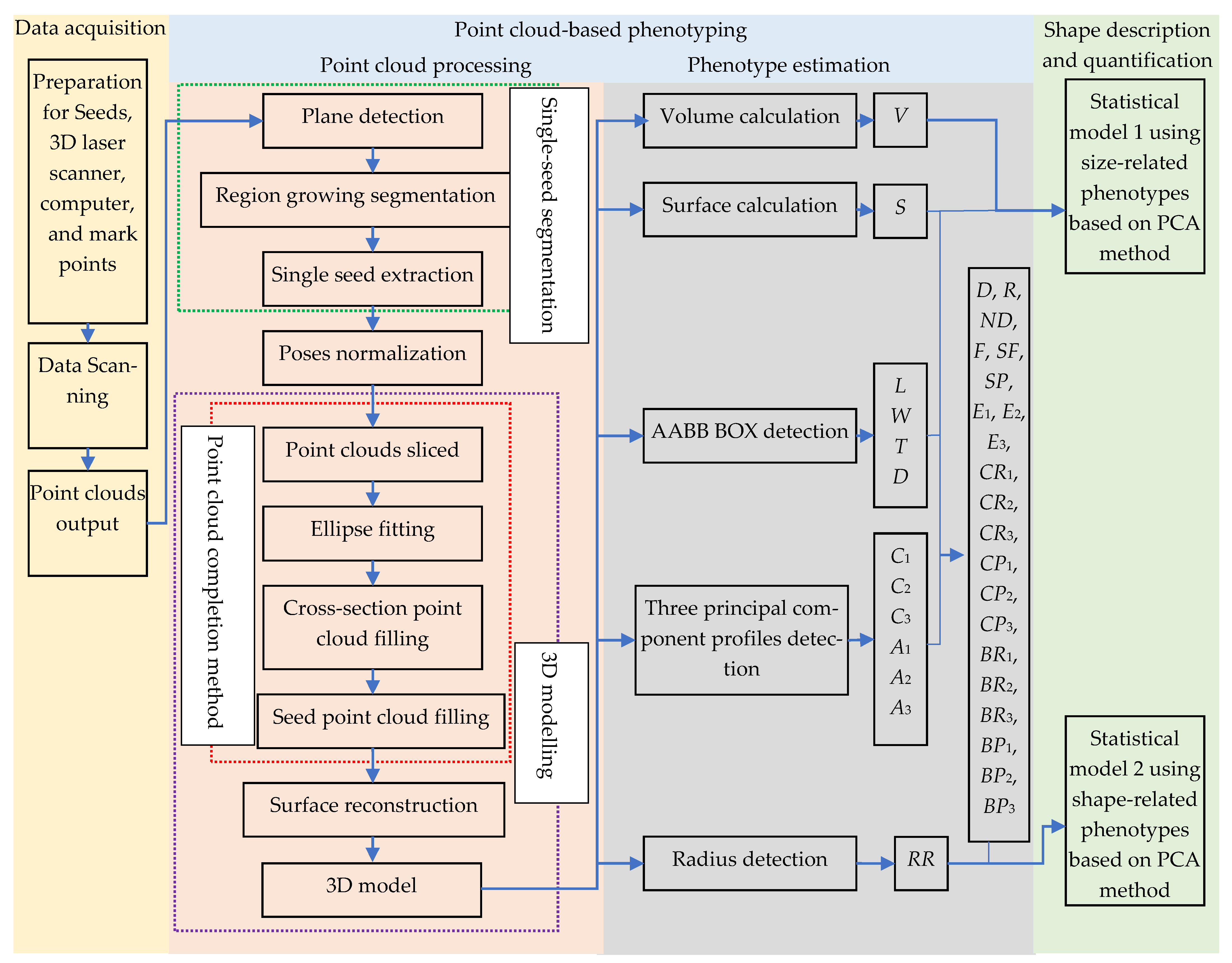

2.2. Point Cloud-Based Phenotyping

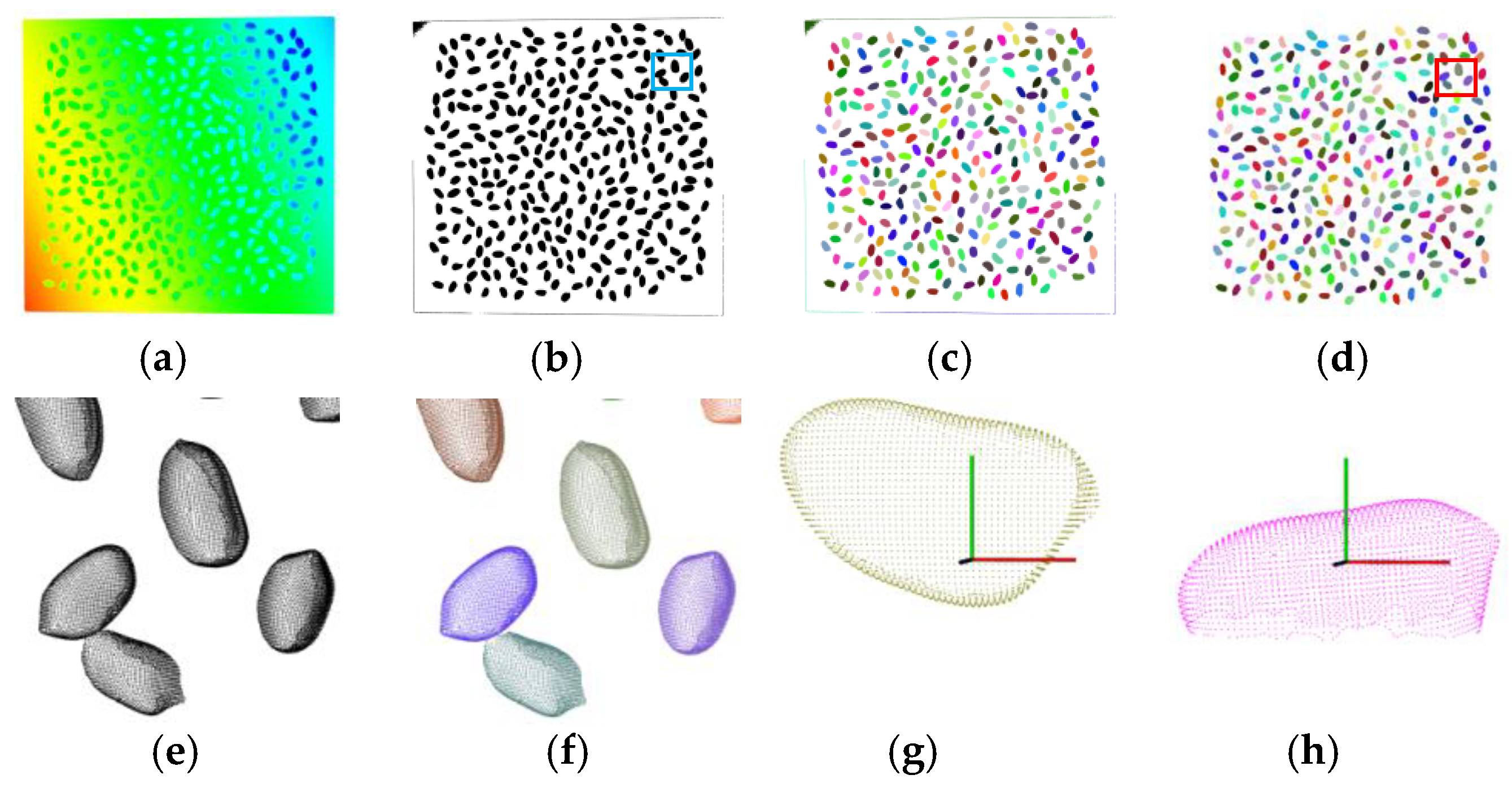

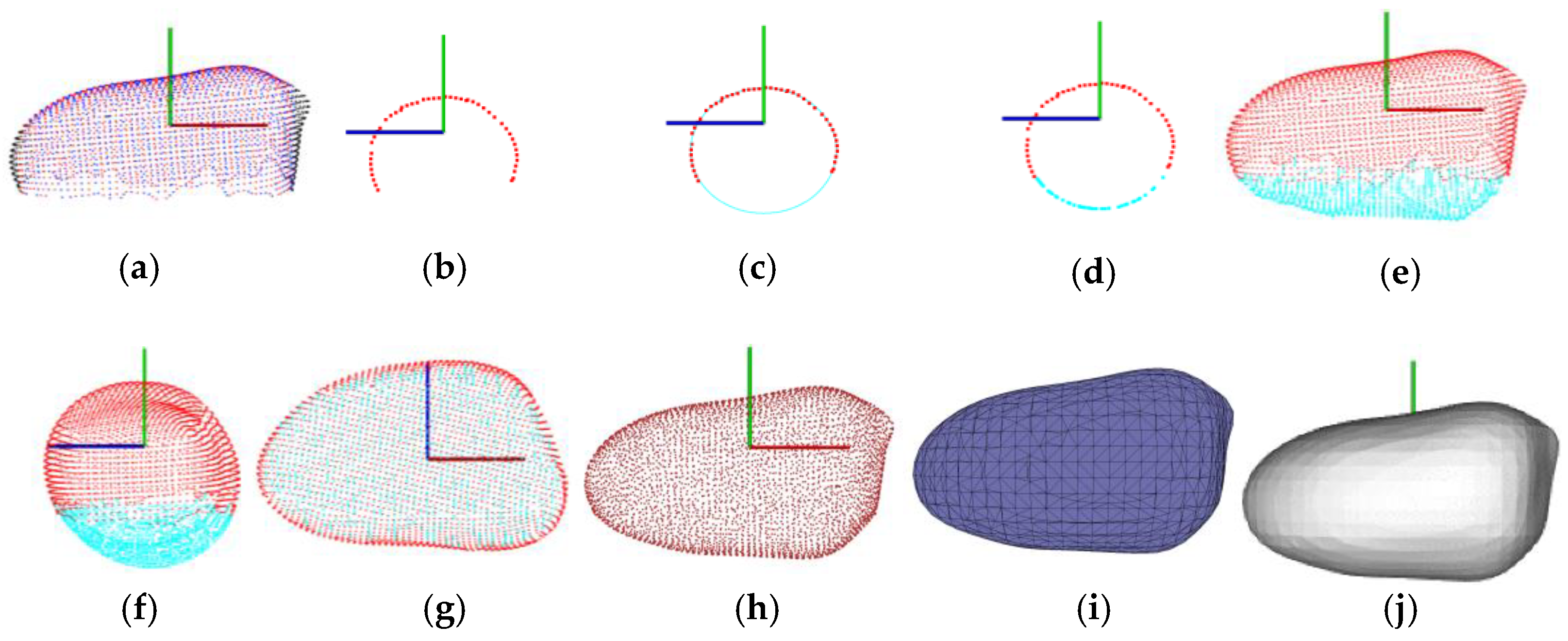

2.2.1. Point Cloud Processing

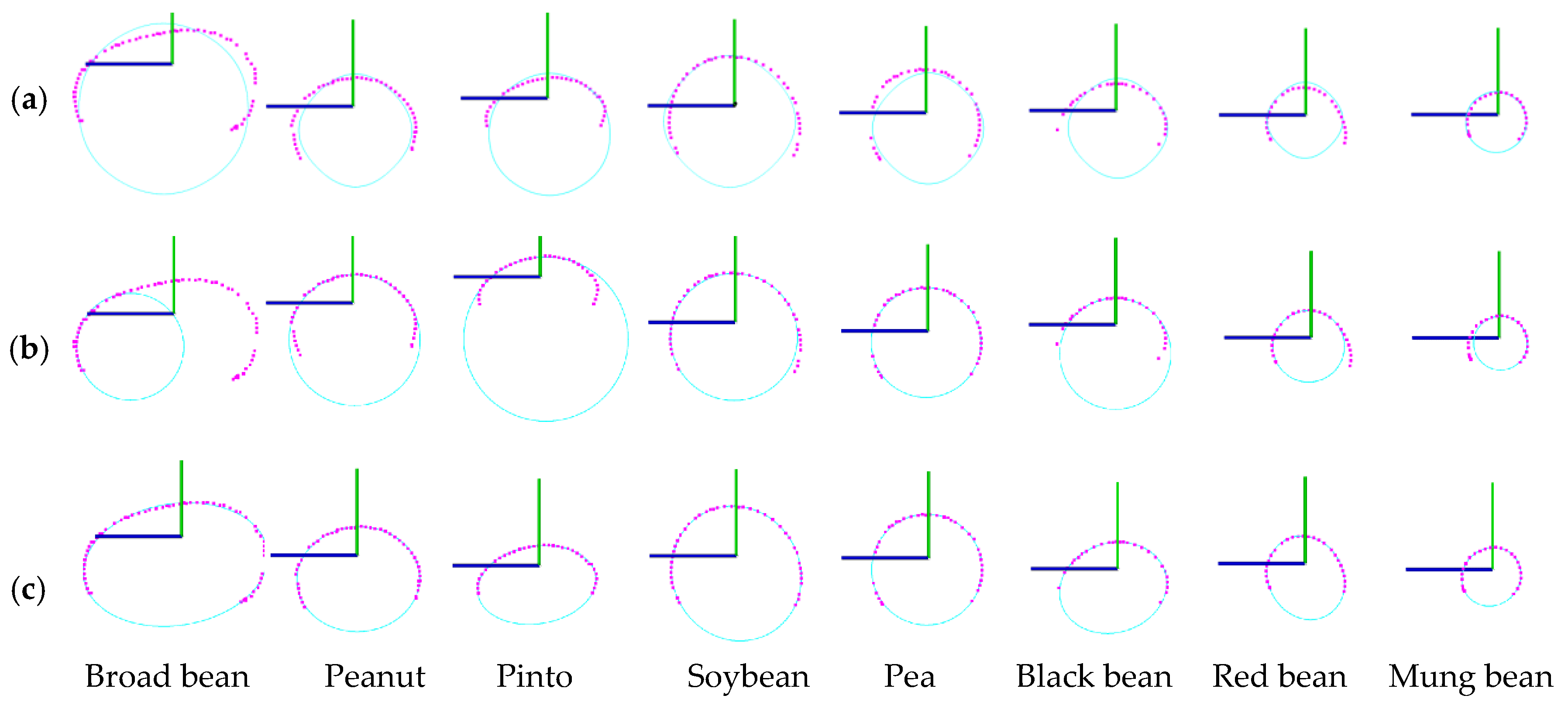

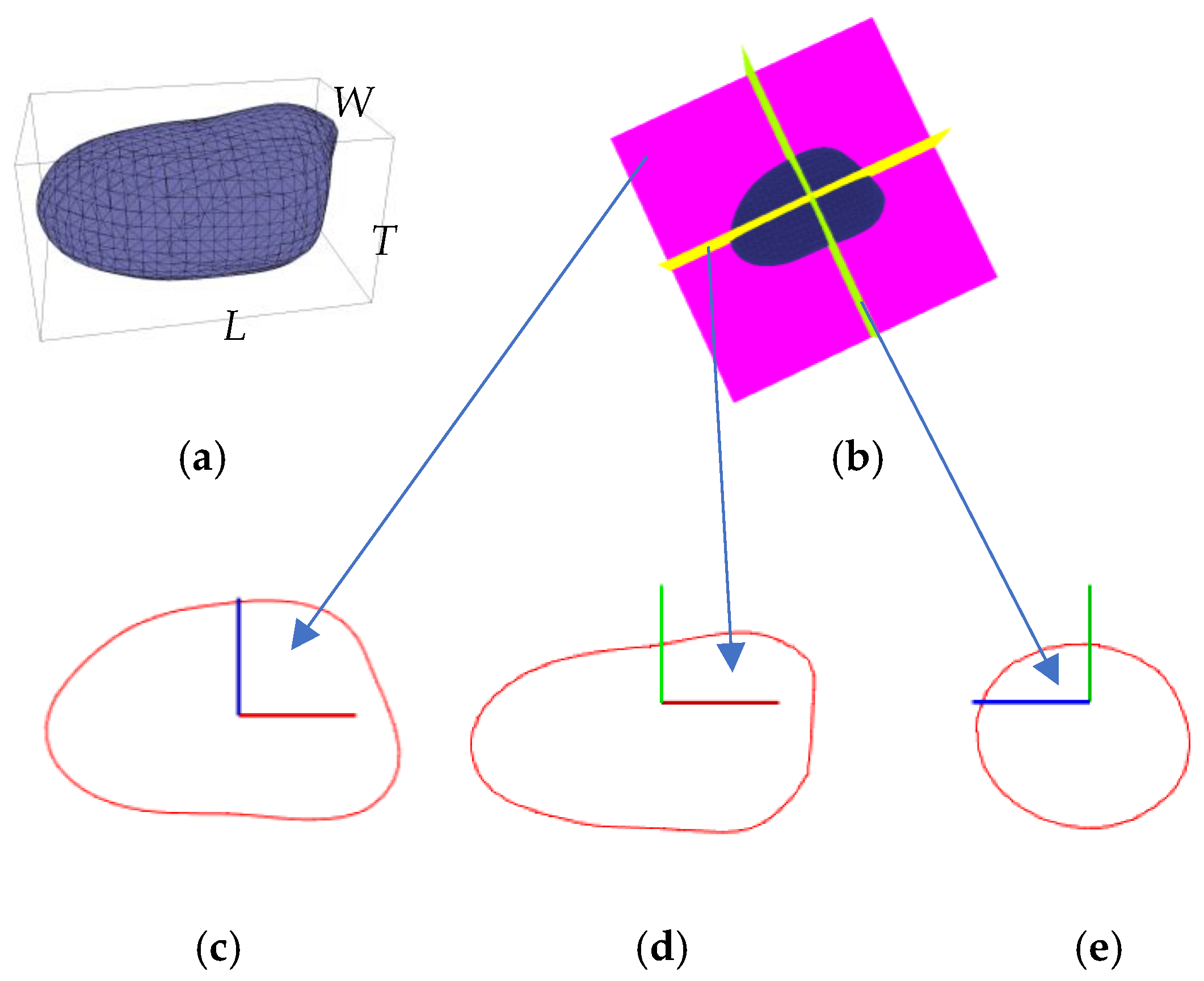

2.2.2. Phenotype Estimation

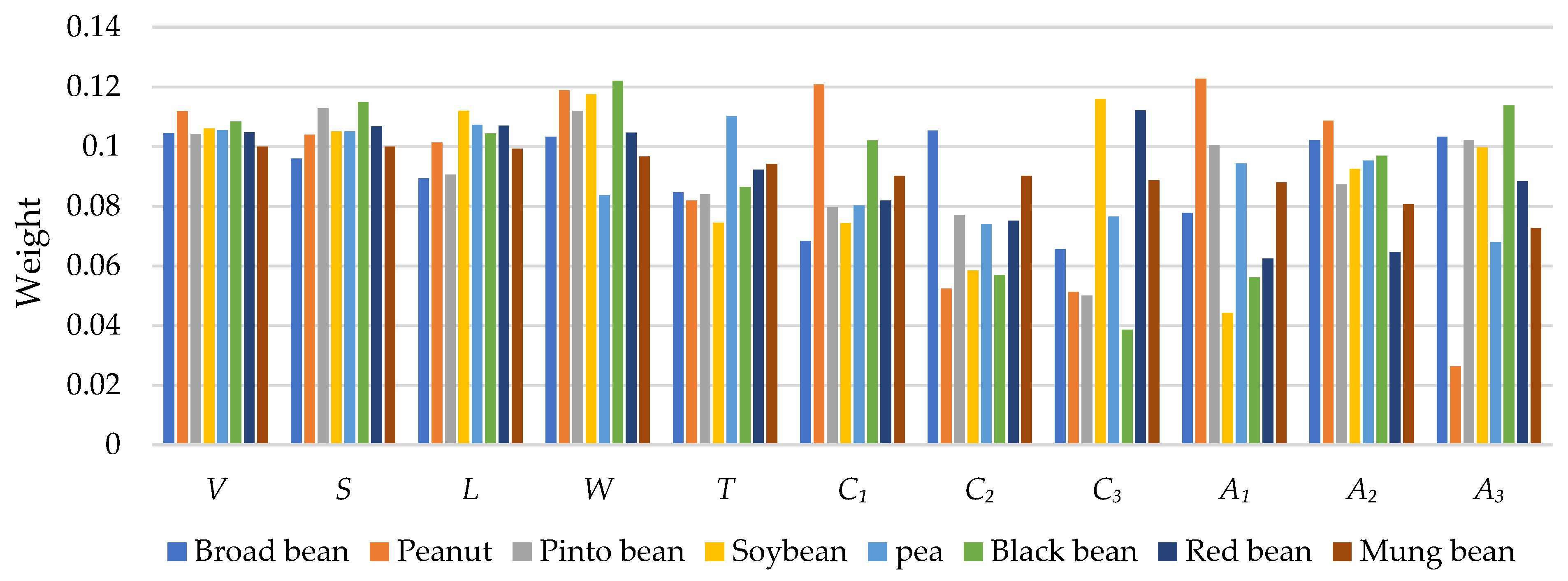

2.3. Shape Description and Quantification Based on Statistical Models

2.4. Accuracy Analysis

3. Results

3.1. Data Scanning and Segmentation Results

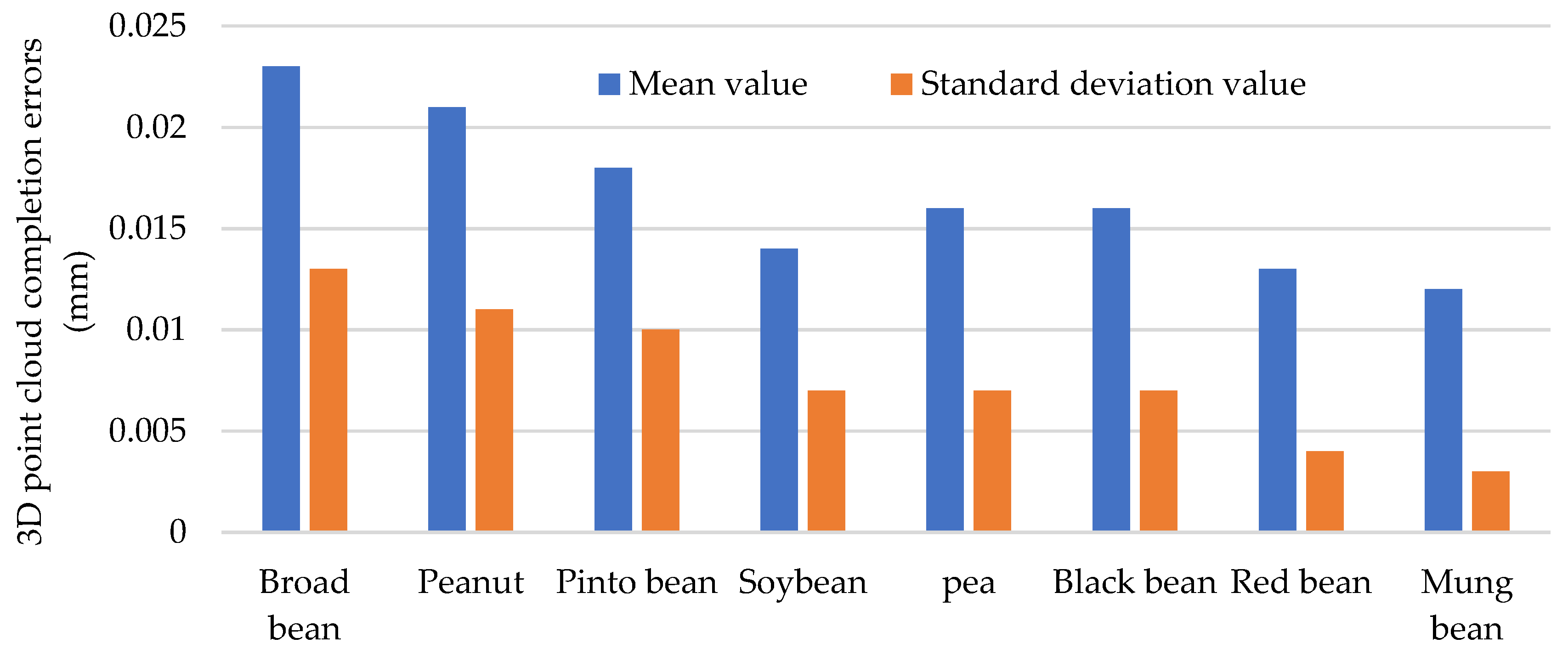

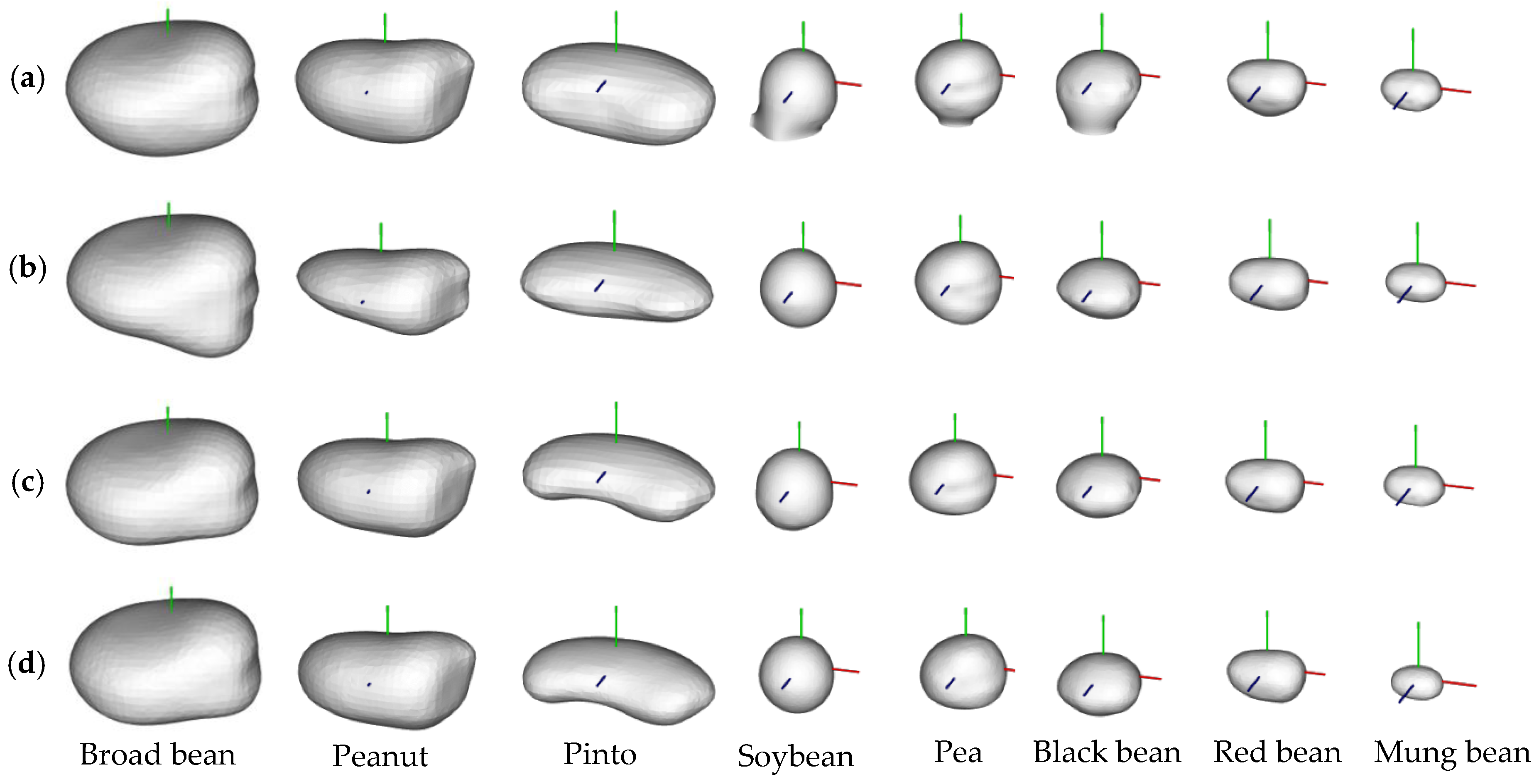

3.2. Point Cloud Completion Results

3.3. Phenotype Estimation Results

3.4. Shape Description and Quantification Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Scanning and Segmentation Results


| Category | No. | Trait | Symbol | Variable |
|---|---|---|---|---|
| Size-related phenotypes | 1 | Volume | $V$ | X01 |
| | 2 | Surface area | $S$ | X02 |
| | 3 | Length | $L$ | X03 |
| | 4 | Width | $W$ | X04 |
| | 5 | Thickness | $T$ | X05 |
| | 6 | Horizontal cross-section perimeter | $C_1$ | X06 |
| | 7 | Transverse cross-section perimeter | $C_2$ | X07 |
| | 8 | Longitudinal cross-section perimeter | $C_3$ | X08 |
| | 9 | Horizontal cross-section area | $A_1$ | X09 |
| | 10 | Transverse cross-section area | $A_2$ | X010 |
| | 11 | Longitudinal cross-section area | $A_3$ | X011 |
| Shape-related phenotypes | 12 | Radius ratio | $RR$ | X1 |
| | 13 | Geometric mean | $D = (LWT)^{1/3}$ | X2 |
| | 14 | Roundness | $R = L/(WT)^{1/2}$ | X3 |
| | 15 | Needle degree | $ND = L/W$ | X4 |
| | 16 | Flatness | $F = T/W$ | X5 |
| | 17 | Shape factor | $SF = TL/W^2$ | X6 |
| | 18 | Sphericity | $SP = (WT/L^2)^{1/3}$ | X7 |
| | 19 | Elongation 1 | $E_1 = \lvert (L - W)/L \rvert$ | X8 |
| | 20 | Elongation 2 | $E_2 = \lvert (L - T)/L \rvert$ | X9 |
| | 21 | Elongation 3 | $E_3 = \lvert (T - W)/W \rvert$ | X10 |
| | 22 | Circularity 1 | $CR_1 = C_1^2/(4\pi A_1)$ | X11 |
| | 23 | Circularity 2 | $CR_2 = C_2^2/(4\pi A_2)$ | X12 |
| | 24 | Circularity 3 | $CR_3 = C_3^2/(4\pi A_3)$ | X13 |
| | 25 | Compactness 1 | $CP_1 = 16A_1/C_1^2$ | X14 |
| | 26 | Compactness 2 | $CP_2 = 16A_2/C_2^2$ | X15 |
| | 27 | Compactness 3 | $CP_3 = 16A_3/C_3^2$ | X16 |
| | 28 | Bounding rectangle 1 | $BR_1 = A_1/(LW)$ | X17 |
| | 29 | Bounding rectangle 2 | $BR_2 = A_2/(LT)$ | X18 |
| | 30 | Bounding rectangle 3 | $BR_3 = A_3/(WT)$ | X19 |
| | 31 | Bounding rectangle to perimeter 1 | $BP_1 = C_1/(2(L + W))$ | X20 |
| | 32 | Bounding rectangle to perimeter 2 | $BP_2 = C_2/(2(L + T))$ | X21 |
| | 33 | Bounding rectangle to perimeter 3 | $BP_3 = C_3/(2(T + W))$ | X22 |
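Because every shape-related trait in the table above is a closed-form function of the size-related ones, the definitions can be checked with a short script. This is a minimal sketch, not the authors' pipeline: it assumes the size traits (L, W, T, C1 to C3, A1 to A3) have already been measured from the seed point cloud, and the names `SizeTraits` and `shape_traits` are hypothetical. Radius ratio (RR) is left out because the table gives no formula for it.

```python
import math
from dataclasses import dataclass


@dataclass
class SizeTraits:
    """Hypothetical container for the size-related phenotypes of one seed.

    All lengths must share one unit; areas use that unit squared.
    """
    L: float   # length
    W: float   # width
    T: float   # thickness
    C1: float  # horizontal cross-section perimeter
    C2: float  # transverse cross-section perimeter
    C3: float  # longitudinal cross-section perimeter
    A1: float  # horizontal cross-section area
    A2: float  # transverse cross-section area
    A3: float  # longitudinal cross-section area


def shape_traits(s: SizeTraits) -> dict[str, float]:
    """Evaluate traits 13-33 exactly as written in the trait table.

    RR (trait 12) is omitted because no formula is given for it.
    """
    L, W, T = s.L, s.W, s.T
    return {
        "D": (L * W * T) ** (1 / 3),              # geometric mean
        "R": L / math.sqrt(W * T),                # roundness
        "ND": L / W,                              # needle degree
        "F": T / W,                               # flatness
        "SF": T * L / W**2,                       # shape factor
        "SP": (W * T / L**2) ** (1 / 3),          # sphericity
        "E1": abs((L - W) / L),                   # elongation 1
        "E2": abs((L - T) / L),                   # elongation 2
        "E3": abs((T - W) / W),                   # elongation 3
        "CR1": s.C1**2 / (4 * math.pi * s.A1),    # circularity, per section
        "CR2": s.C2**2 / (4 * math.pi * s.A2),
        "CR3": s.C3**2 / (4 * math.pi * s.A3),
        "CP1": 16 * s.A1 / s.C1**2,               # compactness, per section
        "CP2": 16 * s.A2 / s.C2**2,
        "CP3": 16 * s.A3 / s.C3**2,
        "BR1": s.A1 / (L * W),                    # bounding rectangle
        "BR2": s.A2 / (L * T),
        "BR3": s.A3 / (W * T),
        "BP1": s.C1 / (2 * (L + W)),              # bounding rectangle to perimeter
        "BP2": s.C2 / (2 * (L + T)),
        "BP3": s.C3 / (2 * (T + W)),
    }
```

As a sanity check, a sphere of radius 1 (L = W = T = 2, each cross-section a circle with C = 2π and A = π) gives D = 2, SP = 1 and CR = 1, while CP = 4/π ≈ 1.27 and BR = BP = π/4 ≈ 0.785. With these definitions circularity equals 1 for a circle, whereas compactness 16A/C² equals 1 for a square rather than a circle.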

| Seed type | N1 | N2 | N3 | Scanning Accuracy (R_scan) | Segmentation Accuracy (R_seg1) |
|---|---|---|---|---|---|
| Broad bean | 300 | 300 | 300 | 100.00% | 100.00% |
| Peanut | 300 | 300 | 300 | 100.00% | 100.00% |
| Pinto bean | 300 | 299 | 299 | 99.67% | 100.00% |
| Soybean | 500 | 500 | 500 | 100.00% | 100.00% |
| Pea | 500 | 500 | 500 | 100.00% | 100.00% |
| Black bean | 500 | 500 | 500 | 100.00% | 100.00% |
| Red bean | 500 | 497 | 497 | 99.40% | 100.00% |
| Green bean | 500 | 500 | 500 | 100.00% | 100.00% |
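The percentages in the table above are consistent with R_scan = N2/N1 and R_seg1 = N3/N2; for example, pinto bean gives 299/300 ≈ 99.67% and red bean gives 497/500 = 99.40%. The sketch below assumes those two definitions, which are inferred from the numbers rather than stated in this section, and recomputes both columns from the transcribed counts. The names `COUNTS` and `accuracy` are hypothetical.

```python
# Counts (N1, N2, N3) transcribed from the scanning/segmentation table.
COUNTS = {
    "Broad bean": (300, 300, 300),
    "Peanut": (300, 300, 300),
    "Pinto bean": (300, 299, 299),
    "Soybean": (500, 500, 500),
    "Pea": (500, 500, 500),
    "Black bean": (500, 500, 500),
    "Red bean": (500, 497, 497),
    "Green bean": (500, 500, 500),
}


def accuracy(n1: int, n2: int, n3: int) -> tuple[float, float]:
    """Return (R_scan, R_seg1) in percent.

    Assumed definitions (inferred from the table values):
    R_scan = N2 / N1 and R_seg1 = N3 / N2.
    """
    return 100 * n2 / n1, 100 * n3 / n2


for seed, (n1, n2, n3) in COUNTS.items():
    r_scan, r_seg = accuracy(n1, n2, n3)
    print(f"{seed:<11} R_scan = {r_scan:6.2f}%  R_seg1 = {r_seg:6.2f}%")
```

Rounded to two decimals, the printed values reproduce every row of the table, which supports (but does not prove) the assumed ratio definitions.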

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, X.; Zhu, F.; Wang, X.; Zhang, B. Automatic Measurement of Seed Geometric Parameters Using a Handheld Scanner. Sensors 2024, 24, 6117. https://doi.org/10.3390/s24186117
