Multiscale Representation of Radar Echo Data Retrieved through Deep Learning from Numerical Model Simulations and Satellite Images

Abstract

1. Introduction

2. Data and Methods

2.1. Radar Echo Data

2.2. Numerical Model Simulations

2.3. Geostationary Satellite Images

2.4. Data Preprocessing

2.5. Deep Network Model

2.6. Training

2.7. Evaluations and Interpretations

3. Results

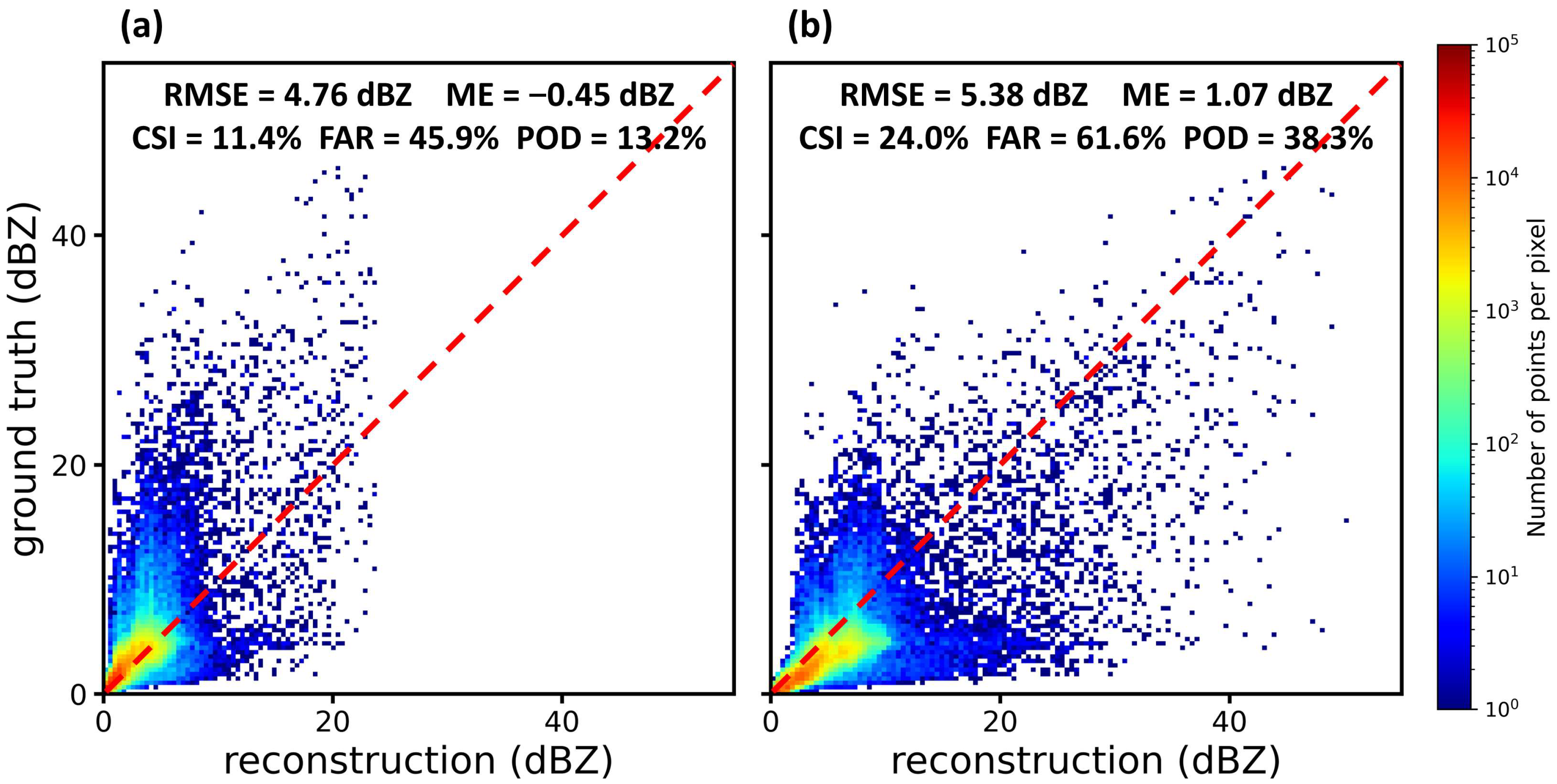

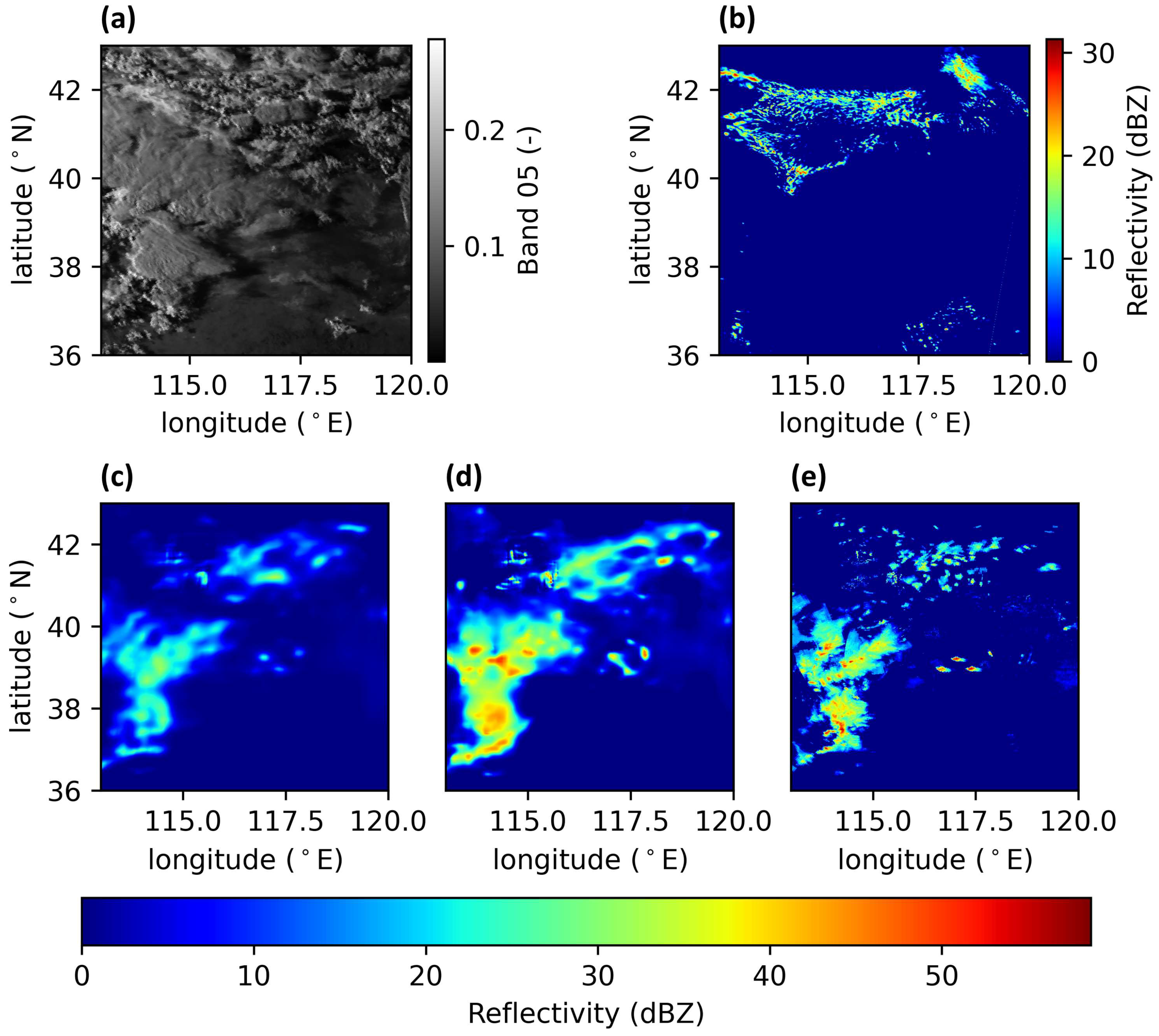

3.1. Echo Reconstructions

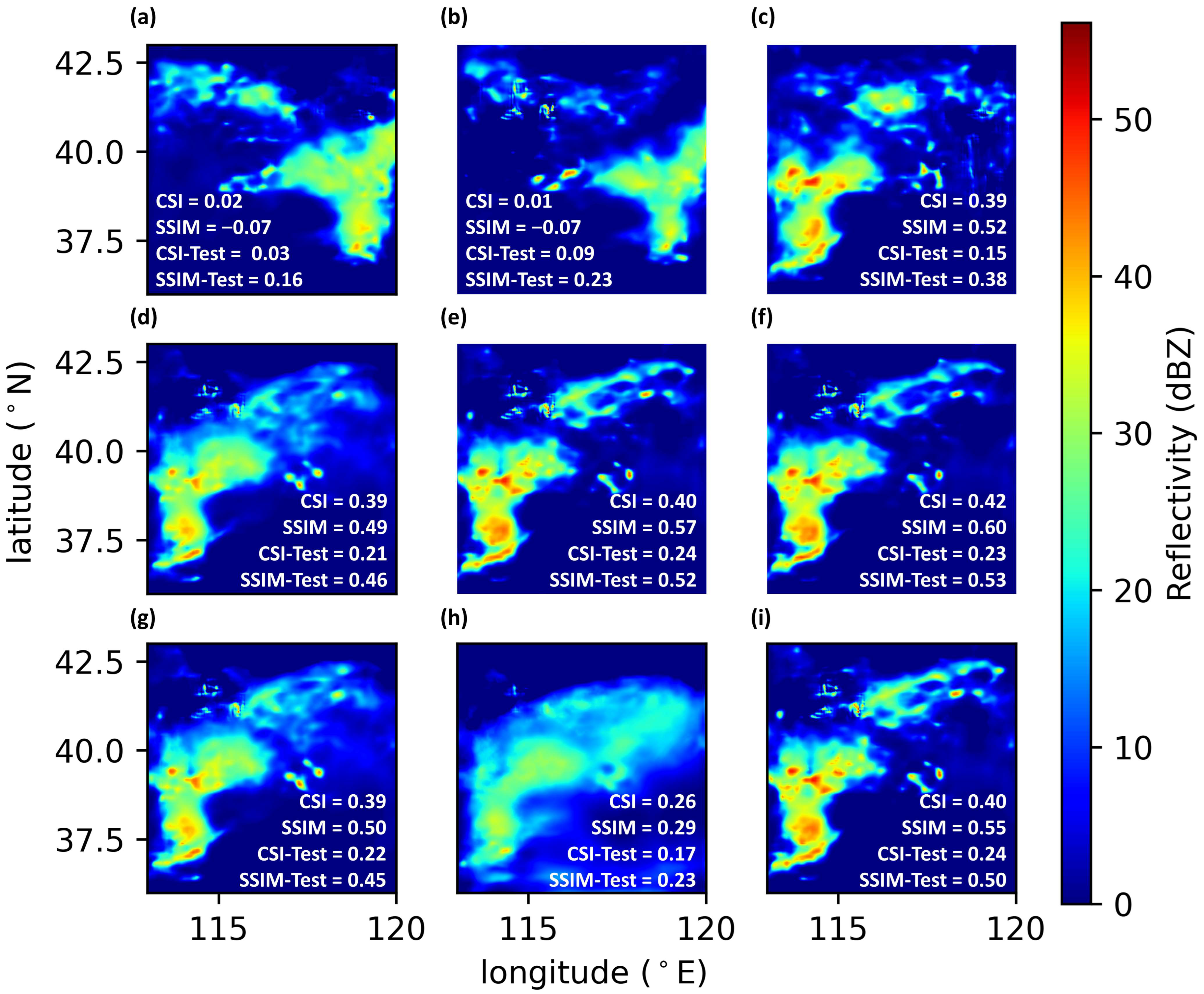

3.2. Multiscale Representation

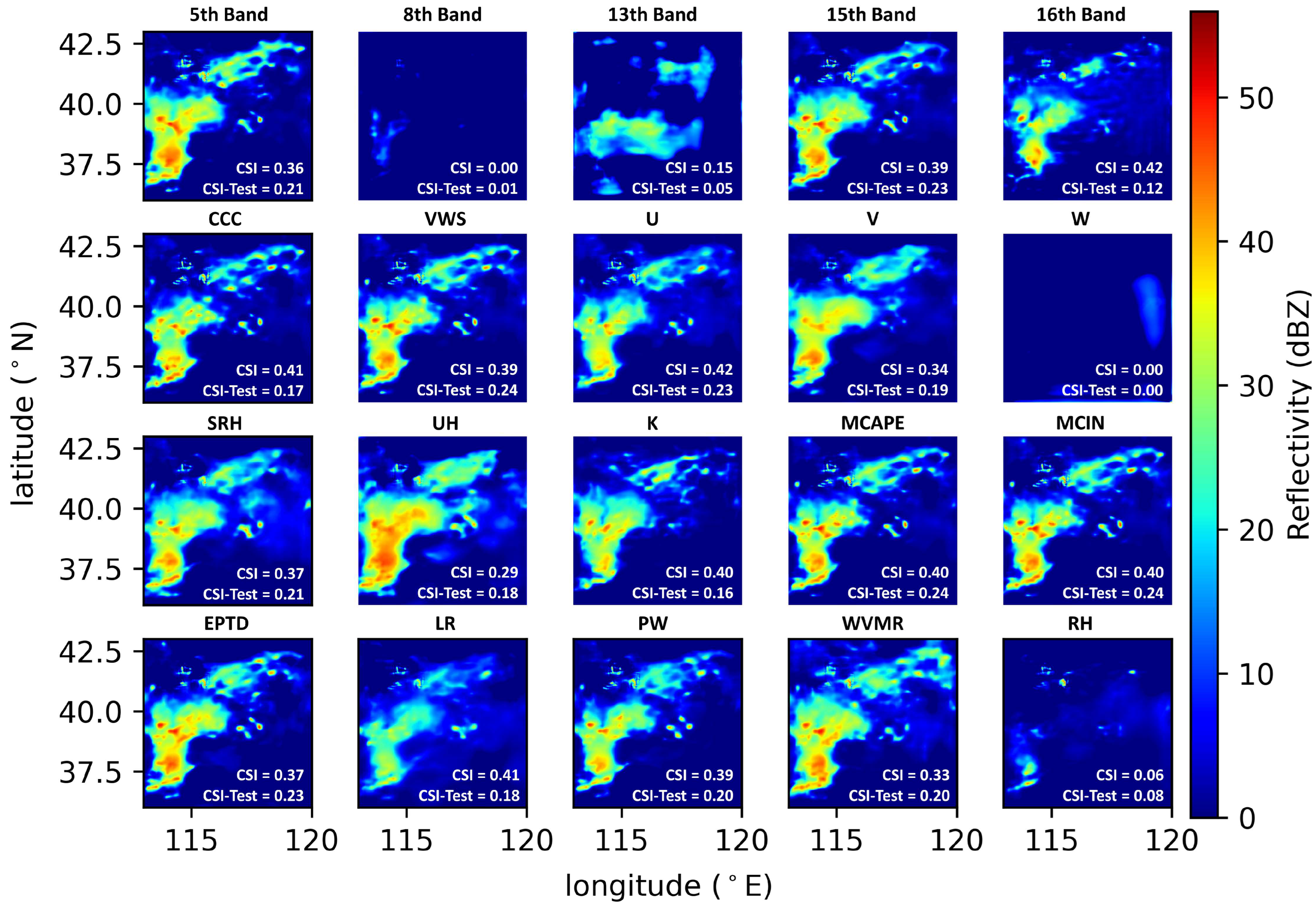

3.3. Physical Interpretations of the MSR

4. Summary and Discussions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Yano, J.-I.; Ziemianski, M.Z.; Cullen, M.; Termonia, P.; Onvlee, J.; Bengtsson, L.; Carrassi, A.; Davy, R.; Deluca, A.; Gray, S.L.; et al. Scientific challenges of convective-scale numerical weather prediction. Bull. Am. Meteorol. Soc. 2018, 99, 699–710.

2. Wyngaard, J.C. Toward numerical modeling in the “terra incognita”. J. Atmos. Sci. 2004, 61, 1816–1826.

3. Dixon, M.; Wiener, G. TITAN: Thunderstorm identification, tracking, analysis, and nowcasting—A radar-based methodology. J. Atmos. Ocean. Technol. 1993, 10, 785–797.

4. Pulkkinen, S.; Nerini, D.; Hortal, A.A.P.; Velasco-Forero, C.; Seed, A.; Germann, U.; Foresti, L. Pysteps: An open-source Python library for probabilistic precipitation nowcasting (v1.0). Geosci. Model Dev. 2019, 12, 4185–4219.

5. James, P.M.; Reichert, B.K.; Heizenreder, D. NowCastMIX: Automatic integrated warnings for severe convection on nowcasting time scales at the German weather service. Weather Forecast. 2018, 33, 1413–1433.

6. Foresti, L.; Sideris, I.V.; Panziera, L.; Nerini, D.; Germann, U. A 10-year radar-based analysis of orographic precipitation growth and decay patterns over the Swiss Alpine region. Q. J. R. Meteorol. Soc. 2018, 144, 2277–2301.

7. Sideris, I.V.; Foresti, L.; Nerini, D.; Germann, U. NowPrecip: Localized precipitation nowcasting in the complex terrain of Switzerland. Q. J. R. Meteorol. Soc. 2020, 146, 1768–1800.

8. Sun, J.; Xue, M.; Wilson, J.W.; Zawadzki, I.; Ballard, S.P.; Onvlee-Hooimeyer, J.; Joe, P.; Barker, D.M.; Li, P.-W.; Golding, B.; et al. Use of NWP for nowcasting convective precipitation. Bull. Am. Meteorol. Soc. 2014, 95, 409–426.

9. Fabry, F.; Meunier, V. Why are radar data so difficult to assimilate skillfully? Mon. Weather Rev. 2020, 148, 2819–2836.

10. Clark, P.; Roberts, N.; Lean, H.; Ballard, S.P.; Charlton-Perez, C. Convection-permitting models: A step-change in rainfall forecasting. Meteorol. Appl. 2016, 23, 165–181.

11. Gustafsson, N.; Janjic, T.; Schraff, C.; Leuenberger, D.; Weissmann, M.; Reich, H.; Brousseau, P.; Montmerle, T.; Wattrelot, E.; Bucanek, A.; et al. Survey of data assimilation methods for convective-scale numerical weather prediction at operational centres. Q. J. R. Meteorol. Soc. 2018, 144, 1218–1256.

12. Bannister, R.N.; Chipilski, H.G.; Martinez-Alvarado, O. Techniques and challenges in the assimilation of atmospheric water observations for numerical weather prediction towards convective scales. Q. J. R. Meteorol. Soc. 2020, 146, 1–48.

13. Bluestein, H.B.; Carr, F.H.; Goodman, S.J. Atmospheric observations of weather and climate. Atmosphere-Ocean 2022, 60, 149–187.

14. Vignon, E.; Besic, N.; Jullien, N.; Gehring, J.; Berne, A. Microphysics of snowfall over coastal east Antarctica simulated by Polar WRF and observed by radar. J. Geophys. Res.-Atmos. 2019, 124, 11452–11476.

15. Troemel, S.; Simmer, C.; Blahak, U.; Blanke, A.; Doktorowski, S.; Ewald, F.; Frech, M.; Gergely, M.; Hagen, M.; Janjic, T.; et al. Overview: Fusion of radar polarimetry and numerical atmospheric modelling towards an improved understanding of cloud and precipitation processes. Atmos. Chem. Phys. 2021, 21, 17291–17314.

16. Chow, F.K.; Schar, C.; Ban, N.; Lundquist, K.A.; Schlemmer, L.; Shi, X. Crossing multiple gray zones in the transition from mesoscale to microscale simulation over complex terrain. Atmosphere 2019, 10, 274.

17. Jeworrek, J.; West, G.; Stull, R. Evaluation of cumulus and microphysics parameterizations in WRF across the convective gray zone. Weather Forecast. 2019, 34, 1097–1115.

18. Kirshbaum, D.J. Numerical simulations of orographic convection across multiple gray zones. J. Atmos. Sci. 2020, 77, 3301–3320.

19. Honnert, R.; Efstathiou, G.A.; Beare, R.J.; Ito, J.; Lock, A.; Neggers, R.; Plant, R.S.; Shin, H.H.; Tomassini, L.; Zhou, B. The atmospheric boundary layer and the “gray zone” of turbulence: A critical review. J. Geophys. Res.-Atmos. 2020, 125, e2019JD030317.

20. Tapiador, F.J.; Roca, R.; Del Genio, A.; Dewitte, B.; Petersen, W.; Zhang, F. Is precipitation a good metric for model performance? Bull. Am. Meteorol. Soc. 2019, 100, 223–234.

21. Koo, C.C. Similarity analysis of some meso- and micro-scale atmospheric motions. Acta Meteorol. Sin. 1964, 4, 519–522.

22. Tao, S.Y.; Ding, Y.H.; Sun, S.Q.; Cai, Z.Y.; Zhang, M.L.; Fang, Z.Y.; Li, M.T.; Zhou, X.P.; Zhao, S.X.; Dian, S.T.; et al. The Heavy Rainfalls in China; Science Press: Beijing, China, 1980; p. 225.

23. Surcel, M.; Zawadzki, I.; Yau, M.K. A study on the scale dependence of the predictability of precipitation patterns. J. Atmos. Sci. 2015, 72, 216–235.

24. Mecikalski, J.R.; Bedka, K.M. Forecasting convective initiation by monitoring the evolution of moving cumulus in daytime GOES imagery. Mon. Weather Rev. 2006, 134, 49–78.

25. Reichstein, M.; Camps-Valls, G.; Stevens, B.; Jung, M.; Denzler, J.; Carvalhais, N.; Prabhat, F. Deep learning and process understanding for data-driven Earth system science. Nature 2019, 566, 195–204.

26. Ravuri, S.; Lenc, K.; Willson, M.; Kangin, D.; Lam, R.; Mirowski, P.; Fitzsimons, M.; Athanassiadou, M.; Kashem, S.; Madge, S.; et al. Skilful precipitation nowcasting using deep generative models of radar. Nature 2021, 597, 672–677.

27. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.-Y.; Wong, W.-K.; Woo, W.-C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Advances in Neural Information Processing Systems 28; Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; Advances in Neural Information Processing Systems; Neural Information Processing Systems: La Jolla, CA, USA, 2015; Volume 28.

28. Shi, X.; Gao, Z.; Lausen, L.; Wang, H.; Yeung, D.-Y.; Wong, W.-K.; Woo, W.-C. Deep learning for precipitation nowcasting: A benchmark and a new model. In Advances in Neural Information Processing Systems 30; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Advances in Neural Information Processing Systems; Neural Information Processing Systems: La Jolla, CA, USA, 2017; Volume 30.

29. Wang, Y.; Long, M.; Wang, J.; Gao, Z.; Yu, P.S. PredRNN: Recurrent neural networks for predictive learning using spatiotemporal LSTMs. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

30. Lebedev, V.; Ivashkin, V.; Rudenko, I.; Ganshin, A.; Molchanov, A.; Ovcharenko, S.; Grokhovetskiy, R.; Bushmarinov, I.; Solomentsev, D. Precipitation nowcasting with satellite imagery. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 2680–2688.

31. Hilburn, K.A.; Ebert-Uphoff, I.; Miller, S.D. Development and interpretation of a neural-network-based synthetic radar reflectivity estimator using GOES-R satellite observations. J. Appl. Meteorol. Climatol. 2021, 60, 3–21.

32. Veillette, M.S.; Hassey, E.P.; Mattioli, C.J.; Iskenderian, H.; Lamey, P.M. Creating synthetic radar imagery using convolutional neural networks. J. Atmos. Ocean. Technol. 2018, 35, 2323–2338.

33. Duan, M.; Xia, J.; Yan, Z.; Han, L.; Zhang, L.; Xia, H.; Yu, S. Reconstruction of the radar reflectivity of convective storms based on deep learning and Himawari-8 observations. Remote Sens. 2021, 13, 3330.

34. Mohr, C.G.; Vaughan, R.L. Economical procedure for Cartesian interpolation and display of reflectivity factor data in 3-dimensional space. J. Appl. Meteorol. 1979, 18, 661–670.

35. Zhang, J.; Howard, K.; Xia, W.W.; Gourley, J.J. Comparison of objective analysis schemes for the WSR-88D radar data. In Proceedings of the 31st Conference on Radar Meteorology, Seattle, WA, USA, 6–12 August 2003.

36. Davis, C.; Brown, B.; Bullock, R. Object-based verification of precipitation forecasts. Part I: Methodology and application to mesoscale rain areas. Mon. Weather Rev. 2006, 134, 1772–1784.

37. Davis, C.; Brown, B.; Bullock, R. Object-based verification of precipitation forecasts. Part II: Application to convective rain systems. Mon. Weather Rev. 2006, 134, 1785–1795.

38. Skamarock, W.C.; Klemp, J.B.; Dudhia, J.; Gill, D.O.; Barker, D.M.; Wang, W.; Powers, J.G. A description of the Advanced Research WRF version 3. NCAR Tech. Note 2008, 475, 113.

39. Blanco-Ward, D.; Rocha, A.; Viceto, C.; Ribeiro, A.C.; Feliciano, M.; Paoletti, E.; Miranda, A.I. Validation of meteorological and ground-level ozone WRF-CHIMERE simulations in a mountainous grapevine growing area for phytotoxic risk assessment. Atmos. Environ. 2021, 259, 118507.

40. Giordano, C.; Vernin, J.; Ramio, H.V.; Munoz-Tunon, C.; Varela, A.M.; Trinquet, H. Atmospheric and seeing forecast: WRF model validation with in situ measurements at ORM. Mon. Not. R. Astron. Soc. 2013, 430, 3102–3111.

41. Hong, S.Y.; Dudhia, J.; Chen, S.H. A revised approach to ice microphysical processes for the bulk parameterization of clouds and precipitation. Mon. Weather Rev. 2004, 132, 103–120.

42. Mlawer, E.J.; Taubman, S.J.; Brown, P.D.; Iacono, M.J.; Clough, S.A. Radiative transfer for inhomogeneous atmospheres: RRTM, a validated correlated-k model for the longwave. J. Geophys. Res.-Atmos. 1997, 102, 16663–16682.

43. Dudhia, J. Numerical study of convection observed during the winter monsoon experiment using a mesoscale two-dimensional model. J. Atmos. Sci. 1989, 46, 3077–3107.

44. Jimenez, P.A.; Dudhia, J. Improving the representation of resolved and unresolved topographic effects on surface wind in the WRF model. J. Appl. Meteorol. Climatol. 2012, 51, 300–316.

45. Chen, F.; Dudhia, J. Coupling an advanced land surface-hydrology model with the Penn State-NCAR MM5 modeling system. Part I: Model implementation and sensitivity. Mon. Weather Rev. 2001, 129, 569–585.

46. Hong, S.-Y.; Noh, Y.; Dudhia, J. A new vertical diffusion package with an explicit treatment of entrainment processes. Mon. Weather Rev. 2006, 134, 2318–2341.

47. Zhang, C.; Wang, Y.; Hamilton, K. Improved representation of boundary layer clouds over the southeast Pacific in ARW-WRF using a modified Tiedtke cumulus parameterization scheme. Mon. Weather Rev. 2011, 139, 3489–3513.

48. Bessho, K.; Date, K.; Hayashi, M.; Ikeda, A.; Imai, T.; Inoue, H.; Kumagai, Y.; Miyakawa, T.; Murata, H.; Ohno, T.; et al. An introduction to Himawari-8/9—Japan’s new-generation geostationary meteorological satellites. J. Meteorol. Soc. Japan. Ser. II 2016, 94, 151–183.

49. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention, Pt. III; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2015; Volume 9351, pp. 234–241.

50. Trebing, K.; Stanczyk, T.; Mehrkanoon, S. SmaAt-UNet: Precipitation nowcasting using a small attention-UNet architecture. Pattern Recognit. Lett. 2021, 145, 178–186.

51. Li, Y.; Liu, Y.; Sun, R.; Guo, F.; Xu, X.; Xu, H. Convective storm VIL and lightning nowcasting using satellite and weather radar measurements based on multi-task learning models. Adv. Atmos. Sci. 2023, 40, 887–899.

52. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015.

53. Krizhevsky, A.; Hinton, G. Convolutional Deep Belief Networks on CIFAR-10. 2010. Available online: http://www.cs.toronto.edu/~kriz/conv-cifar10-aug2010.pdf (accessed on 26 May 2023).

54. Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747.

55. Ankenbrand, M.J.; Shainberg, L.; Hock, M.; Lohr, D.; Schreiber, L.M. Sensitivity analysis for interpretation of machine learning based segmentation models in cardiac MRI. BMC Med. Imaging 2021, 21, 27.

56. Ladwig, W. wrf-python, Version 1.2.3; GitHub: San Francisco, CA, USA, 2017.

57. Toda, Y.; Okura, F. How convolutional neural networks diagnose plant disease. Plant Phenomics 2019, 2019, 9237136.

58. Poggio, T.; Mhaskar, H.; Rosasco, L.; Miranda, B.; Liao, Q. Why and when can deep- but not shallow-networks avoid the curse of dimensionality: A review. Int. J. Autom. Comput. 2017, 14, 503–519.

59. Mhaskar, H.; Liao, Q.; Poggio, T. When and why are deep networks better than shallow ones? In Proceedings of the 31st AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

60. Doswell, C.A.; Brooks, H.E.; Maddox, R.A. Flash flood forecasting: An ingredients-based methodology. Weather Forecast. 1996, 11, 560–581.

61. Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

62. McGovern, A.; Lagerquist, R.; Gagne, D.J., II; Jergensen, G.E.; Elmore, K.L.; Homeyer, C.R.; Smith, T. Making the black box more transparent: Understanding the physical implications of machine learning. Bull. Am. Meteorol. Soc. 2019, 100, 2175–2199.

63. Bai, J.; Gong, B.; Zhao, Y.; Lei, F.; Yan, C.; Gao, Y. Multi-scale representation learning on hypergraph for 3d shape retrieval and recognition. IEEE Trans. Image Process. 2021, 30, 5327–5338.

64. Jiao, L.; Gao, J.; Liu, X.; Liu, F.; Yang, S.; Hou, B. Multi-scale representation learning for image classification: A survey. IEEE Trans. Artif. Intell. 2021, 4, 23–43.

65. Foresti, L.; Sideris, I.V.; Nerini, D.; Beusch, L.; Germann, U. Using a 10-year radar archive for nowcasting precipitation growth and decay: A probabilistic machine learning approach. Weather Forecast. 2019, 34, 1547–1569.

66. Mallat, S. Group invariant scattering. Commun. Pure Appl. Math. 2012, 65, 1331–1398.

67. Michau, G.; Frusque, G.; Fink, O. Fully learnable deep wavelet transform for unsupervised monitoring of high-frequency time series. Proc. Natl. Acad. Sci. USA 2022, 119, e2106598119.

68. Ramzi, Z.; Michalewicz, K.; Starck, J.-L.; Moreau, T.; Ciuciu, P. Wavelets in the deep learning era. J. Math. Imaging Vis. 2023, 65, 240–251.

69. Wilson, J.; Megenhardt, D.; Pinto, J. NWP and radar extrapolation: Comparisons and explanation of errors. Mon. Weather Rev. 2020, 148, 4783–4798.

70. Fabry, F. Radar Meteorology: Principles and Practice; Cambridge University Press: Cambridge, UK, 2018.

71. Sokol, Z.; Szturc, J.; Orellana-Alvear, J.; Popova, J.; Jurczyk, A.; Celleri, R. The role of weather radar in rainfall estimation and its application in meteorological and hydrological modelling-a review. Remote Sens. 2021, 13, 351.

72. Greff, K.; Srivastava, R.K.; Koutnik, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2222–2232.

73. Gagne, D.J., II; Haupt, S.E.; Nychka, D.W.; Thompson, G. Interpretable deep learning for spatial analysis of severe hailstorms. Mon. Weather Rev. 2019, 147, 2827–2845.

74. Toms, B.A.; Barnes, E.A.; Ebert-Uphoff, I. Physically interpretable neural networks for the geosciences: Applications to Earth system variability. J. Adv. Model. Earth Syst. 2020, 12, e2019MS002002.

75. Davenport, F.V.; Diffenbaugh, N.S. Using machine learning to analyze physical causes of climate change: A case study of U.S. midwest extreme precipitation. Geophys. Res. Lett. 2021, 48, e2021GL093787.

76. Bocquet, M.; Brajard, J.; Carrassi, A.; Bertino, L. Bayesian inference of chaotic dynamics by merging data assimilation, machine learning and expectation-maximization. Found. Data Sci. 2020, 2, 55–80.

77. Geer, A.J. Learning earth system models from observations: Machine learning or data assimilation? Philos. Trans. R. Soc. A-Math. Phys. Eng. Sci. 2021, 379.

78. Penny, S.G.; Smith, T.A.; Chen, T.C.; Platt, J.A.; Lin, H.Y.; Goodliff, M.; Abarbanel, H.D.I. Integrating recurrent neural networks with data assimilation for scalable data-driven state estimation. J. Adv. Model. Earth Syst. 2022, 14, e2021MS002843.

79. Bocquet, M.; Farchi, A.; Malartic, Q. Online learning of both state and dynamics using ensemble Kalman filters. Found. Data Sci. 2021, 3, 305–330.

80. Arcucci, R.; Zhu, J.; Hu, S.; Guo, Y.-K. Deep data assimilation: Integrating deep learning with data assimilation. Appl. Sci. 2021, 11, 1114.

| Process | Parameterization Scheme |
|---|---|
| Microphysics | WSM3 [41] |
| Longwave radiation | RRTM [42] |
| Shortwave radiation | Dudhia [43] |
| Surface layer | Revised MM5 Monin–Obukhov [44] |
| Land surface | Unified Noah land surface model [45] |
| Planetary boundary layer | YSU [46] |
| Cumulus | Modified Tiedtke [47] (outermost domain only) |
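The schemes in the table correspond to standard physics options of the WRF-ARW model. As a minimal sketch, the Python snippet below renders them as a WRF `&physics` namelist fragment. It assumes a three-domain nest (`max_dom = 3` is a hypothetical value, since the domain count is not stated here), and the helper name `physics_namelist` is illustrative only. The option numbers (for example `mp_physics = 3` for WSM3 and `cu_physics = 6` for the modified Tiedtke scheme) follow the WRF user documentation and should be checked against the WRF version actually used.

```python
from typing import Dict, List

# Table entries mapped to WRF-ARW &physics option numbers (per the WRF user guide;
# verify against the WRF version in use). Applied identically on every domain.
PHYSICS_OPTIONS: Dict[str, int] = {
    "mp_physics": 3,          # WSM3 microphysics
    "ra_lw_physics": 1,       # RRTM longwave radiation
    "ra_sw_physics": 1,       # Dudhia shortwave radiation
    "sf_sfclay_physics": 1,   # Revised MM5 Monin-Obukhov surface layer
    "sf_surface_physics": 2,  # Unified Noah land surface model
    "bl_pbl_physics": 1,      # YSU planetary boundary layer
}
CUMULUS_OPTION = 6  # Modified Tiedtke cumulus scheme


def physics_namelist(max_dom: int) -> str:
    """Render a WRF &physics fragment with one value per domain (hypothetical helper)."""
    if max_dom < 1:
        raise ValueError("max_dom must be at least 1")
    lines: List[str] = ["&physics"]
    for name, option in PHYSICS_OPTIONS.items():
        # The same scheme is repeated for every domain.
        values = ", ".join([str(option)] * max_dom)
        lines.append(f" {name:<20} = {values},")
    # Cumulus scheme on domain 1 (outermost) only; 0 switches it off on the nests.
    cumulus = [CUMULUS_OPTION] + [0] * (max_dom - 1)
    lines.append(f" {'cu_physics':<20} = {', '.join(str(c) for c in cumulus)},")
    lines.append("/")
    return "\n".join(lines)


if __name__ == "__main__":
    print(physics_namelist(max_dom=3))  # max_dom = 3 is a hypothetical domain count
```

Writing `cu_physics` as `6, 0, 0` mirrors the table's note that the cumulus scheme is active only on the outermost domain, while the inner domains run without a convective parameterization.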

| Band Number | Central Wavelength (µm) | Related Physical Properties |
|---|---|---|
| 5 | 1.6 | Cloud phase |
| 8 | 6.2 | Mid- and upper-tropospheric humidity |
| 13 | 10.4 | Clouds and cloud tops |
| 15 | 12.4 | Clouds and total water |
| 16 | 13.3 | Cloud heights |
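Treating the five Himawari-8 bands listed in the table as network input channels (an assumption about how they enter the model), the sketch below shows one way to stack them in a fixed channel order. It assumes each band arrives as a 2-D grid already remapped to the radar grid; the per-scene min-max scaling and the names `stack_input_channels` and `min_max_normalize` are hypothetical choices for illustration, not the authors' documented preprocessing. Plain Python lists keep the example dependency-free.

```python
from typing import Dict, List

# Bands from the table: band number -> central wavelength (µm).
AHI_INPUT_BANDS: Dict[int, float] = {5: 1.6, 8: 6.2, 13: 10.4, 15: 12.4, 16: 13.3}

Grid = List[List[float]]


def min_max_normalize(grid: Grid) -> Grid:
    """Scale one 2-D field to [0, 1]; a constant field maps to all zeros (assumed scaling)."""
    values = [v for row in grid for v in row]
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in grid]
    return [[(v - lo) / span for v in row] for row in grid]


def stack_input_channels(scene: Dict[int, Grid]) -> List[Grid]:
    """Return the table's bands as a channel-first stack in ascending band order (hypothetical helper)."""
    missing = sorted(set(AHI_INPUT_BANDS) - set(scene))
    if missing:
        raise KeyError(f"scene is missing AHI bands {missing}")
    # Other bands present in the scene are ignored; only the five listed bands are kept.
    return [min_max_normalize(scene[band]) for band in sorted(AHI_INPUT_BANDS)]


if __name__ == "__main__":
    # Synthetic 2 x 2 scene covering all 16 AHI bands (illustrative values only).
    scene = {b: [[float(b), b + 1.0], [b + 2.0, b + 3.0]] for b in range(1, 17)}
    channels = stack_input_channels(scene)
    print(len(channels), "channels")
```

Scaling each band separately keeps bands with different physical units on a comparable numerical range; fixed per-band physical ranges would be a common alternative when scenes must be comparable across times.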

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, M.; Liao, Q.; Wu, L.; Zhang, S.; Wang, Z.; Pan, X.; Wu, Q.; Wang, Y.; Su, D. Multiscale Representation of Radar Echo Data Retrieved through Deep Learning from Numerical Model Simulations and Satellite Images. Remote Sens. 2023, 15, 3466. https://doi.org/10.3390/rs15143466