UAS Quality Control and Crop Three-Dimensional Characterization Framework Using Multi-Temporal LiDAR Data

Abstract

1. Introduction

1.1. Related Work

1.2. Contributions

- (a)

- We aim to evaluate the overall point densities of multi-temporal point clouds obtained with varying ULS operational parameters, e.g., flight altitude, PRR, and return echo mode [53].

- (b)

- Point clouds have frequently been used to obtain CHM to derive crop height metrics [36]. Therefore, GNSS-based crop heights were measured in the field to assess the accuracy of multi-temporal CHM [36]. Multi-temporal CHM reflect spatio-temporal changes purely from a crop height (z) perspective; failure to address many external factors that influence the LiDAR backscatter, e.g., crop phenology, may result in false observations about canopy heights [54].

- (c)

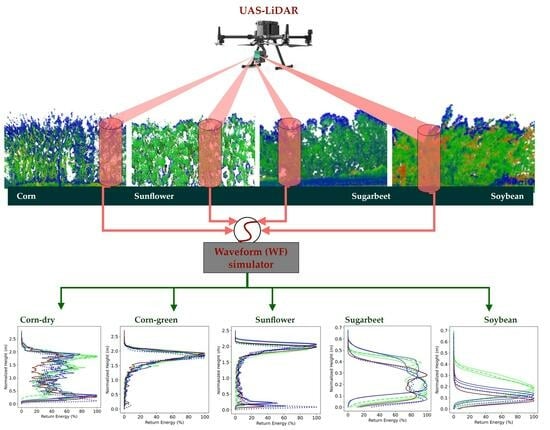

- To understand external factors influencing the LiDAR backscatter, crop characteristic ULS waveform (WF) analysis is performed using multi-temporal simulated WFs [16,55]. WF analysis is designed to address the following: (i) do WFs show the characteristic WFs of corn, sunflower, soybean, and sugar beet, and in multi-temporal WFs; and (ii) does phenology influence the WF shapes, resulting in crop height differences over different crop successional stages? We aimed to consider all internal, e.g., flight altitude, PRR, scanning, and return echo modes, and external factors, e.g., crop type, structural complexity, and phonological stages.

- (d)
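The CHM accuracy check described in contribution (b) can be illustrated with a minimal sketch. It assumes the CHM is obtained as the difference between a surface model (DSM) and a terrain model (DTM), and that accuracy is summarized as bias and RMSE against GNSS reference heights; the function names and all numeric values are hypothetical and are not taken from the study.

```python
import numpy as np


def chm_from_surfaces(dsm, dtm):
    """Canopy height model as DSM minus DTM, with negative heights clipped to zero."""
    chm = np.asarray(dsm, dtype=float) - np.asarray(dtm, dtype=float)
    return np.clip(chm, 0.0, None)


def height_errors(chm_heights, gnss_heights):
    """Return (bias, RMSE) of CHM heights against GNSS reference heights, in metres."""
    diff = np.asarray(chm_heights, dtype=float) - np.asarray(gnss_heights, dtype=float)
    bias = float(diff.mean())
    rmse = float(np.sqrt(np.mean(diff ** 2)))
    return bias, rmse


# Hypothetical 2 x 2 rasters (elevations in metres), for illustration only.
dsm = np.array([[102.5, 102.9], [100.8, 100.6]])
dtm = np.array([[100.0, 100.1], [100.0, 100.0]])
chm = chm_from_surfaces(dsm, dtm)

# Hypothetical GNSS crop heights at the same four locations.
gnss = np.array([2.6, 2.9, 0.9, 0.7])
bias, rmse = height_errors(chm.ravel(), gnss)
print(f"bias = {bias:.2f} m, RMSE = {rmse:.2f} m")
```

A negative bias in such a comparison would indicate that the CHM underestimates the field-measured heights, which is the kind of effect the waveform analysis in (c) is meant to help explain.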

2. Materials and Methods

2.1. Study Area

2.2. Data Collection

2.2.1. ULS Survey

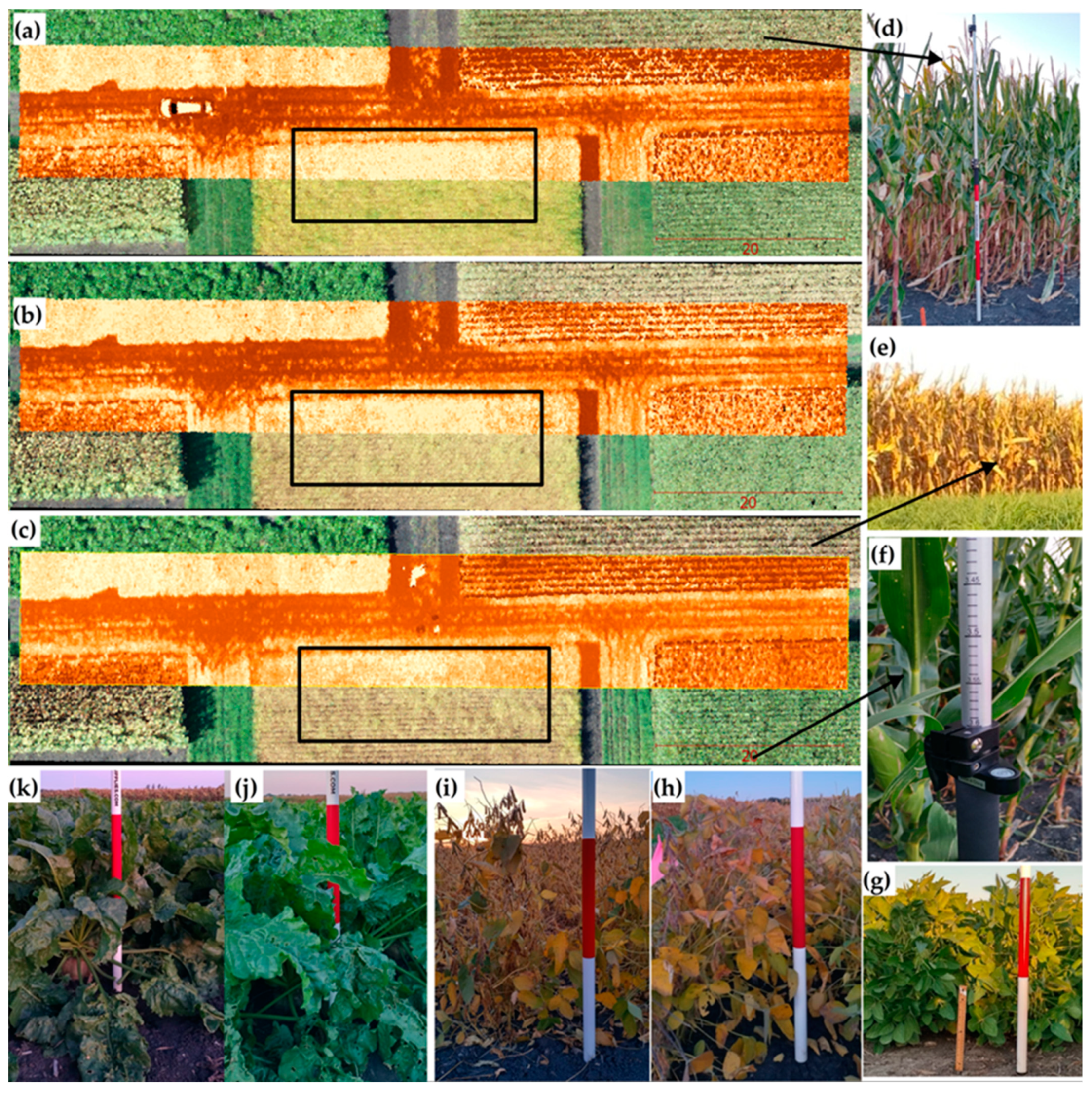

2.2.2. GNSS Field Survey

2.2.3. Terrestrial Laser Scanner

3. Methodology

3.1. Data Processing

3.2. Derivative Products

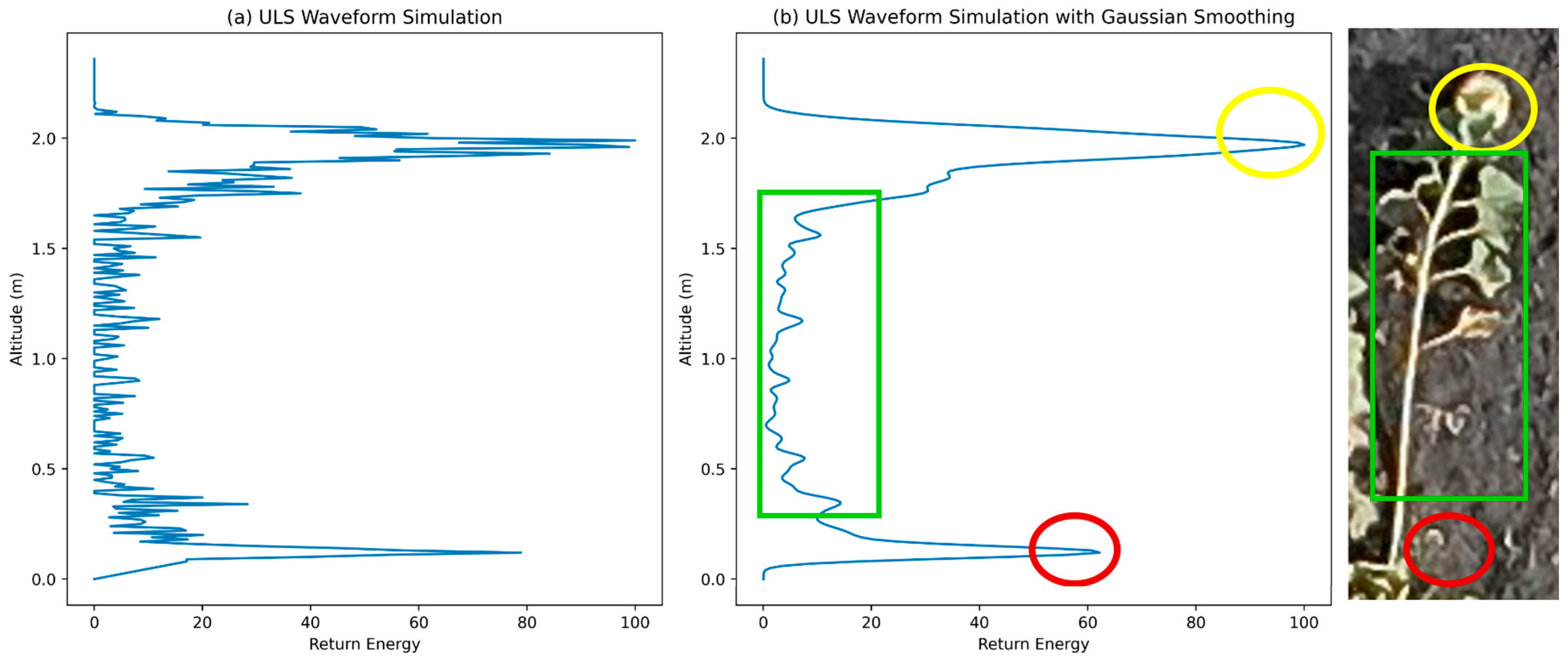

3.3. Simulated ULS/TLS WFs

3.4. Evaluation Approaches

3.4.1. Multi-Temporal CHM Analysis

3.4.2. Multi-Temporal WF Analysis

4. Results

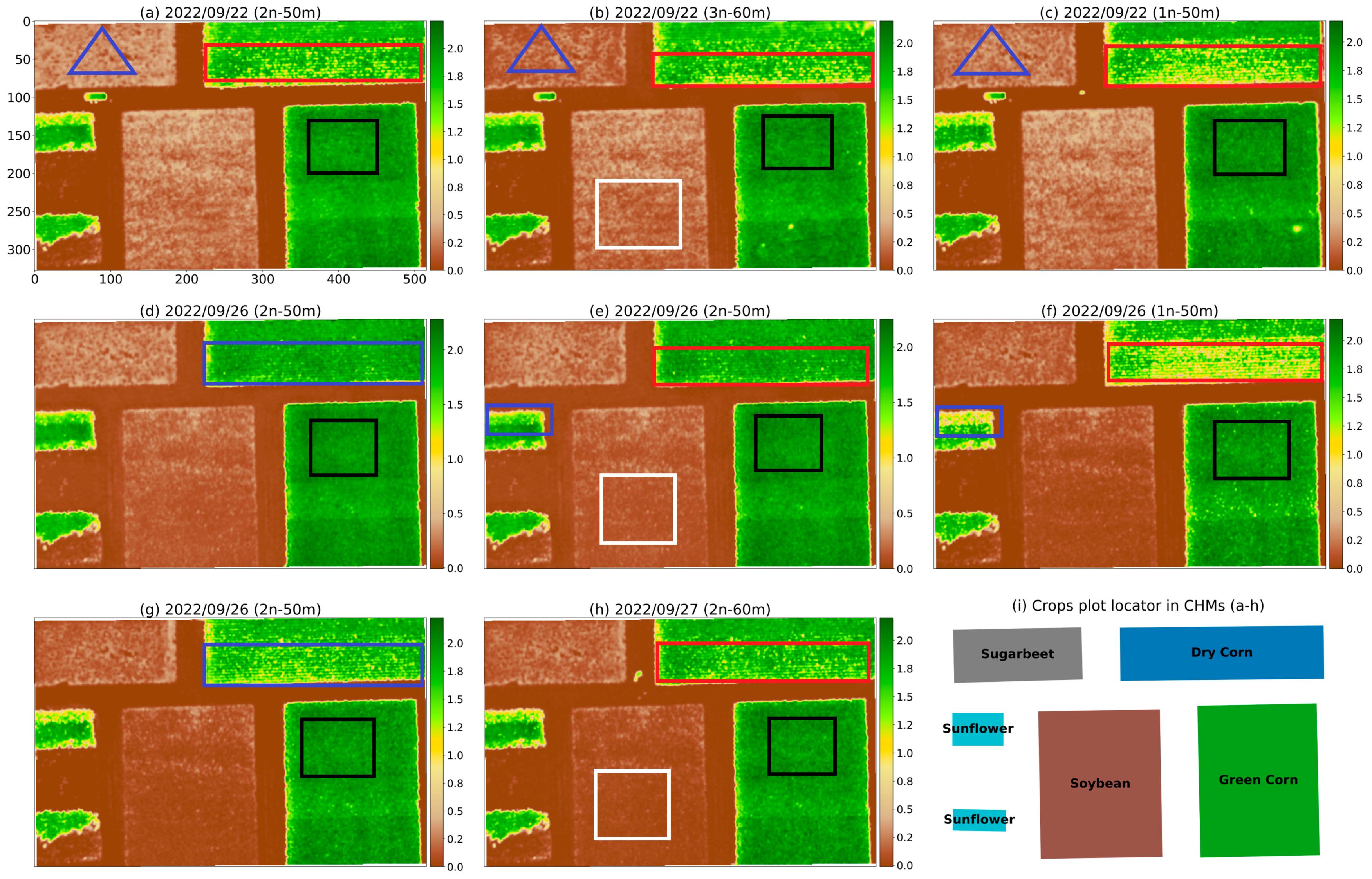

4.1. Point Density Analysis

4.2. Multi-Temporal CHM Analysis

4.3. Crop Height Analysis

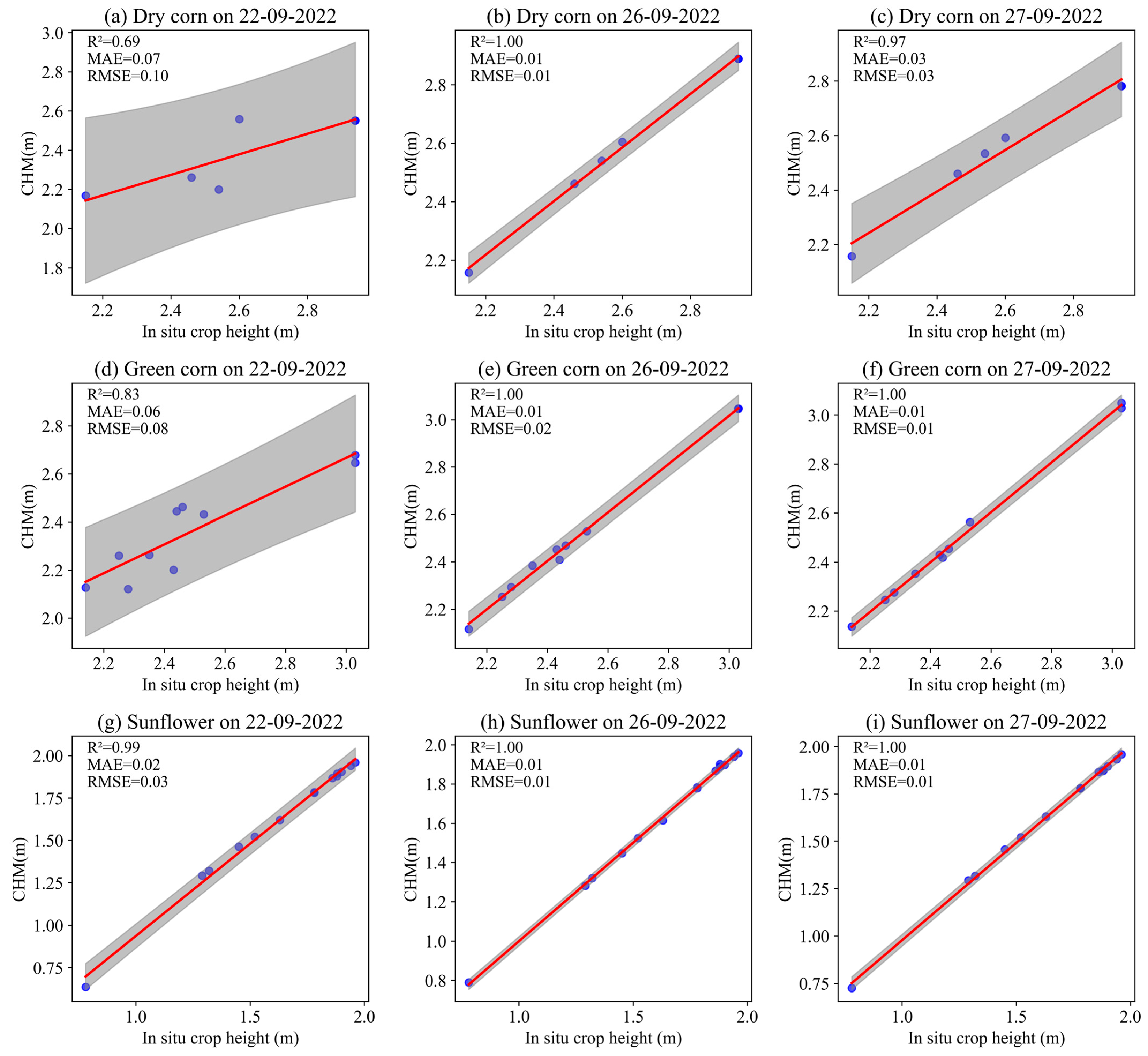

4.3.1. Tall Crop Height Analysis

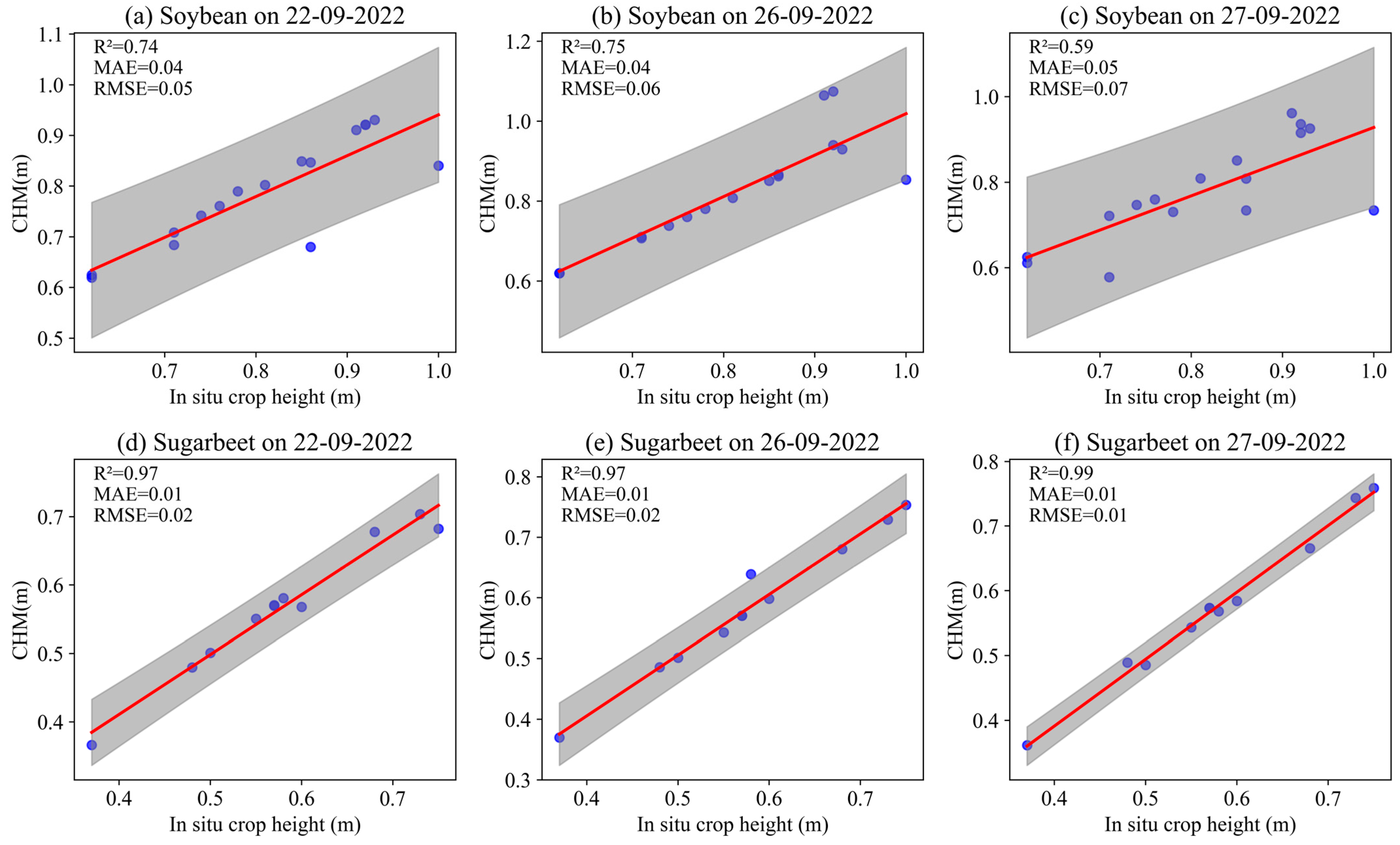

4.3.2. CHM-Based Short Crop Height Analysis

4.4. Multi-Temporal WF Analysis

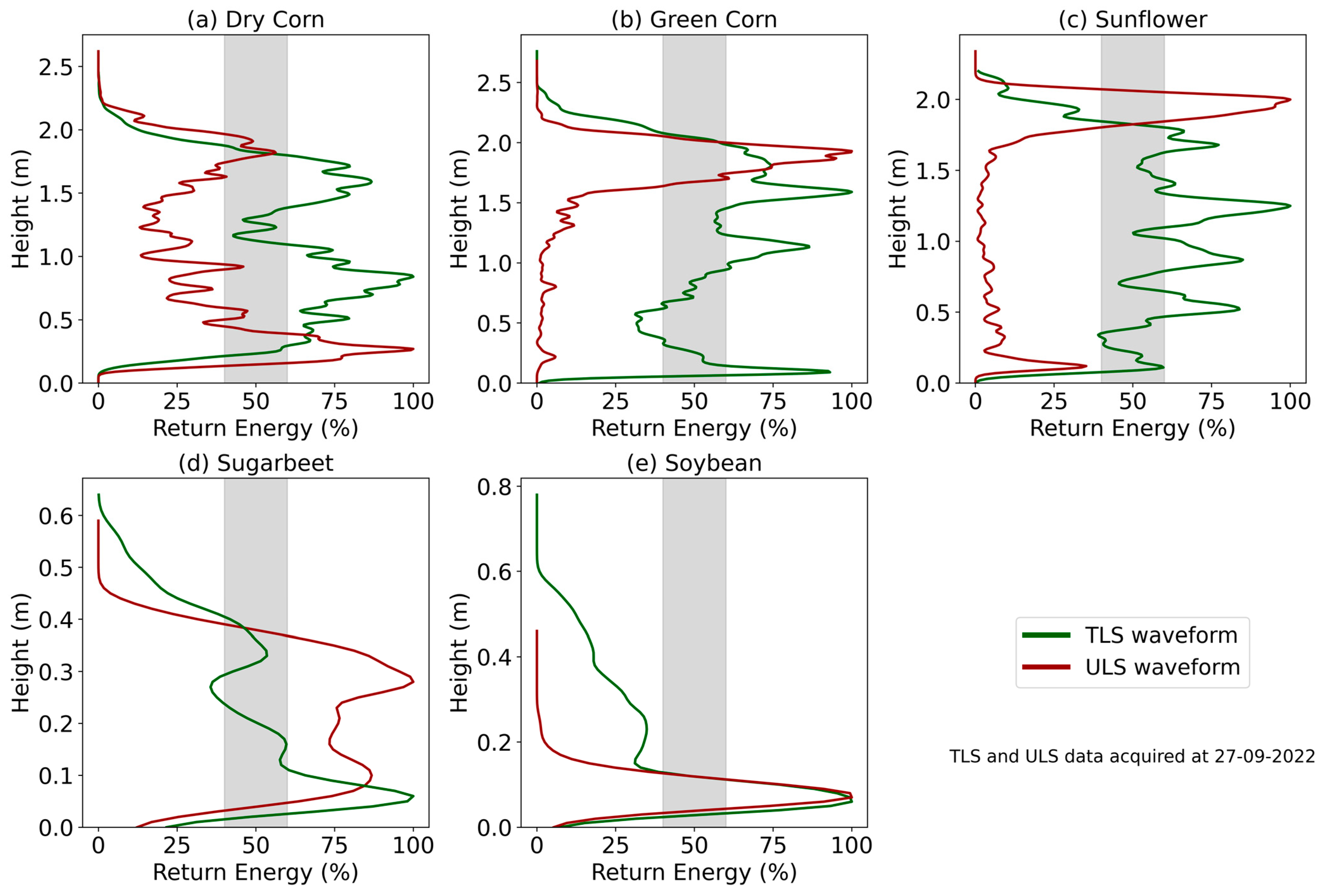

4.4.1. Crop Characteristic WFs

4.4.2. Crop Phenological Stages

4.4.3. Influence of ULS Operational Parameters

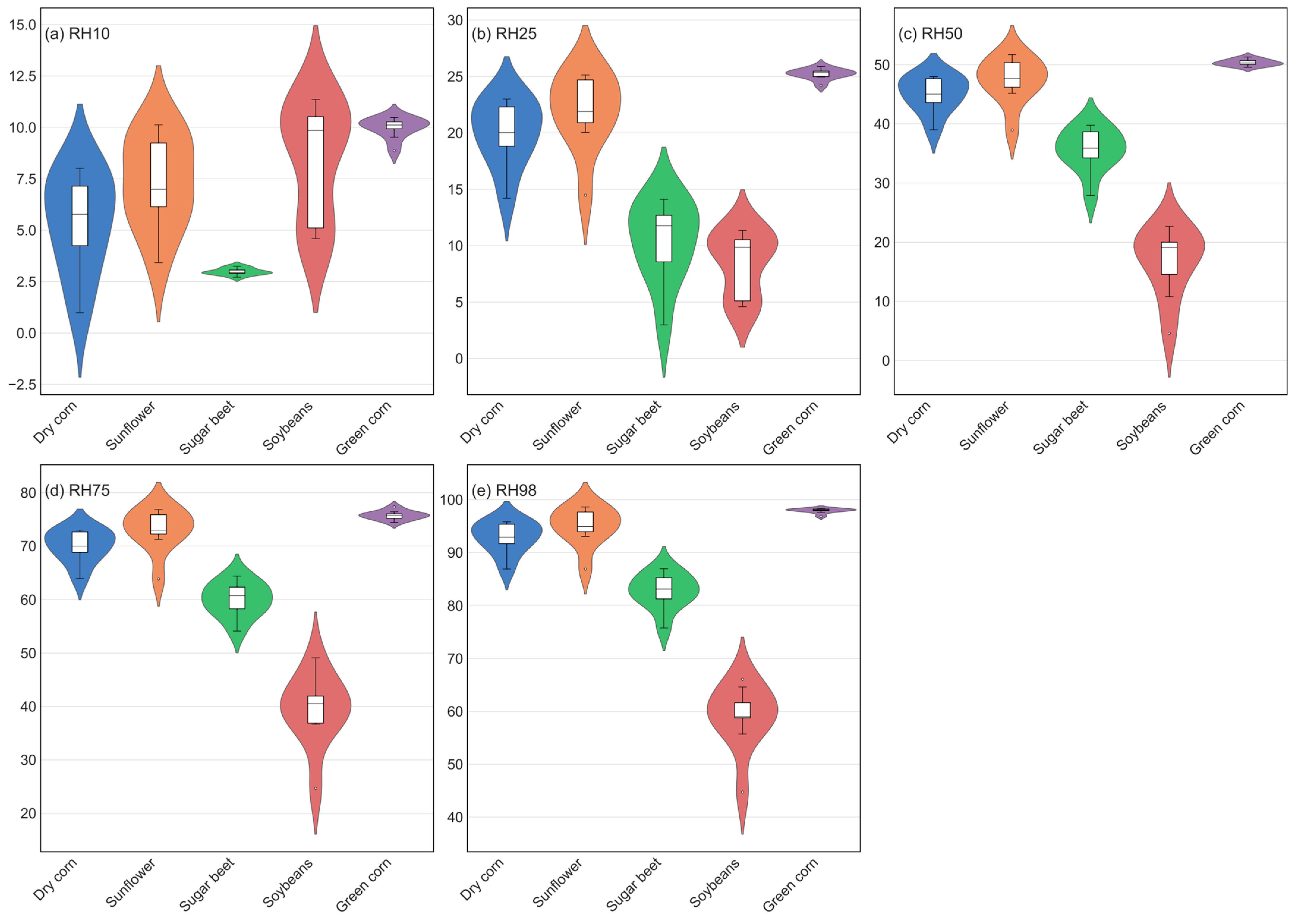

4.5. Statistical Change in RH Metrics

4.6. ULS Crop Characteristic Information Loss

4.6.1. Crop Height (z) Accuracy

4.6.2. Information Loss Assessment

4.6.3. Comparative Assessment of Crop Characteristic WFs

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Rivera, G.; Porras, R.; Florencia, R.; Sánchez-Solís, J.P. LiDAR Applications in Precision Agriculture for Cultivating Crops: A Review of Recent Advances. Comput. Electron. Agric. 2023, 207, 107737. [Google Scholar] [CrossRef]

- Weiss, M.; Jacob, F.; Duveiller, G. Remote Sensing for Agricultural Applications: A Meta-Review. Remote Sens. Environ. 2020, 236, 111402. [Google Scholar] [CrossRef]

- Wendel, A.; Underwood, J.; Walsh, K. Maturity Estimation of Mangoes Using Hyperspectral Imaging from a Ground Based Mobile Platform. Comput. Electron. Agric. 2018, 155, 298–313. [Google Scholar] [CrossRef]

- Comba, L.; Biglia, A.; Ricauda Aimonino, D.; Gay, P. Unsupervised Detection of Vineyards by 3D Point-Cloud UAV Photogrammetry for Precision Agriculture. Comput. Electron. Agric. 2018, 155, 84–95. [Google Scholar] [CrossRef]

- Tao, H.; Xu, S.; Tian, Y.; Li, Z.; Ge, Y.; Zhang, J.; Wang, Y.; Zhou, G.; Deng, X.; Zhang, Z.; et al. Proximal and Remote Sensing in Plant Phenomics: 20 Years of Progress, Challenges, and Perspectives. Plant Commun. 2022, 3, 100344. [Google Scholar] [CrossRef] [PubMed]

- Li, Q.; Jin, S.; Zang, J.; Wang, X.; Sun, Z.; Li, Z.; Xu, S.; Ma, Q.; Su, Y.; Guo, Q.; et al. Deciphering the Contributions of Spectral and Structural Data to Wheat Yield Estimation from Proximal Sensing. Crop J. 2022, 10, 1334–1345. [Google Scholar] [CrossRef]

- Chang, C.Y.; Zhou, R.; Kira, O.; Marri, S.; Skovira, J.; Gu, L.; Sun, Y. An Unmanned Aerial System (UAS) for Concurrent Measurements of Solar-Induced Chlorophyll Fluorescence and Hyperspectral Reflectance toward Improving Crop Monitoring. Agric. For. Meteorol. 2020, 294, 108145. [Google Scholar] [CrossRef]

- Jiang, S.; Jiang, C.; Jiang, W. Efficient Structure from Motion for Large-Scale UAV Images: A Review and a Comparison of SfM Tools. ISPRS J. Photogramm. Remote Sens. 2020, 167, 230–251. [Google Scholar] [CrossRef]

- Zhou, J.; Khot, L.R.; Bahlol, H.Y.; Boydston, R.; Miklas, P.N. Evaluation of Ground, Proximal and Aerial Remote Sensing Technologies for Crop Stress Monitoring. IFAC-Pap. 2016, 49, 22–26. [Google Scholar] [CrossRef]

- Müllerová, J.; Gago, X.; Bučas, M.; Company, J.; Estrany, J.; Fortesa, J.; Manfreda, S.; Michez, A.; Mokroš, M.; Paulus, G.; et al. Characterizing Vegetation Complexity with Unmanned Aerial Systems (UAS)—A Framework and Synthesis. Ecol. Indic. 2021, 131, 108156. [Google Scholar] [CrossRef]

- Li, J.; Tang, L. Developing a Low-Cost 3D Plant Morphological Traits Characterization System. Comput. Electron. Agric. 2017, 143, 1–13. [Google Scholar] [CrossRef]

- Sarić, R.; Nguyen, V.D.; Burge, T.; Berkowitz, O.; Trtílek, M.; Whelan, J.; Lewsey, M.G.; Čustović, E. Applications of Hyperspectral Imaging in Plant Phenotyping. Trends Plant Sci. 2022, 27, 301–315. [Google Scholar] [CrossRef] [PubMed]

- Xie, C.; Yang, C. A Review on Plant High-Throughput Phenotyping Traits Using UAV-Based Sensors. Comput. Electron. Agric. 2020, 178, 105731. [Google Scholar] [CrossRef]

- Hadaś, E.; Estornell, J. Accuracy of Tree Geometric Parameters Depending on the LiDAR Data Density. Eur. J. Remote Sens. 2016, 49, 73–92. [Google Scholar] [CrossRef]

- An, N.; Welch, S.M.; Markelz, R.J.C.; Baker, R.L.; Palmer, C.M.; Ta, J.; Maloof, J.N.; Weinig, C. Quantifying Time-Series of Leaf Morphology Using 2D and 3D Photogrammetry Methods for High-Throughput Plant Phenotyping. Comput. Electron. Agric. 2017, 135, 222–232. [Google Scholar] [CrossRef]

- Zhao, F.; Yang, X.; Schull, M.A.; Román-Colón, M.O.; Yao, T.; Wang, Z.; Zhang, Q.; Jupp, D.L.B.; Lovell, J.L.; Culvenor, D.S.; et al. Measuring Effective Leaf Area Index, Foliage Profile, and Stand Height in New England Forest Stands Using a Full-Waveform Ground-Based Lidar. Remote Sens. Environ. 2011, 115, 2954–2964. [Google Scholar] [CrossRef]

- Rossi, R.; Costafreda-Aumedes, S.; Leolini, L.; Leolini, C.; Bindi, M.; Moriondo, M. Implementation of an Algorithm for Automated Phenotyping through Plant 3D-Modeling: A Practical Application on the Early Detection of Water Stress. Comput. Electron. Agric. 2022, 197, 106937. [Google Scholar] [CrossRef]

- Gao, M.; Yang, F.; Wei, H.; Liu, X. Individual Maize Location and Height Estimation in Field from UAV-Borne LiDAR and RGB Images. Remote Sens. 2022, 14, 2292. [Google Scholar] [CrossRef]

- Luo, S.; Liu, W.; Zhang, Y.; Wang, C.; Xi, X.; Nie, S.; Ma, D.; Lin, Y.; Zhou, G. Maize and Soybean Heights Estimation from Unmanned Aerial Vehicle (UAV) LiDAR Data. Comput. Electron. Agric. 2021, 182, 106005. [Google Scholar] [CrossRef]

- Cucchiaro, S.; Straffelini, E.; Chang, K.-J.; Tarolli, P. Mapping Vegetation-Induced Obstruction in Agricultural Ditches: A Low-Cost and Flexible Approach by UAV-SfM. Agric. Water Manag. 2021, 256, 107083. [Google Scholar] [CrossRef]

- Amarasingam, N.; Ashan Salgadoe, A.S.; Powell, K.; Gonzalez, L.F.; Natarajan, S. A Review of UAV Platforms, Sensors, and Applications for Monitoring of Sugarcane Crops. Remote Sens. Appl. Soc. Environ. 2022, 26, 100712. [Google Scholar] [CrossRef]

- Nex, F.; Armenakis, C.; Cramer, M.; Cucci, D.A.; Gerke, M.; Honkavaara, E.; Kukko, A.; Persello, C.; Skaloud, J. UAV in the Advent of the Twenties: Where We Stand and What Is Next. ISPRS J. Photogramm. Remote Sens. 2022, 184, 215–242. [Google Scholar] [CrossRef]

- Brede, B.; Bartholomeus, H.M.; Barbier, N.; Pimont, F.; Vincent, G.; Herold, M. Peering through the Thicket: Effects of UAV LiDAR Scanner Settings and Flight Planning on Canopy Volume Discovery. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103056. [Google Scholar] [CrossRef]

- Nowak, M.M.; Pędziwiatr, K.; Bogawski, P. Hidden Gaps under the Canopy: LiDAR-Based Detection and Quantification of Porosity in Tree Belts. Ecol. Indic. 2022, 142, 109243. [Google Scholar] [CrossRef]

- Fareed, N.; Flores, J.P.; Das, A.K. Analysis of UAS-LiDAR Ground Points Classification in Agricultural Fields Using Traditional Algorithms and PointCNN. Remote Sens. 2023, 15, 483. [Google Scholar] [CrossRef]

- Jurado, J.J.M.; López, A.; Pádua, L.; Sousa, J.J. Remote Sensing Image Fusion on 3D Scenarios: A Review of Applications for Agriculture and Forestry. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102856. [Google Scholar] [CrossRef]

- Lin, Y. LiDAR: An Important Tool for next-Generation Phenotyping Technology of High Potential for Plant Phenomics? Comput. Electron. Agric. 2015, 119, 61–73. [Google Scholar] [CrossRef]

- Micheletto, M.J.; Chesñevar, C.I.; Santos, R. Methods and Applications of 3D Ground Crop Analysis Using LiDAR Technology: A Survey. Sensors 2023, 23, 7212. [Google Scholar] [CrossRef] [PubMed]

- Sofonia, J.J.; Phinn, S.; Roelfsema, C.; Kendoul, F.; Rist, Y. Modelling the Effects of Fundamental UAV Flight Parameters on LiDAR Point Clouds to Facilitate Objectives-Based Planning. ISPRS J. Photogramm. Remote Sens. 2019, 149, 105–118. [Google Scholar] [CrossRef]

- Maltamo, M.; Peltola, P.; Packalen, P.; Hardenbol, A.; Räty, J.; Saksa, T.; Eerikäinen, K.; Korhonen, L. Can Models for Forest Attributes Based on Airborne Laser Scanning Be Generalized for Different Silvicultural Management Systems? For. Ecol. Manag. 2023, 546, 121312. [Google Scholar] [CrossRef]

- Mathews, A.J.; Singh, K.K.; Cummings, A.R.; Rogers, S.R. Fundamental Practices for Drone Remote Sensing Research across Disciplines. Drone Syst. Appl. 2023, 11, 1–22. [Google Scholar] [CrossRef]

- Diara, F.; Roggero, M. Quality Assessment of DJI Zenmuse L1 and P1 LiDAR and Photogrammetric Systems: Metric and Statistics Analysis with the Integration of Trimble SX10 Data. Geomatics 2022, 2, 254–281. [Google Scholar] [CrossRef]

- Adnan, S.; Maltamo, M.; Mehtätalo, L.; Ammaturo, R.N.L.; Packalen, P.; Valbuena, R. Determining Maximum Entropy in 3D Remote Sensing Height Distributions and Using It to Improve Aboveground Biomass Modelling via Stratification. Remote Sens. Environ. 2021, 260, 112464. [Google Scholar] [CrossRef]

- Pedersen, R.Ø.; Bollandsås, O.M.; Gobakken, T.; Næsset, E. Deriving Individual Tree Competition Indices from Airborne Laser Scanning. For. Ecol. Manag. 2012, 280, 150–165. [Google Scholar] [CrossRef]

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR System with Application to Forest Inventory. Remote Sens. 2012, 4, 1519–1543. [Google Scholar] [CrossRef]

- Zheng, Z.; Schmid, B.; Zeng, Y.; Schuman, M.C.; Zhao, D.; Schaepman, M.E.; Morsdorf, F. Remotely Sensed Functional Diversity and Its Association with Productivity in a Subtropical Forest. Remote Sens. Environ. 2023, 290, 113530. [Google Scholar] [CrossRef]

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A Compilation of UAV Applications for Precision Agriculture. Comput. Netw. 2020, 172, 107148. [Google Scholar] [CrossRef]

- Malambo, L.; Popescu, S.C.; Horne, D.W.; Pugh, N.A.; Rooney, W.L. Automated Detection and Measurement of Individual Sorghum Panicles Using Density-Based Clustering of Terrestrial Lidar Data. ISPRS J. Photogramm. Remote Sens. 2019, 149, 1–13. [Google Scholar] [CrossRef]

- Bi, K.; Gao, S.; Xiao, S.; Zhang, C.; Bai, J.; Huang, N.; Sun, G.; Niu, Z. N Distribution Characterization Based on Organ-Level Biomass and N Concentration Using a Hyperspectral Lidar. Comput. Electron. Agric. 2022, 199, 107165. [Google Scholar] [CrossRef]

- Hu, X.; Sun, L.; Gu, X.; Sun, Q.; Wei, Z.; Pan, Y.; Chen, L. Assessing the Self-Recovery Ability of Maize after Lodging Using UAV-LiDAR Data. Remote Sens. 2021, 13, 2270. [Google Scholar] [CrossRef]

- Tian, Y.; Huang, H.; Zhou, G.; Zhang, Q.; Tao, J.; Zhang, Y.; Lin, J. Aboveground Mangrove Biomass Estimation in Beibu Gulf Using Machine Learning and UAV Remote Sensing. Sci. Total Environ. 2021, 781, 146816. [Google Scholar] [CrossRef]

- Jin, C.; Oh, C.; Shin, S.; Wilfred Njungwi, N.; Choi, C. A Comparative Study to Evaluate Accuracy on Canopy Height and Density Using UAV, ALS, and Fieldwork. Forests 2020, 11, 241. [Google Scholar] [CrossRef]

- Lin, Y.-C.; Cheng, Y.-T.; Zhou, T.; Ravi, R.; Hasheminasab, S.; Flatt, J.; Troy, C.; Habib, A. Evaluation of UAV LiDAR for Mapping Coastal Environments. Remote Sens. 2019, 11, 2893. [Google Scholar] [CrossRef]

- Hu, T.; Sun, X.; Su, Y.; Guan, H.; Sun, Q.; Kelly, M.; Guo, Q. Development and Performance Evaluation of a Very Low-Cost UAV-Lidar System for Forestry Applications. Remote Sens. 2020, 13, 77. [Google Scholar] [CrossRef]

- Zhang, Y.; Tan, Y.; Onda, Y.; Hashimoto, A.; Gomi, T.; Chiu, C.; Inokoshi, S. A Tree Detection Method Based on Trunk Point Cloud Section in Dense Plantation Forest Using Drone LiDAR Data. For. Ecosyst. 2023, 10, 100088. [Google Scholar] [CrossRef]

- Štroner, M.; Urban, R.; Línková, L. A New Method for UAV Lidar Precision Testing Used for the Evaluation of an Affordable DJI ZENMUSE L1 Scanner. Remote Sens. 2021, 13, 4811. [Google Scholar] [CrossRef]

- Bartmiński, P.; Siłuch, M.; Kociuba, W. The Effectiveness of a UAV-Based LiDAR Survey to Develop Digital Terrain Models and Topographic Texture Analyses. Sensors 2023, 23, 6415. [Google Scholar] [CrossRef]

- Lao, Z.; Fu, B.; Wei, Y.; Deng, T.; He, W.; Yang, Y.; He, H.; Gao, E. Retrieval of Chlorophyll Content for Vegetation Communities under Different Inundation Frequencies Using UAV Images and Field Measurements. Ecol. Indic. 2024, 158, 111329. [Google Scholar] [CrossRef]

- Hu, X.; Gu, X.; Sun, Q.; Yang, Y.; Qu, X.; Yang, X.; Guo, R. Comparison of the Performance of Multi-Source Three-Dimensional Structural Data in the Application of Monitoring Maize Lodging. Comput. Electron. Agric. 2023, 208, 107782. [Google Scholar] [CrossRef]

- Shu, M.; Li, Q.; Ghafoor, A.; Zhu, J.; Li, B.; Ma, Y. Using the Plant Height and Canopy Coverage to Estimation Maize Aboveground Biomass with UAV Digital Images. Eur. J. Agron. 2023, 151, 126957. [Google Scholar] [CrossRef]

- Wang, D.; Xing, S.; He, Y.; Yu, J.; Xu, Q.; Li, P. Evaluation of a New Lightweight UAV-Borne Topo-Bathymetric LiDAR for Shallow Water Bathymetry and Object Detection. Sensors 2022, 22, 1379. [Google Scholar] [CrossRef] [PubMed]

- Appiah Mensah, A.; Jonzén, J.; Nyström, K.; Wallerman, J.; Nilsson, M. Mapping Site Index in Coniferous Forests Using Bi-Temporal Airborne Laser Scanning Data and Field Data from the Swedish National Forest Inventory. For. Ecol. Manag. 2023, 547, 121395. [Google Scholar] [CrossRef]

- Zhou, C.; Ye, H.; Sun, D.; Yue, J.; Yang, G.; Hu, J. An Automated, High-Performance Approach for Detecting and Characterizing Broccoli Based on UAV Remote-Sensing and Transformers: A Case Study from Haining, China. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103055. [Google Scholar] [CrossRef]

- Korpela, I.; Polvivaara, A.; Hovi, A.; Junttila, S.; Holopainen, M. Influence of Phenology on Waveform Features in Deciduous and Coniferous Trees in Airborne LiDAR. Remote Sens. Environ. 2023, 293, 113618. [Google Scholar] [CrossRef]

- Dalponte, M.; Jucker, T.; Liu, S.; Frizzera, L.; Gianelle, D. Characterizing Forest Carbon Dynamics Using Multi-Temporal Lidar Data. Remote Sens. Environ. 2019, 224, 412–420. [Google Scholar] [CrossRef]

- Penman, S.; Lentini, P.; Law, B.; York, A. An Instructional Workflow for Using Terrestrial Laser Scanning (TLS) to Quantify Vegetation Structure for Wildlife Studies. For. Ecol. Manag. 2023, 548, 121405. [Google Scholar] [CrossRef]

- Su, W.; Zhu, D.; Huang, J.; Guo, H. Estimation of the Vertical Leaf Area Profile of Corn (Zea Mays) Plants Using Terrestrial Laser Scanning (TLS). Comput. Electron. Agric. 2018, 150, 5–13. [Google Scholar] [CrossRef]

- Xuming, G.; Yuting, F.; Qing, Z.; Bin, W.; Bo, X.; Han, H.; Min, C. Semantic Maps for Cross-View Relocalization of Terrestrial to UAV Point Clouds. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103081. [Google Scholar] [CrossRef]

- Yan, D.; Li, J.; Yao, X.; Luan, Z. Integrating UAV Data for Assessing the Ecological Response of Spartina Alterniflora towards Inundation and Salinity Gradients in Coastal Wetland. Sci. Total Environ. 2022, 814, 152631. [Google Scholar] [CrossRef]

- Xu, W.; Yang, W.; Wu, J.; Chen, P.; Lan, Y.; Zhang, L. Canopy Laser Interception Compensation Mechanism—UAV LiDAR Precise Monitoring Method for Cotton Height. Agronomy 2023, 13, 2584. [Google Scholar] [CrossRef]

- Zhang, Z.; Pan, L. Current Performance of Open Position Service with Almost Fully Deployed Multi-GNSS Constellations: GPS, GLONASS, Galileo, BDS-2, and BDS-3. Adv. Space Res. 2022, 69, 1994–2019. [Google Scholar] [CrossRef]

- Beland, M.; Parker, G.; Sparrow, B.; Harding, D.; Chasmer, L.; Phinn, S.; Antonarakis, A.; Strahler, A. On Promoting the Use of Lidar Systems in Forest Ecosystem Research. For. Ecol. Manag. 2019, 450, 117484. [Google Scholar] [CrossRef]

- Sun, J.; Lin, Y. Assessing the Allometric Scaling of Vectorized Branch Lengths of Trees with Terrestrial Laser Scanning and Quantitative Structure Modeling: A Case Study in Guyana. Remote Sens. 2023, 15, 5005. [Google Scholar] [CrossRef]

- El-Naggar, A.G.; Jolly, B.; Hedley, C.B.; Horne, D.; Roudier, P.; Clothier, B.E. The Use of Terrestrial LiDAR to Monitor Crop Growth and Account for Within-Field Variability of Crop Coefficients and Water Use. Comput. Electron. Agric. 2021, 190, 106416. [Google Scholar] [CrossRef]

- Guo, Q.; Su, Y.; Hu, T. The Origin and Development of LiDAR Techniques. In LiDAR Principles, Processing and Applications in Forest Ecology; Elsevier: Amsterdam, The Netherlands, 2023; pp. 1–22. ISBN 978-0-12-823894-3. [Google Scholar]

- Jin, S.; Sun, X.; Wu, F.; Su, Y.; Li, Y.; Song, S.; Xu, K.; Ma, Q.; Baret, F.; Jiang, D.; et al. Lidar Sheds New Light on Plant Phenomics for Plant Breeding and Management: Recent Advances and Future Prospects. ISPRS J. Photogramm. Remote Sens. 2021, 171, 202–223. [Google Scholar] [CrossRef]

- Willers, J.; Jin, M.; Eksioglu, B.; Zusmanis, A.; O’Hara, C.; Jenkins, J. A Post-Processing Step Error Correction Algorithm for Overlapping LiDAR Strips from Agricultural Landscapes. Comput. Electron. Agric. 2008, 64, 183–193. [Google Scholar] [CrossRef]

- Shao, J.; Yao, W.; Wan, P.; Luo, L.; Wang, P.; Yang, L.; Lyu, J.; Zhang, W. Efficient Co-Registration of UAV and Ground LiDAR Forest Point Clouds Based on Canopy Shapes. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103067. [Google Scholar] [CrossRef]

- Letard, M.; Lague, D.; Le Guennec, A.; Lefèvre, S.; Feldmann, B.; Leroy, P.; Girardeau-Montaut, D.; Corpetti, T. 3DMASC: Accessible, Explainable 3D Point Clouds Classification. Application to BI-Spectral TOPO-Bathymetric Lidar Data. ISPRS J. Photogramm. Remote Sens. 2024, 207, 175–197. [Google Scholar] [CrossRef]

- Wang, B.; Ma, Y.; Zhang, J.; Zhang, H.; Zhu, H.; Leng, Z.; Zhang, X.; Cui, A. A Noise Removal Algorithm Based on Adaptive Elevation Difference Thresholding for ICESat-2 Photon-Counting Data. Int. J. Appl. Earth Obs. Geoinf. 2023, 117, 103207. [Google Scholar] [CrossRef]

- Nelson, A.; Reuter, H.I.; Gessler, P. Chapter 3 DEM Production Methods and Sources. In Developments in Soil Science; Elsevier: Amsterdam, The Netherlands, 2009; Volume 33, ISBN 978-0-12-374345-9. [Google Scholar]

- Roussel, J.-R.; Auty, D.; Coops, N.C.; Tompalski, P.; Goodbody, T.R.H.; Meador, A.S.; Bourdon, J.-F.; De Boissieu, F.; Achim, A. lidR: An R Package for Analysis of Airborne Laser Scanning (ALS) Data. Remote Sens. Environ. 2020, 251, 112061. [Google Scholar] [CrossRef]

- Dong, T.; Zhang, X.; Ding, Z.; Fan, J. Multi-Layered Tree Crown Extraction from LiDAR Data Using Graph-Based Segmentation. Comput. Electron. Agric. 2020, 170, 105213. [Google Scholar] [CrossRef]

- Klápště, P.; Fogl, M.; Barták, V.; Gdulová, K.; Urban, R.; Moudrý, V. Sensitivity Analysis of Parameters and Contrasting Performance of Ground Filtering Algorithms with UAV Photogrammetry-Based and LiDAR Point Clouds. Int. J. Digit. Earth 2020, 13, 1672–1694. [Google Scholar] [CrossRef]

- Khosravipour, A.; Skidmore, A.K.; Isenburg, M.; Wang, T.; Hussin, Y.A. Generating Pit-Free Canopy Height Models from Airborne Lidar. Photogramm. Eng. Remote Sens. 2014, 80, 863–872. [Google Scholar] [CrossRef]

- Blair, J.B.; Hofton, M.A. Modeling Laser Altimeter Return Waveforms over Complex Vegetation Using High-resolution Elevation Data. Geophys. Res. Lett. 1999, 26, 2509–2512. [Google Scholar] [CrossRef]

- Myroniuk, V.; Zibtsev, S.; Bogomolov, V.; Goldammer, J.G.; Soshenskyi, O.; Levchenko, V.; Matsala, M. Combining Landsat Time Series and GEDI Data for Improved Characterization of Fuel Types and Canopy Metrics in Wildfire Simulation. J. Environ. Manag. 2023, 345, 118736. [Google Scholar] [CrossRef] [PubMed]

- Gu, Z.; Cao, S.; Sanchez-Azofeifa, G.A. Using LiDAR Waveform Metrics to Describe and Identify Successional Stages of Tropical Dry Forests. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 482–492. [Google Scholar] [CrossRef]

- Di Tommaso, S.; Wang, S.; Vajipey, V.; Gorelick, N.; Strey, R.; Lobell, D.B. Annual Field-Scale Maps of Tall and Short Crops at the Global Scale Using GEDI and Sentinel-2. Remote Sens. 2023, 15, 4123. [Google Scholar] [CrossRef]

- Wang, Y.; Ni, W.; Sun, G.; Chi, H.; Zhang, Z.; Guo, Z. Slope-Adaptive Waveform Metrics of Large Footprint Lidar for Estimation of Forest Aboveground Biomass. Remote Sens. Environ. 2019, 224, 386–400. [Google Scholar] [CrossRef]

- Ranson, K.J.; Sun, G. Modeling Lidar Returns from Forest Canopies. IEEE Trans. Geosci. Remote Sens. 2000, 38, 2617–2626. [Google Scholar] [CrossRef]

- Amini Amirkolaee, H.; Arefi, H.; Ahmadlou, M.; Raikwar, V. DTM Extraction from DSM Using a Multi-Scale DTM Fusion Strategy Based on Deep Learning. Remote Sens. Environ. 2022, 274, 113014. [Google Scholar] [CrossRef]

- Jing, L.; Wei, X.; Song, Q.; Wang, F. Research on Estimating Rice Canopy Height and LAI Based on LiDAR Data. Sensors 2023, 23, 8334. [Google Scholar] [CrossRef]

- Fieuzal, R.; Baup, F. Estimation of Leaf Area Index and Crop Height of Sunflowers Using Multi-Temporal Optical and SAR Satellite Data. Int. J. Remote Sens. 2016, 37, 2780–2809. [Google Scholar] [CrossRef]

- Jay, S.; Bendoula, R.; Hadoux, X.; Féret, J.-B.; Gorretta, N. A Physically-Based Model for Retrieving Foliar Biochemistry and Leaf Orientation Using Close-Range Imaging Spectroscopy. Remote Sens. Environ. 2016, 177, 220–236. [Google Scholar] [CrossRef]

- Seo, B.; Lee, J.; Lee, K.-D.; Hong, S.; Kang, S. Improving Remotely-Sensed Crop Monitoring by NDVI-Based Crop Phenology Estimators for Corn and Soybeans in Iowa and Illinois, USA. Field Crops Res. 2019, 238, 113–128. [Google Scholar] [CrossRef]

- Yue, J.; Tian, J.; Philpot, W.; Tian, Q.; Feng, H.; Fu, Y. VNAI-NDVI-Space and Polar Coordinate Method for Assessing Crop Leaf Chlorophyll Content and Fractional Cover. Comput. Electron. Agric. 2023, 207, 107758. [Google Scholar] [CrossRef]

- Mallet, C.; Bretar, F. Full-Waveform Topographic Lidar: State-of-the-Art. ISPRS J. Photogramm. Remote Sens. 2009, 64, 1–16. [Google Scholar] [CrossRef]

- Malambo, L.; Popescu, S.C.; Murray, S.C.; Putman, E.; Pugh, N.A.; Horne, D.W.; Richardson, G.; Sheridan, R.; Rooney, W.L.; Avant, R.; et al. Multitemporal Field-Based Plant Height Estimation Using 3D Point Clouds Generated from Small Unmanned Aerial Systems High-Resolution Imagery. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 31–42. [Google Scholar] [CrossRef]

- Zhao, X.; Su, Y.; Hu, T.; Cao, M.; Liu, X.; Yang, Q.; Guan, H.; Liu, L.; Guo, Q. Analysis of UAV Lidar Information Loss and Its Influence on the Estimation Accuracy of Structural and Functional Traits in a Meadow Steppe. Ecol. Indic. 2022, 135, 108515. [Google Scholar] [CrossRef]

- Jaakkola, A.; Hyyppä, J.; Kukko, A.; Yu, X.; Kaartinen, H.; Lehtomäki, M.; Lin, Y. A Low-Cost Multi-Sensoral Mobile Mapping System and Its Feasibility for Tree Measurements. ISPRS J. Photogramm. Remote Sens. 2010, 65, 514–522. [Google Scholar] [CrossRef]

- Ma, H.; Zhou, W.; Zhang, L. DEM Refinement by Low Vegetation Removal Based on the Combination of Full Waveform Data and Progressive TIN Densification. ISPRS J. Photogramm. Remote Sens. 2018, 146, 260–271. [Google Scholar] [CrossRef]

- Liu, G.; Xia, J.; Zheng, K.; Cheng, J.; Wang, K.; Liu, Z.; Wei, Y.; Xie, D. Measurement and Evaluation Method of Farmland Microtopography Feature Information Based on 3D LiDAR and Inertial Measurement Unit. Soil Tillage Res. 2024, 236, 105921. [Google Scholar] [CrossRef]

- Alexander, C.; Korstjens, A.H.; Hill, R.A. Influence of Micro-Topography and Crown Characteristics on Tree Height Estimations in Tropical Forests Based on LiDAR Canopy Height Models. Int. J. Appl. Earth Obs. Geoinf. 2018, 65, 105–113. [Google Scholar] [CrossRef]

- Kohek, Š.; Žalik, B.; Strnad, D.; Kolmanič, S.; Lukač, N. Simulation-Driven 3D Forest Growth Forecasting Based on Airborne Topographic LiDAR Data and Shading. Int. J. Appl. Earth Obs. Geoinf. 2022, 111, 102844. [Google Scholar] [CrossRef]

- Guo, T.; Fang, Y.; Cheng, T.; Tian, Y.; Zhu, Y.; Chen, Q.; Qiu, X.; Yao, X. Detection of Wheat Height Using Optimized Multi-Scan Mode of LiDAR during the Entire Growth Stages. Comput. Electron. Agric. 2019, 165, 104959. [Google Scholar] [CrossRef]

- Ao, Z.; Wu, F.; Hu, S.; Sun, Y.; Su, Y.; Guo, Q.; Xin, Q. Automatic Segmentation of Stem and Leaf Components and Individual Maize Plants in Field Terrestrial LiDAR Data Using Convolutional Neural Networks. Crop J. 2022, 10, 1239–1250. [Google Scholar] [CrossRef]

- Chen, Y.; Xiong, Y.; Zhang, B.; Zhou, J.; Zhang, Q. 3D Point Cloud Semantic Segmentation toward Large-Scale Unstructured Agricultural Scene Classification. Comput. Electron. Agric. 2021, 190, 106445. [Google Scholar] [CrossRef]

- Li, L.; Mu, X.; Chianucci, F.; Qi, J.; Jiang, J.; Zhou, J.; Chen, L.; Huang, H.; Yan, G.; Liu, S. Ultrahigh-Resolution Boreal Forest Canopy Mapping: Combining UAV Imagery and Photogrammetric Point Clouds in a Deep-Learning-Based Approach. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102686. [Google Scholar] [CrossRef]

| Stage | Echo Mode | Date | Frequency (kHz) | Scanning Mode | UAS Flight Altitude | UAS Flight Strip Overlap (%) | UAS Flight Speed (m/s) |
|---|---|---|---|---|---|---|---|
| Early | 1 | 22 September 2022 | 160 | Repetitive | 50 | 50 | 5 |
| Early | 2 | 22 September 2022 | 160 | Non-Repetitive | 50 | 50 | 5 |
| Early | 3 | 22 September 2022 | 260 | Repetitive | 60 | 50 | 5 |
| Intermediate | 1 | 26 September 2022 | 160 | Repetitive | 50 | 50 | 5 |
| Intermediate | 2 | 26 September 2022 | 160 | Non-Repetitive | 50 | 50 | 5 |
| Intermediate | 2 | 26 September 2022 | 260 | Repetitive | 50 | 50 | 5 |
| Late | 2 | 27 September 2022 | 260 | Repetitive | 60 | 50 | 5 |

| Crop | No. of Obs. | Minimum Crop Height (m) | Maximum Crop Height (m) | Mean Crop Height (m) | Standard Deviation (m) |
|---|---|---|---|---|---|
| Green corn | 10 | 2.14 | 3.03 | 2.49 | 0.30 |
| Dry corn | 6 | 2.15 | 2.94 | 2.60 | 0.30 |
| Soybean | 16 | 0.62 | 1.00 | 0.81 | 0.11 |
| Sugar beet | 11 | 0.37 | 0.75 | 0.58 | 0.11 |
| Sunflower | 14 | 0.78 | 1.96 | 1.64 | 0.33 |

| ID | Scanning Mode | Crop | Pts./m² | Wavelength | FOV | Range | Scan Mode | Range Accuracy |
|---|---|---|---|---|---|---|---|---|
| 1 | Spherical | Dry corn | 9236.71 | 1064 nm | 70.4 H: 77.4 V | 200 m | Non-repetitive | 2 mm |
| 2 | Spherical | Green corn | 14,557.61 | 1064 nm | 70.4 H: 77.4 V | 200 m | Non-repetitive | 2 mm |
| 3 | Spherical | Sunflower | 4872.45 | 1064 nm | 70.4 H: 77.4 V | 200 m | Non-repetitive | 2 mm |
| 4 | Spherical | Sugar beet | 6072.14 | 1064 nm | 70.4 H: 77.4 V | 200 m | Non-repetitive | 2 mm |
| 5 | Spherical | Soybean | 8615.76 | 1064 nm | 70.4 H: 77.4 V | 200 m | Non-repetitive | 2 mm |

| ID | N Mode | Date | PRR (kHz) | Scanning Mode | Altitude (AGL) | Pts./m² | Total Pts. (Millions) |
|---|---|---|---|---|---|---|---|
| Early | 1 | 22 September 2022 | 160 | Repetitive | 50 | 590.82 | 4.00 |
| Early | 2 | 22 September 2022 | 160 | Non-Repetitive | 50 | 345.00 | 2.34 |
| Early | 3 | 22 September 2022 | 260 | Repetitive | 60 | 533.31 | 3.70 |
| Intermediate | 1 | 26 September 2022 | 260 | Repetitive | 50 | 912.17 | 6.18 |
| Intermediate | 2 | 26 September 2022 | 160 | Non-Repetitive | 50 | 285.04 | 1.93 |
| Intermediate | 2 | 26 September 2022 | 160 | Repetitive | 50 | 609.64 | 4.13 |
| Late | 2 | 27 September 2022 | 260 | Repetitive | 60 | 473.31 | 3.20 |
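The relationship between the two rightmost columns of the table above can be made explicit with a short sketch. It assumes that point density is simply the total point count divided by the surveyed area, so the area implied by each flight is the total count divided by the density. The helper names are hypothetical; the numbers are copied from the table.

```python
def point_density(n_points, area_m2):
    """Average point density (pts/m^2) for n_points spread over area_m2."""
    if area_m2 <= 0:
        raise ValueError("area_m2 must be positive")
    return n_points / area_m2


def implied_area(total_points_millions, density_pts_m2):
    """Area (m^2) implied by a total point count (in millions) and a density."""
    return total_points_millions * 1e6 / density_pts_m2


# (stage, total points in millions, pts/m^2), in the row order of the table above.
flights = [
    ("Early", 4.00, 590.82),
    ("Early", 2.34, 345.00),
    ("Early", 3.70, 533.31),
    ("Intermediate", 6.18, 912.17),
    ("Intermediate", 1.93, 285.04),
    ("Intermediate", 4.13, 609.64),
    ("Late", 3.20, 473.31),
]

areas = [implied_area(total, density) for _, total, density in flights]
for (stage, total, density), area in zip(flights, areas):
    print(f"{stage:<12} {total:5.2f} M pts, {density:7.2f} pts/m^2 -> {area:7.1f} m^2")
```

Under this assumption, the implied areas can be compared across flights as a quick consistency check: if all flights covered the same plot, the implied areas should be similar, and differences in density then reflect the operational parameters rather than the footprint.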

| RH Metric | Crop | Mean | Median | Minimum | Maximum | Standard Deviation |
|---|---|---|---|---|---|---|
| RH10 | Dry corn | 5.37 | 5.77 | 0.98 | 8.01 | 2.43 |
| RH10 | Green corn | 9.99 | 10.12 | 8.87 | 10.48 | 0.50 |
| RH10 | Soybeans | 8.50 | 9.85 | 4.59 | 11.36 | 2.79 |
| RH10 | Sugar beet | 2.98 | 2.95 | 2.72 | 3.24 | 0.16 |
| RH10 | Sunflower | 7.33 | 6.99 | 3.42 | 10.12 | 2.24 |
| RH25 | Dry corn | 19.99 | 20.02 | 14.20 | 22.99 | 2.92 |
| RH25 | Green corn | 25.22 | 25.33 | 24.22 | 25.89 | 0.47 |
| RH25 | Soybeans | 8.50 | 9.85 | 4.59 | 11.36 | 2.79 |
| RH25 | Sugar beet | 10.34 | 11.76 | 2.96 | 14.11 | 3.58 |
| RH25 | Sunflower | 21.94 | 21.89 | 14.48 | 25.13 | 3.40 |
| RH50 | Dry corn | 45.05 | 45.03 | 38.98 | 47.98 | 3.04 |
| RH50 | Green corn | 50.34 | 50.28 | 49.55 | 51.29 | 0.56 |
| RH50 | Soybeans | 16.68 | 19.12 | 4.59 | 22.66 | 5.74 |
| RH50 | Sugar beet | 35.59 | 35.90 | 27.91 | 39.76 | 3.61 |
| RH50 | Sunflower | 47.37 | 47.63 | 38.96 | 51.70 | 3.81 |
| RH75 | Dry corn | 69.99 | 69.97 | 63.93 | 72.97 | 3.07 |
| RH75 | Green corn | 75.76 | 75.75 | 74.41 | 77.31 | 0.84 |
| RH75 | Soybeans | 39.30 | 40.52 | 24.67 | 49.08 | 6.68 |
| RH75 | Sugar beet | 59.97 | 60.76 | 54.12 | 64.40 | 3.16 |
| RH75 | Sunflower | 73.04 | 72.96 | 63.91 | 76.82 | 3.97 |
| RH98 | Dry corn | 92.86 | 92.89 | 86.86 | 95.82 | 2.99 |
| RH98 | Green corn | 97.91 | 98.05 | 96.85 | 98.27 | 0.45 |
| RH98 | Soybeans | 58.94 | 58.98 | 44.73 | 66.05 | 6.19 |
| RH98 | Sugar beet | 82.64 | 83.13 | 75.77 | 86.92 | 3.31 |
| RH98 | Sunflower | 94.96 | 94.90 | 86.86 | 98.61 | 3.62 |
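For readers unfamiliar with relative height (RH) metrics, the following is a simplified sketch of how they can be derived from a pseudo-waveform built from discrete point heights. It assumes a common approach in which heights above ground are binned vertically, smoothed with a Gaussian pulse, and RHp is read off as the height at which the cumulative energy from the bottom of the waveform reaches p percent. The bin size, pulse width, function names, and sample data are all hypothetical, and this is not the simulator used by the authors; units and normalization of the tabulated values may differ.

```python
import numpy as np


def simulate_waveform(heights, bin_size=0.05, pulse_sigma=0.1):
    """Build a pseudo-waveform: vertical histogram of heights smoothed by a Gaussian pulse."""
    heights = np.asarray(heights, dtype=float)
    pad = 3.0 * pulse_sigma  # margin so the smoothed signal is not truncated
    edges = np.arange(heights.min() - pad, heights.max() + pad + bin_size, bin_size)
    counts, edges = np.histogram(heights, bins=edges)
    centers = 0.5 * (edges[:-1] + edges[1:])

    # Discrete Gaussian kernel sampled on the same vertical spacing.
    half = int(np.ceil(pad / bin_size))
    offsets = np.arange(-half, half + 1) * bin_size
    kernel = np.exp(-0.5 * (offsets / pulse_sigma) ** 2)
    kernel /= kernel.sum()

    # Full convolution, then trim so the result aligns with the bin centers.
    full = np.convolve(counts.astype(float), kernel, mode="full")
    waveform = full[half:half + len(counts)]
    return centers, waveform


def rh_metric(centers, waveform, percent):
    """Height at which cumulative waveform energy (from the bottom) reaches `percent`."""
    cumulative = np.cumsum(waveform)
    cumulative = cumulative / cumulative[-1]
    idx = int(np.searchsorted(cumulative, percent / 100.0))
    idx = min(idx, len(centers) - 1)
    return float(centers[idx])


# Hypothetical heights above ground (m): ground returns plus a canopy layer.
rng = np.random.default_rng(0)
sample_heights = np.concatenate([
    rng.normal(0.0, 0.03, 300),   # ground returns
    rng.normal(2.4, 0.25, 700),   # canopy returns
])
centers, waveform = simulate_waveform(sample_heights)
rh_values = {p: rh_metric(centers, waveform, p) for p in (10, 25, 50, 75, 98)}
print(rh_values)
```

Because the cumulative energy is non-decreasing, the RH values produced this way are ordered (RH10 ≤ RH25 ≤ ... ≤ RH98), which matches the ordering of the per-crop means in the table above.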

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fareed, N.; Das, A.K.; Flores, J.P.; Mathew, J.J.; Mukaila, T.; Numata, I.; Janjua, U.U.R. UAS Quality Control and Crop Three-Dimensional Characterization Framework Using Multi-Temporal LiDAR Data. Remote Sens. 2024, 16, 699. https://doi.org/10.3390/rs16040699
