Fuzzy Information Retrieval Based on Continuous Bag-of-Words Model

Abstract

1. Introduction

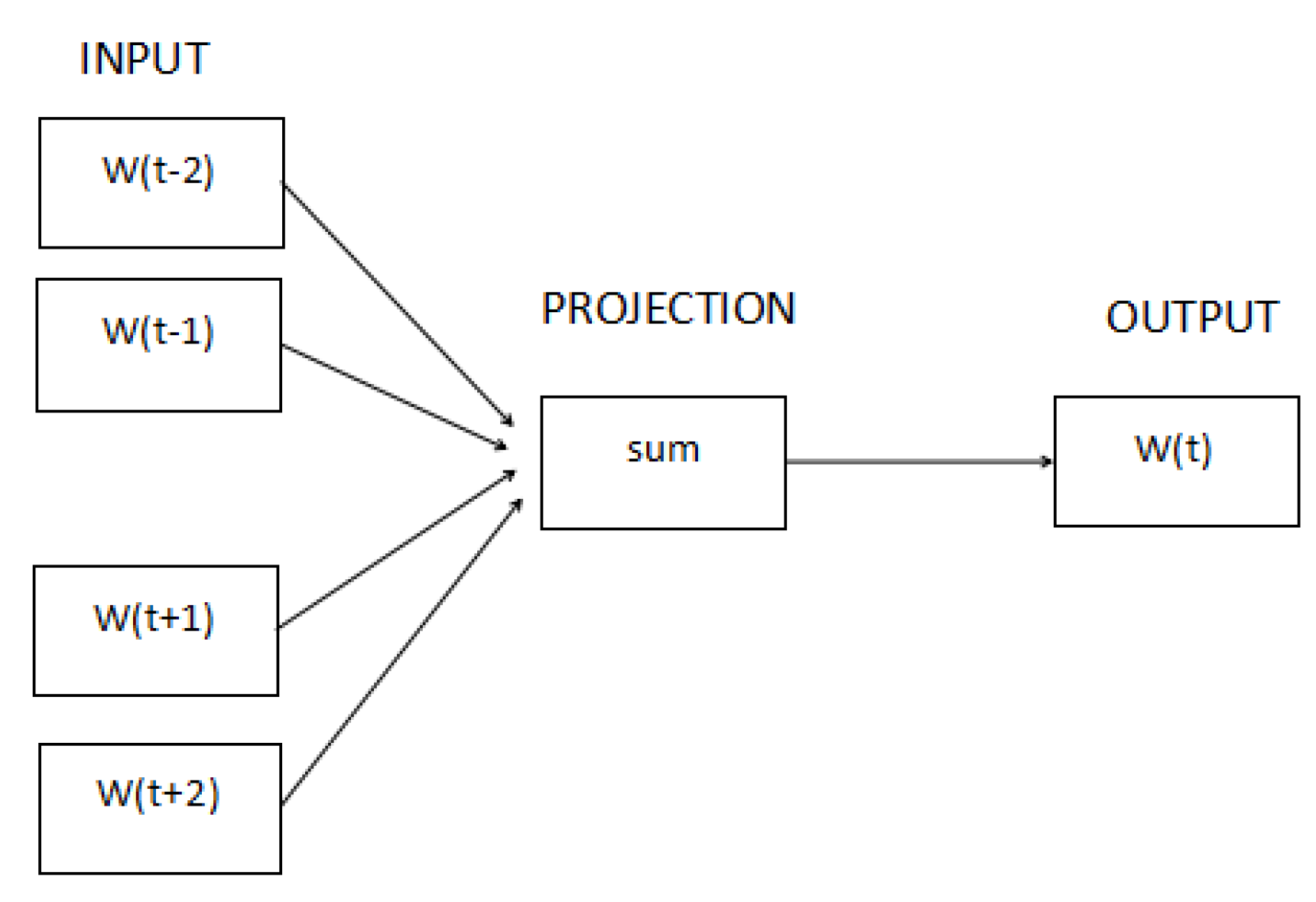

2. Fuzzy Information Retrieval

3. A Review of the Continuous Bag-of-Words Model

4. Fuzzy Information Retrieval Based on Word Embedding

- Step 1

- Build a corpus and preprocess it with word segmentation, part-of-speech tagging, and stop-word removal. Train the continuous bag-of-words model on the preprocessed corpus to obtain the corresponding word embeddings.

- Step 2

- Extract 20 keywords from each retrieved document; together, these keywords represent the general meaning of the document. Build a database that stores the name and keywords of each retrieved document.

- Step 3

- Input the query. Apply the same preprocessing to the query as was applied to the training corpus.

- Step 4

- Calculate the modified vector cosine distance between the keywords of each retrieved document and each word in the query. Apply fuzzy set theory and the membership function to obtain the degree of membership, which expresses how strongly each retrieved document belongs to each word of the query.

- Step 5

- Convert the query into conjunctive normal form, then compute the membership value of each retrieved document with respect to the whole query.

- Step 6

- List the documents whose membership values are greater than 0 in descending order of membership value, which makes the results of the fuzzy information retrieval system convenient for the user to browse.
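Steps 4 to 6 can be illustrated with a minimal sketch. It rests on several assumptions that are not taken from the method itself: plain cosine similarity stands in for the modified vector cosine distance, negative similarities are clipped to 0 so that the value can serve as a membership degree, the best-matching keyword decides the membership of a document in a query word, and the conjunctive normal form is evaluated with the standard fuzzy operators (max for OR, min for AND). The function names, the two-dimensional toy embeddings, and the document names are all hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Plain cosine similarity (an assumed stand-in for the modified measure)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def word_membership(keyword_vectors, query_vector):
    """Degree to which a document belongs to one query word.

    Assumption: the best-matching keyword decides, and negative
    similarities are clipped to 0 so the result lies in [0, 1].
    """
    sims = [cosine_similarity(k, query_vector) for k in keyword_vectors]
    return max(0.0, max(sims, default=0.0))


def query_membership(keyword_vectors, cnf_query, embeddings):
    """Evaluate a CNF query: min over clauses (AND) of max over words (OR)."""
    clause_values = []
    for clause in cnf_query:
        values = [word_membership(keyword_vectors, embeddings[w]) for w in clause]
        clause_values.append(max(values, default=0.0))
    return min(clause_values, default=0.0)


def rank_documents(database, cnf_query, embeddings):
    """Keep documents with membership > 0, sorted in descending order."""
    scored = []
    for name, keywords in database.items():
        vectors = [embeddings[k] for k in keywords if k in embeddings]
        score = query_membership(vectors, cnf_query, embeddings)
        if score > 0:
            scored.append((name, score))
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical 2-dimensional embeddings; real CBOW vectors are much larger.
embeddings = {
    "dog": [1.0, 0.0],
    "cat": [0.8, 0.6],
    "sad": [0.0, 1.0],
    "sun": [-1.0, 0.0],
}
# Hypothetical database: document name -> extracted keywords.
database = {
    "doc_pets": ["dog"],
    "doc_mixed": ["dog", "sad"],
    "doc_mood": ["sad"],
    "doc_sky": ["sun"],
}
# Query in conjunctive normal form: (dog OR cat) AND sad.
query = [["dog", "cat"], ["sad"]]

ranking = rank_documents(database, query, embeddings)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

In this toy run, `doc_pets` and `doc_sky` receive a membership value of 0 for at least one clause and are therefore dropped, while the remaining documents are returned in descending order of membership. Swapping in a different similarity measure only requires replacing `cosine_similarity`.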

5. Experiments

5.1. Evaluation Methods

5.2. Corpora and Training Details

5.3. Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Input | Similar Words | The Relevance Value | Input | Similar Words | The Relevance Value |
|---|---|---|---|---|---|
| 伤感(Sad) | 悲伤(Sorrowful) | 0.915266777 | 小狗(Dog) | 兔子(Rabbit) | 0.895219554 |
| 伤感(Sad) | 感伤(Mirthless) | 0.9149946506 | 小狗(Dog) | 小猫(Cat) | 0.8723238531 |
| 伤感(Sad) | 忧伤(Grieved) | 0.911691367 | 小狗(Dog) | 小羊(Lamb) | 0.8553598142 |
| 伤感(Sad) | 哀伤(Distressing) | 0.9067591549 | 小狗(Dog) | 老鼠(Mouse) | 0.8544498053 |
| 伤感(Sad) | 悲哀(Heartbroken) | 0.879857731 | 小狗(Dog) | 小鸡(Chick) | 0.8533311389 |

| Input | Similar Words | The Relevance Value | Input | Similar Words | The Relevance Value |
|---|---|---|---|---|---|
| 伤感(Sad) | 拖鞋(Slipper) | 0.06270077946 | 小狗(Dog) | 拖鞋(Slipper) | 0.31981765268 |
| 伤感(Sad) | 口水(Saliva) | 0.12312655669 | 小狗(Dog) | 口水(Saliva) | 0.19171946085 |
| 伤感(Sad) | 杯子(Glass) | 0.16550510413 | 小狗(Dog) | 杯子(Glass) | 0.36261000131 |
| 伤感(Sad) | 男人(Man) | 0.32145927466 | 小狗(Dog) | 男人(Man) | 0.5297929242 |
| 伤感(Sad) | 太阳(Sun) | 0.08916863175 | 小狗(Dog) | 太阳(Sun) | 0.18871291085 |

| Word One | Word Two | Word Frequency | HowNet | The Relevance Value |
|---|---|---|---|---|
| 宠物(Pet) | 运动(Sport) | 0 | 0.139181 | 0.321339 |
| 男人(Man) | 女人(Woman) | 0.2217 | 0.861111 | 0.985476 |
| 国家(Country) | 太阳(Sun) | 0.024 | 0.208696 | 0.475797 |
| 周末(Weekend) | 晴天(Sunny day) | 0 | 0.044444 | 0.176057 |
| 开车(Drive a car) | 旅行(Travel) | 0.14 | 0.285714 | 0.541297 |
| 放学(Classes are over) | 回家(Go home) | 0.27 | 0.077949 | 0.764166 |
| 镜子(Mirror) | 化妆(Make up) | 0.063 | 0.074074 | 0.446568 |
| 云彩(Cloud) | 天空(Sky) | 0.011 | 0.285714 | 0.686303 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Qiu, D.; Jiang, H.; Chen, S. Fuzzy Information Retrieval Based on Continuous Bag-of-Words Model. Symmetry 2020, 12, 225. https://doi.org/10.3390/sym12020225
