A Least Squares Estimator for Gradual Change-Point in Time Series with m-Asymptotically Almost Negatively Associated Errors

Abstract

1. Introduction

2. Main Results

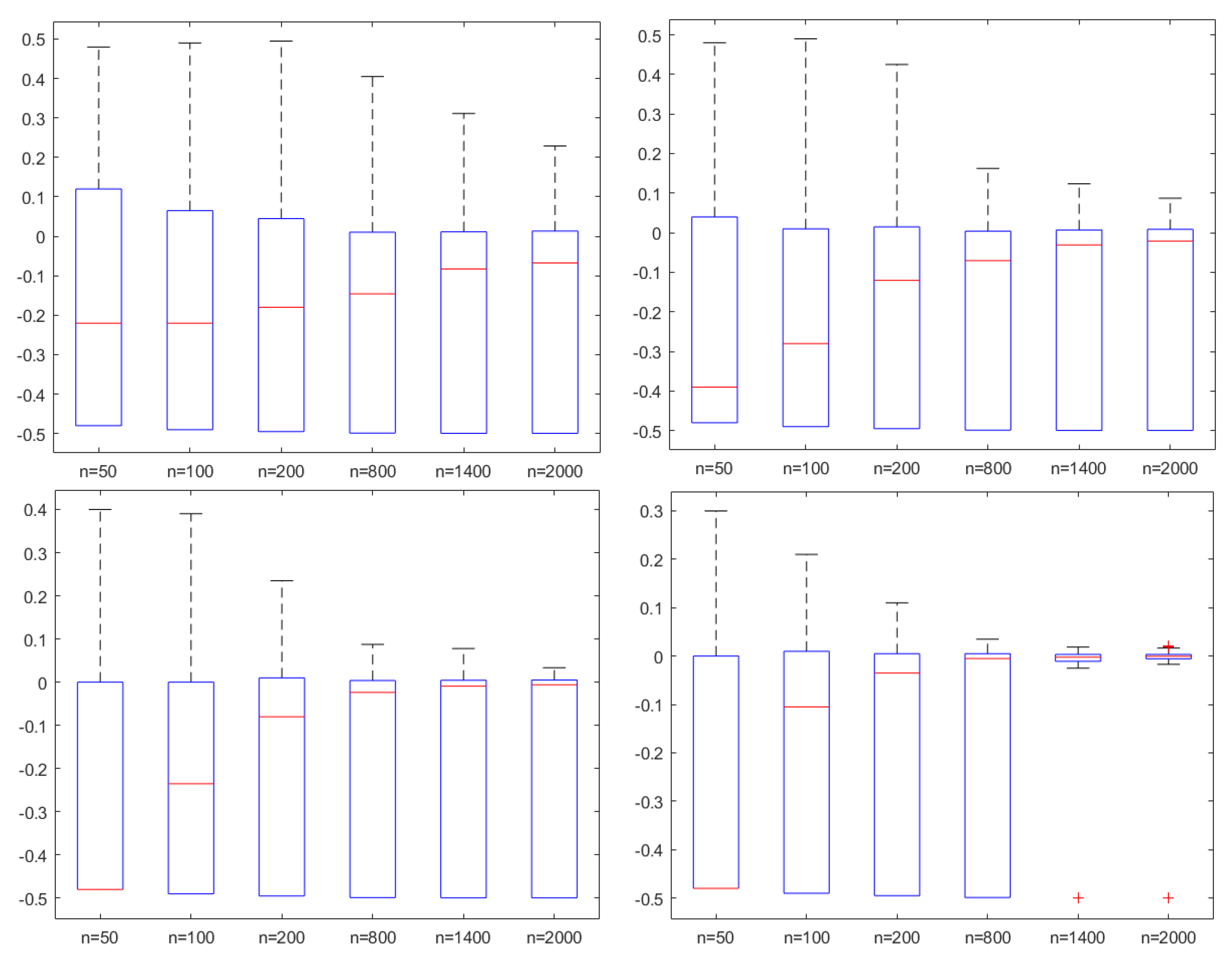

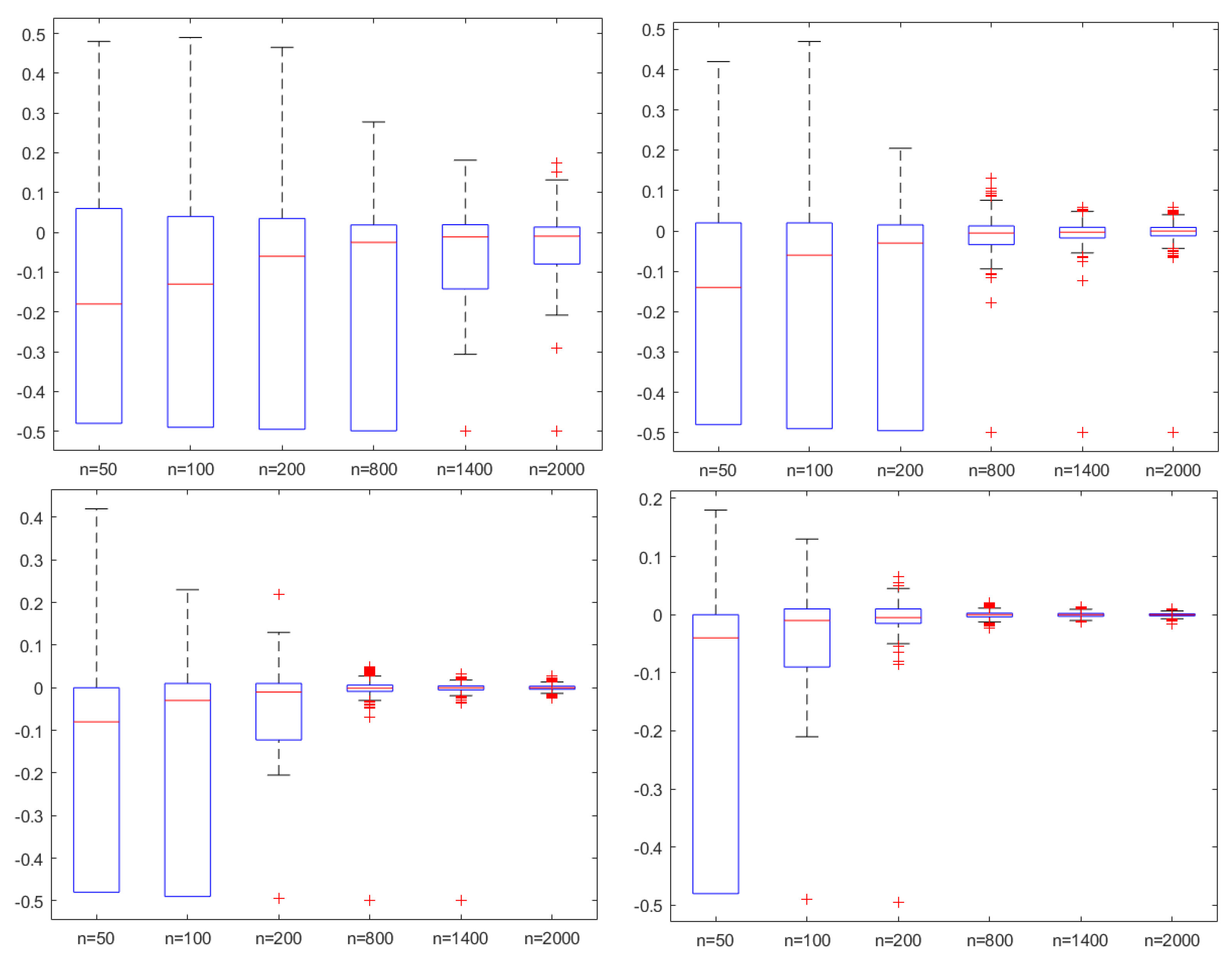

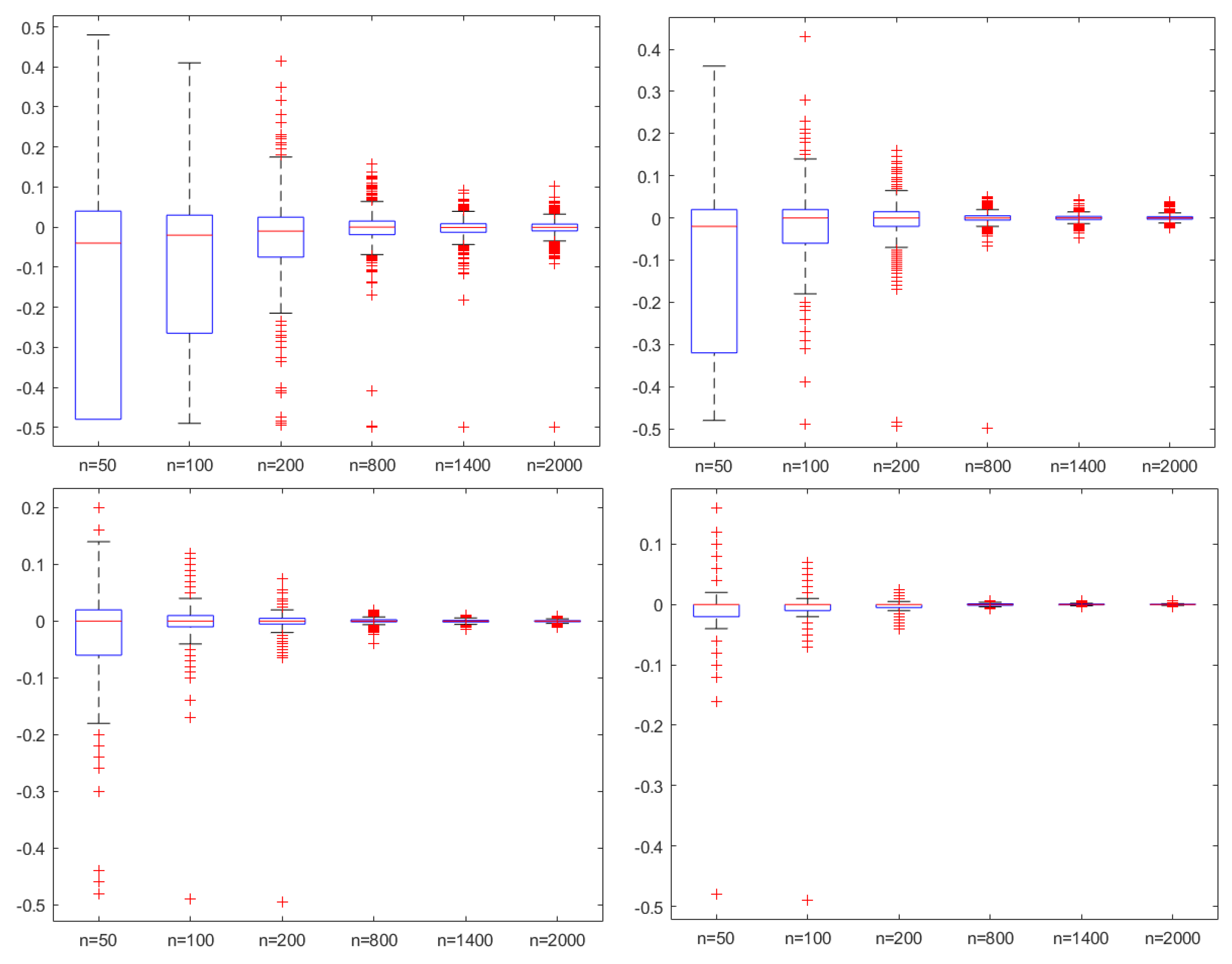

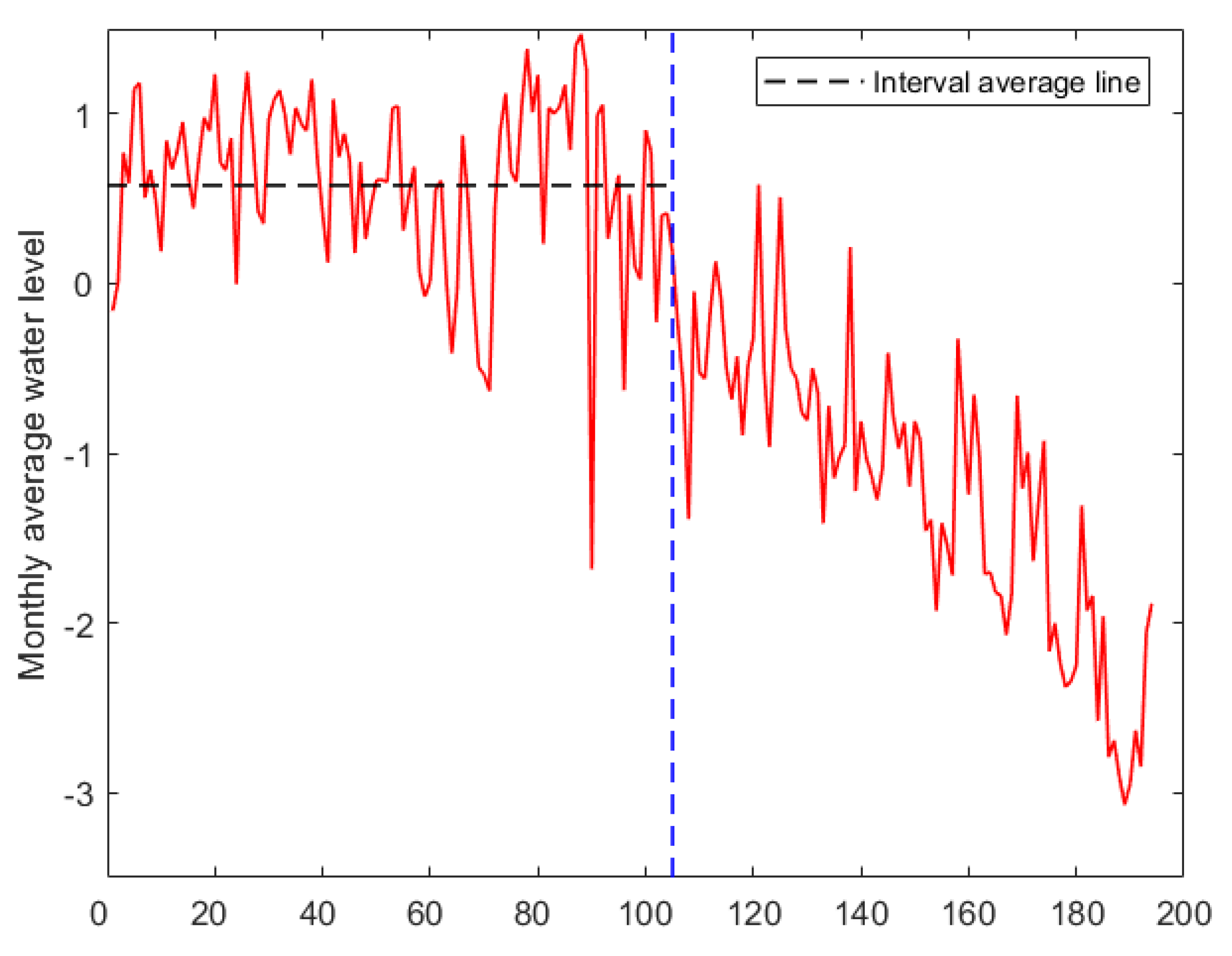

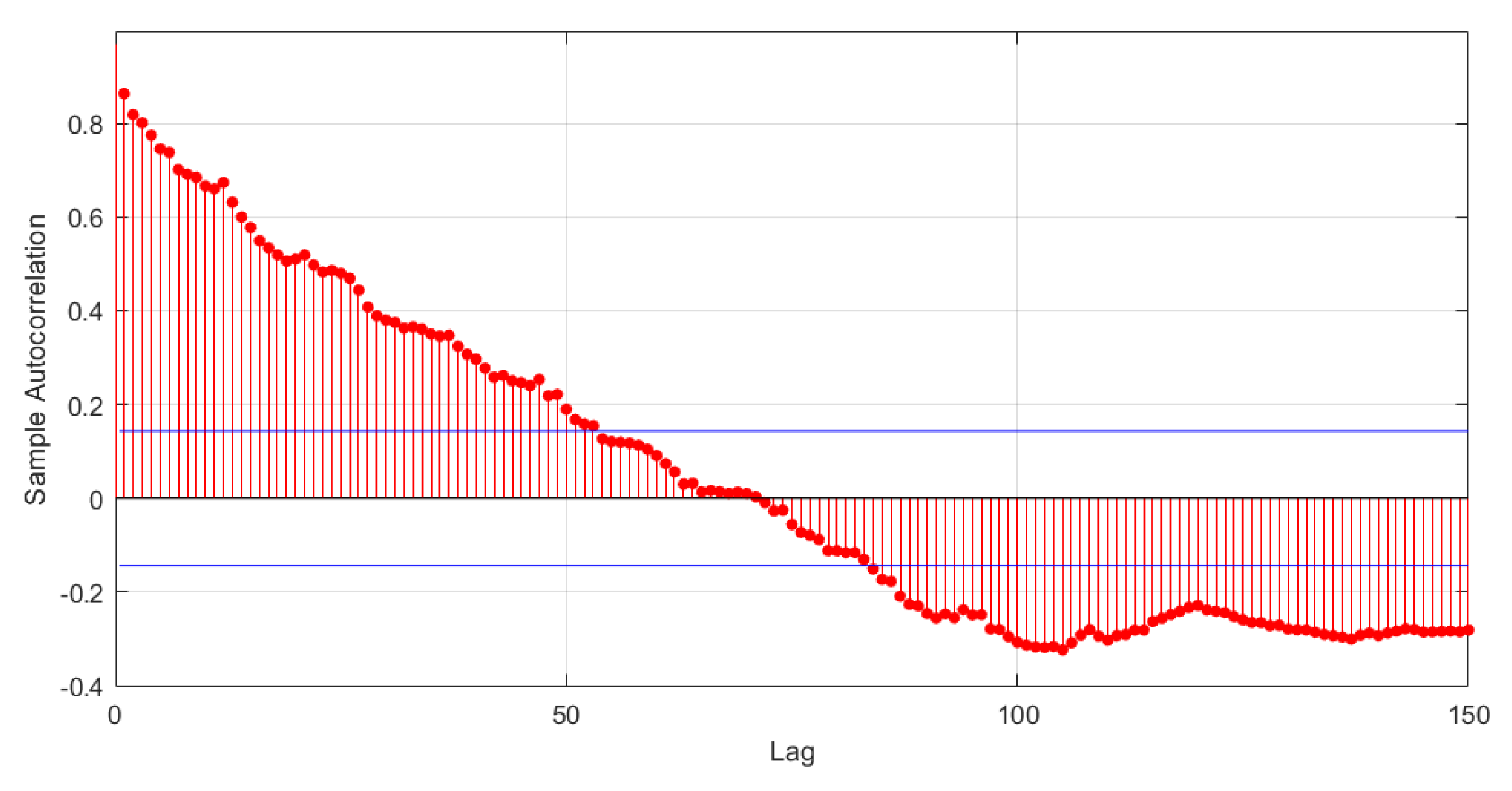

3. Simulations and Example
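The simulation design used in the paper is not reproduced in this text. As a hedged illustration only, the sketch below assumes a gradual change model of the kind studied by Hušková (1999), X_i = μ + δ((i − k*)/n)₊^κ + e_i for i = 1, …, n, with a known exponent κ and an unknown onset k*. For each candidate k it fits μ and δ by ordinary least squares and returns the k with the smallest residual sum of squares. The helper names `gradual_design` and `ls_gradual_changepoint`, and all simulation parameters, are hypothetical. The errors here are independent Gaussian. They satisfy the m-AANA condition trivially because every covariance in its definition is zero, so this example does not exercise the dependence structure the paper is concerned with.

```python
import numpy as np


def gradual_design(n, k, kappa):
    """Hypothetical helper: regressor ((i - k)/n)_+^kappa for i = 1, ..., n."""
    i = np.arange(1, n + 1)
    return np.maximum((i - k) / n, 0.0) ** kappa


def ls_gradual_changepoint(x, kappa=1.0):
    """Hypothetical helper: least squares estimate of the onset k of a gradual change.

    For each candidate k, fit x_i = mu + delta * ((i - k)/n)_+^kappa by
    ordinary least squares and keep the k with the smallest residual sum
    of squares (RSS).
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    best_k, best_rss = None, np.inf
    # Candidates k = 1, ..., n - 2 keep at least two points after the onset,
    # so the regressor is never identically zero and the design has full rank.
    for k in range(1, n - 1):
        A = np.column_stack([np.ones(n), gradual_design(n, k, kappa)])
        coef, *_ = np.linalg.lstsq(A, x, rcond=None)
        rss = float(np.sum((x - A @ coef) ** 2))
        if rss < best_rss:
            best_k, best_rss = k, rss
    return best_k


# Simulated example (hypothetical parameters): onset at k* = 120 of n = 200,
# linear drift (kappa = 1) and independent N(0, 0.3^2) errors.
rng = np.random.default_rng(2023)
n, k_true, mu, delta, kappa = 200, 120, 1.0, 10.0, 1.0
x = mu + delta * gradual_design(n, k_true, kappa) + rng.normal(scale=0.3, size=n)
k_hat = ls_gradual_changepoint(x, kappa)
print("estimated onset:", k_hat)
```

With this fixed seed the estimate should land close to the true onset k* = 120. The exhaustive grid search costs one small least squares fit per candidate, which is adequate for a sketch but is not tuned for long series.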

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, T.; Wei, Y. A Least Squares Estimator for Gradual Change-Point in Time Series with m-Asymptotically Almost Negatively Associated Errors. Axioms 2023, 12, 894. https://doi.org/10.3390/axioms12090894


Chicago/Turabian Style: Xu, Tianming, and Yuesong Wei. 2023. "A Least Squares Estimator for Gradual Change-Point in Time Series with m-Asymptotically Almost Negatively Associated Errors." Axioms 12, no. 9: 894. https://doi.org/10.3390/axioms12090894