1. Introduction

Sound classification and recognition have been applied in different domains, e.g., speech recognition [1], music classification [2], environmental sound recognition, and biometric identification [3]. Traditionally, in pattern recognition problems, features have been extracted from the actual audio traces (e.g., Statistical Spectrum Descriptor and Rhythm Histogram [4]). However, by replacing audio traces with their visual representation, image classification techniques can be used to extract features in sound classification problems. The most commonly used visual representations of audio traces display their frequency spectrum as it varies in time, as in spectrograms [5] and Mel-frequency Cepstral Coefficients spectrograms [6]. A spectrogram can be described as a graph with two dimensions (time and frequency) plus a third dimension in terms of pixel intensity [7] that represents the signal amplitude at a specific frequency at a particular time step. Costa et al. [8,9] applied several classification and texture analysis techniques to music genre classification using such a method. In [9], the authors extracted grey level co-occurrence matrices (GLCMs) [10] from spectrograms, while in [8] they used the local binary pattern (LBP) [11], which is a popular texture descriptor. In [12], two other feature descriptors were extracted from audio images: local phase quantization (LPQ) and Gabor filters [13]. In 2017, Nanni et al. [2] demonstrated on multiple audio datasets how the fusion of acoustic features extracted from audio traces using state-of-the-art texture descriptors greatly improves the accuracy of acoustic and visual feature-based systems.

When deep learning became popular and Graphics Processing Units (GPUs) became more powerful at accessible costs, traditional pattern recognition changed, and attention focused even more on visual representations of acoustic traces. In the traditional machine learning framework, the optimization of the feature extraction step plays a key role, especially with the evolution of handcrafted features, which minimize the distance between patterns of the same class in the feature space while simultaneously attempting to maximize their distance from the patterns of other classes. Since deep classifiers learn the best features for describing patterns during the training process, these engineered features have diminished in significance, playing in the deep framework more of a supporting role when combined with features extracted from visual representations of acoustic traces that the deep classifiers determine are most informative. Another reason for the growing popularity of representing audio as images is the fact that the convolutional neural network (CNN), one of the most famous deep classifiers, requires images for its input. In their study, Humphrey and Bello [14,15] explored CNNs as an alternative approach to music classification problems, establishing the state-of-the-art in automatic chord detection and recognition. Nakashika et al. [16] converted spectrograms into GLCM maps to train CNNs for music genre classification, and Costa et al. [17] performed better than the state-of-the-art on the LMD dataset by fusing canonical approaches, e.g., LBP-trained SVMs, with CNNs. Only a few studies, however, have focused on making these processes that were designed for image classification more specific for sound image recognition. In their study, Sigtia and Dixon [18] focused on adjusting CNN parameters and structures and showed how using Rectified Linear Units (ReLUs) instead of stochastic gradient descent with the Hessian Free optimization and sigmoid units reduced training time. Wang et al. [19] presented an innovative CNN, which they named a sparse coding CNN, for sound event recognition and retrieval, which, when evaluated under noisy and clean conditions, achieved competitive and sometimes better performance than the majority of other approaches. In Oramas [20], a hybrid approach was presented that combined diverse modalities (album cover images, reviews, and audio tracks) for multi-label music genre classification by applying deep learning techniques appropriate for each modality, an approach that outperformed the single-modality methods. Finally, it should be mentioned that many machine learning methods have also been proposed for human voice classification tasks: emotion recognition [21], English accent classification, and gender classification [22], to name a few.

Because deep classifiers have produced a clear improvement in music classification, researchers have begun to apply deep learning approaches to other sound recognition tasks, such as biodiversity assessment. Precise sound recognition systems can be of crucial importance in assessing and handling environmental threats like animal species loss and climate changes affecting wildlife [23]. Birds, for instance, have been acknowledged as an indicator species for ecological research, and their monitoring has become increasingly important for biodiversity preservation [23], especially considering the minimal impact video and audio acquisition has on ecosystems. To date, many datasets are available to develop classifiers to identify and monitor different species, such as birds [24,25], whales [26], frogs [24], bats [25], and cats [27]. For instance, both Cao et al. [28] and Salamon et al. [29] have investigated the fusion of CNNs with other methods to classify animals. The former study combined CNNs with handcrafted features to classify marine animals [30] using the Fish and MBARI benthic animal dataset [31], while the latter fused deep learning with shallow learning for bird species identification based on 5428 bird flight calls from 43 species. In both cases, the fusion of CNNs with other canonical techniques outperformed the single approaches.

Existing approaches for animal audio classification can roughly be classified into two categories: fingerprinting and CNN approaches. Fingerprinting [32] relies on the compact representation of audio traces so that each one can be efficiently matched against other audio clips to compare for similarity and dissimilarity [33]. An example of audio fingerprinting by CNN is shown in [34], where the authors used a Siamese neural network to produce semantic representations of the audio traces. However, fingerprinting is useful only in finding an exact match; the problem addressed in this work involves audio classification. As already noted, CNN-based approaches [35,36] train networks for animal audio classification starting from an image representation of the audio signal. Unfortunately, CNNs require a large number of training examples to be effective (larger than available in most animal audio datasets) and cannot generalize to new classes without retraining the network. The objective of this work is to solve these issues by proposing an approach based on Dissimilarity Spaces. Recently, Agrawal [37] proposed an approach that learns a distance model by training a Siamese neural network directly on dissimilarity values for brain image classification, and in [38] an approach is proposed for online signature verification using a Siamese neural network and a contrastive loss function. In the latter work, the authors claim that the main advantage a Siamese network offers over a canonical CNN is the ability to generalize: the Siamese network approach they developed was shown to verify the authenticity of the signature of a new user without being trained on any examples from this user.

In this work, the dissimilarity space is created using a Siamese Neural Network (SNN) trained on the entire training set to define a distance function among the samples. The training phase for the SNN is aimed at maximizing the distance between patterns of different classes; the testing phase of the SNN is used to compare two spectrograms to obtain a measure of their dissimilarity. In theory, all the training samples can be selected as centroids of the dissimilarity space. Dimensionality reduction is obtained by selecting a smaller number (k) of prototypes via a clustering approach. The dissimilarity space is the space where each spectrogram is represented by its distance to each centroid/prototype: in this space, the SNN is used to compare the spectrogram to every centroid, obtaining the spectrogram’s dissimilarity vector, which is the final descriptor. The classification task is performed by a support vector machine (SVM) trained using the dissimilarity descriptors generated from the training samples. The proposed system is evaluated on two different datasets for animal audio classification: domestic cat sounds [27] and bird sounds [23]. Results for the different clustering methods and different values of the hyperparameter (k) are reported.

In addition, an ensemble of SVMs trained on different dissimilarity spaces (obtained by changing the value of k) is combined by sum rule, and its performance is compared with (i) some canonical CNN approaches and (ii) the fusion of the SVMs and the CNNs. Experiments demonstrate for the first time that the use of dissimilarity spaces based on SNN is a feasible representation for image data and can, when combined with a general purpose classifier, achieve high classification performance. Because the descriptors obtained in the dissimilarity space show high diversity with respect to the representations based on CNNs, their fusion can be exploited in an ensemble, as shown by the high classification accuracy obtained by the fusion of CNNs with our approach. The MATLAB code used in this study is freely available at https://github.com/LorisNanni.

2. Proposed Approach

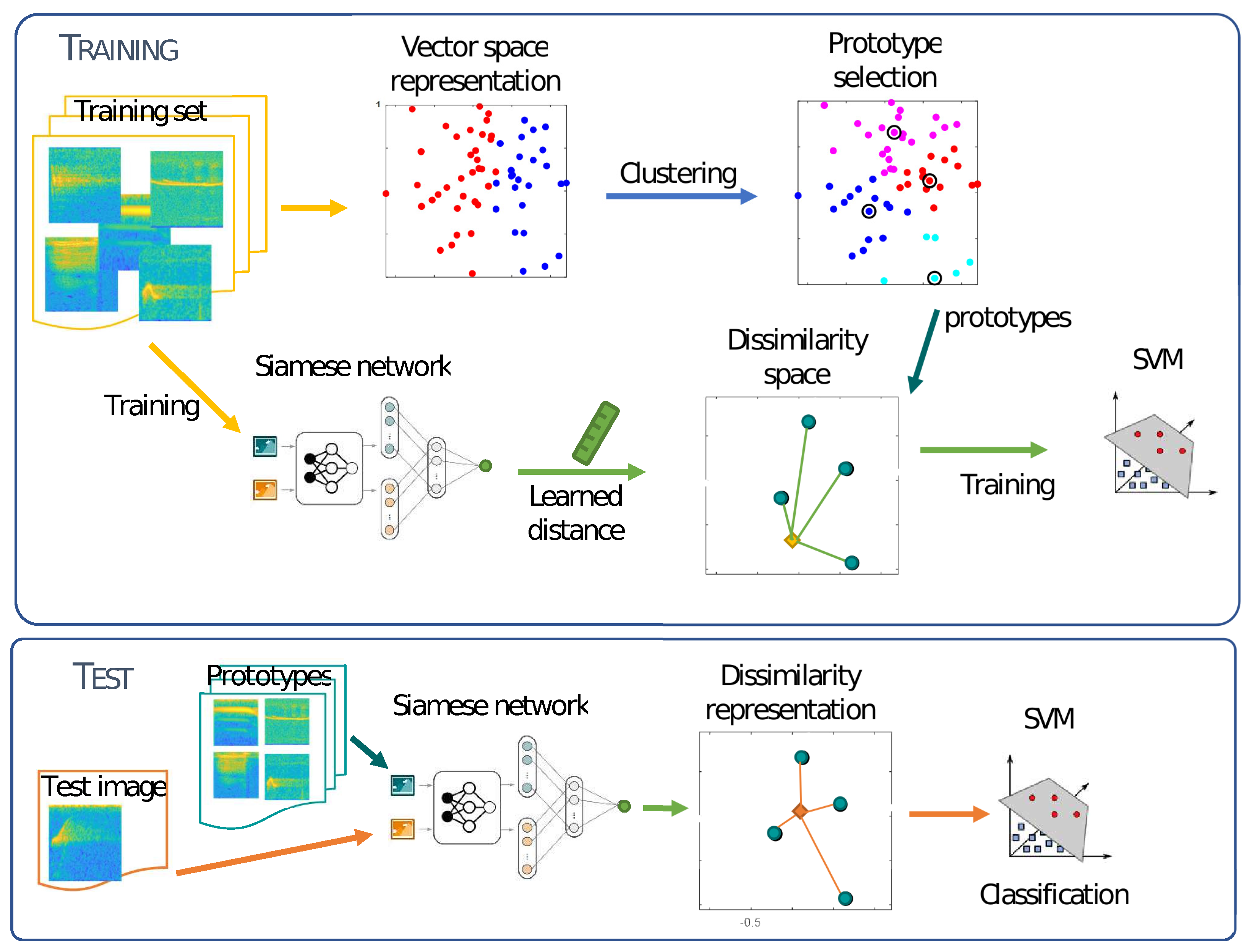

The proposed method for spectrogram classification using a dissimilarity space is based on several steps, which are schematized in Figure 1. This figure is followed by the pseudo-code for each step (Algorithms 1 and 2). In order to define a dissimilarity space, it is necessary to select a distance measure and a set of prototypes in the training phase. The distance measure $d(x, y)$ is learned by means of an SNN trained to maximize the similarity between pairs of spectrograms in the same class, while minimizing the similarity for pairs in different classes. The set of prototypes $P = \{p_1, \dots, p_k\}$ is obtained as the k centroids of the clusters generated by a supervised clustering procedure. The final step represents each training sample x in the dissimilarity space by a feature vector $f \in \mathbb{R}^k$, where each component $f_i$ is the distance between x and the prototype $p_i$: $f_i = d(x, p_i)$. These feature vectors are used to train an SVM for the final classification task. In the testing phase, each unlabeled spectrogram is first represented in the dissimilarity space by calculating its distance to all the prototypes; the resulting feature vector is then classified by the SVM.

| Algorithm 1 Training phase |

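- Input: Training images (trainImages), training labels (trainLabels), the number of training iterations (iterations), batch size (batchSize), number of centroids (k), and the clustering technique (clusteringType).
- Output: Trained SNN (SNN), set of centroids (C), and trained SVM (SVM).
- 1: SNN ← trainSiamese(trainImages, trainLabels, batchSize, iterations)
- 2: C ← clustering(trainImages, trainLabels, k, clusteringType)
- 3: F ← getDissSpaceProjection(trainImages, C, k, SNN)
- 4: SVM ← trainSVM(F, trainLabels)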

|

| Algorithm 2 Testing phase |

- Input: Test images (testImages), trained SNN (SNN), set of centroids (C), trained SVM (SVM).
- Output: Predicted test labels (predictedLabels).
- 1: F ← getDissSpaceProjection(testImages, C, k, SNN)
- 2: predictedLabels ← predictSVM(F, SVM)

|

Each of the main functions used in the pseudo-code is described below.

2.1. Siamese Neural Network Training

The SNN, described in more detail in Section 3, is trained to compare a pair of spectrograms by returning a measure of their similarity. Algorithm 3 presents the pseudo-code for this phase and corresponds to step 1 of Algorithm 1. The SNN architecture is defined in steps 2 and 3 of Algorithm 3. Steps 5–8 are repeated for each training iteration. Step 5 randomly extracts a batch of spectrogram pairs from the training set using the function getSiameseBatch. Step 6 feeds the pairs to the network and computes the loss and gradients for gradient descent. Steps 7 and 8 use the gradients and loss to update the weights of the fully connected layer and the twin subnetworks.

| Algorithm 3 Siamese training pseudocode |

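- Input: Training images (trainImages), training labels (trainLabels), batch size (batchSize), and iterations (iterations).
- Output: Trained SNN (SNN).
- 1: function trainSiamese(trainImages, trainLabels, batchSize, iterations)
- 2: subnet ← network()
- 3: fcParams ← weights of the fully connected layer
- 4: for iteration from 1 to iterations do
- 5: (X1, X2, pairLabels) ← getSiameseBatch(trainImages, trainLabels, batchSize)
- 6: (gradients, loss) ← evaluate(subnet, fcParams, X1, X2, pairLabels)
- 7: fcParams ← update(fcParams, gradients, loss)
- 8: subnet ← update(subnet, gradients, loss)
- 9: end for
- 10: return SNN = (subnet, fcParams)
- 11: end function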

|
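The pair-sampling step (getSiameseBatch) can be illustrated with a minimal Python sketch. The paper's MATLAB implementation is not reproduced here; the function name `get_siamese_batch`, the 50/50 balance between same-class and different-class pairs, and the pair-label convention (1 = same class, 0 = different classes) are assumptions made only for illustration.

```python
import random

def get_siamese_batch(images, labels, batch_size, rng=None):
    """Sample random pairs; pair label 1 = same class, 0 = different classes.

    Assumes at least two classes. A same-class pair may occasionally
    contain the same sample twice, which this sketch does not prevent.
    """
    rng = rng or random.Random()
    by_class = {}
    for idx, lab in enumerate(labels):
        by_class.setdefault(lab, []).append(idx)
    classes = list(by_class)

    first, second, pair_labels = [], [], []
    for _ in range(batch_size):
        if rng.random() < 0.5:
            # Similar pair: both samples drawn from one class.
            cls = rng.choice(classes)
            i, j = rng.choice(by_class[cls]), rng.choice(by_class[cls])
            same = 1
        else:
            # Dissimilar pair: samples drawn from two distinct classes.
            cls_a, cls_b = rng.sample(classes, 2)
            i, j = rng.choice(by_class[cls_a]), rng.choice(by_class[cls_b])
            same = 0
        first.append(images[i])
        second.append(images[j])
        pair_labels.append(same)
    return first, second, pair_labels
```

Balancing the two pair types keeps the network from seeing mostly dissimilar pairs, which would otherwise dominate when the number of classes is large.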

Note: in the case where the SNN fails to converge on the training set, training is rerun.

2.2. Prototype Selection

In this phase, k prototypes are extracted from the training set. In theory, every spectrogram in the training set could be selected as a prototype, but this would be too expensive computationally, and the dimensionality of the generated dissimilarity vectors would be too high. A better alternative is to employ clustering techniques to compute k centroids for each class. Clustering significantly reduces the dimension of the resulting dissimilarity space and thus makes the process more viable. Algorithm 4 presents the pseudo-code for prototype selection. It offers a choice among four clustering procedures, each of which is applied separately to the training samples belonging to each class.

| Algorithm 4 Clustering pseudocode |

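- Input: Training images (trainImages), training labels (trainLabels), number of clusters (k), and clustering technique (clusteringType).
- Output: Centroids P.
- 1: function clustering(trainImages, trainLabels, k, clusteringType)
- 2: numClasses ← number of classes from trainLabels
- 3: P ← empty set
- 4: for i from 1 to numClasses do
- 5: classImages ← images of the class i from trainImages
- 6: switch clusteringType do
- 7: case “k-means” centroids ← kmeans(classImages, k)
- 8: case “k-medoids” centroids ← kmedoids(classImages, k)
- 9: case “hierarchical” centroids ← hierarchical(classImages, k)
- 10: case “spectral” centroids ← spectral(classImages, k)
- 11: P ← P ∪ centroids
- 12: end for
- 13: return P
- 14: end function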

|
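The per-class structure of the prototype selection can be sketched in Python as follows. This is an illustration under stated assumptions, not the study's code: `select_prototypes` and `chunk_means` are hypothetical names, samples are plain lists of numbers rather than spectrogram images, and `chunk_means` is a deliberately trivial placeholder that would be replaced by k-means, k-medoids, hierarchical, or spectral clustering.

```python
from collections import defaultdict

def select_prototypes(samples, labels, k, cluster_fn):
    """Run cluster_fn(class_samples, k) on each class and pool the centroids."""
    by_class = defaultdict(list)
    for x, y in zip(samples, labels):
        by_class[y].append(x)
    prototypes = []
    for cls in sorted(by_class):
        prototypes.extend(cluster_fn(by_class[cls], k))
    return prototypes

def chunk_means(points, k):
    """Placeholder clusterer: average k contiguous chunks of the input."""
    k = min(k, len(points))
    size = len(points) / k
    centroids = []
    for c in range(k):
        chunk = points[int(c * size):int((c + 1) * size)]
        centroids.append([sum(col) / len(chunk) for col in zip(*chunk)])
    return centroids
```

Passing the clusterer as a function mirrors the switch statement of Algorithm 4 without hard-coding the four techniques.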

2.3. Projection in the Dissimilarity Space

Existing classification methods learn to classify patterns using their feature space. In this work, patterns are represented in a dissimilarity space in which every pattern x is represented by its dissimilarity to a selected set of prototypes $P = \{p_1, \dots, p_k\}$ by a dissimilarity vector:

$$F(x) = [d(x, p_1), d(x, p_2), \dots, d(x, p_k)]$$

where the dissimilarity $d(x, y)$ between two patterns is obtained using a trained SNN. In order to project each image into the dissimilarity space, Algorithm 5 compares each input image (stored in X in step 3) with the k centroids (stored in P) using the trained SNN with the predictSiamese function (step 4). The resulting feature space F includes the projected features of all the input images.

| Algorithm 5 Projection in the Dissimilarity space pseudocode |

- Input: Images (images), Centroids (P), number of centroids (k), and trained SNN (SNN).
- Output: Feature vectors (F).
- 1: function getDissSpaceProjection(images, P, k, SNN)
- 2: for i from 1 to size(images) do
- 3: X ← images(i)
- 4: F(i, :) ← predictSiamese(SNN, X, P)
- 5: end for
- 6: return F
- 7: end function

|
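As a minimal illustration of the projection, the Python sketch below maps each sample to its vector of distances from the prototypes. The trained SNN is not available here, so a plain Euclidean distance stands in for it; that substitution, along with the names `euclidean` and `get_diss_space_projection`, is an assumption made for the example.

```python
import math

def euclidean(a, b):
    """Stand-in for the trained SNN: Euclidean distance between two vectors."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))

def get_diss_space_projection(samples, prototypes, distance=euclidean):
    """Map each sample x to [d(x, p_1), ..., d(x, p_k)]."""
    return [[distance(x, p) for p in prototypes] for x in samples]

samples = [[0, 0], [3, 4]]
prototypes = [[0, 0], [3, 4], [6, 8]]
features = get_diss_space_projection(samples, prototypes)
# features == [[0.0, 5.0, 10.0], [5.0, 0.0, 5.0]]
```

The length of each output vector equals the number of prototypes, regardless of the dimensionality of the original samples.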

2.4. Support Vector Machine Training and Prediction

A Support Vector Machine (SVM) is a supervised learning model which can be used to perform classification or regression. An SVM model represents each training example as a data point in space and is trained to construct one or more hyperplanes that divide the space in two, separating data points belonging to different classes (function trainSVM). The model will predict (function predictSVM) the class of a new pattern mapped in the space according to the side of the hyperplane the data point falls on. The hyperplane found by an SVM is defined as follows:

$$H: \; w \cdot x - b = 0$$

where $H$ is the hyperplane, $x$ is the data point vector, $w$ is the hyperplane’s normal vector, and the $\frac{b}{\|w\|}$ ratio is the hyperplane’s distance from the origin. The optimal hyperplane is the one that maximizes the distance to the nearest data point of any class, defined as $\frac{1}{\|w\|}$, which is also called the margin. The i-th point $x_i$ will be assigned to the first class when $w \cdot x_i - b \geq 1$ and to the second class when $w \cdot x_i - b \leq -1$. The points that lie on the margin lines, defined by the equation $w \cdot x - b = \pm 1$, completely describe the solution to the problem and are called support vectors. An example of an optimal hyperplane with highlighted support vectors is shown in Figure 2.

Because SVMs use hyperplanes to discriminate data, they do not work well with data that is not linearly separable in its original space. This problem can be solved using kernel functions, which map data into a much higher dimensional space, presumably to make the separation easier in that space. To keep the computational complexity to an acceptable level, the kernel function of choice has to be computationally efficient.

Being binary classifiers, SVMs can only determine the separation surface between two classes of data; however, it is possible to apply SVMs to multi-class problems by training an ensemble of SVMs and combining them. In this work, the One-Against-All approach is used, where for each class an SVM is trained to discriminate between a given class and all the other classes put together. The pattern is then assigned to the class that gives the highest confidence score.
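The One-Against-All decision rule can be sketched in a few lines of Python. The sketch assumes linear SVMs that have already been trained, each stored as a hypothetical pair (w, b), and uses the signed value w · x − b as the confidence score; kernel SVMs and the training step itself are outside its scope.

```python
def decision_value(w, b, x):
    """Signed score w . x - b; positive values fall on the 'given class' side."""
    return sum(wi * xi for wi, xi in zip(w, x)) - b

def predict_one_against_all(models, x):
    """models maps class label -> (w, b); return the label with the highest score."""
    return max(models, key=lambda label: decision_value(*models[label], x))

# Two hand-written linear models, purely for illustration.
models = {"cat": ([1.0, 0.0], 0.5), "bird": ([0.0, 1.0], 0.5)}
print(predict_one_against_all(models, [2.0, 0.0]))  # cat
print(predict_one_against_all(models, [0.0, 3.0]))  # bird
```

Scores from independently trained SVMs are not automatically comparable, so practical implementations often calibrate them before taking the maximum.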

4. Clustering

Clustering is the task of organizing data in groups (Figure 4) so that patterns in the same cluster are more similar to each other than they are to patterns belonging to other clusters. Clustering is often used to find natural clusters in unlabeled data. Some clustering techniques calculate centroids during the process. A centroid is the mean vector of all the patterns in a cluster. Because it is a mean vector, it contains the most characterizing features of a cluster’s patterns. Centroids are computed to reduce the dissimilarity space size without losing too much information. The greater the number of centroids used for each class, the more information is retained. In this work, samples are divided into classes before clustering, and the clustering procedure is applied to each class separately. The remainder of this section describes the four clustering techniques used in this study.

4.1. K-Means

K-means is a popular clustering algorithm that partitions a set of patterns into k clusters by assigning each observation to the cluster with the nearest centroid, or mean vector. There are several versions of this algorithm. In this study, the default implementation (with the Euclidean distance metric) in the MATLAB Statistics and Machine Learning Toolbox was applied. The standard k-means algorithm cycles through the following steps:

(1) Choose k initial cluster centers (centroids) according to the k-means++ variation detailed below.

(2) Compute point-to-cluster-centroid distances of all observations to each centroid.

(3) Assign each observation to the cluster with the closest centroid.

(4) Compute the average of the observations in each cluster to obtain k new centroids.

(5) Repeat steps 2 through 4 until cluster assignments no longer change (i.e., until the algorithm converges) or until the maximum number of iterations is reached.

The k-means++ variation [

44] employs a heuristic to find the initial centroids:

(1) Choose one center uniformly at random from among the data points.

(2) For each data point x, compute $D(x)$, the distance between x and the nearest center that has already been chosen.

(3) Choose one new data point at random as a new center, using a weighted probability distribution where a point x is chosen with probability proportional to $D(x)^2$.

(4) Repeat steps 2 and 3 until k centers have been chosen.
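The two procedures above (k-means++ seeding followed by the standard k-means loop) can be sketched in plain Python. This is not MATLAB's implementation: the function names, the fixed seed, and the choice to keep an old center when a cluster becomes empty are assumptions of this sketch, and the seeding assumes at least k distinct points.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance."""
    return sum((u - v) ** 2 for u, v in zip(a, b))

def kmeans_pp_init(points, k, rng):
    """k-means++ seeding: each new center is drawn with probability ~ D(x)^2."""
    centers = [list(rng.choice(points))]
    while len(centers) < k:
        weights = [min(sq_dist(p, c) for c in centers) for p in points]
        centers.append(list(rng.choices(points, weights=weights, k=1)[0]))
    return centers

def kmeans(points, k, max_iter=100, seed=0):
    """Return (centers, assignments) after iterating until assignments stop changing."""
    rng = random.Random(seed)
    centers = kmeans_pp_init(points, k, rng)
    assign = None
    for _ in range(max_iter):
        new_assign = [min(range(k), key=lambda c: sq_dist(p, centers[c]))
                      for p in points]
        if new_assign == assign:
            break  # converged
        assign = new_assign
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:  # an empty cluster keeps its previous center
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return centers, assign
```

Because already-chosen centers have weight zero in the seeding step, the same point cannot be selected twice unless the data contain duplicates.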

4.2. K-Medoids

K-medoids is a clustering technique very similar to k-means. It partitions a set of observations into k clusters by minimizing the sum of distances between a pattern and the center of that pattern’s cluster. The main difference between k-means and k-medoids is that, in the first case, the center of a cluster is its centroid, or mean, whereas, in the latter case, the center is a member, or medoid, of the cluster. A medoid is an observation in a cluster whose sum of distances from the other observations within the cluster is minimal. The basic algorithm for K-medoids loops through the following three steps:

(1) Build-step: each of the k clusters is associated with a potential medoid. The first assignment can be performed in various ways; MATLAB’s standard implementation uses the k-means++ heuristic.

(2) Swap-step: within each cluster, each point is tested as a potential medoid by checking whether the sum of the within-cluster distances gets smaller using that particular point as the medoid. If so, the point is defined as a new medoid. Every point is then assigned to the cluster with the closest medoid.

(3) Repeat steps 1 and 2 until medoids no longer swap (i.e., until the algorithm converges) or until the maximum number of iterations is reached.
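A compact Python sketch of the swap-and-reassign loop is given below. It is a simplified alternating variant rather than MATLAB's implementation: the initial medoids are passed in explicitly as indices instead of being chosen by k-means++, the points are assumed to be distinct, and the function names are hypothetical.

```python
import math

def assign_to_medoids(points, medoids):
    """For each point, return the index of its closest medoid."""
    return [min(medoids, key=lambda m: math.dist(points[i], points[m]))
            for i in range(len(points))]

def k_medoids(points, init_medoids, max_iter=100):
    """Alternate assignment and medoid update until the medoids stop changing."""
    medoids = list(init_medoids)
    for _ in range(max_iter):
        nearest = assign_to_medoids(points, medoids)
        new_medoids = []
        for m in medoids:
            members = [i for i, a in enumerate(nearest) if a == m]
            # Swap-step: the member with the smallest summed distance wins.
            new_medoids.append(min(
                members,
                key=lambda c: sum(math.dist(points[c], points[j]) for j in members)))
        if new_medoids == medoids:
            break
        medoids = new_medoids
    return medoids, assign_to_medoids(points, medoids)

points = [[0], [1], [2], [10], [11], [12]]
medoids, assignment = k_medoids(points, init_medoids=[0, 1])
print(medoids)     # [1, 4]
print(assignment)  # [1, 1, 1, 4, 4, 4]
```

Unlike a k-means centroid, each returned medoid is the index of an actual sample, so the prototype is always a real observation.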

4.3. Hierarchical

Hierarchical clustering is a clustering technique that groups data by building a hierarchy of clusters. The hierarchy tree that is obtained is divided into n levels chosen for the application at hand. There are two main categories of hierarchical clustering:

Agglomerative: each pattern starts in its own cluster; then, moving up the hierarchy, each cluster in one level is obtained by merging two clusters in the previous level.

Divisive: all patterns start in one cluster; then, by moving down the hierarchy, each pair of clusters is obtained by splitting a single cluster in the previous level.

In this work, the default MATLAB implementation of hierarchical clustering is used, which is the agglomerative type. The MATLAB algorithm loops through the following three steps:

(1) Find the similarity or dissimilarity between every pair of objects in the dataset using a distance metric.

(2) Group the objects into a binary hierarchical cluster tree by linking objects in pairs based on their distance. As objects are paired into binary clusters, the newly formed clusters are grouped into larger clusters until a hierarchical tree is formed.

(3) Determine where to cut the hierarchical tree into clusters. Here, MATLAB’s cluster function is used to prune branches off the bottom of the hierarchical tree and to assign all the objects below each cut to a single cluster. In this way, k clusters are obtained.

After applying this algorithm, centroids, as the mean vectors of each cluster, are computed.
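The agglomerative procedure followed by the centroid computation can be sketched in Python. The sketch merges the two closest clusters until k remain instead of building and cutting a full tree, and it assumes single linkage with Euclidean distance; both are illustrative choices, and the function name `agglomerative_centroids` is hypothetical.

```python
import math

def agglomerative_centroids(points, k):
    """Merge the closest clusters until k remain, then return their mean vectors."""
    clusters = [[i] for i in range(len(points))]  # each point starts alone

    def linkage(a, b):
        # Single linkage: distance between the closest pair of members.
        return min(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > k:
        candidates = [(linkage(clusters[a], clusters[b]), a, b)
                      for a in range(len(clusters))
                      for b in range(a + 1, len(clusters))]
        _, a, b = min(candidates)
        merged = clusters.pop(b)  # b > a, so index a stays valid
        clusters[a].extend(merged)

    return [[sum(col) / len(c) for col in zip(*(points[i] for i in c))]
            for c in clusters]

print(agglomerative_centroids([[0, 0], [0, 1], [5, 5], [5, 6]], 2))
# [[0.0, 0.5], [5.0, 5.5]]
```

This brute-force version recomputes all linkages at every merge, which is acceptable for a demonstration but far slower than library implementations.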

4.4. Spectral

The spectral clustering technique splits data into groups using the data’s undirected similarity graph represented by a similarity matrix (also called an adjacency matrix). In the similarity graph, every node is an observation, and two nodes are connected by an edge if their similarity is larger than a certain threshold, which is often 0. The algorithm uses three mathematical expressions:

Similarity Matrix: a square symmetrical matrix that represents the similarity graph. Letting M be the similarity matrix, each cell value $M_{i,j}$ is the similarity value of two connected nodes in the graph, which, in turn, represent the spectrogram pair $(i, j)$.

Degree Matrix: a diagonal matrix obtained by summing the similarity matrix rows. The degree matrix is defined by the equation

$$D_g(i, i) = \sum_{j} M_{i,j}$$

where $D_g$ is the degree matrix, and $M_{i,j}$ is a value of the similarity matrix.

Laplacian Matrix: another way of representing the similarity graph that is defined as

$$L = D_g - M$$

Here are the steps required by the spectral algorithm:

(1) For each spectrogram in the dataset, define a local neighborhood. There are different ways such a neighborhood can be defined. The MATLAB implementation defaults to the nearest-neighbor method. Once the neighborhood is defined, compute the pairwise similarities of each spectrogram in the neighborhood using some distance metric.

(2) Calculate the Laplacian matrix L.

(3) Create a matrix V containing columns $v_1, \dots, v_k$, where the columns are the k eigenvectors that correspond to the k smallest eigenvalues of the Laplacian matrix. The eigenvalues of the matrix are also called the spectrum, hence the algorithm’s name.

(4) Treating each row of V as a pattern, perform k-means clustering or k-medoids clustering.

(5) Assign the original spectrograms in the dataset to the same clusters as their corresponding rows in V.
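The matrix definitions used by the spectral algorithm can be checked on a toy graph with the Python sketch below. It covers only the construction of the degree matrix and the unnormalized Laplacian (degree matrix minus similarity matrix); the eigendecomposition and the final clustering are omitted, and the function name and the example matrix are assumptions made for illustration.

```python
def degree_and_laplacian(similarity):
    """Return (degree matrix, Laplacian = degree - similarity) as nested lists."""
    n = len(similarity)
    degree = [[sum(similarity[i]) if i == j else 0.0 for j in range(n)]
              for i in range(n)]
    laplacian = [[degree[i][j] - similarity[i][j] for j in range(n)]
                 for i in range(n)]
    return degree, laplacian

# Toy symmetric similarity matrix for three observations.
M = [[0, 1, 0],
     [1, 0, 2],
     [0, 2, 0]]
D, L = degree_and_laplacian(M)
# The diagonal of D holds the row sums 1, 3, 2; every row of L sums to zero.
```

The zero row sums are a quick sanity check: they imply that the constant vector is an eigenvector of the Laplacian with eigenvalue 0.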

5. Experimental Results

The approach proposed in this paper is tested, along with some comparison canonical approaches, using a stratified ten-fold cross validation protocol and the classification accuracy as the performance indicator. Tests were performed on two datasets:

BIRDZ, which was also used as a control and as a real-world audio dataset in [23]. The real-world tracks were obtained from the Xeno-canto Archive (http://www.xeno-canto.org/) and cover 11 widespread North American bird species. Thus, the dataset contains 11 classes: (1) Blue Jay, (2) Song Sparrow, (3) Marsh Wren, (4) Common Yellowthroat, (5) Chipping Sparrow, (6) American Yellow Warbler, (7) Great Blue Heron, (8) American Crow, (9) Cedar Waxwing, (10) House Finch, and (11) Indigo Bunting. BIRDZ is composed of five types of spectrograms: constant frequency, frequency modulated whistles, broadband pulses, broadband with varying frequency components, and strong harmonics, for a total of 2762 bird acoustic events with 339 detected “unknown” events corresponding to noise and other unknown species vocalizations. Including the “unknown” class, BIRDZ has 3101 samples for 12 classes.

CAT, which was first presented in [27,45]. This dataset is composed of 10 balanced classes with about 300 samples per class: (1) Resting, (2) Warning, (3) Angry, (4) Defence, (5) Fighting, (6) Happy, (7) Hunting mind, (8) Mating, (9) Mother call, and (10) Paining. The samples have an average duration of about 4 s and were collected by the author from different sources: Kaggle, YouTube, and Flickr. CAT has a total of 2962 samples.

In Table 2 and Table 3, the performance of the four tested clustering algorithms is reported using different values of k (i.e., the number of clusters per class). As a baseline for comparison, the classification accuracy is also reported for the following well-known CNN models, each fine-tuned on the problem (for 30 epochs, using a batch size of 30 and a learning rate of 0.0001, with no freezing):

GoogleNet [46], VGG16 and VGG19 [47], all pretrained on ImageNet [48];

GoogleNetP365, a GoogleNet model pretrained on Places365 [49].

Moreover, in Table 2 and Table 3, the accuracies obtained by the following fusion approaches are reported:

KAll, fusion by sum rule of the four SVMs trained using the dissimilarity spaces built with all tested values of k = 15, 30, 45, 60;

ALL, fusion by average rule of the four approaches KAll (one for each clustering method);

eCNN, fusion by sum rule of the four CNNs;

ALL+eCNN, fusion by sum rule between ALL and eCNN;

ALL+GoogleNet, fusion by sum rule between ALL and GoogleNet;

ALL+GoogleNetP365, fusion by sum rule between ALL and GoogleNetP365.
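The sum rule used by these ensembles adds the per-class scores of the individual classifiers and selects the class with the largest total. The Python sketch below illustrates it on made-up scores; the function name and the numbers are assumptions, and the sketch presumes that all classifiers output scores on a comparable scale, which in practice may require normalization first.

```python
def sum_rule(score_sets):
    """Fuse per-class scores from several classifiers by summation.

    score_sets holds one list of class scores per classifier, all in the
    same class order. Returns (fused scores, index of the winning class).
    """
    fused = [sum(column) for column in zip(*score_sets)]
    winner = max(range(len(fused)), key=fused.__getitem__)
    return fused, winner

# Three hypothetical classifiers scoring two classes.
fused, winner = sum_rule([[0.6, 0.4], [0.2, 0.8], [0.3, 0.7]])
print(winner)  # 1
```

Dividing the fused scores by the number of classifiers gives the average rule, which selects the same class as the sum rule.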

From the results reported in Table 2 and Table 3, the following conclusions can be drawn:

KAll outperforms each stand-alone method based on a single value of k;

ALL outperforms each KAll in both datasets;

Performance of ALL is similar to that obtained by GoogleNet;

The ensemble ALL based on our dissimilarity space is a feasible representation for spectrograms and achieves a performance that is comparable to the CNNs.

In both datasets, the best performance is obtained by ALL+eCNN (even though the improvement with respect to eCNN is negligible).

ALL+GoogleNet strongly outperforms ALL and GoogleNet; this light ensemble, which uses only one CNN, is our recommended method.

The proposed approach based on the representation of animal sound in a dissimilarity space has two main advantages: (1) it produces a compact representation of the signal (ranging from 15 to 60, depending on the number of clusters for the single space, to 150 for the KAll ensemble); (2) it generates a high diversity of classification results with respect to the baseline CNNs, which can be exploited to improve the performance in an ensemble method (i.e., ALL+GoogleNet).

In Table 4, the ensembles proposed in this work are shown to achieve a performance on the two datasets that is similar to some of the state-of-the-art approaches reported in the literature. Two results are taken from [27] and are labeled [27] and [27]-.

Unfortunately, most published papers in the field of acoustic animal classification focus only on a single dataset. The authors of this paper are aware that evaluating the proposed approach on two different datasets instead of focusing on just one limits the strength of the conclusions drawn. Be that as it may, the experiments reported here support the robustness of the proposed approach, which obtains good classification accuracy on two different problems without any ad-hoc parameter optimization and according to a clear and unambiguous testing protocol. As a result, the performances reported in this paper can be used for baseline comparisons with other audio classification methods developed in the future.