Cosine-Based Embedding for Completing Lightweight Schematic Knowledge in DL-Lite_core †

Abstract

1. Introduction

- We propose CosE, a cosine-based embedding model for completing lightweight schematic knowledge expressed in DL-Lite. It defines two score functions, one in an angle-based semantic space and one in a translation-based semantic space, so that the transitivity of subClassOf and the symmetry of disjointWith can be preserved simultaneously.

- We design a negative sampling strategy based on the mutual exclusion between subClassOf axioms and disjointWith axioms, so that CosE obtains better vector representations of concepts for schematic knowledge completion.

- We implement and evaluate our method on four standard datasets constructed from real ontologies. Link prediction experiments indicate that CosE can simultaneously preserve the logical properties of these relations (transitivity and symmetry) and outperforms state-of-the-art models in most cases.
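The contrast between the angle-based and translation-based semantic spaces can be illustrated with a small Python sketch. The functions below are hypothetical stand-ins, not the CosE score functions themselves. `angle_score` is one minus the cosine of the angle between two concept vectors. `translation_score` is a TransE-style distance ‖c1 + r − c2‖ with an assumed relation vector `r`. The example shows only one thing: a cosine-based score gives the same value for (C1, C2) and (C2, C1), which is what a symmetric relation needs. A translation with a nonzero `r` generally does not.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def angle_score(c1: Vector, c2: Vector) -> float:
    """Hypothetical angle-based score: 1 - cos(c1, c2); lower means a smaller angle."""
    return 1.0 - cosine(c1, c2)


def translation_score(c1: Vector, c2: Vector, r: Vector) -> float:
    """Hypothetical TransE-style score: ||c1 + r - c2||_2; lower is more plausible."""
    return math.sqrt(sum((a + t - b) ** 2 for a, t, b in zip(c1, r, c2)))


if __name__ == "__main__":
    c1, c2 = [1.0, 0.0, 2.0], [0.5, 1.5, -1.0]  # toy concept vectors
    r = [0.3, -0.2, 0.1]  # assumed relation vector
    # Angle-based: swapping the arguments leaves the score unchanged.
    print(angle_score(c1, c2) == angle_score(c2, c1))
    # Translation-based: swapping the arguments changes the distance.
    print(translation_score(c1, c2, r), translation_score(c2, c1, r))
```

Running the script prints `True` for the angle-based check and two different distances for the translation-based one.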

2. Related Work

2.1. Factual Knowledge Embedding

2.2. Schematic Knowledge Embedding

3. Preliminary

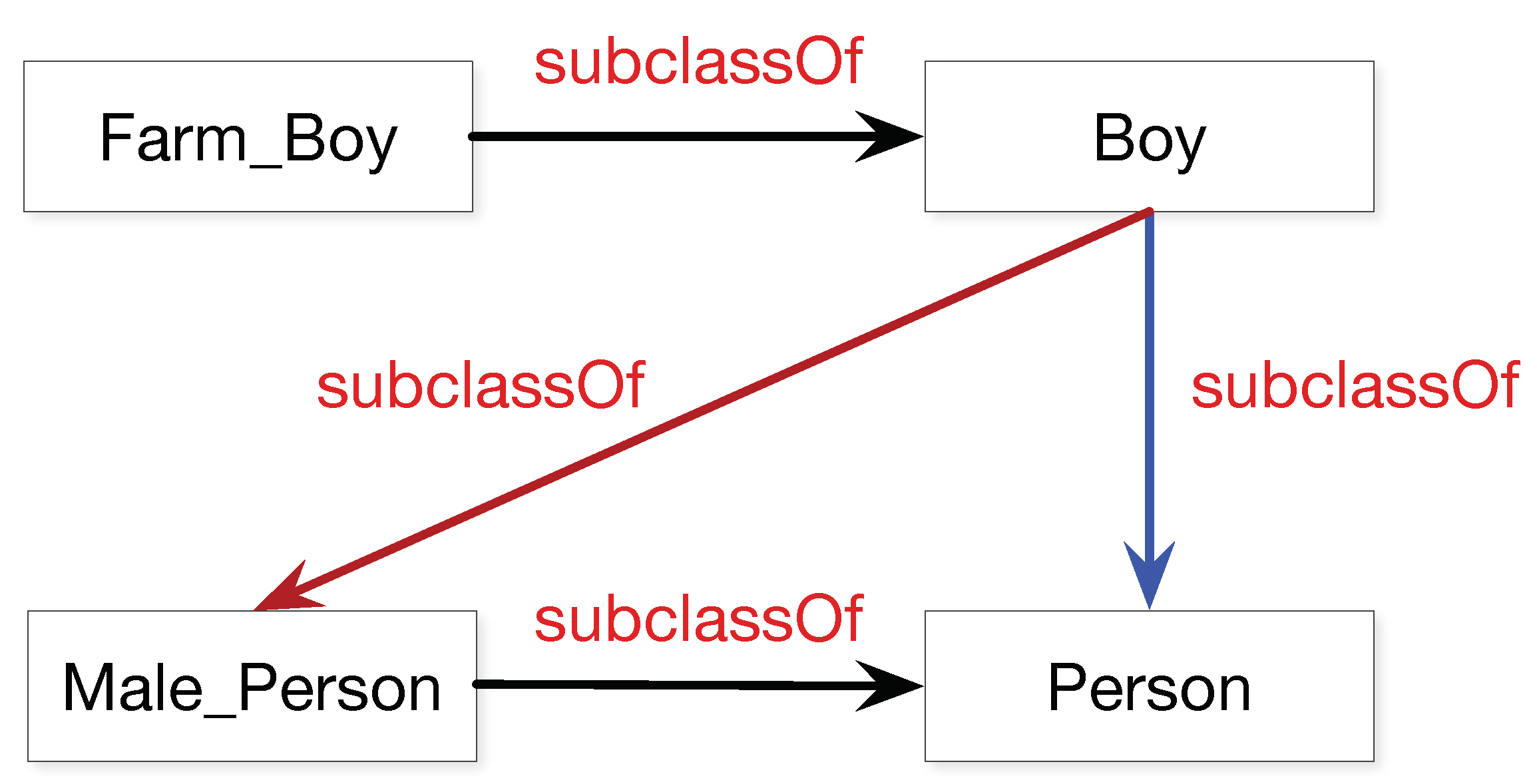

3.1. DL-Lite

3.2. Schematic Knowledge Embedding for DL-Lite

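1. Transitivity: if $C_1 \sqsubseteq C_2$ and $C_2 \sqsubseteq C_3$ are two axioms asserted in the TBox $\mathcal{T}$, then $C_1 \sqsubseteq C_3$.

2. Symmetry: if $C_1 \sqsubseteq \neg C_2$ is an axiom asserted in $\mathcal{T}$, then $f(C_1, C_2) \approx f(C_2, C_1)$, where $f$ denotes the score of a disjointness axiom.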
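A short worked argument relates the transitivity and symmetry properties listed above to the geometry of the two semantic spaces. The notation is assumed for illustration and is not taken from the paper. $\mathbf{c}_i$ is the vector of concept $C_i$, and $\mathbf{r}$ is a single translation vector shared by all subClassOf axioms.

```latex
% Symmetry: the cosine is unchanged when the two concepts are swapped
\cos(\mathbf{c}_1, \mathbf{c}_2)
  = \frac{\mathbf{c}_1 \cdot \mathbf{c}_2}{\lVert \mathbf{c}_1 \rVert \, \lVert \mathbf{c}_2 \rVert}
  = \frac{\mathbf{c}_2 \cdot \mathbf{c}_1}{\lVert \mathbf{c}_2 \rVert \, \lVert \mathbf{c}_1 \rVert}
  = \cos(\mathbf{c}_2, \mathbf{c}_1)

% Transitivity: two exact translations compose to 2r, not r
\mathbf{c}_1 + \mathbf{r} = \mathbf{c}_2 \;\wedge\; \mathbf{c}_2 + \mathbf{r} = \mathbf{c}_3
  \;\Longrightarrow\; \mathbf{c}_3 = \mathbf{c}_1 + 2\mathbf{r},
  \quad \text{hence} \quad
  \mathbf{c}_1 + \mathbf{r} = \mathbf{c}_3 \iff \mathbf{r} = \mathbf{0}
```

The first line shows that an angle-based score inherits symmetry directly from the dot product. The second line is a known limitation of purely translational models. With one exact translation vector, all three subClassOf axioms can hold only if $\mathbf{r} = \mathbf{0}$, and that collapses $\mathbf{c}_1$, $\mathbf{c}_2$ and $\mathbf{c}_3$ onto a single point.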

4. Method

4.1. The Framework of CosE

4.2. The Score Functions of CosE

4.3. Negative Sampling Based on Schematic Knowledge for the Training Model

Algorithm 1: The algorithm for training the CosE model.
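The mutual-exclusion idea behind the negative sampling strategy can be sketched in Python. This sketch rests on stated assumptions and does not reproduce Algorithm 1:

- Axioms are assumed to be (head, relation, tail) triples over the relations `subClassOf` and `disjointWith`.
- With probability `flip_prob`, an asserted axiom becomes a negative by swapping its relation for the other one. For a satisfiable head concept, $C_1 \sqsubseteq C_2$ and $C_1 \sqsubseteq \neg C_2$ cannot both hold.
- Otherwise, the head or tail is replaced by a random concept, and any corrupted triple already known to be true is rejected.

The function name `sample_negatives` and its parameters are hypothetical.

```python
import random
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]  # (head concept, relation, tail concept)

SUBCLASS = "subClassOf"
DISJOINT = "disjointWith"
# Assumed mutual exclusion: for a satisfiable head concept, a pair asserted as
# subClassOf cannot also be disjointWith, and vice versa.
OPPOSITE = {SUBCLASS: DISJOINT, DISJOINT: SUBCLASS}


def sample_negatives(
    positives: List[Triple],
    concepts: List[str],
    rng: random.Random,
    flip_prob: float = 0.5,
    max_tries: int = 100,
) -> List[Triple]:
    """Return at most one hypothetical negative triple per positive triple."""
    known: Set[Triple] = set(positives)
    # disjointWith is symmetric, so the reversed pair also counts as true.
    known |= {(t, r, h) for h, r, t in positives if r == DISJOINT}
    negatives: List[Triple] = []
    for head, rel, tail in positives:
        flipped = (head, OPPOSITE[rel], tail)
        if rng.random() < flip_prob and flipped not in known:
            # Mutual-exclusion negative: same concept pair, opposite relation.
            negatives.append(flipped)
            continue
        # Fallback: corrupt the head or the tail, rejecting known true triples.
        for _ in range(max_tries):
            candidate = rng.choice(concepts)
            if rng.random() < 0.5:
                corrupted = (candidate, rel, tail)
            else:
                corrupted = (head, rel, candidate)
            if corrupted not in known:
                negatives.append(corrupted)
                break
    return negatives


if __name__ == "__main__":
    axioms = [("Cat", SUBCLASS, "Animal"), ("Cat", DISJOINT, "Dog"), ("Dog", SUBCLASS, "Animal")]
    concepts = ["Cat", "Dog", "Animal", "Plant"]  # toy concept vocabulary
    print(sample_negatives(axioms, concepts, random.Random(0)))
```

Relation flipping produces negatives that share both concepts with a true axiom, so one would expect them to be more informative than purely random corruptions. The fallback keeps the sampler usable when the flipped triple is itself asserted.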

5. Experiments and Results

5.1. Datasets

- FMA: It is an evolving ontology that has been maintained by the University of Washington since 1994. It conceptualizes the phenotypic structure of the human body in a machine-readable form, and its biomedical schematic knowledge is openly available through OAEI (http://oaei.ontologymatching.org/ accessed on 5 September 2022).

- FoodOn: It is a comprehensive ontology resource that spans various domains related to food, which can precisely describe foods commonly known in cultures from around the world.

- HeLiS: It is an ontology for promoting healthy lifestyles. It conceptualizes the domains of food and physical activity so that unhealthy behaviors can be monitored.

- YAGO-On-t: It is built from the axioms in YAGO-On according to the transitivity property of subClassOf. If $(C_1, \mathit{subClassOf}, C_2)$ and $(C_2, \mathit{subClassOf}, C_3)$ exist in YAGO-On, we add the axiom $(C_1, \mathit{subClassOf}, C_3)$ to YAGO-On-t.

- YAGO-On-s: It is built from the axioms in YAGO-On according to the symmetry property of disjointWith. If an axiom $(C_1, \mathit{disjointWith}, C_2)$ exists in YAGO-On, we add the axiom $(C_2, \mathit{disjointWith}, C_1)$ to YAGO-On-s.
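The construction of YAGO-On-t and YAGO-On-s can be sketched in Python. This minimal illustration assumes that axioms are stored as (head, relation, tail) triples using the relation names `subClassOf` and `disjointWith`, the names that appear in the case study table. It derives one-step transitive consequences and symmetric counterparts. Skipping self-loops and axioms that are already asserted is an extra assumption of this sketch. How the derived axioms were split into the training, validation, and test sets is not modelled here.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (head concept, relation, tail concept)
SUBCLASS, DISJOINT = "subClassOf", "disjointWith"


def transitive_axioms(axioms: List[Triple]) -> Set[Triple]:
    """(C1 subClassOf C2) and (C2 subClassOf C3) => (C1 subClassOf C3), one step only."""
    asserted = set(axioms)
    supers: Dict[str, Set[str]] = defaultdict(set)
    for head, rel, tail in axioms:
        if rel == SUBCLASS:
            supers[head].add(tail)
    derived: Set[Triple] = set()
    for c1, direct in supers.items():
        for c2 in direct:
            # .get does not insert keys, so the dict is not mutated while iterating.
            for c3 in supers.get(c2, ()):
                candidate = (c1, SUBCLASS, c3)
                # Assumption: skip self-loops and axioms that are already asserted.
                if c1 != c3 and candidate not in asserted:
                    derived.add(candidate)
    return derived


def symmetric_axioms(axioms: List[Triple]) -> Set[Triple]:
    """(C1 disjointWith C2) => (C2 disjointWith C1), skipping already-asserted ones."""
    asserted = set(axioms)
    return {
        (tail, DISJOINT, head)
        for head, rel, tail in axioms
        if rel == DISJOINT and (tail, DISJOINT, head) not in asserted
    }


if __name__ == "__main__":
    toy = [("A", SUBCLASS, "B"), ("B", SUBCLASS, "C"), ("A", DISJOINT, "X")]  # toy axioms
    print(sorted(transitive_axioms(toy)))  # [('A', 'subClassOf', 'C')]
    print(sorted(symmetric_axioms(toy)))   # [('X', 'disjointWith', 'A')]
```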

5.2. Implementation Details

5.3. The Results of Link Prediction

5.4. The Results of Transitivity and Symmetry

5.5. Case Study

6. Discussion and Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Li, W.; Qi, G.; Ji, Q. Hybrid reasoning in knowledge graphs: Combing symbolic reasoning and statistical reasoning. Semant. Web 2020, 11, 53–62.

- [2] Fellbaum, C. WordNet; Springer: Dordrecht, The Netherlands, 2010; pp. 231–243.

- [3] Lehmann, J.; Isele, R.; Jakob, M.; Jentzsch, A.; Kontokostas, D.; Mendes, P.N.; Hellmann, S.; Morsey, M.; Van Kleef, P.; Auer, S.; et al. DBpedia—A large-scale, multilingual knowledge base extracted from Wikipedia. Semant. Web 2015, 6, 167–195.

- [4] Suchanek, F.M.; Kasneci, G.; Weikum, G. YAGO: A Large Ontology from Wikipedia and WordNet. J. Web Semant. 2008, 6, 203–217.

- [5] Wang, M.; Wang, R.; Liu, J.; Chen, Y.; Zhang, L.; Qi, G. Towards Empty Answers in SPARQL: Approximating Querying with RDF Embedding. In Proceedings of the 17th International Semantic Web Conference, Monterey, CA, USA, 8–12 October 2018; pp. 513–529.

- [6] Ristoski, P.; Rosati, J.; Di Noia, T.; De Leone, R.; Paulheim, H. RDF2Vec: RDF graph embeddings and their applications. Semant. Web 2019, 10, 721–752.

- [7] Sun, Z.; Zhang, Q.; Hu, W.; Wang, C.; Chen, M.; Akrami, F.; Li, C. A Benchmarking Study of Embedding-based Entity Alignment for Knowledge Graphs. In Proceedings of the 46th International Conference on Very Large Data Bases, Tokyo, Japan, 31 August–4 September 2020; pp. 2326–2340.

- [8] Weston, J.; Bordes, A.; Yakhnenko, O.; Usunier, N. Connecting Language and Knowledge Bases with Embedding Models for Relation Extraction. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1366–1371.

- [9] Wang, Q.; Mao, Z.; Wang, B.; Guo, L. Knowledge Graph Embedding: A Survey of Approaches and Applications. IEEE Trans. Knowl. Data Eng. 2017, 29, 2724–2743.

- [10] Dai, Y.; Wang, S.; Xiong, N.N.; Guo, W. A Survey on Knowledge Graph Embedding: Approaches, Applications and Benchmarks. Electronics 2020, 9, 750.

- [11] Chen, X.; Jia, S.; Xiang, Y. A review: Knowledge reasoning over knowledge graph. Expert Syst. Appl. 2020, 141, 112948.

- [12] Rossi, A.; Barbosa, D.; Firmani, D.; Matinata, A.; Merialdo, P. Knowledge Graph Embedding for Link Prediction: A Comparative Analysis. ACM Trans. Knowl. Discov. Data 2021, 15, 1–49.

- [13] Bordes, A.; Usunier, N.; Garcia-Duran, A.; Weston, J.; Yakhnenko, O. Translating Embeddings for Modeling Multi-relational Data. In Proceedings of the 27th Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–8 December 2013; pp. 2787–2795.

- [14] Wang, Z.; Zhang, J.; Feng, J.; Chen, Z. Knowledge Graph Embedding by Translating on Hyperplanes. In Proceedings of the 28th AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; pp. 1112–1119.

- [15] Lin, Y.; Liu, Z.; Sun, M.; Liu, Y.; Zhu, X. Learning Entity and Relation Embeddings for Knowledge Graph Completion. In Proceedings of the 29th AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 2181–2187.

- [16] Nickel, M.; Tresp, V.; Kriegel, H.P. A Three-Way Model for Collective Learning on Multi-Relational Data. In Proceedings of the 28th International Conference on Machine Learning, Bellevue, WA, USA, 28 June–2 July 2011; pp. 809–816.

- [17] Yang, B.; Yih, S.W.T.; He, X.; Gao, J.; Deng, L. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- [18] Nickel, M.; Rosasco, L.; Poggio, T. Holographic Embeddings of Knowledge Graphs. In Proceedings of the 30th AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 1955–1961.

- [19] Trouillon, T.; Welbl, J.; Riedel, S.; Gaussier, É.; Bouchard, G. Complex Embeddings for Simple Link Prediction. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2071–2080.

- [20] Diaz, G.I.; Fokoue, A.; Sadoghi, M. EmbedS: Scalable, Ontology-aware Graph Embeddings. In Proceedings of the 21st International Conference on Extending Database Technology, Vienna, Austria, 26–29 March 2018; pp. 433–436.

- [21] Lv, X.; Hou, L.; Li, J.; Liu, Z. Differentiating Concepts and Instances for Knowledge Graph Embedding. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 1971–1979.

- [22] Zhang, Z.; Cai, J.; Zhang, Y.; Wang, J. Learning Hierarchy-Aware Knowledge Graph Embeddings for Link Prediction. In Proceedings of the 34th AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 3065–3072.

- [23] Sun, Z.; Deng, Z.H.; Nie, J.Y.; Tang, J. RotatE: Knowledge Graph Embedding by Relational Rotation in Complex Space. In Proceedings of the 7th International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- [24] Kulmanov, M.; Liu-Wei, W.; Yan, Y.; Hoehndorf, R. EL Embeddings: Geometric Construction of Models for the Description Logic EL++. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; pp. 6103–6109.

- [25] Chen, J.; Hu, P.; Jiménez-Ruiz, E.; Holter, O.M.; Antonyrajah, D.; Horrocks, I. OWL2Vec*: Embedding of OWL ontologies. Mach. Learn. 2021, 110, 1813–1845.

- [26] Calvanese, D.; De Giacomo, G.; Lembo, D.; Lenzerini, M.; Rosati, R. DL-Lite: Tractable Description Logics for Ontologies. In Proceedings of the 20th National Conference on Artificial Intelligence, Pittsburgh, PA, USA, 9–13 July 2005; pp. 602–607.

- [27] Fu, X.; Qi, G.; Zhang, Y.; Zhou, Z. Graph-based approaches to debugging and revision of terminologies in DL-Lite. Knowl.-Based Syst. 2016, 100, 1–12.

- [28] Ji, G.; He, S.; Xu, L.; Liu, K.; Zhao, J. Knowledge Graph Embedding via Dynamic Mapping Matrix. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics, Beijing, China, 26–31 July 2015; pp. 687–696.

- [29] Xiao, H.; Huang, M.; Hao, Y.; Zhu, X. TransA: An Adaptive Approach for Knowledge Graph Embedding. arXiv 2015, arXiv:1509.05490.

- [30] Dong, X.; Gabrilovich, E.; Heitz, G.; Horn, W.; Lao, N.; Murphy, K.; Strohmann, T.; Sun, S.; Zhang, W. Knowledge vault: A web-scale approach to probabilistic knowledge fusion. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 601–610.

- [31] Liu, Q.; Jiang, H.; Evdokimov, A.; Ling, Z.H.; Zhu, X.; Wei, S.; Hu, Y. Probabilistic Reasoning via Deep Learning: Neural Association Models. arXiv 2016, arXiv:1603.07704.

- [32] Schlichtkrull, M.; Kipf, T.N.; Bloem, P.; Berg, R.V.D.; Titov, I.; Welling, M. Modeling Relational Data with Graph Convolutional Networks. In Proceedings of the 15th Extended Semantic Web Conference, Heraklion, Crete, Greece, 3–7 June 2018; pp. 593–607.

- [33] Shi, B.; Weninger, T. ProjE: Embedding Projection for Knowledge Graph Completion. In Proceedings of the 31st AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 1236–1242.

- [34] Dettmers, T.; Minervini, P.; Stenetorp, P.; Riedel, S. Convolutional 2D Knowledge Graph Embeddings. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 1811–1818.

- [35] Zhang, W.; Chen, J.; Li, J.; Xu, Z.; Pan, J.Z.; Chen, H. Knowledge Graph Reasoning with Logics and Embeddings: Survey and Perspective. arXiv 2022, arXiv:2202.07412.

- [36] Gutiérrez-Basulto, V.; Schockaert, S. From Knowledge Graph Embedding to Ontology Embedding? An Analysis of the Compatibility between Vector Space Representations and Rules. In Proceedings of the Sixteenth International Conference on Principles of Knowledge Representation and Reasoning, Tempe, AZ, USA, 30 October–2 November 2018; pp. 379–388.

- [37] Guo, S.; Wang, Q.; Wang, L.; Wang, B.; Guo, L. Jointly Embedding Knowledge Graphs and Logical Rules. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–4 November 2016; pp. 192–202.

- [38] Guo, S.; Wang, Q.; Wang, L.; Wang, B.; Guo, L. Knowledge Graph Embedding With Iterative Guidance From Soft Rules. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 4816–4823.

- [39] Zhang, W.; Paudel, B.; Wang, L.; Chen, J.; Zhu, H.; Zhang, W.; Bernstein, A.; Chen, H. Iteratively Learning Embeddings and Rules for Knowledge Graph Reasoning. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2366–2377.

- [40] Chen, M.; Tian, Y.; Chen, X.; Xue, Z.; Zaniolo, C. On2Vec: Embedding-based Relation Prediction for Ontology Population. In Proceedings of the 2018 SIAM International Conference on Data Mining, San Diego, CA, USA, 3–5 May 2018; pp. 315–323.

- [41] Garg, D.; Ikbal, S.; Srivastava, S.K.; Vishwakarma, H.; Karanam, H.; Subramaniam, L.V. Quantum Embedding of Knowledge for Reasoning. In Proceedings of the Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 5595–5605.

- [42] Staab, S.; Studer, R. Handbook on Ontologies, 2nd ed.; Springer: Dordrecht, The Netherlands, 2009.

- [43] Noy, N.F.; Musen, M.A.; Mejino, J.L., Jr.; Rosse, C. Pushing the envelope: Challenges in a frame-based representation of human anatomy. Data Knowl. Eng. 2004, 48, 335–359.

- [44] Dooley, D.M.; Griffiths, E.J.; Gosal, G.S.; Buttigieg, P.L.; Hoehndorf, R.; Lange, M.C.; Schriml, L.M.; Brinkman, F.S.; Hsiao, W.W. FoodOn: A harmonized food ontology to increase global food traceability, quality control and data integration. npj Sci. Food 2018, 2, 23.

- [45] Dragoni, M.; Bailoni, T.; Maimone, R.; Eccher, C. HeLiS: An Ontology for Supporting Healthy Lifestyles. In Proceedings of the 17th International Semantic Web Conference, Monterey, CA, USA, 8–12 October 2018; pp. 53–69.

- [46] Gao, H.; Qi, G.; Ji, Q. Schema induction from incomplete semantic data. Intell. Data Anal. 2018, 22, 1337–1353.

- [47] Liu, H.; Wu, Y.; Yang, Y. Analogical Inference for Multi-relational Embeddings. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2168–2178.

- [48] Han, X.; Cao, S.; Lv, X.; Lin, Y.; Liu, Z.; Sun, M.; Li, J. OpenKE: An Open Toolkit for Knowledge Embedding. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 139–144.

- [49] Gao, H.; Zheng, X.; Li, W.; Qi, G.; Wang, M. Cosine-Based Embedding for Completing Schematic Knowledge. In Proceedings of the 8th CCF International Conference on Natural Language Processing and Chinese Computing, Dunhuang, China, 9–14 October 2019; pp. 249–261.

- [50] Schlobach, S.; Cornet, R. Non-Standard Reasoning Services for the Debugging of Description Logic Terminologies. In Proceedings of the 18th International Joint Conference on Artificial Intelligence, Acapulco, Mexico, 9–15 August 2003; pp. 355–362.

- [51] Poggi, A.; Lembo, D.; Calvanese, D.; Giacomo, G.D.; Lenzerini, M.; Rosati, R. Linking Data to Ontologies. J. Data Semant. 2008, 10, 133–173.

- [52] Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- [53] Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Sastry, G.; Askell, A.; Amodei, D. Language Models are Few-Shot Learners. In Proceedings of the Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020.

- [54] Tay, Y.; Dehghani, M.; Bahri, D.; Metzler, D. Efficient Transformers: A Survey. ACM Comput. Surv. 2022; in press.

- [55] Xiao, G.; Calvanese, D.; Kontchakov, R.; Lembo, D.; Poggi, A.; Rosati, R.; Zakharyaschev, M. Ontology-Based Data Access: A Survey. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 5511–5519.

- [56] Otero-Cerdeira, L.; Rodríguez-Martínez, F.J.; Gómez-Rodríguez, A. Ontology matching: A literature review. Expert Syst. Appl. 2015, 42, 949–971.

- [57] Paulheim, H. Knowledge graph refinement: A survey of approaches and evaluation methods. Semant. Web 2017, 8, 489–508.

| Dataset | | YAGO-On [4] | FMA [43] | FoodOn [44] | HeLiS [45] | YAGO-On-t | YAGO-On-s |
|---|---|---|---|---|---|---|---|
| | ♯ Concept | 46,109 | 78,988 | 28,182 | 17,550 | 46,109 | 46,109 |
| Train | ♯ subClassOf | 29,181 | 29,181 | 20,844 | 14,222 | 11,898 | 0 |
| | ♯ disjointWith | 32,673 | 32,673 | 17,398 | 13,782 | 0 | 10,000 |
| Valid | ♯ subClassOf | 1000 | 2000 | 1488 | 1015 | 1000 | 1000 |
| | ♯ disjointWith | 1000 | 2000 | 2714 | 1722 | 1000 | 1000 |
| Test | ♯ subClassOf | 1000 | 2000 | 2978 | 2032 | 5949 | 0 |
| | ♯ disjointWith | 1000 | 1000 | 2174 | 1722 | 0 | 10,000 |

| Model | YAGO-On MRR (Raw) | YAGO-On MRR (Filter) | YAGO-On Hits@10 | YAGO-On Hits@3 | YAGO-On Hits@1 | FMA MRR (Raw) | FMA MRR (Filter) | FMA Hits@10 | FMA Hits@3 | FMA Hits@1 |
|---|---|---|---|---|---|---|---|---|---|---|
| TransE [13] | 0.241 † | 0.501 † | 0.784 † | 0.582 † | 0.343 † | 0.066 † | 0.325 † | 0.474 † | 0.371 † | 0.247 † |
| TransH [14] | 0.195 † | 0.196 † | 0.472 † | 0.252 † | 0.091 † | 0.008 † | 0.009 † | 0.018 † | 0.005 † | 0.003 † |
| TransR [15] | 0.090 † | 0.428 † | 0.588 † | 0.433 † | 0.355 † | 0.060 † | 0.411 † | 0.490 † | 0.440 † | 0.370 † |
| TransD [28] | 0.038 † | 0.176 † | 0.462 † | 0.305 † | 0.000 † | 0.034 | 0.149 † | 0.430 † | 0.250 † | 0.000 † |
| RESCAL [16] | 0.080 † | 0.339 † | 0.525 † | 0.392 † | 0.244 † | 0.047 † | 0.317 † | 0.469 † | 0.377 † | 0.236 † |
| HolE [18] | 0.155 | 0.231 | 0.523 | 0.254 | 0.099 | 0.039 | 0.112 | 0.311 | 0.120 | 0.033 |
| ComplEx [19] | 0.034 † | 0.237 † | 0.491 † | 0.403 † | 0.058 † | 0.033 † | 0.201 † | 0.484 † | 0.372 † | 0.011 † |
| Analogy [47] | 0.037 † | 0.301 † | 0.496 † | 0.429 † | 0.160 † | 0.037 † | 0.277 † | 0.487 † | 0.415 † | 0.130 † |
| TransC [21] | 0.112 ⋇ | 0.420 ⋇ | 0.698 ⋇ | 0.502 ⋇ | 0.298 ⋇ | – | – | – | – | – |
| RotatE [23] | 0.002 | 0.002 | 0.001 | 0.000 | 0.000 | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 |
| EL Embedding [24] | 0.008 | 0.008 | 0.005 | 0.000 | 0.000 | 0.014 | 0.014 | 0.019 | 0.001 | 0.001 |
| CosE | 0.229 | 0.558 | 0.859 | 0.648 | 0.495 | 0.093 | 0.386 | 0.628 | 0.391 | 0.271 |
| CosE | 0.247 | 0.657 | 0.861 | 0.714 | 0.550 | 0.117 | 0.507 | 0.640 | 0.545 | 0.423 |
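The MRR and Hits@N columns in these tables follow the usual link-prediction protocol. The Python sketch below illustrates it; the exact ranking and tie-breaking rules used in the experiments are not stated here. It ranks the correct answer among all candidate concepts by score, and it assumes that a lower score is more plausible. In the raw setting, every other candidate competes. In the filtered setting, candidates that would form another known true axiom are removed before ranking. MRR is the mean of 1/rank, and Hits@N is the fraction of test axioms ranked within the top N. The function names and the toy scores are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple


def rank_of_target(
    scores: Dict[str, float],
    target: str,
    filtered_out: Iterable[str] = (),
) -> int:
    """1-based rank of `target` among candidates; lower score = more plausible (assumed).

    Candidates in `filtered_out` (other known true answers) are ignored, which
    gives the 'filter' setting; passing nothing gives the 'raw' setting.
    """
    skip = set(filtered_out) - {target}
    target_score = scores[target]
    # Count only strictly better candidates, so ties favour the target
    # (an assumption; evaluation protocols differ on tie handling).
    better = sum(
        1
        for cand, s in scores.items()
        if cand != target and cand not in skip and s < target_score
    )
    return better + 1


def mrr_and_hits(
    ranks: List[int], ns: Tuple[int, ...] = (1, 3, 10)
) -> Tuple[float, Dict[int, float]]:
    """Mean reciprocal rank and Hits@N, both as fractions in [0, 1]."""
    mrr = sum(1.0 / r for r in ranks) / len(ranks)
    hits = {n: sum(1 for r in ranks if r <= n) / len(ranks) for n in ns}
    return mrr, hits


if __name__ == "__main__":
    # Toy scores for candidate tails of (Cat, subClassOf, ?).
    scores = {"Animal": 0.2, "Mammal": 0.1, "Plant": 0.5, "Thing": 0.05}
    raw = rank_of_target(scores, "Animal")
    filt = rank_of_target(scores, "Animal", filtered_out={"Mammal", "Thing"})
    print(raw, filt)
    print(mrr_and_hits([raw]), mrr_and_hits([filt]))
```

In the toy example, the correct tail `Animal` is outscored by `Mammal` and `Thing`, so its raw rank is 3. If those two are themselves known true superclasses of `Cat`, its filtered rank becomes 1. This is why the Filter MRR in the tables is never below the Raw MRR.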

| Model | FoodOn MRR (Raw) | FoodOn MRR (Filter) | FoodOn Hits@10 | FoodOn Hits@3 | FoodOn Hits@1 | HeLiS MRR (Raw) | HeLiS MRR (Filter) | HeLiS Hits@10 | HeLiS Hits@3 | HeLiS Hits@1 |
|---|---|---|---|---|---|---|---|---|---|---|
| TransE [13] | 0.011 | 0.012 | 0.020 | 0.011 | 0.006 | 0.037 | 0.037 | 0.078 | 0.028 | 0.010 |
| TransH [14] | 0.010 | 0.012 | 0.020 | 0.013 | 0.006 | 0.026 | 0.026 | 0.050 | 0.020 | 0.006 |
| TransR [15] | 0.008 | 0.008 | 0.013 | 0.008 | 0.004 | 0.025 | 0.026 | 0.056 | 0.016 | 0.004 |
| TransD [28] | 0.003 | 0.003 | 0.007 | 0.004 | 0.000 | 0.008 | 0.008 | 0.018 | 0.005 | 0.000 |
| RESCAL [16] | 0.001 | 0.001 | 0.004 | 0.000 | 0.000 | 0.003 | 0.003 | 0.004 | 0.003 | 0.001 |
| HolE [18] | 0.002 | 0.002 | 0.007 | 0.001 | 0.000 | 0.035 | 0.035 | 0.078 | 0.024 | 0.008 |
| ComplEx [19] | 0.001 | 0.001 | 0.003 | 0.000 | 0.000 | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 |
| RotatE [23] | 0.009 | 0.009 | 0.017 | 0.008 | 0.004 | 0.023 | 0.023 | 0.033 | 0.026 | 0.012 |
| EL Embedding [24] | 0.001 | 0.001 | 0.002 | 0.001 | 0.000 | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 |
| CosE | 0.032 | 0.037 | 0.080 | 0.058 | 0.009 | 0.077 | 0.077 | 0.144 | 0.079 | 0.034 |
| CosE | 0.034 | 0.038 | 0.083 | 0.057 | 0.011 | 0.080 | 0.080 | 0.152 | 0.081 | 0.035 |

| Model | YAGO-On MRR (Raw) | YAGO-On MRR (Filter) | YAGO-On Hits@10 | YAGO-On Hits@3 | YAGO-On Hits@1 | FMA MRR (Raw) | FMA MRR (Filter) | FMA Hits@10 | FMA Hits@3 | FMA Hits@1 |
|---|---|---|---|---|---|---|---|---|---|---|
| TransE [13] | 0.375 † | 0.375 † | 0.722 † | 0.472 † | 0.179 † | 0.113 † | 0.113 † | 0.260 † | 0.110 † | 0.035 † |
| TransH [14] | 0.377 † | 0.377 † | 0.494 † | 0.179 † | 0.179 † | 0.110 † | 0.110 † | 0.295 † | 0.080 † | 0.040 † |
| TransR [15] | 0.063 † | 0.063 † | 0.216 † | 0.020 † | 0.000 † | 0.010 † | 0.010 † | 0.050 † | 0.050 † | 0.050 † |
| TransD [28] | 0.011 † | 0.011 † | 0.018 † | 0.008 † | 0.000 † | 0.050 † | 0.050 † | 0.050 † | 0.000 † | 0.000 † |
| RESCAL [16] | 0.069 † | 0.069 † | 0.143 † | 0.073 † | 0.035 † | 0.009 † | 0.009 † | 0.010 † | 0.005 † | 0.005 † |
| HolE [18] | 0.225 | 0.225 | 0.434 | 0.229 | 0.126 | 0.002 | 0.002 | 0.000 | 0.000 | 0.000 |
| ComplEx [19] | 0.001 † | 0.003 † | 0.002 † | 0.001 † | 0.001 † | 0.003 † | 0.003 † | 0.010 † | 0.000 † | 0.000 † |
| Analogy [47] | 0.003 † | 0.003 † | 0.035 † | 0.003 † | 0.003 † | 0.050 † | 0.050 † | 0.050 † | 0.050 † | 0.050 † |
| RotatE [23] | 0.001 | 0.001 | 0.000 | 0.000 | 0.000 | 0.002 | 0.002 | 0.002 | 0.000 | 0.000 |
| EL Embedding [24] | 0.001 | 0.001 | 0.000 | 0.000 | 0.000 | 0.001 | 0.001 | 0.000 | 0.000 | 0.000 |
| CosE | 0.393 | 0.393 | 0.724 | 0.471 | 0.226 | 0.128 | 0.128 | 0.295 | 0.165 | 0.030 |
| CosE | 0.397 | 0.397 | 0.726 | 0.458 | 0.240 | 0.145 | 0.145 | 0.290 | 0.140 | 0.065 |

| Model | FoodOn MRR (Raw) | FoodOn MRR (Filter) | FoodOn Hits@10 | FoodOn Hits@3 | FoodOn Hits@1 | HeLiS MRR (Raw) | HeLiS MRR (Filter) | HeLiS Hits@10 | HeLiS Hits@3 | HeLiS Hits@1 |
|---|---|---|---|---|---|---|---|---|---|---|
| TransE [13] | 0.015 | 0.015 | 0.024 | 0.014 | 0.009 | 0.072 | 0.072 | 0.154 | 0.052 | 0.019 |
| TransH [14] | 0.011 | 0.014 | 0.025 | 0.015 | 0.006 | 0.050 | 0.050 | 0.097 | 0.037 | 0.012 |
| TransR [15] | 0.011 | 0.011 | 0.017 | 0.010 | 0.006 | 0.050 | 0.050 | 0.111 | 0.032 | 0.008 |
| TransD [28] | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 | 0.016 | 0.016 | 0.035 | 0.011 | 0.000 |
| RESCAL [16] | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 | 0.005 | 0.005 | 0.007 | 0.005 | 0.003 |
| HolE [18] | 0.004 | 0.004 | 0.013 | 0.002 | 0.000 | 0.070 | 0.070 | 0.155 | 0.048 | 0.016 |
| ComplEx [19] | 0.001 | 0.001 | 0.000 | 0.000 | 0.000 | 0.001 | 0.001 | 0.000 | 0.000 | 0.000 |
| RotatE [23] | 0.017 | 0.017 | 0.033 | 0.016 | 0.017 | 0.046 | 0.046 | 0.065 | 0.051 | 0.024 |
| EL Embedding [24] | 0.001 | 0.001 | 0.001 | 0.001 | 0.000 | 0.001 | 0.001 | 0.000 | 0.000 | 0.000 |
| CosE | 0.038 | 0.038 | 0.074 | 0.041 | 0.017 | 0.152 | 0.152 | 0.286 | 0.155 | 0.068 |
| CosE | 0.040 | 0.040 | 0.079 | 0.036 | 0.021 | 0.158 | 0.158 | 0.300 | 0.159 | 0.071 |

| Model | YAGO-On MRR (Raw) | YAGO-On MRR (Filter) | YAGO-On Hits@10 | YAGO-On Hits@3 | YAGO-On Hits@1 | FMA MRR (Raw) | FMA MRR (Filter) | FMA Hits@10 | FMA Hits@3 | FMA Hits@1 |
|---|---|---|---|---|---|---|---|---|---|---|
| TransE [13] | 0.120 † | 0.627 † | 0.846 † | 0.693 † | 0.507 † | 0.122 † | 0.639 † | 0.927 † | 0.741 † | 0.491 † |
| TransH [14] | 0.010 † | 0.014 † | 0.220 † | 0.010 † | 0.003 † | 0.005 † | 0.006 † | 0.002 † | 0.001 † | 0.001 † |
| TransR [15] | 0.132 † | 0.792 † | 0.974 † | 0.848 † | 0.710 † | 0.010 † | 0.010 † | 0.050 † | 0.050 † | 0.050 † |
| TransD [28] | 0.066 † | 0.774 † | 0.906 † | 0.621 † | 0.000 † | 0.066 † | 0.292 † | 0.873 † | 0.488 † | 0.000 † |
| RESCAL [16] | 0.100 † | 0.640 † | 0.920 † | 0.720 † | 0.500 † | 0.094 † | 0.640 † | 0.940 † | 0.750 † | 0.480 † |
| HolE [18] | 0.084 | 0.237 | 0.611 | 0.278 | 0.072 | 0.078 | 0.224 | 0.622 | 0.240 | 0.065 |
| ComplEx [19] | 0.066 † | 0.470 † | 0.970 † | 0.820 † | 0.110 † | 0.003 † | 0.003 † | 0.010 † | 0.000 † | 0.000 † |
| Analogy [47] | 0.074 † | 0.598 † | 0.988 † | 0.854 † | 0.317 † | 0.069 † | 0.557 † | 0.979 † | 0.823 † | 0.264 † |
| RotatE [23] | 0.001 | 0.001 | 0.000 | 0.000 | 0.000 | 0.001 | 0.001 | 0.000 | 0.000 | 0.000 |
| EL Embedding [24] | 0.016 | 0.016 | 0.010 | 0.001 | 0.000 | 0.028 | 0.028 | 0.037 | 0.002 | 0.001 |
| CosE | 0.066 | 0.723 | 0.994 | 0.824 | 0.764 | 0.058 | 0.644 | 0.962 | 0.617 | 0.512 |
| CosE | 0.097 | 0.917 | 0.996 | 0.970 | 0.860 | 0.090 | 0.870 | 0.990 | 0.950 | 0.780 |

| Model | FoodOn MRR (Raw) | FoodOn MRR (Filter) | FoodOn Hits@10 | FoodOn Hits@3 | FoodOn Hits@1 | HeLiS MRR (Raw) | HeLiS MRR (Filter) | HeLiS Hits@10 | HeLiS Hits@3 | HeLiS Hits@1 |
|---|---|---|---|---|---|---|---|---|---|---|
| TransE [13] | 0.007 | 0.007 | 0.015 | 0.007 | 0.004 | 0.001 | 0.002 | 0.003 | 0.002 | 0.000 |
| TransH [14] | 0.010 | 0.010 | 0.015 | 0.011 | 0.006 | 0.002 | 0.002 | 0.003 | 0.002 | 0.000 |
| TransR [15] | 0.005 | 0.005 | 0.010 | 0.005 | 0.002 | 0.001 | 0.001 | 0.002 | 0.001 | 0.000 |
| TransD [28] | 0.005 | 0.006 | 0.014 | 0.009 | 0.001 | 0.001 | 0.001 | 0.002 | 0.000 | 0.000 |
| RESCAL [16] | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 | 0.001 | 0.001 | 0.001 | 0.001 | 0.000 |
| HolE [18] | 0.001 | 0.001 | 0.001 | 0.001 | 0.000 | 0.001 | 0.001 | 0.002 | 0.001 | 0.000 |
| ComplEx [19] | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 |
| RotatE [23] | 0.001 | 0.001 | 0.001 | 0.001 | 0.000 | 0.001 | 0.001 | 0.001 | 0.001 | 0.000 |
| EL Embedding [24] | 0.001 | 0.001 | 0.003 | 0.001 | 0.000 | 0.001 | 0.001 | 0.001 | 0.001 | 0.000 |
| CosE | 0.023 | 0.035 | 0.085 | 0.075 | 0.000 | 0.001 | 0.002 | 0.003 | 0.003 | 0.000 |
| CosE | 0.027 | 0.036 | 0.087 | 0.078 | 0.000 | 0.001 | 0.002 | 0.003 | 0.003 | 0.000 |

| Model | YAGO-On-t MRR (Raw) | YAGO-On-t MRR (Filter) | YAGO-On-t Hits@10 | YAGO-On-t Hits@3 | YAGO-On-t Hits@1 | YAGO-On-s MRR (Raw) | YAGO-On-s MRR (Filter) | YAGO-On-s Hits@10 | YAGO-On-s Hits@3 | YAGO-On-s Hits@1 |
|---|---|---|---|---|---|---|---|---|---|---|
| TransE [13] | 0.064 † | 0.077 † | 0.142 † | 0.070 † | 0.001 † | 0.043 † | 0.369 † | 0.971 † | 0.514 † | 0.080 † |
| TransH [14] | 0.200 † | 0.238 † | 0.309 † | 0.214 † | 0.149 † | 0.001 † | 0.002 † | 0.001 † | 0.000 † | 0.000 † |
| TransR [15] | 0.012 † | 0.013 † | 0.003 † | 0.002 † | 0.001 † | 0.010 † | 0.010 † | 0.001 † | 0.000 † | 0.000 † |
| TransD [28] | 0.008 † | 0.009 † | 0.020 † | 0.001 † | 0.000 † | 0.001 † | 0.181 † | 0.512 † | 0.302 † | 0.000 † |
| RESCAL [16] | 0.016 † | 0.020 † | 0.055 † | 0.015 † | 0.004 † | 0.032 † | 0.166 † | 0.449 † | 0.226 † | 0.039 † |
| HolE [18] | 0.040 | 0.045 | 0.082 | 0.008 | 0.002 | 0.070 | 0.342 | 0.716 | 0.425 | 0.128 |
| ComplEx [19] | 0.001 † | 0.001 † | 0.001 † | 0.000 † | 0.000 † | 0.036 † | 0.253 | 0.743 | 0.439 | 0.000 |
| Analogy [47] | 0.001 † | 0.001 † | 0.001 † | 0.001 † | 0.000 † | 0.043 † | 0.315 † | 0.932 † | 0.538 † | 0.000 † |
| RotatE [23] | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 | 0.002 | 0.002 | 0.001 | 0.000 | 0.000 |
| EL Embedding [24] | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 | 0.003 | 0.003 | 0.075 | 0.017 | 0.003 |
| CosE | 0.203 | 0.260 | 0.403 | 0.246 | 0.177 | 0.038 | 0.322 | 0.983 | 0.554 | 0.000 |
| CosE | 0.207 | 0.284 | 0.408 | 0.261 | 0.218 | 0.038 | 0.323 | 0.992 | 0.557 | 0.000 |

| Head Concept | Relation | CosE | TransE [13] |
|---|---|---|---|
| Taksim_SK_footballers | subClassOf | person, player, site, club, football_player | person, airport, model, peninsula, singer |
| Soccer_clubs_in_the_Greater_Los_Angeles_Area | subClassOf | site, person, player, club, football_player | person, airport, model, singer, writer |

| Tail Concept | Relation | CosE | TransE [13] |
|---|---|---|---|
| Irish_male_models | disjointWith | Filipino_female_models, African_American_models, LGBT_models | LGBT_models, South_African_female_models, American_male_models |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, W.; Zheng, X.; Gao, H.; Ji, Q.; Qi, G. Cosine-Based Embedding for Completing Lightweight Schematic Knowledge in DL-Litecore. Appl. Sci. 2022, 12, 10690. https://doi.org/10.3390/app122010690
