A Novel Adaptive East–West Interface for a Heterogeneous and Distributed SDN Network

Abstract

1. Introduction

2. Overview of Distributed SDN Network and Related Work

3. Proposed East–West Interface for a Heterogeneous and Distributed SDN Network

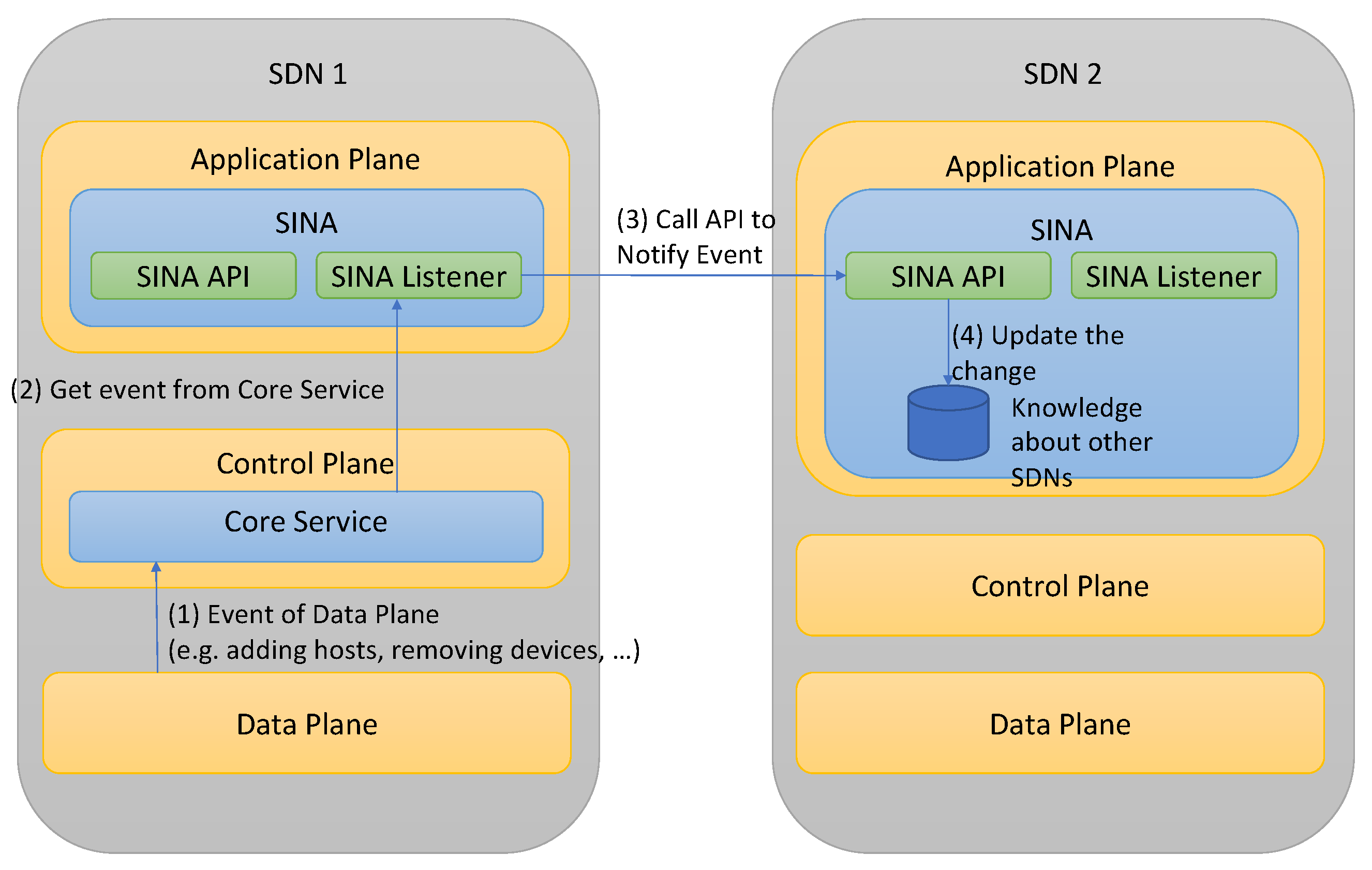

- SINA Listener component: Every network state update in the data plane is transmitted to the Core Service management module as an event. The SINA Listener component, which behaves like a daemon process, listens for and captures these events in the core services of each controller. The captured events are then disseminated to the other SDN controllers.

- SINA API component: This is a set of definitions and protocols used to manage the network state database. The SINA API allows controllers to make their resources accessible. For example, as shown in Figure 2, after capturing an event from the core service, controller one notifies controller two of the event by calling the SINA API component. This component is responsible for maintaining and updating the local network state database.
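The interaction between the two components can be illustrated with a minimal Python sketch. It is not the SINA implementation: the class names, the `NetworkEvent` fields, and the per-key version counter are assumptions made for illustration, and peers are called in-process rather than over the network.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class NetworkEvent:
    """A network state update. Hypothetical shape, not SINA's actual message format."""
    source: str   # name of the controller that captured the event
    key: str      # item that changed, e.g. "link:s1-s2"
    value: str    # new state, e.g. "DOWN"
    version: int  # update counter for this key (assumed monotonic)


@dataclass
class SinaApi:
    """Stand-in for the SINA API component of one controller."""
    database: Dict[str, NetworkEvent] = field(default_factory=dict)

    def update(self, event: NetworkEvent) -> bool:
        """Apply an event to the local network state database.

        Returns False when the database already holds this version or a newer one.
        """
        current = self.database.get(event.key)
        if current is not None and current.version >= event.version:
            return False
        self.database[event.key] = event
        return True


@dataclass
class SinaListener:
    """Stand-in for the SINA Listener component of one controller."""
    name: str
    local_api: SinaApi
    peers: List[SinaApi] = field(default_factory=list)

    def on_core_event(self, key: str, value: str, version: int) -> int:
        """Capture a core-service event, store it locally, and notify every peer.

        Returns the number of peers that accepted the update.
        """
        event = NetworkEvent(self.name, key, value, version)
        self.local_api.update(event)
        return sum(1 for peer_api in self.peers if peer_api.update(event))


# Controller one captures a link failure and notifies controller two.
api_one, api_two = SinaApi(), SinaApi()
listener_one = SinaListener("controller-1", api_one, peers=[api_two])
accepted = listener_one.on_core_event("link:s1-s2", "DOWN", version=1)
```

After the call, `api_two.database` holds the same entry as `api_one.database`, which mirrors the example of controller one notifying controller two.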

3.1. Development of SINA’s Components in ONOS

3.2. Development of SINA on OpenDaylight

3.3. Development of SINA on Faucet

3.4. Openness of SINA for Other SDN Controllers

4. Proposed RL-Based Consistency Algorithm

4.1. Quorum-Inspired Consistency Adaptation Strategy for SINA

- Strong consistency: Strict quorums require that the sum of the write quorum and the read quorum be greater than the total number of controllers: $W + R > N$, where $W$ is the write-quorum size, $R$ is the read-quorum size, and $N$ is the total number of controllers. This consistency model guarantees that a read operation always obtains the latest value of a data item. However, it is costly in terms of network performance because of the high synchronization overhead.

- Eventual consistency: Partial quorums relax this requirement, so the write quorum and the read quorum need not overlap: $W + R \leq N$.
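The two quorum conditions can be checked with a few lines of Python. This is a minimal sketch: the function name and the parameter names `n`, `w`, and `r` (number of controllers, write-quorum size, read-quorum size) are chosen here for illustration.

```python
def classify_quorum(n: int, w: int, r: int) -> str:
    """Return "strong" for a strict quorum (w + r > n), otherwise "eventual"."""
    if not (1 <= w <= n and 1 <= r <= n):
        raise ValueError("quorum sizes must lie between 1 and n")
    return "strong" if w + r > n else "eventual"


# With five controllers, writing to three and reading from three always overlaps.
strict = classify_quorum(n=5, w=3, r=3)
# Writing to two and reading from three may miss the latest write.
partial = classify_quorum(n=5, w=2, r=3)
```

With five controllers, a write quorum of three and a read quorum of three share at least one controller, so a read sees the latest write. A write quorum of two and a read quorum of three can be disjoint.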

4.2. Proposed Reinforcement Learning-Based Consistency Model for SINA

Algorithm 1: Reinforcement learning consistency algorithm for SINA.
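For readers unfamiliar with Q-learning, the following sketch shows generic tabular Q-learning with epsilon-greedy exploration choosing a pair of quorum sizes. It is not Algorithm 1: the single placeholder state, the action set, the hyperparameters, and above all `toy_reward` are assumptions made only so that the example runs.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Action = Tuple[int, int]  # (write-quorum size, read-quorum size)


def toy_reward(n_controllers: int, action: Action) -> float:
    """Hypothetical reward: penalize contacted replicas and non-overlapping quorums."""
    w, r = action
    overhead = (w + r) / (2 * n_controllers)
    staleness_penalty = 0.0 if w + r > n_controllers else 1.0
    return -(overhead + staleness_penalty)


class QuorumQLearner:
    """Tabular Q-learning over quorum sizes with epsilon-greedy exploration."""

    def __init__(self, n_controllers: int, alpha: float = 0.5,
                 gamma: float = 0.5, epsilon: float = 0.1, seed: int = 0):
        sizes = range(1, n_controllers + 1)
        self.actions: List[Action] = [(w, r) for w in sizes for r in sizes]
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.q: Dict[Tuple[str, Action], float] = defaultdict(float)
        self.rng = random.Random(seed)  # seeded so that runs are repeatable

    def greedy(self, state: str) -> Action:
        """Return the action with the highest Q-value in this state."""
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def choose(self, state: str) -> Action:
        """Explore with probability epsilon, otherwise exploit."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return self.greedy(state)

    def update(self, state: str, action: Action, reward: float, next_state: str) -> None:
        """Standard Q-learning update toward reward + gamma * max Q(next_state, .)."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        td_error = reward + self.gamma * best_next - self.q[(state, action)]
        self.q[(state, action)] += self.alpha * td_error


def train(n_controllers: int = 3, steps: int = 5000) -> Action:
    """Train on the toy reward and return the learned greedy quorum pair."""
    learner = QuorumQLearner(n_controllers)
    state = "steady"  # single placeholder state; a real agent would observe the network
    for _ in range(steps):
        action = learner.choose(state)
        learner.update(state, action, toy_reward(n_controllers, action), state)
    return learner.greedy(state)


best_w, best_r = train()
```

Under this toy reward the agent settles on the cheapest quorum pair that still overlaps. A realistic reward would instead be built from measured overhead, delay, and staleness.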

4.3. Proposed Adaptive Architecture

5. Performance Evaluation

- SINA east–west interface validation: In the first step, we examine the correctness of the SINA framework under the most straightforward consistency policy, active replication based on a broadcasting mechanism, in which every update in each controller is sent to all other controllers. We also compare SINA’s performance with the east–west interfaces of three widely used frameworks: ONOS, OpenDaylight, and Faucet.

- SINA with adaptive consistency model: In the second step, we evaluate the performance of the SINA architecture in Figure 5 when it applies the quorum-based replication mechanism using the Q-Learning algorithm.

5.1. SINA East–West Interface Validation

5.1.1. Testbed Setup

- AARNet was initially established between the University of Melbourne in Australia, the Commonwealth Scientific and Industrial Research Organization (CSIRO) in all Australian state capitals, and the Australian National University in Canberra. AARNet consists of 13 network switches and hosts.

- The Abilene network was created by the Internet2 community and has 11 network switches and hosts.

- The AMRES network is the national research and education network of Serbia. It has 21 network switches and hosts.

- The NORDU network, located in the Nordic countries, consists of 5 network switches and their hosts.

- One virtual machine emulates the network topology using Mininet.

- The 12 remaining virtual machines host the SDN controllers, one controller per virtual machine. The largest experiment, which deploys 12 controllers, therefore uses all 12 of them.

- Data transmission (bits): The amount of update information that an SDN controller broadcasts to the other controllers for each change of network topology.

- Latency (seconds): The period between the sending of the notifications and the receipt of the last acknowledgment from the destination controllers.

- Overhead (%): The ratio of controller packets to total packets.
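The three metrics can be computed from raw measurements as in the sketch below. The function names and input shapes are assumptions. In particular, treating data transmission as the summed size of the broadcast messages is one plausible reading of the definition, not something the text specifies.

```python
from typing import Iterable


def data_transmission_bits(message_sizes_bytes: Iterable[int]) -> int:
    """Total size, in bits, of the update messages broadcast for one topology change."""
    return 8 * sum(message_sizes_bytes)


def latency_seconds(sent_at: float, ack_times: Iterable[float]) -> float:
    """Time from sending the notifications to receiving the last acknowledgment."""
    return max(ack_times) - sent_at


def overhead_percent(controller_packets: int, total_packets: int) -> float:
    """Share of controller packets among all captured packets, in percent."""
    if total_packets <= 0:
        raise ValueError("total_packets must be positive")
    return 100.0 * controller_packets / total_packets


# One topology change broadcast to three peers (made-up numbers).
bits = data_transmission_bits([120, 120, 120])
delay = latency_seconds(sent_at=10.00, ack_times=[10.02, 10.05, 10.03])
overhead = overhead_percent(controller_packets=25, total_packets=200)
```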

5.1.2. Experimental Results

5.2. SINA with Adaptive Consistency Model

5.2.1. Testbed Setup

5.2.2. Performance Metrics

- QoS-related metrics: The QoS-related metrics are based on overhead and delay: WriteOverhead, ReadOverhead, WriteDelay, and ReadDelay. WriteOverhead and ReadOverhead are the numbers of inter-controller packets sent through TCP port 8787 for the write and the read commands, respectively. WriteDelay is the time the system takes to disseminate a topology update message to the controllers. ReadDelay is the sum of the latency for sending topology requests to the controllers and the latency for receiving all topology data from the requested controllers.

- Consistency-related metrics: Two staleness metrics are used: the time-based staleness (t_staleness) and the version-based staleness (v_staleness) [42,46]. The former is the period between the last update of the local topology database and the moment the controller performs a read operation on it. The latter is the difference between the real version of the local database and the version it holds at the moment the controller performs the read operation.
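The two staleness metrics reduce to simple differences, as the following sketch shows. The function and parameter names are illustrative, and the timestamps and version numbers in the example are made up.

```python
def t_staleness(last_update_time: float, read_time: float) -> float:
    """Time elapsed between the last local database update and the read."""
    if read_time < last_update_time:
        raise ValueError("the read cannot precede the last update")
    return read_time - last_update_time


def v_staleness(real_version: int, local_version_at_read: int) -> int:
    """Number of versions by which the local database lags at read time."""
    if local_version_at_read > real_version:
        raise ValueError("the local version cannot be ahead of the real version")
    return real_version - local_version_at_read


# A read at t = 103.5 s on a database last updated at t = 100.0 s,
# holding version 9 while the real version is 12.
time_lag = t_staleness(last_update_time=100.0, read_time=103.5)
version_lag = v_staleness(real_version=12, local_version_at_read=9)
```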

5.2.3. Experimental Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Prajapati, A.; Sakadasariya, A.; Patel, J. Software defined network: Future of networking. In Proceedings of the 2018 2nd International Conference on Inventive Systems and Control (ICISC), Coimbatore, India, 19–20 January 2018; pp. 1351–1354.

2. Mokhtar, H.; Di, X.; Zhou, Y.; Hassan, A.; Ma, Z.; Musa, S. Multiple-level threshold load balancing in distributed SDN controllers. Comput. Netw. 2021, 198, 108369.

3. Midha, S.; Tripathi, K. Extended Security in Heterogeneous Distributed SDN Architecture. In Advances in Communication and Computational Technology; Springer: Berlin/Heidelberg, Germany, 2021; pp. 991–1002.

4. Vizarreta, P.; Trivedi, K.; Mendiratta, V.; Kellerer, W.; Mas–Machuca, C. DASON: Dependability Assessment Framework for Imperfect Distributed SDN Implementations. IEEE Trans. Netw. Serv. Manag. 2020, 17, 652–667.

5. Li, M.; Wang, X.; Tong, H.; Liu, T.; Tian, Y. SPARC: Towards a scalable distributed control plane architecture for protocol-oblivious SDN networks. In Proceedings of the 2019 28th International Conference on Computer Communication and Networks (ICCCN), Valencia, Spain, 29 July–1 August 2019; pp. 1–9.

6. Beiruti, M.A.; Ganjali, Y. Load migration in distributed sdn controllers. In Proceedings of the NOMS 2020—2020 IEEE/IFIP Network Operations and Management Symposium, Budapest, Hungary, 20–24 April 2020; pp. 1–9.

7. Li, Z.; Hu, Y.; Hu, T.; Wei, P. Dynamic SDN controller association mechanism based on flow characteristics. IEEE Access 2019, 7, 92661–92671.

8. Sood, K.; Karmakar, K.K.; Yu, S.; Varadharajan, V.; Pokhrel, S.R.; Xiang, Y. Alleviating Heterogeneity in SDN-IoT Networks to Maintain QoS and Enhance Security. IEEE Internet Things J. 2019, 7, 5964–5975.

9. Moeyersons, J.; Maenhaut, P.J.; Turck, F.; Volckaert, B. Pluggable SDN framework for managing heterogeneous SDN networks. Int. J. Netw. Manag. 2020, 30, e2087.

10. Prasad, J.R.; Bendale, S.P.; Prasad, R.S. Semantic Internet of Things (IoT) Interoperability Using Software Defined Network (SDN) and Network Function Virtualization (NFV). In Semantic IoT: Theory and Applications. Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2021; Volume 941, pp. 399–415.

11. Brockelsby, W.; Dutta, R. Traffic Analysis in Support of Hybrid SDN Campus Architectures for Enhanced Cybersecurity. In Proceedings of the 2021 24th Conference on Innovation in Clouds, Internet and Networks and Workshops (ICIN), Paris, France, 1–4 March 2021; pp. 41–48.

12. Adedokun, E.; Adekale, A. Development of a Modified East–West Interface for Distributed Control Plane Network. Arid. Zone J. Eng. Technol. Environ. 2019, 15, 242–252.

13. Gerola, M.; Lucrezia, F.; Santuari, M.; Salvadori, E.; Ventre, P.L.; Salsano, S.; Campanella, M. Icona: A peer-to-peer approach for software defined wide area networks using onos. In Proceedings of the 2016 Fifth European Workshop on Software-Defined Networks (EWSDN), Den Haag, The Netherlands, 10–11 October 2016; pp. 37–42.

14. Almadani, B.; Beg, A.; Mahmoud, A. DSF: A Distributed SDN Control Plane Framework for the East/West Interface. IEEE Access 2021, 9, 26735–26754.

15. Yu, H.; Qi, H.; Li, K. WECAN: An efficient west–east control associated network for large-scale SDN systems. Mob. Netw. Appl. 2020, 25, 114–124.

16. Jin, X.; Gossels, J.; Rexford, J.; Walker, D. CoVisor: A Compositional Hypervisor for Software-Defined Networks. In Proceedings of the 12th USENIX Symposium on Networked Systems Design and Implementation (NSDI 15), Oakland, CA, USA, 4–6 May 2015; pp. 87–101.

17. Yang, G.; Yu, B.y.; Jin, H.; Yoo, C. Libera for programmable network virtualization. IEEE Commun. Mag. 2020, 58, 38–44.

18. Ahmad, S.; Mir, A.H. Scalability, consistency, reliability and security in sdn controllers: A survey of diverse sdn controllers. J. Netw. Syst. Manag. 2021, 29, 9.

19. Gao, K.; Nojima, T.; Yu, H.; Yang, Y.R. Trident: Toward Distributed Reactive SDN Programming With Consistent Updates. IEEE J. Sel. Areas Commun. 2020, 38, 1322–1334.

20. Panda, A.; Scott, C.; Ghodsi, A.; Koponen, T.; Shenker, S. Cap for networks. In Proceedings of the Second ACM SIGCOMM Workshop on Hot Topics in Software Defined Networking, Hong Kong, China, 16 August 2013; pp. 91–96.

21. Botelho, F.; Ribeiro, T.A.; Ferreira, P.; Ramos, F.M.; Bessani, A. Design and implementation of a consistent data store for a distributed SDN control plane. In Proceedings of the 2016 12th European Dependable Computing Conference (EDCC), Gothenburg, Sweden, 5–9 September 2016; pp. 169–180.

22. Aslan, M.; Matrawy, A. Adaptive consistency for distributed SDN controllers. In Proceedings of the 2016 17th International Telecommunications Network Strategy and Planning Symposium (Networks), Montreal, QC, Canada, 26–28 September 2016; pp. 150–157.

23. Zhang, T.; Giaccone, P.; Bianco, A.; De Domenico, S. The role of the inter-controller consensus in the placement of distributed SDN controllers. Comput. Commun. 2017, 113, 1–13.

24. Benamrane, F.; Benaini, R. An East–West interface for distributed SDN control plane: Implementation and evaluation. Comput. Electr. Eng. 2017, 57, 162–175.

25. Tootoonchian, A.; Ganjali, Y. Hyperflow: A distributed control plane for openflow. In Proceedings of the 2010 Internet Network Management Conference on Research on Enterprise Networking, San Jose, CA, USA, 27 April 2010; Volume 3.

26. Gude, N.; Koponen, T.; Pettit, J.; Pfaff, B.; Casado, M.; McKeown, N.; Shenker, S. NOX: Towards an operating system for networks. ACM SIGCOMM Comput. Commun. Rev. 2008, 38, 105–110.

27. Stribling, J.; Sovran, Y.; Zhang, I.; Pretzer, X.; Li, J.; Kaashoek, M.F.; Morris, R.T. Flexible, Wide-Area Storage for Distributed Systems with WheelFS. In Proceedings of the NSDI, Boston, MA, USA, 22–24 April 2009; Volume 9, pp. 43–58.

28. ONF. Atomix: A Reactive Java Framework for Building Fault-Tolerant Distributed Systems. Available online: https://atomix.io (accessed on 15 February 2021).

29. Koponen, T.; Casado, M.; Gude, N.; Stribling, J.; Poutievski, L.; Zhu, M.; Ramanathan, R.; Iwata, Y.; Inoue, H.; Hama, T.; et al. Onix: A distributed control platform for large-scale production networks. In Proceedings of the OSDI, Vancouver, BC, Canada, 4–6 October 2010; Volume 10, pp. 1–6.

30. Medved, J.; Varga, R.; Tkacik, A.; Gray, K. OpenDaylight: Towards a model-driven sdn controller architecture. In Proceedings of the IEEE International Symposium on a World of Wireless, Mobile and Multimedia Networks 2014, Sydney, NSW, Australia, 19 June 2014; pp. 1–6.

31. Akka: Build Powerful Reactive, Concurrent, and Distributed Applications More Easily. Available online: https://www.lightbend.com (accessed on 6 April 2021).

32. Prometheus. Prometheus—Monitoring System & Time Series Database. Available online: https://prometheus.io/ (accessed on 17 May 2021).

33. Hassas Yeganeh, S.; Ganjali, Y. Kandoo: A framework for efficient and scalable offloading of control applications. In Proceedings of the First Workshop on Hot Topics in Software Defined Networks, Helsinki, Finland, 13 August 2012; pp. 19–24.

34. Dixit, A.; Hao, F.; Mukherjee, S.; Lakshman, T.; Kompella, R.R. ElastiCon: An elastic distributed SDN controller. In Proceedings of the 2014 ACM/IEEE Symposium on Architectures for Networking and Communications Systems (ANCS), Marina del Rey, CA, USA, 20–21 October 2014; pp. 17–27.

35. Nguyen, H.N.; Tran, H.A.; Souihi, S. SINA: An SDN Inter-Clusters’ Network Application. Available online: https://gitlab.com/souihi/sina (accessed on 3 May 2021).

36. Berde, P.; Gerola, M.; Hart, J.; Higuchi, Y.; Kobayashi, M.; Koide, T.; Lantz, B.; O’Connor, B.; Radoslavov, P.; Snow, W.; et al. ONOS: Towards an open, distributed SDN OS. In Proceedings of the Third Workshop on Hot Topics in Software Defined Networking, Chicago, IL, USA, 22 August 2014; pp. 1–6.

37. Bailey, J.; Stuart, S. Faucet: Deploying SDN in the enterprise. Commun. ACM 2016, 60, 45–49.

38. Bjorklund, M. YANG—A Data Modeling Language for the Network Configuration Protocol (NETCONF); IETF RFC 6020, 2010. Available online: https://www.rfc-editor.org/info/rfc6020 (accessed on 9 February 2022).

39. Asadollahi, S.; Goswami, B.; Sameer, M. Ryu controller’s scalability experiment on software defined networks. In Proceedings of the 2018 IEEE International Conference on Current Trends in Advanced Computing (ICCTAC), Bangalore, India, 1–2 February 2018; pp. 1–5.

40. Mohammed, S.H.; Jasim, A.D. Evaluation of firewall and load balance in fat-tree topology based on floodlight controller. Indones. J. Electr. Eng. Comput. Sci. 2020, 17, 1157–1164.

41. Khan, M.A.; Goswami, B.; Asadollahi, S. Data visualization of software-defined networks during load balancing experiment using floodlight controller. In Data Visualization; Springer: Singapore, 2020; pp. 161–179.

42. Bailis, P.; Venkataraman, S.; Franklin, M.J.; Hellerstein, J.M.; Stoica, I. Probabilistically bounded staleness for practical partial quorums. arXiv 2012, arXiv:1204.6082.

43. Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

44. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

45. Brakmo, L.S.; O’Malley, S.W.; Peterson, L.L. TCP Vegas: New techniques for congestion detection and avoidance. In Proceedings of the Conference on Communications Architectures, Protocols and Applications, London, UK, 31 August–2 September 1994; pp. 24–35.

46. Bailis, P.; Venkataraman, S.; Franklin, M.J.; Hellerstein, J.M.; Stoica, I. Quantifying eventual consistency with PBS. VLDB J. 2014, 23, 279–302.

47. Gomes, E.R.; Kowalczyk, R. Dynamic analysis of multiagent q-learning with epsilon-greedy exploration. In Proceedings of the 26th International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009.

48. Jawaharan, R.; Mohan, P.M.; Das, T.; Gurusamy, M. Empirical evaluation of sdn controllers using mininet/wireshark and comparison with cbench. In Proceedings of the 2018 27th International Conference on Computer Communication and Networks (ICCCN), Hangzhou, China, 30 July–2 August 2018; pp. 1–2.

49. Klassen, F. TcpReplay. Available online: https://tcpreplay.appneta.com/ (accessed on 19 July 2021).

50. Haßlinger, G.; Hohlfeld, O. The Gilbert-Elliott model for packet loss in real time services on the Internet. In Proceedings of the 14th GI/ITG Conference-Measurement, Modelling and Evaluation of Computer and Communication Systems, Dortmund, Germany, 31 March–2 April 2008; pp. 1–15.

| Controller | Architecture | | Design Type | | Scalability Level | Consistency Level | | | Language |
|---|---|---|---|---|---|---|---|---|---|
| | Physically Centralized | Physically Distributed | Flat | Hierarchical | | Strong | Eventual | Weak | |
| Hyperflow | 🗸 | 🗸 | High | 🗸 | C++ | ||||
| POX | 🗸 | Weak | 🗸 | Python | |||||
| NOX | 🗸 | Weak | 🗸 | Python | |||||
| ONOS | 🗸 | 🗸 | Very high | 🗸 | 🗸 | Java | |||
| ONIX | 🗸 | 🗸 | Very high | 🗸 | 🗸 | Python C | |||
| OpenDaylight | 🗸 | 🗸 | Very high | 🗸 | Java | ||||
| Faucet | 🗸 | 🗸 | High | 🗸 | Python | ||||
| Kandoo | 🗸 | 🗸 | Very high | 🗸 | Python, C++, C | ||||

| Network | Pr | Nordu 1989 | | | Abilene | | | Aarnet | | | Amres | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | Low. Lim. | Up. Lim. | Mean | Low. Lim. | Up. Lim. | Mean | Low. Lim. | Up. Lim. | Mean | Low. Lim. | Up. Lim. | Mean |
| 6 diff. | DT | 2981.3 | 4251.3 | 3616.3 | 7372.1 | 9145.5 | 8258.8 | 9186.6 | 12,936 | 11,061.3 | 17,887.4 | 19,061 | 18,474.2 |
| | Ltc | 0.011 | 0.051 | 0.031 | 0.03 | 0.078 | 0.054 | 0.044 | 0.066 | 0.055 | 0.081 | 0.111 | 0.096 |
| 6 ONOS | DT | 3075.2 | 4764.6 | 3919.9 | 9181 | 9945.8 | 9563.4 | 10,710.6 | 13,448 | 12,079.3 | 13,107 | 17,680 | 15,393.5 |
| | Ltc | 0.029 | 0.047 | 0.038 | 0.033 | 0.087 | 0.06 | 0.073 | 0.085 | 0.079 | 0.063 | 0.167 | 0.115 |
| 6 ODL | DT | 2301.2 | 6014.2 | 4157.7 | 9633.8 | 10,072.6 | 9853.2 | 12,503.6 | 14,572 | 13,537.8 | 18,321.6 | 22,623 | 20,472.3 |
| | Ltc | 0.043 | 0.081 | 0.062 | 0.028 | 0.078 | 0.053 | 0.087 | 0.105 | 0.096 | 0.103 | 0.145 | 0.124 |
| 6 Faucet | DT | 3532 | 4113.8 | 3822.9 | 9716.6 | 10,258.2 | 9987.4 | 10,509.2 | 13,991 | 12,250.1 | 16,108.6 | 22,271 | 19,189.8 |
| | Ltc | 0.013 | 0.051 | 0.032 | 0.022 | 0.084 | 0.053 | 0.025 | 0.071 | 0.048 | 0.081 | 0.095 | 0.088 |
| 9 diff. | DT | 3214.5 | 5004.3 | 4109.4 | 11,995.5 | 13,825.3 | 12,910.4 | 10,487.6 | 15,964 | 13,225.8 | 22,114 | 24,676 | 23,395 |
| | Ltc | 0.034 | 0.042 | 0.038 | 0.034 | 0.088 | 0.061 | 0.056 | 0.084 | 0.07 | 0.12 | 0.136 | 0.128 |
| 9 ONOS | DT | 5204.8 | 8107.6 | 6656.2 | 11,610.2 | 12,056.8 | 11,833.5 | 12,636.8 | 13,253 | 12,944.9 | 17,453 | 19,472 | 18,462.5 |
| | Ltc | 0.019 | 0.063 | 0.041 | 0.052 | 0.076 | 0.064 | 0.067 | 0.137 | 0.102 | 0.124 | 0.162 | 0.143 |
| 9 ODL | DT | 3793.6 | 5384.8 | 4589.2 | 10,489.8 | 12,534.8 | 11,512.3 | 13,621 | 15,726 | 14,673.5 | 22,144.4 | 23,362 | 22,753.2 |
| | Ltc | 0.049 | 0.077 | 0.063 | 0.049 | 0.077 | 0.063 | 0.107 | 0.143 | 0.125 | 0.093 | 0.131 | 0.112 |
| 9 Faucet | DT | 3930.2 | 6152.4 | 5041.3 | 9680.4 | 10,245.6 | 9963 | 11,229.6 | 15,404 | 13,316.8 | 18,595.6 | 21,983 | 20,289.3 |
| | Ltc | 0.023 | 0.055 | 0.039 | 0.0445 | 0.0535 | 0.049 | 0.049 | 0.067 | 0.058 | 0.101 | 0.159 | 0.13 |
| 12 diff. | DT | 2441.9 | 6014.3 | 4228.1 | 9916.9 | 14,352.3 | 12,134.6 | 12,226 | 13,234 | 12,730 | 18,922 | 20,701 | 19,811.5 |
| | Ltc | 0.082 | 0.092 | 0.087 | 0.077 | 0.091 | 0.084 | 0.125 | 0.141 | 0.133 | 0.105 | 0.177 | 0.141 |
| 12 ONOS | DT | 3558.8 | 6825.4 | 5192.1 | 12,694 | 13,894.2 | 13,294.1 | 11,902.6 | 13,280 | 12,591.3 | 16,710.6 | 19,784 | 18,247.3 |
| | Ltc | 0.07 | 0.11 | 0.09 | 0.05 | 0.132 | 0.091 | 0.117 | 0.135 | 0.126 | 0.175 | 0.193 | 0.184 |
| 12 ODL | DT | 2725 | 6279.4 | 4502.2 | 13,039.5 | 15,437.1 | 14,238.3 | 10,348.4 | 12,148 | 11,248.2 | 16,884.2 | 21,364 | 19,124.1 |
| | Ltc | 0.057 | 0.085 | 0.071 | 0.059 | 0.143 | 0.101 | 0.157 | 0.225 | 0.191 | 0.1508 | 0.1712 | 0.161 |
| 12 Faucet | DT | 6158.7 | 7425.1 | 6791.9 | 8765.8 | 12,440 | 10,602.9 | 13,756.6 | 14,084 | 13,920.3 | 17,272.4 | 21,876 | 19,574.2 |
| | Ltc | 0.017 | 0.047 | 0.032 | 0.077 | 0.093 | 0.085 | 0.065 | 0.097 | 0.081 | 0.14 | 0.158 | 0.149 |
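As a reading aid for the table, the sketch below checks how the three columns of one row relate, assuming that Low. Lim. and Up. Lim. bound a symmetric interval around Mean (the table itself does not say which kind of interval). The helper names are illustrative, and the values are the DT entries of the first row for Nordu 1989.

```python
def midpoint(low: float, up: float) -> float:
    """Center of the interval [low, up]."""
    return (low + up) / 2


def half_width(low: float, up: float) -> float:
    """Distance from the center of the interval to either limit."""
    return (up - low) / 2


# DT limits for "6 diff." on Nordu 1989, copied from the table.
low_limit, up_limit, reported_mean = 2981.3, 4251.3, 3616.3

center = round(midpoint(low_limit, up_limit), 1)
margin = round(half_width(low_limit, up_limit), 1)
```

For this row the midpoint of the limits equals the reported mean, so the entry can be read as 3616.3 ± 635.0.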

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hoang, N.-T.; Nguyen, H.-N.; Tran, H.-A.; Souihi, S. A Novel Adaptive East–West Interface for a Heterogeneous and Distributed SDN Network. Electronics 2022, 11, 975. https://doi.org/10.3390/electronics11070975
