The use of social robots has significantly expanded over the past decade, finding applications in various real-world settings such as healthcare, education, and customer service [4]. In healthcare, social robots like Paro and Pepper provide companionship and support to patients, particularly the elderly and those with cognitive impairments [5,6]. In education, robots like NAO and Robovie enhance learning experiences and engage students in interactive activities [7,8]. In the customer service sector, robots such as Pepper and various hotel concierge robots assist customers and improve service efficiency [9]. These examples highlight the growing acceptance of social robots and their utility in enhancing human–robot interactions across diverse environments. The Shadow robot, designed explicitly for human-following tasks, is particularly useful in care environments and customer or visitor service settings.

For social robots to be effective, they must possess several key characteristics. Firstly, they need robust human detection and interaction capabilities to understand and respond to human cues accurately [

10]. Secondly, they should exhibit adaptive behaviors to cater to user needs and preferences, ensuring a personalized interaction experience [

2]. Additionally, they must be designed with safety and reliability, mainly when operating near humans, to prevent accidents and ensure user trust [

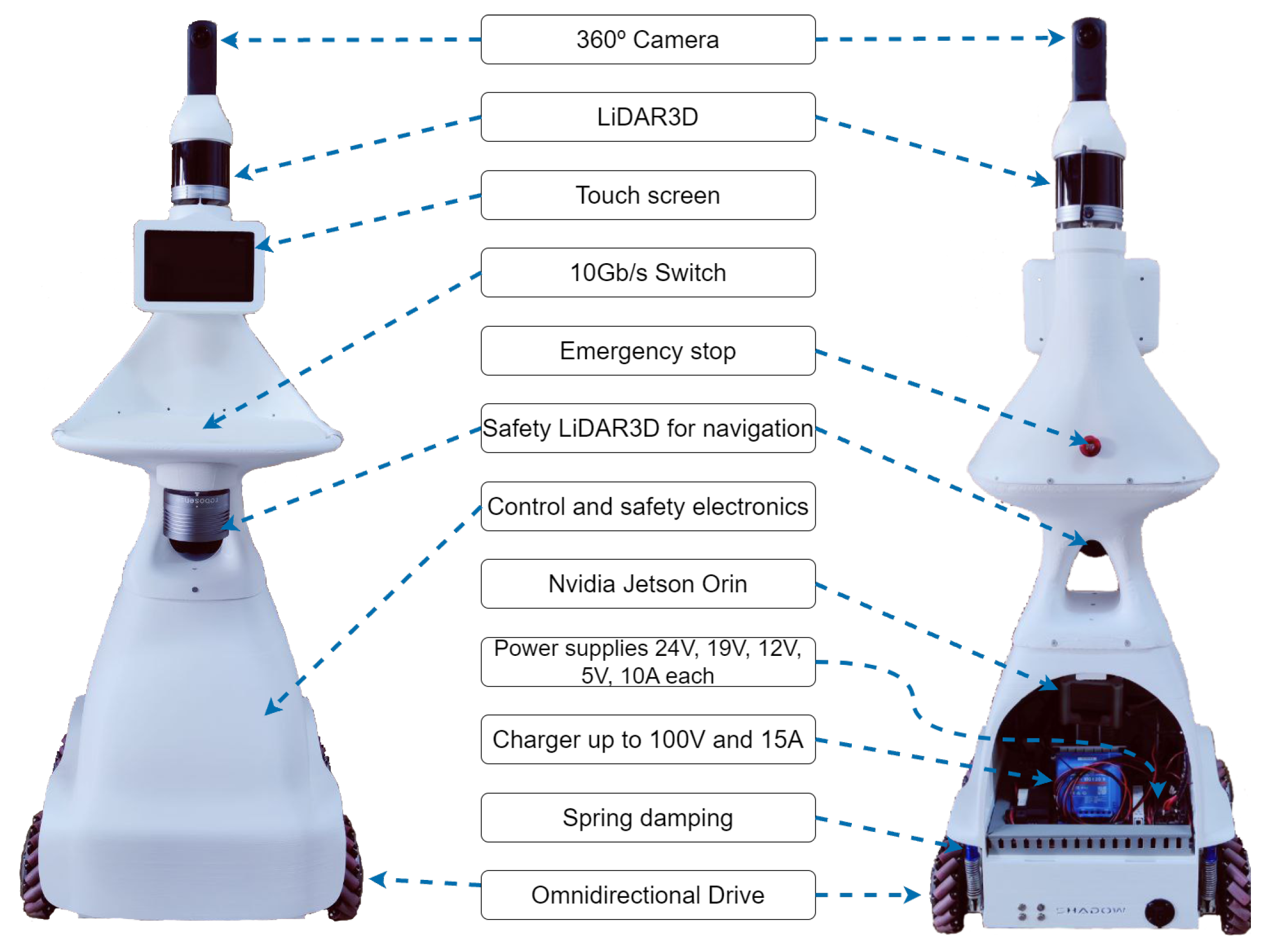

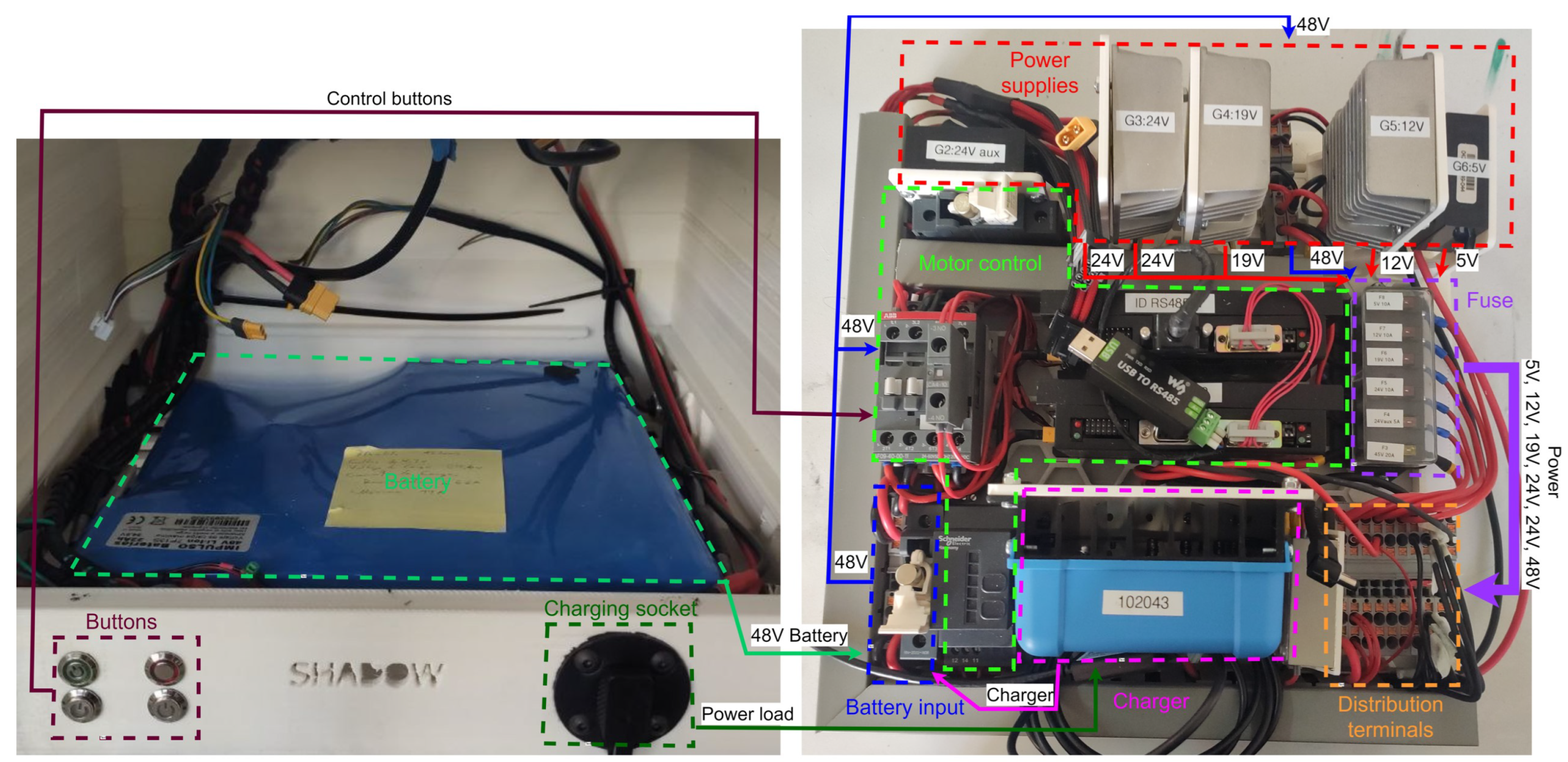

11]. These characteristics are essential for successfully integrating social robots into daily life, as they directly impact the robot’s ability to interact meaningfully and safely with humans. Our Shadow robot is equipped with various sensors, including 360

o cameras and 3D LiDAR, along with a Jetson Orin, which enables the execution of specific algorithms for human detection, the calculation of safe and socially accepted routes, and interaction with humans in the environment.

Robot Comparison

As part of the design process of Shadow, we analyzed and compared several well-known commercial robots available for purchase and some custom-made robots built in research labs [4]. The comparison shown in Table 1 considers only the functionalities offered by these units and advertised by the manufacturers.

One of the key aspects of Shadow's design is its omnidirectional movement capability, which allows it to move in any direction with great agility. This feature is essential for maneuvering in complex and narrow environments. In contrast, most of the other compared robots, such as Morphia [19], WaPOCHI [21], Dinerbot T5 [22], Bellabot [23], Amy Waitress [24], Hobbit [25,26], and Giraff [27], lack this capability and rely on more conventional locomotion. TIAGo [20,28,29] offers a similar capability, but it depends on the specific model or conditions.

All the robots analyzed, including Shadow, Morphia, TIAGo, WaPOCHI, Dinerbot T5, Bellabot, Amy Waitress, Hobbit and Giraff, feature autonomous navigation. This functionality enables robots to move independently within a defined environment, using sensors and algorithms to avoid obstacles and follow predefined routes. Autonomous navigation is critical for ensuring that robots can operate without constant human supervision, making them more efficient and practical for various applications.

Shadow, along with Morphia, TIAGo, WaPOCHI, Hobbit, and Giraff, includes the ability to detect people, which is essential for social interactions and human-assisted tasks. This capability allows these robots to interact meaningfully with their environment and the humans within it. Dinerbot T5, Bellabot, and Amy Waitress do not offer this functionality, which restricts their usefulness in settings where recognizing and responding to human presence is important.

Object manipulation is an advanced feature that allows robots to interact with their environment physically. Hobbit excels in this area, providing significant capabilities for handling objects. Shadow and TIAGo have limited object manipulation capabilities that depend on the model or conditions. On the other hand, Morphia, WaPOCHI, Dinerbot T5, Bellabot, Amy Waitress, and Giraff lack this capability, restricting their functionality to tasks that do not require direct physical manipulation. The ability to manipulate objects is especially valuable in industrial and service applications where handling and moving items are necessary.

Video calling is a valuable feature for remote communication and telepresence. Although Shadow does not currently include this capability, it is designed to support it in the future, as it is equipped with the necessary components, such as a camera, microphone, and screen. In contrast, robots like Morphia, TIAGo, Hobbit, and Giraff already offer video-calling functionality, making them suitable for remote assistance and communication with distant users. However, WaPOCHI, Dinerbot T5, Bellabot, and Amy Waitress lack this feature, which may limit their effectiveness in scenarios requiring remote interaction. Incorporating video-calling capabilities would enhance the versatility of these robots, making them more effective in environments where visual and audio communication is crucial.

All the compared robots, including Shadow, have transportation capabilities. This feature allows robots to carry objects from one place to another within a defined environment, which is fundamental for logistics, services, and customer care applications. Efficient transportation of items is necessary in various settings, and the ability to perform this task makes these robots highly valuable for tasks involving movement and delivery of goods.

The ability to track and follow a specific person is an essential feature for assistive and social robots. Shadow, Morphia, TIAGo, WaPOCHI, Hobbit, and Giraff include this functionality, which is critical for applications where the robot needs to maintain proximity to a human user. Dinerbot T5, Bellabot, and Amy Waitress lack this capability, limiting their use in applications where human tracking is essential. Tracking capabilities ensure that robots can provide continuous assistance and support by staying close to the user.

Expandability is another important aspect of robot design. Shadow is designed to be expandable, allowing for the addition of new components and upgrades. TIAGo offers limited expandability, depending on the model, while Giraff has some expandable capabilities. Morphia, WaPOCHI, Dinerbot T5, Bellabot, Amy Waitress, and Hobbit are not expandable, which may limit their ability to adapt to new technologies and future needs. Expandability ensures that robots can be updated and improved, extending their usefulness and relevance.

Lastly, a low-cost focus is a significant consideration for making robots accessible. Shadow and several other robots, such as Morphia, Bellabot, Amy Waitress, Hobbit, and Giraff, are designed with cost in mind. TIAGo and other more advanced models do not emphasize cost reduction, which may make them less accessible for users or applications where budget is a primary concern. A focus on cost efficiency allows for broader adoption and deployment of robotic technologies in different fields.

For a more extensive but similar comparison, see [30]. Table 1 shows significant similarities in many of the compared functionalities. However, the day-to-day use of robots in research labs involves more subtle aspects that affect their long-term availability, such as the option to add more powerful computers, connectivity, software updates, part replacement, adaptation to new sensors, and warranty.
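The feature comparison described in the preceding paragraphs can also be expressed as a small lookup structure, which makes it easy to ask which robots satisfy a given set of requirements. The following is only an illustrative sketch: the names `Support`, `FEATURES`, and `robots_with` are hypothetical, and the values are transcribed from the prose of this section rather than from any manufacturer data. Two simplifications are assumptions: Giraff's "some expandable capabilities" is encoded as partial support, and the low-cost criterion is left out because the text does not state it explicitly for every robot.

```python
from enum import Enum


class Support(Enum):
    YES = "yes"
    PARTIAL = "partial"  # depends on the model or conditions
    NO = "no"


ROBOTS = [
    "Shadow", "Morphia", "TIAGo", "WaPOCHI", "Dinerbot T5",
    "Bellabot", "Amy Waitress", "Hobbit", "Giraff",
]


def feature(yes=(), partial=()):
    """Build a per-robot support map; robots not listed default to NO."""
    return {
        r: Support.YES if r in yes
        else Support.PARTIAL if r in partial
        else Support.NO
        for r in ROBOTS
    }


SOCIAL = ["Shadow", "Morphia", "TIAGo", "WaPOCHI", "Hobbit", "Giraff"]

# Values follow the prose comparison in this section (hypothetical encoding).
FEATURES = {
    "omnidirectional": feature(yes=["Shadow"], partial=["TIAGo"]),
    "autonomous_navigation": feature(yes=ROBOTS),
    "people_detection": feature(yes=SOCIAL),
    "object_manipulation": feature(yes=["Hobbit"], partial=["Shadow", "TIAGo"]),
    # Shadow is planned to support video calling but does not yet.
    "video_calling": feature(yes=["Morphia", "TIAGo", "Hobbit", "Giraff"]),
    "transportation": feature(yes=ROBOTS),
    "person_following": feature(yes=SOCIAL),
    # Assumption: Giraff's "some expandable capabilities" is treated as partial.
    "expandable": feature(yes=["Shadow"], partial=["TIAGo", "Giraff"]),
}


def robots_with(*names, allow_partial=False):
    """Return the robots supporting every named feature, in ROBOTS order."""
    ok = {Support.YES, Support.PARTIAL} if allow_partial else {Support.YES}
    return [r for r in ROBOTS if all(FEATURES[n][r] in ok for n in names)]


if __name__ == "__main__":
    print(robots_with("people_detection", "person_following"))
    print(robots_with("object_manipulation", allow_partial=True))
```

Keeping partial support as a separate value, instead of collapsing it to a boolean, preserves the "depends on the model or conditions" qualifier that the comparison attaches to TIAGo and Shadow.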

Shadow addresses the deficiencies found in many commercial robots, such as TIAGo, WaPOCHI, and Dinerbot T5. These robots often come with closed software and operating systems customized by the manufacturer, making upgrades to sensors, hardware, and processing components difficult. They also suffer from challenging maintenance procedures and high costs, with no option for users to create or customize the robot to their specific needs, and sometimes even require a subscription for the use of different functions. Shadow, on the other hand, provides solutions to these problems by offering an open platform that allows for easy customization and upgrades, making it more adaptable and user-friendly at a lower cost. This is particularly valuable to researchers, educators, and developers.