Virtual Hand Deformation-Based Pseudo-Haptic Feedback for Enhanced Force Perception and Task Performance in Physically Constrained Teleoperation

Abstract

1. Introduction

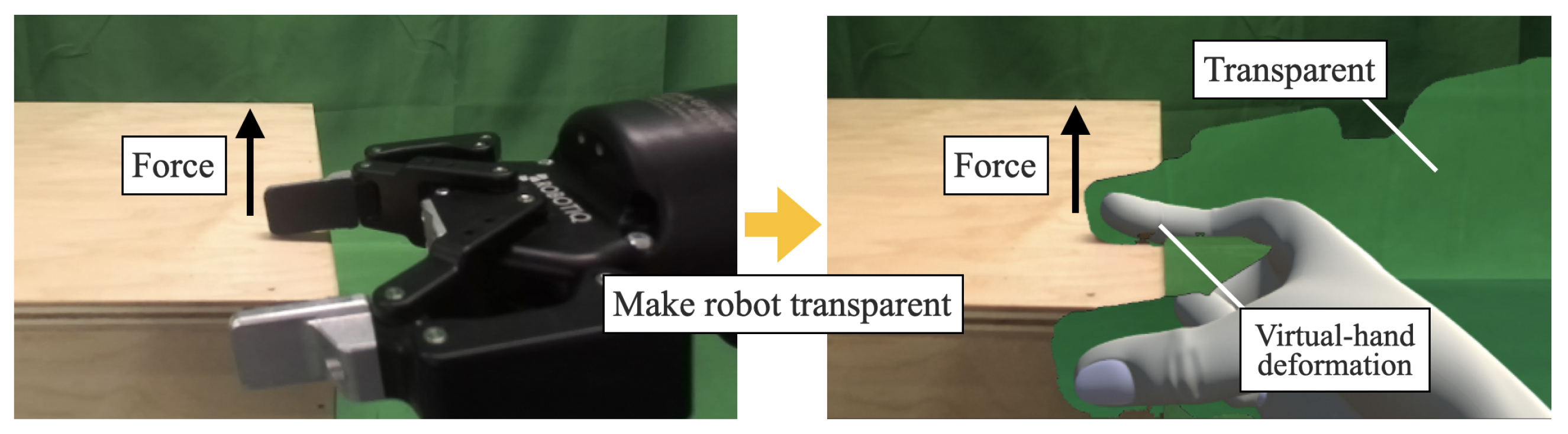

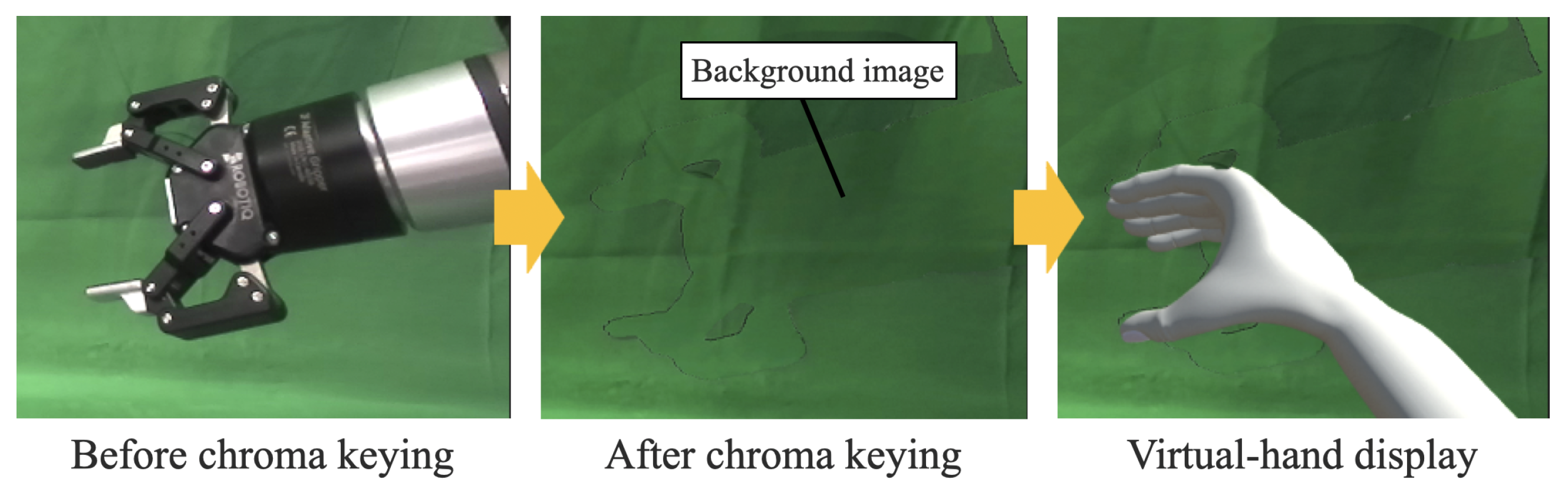

- A teleoperation interface was developed that displays virtual hands on transparent robotic hands. This telepresence interface is designed to minimize the discrepancy between human and robot appearance and motion, thereby improving the transparency of teleoperation.

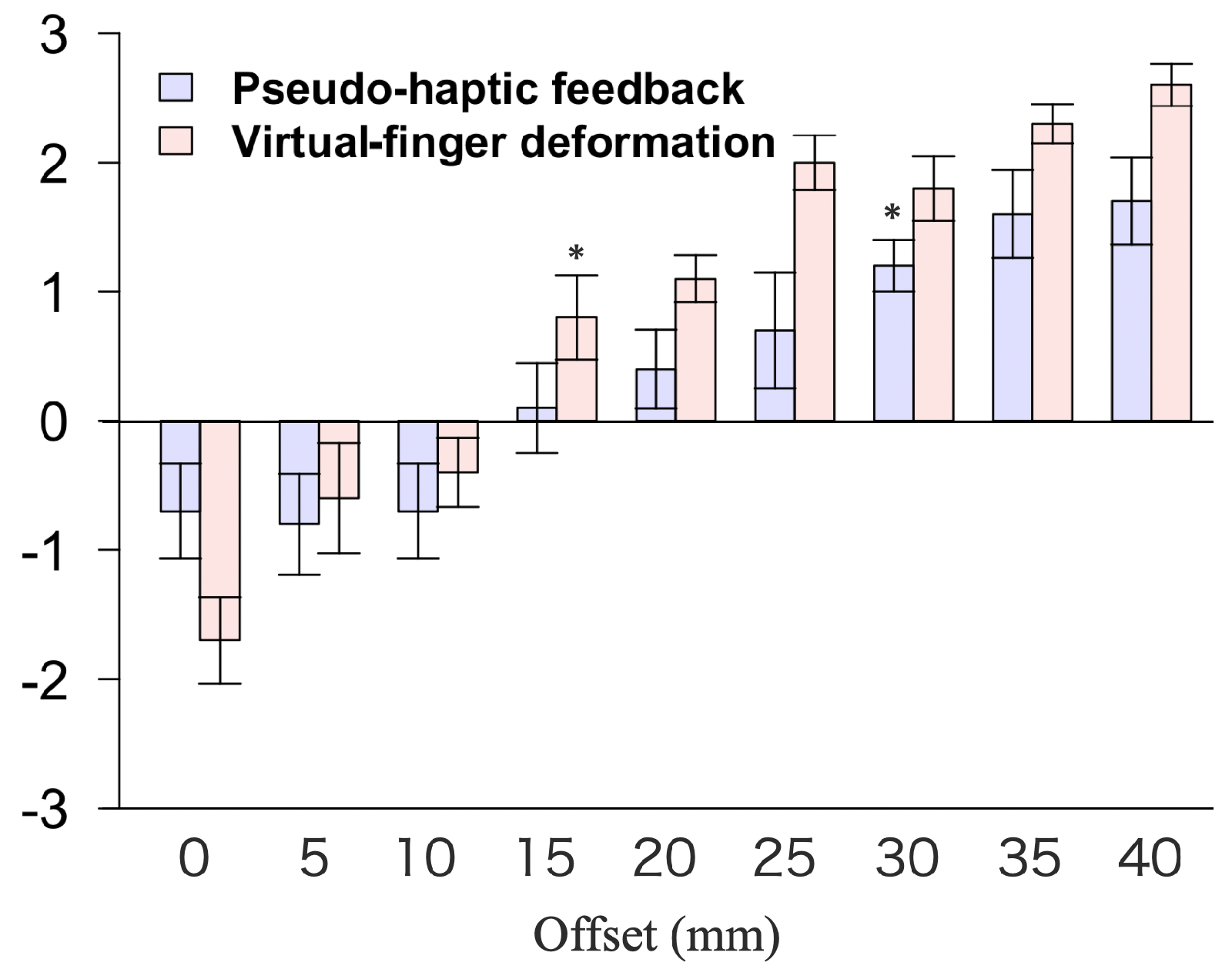

- A system was constructed in which the displayed virtual hands were deformed by the force applied to the robotic hands. This design allows for more sensitive force perception than conventional pseudo-haptic feedback by creating the illusion that the operator’s hands are being deformed.

- The basic performance of the proposed interface was evaluated and compared with conventional pseudo-haptic feedback systems and physical force-feedback devices, demonstrating the effectiveness of this new interface.
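The contrast between the two feedback styles named in these contributions can be sketched in code. The following is a minimal illustration only, not the authors' implementation: it assumes a linear control-to-display (C/D) ratio for the conventional method and a linear, saturating force-to-deformation mapping for the virtual hand. All function names, gains, and limits (`gain_per_newton`, `max_force_n`, `max_deformation`) are hypothetical values chosen for the example.

```python
def cd_ratio_displacement(hand_disp_m: float, force_n: float,
                          gain_per_newton: float = 0.1) -> float:
    """Conventional pseudo-haptic sketch: shrink displayed motion as force grows.

    Assumption: the C/D ratio rises linearly with the measured force, so the
    displayed hand moves less than the operator's real hand under load.
    """
    if force_n < 0.0:
        raise ValueError("force_n must be non-negative")
    cd_ratio = 1.0 + gain_per_newton * force_n  # control movement / display movement
    return hand_disp_m / cd_ratio


def finger_deformation(force_n: float, max_force_n: float = 10.0,
                       max_deformation: float = 0.3) -> float:
    """Deformation-style sketch: map force to a squash factor for the virtual finger.

    Assumption: deformation grows linearly with force and saturates at
    max_deformation (a unitless fraction of finger thickness).
    """
    clamped = min(max(force_n, 0.0), max_force_n)  # keep force in [0, max_force_n]
    return max_deformation * (clamped / max_force_n)


if __name__ == "__main__":
    for force in (0.0, 5.0, 20.0):
        shown = cd_ratio_displacement(0.10, force)
        squash = finger_deformation(force)
        print(f"force={force:5.1f} N  displayed_disp={shown:.3f} m  deformation={squash:.2f}")
```

In this sketch the conventional method alters where the hand appears, while the deformation method leaves the hand position alone and alters its shape; the saturation limit keeps the rendered finger plausible under large forces.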

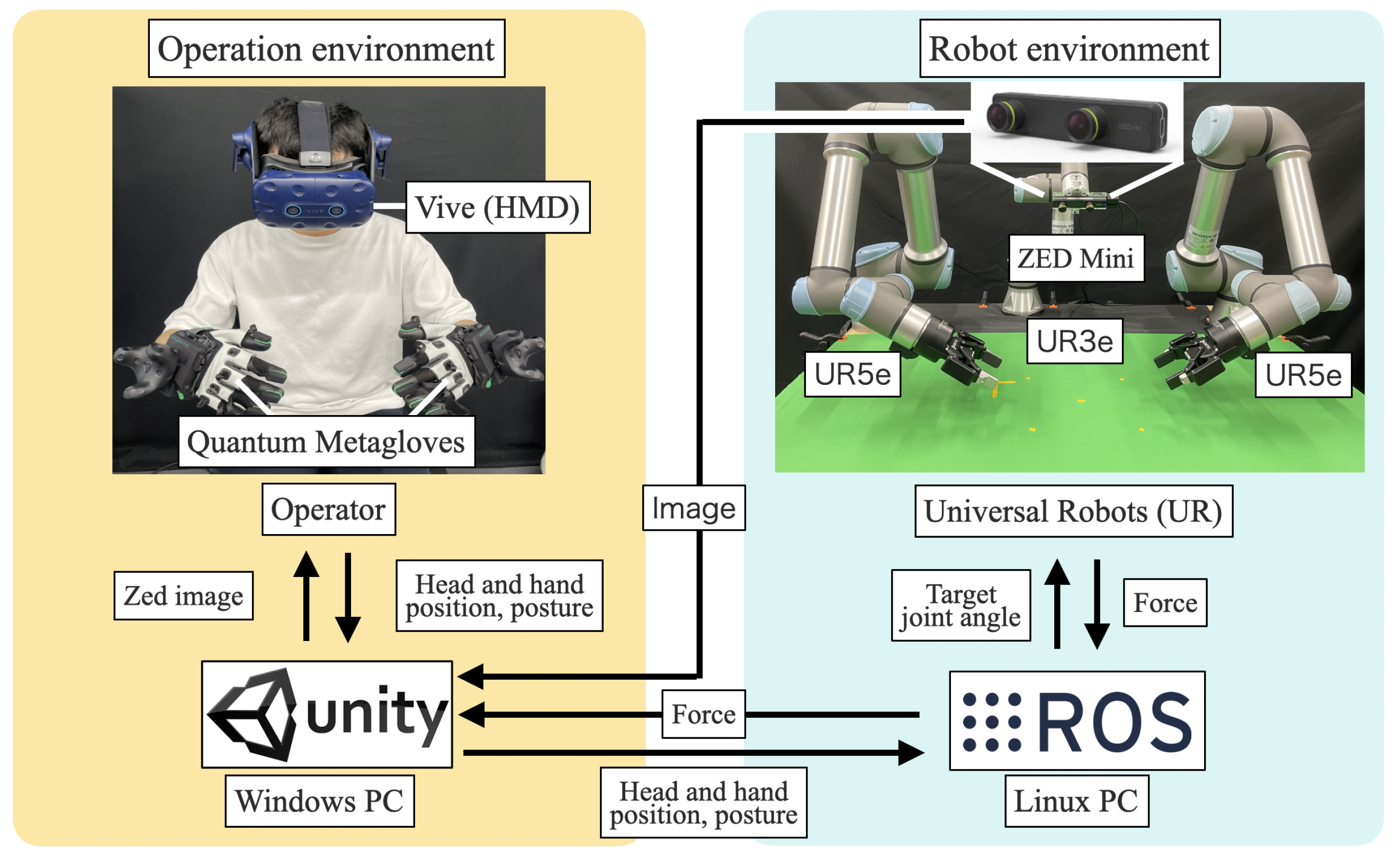

2. Teleoperation System

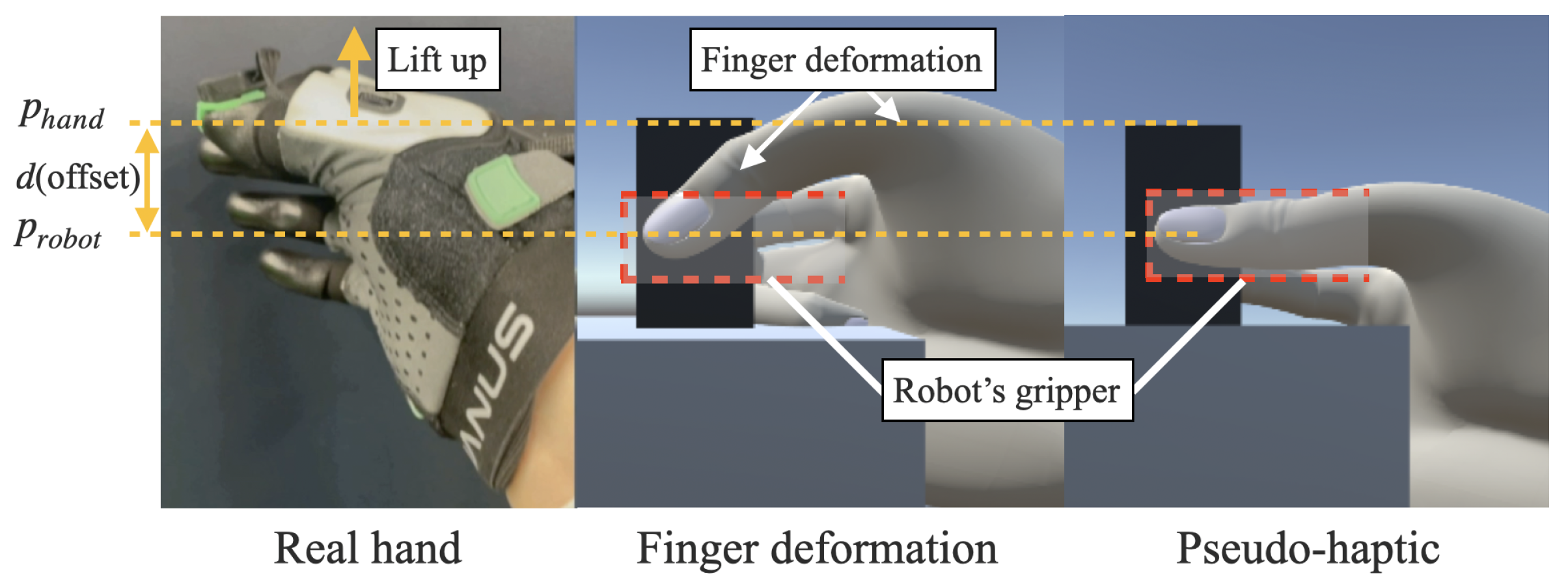

3. Conventional Pseudo-Haptic Feedback and Finger Deformation Methods

3.1. Conventional Pseudo-Haptic Feedback Method in Experiment 1

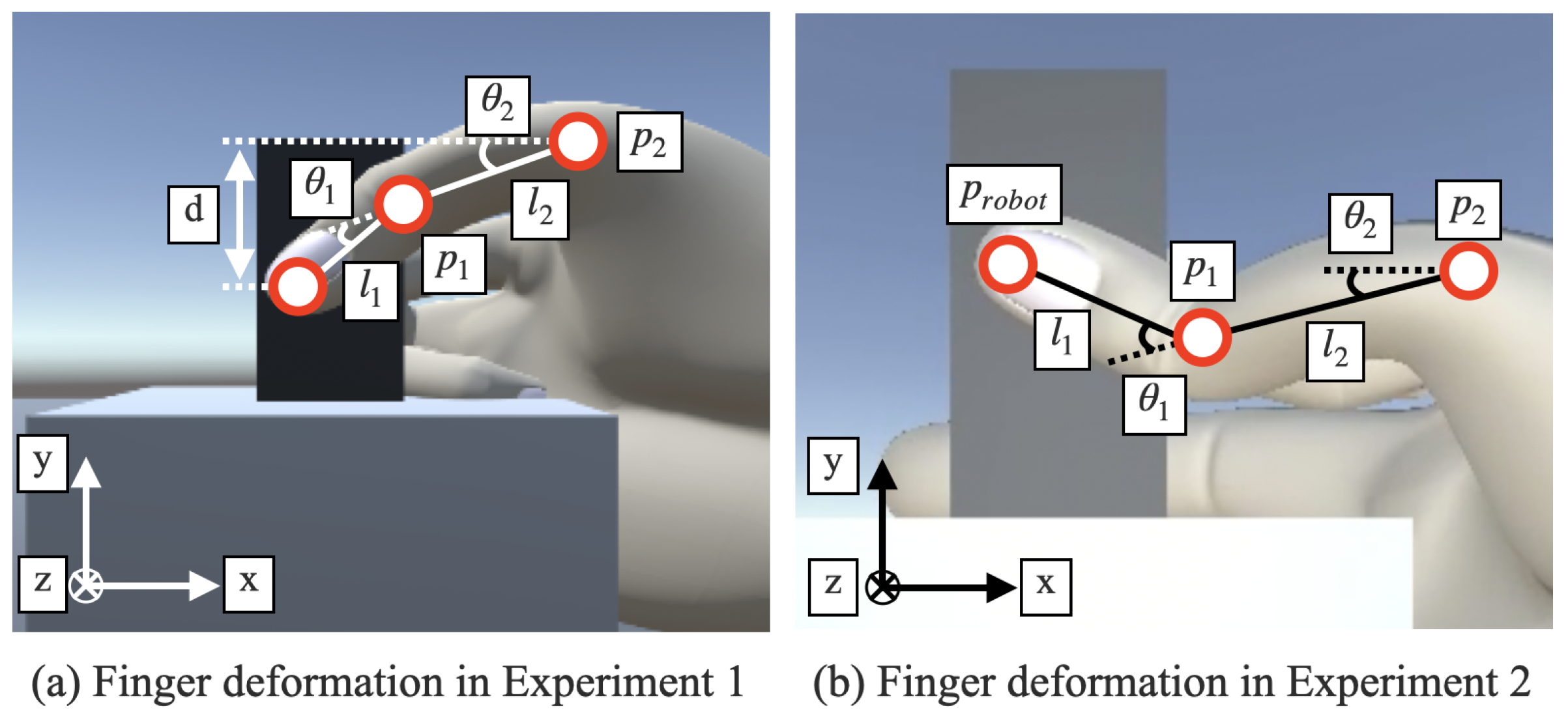

3.2. Finger Deformation Method in Experiment 1

3.3. Finger Deformation Method in Experiment 2

4. Experiment 1—Weight Comparison Experiment

4.1. Participants

4.2. Experimental Condition

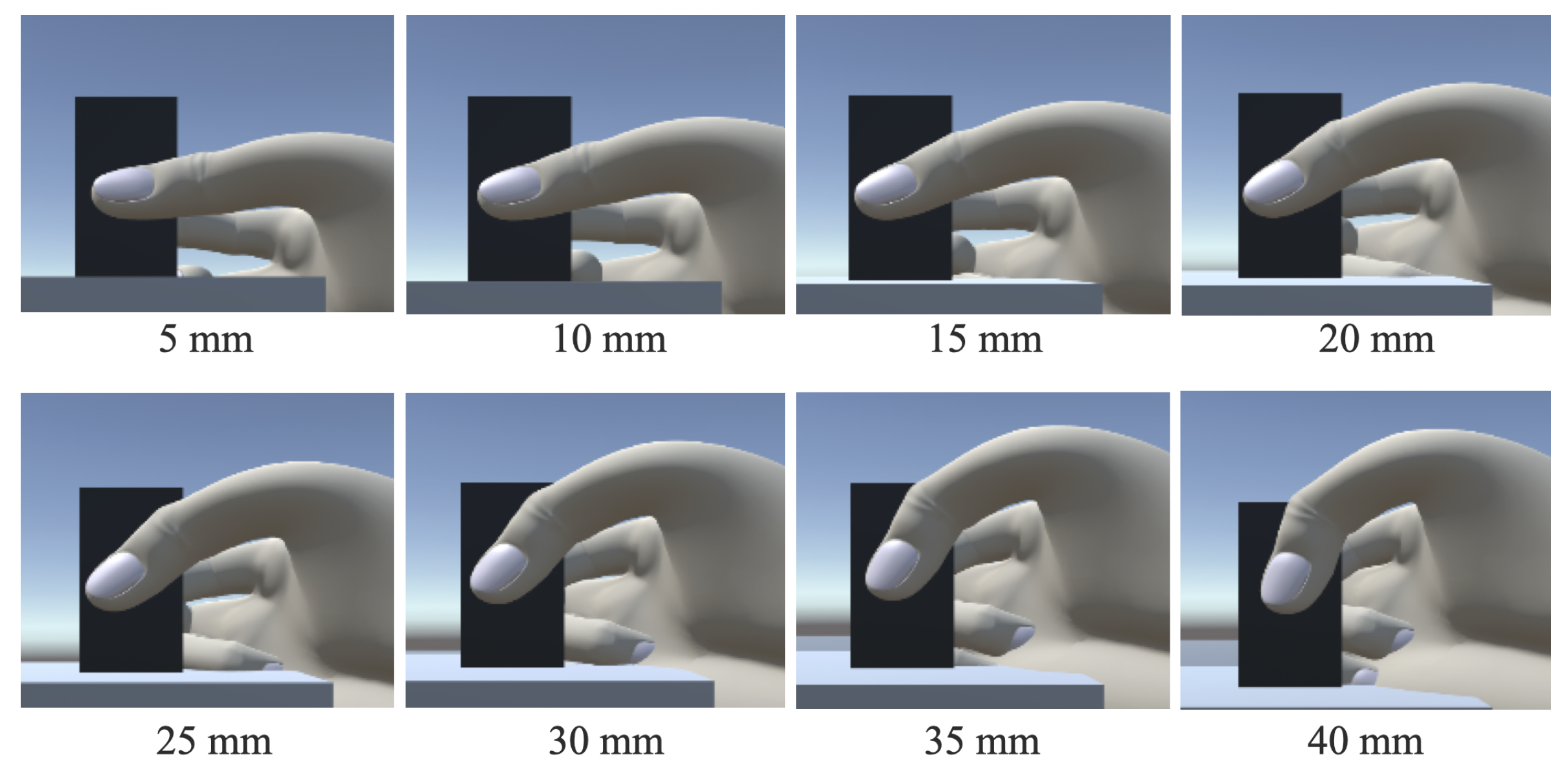

4.2.1. Task Design

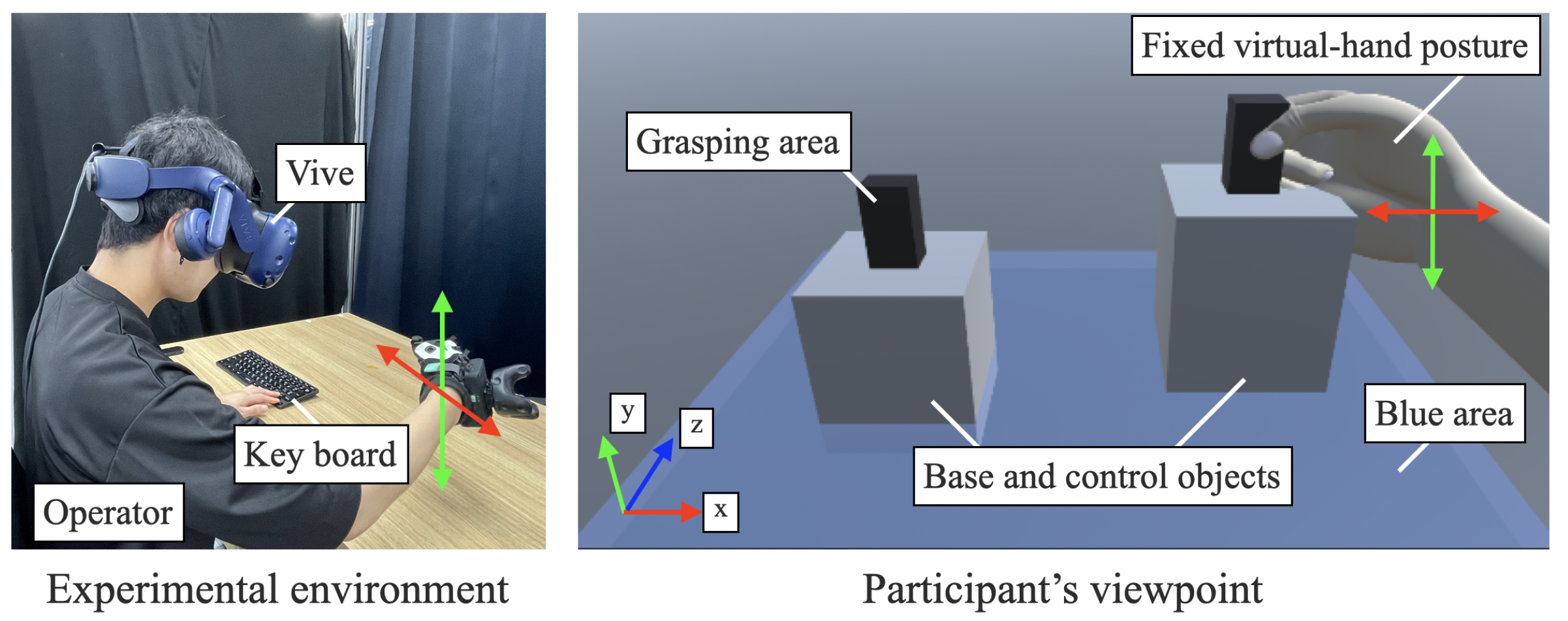

4.2.2. Experimental Setup

4.2.3. Procedure

4.3. Statistical Analysis

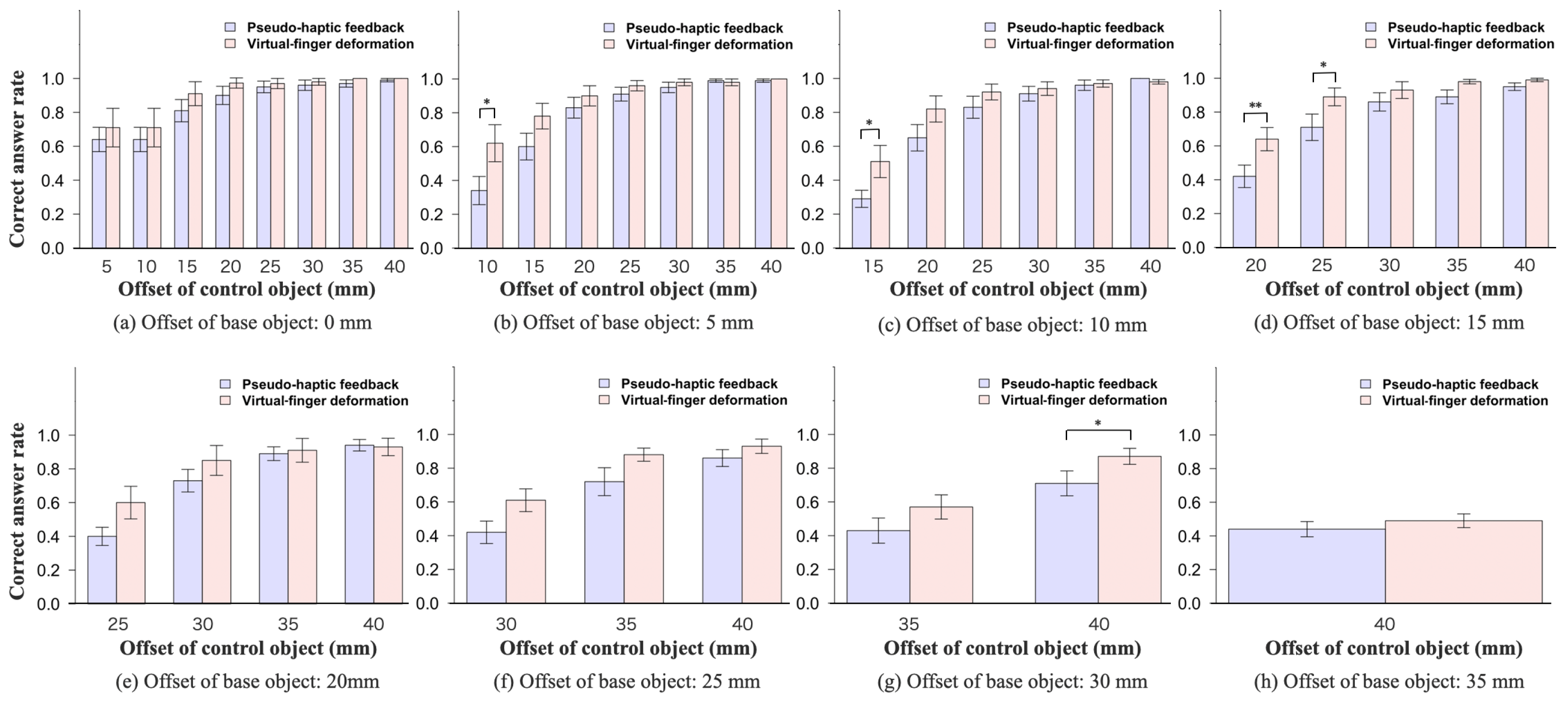

4.4. Results

4.5. Discussion

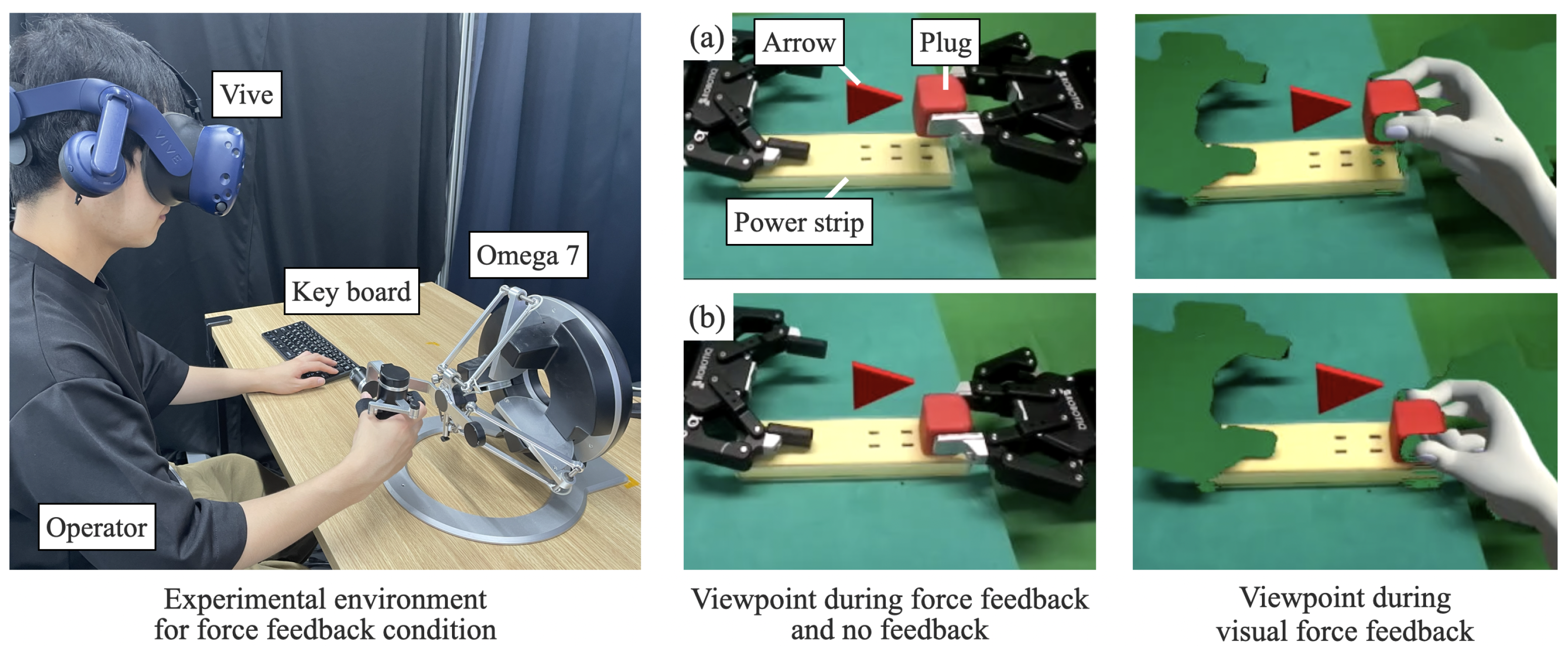

5. Experiment 2—Touch-and-Plugging Judgment Experiment

5.1. Experimental Condition

5.1.1. Task Design

5.1.2. Experimental Setup

5.1.3. Procedure

5.2. Statistical Analyses

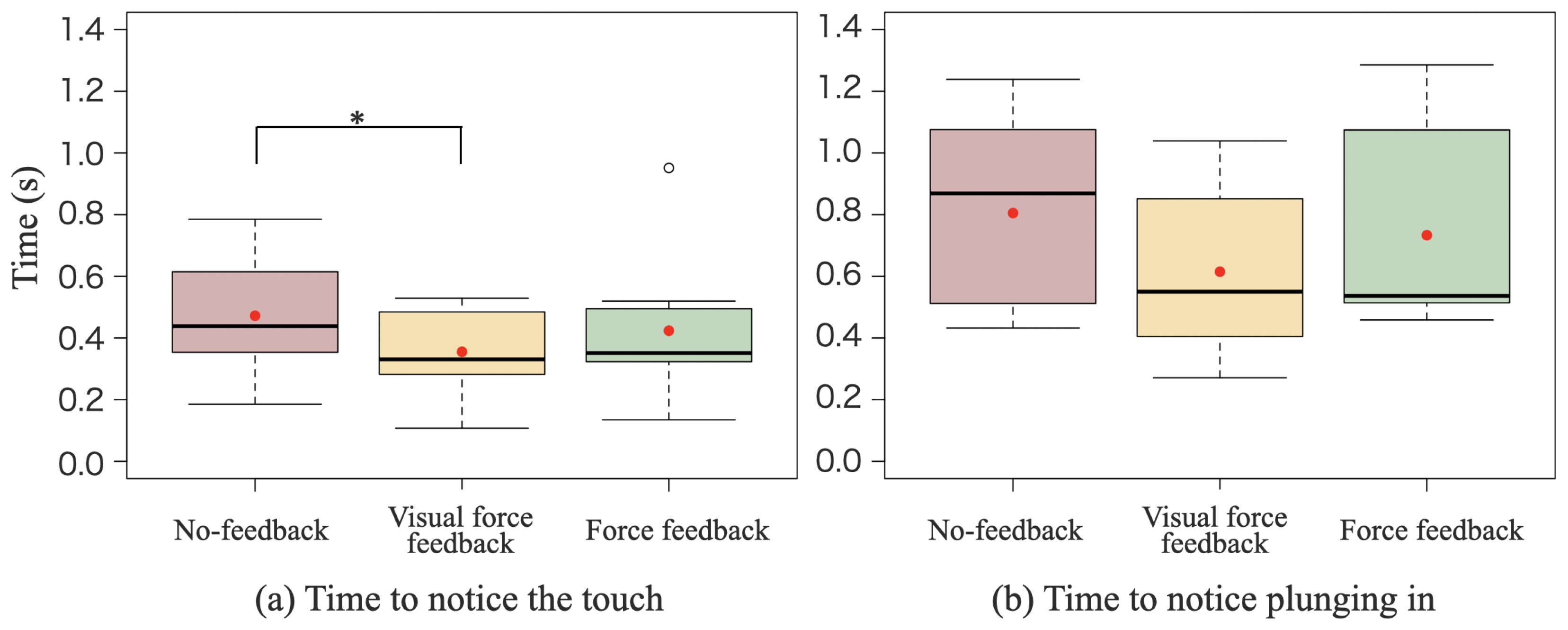

5.3. Results

5.4. Discussion

6. Conclusions and Discussion

6.1. Conclusions

6.2. Discussion

6.2.1. Applications of This Interface

6.2.2. Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| VR | Virtual reality |
| C/D ratio | Control-to-display ratio |
| ROS | Robot operating system |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yamamoto, K.; Zhu, Y.; Aoyama, T.; Hasegawa, Y. Virtual Hand Deformation-Based Pseudo-Haptic Feedback for Enhanced Force Perception and Task Performance in Physically Constrained Teleoperation. Robotics 2024, 13, 143. https://doi.org/10.3390/robotics13100143
