GOYA: Leveraging Generative Art for Content-Style Disentanglement †

Abstract

:1. Introduction

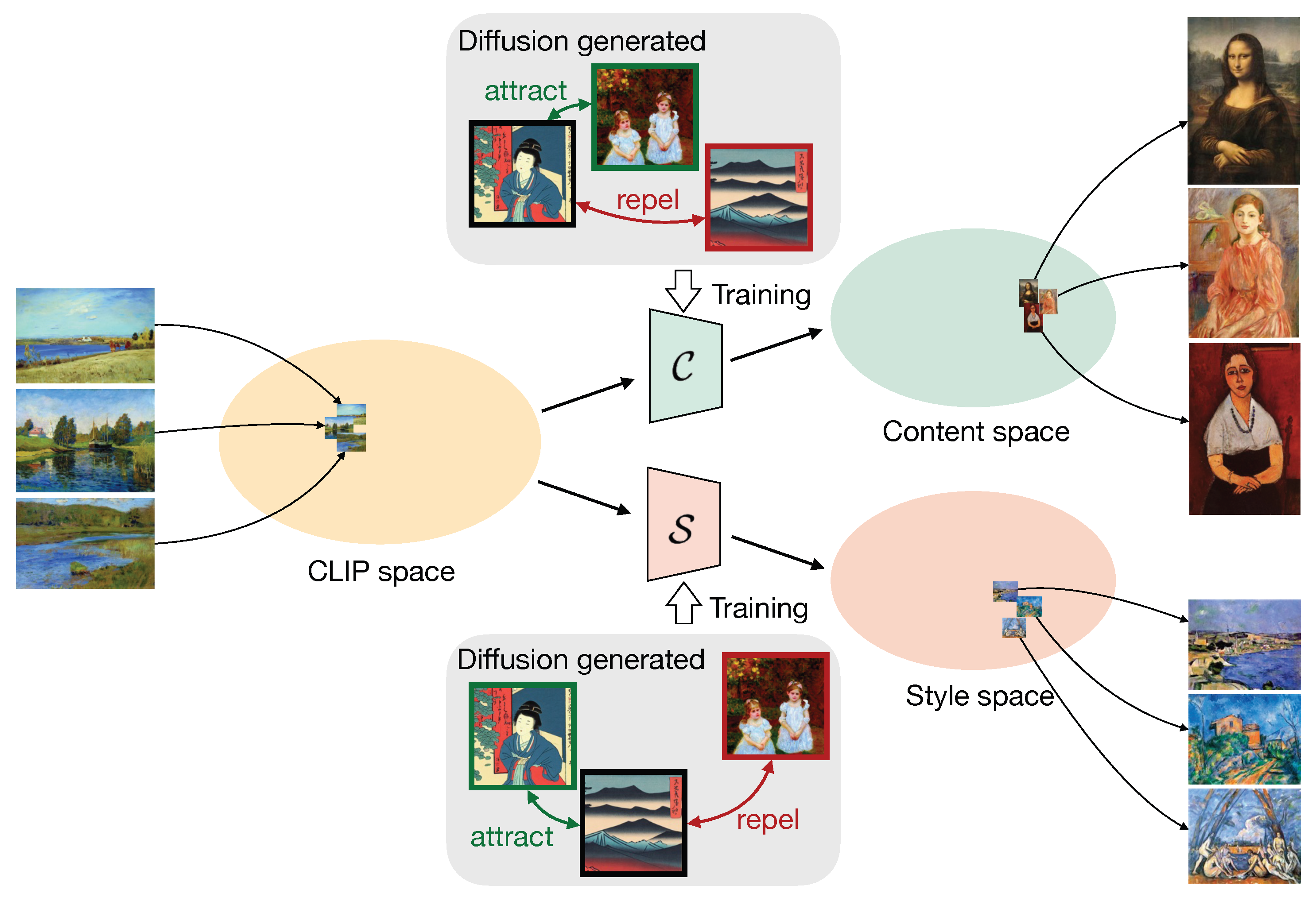

- We design a disentanglement model to obtain disentangled content and style space derived from CLIP’s latent space.

- We train our model with synthetic images rather than real paintings, leveraging the capabilities of Stable Diffusion and prompt design.

- Results indicate that the knowledge in Stable Diffusion can be effectively distilled for art analysis, performing well in content-style disentanglement, art retrieval, and art classification.

2. Related Work

2.1. Art Analysis

2.2. Representation Disentanglement

2.3. Text-to-Image Generation

2.4. Training on Synthetic Images

3. Preliminaries

3.1. Stable Diffusion

3.2. CLIP

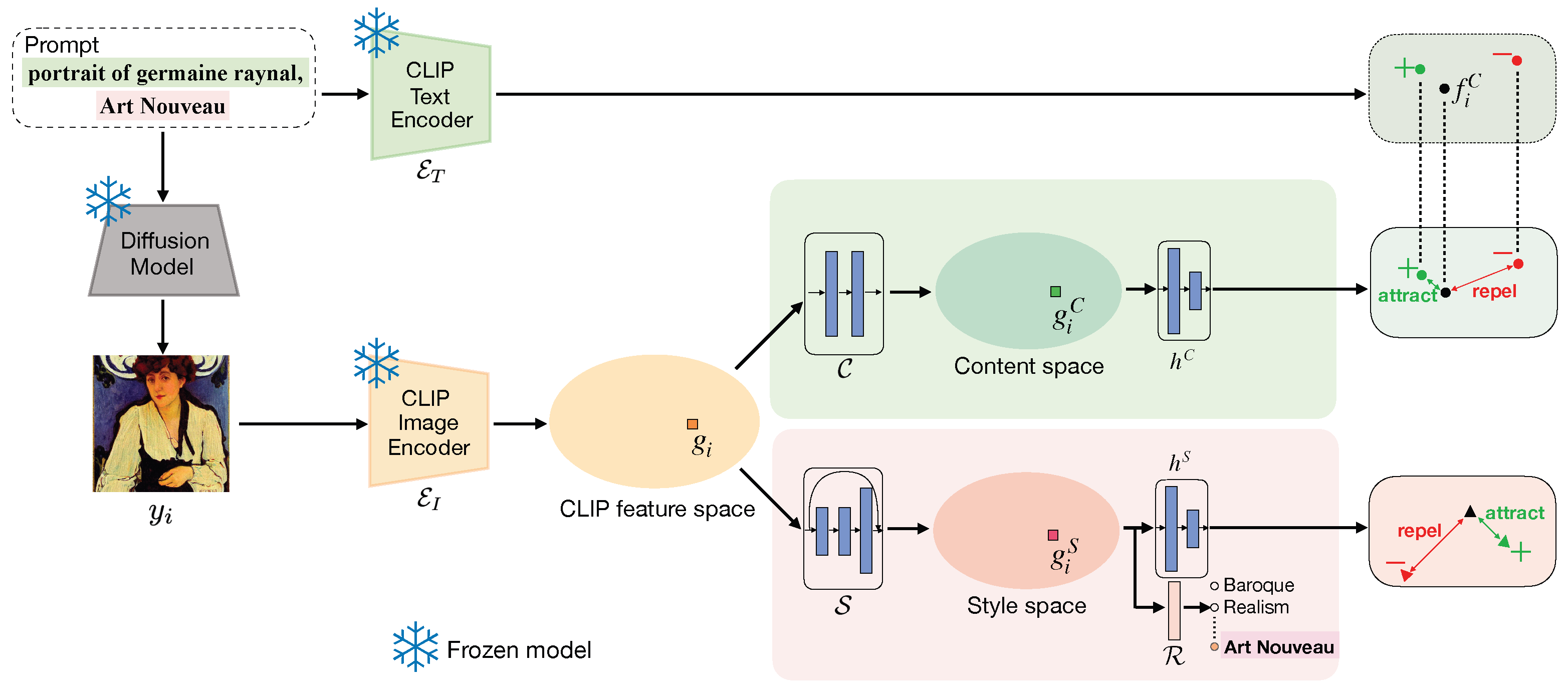

4. GOYA

4.1. Content Encoder

4.2. Style Encoder

4.3. Content Contrastive Loss

4.4. Style Contrastive Loss

4.5. Style Classification Loss

4.6. Total Loss

5. Evaluation

5.1. Evaluation Data

5.2. Training Data

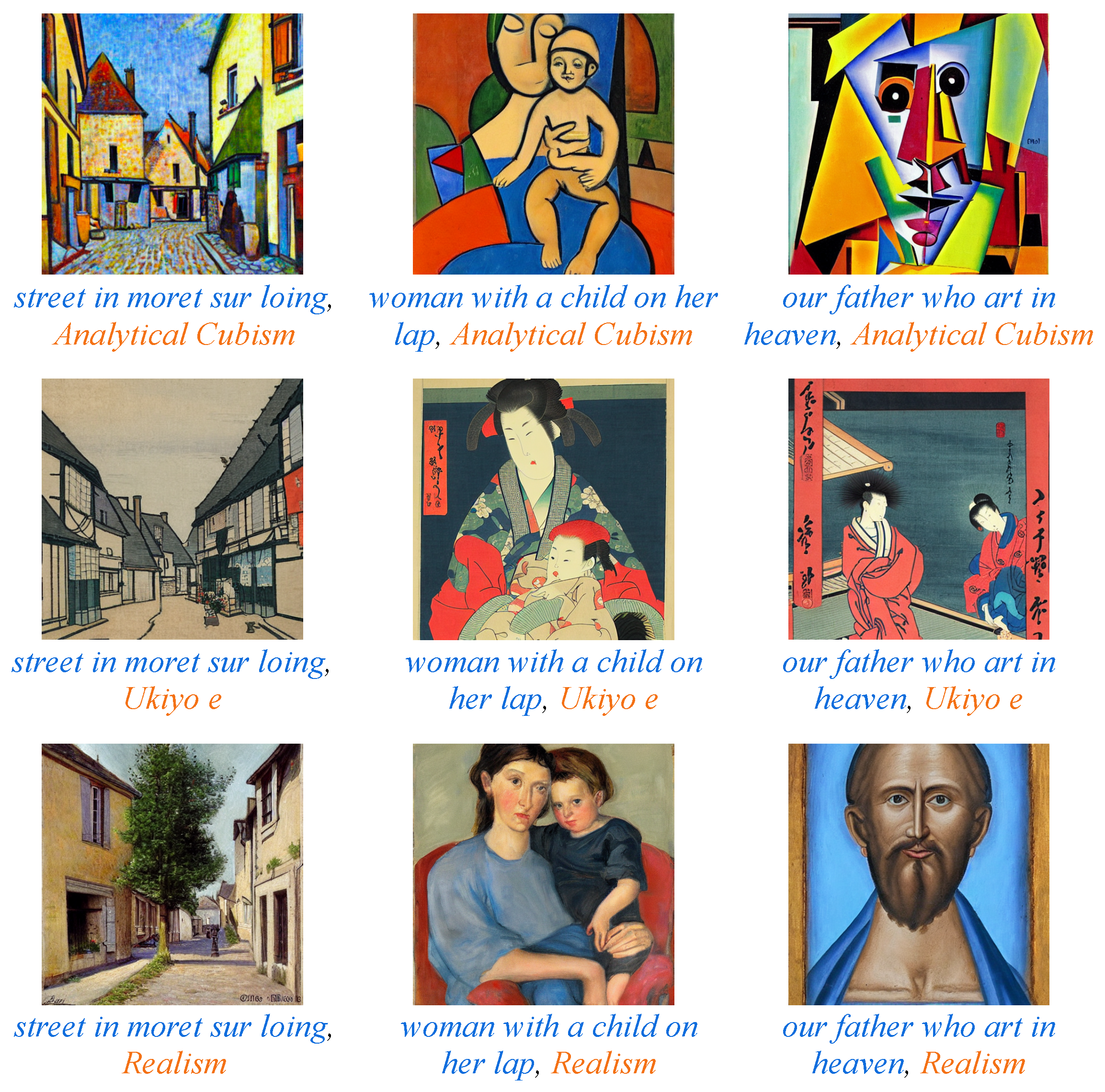

5.3. Image Generation Details

5.4. GOYA Details

5.5. Disentanglement Evaluation

5.5.1. Baselines

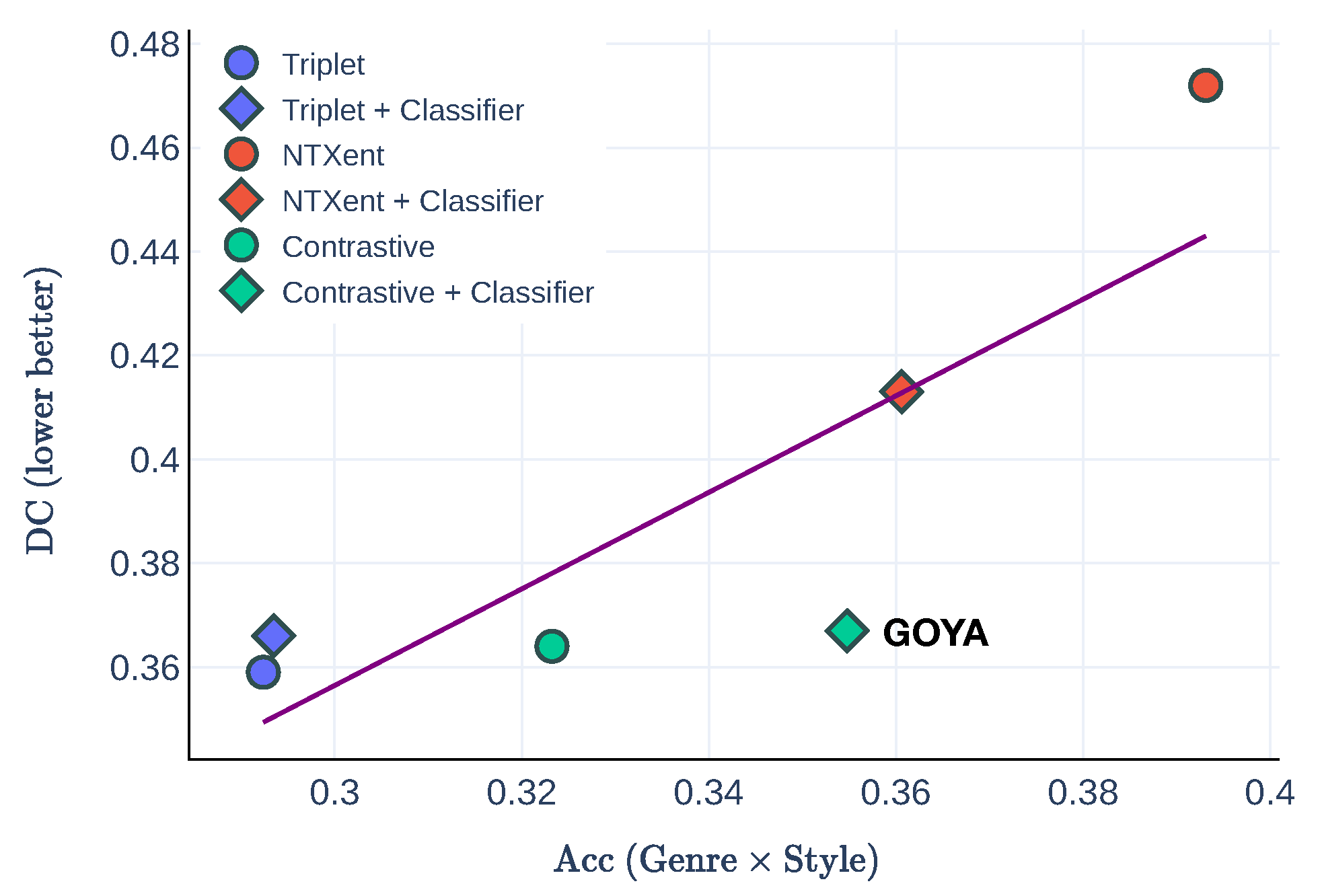

5.5.2. Results

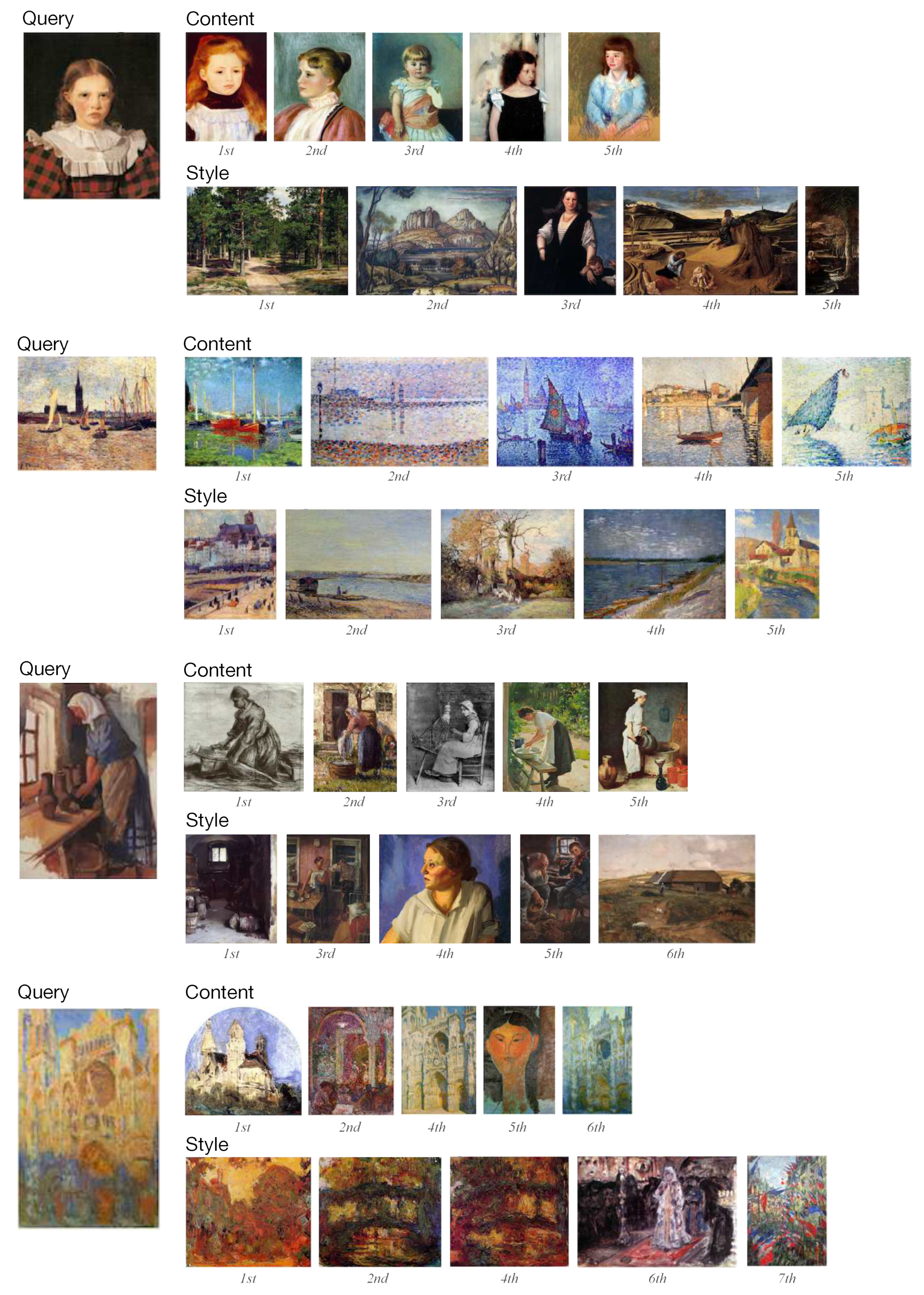

5.6. Similarity Retrieval

Results

5.7. Classification Evaluation

5.7.1. Baselines

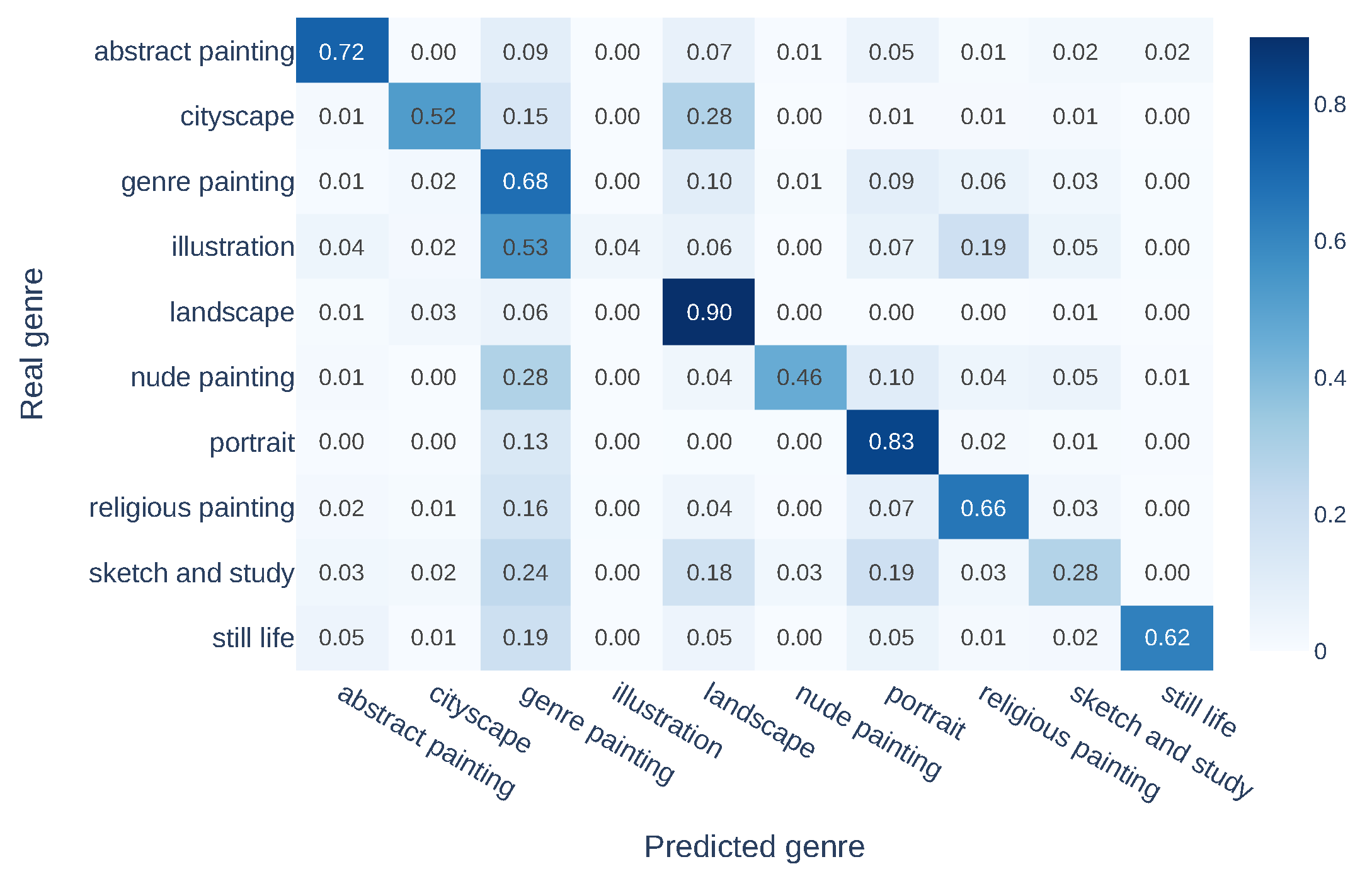

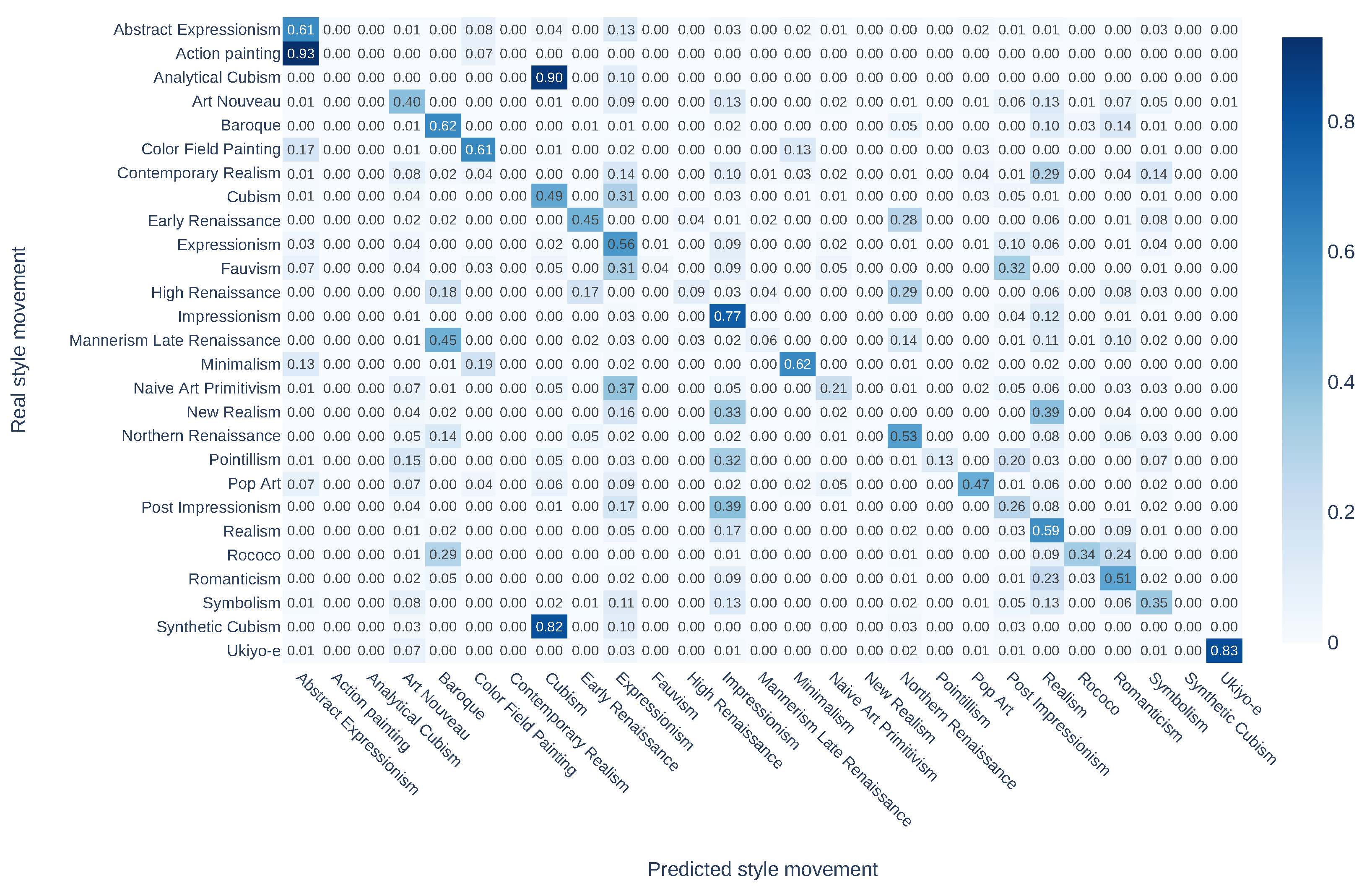

5.7.2. Results

5.8. Ablation Study

5.8.1. Losses

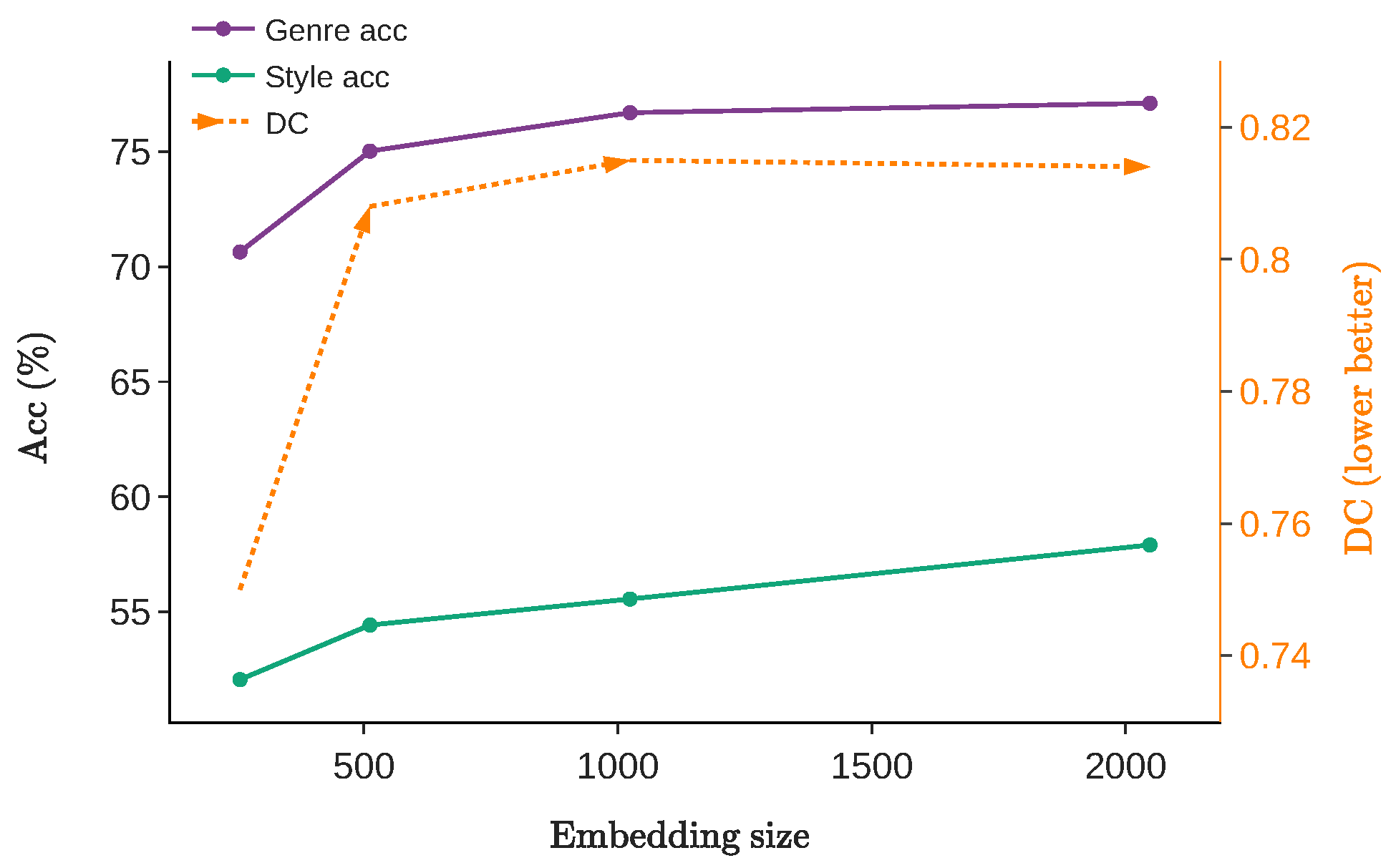

5.8.2. Embedding Size

6. Discussion

6.1. Image Generation

- Prompt design: In this study, we used a combination of content and style descriptions as prompts, where the content description comprises the title of paintings, and the style description employs the style labels of the WikiArt dataset. Alternatively, more specialized prompt designs could be implemented to attain even finer control over the generated images. For example, captions from vision-language datasets could be employed as content descriptions, while detailed style descriptions could be extracted from external knowledge such as Wikipedia.

- Data replication: As demonstrated in previous research [71,72], Stable Diffusion might produce forgeries, generating images that closely resemble the training data. However, the extent of these replicated images within our training data remains uncertain, and their potential impact on model training has yet to be thoroughly explored.

6.2. Model Training

- Encoder structure: For the content and style encoders, we employ small networks consisting of only two and three layers, respectively. We found that a higher-dimensional hidden layer (2048) and fewer layers (3) are effective for learning content embeddings, while a lower-dimensional hidden layer (512) and more layers (2) yield better style embeddings. We hypothesize that content embedding, which reflects semantic information, benefits from a large number of neutrons, while style embedding, containing low-level features, is more efficiently represented with lower dimensions.

- Partition of synthetic images: We performed style movement classification on a training dataset comprising both synthetic and real data. Results presented in Figure 9 indicate that, as the number of synthetic images increases during training, the accuracy decreases. We attribute this phenomenon to the domain gap between synthetic and real images. In addition, we suggest that contrastive learning may help alleviate the impact of this domain gap.

6.3. Limitation on the WikiArt Dataset

6.4. Applications

- Art applications: Our work can potentially be extended into various practical scenarios. For instance, it could be integrated into an art retrieval system, enabling users to find paintings based on text descriptions or a given artwork. Additionally, it could be employed in a painting recommendation system, offering personalized suggestions to users according to their preferred paintings. These applications have the potential to enhance user experience and engagement, thus contributing to the improvement of art production and consumption.

- Digital humanities: While our work mainly focuses on the analysis of fine art, there is potential for our work to be applied in other areas within digital humanities, such as graphic design and historical document analysis.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Similarity Retrieval

References

- Carneiro, G.; Silva, N.P.d.; Bue, A.D.; Costeira, J.P. Artistic image classification: An analysis on the printart database. In Proceedings of the ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 143–157. [Google Scholar]

- Garcia, N.; Renoust, B.; Nakashima, Y. Context-aware embeddings for automatic art analysis. In Proceedings of the ICMR, Ottawa, ON, Canada, 10–13 June 2019; pp. 25–33. [Google Scholar]

- Cetinic, E.; Lipic, T.; Grgic, S. Fine-tuning convolutional neural networks for fine art classification. Expert Syst. Appl. 2018, 114, 107–118. [Google Scholar] [CrossRef]

- Van Noord, N.; Hendriks, E.; Postma, E. Toward discovery of the artist’s style: Learning to recognize artists by their artworks. IEEE Signal Process. Mag. 2015, 32, 46–54. [Google Scholar] [CrossRef]

- Gatys, L.A.; Ecker, A.S.; Bethge, M. Image style transfer using convolutional neural networks. In Proceedings of the CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 2414–2423. [Google Scholar]

- Ypsilantis, N.A.; Garcia, N.; Han, G.; Ibrahimi, S.; Van Noord, N.; Tolias, G. The Met dataset: Instance-level recognition for artworks. In Proceedings of the NeurIPS Datasets and Benchmarks Track, Virtual, 6 December 2021. [Google Scholar]

- Lang, S.; Ommer, B. Reflecting on how artworks are processed and analyzed by computer vision. In Proceedings of the ECCV Workshops, Munich, Germany, 8–14 September 2018. [Google Scholar]

- Garcia, N.; Renoust, B.; Nakashima, Y. ContextNet: Representation and exploration for painting classification and retrieval in context. Int. J. Multimed. Inf. Retr. 2020, 9, 17–30. [Google Scholar] [CrossRef]

- Tan, W.R.; Chan, C.S.; Aguirre, H.; Tanaka, K. Improved ArtGAN for Conditional Synthesis of Natural Image and Artwork. Trans. Image Process. 2019, 28, 394–409. [Google Scholar] [CrossRef] [PubMed]

- Chen, T.; Garcia, N.; Li, L.; Nakashima, Y. Retrieving Emotional Stimuli in Artworks. In Proceedings of the 2024 ACM International Conference on Multimedia Retrieval, Phuket, Thailand, 10–14 June 2024. [Google Scholar]

- Bai, Z.; Nakashima, Y.; Garcia, N. Explain me the painting: Multi-topic knowledgeable art description generation. In Proceedings of the ICCV, Virtual, 11–17 October 2021; pp. 5422–5432. [Google Scholar]

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 10684–10695. [Google Scholar]

- Sariyildiz, M.B.; Alahari, K.; Larlus, D.; Kalantidis, Y. Fake it till you make it: Learning transferable representations from synthetic ImageNet clones. In Proceedings of the CVPR, Vancouver, BC, Canada, 18–22 June 2023. [Google Scholar]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the ICML, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763. [Google Scholar]

- Tan, W.R.; Chan, C.S.; Aguirre, H.E.; Tanaka, K. Ceci n’est pas une pipe: A deep convolutional network for fine-art paintings classification. In Proceedings of the ICIP, Phoenix, AZ, USA, 25–28 September 2016; pp. 3703–3707. [Google Scholar]

- El Vaigh, C.B.; Garcia, N.; Renoust, B.; Chu, C.; Nakashima, Y.; Nagahara, H. GCNBoost: Artwork classification by label propagation through a knowledge graph. In Proceedings of the ICMR, Taipei, Taiwan, 21–24 August 2021; pp. 92–100. [Google Scholar]

- Gonthier, N.; Gousseau, Y.; Ladjal, S.; Bonfait, O. Weakly Supervised Object Detection in Artworks. In Proceedings of the ECCV Workshops, Munich, Germany, 8–14 September 2018; pp. 692–709. [Google Scholar]

- Shen, X.; Efros, A.A.; Aubry, M. Discovering visual patterns in art collections with spatially-consistent feature learning. In Proceedings of the CVPR, Long Beach, CA, USA, 16–20 June 2019; pp. 9278–9287. [Google Scholar]

- Saleh, B.; Elgammal, A. Large-scale Classification of Fine-Art Paintings: Learning The Right Metric on The Right Feature. Int. J. Digit. Art Hist. 2016, 2, 70–93. [Google Scholar]

- Mao, H.; Cheung, M.; She, J. DeepArt: Learning joint representations of visual arts. In Proceedings of the ACM MM, Mountain View, CA, USA, 23–27 October 2017; pp. 1183–1191. [Google Scholar]

- Mensink, T.; Van Gemert, J. The rijksmuseum challenge: Museum-centered visual recognition. In Proceedings of the ICMR, Glasgow, UK, 1–4 April 2014; pp. 451–454. [Google Scholar]

- Wilber, M.J.; Fang, C.; Jin, H.; Hertzmann, A.; Collomosse, J.; Belongie, S. BAM! The behance artistic media dataset for recognition beyond photography. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017; pp. 1202–1211. [Google Scholar]

- Strezoski, G.; Worring, M. OmniArt: A large-scale artistic benchmark. TOMM 2018, 14, 1–21. [Google Scholar] [CrossRef]

- Khan, S.J.; van Noord, N. Stylistic Multi-Task Analysis of Ukiyo-e Woodblock Prints. In Proceedings of the BMVC, Virtual, 22–25 November 2021; pp. 1–5. [Google Scholar]

- Chu, W.T.; Wu, Y.L. Image style classification based on learnt deep correlation features. Trans. Multimed. 2018, 20, 2491–2502. [Google Scholar] [CrossRef]

- Sabatelli, M.; Kestemont, M.; Daelemans, W.; Geurts, P. Deep transfer learning for art classification problems. In Proceedings of the ECCV Workshops, Munich, Germany, 8–14 September 2018. [Google Scholar]

- Sandoval, C.; Pirogova, E.; Lech, M. Two-stage deep learning approach to the classification of fine-art paintings. IEEE Access 2019, 7, 41770–41781. [Google Scholar] [CrossRef]

- Kotovenko, D.; Sanakoyeu, A.; Lang, S.; Ommer, B. Content and style disentanglement for artistic style transfer. In Proceedings of the ICCV, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4422–4431. [Google Scholar]

- Xie, X.; Li, Y.; Huang, H.; Fu, H.; Wang, W.; Guo, Y. Artistic Style Discovery With Independent Components. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 19870–19879. [Google Scholar]

- Shi, Y.; Yang, X.; Wan, Y.; Shen, X. SemanticStyleGAN: Learning Compositional Generative Priors for Controllable Image Synthesis and Editing. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 11254–11264. [Google Scholar]

- Xu, Z.; Lin, T.; Tang, H.; Li, F.; He, D.; Sebe, N.; Timofte, R.; Van Gool, L.; Ding, E. Predict, Prevent, and Evaluate: Disentangled Text-Driven Image Manipulation Empowered by Pre-Trained Vision-Language Model. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 18229–18238. [Google Scholar]

- Yu, X.; Chen, Y.; Liu, S.; Li, T.; Li, G. Multi-mapping image-to-image translation via learning disentanglement. Adv. Neural Inf. Process. Syst. 2019, 32, 2994–3004. [Google Scholar]

- Gabbay, A.; Hoshen, Y. Improving style-content disentanglement in image-to-image translation. arXiv 2020, arXiv:2007.04964. [Google Scholar]

- Denton, E.L. Unsupervised learning of disentangled representations from video. In Proceedings of the NeurIPS, Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Higgins, I.; Matthey, L.; Pal, A.; Burgess, C.; Glorot, X.; Botvinick, M.; Mohamed, S.; Lerchner, A. β-VAE: Learning basic visual concepts with a constrained variational framework. In Proceedings of the ICLR, Toulon, France, 24–26 April 2017. [Google Scholar]

- Kwon, G.; Ye, J.C. Diffusion-based image translation using disentangled style and content representation. In Proceedings of the ICLR, Kigali, Rwanda, 1–5 May 2023. [Google Scholar]

- Ruta, D.; Motiian, S.; Faieta, B.; Lin, Z.; Jin, H.; Filipkowski, A.; Gilbert, A.; Collomosse, J. ALADIN: All layer adaptive instance normalization for fine-grained style similarity. In Proceedings of the ICCV, Virtual, 11–17 October 2021; pp. 11926–11935. [Google Scholar]

- Huang, X.; Belongie, S. Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017. [Google Scholar]

- Tumanyan, N.; Bar-Tal, O.; Bagon, S.; Dekel, T. Splicing ViT Features for Semantic Appearance Transfer. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 10748–10757. [Google Scholar]

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging properties in self-supervised vision transformers. In Proceedings of the ICCV, Virtual, 11–17 October 2021; pp. 9650–9660. [Google Scholar]

- Ramesh, A.; Dhariwal, P.; Nichol, A.; Chu, C.; Chen, M. Hierarchical text-conditional image generation with CLIP latents. arXiv 2022, arXiv:2204.06125. [Google Scholar]

- Ding, M.; Yang, Z.; Hong, W.; Zheng, W.; Zhou, C.; Yin, D.; Lin, J.; Zou, X.; Shao, Z.; Yang, H.; et al. CogView: Mastering text-to-image generation via transformers. NeurIPS 2021, 34, 19822–19835. [Google Scholar]

- Zhou, Y.; Zhang, R.; Chen, C.; Li, C.; Tensmeyer, C.; Yu, T.; Gu, J.; Xu, J.; Sun, T. Towards Language-Free Training for Text-to-Image Generation. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 17907–17917. [Google Scholar]

- Li, Z.; Min, M.R.; Li, K.; Xu, C. StyleT2I: Toward Compositional and High-Fidelity Text-to-Image Synthesis. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 18197–18207. [Google Scholar]

- Kwon, G.; Ye, J.C. CLIPstyler: Image style transfer with a single text condition. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 18062–18071. [Google Scholar]

- Tao, M.; Tang, H.; Wu, F.; Jing, X.Y.; Bao, B.K.; Xu, C. DF-GAN: A Simple and Effective Baseline for Text-to-Image Synthesis. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 16515–16525. [Google Scholar]

- Tan, H.; Liu, X.; Liu, M.; Yin, B.; Li, X. KT-GAN: Knowledge-transfer generative adversarial network for text-to-image synthesis. Trans. Image Process. 2020, 30, 1275–1290. [Google Scholar] [CrossRef]

- Katirai, A.; Garcia, N.; Ide, K.; Nakashima, Y.; Kishimoto, A. Situating the social issues of image generation models in the model life cycle: A sociotechnical approach. arXiv 2023, arXiv:2311.18345. [Google Scholar]

- Ostmeyer, J.; Schaerf, L.; Buividovich, P.; Charles, T.; Postma, E.; Popovici, C. Synthetic images aid the recognition of human-made art forgeries. PLoS ONE 2024, 19, e0295967. [Google Scholar] [CrossRef]

- Tian, Y.; Fan, L.; Isola, P.; Chang, H.; Krishnan, D. StableRep: Synthetic images from text-to-image models make strong visual representation learners. In Proceedings of the NeurlPS, Vancouver, BC, Canada, 9–15 December 2024. [Google Scholar]

- Hataya, R.; Bao, H.; Arai, H. Will Large-scale Generative Models Corrupt Future Datasets? In Proceedings of the ICCV, Paris, France, 2–6 October 2023. [Google Scholar]

- Azizi, S.; Kornblith, S.; Saharia, C.; Norouzi, M.; Fleet, D.J. Synthetic data from diffusion models improves imagenet classification. arXiv 2023, arXiv:2304.08466. [Google Scholar]

- Chen, T.; Hirota, Y.; Otani, M.; Garcia, N.; Nakashima, Y. Would Deep Generative Models Amplify Bias in Future Models? In Proceedings of the CVPR, Seattle, WA, USA, 17–21 June 2024. [Google Scholar]

- Ravuri, S.; Vinyals, O. Classification accuracy score for conditional generative models. In Proceedings of the NeurlPS, Vancouver, BC, Canada, 8–14 December 2019. [Google Scholar]

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. NeurIPS 2020, 33, 6840–6851. [Google Scholar]

- Cheng, R.; Wu, B.; Zhang, P.; Vajda, P.; Gonzalez, J.E. Data-efficient language-supervised zero-shot learning with self-distillation. In Proceedings of the CVPR, Virtual, 19–25 June 2021; pp. 3119–3124. [Google Scholar]

- Zhang, R.; Guo, Z.; Zhang, W.; Li, K.; Miao, X.; Cui, B.; Qiao, Y.; Gao, P.; Li, H. PointCLIP: Point cloud understanding by CLIP. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 8552–8562. [Google Scholar]

- Kim, G.; Kwon, T.; Ye, J.C. DiffusionCLIP: Text-Guided Diffusion Models for Robust Image Manipulation. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 2426–2435. [Google Scholar]

- Gatys, L.; Ecker, A.; Bethge, M. A Neural Algorithm of Artistic Style. arXiv 2015, arXiv:1508.06576. [Google Scholar] [CrossRef]

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the ICML, PMLR, Virtual, 12–18 July 2020; pp. 1597–1607. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the CVPR, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar]

- Schuhmann, C.; Beaumont, R.; Vencu, R.; Gordon, C.; Wightman, R.; Cherti, M.; Coombes, T.; Katta, A.; Mullis, C.; Wortsman, M.; et al. LAION-5B: An open large-scale dataset for training next generation image-text models. In Proceedings of the NeurIPS, New Orleans, LA, USA, 28 November–2 December 2022. [Google Scholar]

- Liu, L.; Ren, Y.; Lin, Z.; Zhao, Z. Pseudo numerical methods for diffusion models on manifolds. In Proceedings of the ICLR, Virtual, 25–29 April 2022. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the ICLR, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Liu, X.; Thermos, S.; Valvano, G.; Chartsias, A.; O’Neil, A.; Tsaftaris, S.A. Measuring the Biases and Effectiveness of Content-Style Disentanglement. In Proceedings of the BMVC, Virtual, 22–25 November 2021. [Google Scholar]

- Chen, X.; He, K. Exploring simple siamese representation learning. In Proceedings of the CVPR, Virtual, 19–25 June 2021; pp. 15750–15758. [Google Scholar]

- Gatys, L.; Ecker, A.S.; Bethge, M. Texture synthesis using convolutional neural networks. In Proceedings of the NeurIPS, Montreal, QC, USA, 7–12 December 2015. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the ICLR, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A unified embedding for face recognition and clustering. In Proceedings of the CVPR, Boston, MA, USA, 7–12 June 2015; pp. 815–823. [Google Scholar]

- Sohn, K. Improved deep metric learning with multi-class n-pair loss objective. In Proceedings of the NeurIPS, Barcelona, Spain, 5–10 December 2016. [Google Scholar]

- Somepalli, G.; Singla, V.; Goldblum, M.; Geiping, J.; Goldstein, T. Diffusion art or digital forgery? Investigating data replication in diffusion models. In Proceedings of the CVPR, Vancouver, BC, Canada, 18–22 June 2023. [Google Scholar]

- Carlini, N.; Hayes, J.; Nasr, M.; Jagielski, M.; Sehwag, V.; Tramer, F.; Balle, B.; Ippolito, D.; Wallace, E. Extracting training data from diffusion models. In Proceedings of the USENIX Security Symposium, Anaheim, CA, USA, 9–11 August 2023. [Google Scholar]

- Wang, K.; Peng, Y.; Huang, H.; Hu, Y.; Li, S. Mining hard samples locally and globally for improved speech separation. In Proceedings of the ICASSP, Virtual, 7–13 May 2022. [Google Scholar]

- Peng, Z.; Wu, H.; Song, Z.; Xu, H.; Zhu, X.; He, J.; Liu, H.; Fan, Z. Emotalk: Speech-driven emotional disentanglement for 3d face animation. In Proceedings of the ICCV, Paris, France, 2–6 October 2023. [Google Scholar]

- Jin, X.; Li, B.; Xie, B.; Zhang, W.; Liu, J.; Li, Z.; Yang, T.; Zeng, W. Closed-Loop Unsupervised Representation Disentanglement with β-VAE Distillation and Diffusion Probabilistic Feedback. arXiv 2024, arXiv:2402.02346. [Google Scholar]

| Components | Layer Details |

|---|---|

| Content encoder | Linear layer, ReLU, Linear layer |

| Style encoder | Linear layer, ReLU, Linear layer, ReLU, Linear layer |

| Projector | Linear layer, ReLU, Linear layer |

| Style classifier | Linear layer |

| Model | Training | Training | Emb. Size | Emb. Size | DC ↓ |

|---|---|---|---|---|---|

| Params | Data | Content | Style | ||

| Labels | - | - | 27 | 27 | 0.269 |

| ResNet50 [61] | WikiArt | 2048 | 204 | ||

| CLIP [14] | WikiArt | 512 | 512 | ||

| DINO [40] | − | − | 616,225 | 768 | |

| GOYA (Ours) | Diffusion | 2048 | 2048 | 0.367 |

| Model | Training | Label | Num. | Emb. Size | Emb. Size | Accuracy | Accuracy |

|---|---|---|---|---|---|---|---|

| Data | Train | Content | Style | Genre | Style | ||

| Pre-trained | |||||||

| Gram Matrix [59,67] | - | - | - | 4096 | 4096 | ||

| ResNet50 [61] | - | - | - | 2048 | 2048 | ||

| CLIP [14] | - | - | - | 512 | 512 | ||

| DINO [40] | - | - | - | 616,225 | 768 | ||

| Trained on WikiArt | |||||||

| ResNet50 [61] (Genre) | WikiArt | Genre | 57,025 | 2048 | 2048 | ||

| ResNet50 [61] (Style) | WikiArt | Style | 57,025 | 2048 | 2048 | ||

| CLIP [14] (Genre) | WikiArt | Genre | 57,025 | 512 | 512 | ||

| CLIP [14] (Style) | WikiArt | Style | 57,025 | 512 | 512 | ||

| SimCLR [60] | WikiArt | - | 57,025 | 2048 | 2048 | ||

| SimSiam [66] | WikiArt | - | 57,025 | 2048 | 2048 | ||

| Trained on Diffusion generated | |||||||

| ResNet50 [61] (Movement) | Diffusion | Movement | 981,225 | 2048 | 2048 | ||

| CLIP [14] (Movement) | Diffusion | Movement | 981,225 | 512 | 512 | ||

| SimCLR [60] | Diffusion | - | 981,225 | 2048 | 2048 | ||

| GOYA (Ours) | Diffusion | - | 981,225 | 2048 | 2048 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, Y.; Nakashima, Y.; Garcia, N. GOYA: Leveraging Generative Art for Content-Style Disentanglement. J. Imaging 2024, 10, 156. https://doi.org/10.3390/jimaging10070156

Wu Y, Nakashima Y, Garcia N. GOYA: Leveraging Generative Art for Content-Style Disentanglement. Journal of Imaging. 2024; 10(7):156. https://doi.org/10.3390/jimaging10070156

Chicago/Turabian StyleWu, Yankun, Yuta Nakashima, and Noa Garcia. 2024. "GOYA: Leveraging Generative Art for Content-Style Disentanglement" Journal of Imaging 10, no. 7: 156. https://doi.org/10.3390/jimaging10070156

APA StyleWu, Y., Nakashima, Y., & Garcia, N. (2024). GOYA: Leveraging Generative Art for Content-Style Disentanglement. Journal of Imaging, 10(7), 156. https://doi.org/10.3390/jimaging10070156