Improved Osprey Optimization Algorithm with Multi-Strategy Fusion

Abstract

1. Introduction

2. OOA

3. IOOA

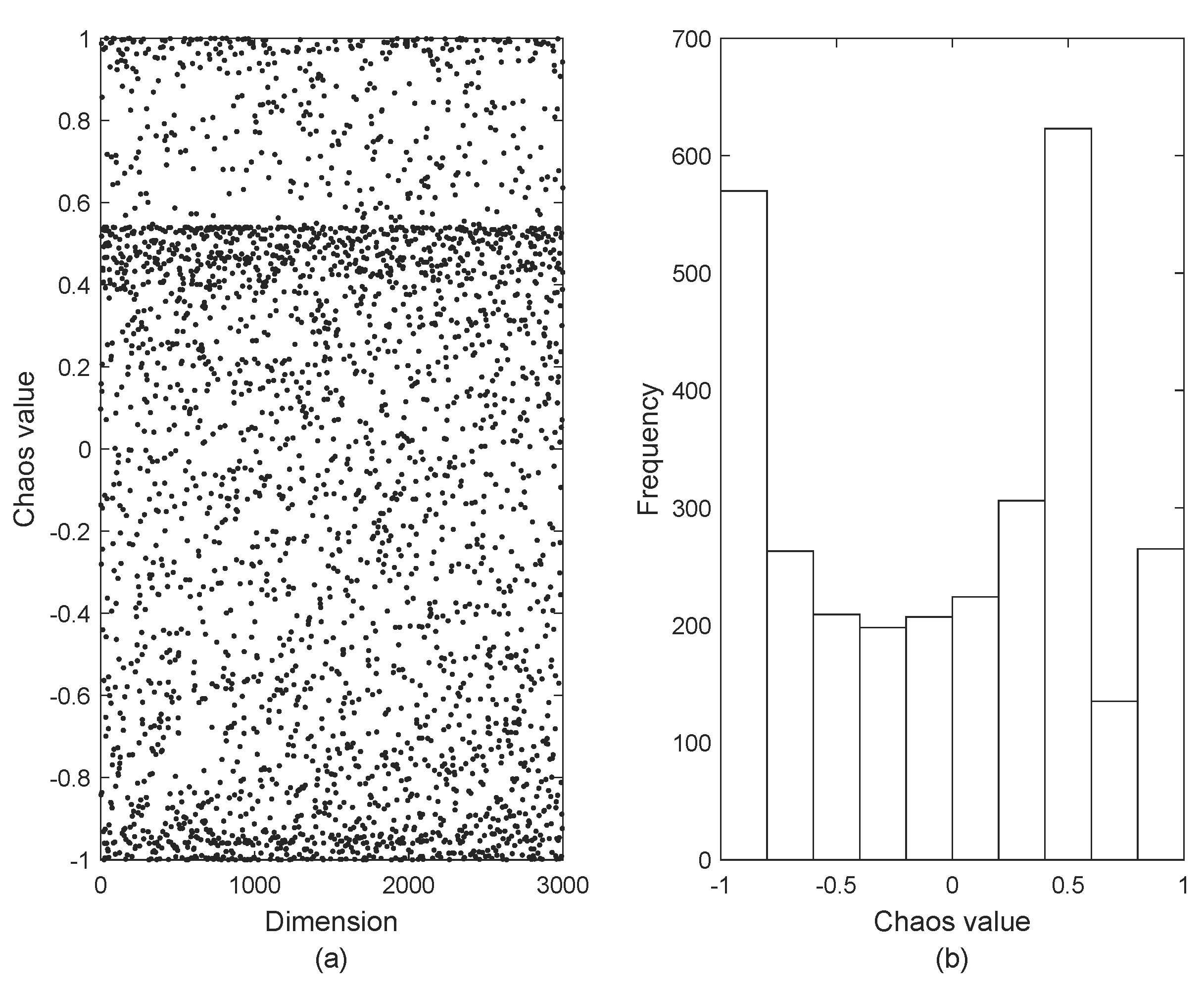

3.1. Fuch Chaotic Mapping

3.2. Adaptive Weighting Factor

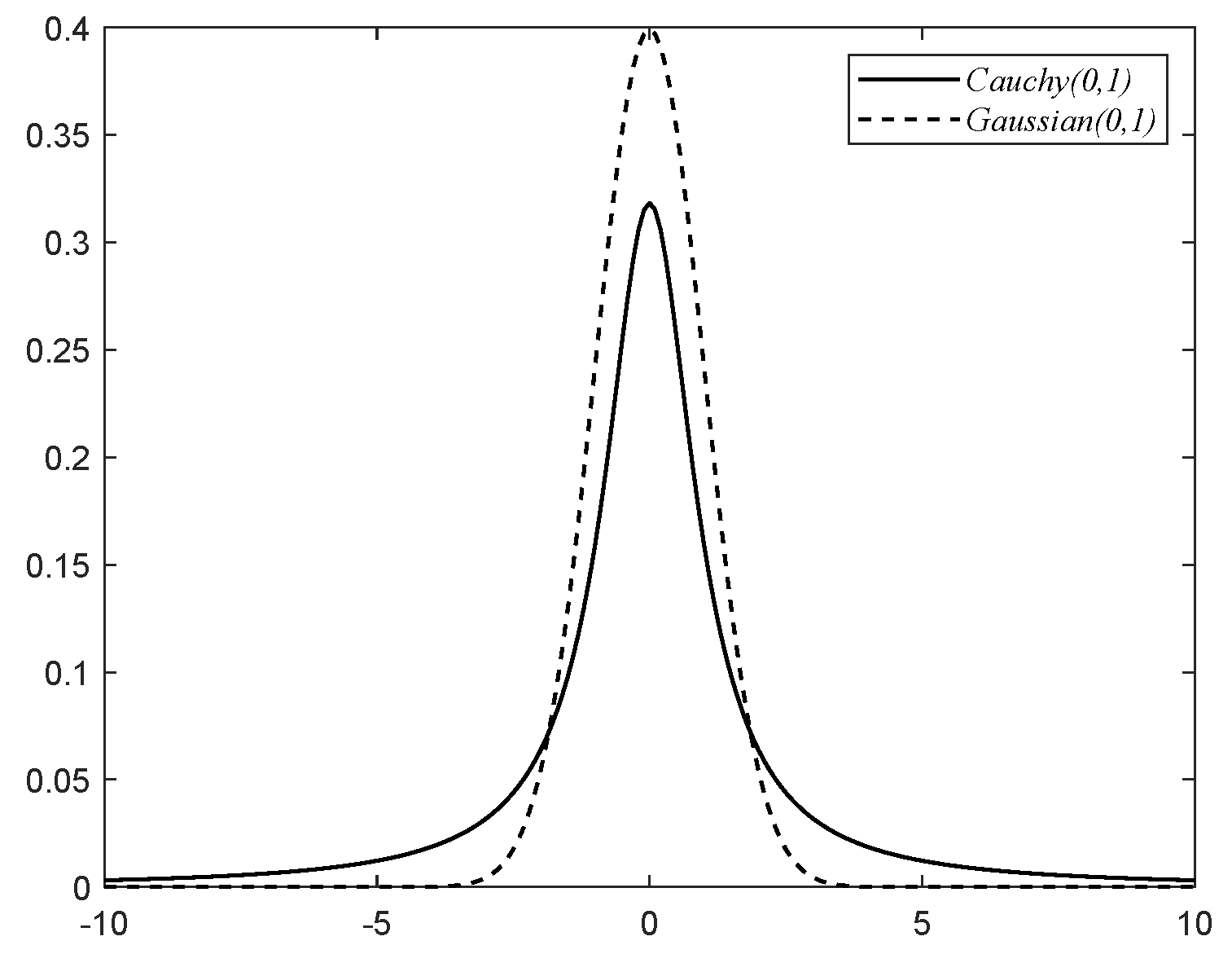

3.3. Cauchy Variation Strategy

3.4. Integration of the Sparrow Search Algorithm Warning Mechanism

3.5. Overall Flow of the IOOA

3.6. Time Complexity Analysis

4. Simulation Experiments and Result Analysis

4.1. Experimental Environment and Test Functions

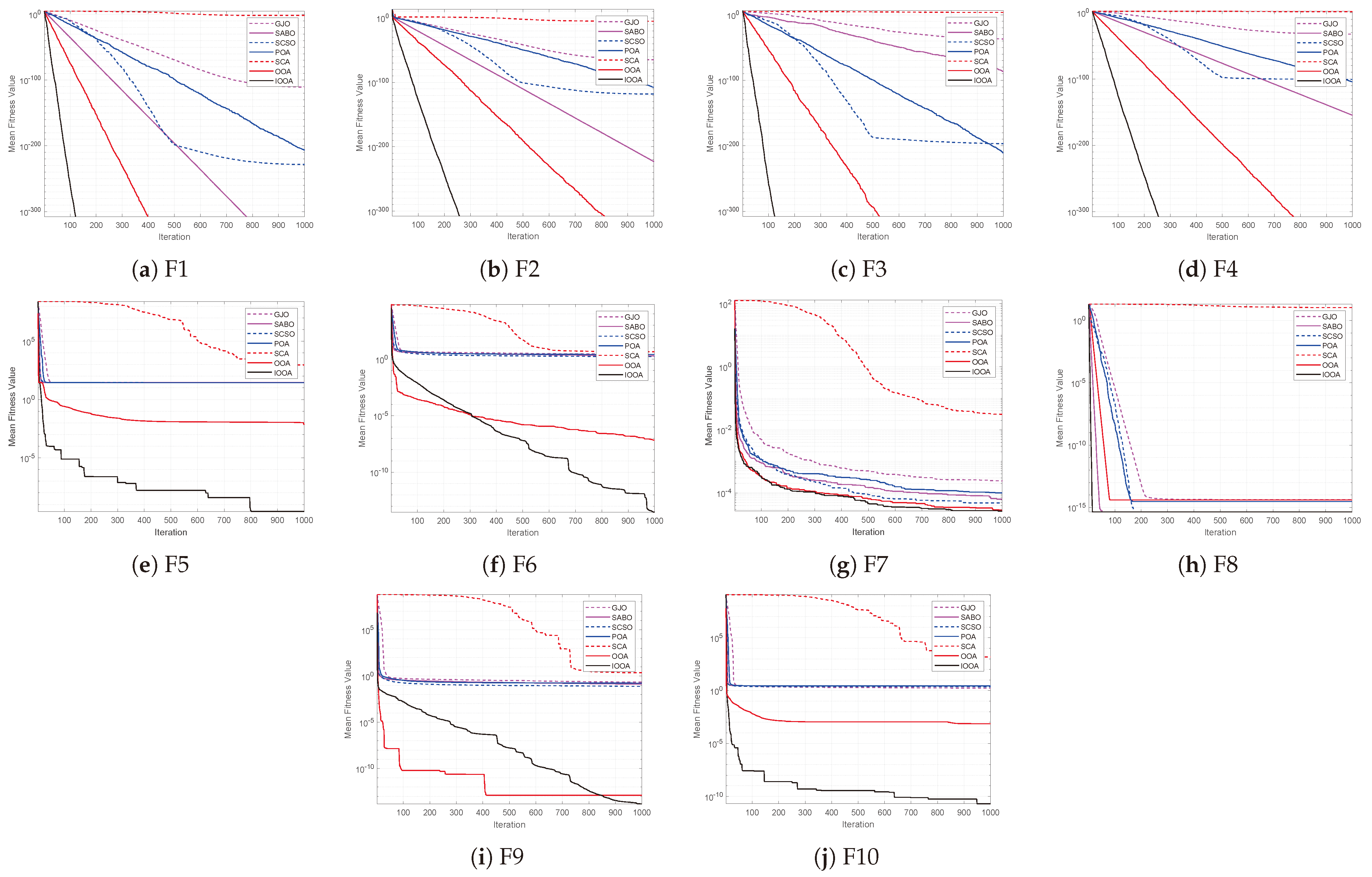

4.2. Convergence Curve Comparison Analysis

4.3. Optimization Accuracy Comparison

4.4. Wilcoxon Rank-Sum Test

4.5. The IOOA Solves CEC2017 Test Functions

5. Engineering Design Problem

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Function | Range | Min |
|---|---|---|
| F1 (Sphere) | [−100, 100] | 0 |
| F2 (Schwefel 2.22) | [−10, 10] | 0 |
| F3 (Schwefel 1.2) | [−100, 100] | 0 |
| F4 (Schwefel 2.21) | [−100, 100] | 0 |
| F5 (Rosenbrock) | [−30, 30] | 0 |
| F6 (Step) | [−100, 100] | 0 |
| F7 (Quartic) | [−1.28, 1.28] | 0 |
| F8 (Ackley) | [−32, 32] | 0 |
| F9 (Penalized 1.1) | [−50, 50] | 0 |
| F10 (Penalized 1.2) | [−50, 50] | 0 |
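Three of the benchmark functions in the table above can be sketched in a few lines. The definitions below are the commonly used textbook forms of Sphere, Rosenbrock and Ackley; they are assumed, not confirmed, to match the paper's F1, F5 and F8, since the formulas themselves are not reproduced in this section. The function names and the `BOUNDS` dictionary are illustrative only.

```python
import math


def sphere(x):
    """F1 (Sphere), standard form: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def rosenbrock(x):
    """F5 (Rosenbrock), standard form: minimum 0 at x = (1, ..., 1)."""
    return sum(
        100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
        for i in range(len(x) - 1)
    )


def ackley(x):
    """F8 (Ackley), standard form: minimum 0 at the origin, up to rounding."""
    n = len(x)
    mean_sq = sum(v * v for v in x) / n
    mean_cos = sum(math.cos(2.0 * math.pi * v) for v in x) / n
    return (
        -20.0 * math.exp(-0.2 * math.sqrt(mean_sq))
        - math.exp(mean_cos)
        + 20.0
        + math.e
    )


# Per-dimension search ranges copied from the table, as (lower, upper).
BOUNDS = {
    "sphere": (-100.0, 100.0),
    "rosenbrock": (-30.0, 30.0),
    "ackley": (-32.0, 32.0),
}

if __name__ == "__main__":
    dim = 30  # one of the dimensions used in the Wilcoxon table (D = 30)
    print(sphere([0.0] * dim), rosenbrock([1.0] * dim), ackley([0.0] * dim))
```

Evaluating each function at its known minimizer is a quick sanity check that the tabulated minimum of 0 is reproduced before any optimizer is run on it.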

| Function | Range | Min |
|---|---|---|
| F11 (CEC-1) | [−100, 100] | 100 |
| F12 (CEC-3) | [−100, 100] | 300 |
| F13 (CEC-4) | [−100, 100] | 400 |
| F14 (CEC-8) | [−100, 100] | 800 |
| F15 (CEC-11) | [−100, 100] | 1100 |
| F16 (CEC-12) | [−100, 100] | 1200 |
| F17 (CEC-13) | [−100, 100] | 1300 |
| F18 (CEC-15) | [−100, 100] | 1500 |
| F19 (CEC-19) | [−100, 100] | 1900 |
| F20 (CEC-22) | [−100, 100] | 2200 |
| F21 (CEC-25) | [−100, 100] | 2500 |
| F22 (CEC-26) | [−100, 100] | 2600 |
| F23 (CEC-28) | [−100, 100] | 2800 |
| F24 (CEC-29) | [−100, 100] | 2900 |
| F25 (CEC-30) | [−100, 100] | 3000 |

| Algorithm | Parameters |
|---|---|
| IOOA | , I = 1 or 2, |
| GJO | , , , , |
| SABO | v = 1 or 2, |
| SCSO | |
| POA | I = 1 or 2, R = 0.2 |
| SCA | a = 2, , , |
| OOA | , I = 1 or 2 |

| Function | Index | GJO | SABO | SCSO | POA | SCA | OOA | IOOA |
|---|---|---|---|---|---|---|---|---|
| F1 | Mean | 0 | 0 | 0 | | | | |
| F1 | Std | 0 | 0 | 0 | 0 | 0 | | |
| F1 | Rank | 6 | 2 | 4 | 5 | 7 | 2 | 2 |
| F2 | Mean | 0 | 0 | | | | | |
| F2 | Std | 0 | 0 | 0 | | | | |
| F2 | Rank | 6 | 3 | 4 | 5 | 7 | 1.5 | 1.5 |
| F3 | Mean | 0 | 0 | | | | | |
| F3 | Std | 0 | 0 | 0 | 0 | | | |
| F3 | Rank | 6 | 5 | 4 | 3 | 7 | 1.5 | 1.5 |
| F4 | Mean | 0 | 0 | | | | | |
| F4 | Std | 0 | 0 | | | | | |
| F4 | Rank | 6 | 3 | 5 | 4 | 7 | 1.5 | 1.5 |
| F5 | Mean | | | | | | | |
| F5 | Std | | | | | | | |
| F5 | Rank | 4 | 6 | 5 | 3 | 7 | 2 | 1 |
| F6 | Mean | 2.62 | 1.97 | 1.64 | 2.59 | 4.55 | | |
| F6 | Std | | | | | | | |
| F6 | Rank | 5 | 4 | 3 | 6 | 7 | 2 | 1 |
| F7 | Mean | | | | | | | |
| F7 | Std | | | | | | | |
| F7 | Rank | 6 | 4 | 3 | 5 | 7 | 2 | 1 |
| F8 | Mean | | | | | | | |
| F8 | Std | 0 | 0 | 9.57 | 0 | 0 | | |
| F8 | Rank | 6 | 2 | 2 | 4 | 7 | 5 | 2 |
| F9 | Mean | 2.31 | | | | | | |
| F9 | Std | 2.65 | | | | | | |
| F9 | Rank | 6 | 4 | 3 | 5 | 7 | 1 | 2 |
| F10 | Mean | 1.66 | 2.76 | 2.31 | 2.74 | | | |
| F10 | Std | | | | | | | |
| F10 | Rank | 3 | 6 | 4 | 5 | 7 | 2 | 1 |
| Rank-Count | | 54 | 39 | 37 | 45 | 70 | 20.5 | 14.5 |
| Ave-Rank | | 5.4 | 3.9 | 3.7 | 4.5 | 7.0 | 2.05 | 1.45 |
| Overall-Rank | | 6 | 4 | 3 | 5 | 7 | 2 | 1 |
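The Rank, Ave-Rank and Overall-Rank rows above follow a simple aggregation that can be sketched as below, assuming that tied algorithms share the mean of the positions they occupy (consistent with the 1.5/1.5 and 2/2/2 entries) and that Ave-Rank is Rank-Count divided by the ten functions. The helper `average_ranks` and the input values in `f1_like` are hypothetical; only the Rank-Count numbers are copied from the table.

```python
def average_ranks(values):
    """Rank ascending (1 = best); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the group while the next value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + 1 + end + 1) / 2  # mean of 1-based positions pos+1 .. end+1
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks


ALGORITHMS = ["GJO", "SABO", "SCSO", "POA", "SCA", "OOA", "IOOA"]

# Hypothetical scores with a three-way tie for best, chosen only to
# reproduce the F1 rank pattern 6, 2, 4, 5, 7, 2, 2.
f1_like = [3.0, 0.0, 1.0, 2.0, 4.0, 0.0, 0.0]

# Rank-Count row copied from the table above (sum of ranks over F1-F10).
rank_count = [54, 39, 37, 45, 70, 20.5, 14.5]
NUM_FUNCTIONS = 10

ave_rank = [c / NUM_FUNCTIONS for c in rank_count]
overall_rank = average_ranks(ave_rank)

if __name__ == "__main__":
    print(dict(zip(ALGORITHMS, average_ranks(f1_like))))
    print(dict(zip(ALGORITHMS, ave_rank)))
    print(dict(zip(ALGORITHMS, overall_rank)))
```

Sharing the mean position among tied algorithms keeps the sum of ranks for each function at 28 whether or not ties occur, so Rank-Count totals stay comparable across functions.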

| Function | Index | GJO | SABO | SCSO | POA | SCA | OOA | IOOA |
|---|---|---|---|---|---|---|---|---|
| F1 | Mean | 0 | 0 | 0 | | | | |
| F1 | Std | 0 | 0 | 0 | 0 | 0 | | |
| F1 | Rank | 6 | 2 | 4 | 5 | 7 | 2 | 2 |
| F2 | Mean | 0 | 0 | | | | | |
| F2 | Std | 0 | 0 | 0 | | | | |
| F2 | Rank | 6 | 3 | 4 | 5 | 7 | 1.5 | 1.5 |
| F3 | Mean | 0 | 0 | | | | | |
| F3 | Std | 0 | 0 | 0 | 0 | | | |
| F3 | Rank | 6 | 5 | 4 | 3 | 7 | 1.5 | 1.5 |
| F4 | Mean | 0 | 0 | | | | | |
| F4 | Std | 7.05 | 0 | 0 | | | | |
| F4 | Rank | 6 | 3 | 5 | 4 | 7 | 1.5 | 1.5 |
| F5 | Mean | | | | | | | |
| F5 | Std | | | | | | | |
| F5 | Rank | 3 | 5 | 6 | 4 | 7 | 2 | 1 |
| F6 | Mean | 6.02 | 5.32 | 4.80 | 5.32 | | | |
| F6 | Std | | | | | | | |
| F6 | Rank | 6 | 4 | 3 | 5 | 7 | 2 | 1 |
| F7 | Mean | | | | | | | |
| F7 | Std | | | | | | | |
| F7 | Rank | 6 | 3 | 4 | 5 | 7 | 2 | 1 |
| F8 | Mean | | | | | | | |
| F8 | Std | 0 | 0 | 7.26 | 0 | 0 | | |
| F8 | Rank | 6 | 2 | 2 | 4 | 7 | 5 | 2 |
| F9 | Mean | | | | | | | |
| F9 | Std | | | | | | | |
| F9 | Rank | 6 | 5 | 3 | 4 | 7 | 2 | 1 |
| F10 | Mean | 3.49 | 4.97 | 4.62 | 4.91 | | | |
| F10 | Std | | | | | | | |
| F10 | Rank | 3 | 6 | 4 | 5 | 7 | 2 | 1 |
| Rank-Count | | 54 | 38 | 39 | 44 | 70 | 21.5 | 13.5 |
| Ave-Rank | | 5.4 | 3.8 | 3.9 | 4.4 | 7.0 | 2.15 | 1.35 |
| Overall-Rank | | 6 | 3 | 4 | 5 | 7 | 2 | 1 |

| Function | Index | GJO | SABO | SCSO | POA | SCA | OOA | IOOA |
|---|---|---|---|---|---|---|---|---|
| F1 | Mean | 0 | 0 | 0 | | | | |
| F1 | Std | 0 | 0 | 0 | 0 | 0 | | |
| F1 | Rank | 6 | 2 | 4 | 5 | 7 | 2 | 2 |
| F2 | Mean | 2.43 | 0 | 0 | | | | |
| F2 | Std | 0 | 3.11 | 0 | 0 | | | |
| F2 | Rank | 6 | 3 | 5 | 4 | 7 | 1.5 | 1.5 |
| F3 | Mean | 0 | 0 | | | | | |
| F3 | Std | 0 | 0 | 0 | 0 | | | |
| F3 | Rank | 6 | 5 | 4 | 3 | 7 | 1.5 | 1.5 |
| F4 | Mean | 1.22 | 0 | 0 | | | | |
| F4 | Std | 4.26 | 2.97 | 0 | 0 | | | |
| F4 | Rank | 6 | 3 | 5 | 4 | 7 | 1.5 | 1.5 |
| F5 | Mean | | | | | | | |
| F5 | Std | | | | | | | |
| F5 | Rank | 4 | 5 | 6 | 3 | 7 | 2 | 1 |
| F6 | Mean | | | | | | | |
| F6 | Std | 1.47 | 1.15 | | | | | |
| F6 | Rank | 6 | 5 | 3 | 4 | 7 | 2 | 1 |
| F7 | Mean | | | | | | | |
| F7 | Std | | | | | | | |
| F7 | Rank | 6 | 3 | 4 | 5 | 7 | 2 | 1 |
| F8 | Mean | | | | | | | |
| F8 | Std | 0 | 0 | 3.28 | 0 | 0 | | |
| F8 | Rank | 6 | 2 | 2 | 4 | 7 | 5 | 2 |
| F9 | Mean | | | | | | | |
| F9 | Std | | | | | | | |
| F9 | Rank | 6 | 5 | 3 | 4 | 7 | 2 | 1 |
| F10 | Mean | 8.54 | 9.95 | 9.66 | 9.95 | | | |
| F10 | Std | | | | | | | |
| F10 | Rank | 3 | 6 | 4 | 5 | 7 | 2 | 1 |
| Rank-Count | | 55 | 39 | 40 | 41 | 70 | 21.5 | 13.5 |
| Ave-Rank | | 5.5 | 3.9 | 4.0 | 4.1 | 7.0 | 2.15 | 1.35 |
| Overall-Rank | | 6 | 3 | 4 | 5 | 7 | 2 | 1 |

| dim | Function | GJO | SABO | SCSO | POA | SCA | OOA |
|---|---|---|---|---|---|---|---|
| D = 30 | F1 | | NAN | | | | NAN |
| D = 30 | F2 | | | | | | NAN |
| D = 30 | F3 | | | | | | NAN |
| D = 30 | F4 | | | | | | NAN |
| D = 30 | F5 | | | | | | |
| D = 30 | F6 | | | | | | |
| D = 30 | F7 | | | | | | |
| D = 30 | F8 | | NAN | NAN | | | |
| D = 30 | F9 | | | | | | |
| D = 30 | F10 | | | | | | |
| D = 30 | +/=/− | 10/0/0 | 8/2/0 | 9/1/0 | 10/0/0 | 10/0/0 | 6/4/0 |
| D = 50 | F1 | | NAN | | | | NAN |
| D = 50 | F2 | | | | | | NAN |
| D = 50 | F3 | | | | | | NAN |
| D = 50 | F4 | | | | | | NAN |
| D = 50 | F5 | | | | | | |
| D = 50 | F6 | | | | | | |
| D = 50 | F7 | | | | | | |
| D = 50 | F8 | | NAN | NAN | | | |
| D = 50 | F9 | | | | | | |
| D = 50 | F10 | | | | | | |
| D = 50 | +/=/− | 10/0/0 | 8/2/0 | 9/1/0 | 10/0/0 | 10/0/0 | 6/4/0 |
| D = 100 | F1 | | NAN | | | | NAN |
| D = 100 | F2 | | | | | | NAN |
| D = 100 | F3 | | | | | | NAN |
| D = 100 | F4 | | | | | | NAN |
| D = 100 | F5 | | | | | | |
| D = 100 | F6 | | | | | | |
| D = 100 | F7 | | | | | | |
| D = 100 | F8 | | NAN | NAN | | | |
| D = 100 | F9 | | | | | | |
| D = 100 | F10 | | | | | | |
| D = 100 | +/=/− | 10/0/0 | 8/2/0 | 9/1/0 | 10/0/0 | 10/0/0 | 6/4/0 |

| Function | Index | GJO | SABO | SCSO | POA | SCA | OOA | IOOA |
|---|---|---|---|---|---|---|---|---|
| F11 | Mean | | | | | | | |
| F11 | Std | | | | | | | |
| F11 | Rank | 4 | 3 | 2 | 5 | 6 | 7 | 1 |
| F12 | Mean | | | | | | | |
| F12 | Std | | | | | | | |
| F12 | Rank | 5 | 4 | 3 | 1 | 6 | 7 | 2 |
| F13 | Mean | | | | | | | |
| F13 | Std | | | | | | | |
| F13 | Rank | 3 | 4 | 2 | 5 | 6 | 7 | 1 |
| F14 | Mean | | | | | | | |
| F14 | Std | | | | | | | |
| F14 | Rank | 2 | 5 | 4 | 3 | 6 | 7 | 1 |
| F15 | Mean | | | | | | | |
| F15 | Std | | | | | | | |
| F15 | Rank | 4 | 6 | 3 | 2 | 5 | 7 | 1 |
| F16 | Mean | | | | | | | |
| F16 | Std | | | | | | | |
| F16 | Rank | 4 | 3 | 2 | 5 | 6 | 7 | 1 |
| F17 | Mean | | | | | | | |
| F17 | Std | | | | | | | |
| F17 | Rank | 5 | 4 | 3 | 2 | 6 | 7 | 1 |
| F18 | Mean | | | | | | | |
| F18 | Std | | | | | | | |
| F18 | Rank | 5 | 4 | 3 | 2 | 6 | 7 | 1 |
| F19 | Mean | | | | | | | |
| F19 | Std | | | | | | | |
| F19 | Rank | 5 | 4 | 3 | 2 | 6 | 7 | 1 |
| F20 | Mean | | | | | | | |
| F20 | Std | | | | | | | |
| F20 | Rank | 5 | 2 | 3 | 4 | 7 | 6 | 1 |
| F21 | Mean | | | | | | | |
| F21 | Std | | | | | | | |
| F21 | Rank | 3 | 5 | 2 | 4 | 6 | 7 | 1 |
| F22 | Mean | | | | | | | |
| F22 | Std | | | | | | | |
| F22 | Rank | 2 | 6 | 3 | 4 | 5 | 7 | 1 |
| F23 | Mean | | | | | | | |
| F23 | Std | | | | | | | |
| F23 | Rank | 3 | 5 | 2 | 4 | 6 | 7 | 1 |
| F24 | Mean | | | | | | | |
| F24 | Std | | | | | | | |
| F24 | Rank | 2 | 6 | 4 | 3 | 5 | 7 | 1 |
| F25 | Mean | | | | | | | |
| F25 | Std | | | | | | | |
| F25 | Rank | 5 | 4 | 3 | 2 | 6 | 7 | 1 |
| Rank-Count | | 57 | 65 | 42 | 48 | 88 | 104 | 16 |
| Ave-Rank | | 3.80 | 4.33 | 2.80 | 3.20 | 5.87 | 6.93 | 1.07 |
| Overall-Rank | | 4 | 5 | 2 | 3 | 6 | 7 | 1 |

| Algorithm | Parameters | | Best |
|---|---|---|---|
| GJO | 0.792652 | 0.397171 | 263.913033 |
| SABO | 0.782390 | 0.427893 | 264.082722 |
| SCSO | 0.784455 | 0.420320 | 263.909287 |
| POA | 0.411249 | 0.411249 | 263.896682 |
| SCA | 0.796310 | 0.391495 | 264.379949 |
| OOA | 0.747933 | 0.537742 | 265.321646 |
| IOOA | 0.788764 | 0.407998 | 263.895849 |
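The Best column can be related to the two parameter columns with a short check. This section does not name the engineering problem, so the sketch below rests on an assumption: that it is the classical three-bar truss design, whose objective is usually written as (2√2·x1 + x2)·l with bar length l = 100 cm. Under that assumption the GJO and IOOA rows reproduce their tabulated Best values to within about 10⁻³. The function name `truss_weight` and the constant `BAR_LENGTH_CM` are illustrative.

```python
import math

BAR_LENGTH_CM = 100.0  # assumed bar length l of the classical formulation


def truss_weight(x1, x2, length=BAR_LENGTH_CM):
    """Assumed three-bar truss objective: (2*sqrt(2)*x1 + x2) * l."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * length


# (x1, x2, tabulated Best) copied from two rows of the table above.
ROWS = {
    "GJO": (0.792652, 0.397171, 263.913033),
    "IOOA": (0.788764, 0.407998, 263.895849),
}

if __name__ == "__main__":
    for name, (x1, x2, best) in ROWS.items():
        # Print the recomputed objective next to the tabulated value.
        print(name, round(truss_weight(x1, x2), 4), best)
```

The check covers only the objective; the stress constraints of the classical problem are not evaluated here.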


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lei, W.; Han, J.; Wu, X. Improved Osprey Optimization Algorithm with Multi-Strategy Fusion. Biomimetics 2024, 9, 670. https://doi.org/10.3390/biomimetics9110670
