Assessing Maize Yield Spatiotemporal Variability Using Unmanned Aerial Vehicles and Machine Learning

Abstract

1. Introduction

2. Materials and Methods

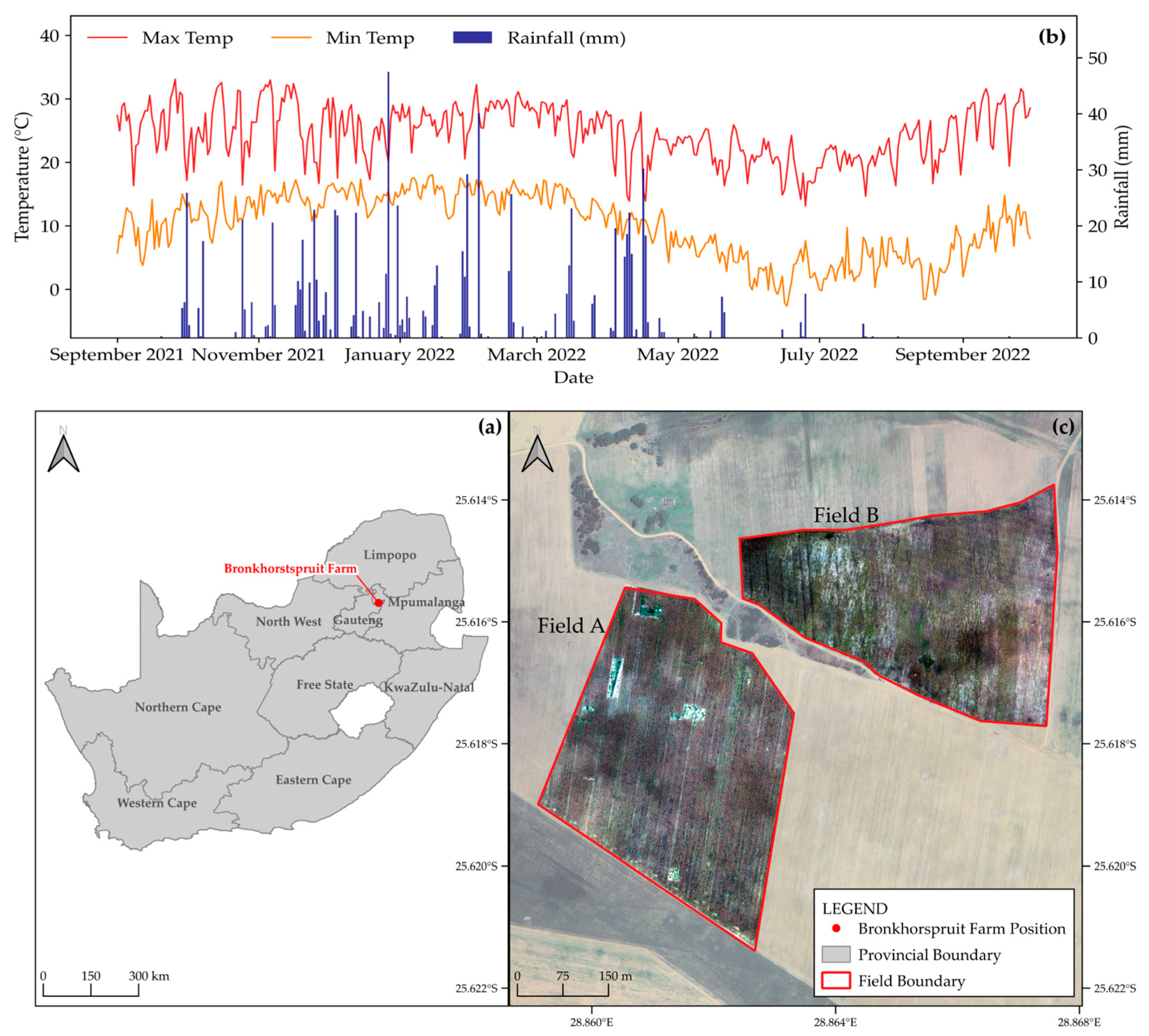

2.1. Study Site

2.2. Field Yield Measurements

2.3. Remote Sensing Imagery and Preprocessing

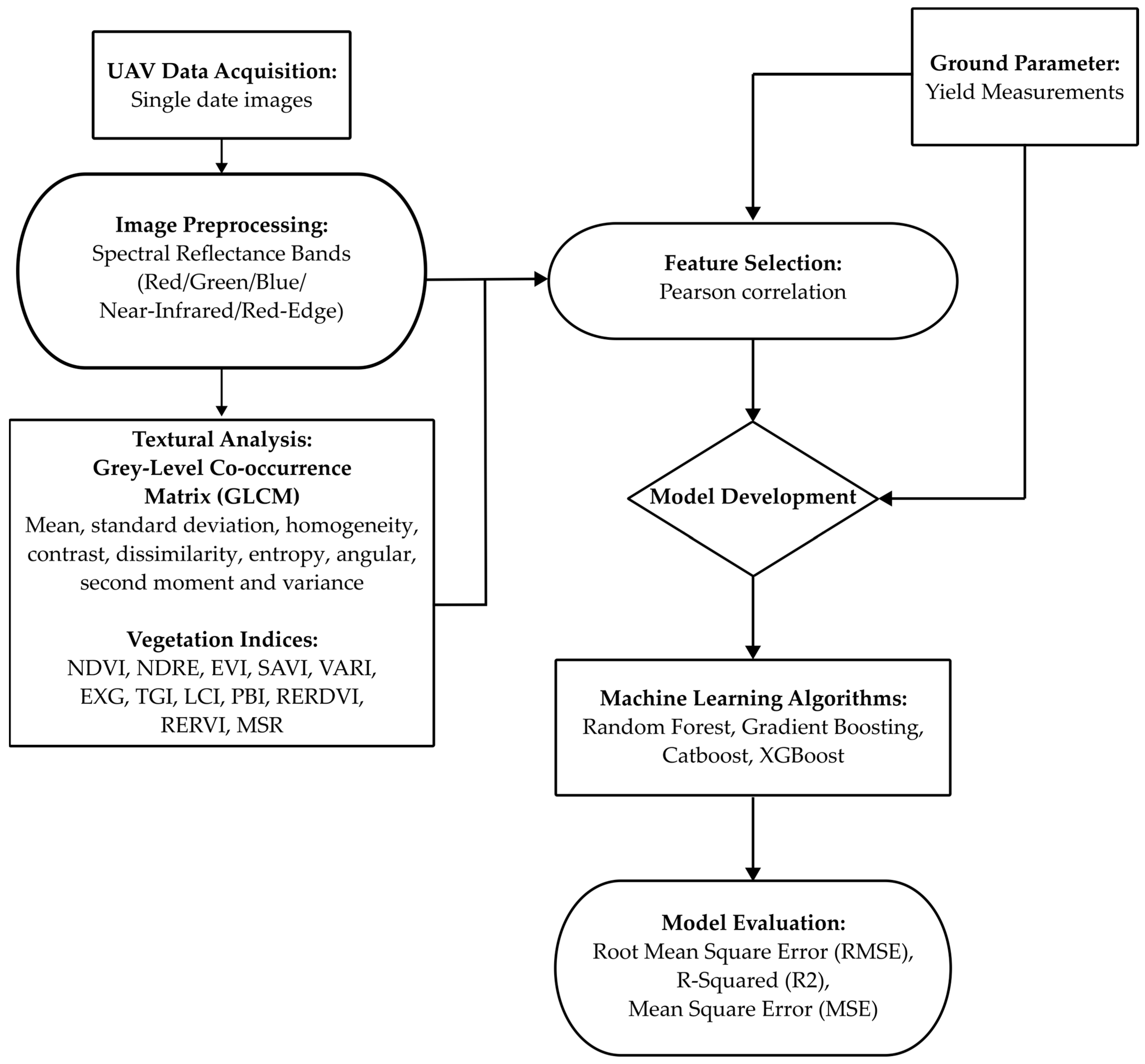

2.4. An Overview of the Methodology

2.4.1. Textural Properties
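The texture features named in the feature table (mean, homogeneity, dissimilarity, contrast, entropy, angular second moment, variance) are statistics of a grey-level co-occurrence matrix (GLCM) in the sense of Haralick et al. [60]. The sketch below is a minimal pure-Python illustration using commonly used definitions of these statistics. It is not the R `glcm` package cited in the reference list, and the function names `glcm` and `texture_features`, the single horizontal offset, and the toy 2 × 2 image are all assumptions made for illustration.

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix p(i, j) for one pixel offset.

    image  : list of rows of integer grey levels in [0, levels)
    levels : number of distinct grey levels (assumed already quantized)
    """
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count each pair in both directions
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Common GLCM statistics computed from a normalized matrix p."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)
    return {
        "mean": mean,
        "variance": sum(v * (i - mean) ** 2 for i, _, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "dissimilarity": sum(v * abs(i - j) for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "second_moment": sum(v * v for _, _, v in cells),
    }


# Hypothetical 2 x 2 checkerboard with two grey levels.
checker = texture_features(glcm([[0, 1], [1, 0]], levels=2))
```

In practice these statistics are computed in a moving window over each spectral band, so every pixel receives its own texture value; the window size and offset are tuning choices that this sketch does not address.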

2.4.2. Spectral Vegetation Indices

| Features | Formula | References |
|---|---|---|
| (i, j)th entry in normalized grey-tone spatial dependence matrix | | Haralick et al. [60] |
| The distinct number of grey levels in the image | | |
| Mean | | |
| Homogeneity | | |
| Dissimilarity | | |
| Contrast | | |
| Entropy | | |
| Angular second moment | | |
| Variance | | |
| Leaf chlorophyll index (LCI) | | Datt [61] |
| Excess green index (EGI/EXG) | | Woebbecke et al. [62] |
| Modified simple ratio (MSR) | | Chen [63] |
| Red-edge re-normalized difference vegetation index (RERDVI) | | Cao et al. [64] |
| Plant biochemical index (PBI) | | Rao et al. [65] |
| Red-edge ratio vegetation index (RERVI) | | Jasper et al. [66] |
| Visible atmospheric resistant index (VARI) | | Gitelson et al. [67] |
| Triangular greenness index (TGI) | | Hunt Jr et al. [68] |
| Normalized difference vegetation index (NDVI) | | Tucker [69] |
| Enhanced vegetation index (EVI) | | Huete et al. [70] |
| Soil-adjusted vegetation index (SAVI) | | Huete [71] |
| Normalized difference red-edge index (NDRE) | | Barnes et al. [72] |
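As a hedged illustration of how several of the indices listed above are usually computed, the sketch below implements widely used textbook forms of NDVI, NDRE, SAVI, EVI, VARI and MSR from per-band reflectance. The exact expressions and coefficients used in this study are not recoverable from the table, so the soil factor of 0.5, the EVI coefficients, the function names, and the sample reflectance values are assumptions.

```python
from math import sqrt


def ndvi(nir, red):
    """Normalized difference vegetation index."""
    return (nir - red) / (nir + red)


def ndre(nir, red_edge):
    """Normalized difference red-edge index."""
    return (nir - red_edge) / (nir + red_edge)


def savi(nir, red, soil_factor=0.5):
    """Soil-adjusted vegetation index; soil_factor=0.5 is the usual default."""
    return (1 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def evi(nir, red, blue):
    """Enhanced vegetation index with the standard MODIS coefficients."""
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


def vari(green, red, blue):
    """Visible atmospherically resistant index (visible bands only)."""
    return (green - red) / (green + red - blue)


def msr(nir, red):
    """Modified simple ratio, built on the simple ratio NIR / red."""
    ratio = nir / red
    return (ratio - 1) / (sqrt(ratio) + 1)


# Hypothetical reflectance values for one vegetated pixel (range 0 to 1).
pixel = {"blue": 0.05, "green": 0.15, "red": 0.10, "red_edge": 0.30, "nir": 0.50}

indices = {
    "NDVI": ndvi(pixel["nir"], pixel["red"]),
    "NDRE": ndre(pixel["nir"], pixel["red_edge"]),
    "SAVI": savi(pixel["nir"], pixel["red"]),
    "EVI": evi(pixel["nir"], pixel["red"], pixel["blue"]),
    "VARI": vari(pixel["green"], pixel["red"], pixel["blue"]),
    "MSR": msr(pixel["nir"], pixel["red"]),
}
```

The functions take plain floats here; because they use only arithmetic operators (apart from `sqrt` in `msr`), the same expressions carry over to whole-band arrays with an array library.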

2.5. Feature Selection

2.6. Machine Learning Algorithms

2.7. Accuracy Assessment

3. Results

3.1. Correlation Analysis of Maize Yield and UAV Data for Feature Selection

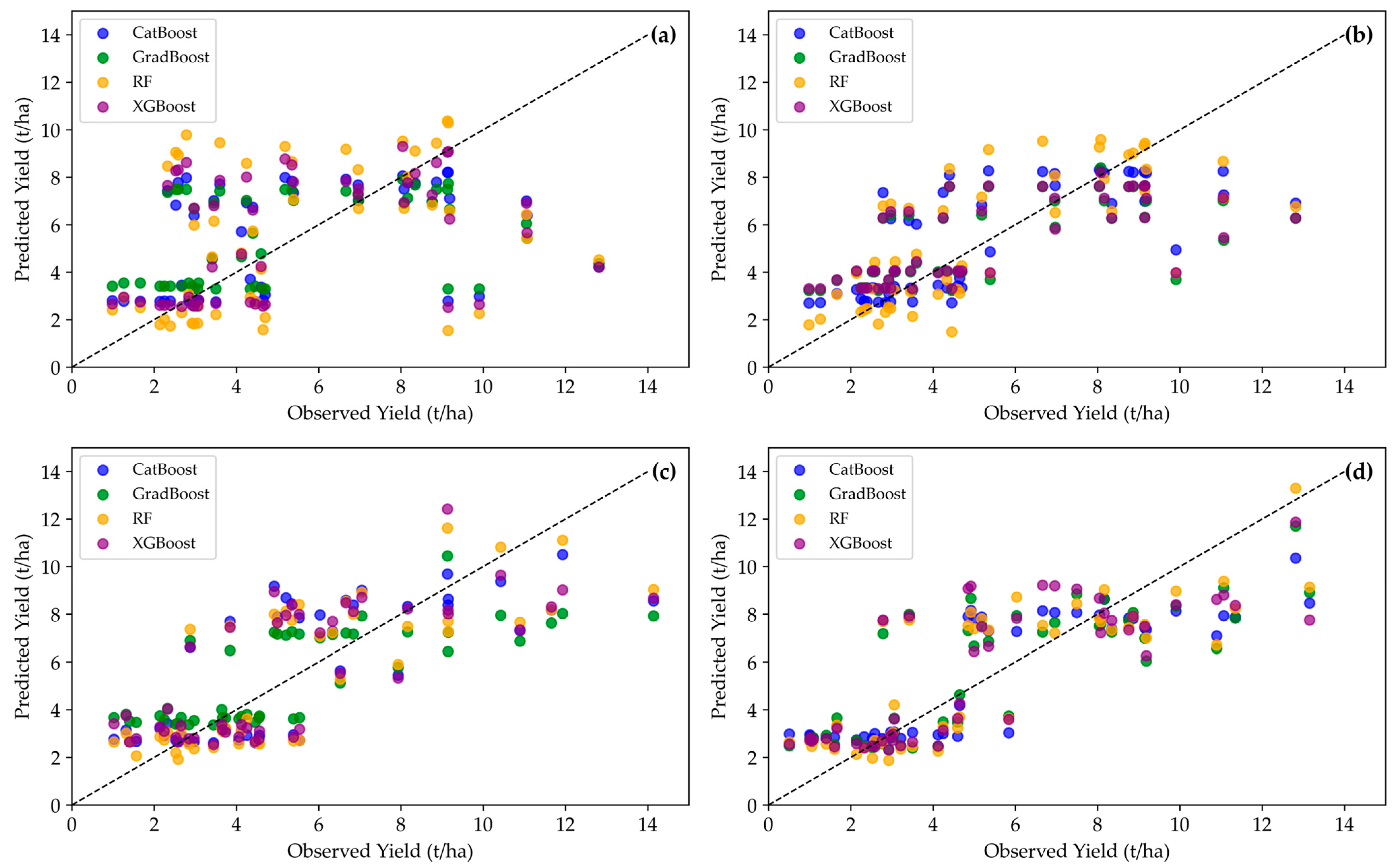

3.2. Development of Maize Yield Prediction Models

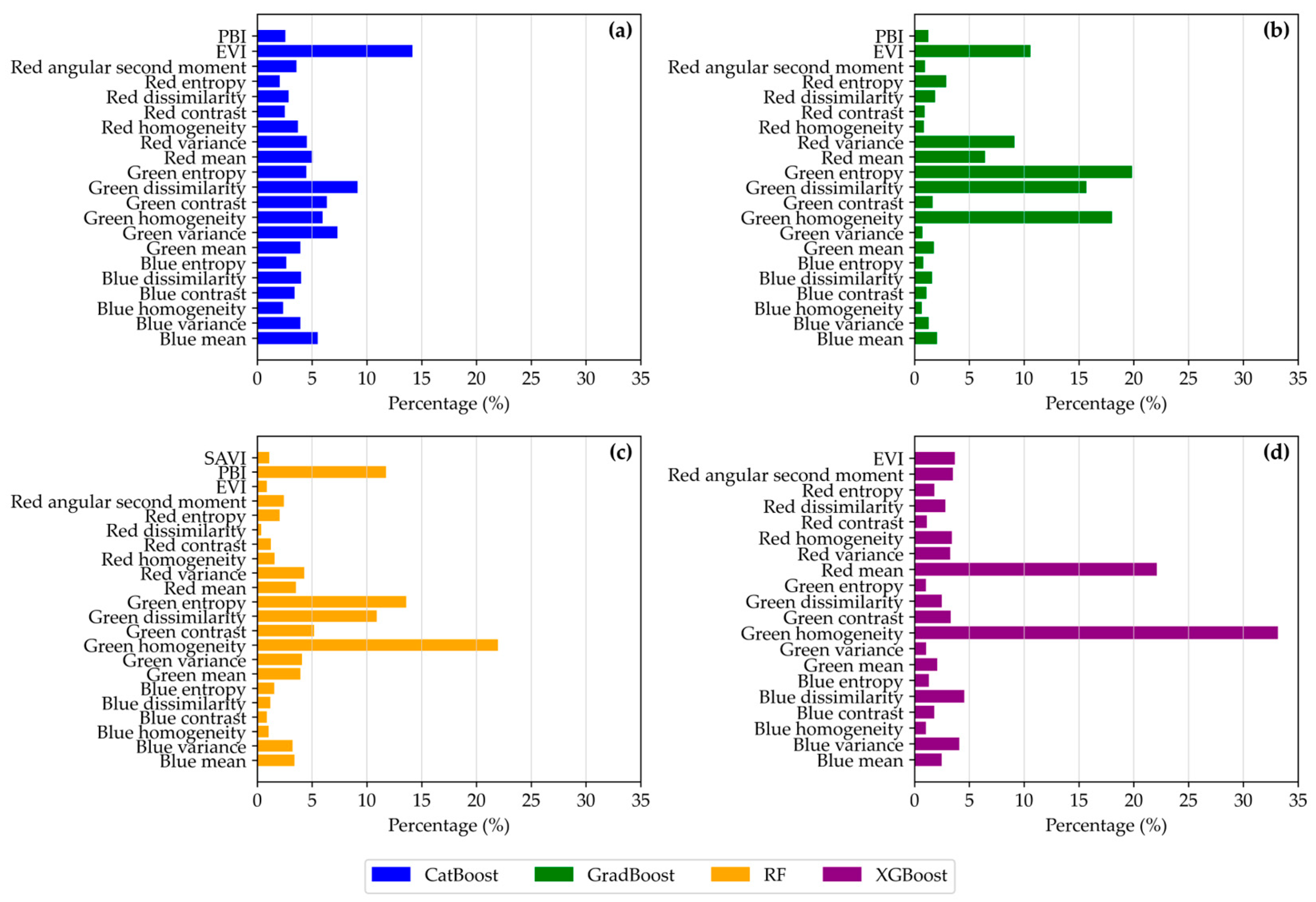

3.3. Input Features of Importance for Maize Yield Prediction

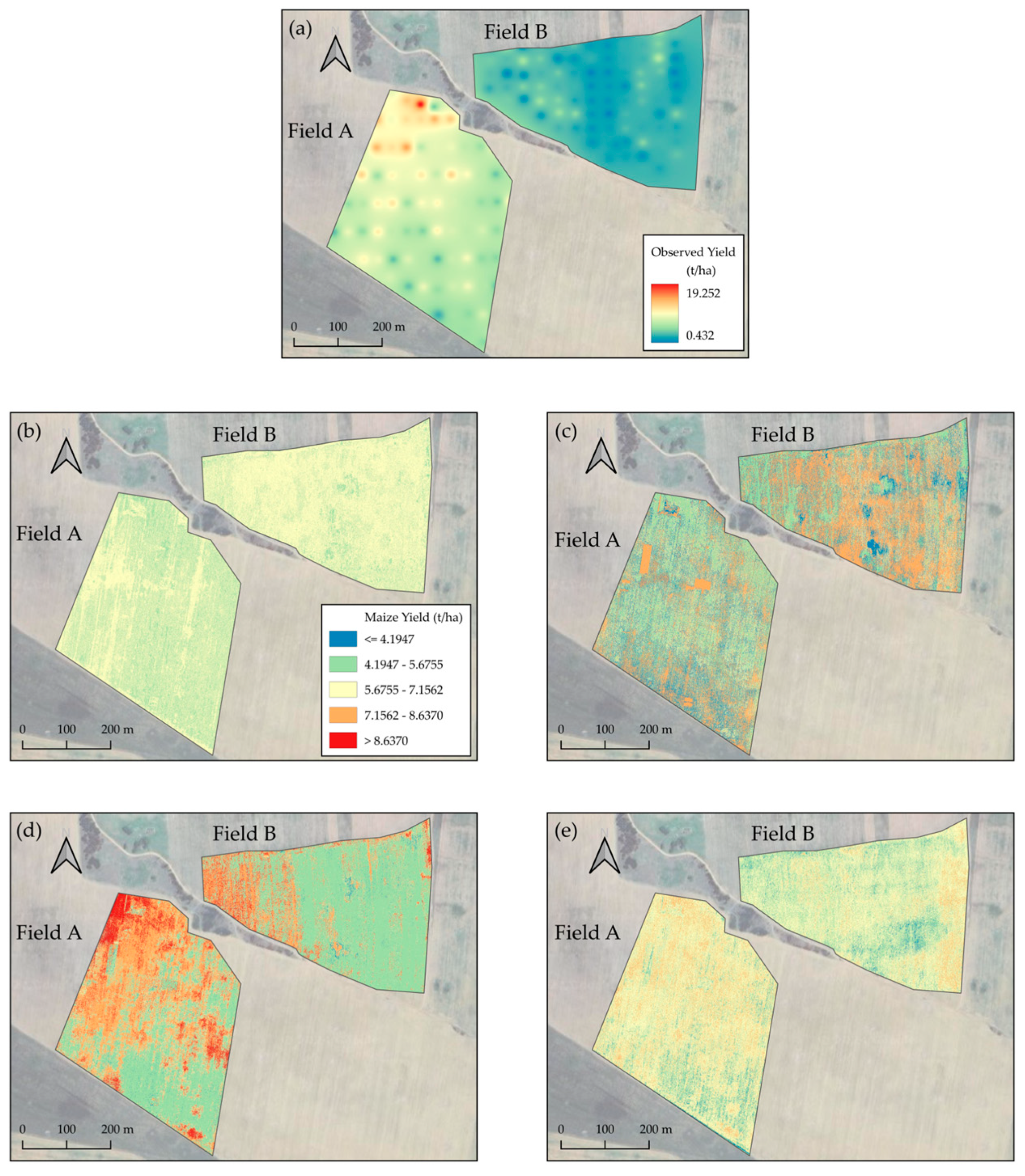

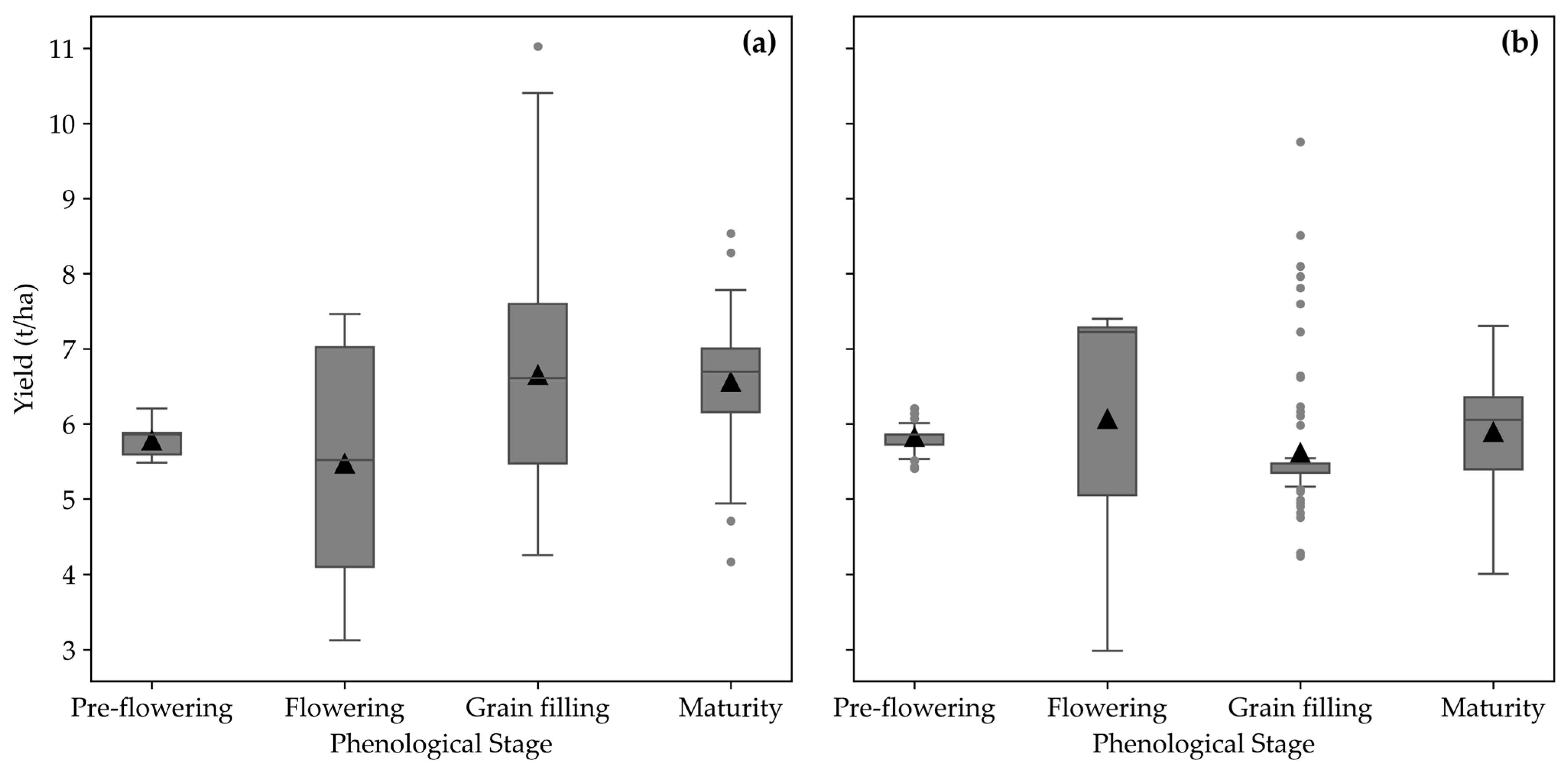

3.4. Visualizing Temporal Analysis of Maize Yield Variability

3.5. Maize Yield Spatiotemporal Variability

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Nuss, E.T.; Tanumihardjo, S.A. Maize: A paramount staple crop in the context of global nutrition. Compr. Rev. Food Sci. Food Saf. 2010, 9, 417–436.

- Tanumihardjo, S.A.; McCulley, L.; Roh, R.; Lopez-Ridaura, S.; Palacios-Rojas, N.; Gunaratna, N.S. Maize agro-food systems to ensure food and nutrition security in reference to the Sustainable Development Goals. Glob. Food Secur. 2020, 25, 100327.

- FAOSTAT. Food and Agriculture Organization of the United Nations Statistical Database; FAO: Rome, Italy, 2023.

- Erenstein, O.; Jaleta, M.; Sonder, K.; Mottaleb, K.; Prasanna, B. Global maize production, consumption and trade: Trends and R&D implications. Food Secur. 2022, 14, 1295–1319.

- Cairns, J.E.; Chamberlin, J.; Rutsaert, P.; Voss, R.C.; Ndhlela, T.; Magorokosho, C. Challenges for sustainable maize production of smallholder farmers in sub-Saharan Africa. J. Cereal Sci. 2021, 101, 103274.

- Kouame, A.K.; Bindraban, P.S.; Kissiedu, I.N.; Atakora, W.K.; El Mejahed, K. Identifying drivers for variability in maize (Zea mays L.) yield in Ghana: A meta-regression approach. Agric. Syst. 2023, 209, 103667.

- Shi, W.; Tao, F. Vulnerability of African maize yield to climate change and variability during 1961–2010. Food Secur. 2014, 6, 471–481.

- Mumo, L.; Yu, J.; Fang, K. Assessing impacts of seasonal climate variability on maize yield in Kenya. Int. J. Plant Prod. 2018, 12, 297–307.

- Omoyo, N.N.; Wakhungu, J.; Oteng’i, S. Effects of climate variability on maize yield in the arid and semi arid lands of lower eastern Kenya. Agric. Food Secur. 2015, 4, 8.

- Akpalu, W.; Rashid, H.M.; Ringler, C. Climate variability and maize yield in the Limpopo region of South Africa: Results from GME and MELE methods. Clim. Dev. 2011, 3, 114–122.

- Githongo, M.; Kiboi, M.; Ngetich, F.; Musafiri, C.; Muriuki, A.; Fliessbach, A. The effect of minimum tillage and animal manure on maize yields and soil organic carbon in sub-Saharan Africa: A meta-analysis. Environ. Chall. 2021, 5, 100340.

- Haarhoff, S.J.; Kotzé, T.N.; Swanepoel, P.A. A prospectus for sustainability of rainfed maize production systems in South Africa. Crop Sci. 2020, 60, 14–28.

- Zampieri, M.; Ceglar, A.; Dentener, F.; Dosio, A.; Naumann, G.; Van Den Berg, M.; Toreti, A. When will current climate extremes affecting maize production become the norm? Earth’s Future 2019, 7, 113–122.

- Anderson, W.; Seager, R.; Baethgen, W.; Cane, M.; You, L. Synchronous crop failures and climate-forced production variability. Sci. Adv. 2019, 5, eaaw1976.

- Wahab, I. In-season plot area loss and implications for yield estimation in smallholder rainfed farming systems at the village level in Sub-Saharan Africa. GeoJournal 2020, 85, 1553–1572.

- Schwalbert, R.A.; Amado, T.J.; Nieto, L.; Varela, S.; Corassa, G.M.; Horbe, T.A.; Rice, C.W.; Peralta, N.R.; Ciampitti, I.A. Forecasting maize yield at field scale based on high-resolution satellite imagery. Biosyst. Eng. 2018, 171, 179–192.

- Kayad, A.; Sozzi, M.; Gatto, S.; Marinello, F.; Pirotti, F. Monitoring within-field variability of corn yield using Sentinel-2 and machine learning techniques. Remote Sens. 2019, 11, 2873.

- Battude, M.; Al Bitar, A.; Morin, D.; Cros, J.; Huc, M.; Sicre, C.M.; Le Dantec, V.; Demarez, V. Estimating maize biomass and yield over large areas using high spatial and temporal resolution Sentinel-2 like remote sensing data. Remote Sens. Environ. 2016, 184, 668–681.

- Li, C.; Chimimba, E.G.; Kambombe, O.; Brown, L.A.; Chibarabada, T.P.; Lu, Y.; Anghileri, D.; Ngongondo, C.; Sheffield, J.; Dash, J. Maize yield estimation in intercropped smallholder fields using satellite data in southern Malawi. Remote Sens. 2022, 14, 2458.

- Jiang, G.; Grafton, M.; Pearson, D.; Bretherton, M.; Holmes, A. Integration of precision farming data and spatial statistical modelling to interpret field-scale maize productivity. Agriculture 2019, 9, 237.

- Lobell, D.B.; Azzari, G.; Burke, M.; Gourlay, S.; Jin, Z.; Kilic, T.; Murray, S. Eyes in the sky, boots on the ground: Assessing satellite- and ground-based approaches to crop yield measurement and analysis. Am. J. Agric. Econ. 2020, 102, 202–219.

- Peña, J.M.; Torres-Sánchez, J.; de Castro, A.I.; Kelly, M.; López-Granados, F. Weed mapping in early-season maize fields using object-based analysis of unmanned aerial vehicle (UAV) images. PLoS ONE 2013, 8, e77151.

- dos Santos, R.A.; Mantovani, E.C.; Filgueiras, R.; Fernandes-Filho, E.I.; da Silva, A.C.B.; Venancio, L.P. Actual evapotranspiration and biomass of maize from a red-green-near-infrared (RGNIR) sensor on board an unmanned aerial vehicle (UAV). Water 2020, 12, 2359.

- Adewopo, J.; Peter, H.; Mohammed, I.; Kamara, A.; Craufurd, P.; Vanlauwe, B. Can a combination of UAV-derived vegetation indices with biophysical variables improve yield variability assessment in smallholder farms? Agronomy 2020, 10, 1934.

- Guo, Y.; Xiao, Y.; Li, M.; Hao, F.; Zhang, X.; Sun, H.; de Beurs, K.; Fu, Y.H.; He, Y. Identifying crop phenology using maize height constructed from multi-sources images. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103121.

- Gilliot, J.-M.; Michelin, J.; Hadjard, D.; Houot, S. An accurate method for predicting spatial variability of maize yield from UAV-based plant height estimation: A tool for monitoring agronomic field experiments. Precis. Agric. 2021, 22, 897–921.

- Yonah, I.B.; Mourice, S.K.; Tumbo, S.D.; Mbilinyi, B.P.; Dempewolf, J. Unmanned aerial vehicle-based remote sensing in monitoring smallholder, heterogeneous crop fields in Tanzania. Int. J. Remote Sens. 2018, 39, 5453–5471.

- Buthelezi, S.; Mutanga, O.; Sibanda, M.; Odindi, J.; Clulow, A.D.; Chimonyo, V.G.; Mabhaudhi, T. Assessing the prospects of remote sensing maize leaf area index using UAV-derived multi-spectral data in smallholder farms across the growing season. Remote Sens. 2023, 15, 1597.

- Brewer, K.; Clulow, A.; Sibanda, M.; Gokool, S.; Naiken, V.; Mabhaudhi, T. Predicting the chlorophyll content of maize over phenotyping as a proxy for crop health in smallholder farming systems. Remote Sens. 2022, 14, 518.

- Lu, J.; Cheng, D.; Geng, C.; Zhang, Z.; Xiang, Y.; Hu, T. Combining plant height, canopy coverage and vegetation index from UAV-based RGB images to estimate leaf nitrogen concentration of summer maize. Biosyst. Eng. 2021, 202, 42–54.

- García-Martínez, H.; Flores-Magdaleno, H.; Ascencio-Hernández, R.; Khalil-Gardezi, A.; Tijerina-Chávez, L.; Mancilla-Villa, O.R.; Vázquez-Peña, M.A. Corn grain yield estimation from vegetation indices, canopy cover, plant density, and a neural network using multispectral and RGB images acquired with unmanned aerial vehicles. Agriculture 2020, 10, 277.

- Osco, L.P.; Junior, J.M.; Ramos, A.P.M.; Furuya, D.E.G.; Santana, D.C.; Teodoro, L.P.R.; Gonçalves, W.N.; Baio, F.H.R.; Pistori, H.; Junior, C.A.d.S. Leaf nitrogen concentration and plant height prediction for maize using UAV-based multispectral imagery and machine learning techniques. Remote Sens. 2020, 12, 3237.

- Marcial-Pablo, M.d.J.; Ontiveros-Capurata, R.E.; Jimenez-Jimenez, S.I.; Ojeda-Bustamante, W. Maize crop coefficient estimation based on spectral vegetation indices and vegetation cover fraction derived from UAV-based multispectral images. Agronomy 2021, 11, 668.

- Schut, A.G.T.; Traore, P.C.S.; Blaes, X.; de By, R.A. Assessing yield and fertilizer response in heterogeneous smallholder fields with UAVs and satellites. Field Crops Res. 2018, 221, 98–107.

- Wahab, I.; Hall, O.; Jirström, M. Remote sensing of yields: Application of UAV imagery-derived NDVI for estimating maize vigor and yields in complex farming systems in Sub-Saharan Africa. Drones 2018, 2, 28.

- Ramos, A.P.M.; Osco, L.P.; Furuya, D.E.G.; Gonçalves, W.N.; Santana, D.C.; Teodoro, L.P.R.; da Silva Junior, C.A.; Capristo-Silva, G.F.; Li, J.; Baio, F.H.R.; et al. A random forest ranking approach to predict yield in maize with UAV-based vegetation spectral indices. Comput. Electron. Agric. 2020, 178, 105791.

- Pinto, A.A.; Zerbato, C.; Rolim, G.d.S.; Barbosa Júnior, M.R.; Silva, L.F.V.d.; Oliveira, R.P.d. Corn grain yield forecasting by satellite remote sensing and machine-learning models. Agron. J. 2022, 114, 2956–2968.

- Chlingaryan, A.; Sukkarieh, S.; Whelan, B. Machine learning approaches for crop yield prediction and nitrogen status estimation in precision agriculture: A review. Comput. Electron. Agric. 2018, 151, 61–69.

- Guo, Y.; Zhang, X.; Chen, S.; Wang, H.; Jayavelu, S.; Cammarano, D.; Fu, Y. Integrated UAV-Based Multi-Source Data for Predicting Maize Grain Yield Using Machine Learning Approaches. Remote Sens. 2022, 14, 6290.

- Yang, B.; Zhu, W.; Rezaei, E.E.; Li, J.; Sun, Z.; Zhang, J. The Optimal Phenological Phase of Maize for Yield Prediction with High-Frequency UAV Remote Sensing. Remote Sens. 2022, 14, 1559.

- Killeen, P.; Kiringa, I.; Yeap, T.; Branco, P. Corn grain yield prediction using UAV-based high spatiotemporal resolution imagery, machine learning, and spatial cross-validation. Remote Sens. 2024, 16, 683.

- Danilevicz, M.F.; Bayer, P.E.; Boussaid, F.; Bennamoun, M.; Edwards, D. Maize yield prediction at an early developmental stage using multispectral images and genotype data for preliminary hybrid selection. Remote Sens. 2021, 13, 3976.

- Kumar, C.; Mubvumba, P.; Huang, Y.; Dhillon, J.; Reddy, K. Multi-Stage Corn Yield Prediction Using High-Resolution UAV Multispectral Data and Machine Learning Models. Agronomy 2023, 13, 1277.

- Fan, J.; Zhou, J.; Wang, B.; de Leon, N.; Kaeppler, S.M.; Lima, D.C.; Zhang, Z. Estimation of maize yield and flowering time using multi-temporal UAV-based hyperspectral data. Remote Sens. 2022, 14, 3052.

- Bao, L.; Li, X.; Yu, J.; Li, G.; Chang, X.; Yu, L.; Li, Y. Forecasting spring maize yield using vegetation indices and crop phenology metrics from UAV observations. Food Energy Secur. 2024, 13, e505.

- Sibanda, M.; Buthelezi, S.; Mutanga, O.; Odindi, J.; Clulow, A.D.; Chimonyo, V.; Gokool, S.; Naiken, V.; Magidi, J.; Mabhaudhi, T. Exploring the prospects of UAV-Remotely sensed data in estimating productivity of Maize crops in typical smallholder farms of Southern Africa. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 10, 1143–1150.

- Chivasa, W.; Mutanga, O.; Burgueño, J. UAV-based high-throughput phenotyping to increase prediction and selection accuracy in maize varieties under artificial MSV inoculation. Comput. Electron. Agric. 2021, 184, 106128.

- Ren, Y.; Li, Q.; Du, X.; Zhang, Y.; Wang, H.; Shi, G.; Wei, M. Analysis of corn yield prediction potential at various growth phases using a process-based model and deep learning. Plants 2023, 12, 446.

- Du Plessis, M. 1:250,000 Geological Series. 2528 Pretoria; Council for Geoscience: Pretoria, South Africa, 1978.

- Moeletsi, M.E. Mapping of maize growing period over the Free State Province of South Africa: Heat units approach. Adv. Meteorol. 2017, 2017, 7164068.

- Ciampitti, I.A.; Elmore, R.W.; Lauer, J. Corn growth and development. Dent 2011, 5, 1–24.

- Bernardi, M.; Deline, J.; Durand, W.; Zhang, N. Crop Yield Forecasting: Methodological and Institutional Aspects; FAO: Rome, Italy, 2016; Volume 33.

- Micasense. MicaSense RedEdge-MX™ and DLS 2 Integration Guide. Available online: https://support.micasense.com/hc/article_attachments/1500011727381/RedEdge-MX-integration-guide.pdf (accessed on 31 August 2023).

- Zvoleff, A. glcm: Calculate Textures from Grey-Level Co-Occurrence Matrices (GLCMs), Version 1.6.5. CRAN Package ‘glcm’. 2020. Available online: https://cran.r-project.org/web/packages/glcm/glcm.pdf (accessed on 6 June 2024).

- Burns, B.W.; Green, V.S.; Hashem, A.A.; Massey, J.H.; Shew, A.M.; Adviento-Borbe, M.A.A.; Milad, M. Determining nitrogen deficiencies for maize using various remote sensing indices. Precis. Agric. 2022, 23, 791–811.

- De Almeida, G.S.; Rizzo, R.; Amorim, M.T.A.; Dos Santos, N.V.; Rosas, J.T.F.; Campos, L.R.; Rosin, N.A.; Zabini, A.V.; Demattê, J.A. Monitoring soil–plant interactions and maize yield by satellite vegetation indexes, soil electrical conductivity and management zones. Precis. Agric. 2023, 24, 1380–1400.

- Xiao, Y.; Zhao, W.; Zhou, D.; Gong, H. Sensitivity analysis of vegetation reflectance to biochemical and biophysical variables at leaf, canopy, and regional scales. IEEE Trans. Geosci. Remote Sens. 2013, 52, 4014–4024.

- Li, Z.; Chen, Z. Remote sensing indicators for crop growth monitoring at different scales. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 24–29 July 2011; pp. 4062–4065.

- Ballesteros, R.; Moreno, M.A.; Barroso, F.; González-Gómez, L.; Ortega, J.F. Assessment of maize growth and development with high- and medium-resolution remote sensing products. Agronomy 2021, 11, 940.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Datt, B. A new reflectance index for remote sensing of chlorophyll content in higher plants: Tests using Eucalyptus leaves. J. Plant Physiol. 1999, 154, 30–36.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

- Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.

- Cao, Q.; Miao, Y.; Wang, H.; Huang, S.; Cheng, S.; Khosla, R.; Jiang, R. Non-destructive estimation of rice plant nitrogen status with Crop Circle multispectral active canopy sensor. Field Crops Res. 2013, 154, 133–144.

- Rao, N.R.; Garg, P.; Ghosh, S.; Dadhwal, V. Estimation of leaf total chlorophyll and nitrogen concentrations using hyperspectral satellite imagery. J. Agric. Sci. 2008, 146, 65–75.

- Jasper, J.; Reusch, S.; Link, A. Active sensing of the N status of wheat using optimized wavelength combination: Impact of seed rate, variety and growth stage. Precis. Agric. 2009, 9, 23–30.

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Hunt, E.R., Jr.; Daughtry, C.; Eitel, J.U.; Long, D.S. Remote sensing leaf chlorophyll content using a visible band index. Agron. J. 2011, 103, 1090–1099.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Huete, A.; Justice, C.; Van Leeuwen, W. MODIS vegetation index (MOD13). Algorithm Theor. Basis Doc. 1999, 3, 295–309.

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Barnes, E.; Clarke, T.; Richards, S.; Colaizzi, P.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T. Coincident detection of crop water stress, nitrogen status and canopy density using ground based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000; p. 6.

- Rezaei, E.E.; Ghazaryan, G.; González, J.; Cornish, N.; Dubovyk, O.; Siebert, S. The use of remote sensing to derive maize sowing dates for large-scale crop yield simulations. Int. J. Biometeorol. 2021, 65, 565–576.

- Laudien, R.; Schauberger, B.; Makowski, D.; Gornott, C. Robustly forecasting maize yields in Tanzania based on climatic predictors. Sci. Rep. 2020, 10, 19650.

- Dormann, C.F.; Elith, J.; Bacher, S.; Buchmann, C.; Carl, G.; Carré, G.; Marquéz, J.R.G.; Gruber, B.; Lafourcade, B.; Leitão, P.J. Collinearity: A review of methods to deal with it and a simulation study evaluating their performance. Ecography 2013, 36, 27–46.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased boosting with categorical features. Adv. Neural Inf. Process. Syst. 2018, 31, 1–11.

- Welch, B.L. On the comparison of several mean values: An alternative approach. Biometrika 1951, 38, 330–336.

- Sandakova, G.; Besaliev, I.; Panfilov, A.; Karavaitsev, A.; Kiyaeva, E.; Akimov, S. Influence of agrometeorological factors on wheat yields. In Proceedings of the IOP Conference Series: Earth and Environmental Science, Kurgan, Russia, 18–19 April 2019; p. 012022.

- Adak, A.; Murray, S.C.; Božinović, S.; Lindsey, R.; Nakasagga, S.; Chatterjee, S.; Anderson, S.L.; Wilde, S. Temporal vegetation indices and plant height from remotely sensed imagery can predict grain yield and flowering time breeding value in maize via machine learning regression. Remote Sens. 2021, 13, 2141.

- Shrestha, A.; Bheemanahalli, R.; Adeli, A.; Samiappan, S.; Czarnecki, J.M.P.; McCraine, C.D.; Reddy, K.R.; Moorhead, R. Phenological stage and vegetation index for predicting corn yield under rainfed environments. Front. Plant Sci. 2023, 14, 1168732.

- Li, F.; Miao, Y.; Feng, G.; Yuan, F.; Yue, S.; Gao, X.; Liu, Y.; Liu, B.; Ustin, S.L.; Chen, X. Improving estimation of summer maize nitrogen status with red edge-based spectral vegetation indices. Field Crops Res. 2014, 157, 111–123.

- Furukawa, F.; Maruyama, K.; Saito, Y.K.; Kaneko, M. Corn height estimation using UAV for yield prediction and crop monitoring. In Unmanned Aerial Vehicle: Applications in Agriculture and Environment; Springer Nature: Cham, Switzerland, 2020; pp. 51–69.

- Zhang, Y.; Xia, C.; Zhang, X.; Cheng, X.; Feng, G.; Wang, Y.; Gao, Q. Estimating the maize biomass by crop height and narrowband vegetation indices derived from UAV-based hyperspectral images. Ecol. Indic. 2021, 129, 107985.

- Khanal, S.; Fulton, J.; Klopfenstein, A.; Douridas, N.; Shearer, S. Integration of high resolution remotely sensed data and machine learning techniques for spatial prediction of soil properties and corn yield. Comput. Electron. Agric. 2018, 153, 213–225.

- Du, Z.; Yang, L.; Zhang, D.; Cui, T.; He, X.; Xiao, T.; Xie, C.; Li, H. Corn variable-rate seeding decision based on gradient boosting decision tree model. Comput. Electron. Agric. 2022, 198, 107025.

- Saravanan, K.S.; Bhagavathiappan, V. Prediction of crop yield in India using machine learning and hybrid deep learning models. Acta Geophys. 2024, 1–20.

- Uribeetxebarria, A.; Castellón, A.; Aizpurua, A. Optimizing wheat yield prediction integrating data from Sentinel-1 and Sentinel-2 with CatBoost algorithm. Remote Sens. 2023, 15, 1640.

- Shu, M.; Zuo, J.; Shen, M.; Yin, P.; Wang, M.; Yang, X.; Tang, J.; Li, B.; Ma, Y. Improving the estimation accuracy of SPAD values for maize leaves by removing UAV hyperspectral image backgrounds. Int. J. Remote Sens. 2021, 42, 5862–5881.

- de Lara, A.; Longchamps, L.; Khosla, R. Soil water content and high-resolution imagery for precision irrigation: Maize yield. Agronomy 2019, 9, 174.

- Shen, J.; Wang, Q.; Zhao, M.; Hu, J.; Wang, J.; Shu, M.; Liu, Y.; Guo, W.; Qiao, H.; Niu, Q. Mapping Maize Planting Densities Using Unmanned Aerial Vehicles, Multispectral Remote Sensing, and Deep Learning Technology. Drones 2024, 8, 140.

- Zhu, W.; Rezaei, E.E.; Nouri, H.; Sun, Z.; Li, J.; Yu, D.; Siebert, S. UAV-based indicators of crop growth are robust for distinct water and nutrient management but vary between crop development phases. Field Crops Res. 2022, 284, 108582.

- De Villiers, C.; Munghemezulu, C.; Mashaba-Munghemezulu, Z.; Chirima, G.J.; Tesfamichael, S.G. Weed detection in rainfed maize crops using UAV and PlanetScope imagery. Sustainability 2023, 15, 13416.

- Herrmann, I.; Bdolach, E.; Montekyo, Y.; Rachmilevitch, S.; Townsend, P.A.; Karnieli, A. Assessment of maize yield and phenology by drone-mounted superspectral camera. Precis. Agric. 2020, 21, 51–76.

- Ji, Z.; Pan, Y.; Zhu, X.; Wang, J.; Li, Q. Prediction of crop yield using phenological information extracted from remote sensing vegetation index. Sensors 2021, 21, 1406.

| Model Type | Life Cycle | Training R² Score | Test R² Score | Cross-Validation Mean R² | Cross-Validation Mean RMSE | Cross-Validation Mean MSE |
|---|---|---|---|---|---|---|
| CatBoost | Pre-flowering | 0.52 | 0.06 | 0.42 | 2.78 | 8.23 |
| CatBoost | Flowering | 0.58 | 0.55 | 0.49 | 2.60 | 7.12 |
| CatBoost | Grain filling | 0.69 | 0.56 | 0.49 | 2.48 | 6.55 |
| CatBoost | Maturity | 0.71 | 0.64 | 0.53 | 2.46 | 6.58 |
| GradBoost | Pre-flowering | 0.56 | 0.05 | 0.36 | 2.93 | 9.11 |
| GradBoost | Flowering | 0.61 | 0.43 | 0.45 | 2.73 | 7.86 |
| GradBoost | Grain filling | 0.69 | 0.54 | 0.41 | 2.69 | 7.76 |
| GradBoost | Maturity | 0.88 | 0.67 | 0.49 | 2.54 | 6.85 |
| RF | Pre-flowering | 0.79 | −0.32 | 0.36 | 2.88 | 8.81 |
| RF | Flowering | 0.82 | 0.47 | 0.42 | 2.85 | 7.66 |
| RF | Grain filling | 0.88 | 0.56 | 0.43 | 2.49 | 6.54 |
| RF | Maturity | 0.85 | 0.68 | 0.50 | 2.52 | 6.77 |
| XGBoost | Pre-flowering | 0.70 | −0.10 | 0.37 | 2.88 | 8.74 |
| XGBoost | Flowering | 0.59 | 0.44 | 0.45 | 2.71 | 7.73 |
| XGBoost | Grain filling | 0.82 | 0.52 | 0.42 | 2.53 | 6.96 |
| XGBoost | Maturity | 0.86 | 0.63 | 0.51 | 2.49 | 6.66 |
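The accuracy columns in the table above (R², RMSE, MSE, and their cross-validation means) can be illustrated with a short sketch. It uses the standard definitions of the three metrics and averages them per fold; the helper names `mse`, `r2` and `cv_summary` and the toy yield values are hypothetical, and the fold layout is an assumption rather than the study's actual cross-validation setup.

```python
from math import sqrt
from statistics import mean


def mse(y_true, y_pred):
    """Mean squared error."""
    return mean([(t - p) ** 2 for t, p in zip(y_true, y_pred)])


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = mean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - y_bar) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


def cv_summary(folds):
    """Average each metric over folds.

    folds: list of (y_true, y_pred) pairs, one per held-out fold.
    """
    return {
        "r2": mean([r2(t, p) for t, p in folds]),
        "rmse": mean([sqrt(mse(t, p)) for t, p in folds]),
        "mse": mean([mse(t, p) for t, p in folds]),
    }


# Hypothetical yields: a prediction worse than the mean gives negative R2.
observed = [1.0, 2.0, 3.0]
worse_than_mean = r2(observed, [3.0, 3.0, 3.0])
equal_to_mean = r2(observed, [2.0, 2.0, 2.0])

summary = cv_summary([
    (observed, [1.0, 2.0, 3.0]),  # perfect fold
    (observed, [2.0, 2.0, 2.0]),  # fold predicted by the mean
])
```

Two properties of these definitions help when reading such a table. R² becomes negative whenever a model predicts held-out data worse than the mean of the observations would, which is how negative test scores can arise. Averaging RMSE per fold is also not the same as taking the square root of the averaged MSE, so the two cross-validation columns need not square to each other.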

| Field | Date 1 | Date 2 | F-Statistic | p-Value |
|---|---|---|---|---|
| Field A | Pre-flowering | Flowering | 3.99 | 0.047 |
| Field A | Pre-flowering | Grain filling | 34.75 | <0.001 |
| Field A | Pre-flowering | Maturity | 109.49 | <0.001 |
| Field A | Flowering | Grain filling | 31.10 | <0.001 |
| Field A | Flowering | Maturity | 41.83 | <0.001 |
| Field A | Grain filling | Maturity | 0.30 | 0.58 |
| Field B | Pre-flowering | Flowering | 2.14 | 0.15 |
| Field B | Pre-flowering | Grain filling | 5.85 | 0.02 |
| Field B | Pre-flowering | Maturity | 0.64 | 0.42 |
| Field B | Flowering | Grain filling | 5.96 | 0.02 |
| Field B | Flowering | Maturity | 0.91 | 0.34 |
| Field B | Grain filling | Maturity | 5.59 | 0.02 |
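The pairwise F-statistics above compare two dates at a time. The reference list cites Welch's unequal-variance test for comparing several means, so the sketch below shows how a Welch F-statistic is computed from group samples, assuming that is the test behind the table. The function name `welch_anova` and the sample values are hypothetical, and the p-value step is left out because it needs an F-distribution routine outside the standard library.

```python
from statistics import mean, variance


def welch_anova(*groups):
    """Welch's F-statistic for k groups with unequal variances.

    Returns (F, df1, df2). Each group needs at least two values and a
    non-zero sample variance.
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [mean(g) for g in groups]
    # Weight each group by n_i / s_i^2 (the inverse variance of its mean).
    weights = [n / variance(g) for n, g in zip(sizes, groups)]
    total_w = sum(weights)
    grand_mean = sum(w * m for w, m in zip(weights, means)) / total_w

    lam = sum((1 - w / total_w) ** 2 / (n - 1) for w, n in zip(weights, sizes))
    between = sum(w * (m - grand_mean) ** 2 for w, m in zip(weights, means))

    f_stat = (between / (k - 1)) / (1 + 2 * (k - 2) * lam / (k ** 2 - 1))
    df1 = k - 1
    df2 = (k ** 2 - 1) / (3 * lam)
    return f_stat, df1, df2


# Hypothetical predicted yields for one field at two growth stages.
stage_a = [1.0, 2.0, 3.0, 4.0]
stage_b = [2.0, 4.0, 6.0, 8.0, 10.0]
f_stat, df1, df2 = welch_anova(stage_a, stage_b)
```

With exactly two groups the correction term vanishes, so F reduces to the square of Welch's t-statistic and `df2` equals the Welch–Satterthwaite degrees of freedom. A p-value would then come from the upper tail of an F distribution with `(df1, df2)` degrees of freedom, for example via `scipy.stats.f.sf(f_stat, df1, df2)`.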

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

de Villiers, C.; Mashaba-Munghemezulu, Z.; Munghemezulu, C.; Chirima, G.J.; Tesfamichael, S.G. Assessing Maize Yield Spatiotemporal Variability Using Unmanned Aerial Vehicles and Machine Learning. Geomatics 2024, 4, 213-236. https://doi.org/10.3390/geomatics4030012
