Inertial Sensor-Based Two Feet Motion Tracking for Gait Analysis

Abstract

Two feet motion is estimated for gait analysis. An inertial sensor is attached to each shoe and an inertial navigation algorithm is used to estimate the movement of both feet. To correct the inter-shoe position error, a camera is installed on the right shoe and infrared LEDs are installed on the left shoe. The proposed system gives key gait analysis parameters such as step length, stride length, foot angle and walking speed. It also gives three-dimensional trajectories of the two feet for gait analysis.

1. Introduction

Gait analysis is the systematic study of human walking motion [1]. Gait analysis is used to evaluate individuals with conditions affecting their ability to walk. It can be used for health diagnostics or rehabilitation.

There are mainly two kinds of systems for gait analysis: outside observation systems and wearable sensor systems. In outside observation systems, a camera [2], sensors on the floor [3] or optical remote sensors [4] are used to observe walking motion. The advantage of outside observation systems is their high accuracy. The disadvantage is that they require a dedicated experiment space and the walking range is rather limited.

Various wearable sensors [5] are used for gait analysis, including force sensors [6], goniometers [1] and inertial sensors [7,8]. The main advantage of wearable sensor systems is that they do not require a dedicated space for experiments. Thus gait analysis can be performed during everyday life, where more natural walking can be observed.

Recently, inertial sensors have received a lot of attention as wearable sensors for gait analysis. There are two types of inertial sensor-based systems. In [7,8], angles of leg joints are estimated by applying attitude estimation algorithms to inertial sensor data. In [9], an inertial navigation algorithm [10] is used to estimate foot movement. By installing inertial sensors on a shoe, foot motion (position, velocity and attitude) can be estimated quantitatively. Many similar systems [11–13] have also been developed for personal navigation. This paper is closely related to the latter approach, where an inertial navigation algorithm is used to track foot motion.

Inertial navigation algorithm-based foot motion analysis is in most cases [9,11–13] done only for a single foot. However, two feet motion tracking provides more information for gait analysis. In principle, two feet motion tracking can be done by simply attaching an inertial sensor to each shoe instead of to a single shoe. However, the inter-shoe distance error diverges as time goes by, so the error in the relative position between the left and right foot becomes very large. To maintain an accurate relative position between the two feet, it is necessary to measure the inter-shoe distance.

In [14], two feet motion is estimated in the context of a personal navigation system. In the system, an inertial sensor is attached on each foot and the distance between two feet is measured using a sonar sensor. Since the system is developed for a personal navigation system, the main interest is the accurate position estimation of a person.

In this paper, we propose an inertial sensor-based two feet motion tracking system for gait analysis. An inertial sensor unit is installed on each shoe. The relative position and attitude between the two shoes are estimated using a camera on one shoe and infrared LEDs on the other shoe. Using the proposed system, two feet motion (position, velocity and attitude) can be estimated. We note that only the inter-shoe distance (a scalar quantity) is measured in [14]. Thus the relative position between the two feet can only be obtained after many walking steps. This is not a problem in [14] since the goal there is to estimate a person's position. In the proposed system, on the other hand, the relative position and attitude between the two feet can be accurately estimated. Thus the relative location of the two feet can be obtained from the first step, as long as the camera on the right shoe can see the LEDs on the left shoe.

2. System Overview

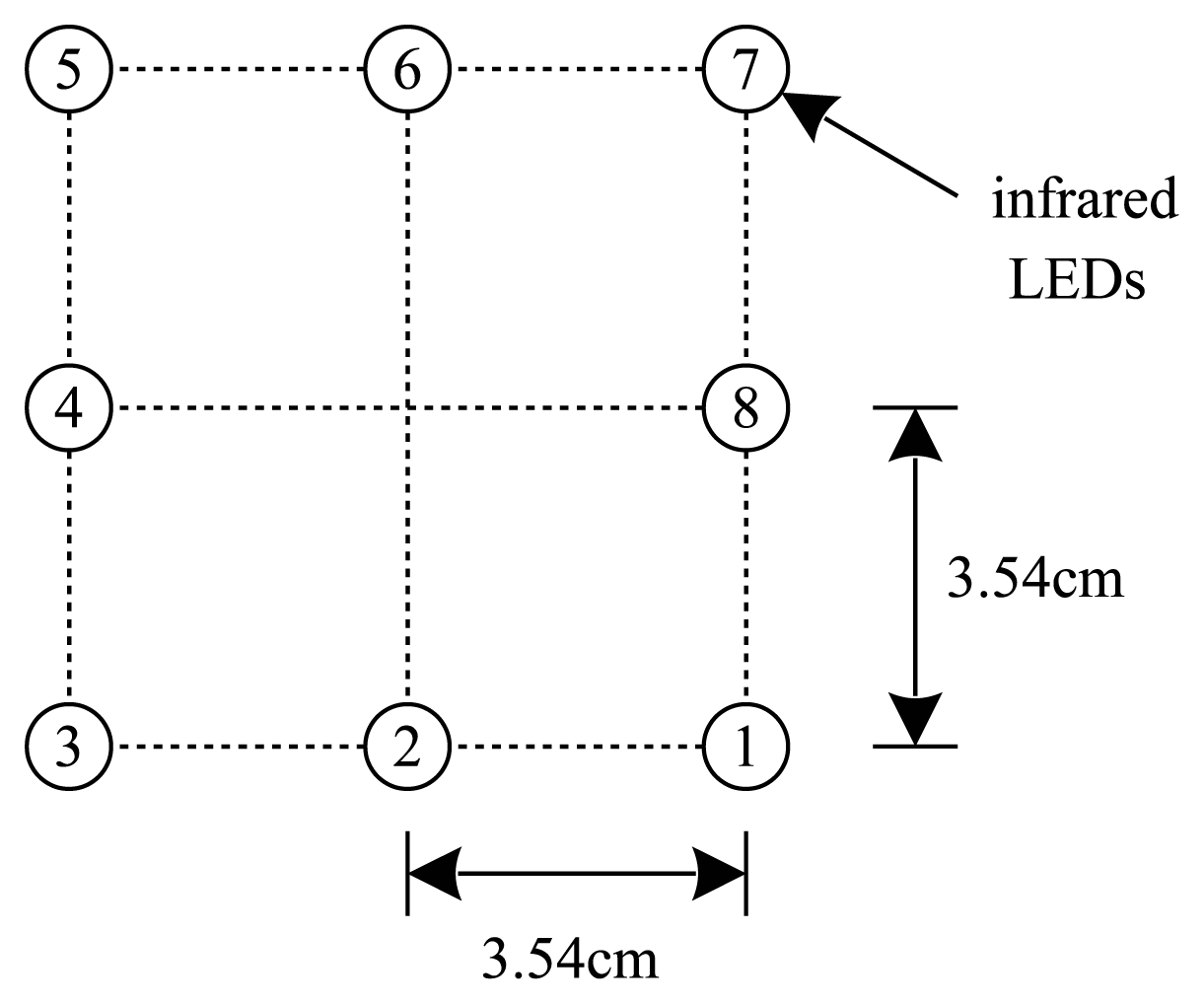

A picture of the proposed system is given in Figure 1. Two IMUs (XSens MTi) are attached, one to each foot. A USB camera (Pointgrey FireFly MV) is attached to the right foot and eight infrared LEDs are attached to the left foot. The movement of the two feet is estimated using an inertial navigation algorithm. The relative position between the two feet is estimated by capturing the LEDs on the left foot with the camera on the right foot.

As can be seen in Figure 1, the sensor unit is rather large, which may affect walking patterns. We note that no particular effort was made to make the system smaller, since the purpose of this paper is to demonstrate the feasibility of a wearable gait analysis system combining a camera and an inertial sensor unit.

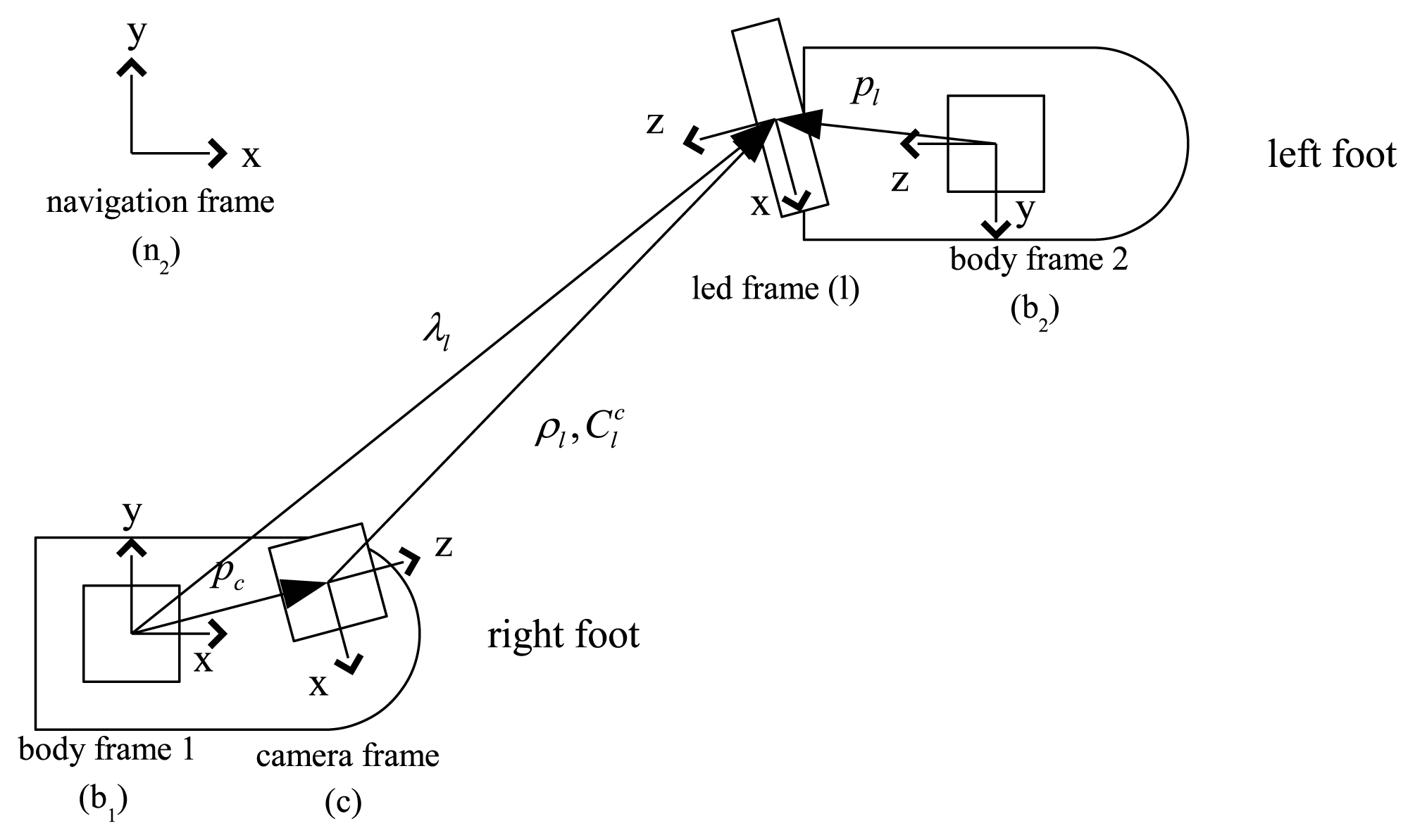

Five coordinate systems are used in the paper (see Figure 2). Three axes of the body 1 (2) coordinate system coincide with three axes of IMU on the right (left) foot. The origin of the camera coordinate system coincides with the pinhole of the camera. The LED coordinate system is defined as in Figure 2. The navigation coordinate system is used as the reference coordinate system. The z axis of the navigation coordinate system coincides with the local gravity vector and the x axis can be chosen arbitrarily.

A vector p ∈ R3 expressed in the “A” coordinate system is sometimes denoted by [p]A to emphasize that a vector p is expressed in the “A” coordinate system. When there are no concerns for confusion, [p]A is just denoted by p. A symbol is used to denote the rotation matrix between “A” and “B” coordinate systems. In this paper, symbols b1, b2, n, c, l are used to denote body 1, body 2, navigation, camera and LED coordinate systems, respectively.

Let [r1]n ∈ R3 and [r2]n ∈ R3 be the origins of the body 1 and 2 coordinate systems, respectively, so that [r1]n and [r2]n denote the positions of the right and left foot in the navigation coordinate system. The objective of this paper is to estimate [r1]n and [r2]n, which are estimated separately using an inertial navigation algorithm. The errors in [r1]n and [r2]n are compensated by computing [r1]n − [r2]n using vision. To compute [r1]n − [r2]n, we introduce some variables in the following.

In Figure 2, [pc]b1 ∈ R3 denotes the origin of the camera coordinate system in the body 1 coordinate system and [pl]b2 denotes the origin of the LED coordinate system in the body 2 coordinate system. Note that pc and the rotation matrix between the camera and body 1 coordinate systems are constant since the camera and IMU 1 are rigidly attached to the same shoe. Similarly, pl and the rotation matrix between the LED and body 2 coordinate systems are also constant.

[λl]b1 and [ρl]c denote the origin of the LED coordinate system in the body 1 and camera coordinate systems, respectively. As a person is walking, λl and ρl are continuously changing. We note that λl and ρl can be estimated when the camera captures the LED image.

From the vector relationship in Figure 2, we have

The origin of the LED coordinate system can be expressed in the navigation coordinate system as follows:

Inserting Equation (1) into Equation (2), we have
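The three relationships referred to above follow from the definitions of pc, pl, λl and ρl. A sketch of how they can be written is given below. The rotation-matrix notation C_A^B (taking coordinates from frame A to frame B) is an assumption introduced here for illustration and may differ from the authors' notation; the numbering follows the order in which the equations are cited in the text.

```latex
% (1) LED origin seen from body 1: camera offset plus the rotated camera measurement
[\lambda_l]_{b_1} = [p_c]_{b_1} + C_c^{b_1}\,[\rho_l]_c

% (2) LED origin in the navigation frame, reached via the right foot and via the left foot
[r_1]_n + C_{b_1}^{n}\,[\lambda_l]_{b_1} = [r_2]_n + C_{b_2}^{n}\,[p_l]_{b_2}

% (3) substituting (1) into (2)
[r_1]_n + C_{b_1}^{n}\left([p_c]_{b_1} + C_c^{b_1}\,[\rho_l]_c\right)
  = [r_2]_n + C_{b_2}^{n}\,[p_l]_{b_2}
```

In this form, the last relationship ties the two foot positions r1 and r2 to the vision measurement ρl, which is what allows the camera data to correct the inter-shoe position error.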

3. Motion Estimation Algorithm

In this section, an inertial navigation algorithm to estimate two feet motion is given. In Sections 3.1 and 3.2, a basic inertial navigation algorithm using an indirect Kalman filter is given. In Sections 3.3 and 3.4, measurement equations for the Kalman filter are given. In Section 3.5, an implementation issue of the proposed algorithm is discussed.

3.2. Indirect Kalman Filter

Mainly due to measurement noise, r̂1, υ̂1 and q̂1 (position, velocity and attitude estimates of the right foot) have some errors. These errors are estimated using a Kalman filter. This kind of Kalman filter is called an indirect Kalman filter since the errors in r̂1, υ̂1 and q̂1 are estimated instead of directly estimating r1, υ1 and q1.

Let re,1, υe,1, qe,1 and be,1 be errors in r̂1, υ̂1, q̂1 and b̂g,1, which are defined by

The multiplicative attitude error term qe,1 in Equation (8) is commonly used in attitude estimation [16]. If we express the last equation in (8) in terms of the rotation matrix under the assumption (9), we have the following:

For the left foot, re,2, υe,2, qe,2 and be,2 can be defined similarly. If we combine the left and right foot variables, the state of a Kalman filter is defined by

The state space equation for one foot is a standard inertial navigation algorithm and is given in [9]. The state space equation for two feet is just a combination and is given by

There are two measurement equations for the state x(t). One is from vision data (Section 3.3). The other measurement equation (Section 3.4) is derived using the fact that the velocity of a foot is zero and z axis values are the same while a foot is on the flat floor.

3.3. Measurement Equation from the Vision Data

This section explains how the vision data is used in the Kalman filter.

There are eight infrared LEDs on the left foot as in Figure 3. A number is assigned to each LED. These LEDs are captured using the camera on the right foot. To simplify the image processing algorithm, an infrared filter is placed in front of the camera.

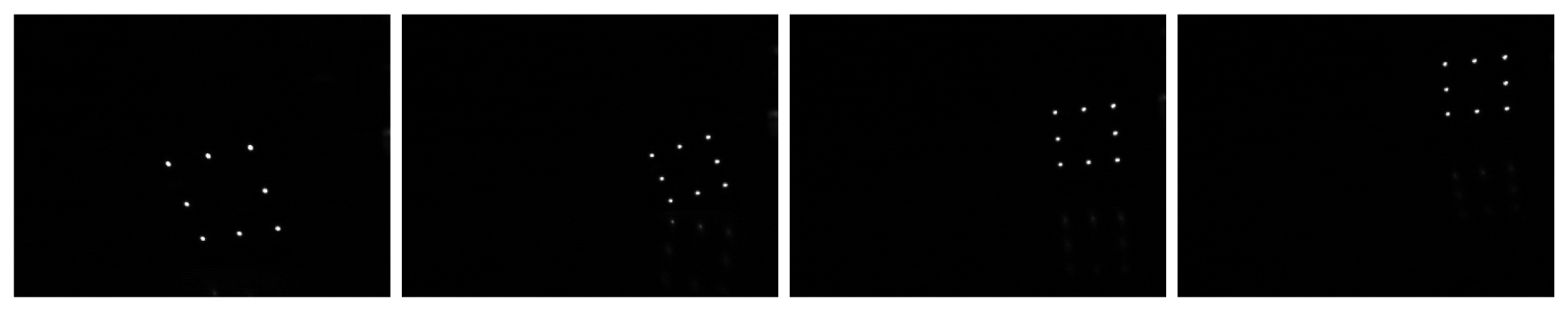

The typical infrared LED images during walking are given in Figure 4. A simple image processing algorithm can be used to obtain the center points of infrared LEDs.

Let the coordinates of the LEDs in the LED coordinate system be [ledi]l ∈ R3 (1 ≤ i ≤ 8). Let [ui υi]′ ∈ R2 be the image coordinates of the eight LEDs on the normalized image plane, which are obtained by applying the camera calibration parameters [17] to the pixel coordinates of the eight LEDs. [ledi]l and [ui υi]′ satisfy the following relationship:
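Under the standard pinhole camera model, this relationship maps each LED position into the camera frame and divides by depth. The sketch below illustrates this under stated assumptions: the function name `project_led`, the argument `R_lc` (rotation from the LED frame to the camera frame) and the numeric values are hypothetical and are not taken from the paper.

```python
def project_led(R_lc, rho_l, led_l):
    """Project one LED position (LED frame) onto the normalized image plane.

    R_lc  : 3x3 rotation (list of rows) taking LED-frame coordinates to the camera frame
    rho_l : origin of the LED frame expressed in the camera frame
    led_l : LED position expressed in the LED frame
    """
    # Transform the LED into the camera frame: x_c = R_lc * led_l + rho_l
    x_c = [sum(R_lc[i][j] * led_l[j] for j in range(3)) + rho_l[i] for i in range(3)]
    if x_c[2] <= 0.0:
        raise ValueError("LED is behind the camera")
    # Perspective division gives the normalized image coordinates (u, v)
    return x_c[0] / x_c[2], x_c[1] / x_c[2]


# Hypothetical example: LED frame aligned with the camera frame, 0.3 m in front of it
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
u, v = project_led(identity, [0.0, 0.0, 0.3], [0.03, -0.015, 0.0])
```

A pose estimation algorithm works in the opposite direction: given the eight measured (u, v) pairs and the known LED layout, it searches for the rotation and translation that best reproduce them.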

To use Equation (13), we need to identify LED numbers from the LED image. In a general case where LEDs can rotate freely, it is impossible to uniquely identify the LED number. However, in our case, LEDs are attached on a shoe and the rotation is rather limited due to the mechanical structure of ankles. Thus it is not difficult to identify LED numbers from the images in Figure 4.

Let the estimated value of ρl from the algorithm in [18] be defined by ρ̂l:

Inserting Equations (8), (10) and (14) into Equation (3), we have

Assuming q̄e,1 and υvision are small, we can ignore the product term in Equation (15):

The left hand side of Equation (16) is denoted by zvision ∈ R3 and is used as a measurement equation in the Kalman filter:

Whenever the camera on the right foot captures the LEDs on the left foot, Equation (17) can be used as a measurement equation.

3.4. Measurement Equations from Zero Velocity and Flat Floor Assumptions

During normal walking, a foot touches the floor almost periodically for a short interval. During this short interval, the velocity of a foot is zero and this interval is called a “zero velocity interval”.

The zero velocity interval is detected using accelerometers and gyroscopes [19]. In this paper, the detection method in [9] is used: the foot is assumed to be in the zero velocity interval if the change in the accelerometer output is small and the gyroscope values are small. The zero velocity intervals are detected separately for the left and right foot.
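A minimal sketch of such a detector is given below. It only illustrates the idea of thresholding the accelerometer change and the gyroscope magnitude; the thresholds, the window length and the function name `detect_zero_velocity` are hypothetical and are not the values or the exact rule used in [9].

```python
import math


def norm(v):
    """Euclidean norm of a 3-axis sample."""
    return math.sqrt(sum(x * x for x in v))


def detect_zero_velocity(acc, gyro, acc_change_max=0.5, gyro_max=0.2, window=5):
    """Return a list of booleans, True where the foot is assumed stationary.

    acc, gyro      : lists of 3-axis samples (m/s^2 and rad/s)
    acc_change_max : hypothetical bound on the sample-to-sample accelerometer change
    gyro_max       : hypothetical bound on the gyroscope norm
    window         : number of consecutive samples that must pass both tests
    """
    n = len(acc)
    quiet = []
    for k in range(n):
        prev = acc[k - 1] if k > 0 else acc[k]
        change = norm([a - b for a, b in zip(acc[k], prev)])
        quiet.append(change < acc_change_max and norm(gyro[k]) < gyro_max)
    # Require a full window of quiet samples to suppress isolated detections
    flags = [False] * n
    for k in range(window - 1, n):
        if all(quiet[k - window + 1:k + 1]):
            for j in range(k - window + 1, k + 1):
                flags[j] = True
    return flags


# Hypothetical data: ten samples at rest followed by three samples in motion
acc = [[0.0, 0.0, 9.8]] * 10 + [[3.0, 0.0, 12.0]] * 3
gyro = [[0.0, 0.0, 0.0]] * 10 + [[1.0, 0.0, 0.0]] * 3
flags = detect_zero_velocity(acc, gyro)
```

The detector would be run once per foot, since the two feet are stationary at different times.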

We assume that a person is walking on a flat floor. Thus, the z axis value of a foot in the navigation coordinate system returns to the same constant during every zero velocity interval (when the foot is on the floor). Using both the zero velocity intervals and the flat floor assumption, the measurement equation for the zero velocity interval of the right foot is given by

The measurement equation for the zero velocity interval of the left foot is given by

3.5. Kalman Filter Implementation

Here the implementation of the indirect Kalman filter is briefly explained. A detailed explanation for a similar problem can be found in [20]. All computations are done in discrete time with the sampling period T = 0.01 s. The discrete time index k is used as usual; for example, the discrete time value r1,k denotes the sampled value of the continuous time value r1(kT).

The procedure to estimate q1,k, υ1,k, r1,k, q2,k, υ2,k and r2,k is as follows:

q̂1,k, υ̂1,k, r̂1,k, q̂2,k, υ̂2,k and r̂2,k are computed using the discretized Equation of (6).

The time update step [21] of the Kalman filter using Equation (12) is performed.

The measurement update step using Equations (17)–(19) is performed to compute x̂.

Using x̂, q̂1,k, υ̂1,k,r̂1,k and b̂g,1 are updated as follows:

Similarly, q̂2,k, υ̂2,k, r̂2,k and b̂g,2 are updated.

After the update, x̂ is set to a zero vector.

The discrete time index k is increased and the procedure is repeated.
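The structure of this procedure can be illustrated with a deliberately simplified sketch: one foot, one axis, an error state of position and velocity only, and a zero velocity measurement. It is not the filter of this paper, which also carries attitude and gyroscope bias errors for both feet and uses the vision measurement. The error convention (true value = estimate + error), the noise values and all function names are assumptions made for illustration.

```python
def predict(r_hat, v_hat, P, acc, T, q_acc):
    """Integrate the nominal state and propagate the error covariance."""
    r_hat = r_hat + v_hat * T + 0.5 * acc * T * T
    v_hat = v_hat + acc * T
    # Error dynamics x_{k+1} = F x_k + w with F = [[1, T], [0, 1]], so P <- F P F' + Q
    p00, p01, p10, p11 = P[0][0], P[0][1], P[1][0], P[1][1]
    n00 = p00 + T * (p01 + p10) + T * T * p11
    n01 = p01 + T * p11
    n10 = p10 + T * p11
    n11 = p11 + q_acc * T * T  # accelerometer noise enters through the velocity
    return r_hat, v_hat, [[n00, n01], [n10, n11]]


def zero_velocity_update(r_hat, v_hat, P, meas_var):
    """Estimate the error from z = 0 - v_hat, feed it back and reset the error."""
    z = 0.0 - v_hat                      # measured velocity error, H = [0, 1]
    s = P[1][1] + meas_var               # innovation variance
    k0, k1 = P[0][1] / s, P[1][1] / s    # Kalman gain
    x_hat = [k0 * z, k1 * z]             # estimated error state
    # Covariance update P <- (I - K H) P
    P = [[P[0][0] - k0 * P[1][0], P[0][1] - k0 * P[1][1]],
         [P[1][0] - k1 * P[1][0], P[1][1] - k1 * P[1][1]]]
    # Feeding x_hat into the nominal state leaves the error estimate at zero
    return r_hat + x_hat[0], v_hat + x_hat[1], P


# Hypothetical scenario: the foot is at rest, but the accelerometer reads a
# constant 0.1 m/s^2 error for 1 s before a zero velocity interval is detected.
T = 0.01
r_hat, v_hat = 0.0, 0.0
P = [[0.0, 0.0], [0.0, 0.0]]
for _ in range(100):
    r_hat, v_hat, P = predict(r_hat, v_hat, P, acc=0.1, T=T, q_acc=1.0)
r_before, v_before = r_hat, v_hat
r_hat, v_hat, P = zero_velocity_update(r_hat, v_hat, P, meas_var=1e-4)
```

Because the filter tracks the correlation between position and velocity errors, the velocity measurement also pulls the drifted position estimate back toward its true value.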

4. Smoother

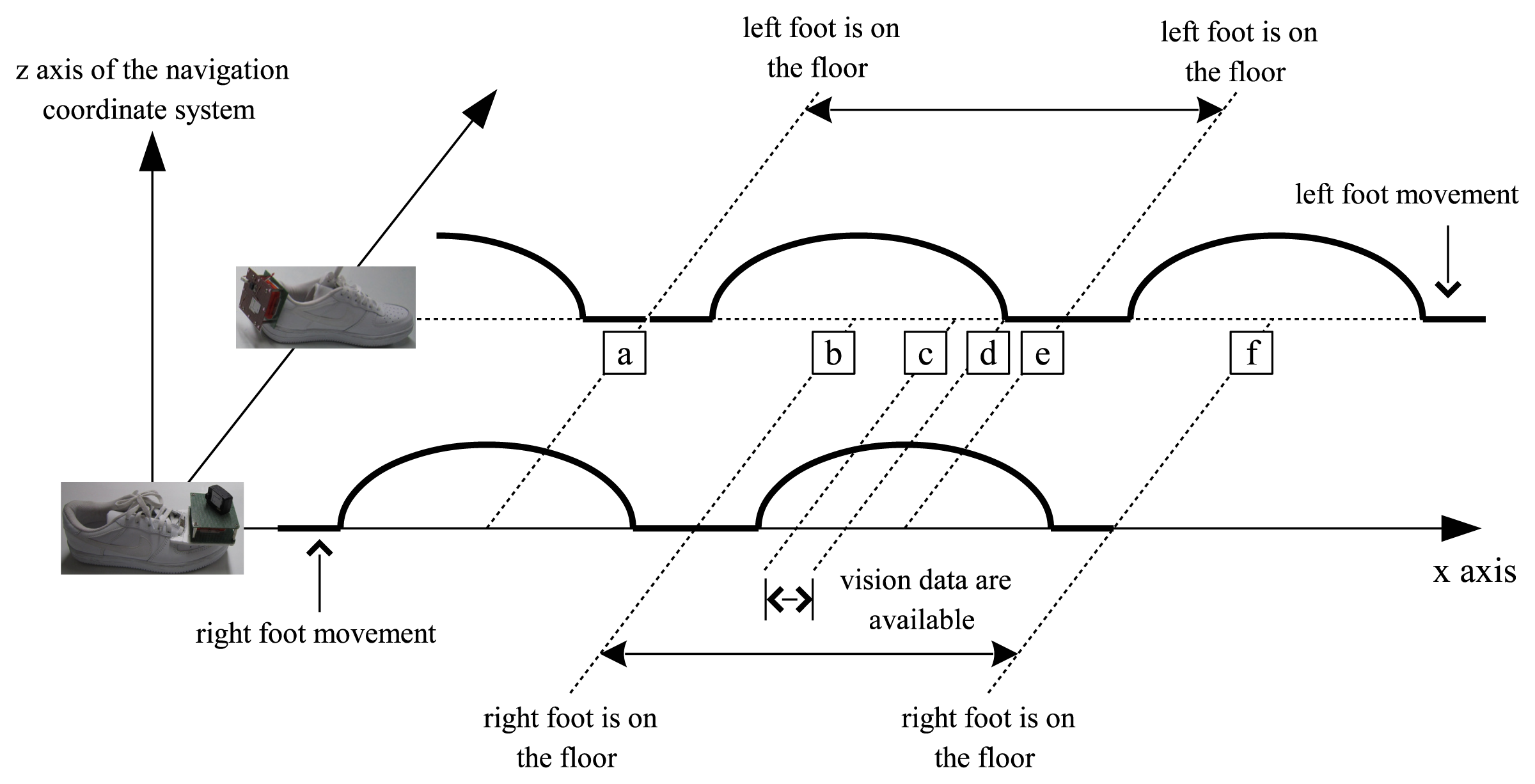

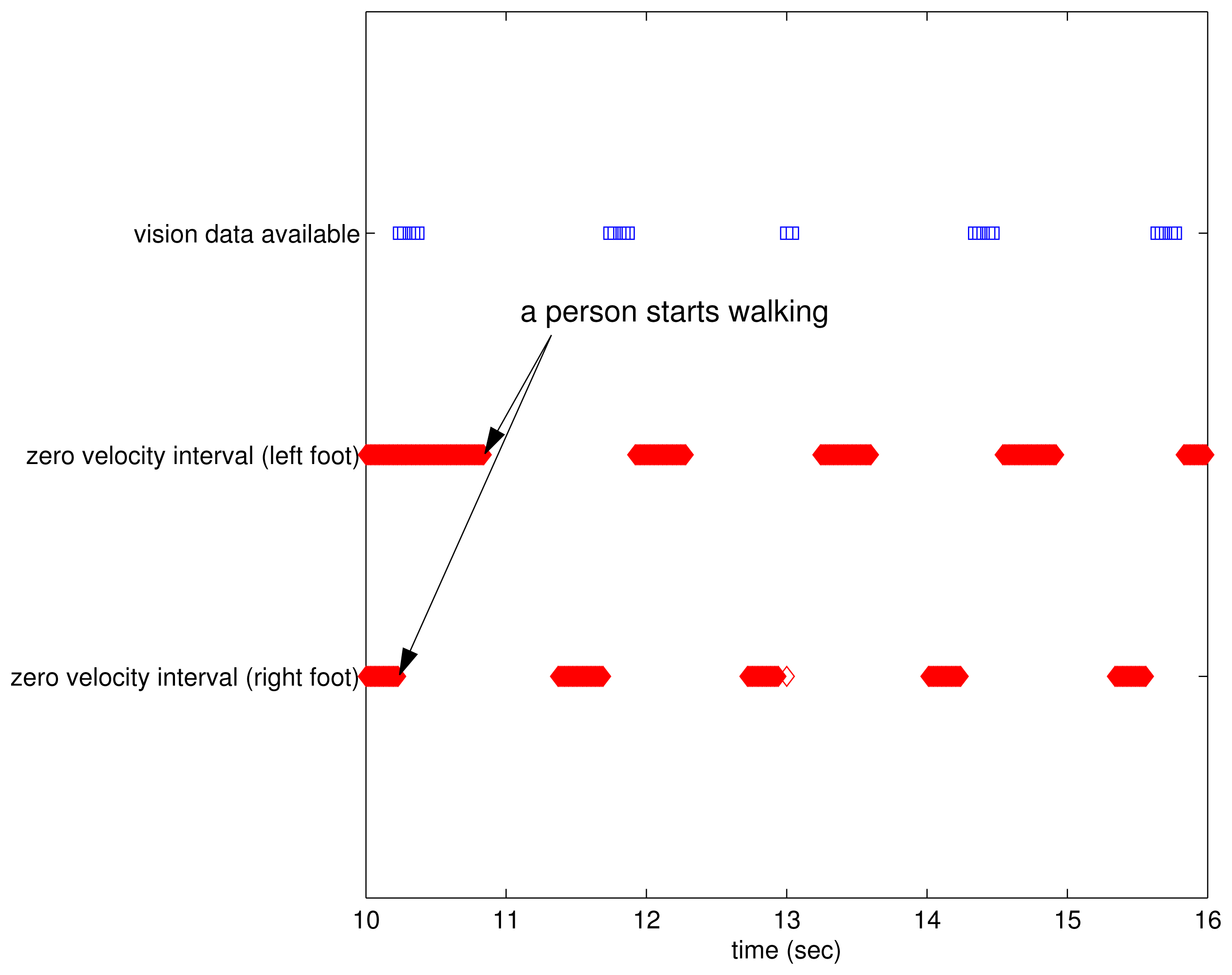

In Figure 5, typical two feet movement during walking is illustrated in the navigation coordinate system. Suppose the right foot is on the floor in the area around (b). As the right foot leaves the floor ((b)–(d) area), the left foot touches the floor in the area around (e). Due to the configuration of the camera, LED images are available in the (c)–(d) interval.

For the left foot, the measurement data are available in the area around (a) (zero velocity update) and (c)–(d) (vision data update). When the measurement data are not available, the motion estimation depends on double integration of acceleration, whose errors tend to increase quickly even for a short time. To get a smooth motion trajectory, a forward-backward smoother (Section 8.5 in [21]) is applied.

A smoother algorithm is applied for each walking step separately on the left and right foot movement. For example, consider the left foot movement between (a) and (e). After computing the forward Kalman filter (that is, a filter in Section 3.2) up to the point (e), the backward Kalman filter is computed from (e) to (a) with the final value of the forward Kalman filter as an initial value. Since the final value of the forward filter is used in the backward filter, the forward and the backward filter become correlated. Thus the smoother is not optimal. However, we found that the smoothed output is good enough for our application.

Note that r̂2,k is the position of the left foot, which is computed by the forward filter in Section 3.2. Let r̂2,b,k be the position of the left foot, which is computed by a backward Kalman filter. Two values r̂2,k and r̂2,b,k are combined using simple weighting functions w2,f,k and w2,b,k as follows:

A smoother algorithm can be applied to the velocity and attitude similarly.
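The weighted combination of the forward and backward estimates can be sketched as follows. The weighting functions are not reproduced here, so this sketch assumes a linear ramp in which the forward weight falls from 1 to 0 over the step, the backward weight rises from 0 to 1, and the two always sum to one. The function name `blend_forward_backward` and the sample values are hypothetical.

```python
def blend_forward_backward(forward, backward):
    """Blend forward and backward position estimates over one walking step.

    forward, backward : lists of (x, y, z) tuples of equal length, ordered
                        from the start to the end of the step
    """
    if len(forward) != len(backward):
        raise ValueError("trajectories must have equal length")
    n = len(forward)
    if n == 1:
        return [tuple(0.5 * (f + b) for f, b in zip(forward[0], backward[0]))]
    blended = []
    for k in range(n):
        w_b = k / (n - 1)   # assumed linear ramp: 0 at the start, 1 at the end
        w_f = 1.0 - w_b     # the two weights sum to one at every sample
        blended.append(tuple(w_f * f + w_b * b
                             for f, b in zip(forward[k], backward[k])))
    return blended


# Hypothetical three-sample step where the two filters disagree by 0.2 m in x
forward = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
backward = [(0.2, 0.0, 0.0), (1.2, 0.0, 0.0), (2.2, 0.0, 0.0)]
smoothed = blend_forward_backward(forward, backward)
```

With this choice the smoothed trajectory agrees with the forward filter at the start of the step and with the backward filter at the end, and moves gradually between them in the middle.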

5. Experiments

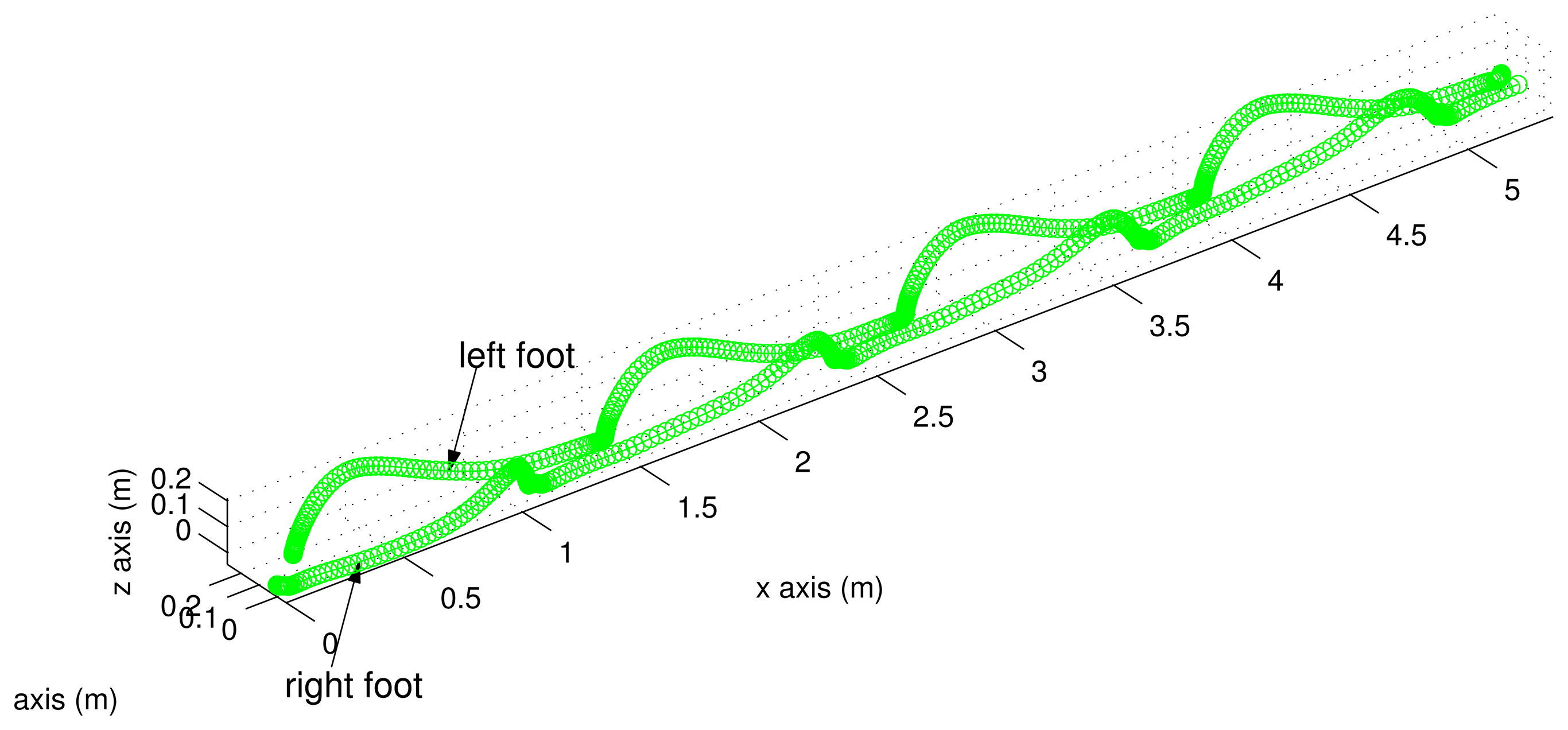

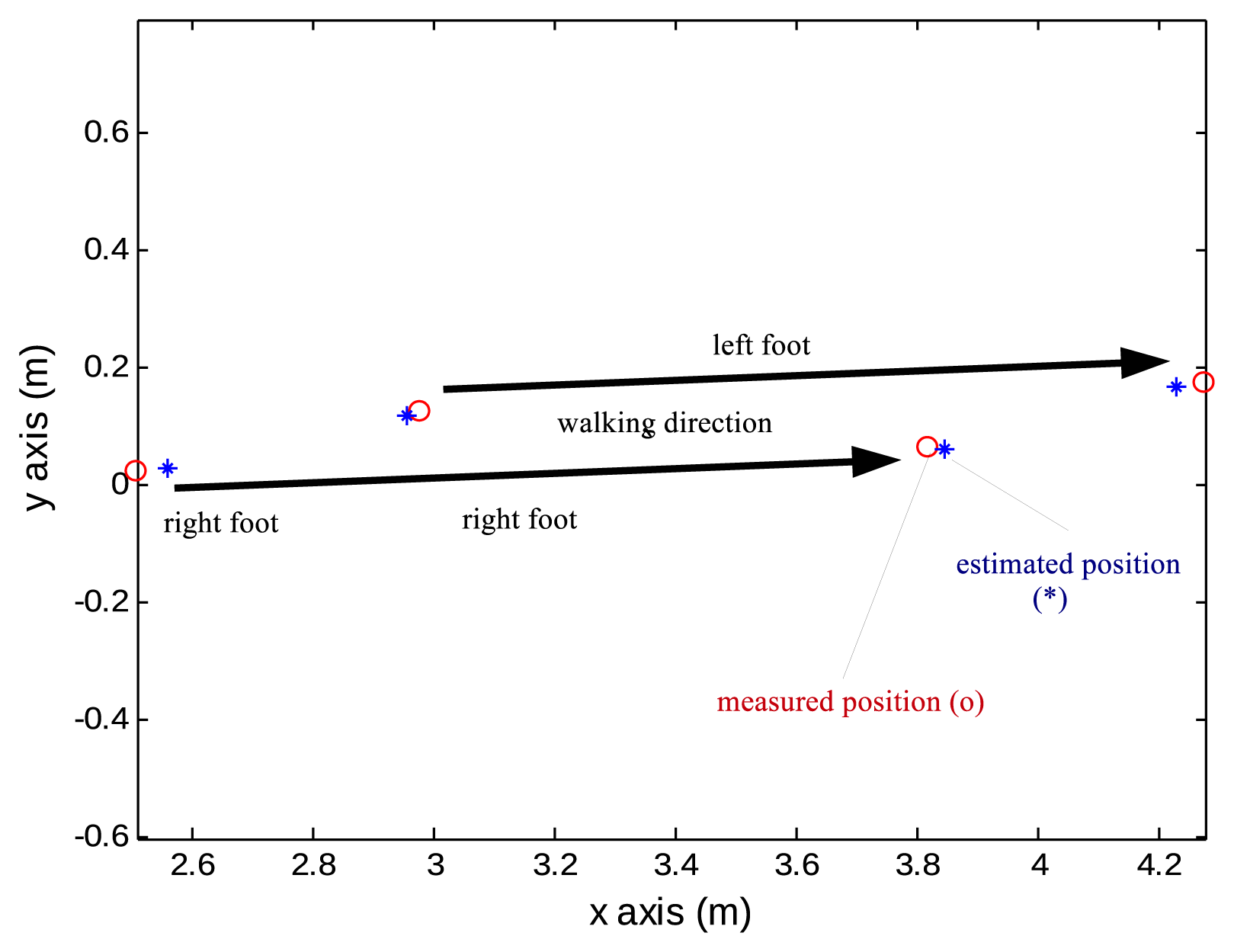

To verify the proposed system, a person walked on the floor and the two feet motion was estimated using the proposed algorithm. The estimated two feet trajectory on the xy plane in the navigation coordinate system is given in Figure 6. Since the x direction of the navigation coordinate system can be chosen arbitrarily, the trajectories are rotated so that the walking direction coincides with the x axis. The left foot trajectory is the upper one and the right foot trajectory is the lower one. The zero velocity intervals are indicated with the diamond symbols. The rectangle symbols indicate that vision data are available at those positions (that is, LEDs on the left foot can be seen from the camera on the right foot).

In the time domain, the relationship between zero velocity intervals and vision data available intervals is given in Figure 7. As illustrated in Figure 5, vision data are available between the right foot zero velocity intervals and the left foot zero velocity intervals during walking.

Three dimensional trajectories are given in Figure 8. There is a difference between the left and right foot motion patterns. This is due to the difference in the positions of inertial sensors: the inertial sensor unit is on the front in the case of the right foot and on the back in the case of the left foot (see Figure 1).

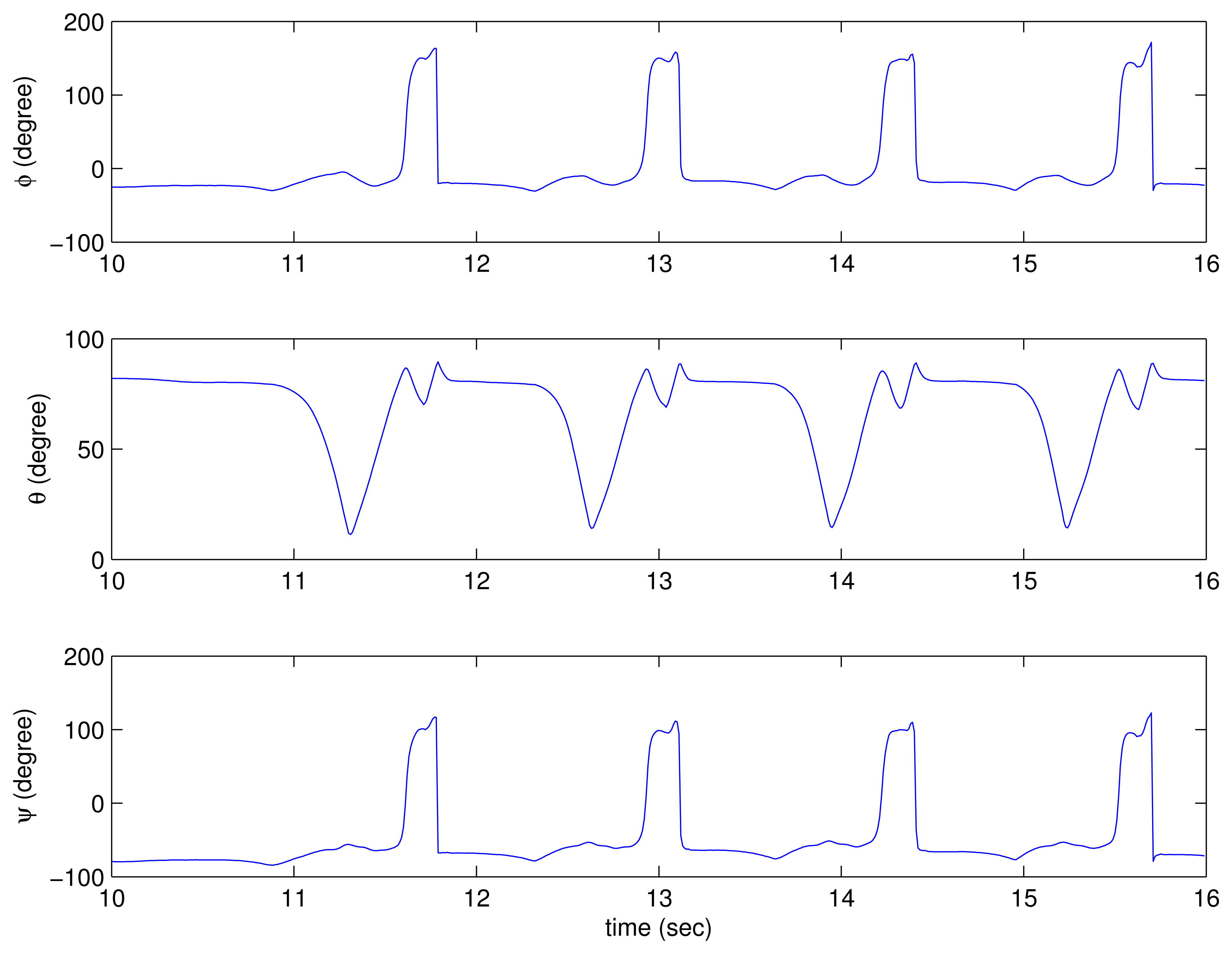

In addition to trajectories, attitude and velocity are also available from the inertial navigation algorithm. For example, estimated attitude (in Euler angles) of the left foot is given in Figure 9.

Thus we can obtain key gait analysis parameters such as step length, stride length, foot angle and walking speed using the proposed system.
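As an illustration of how such parameters could be derived from the estimated trajectories, the sketch below computes step length, stride length and walking speed from the foot positions at the zero velocity intervals. It assumes the trajectories have been rotated so that the walking direction is the x axis, takes step length as the x distance between successive footfalls of opposite feet and stride length as the x distance between successive footfalls of the same foot. The function name `gait_parameters` and the sample data are hypothetical, not measured values.

```python
def gait_parameters(footfalls):
    """Compute step lengths, stride lengths and mean walking speed.

    footfalls : list of (time_s, foot, x_m) tuples sorted by time, where foot
                is "L" or "R" and x_m is the position along the walking direction
    """
    if len(footfalls) < 2:
        raise ValueError("at least two footfalls are required")
    step_lengths, stride_lengths = [], []
    last_x = {}          # last footfall position of each foot
    previous = None      # most recent footfall of either foot
    for t, foot, x in footfalls:
        if previous is not None and previous[1] != foot:
            # Step: from the opposite foot's footfall to this footfall
            step_lengths.append(x - previous[2])
        if foot in last_x:
            # Stride: between successive footfalls of the same foot
            stride_lengths.append(x - last_x[foot])
        last_x[foot] = x
        previous = (t, foot, x)
    duration = footfalls[-1][0] - footfalls[0][0]
    distance = footfalls[-1][2] - footfalls[0][2]
    speed = distance / duration if duration > 0 else 0.0
    return step_lengths, stride_lengths, speed


# Hypothetical footfalls: alternating feet, 0.6 m apart, every 0.5 s
footfalls = [(0.0, "R", 0.0), (0.5, "L", 0.6), (1.0, "R", 1.2), (1.5, "L", 1.8)]
steps, strides, speed = gait_parameters(footfalls)
```

The foot angle, in contrast, would be read directly from the estimated attitude rather than from the footfall positions.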

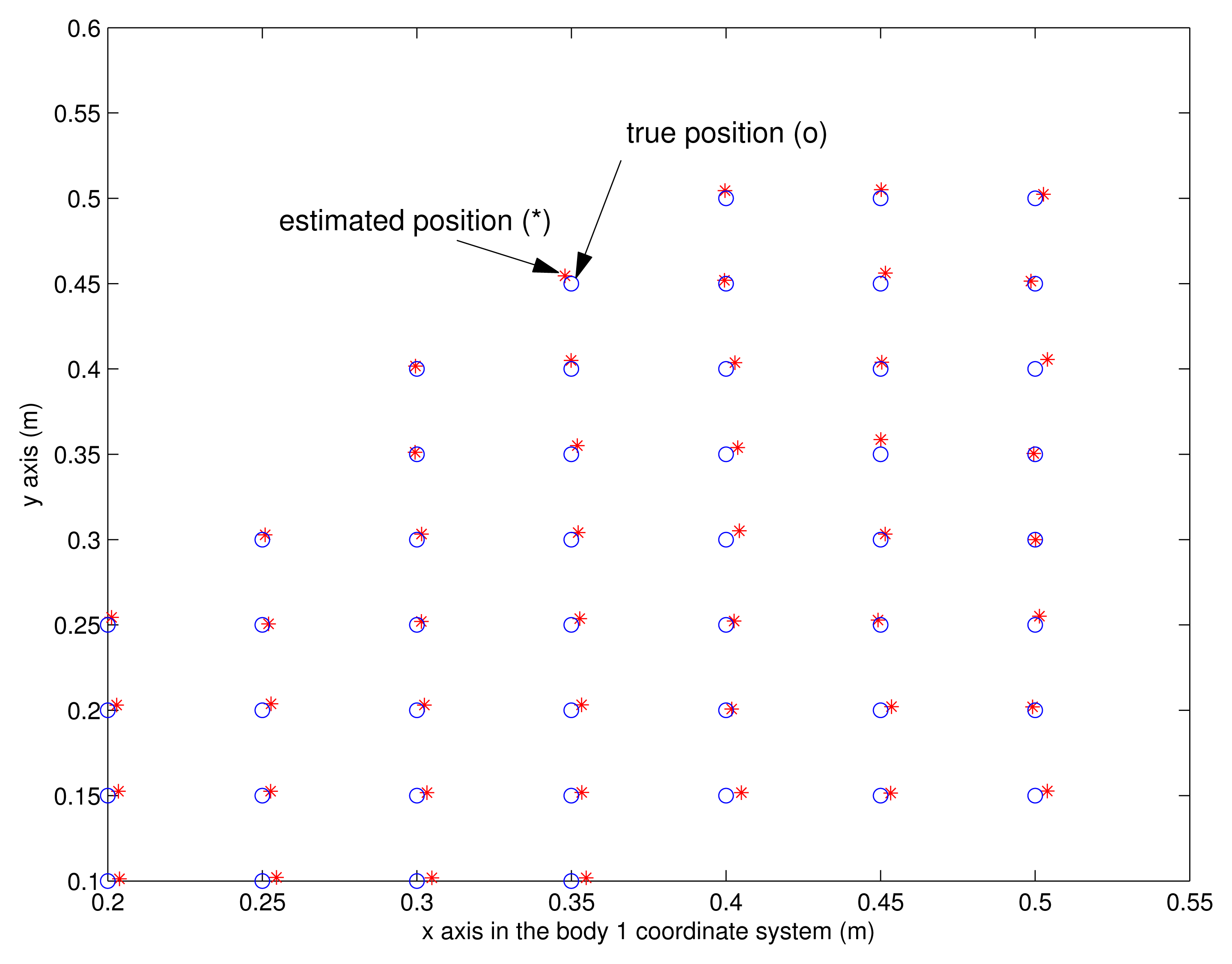

Now the accuracy of the proposed system is evaluated. First, we test the accuracy of the vision-based position estimation, which is used to estimate the vector between the two feet. The left shoe is placed at different positions on a grid while the right shoe is kept at a fixed position. The estimated left shoe position with respect to the right shoe is compared with the true value, which can be obtained from the grid. The result is given in Figure 10, where the position represents the origin of the body 2 coordinate system in the body 1 coordinate system. We can see that the position can be accurately estimated using the proposed system (eight infrared LEDs). The mean error distance is 0.4 cm and the maximum error distance is 0.8 cm.

The next task is to evaluate the accuracy of the trajectories. A person walked on a long sheet of white paper with marker pens attached to both shoes, so that dots are marked on the paper whenever a foot touches the floor. The marked dot positions are measured with a ruler and these values are considered to be the true values. The estimated positions during zero velocity intervals (when one foot is on the floor) are compared with the marked dots. The result for one step is given in Figure 11.

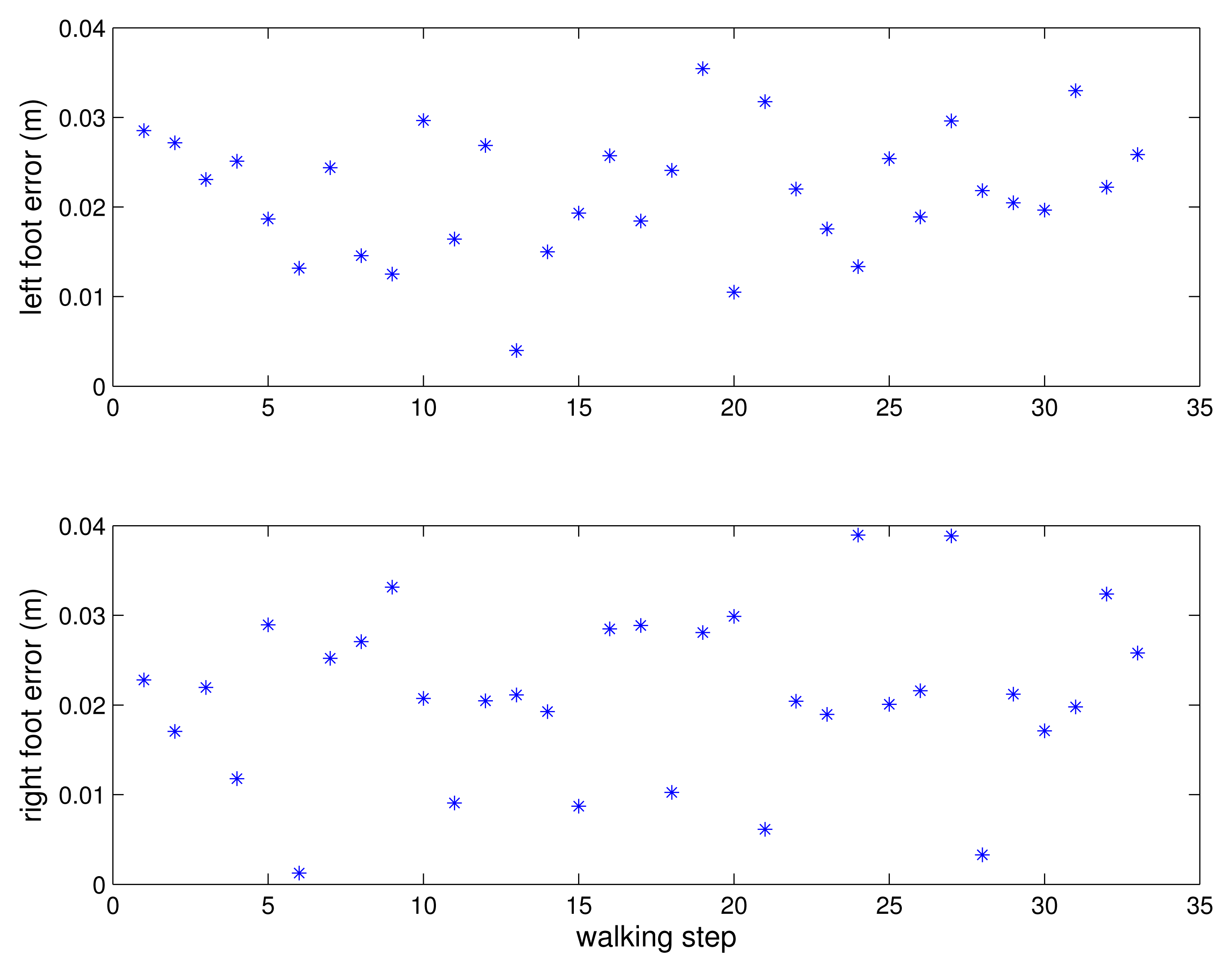

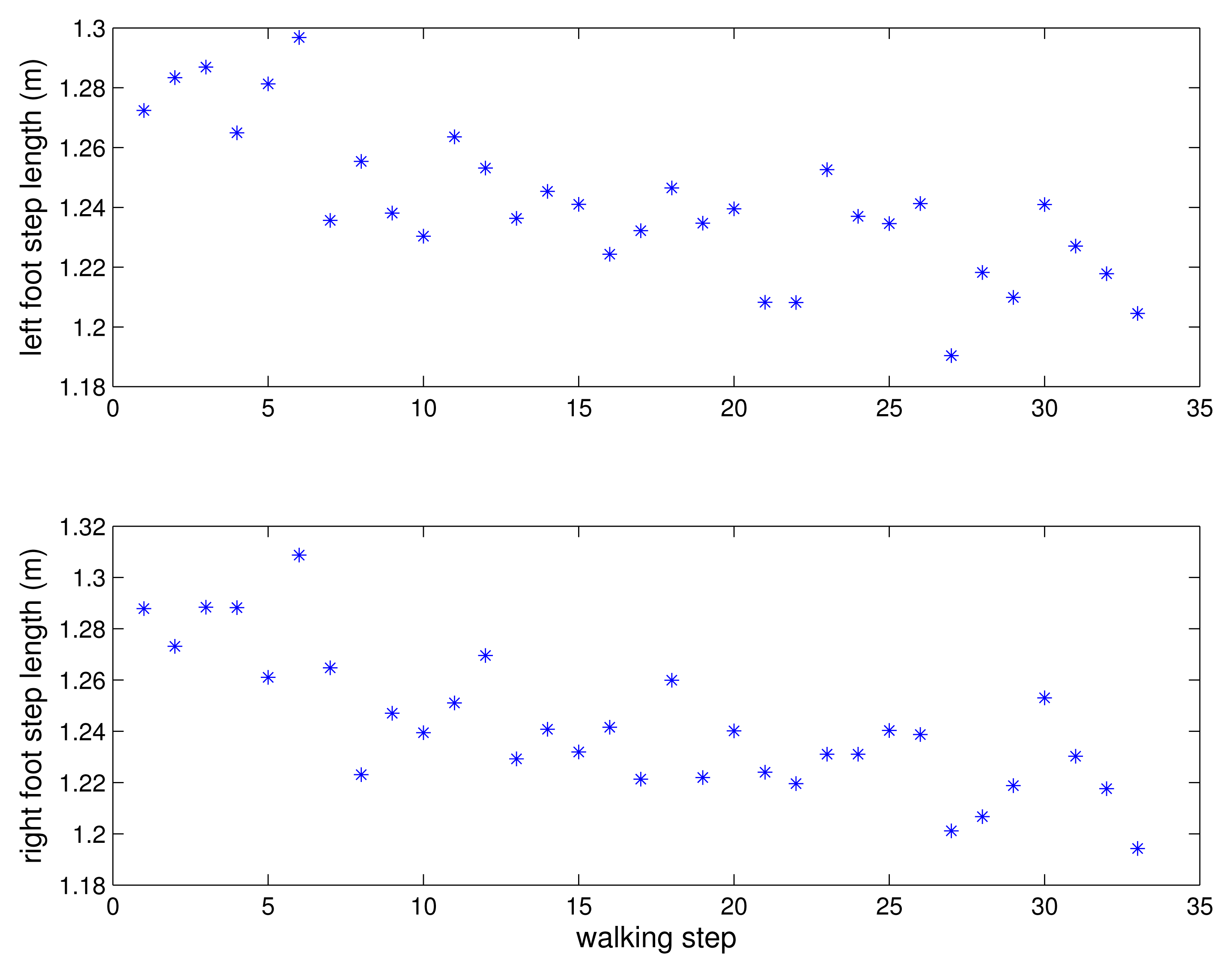

A person walked 33 steps and the errors between the estimated positions and the marked positions are given in Figure 12 for each step. The estimated step length is given in Figure 13. The mean errors are 2.2 cm for the left foot and 2.1 cm for the right foot. The maximum errors are 3.6 cm for the left foot and 3.89 cm for the right foot. Two more experiments were done and the mean errors are 2.5 cm and 1.2 cm for the left foot and 2.5 cm and 1.7 cm for the right foot. The maximum errors are 4.1 cm and 2.8 cm for the left foot and 3.9 cm and 5.4 cm for the right foot. The 2 cm level error is too large for the kinetic calculations. However, the proposed system is suitable for the gait analysis system requiring basic gait parameters such as walking step length and walking speed.

6. Conclusions

Using inertial sensors on shoes, two feet motion is estimated using an inertial navigation algorithm. When two feet motion is estimated, it is necessary to measure the relative position between the two feet. In the proposed system, a vision system is used to measure the relative position and attitude between two feet.

Using the proposed system, we can obtain quantitative gait analysis parameters such as step length, stride length, foot angle and walking speed. Also we can see three dimensional trajectories of the two feet, which give qualitative information for gait analysis.

The accuracy of the proposed system is evaluated by measuring the position of a foot when a foot touches the floor. The mean position error is 1.2–2.5 cm and the maximum position error is 5.4 cm. For gait analysis, we believe the error is in an acceptable range.

The main contribution of the proposed system is that two feet motion can be observed at any place as long as the floor is flat. Commercial camera-based motion tracking systems such as Vicon require a dedicated experiment space. Thus we believe that more natural walking patterns can be observed using the proposed system.

Acknowledgments

This work was supported by the 2013 Research Fund of University of Ulsan.

Conflict of Interest

The authors declare no conflict of interest.

References

1. Perry, J. Gait Analysis: Normal and Pathological Function; SLACK Incorporated: Thorofare, NJ, USA, 1992.

2. Karaulova, I.A.; Hall, P.M.; Marshall, A.D. Tracking people in three dimensions using a hierarchical model of dynamics. Image Vision Comput. 2002, 20, 691–700.

3. Yun, J. User identification using gait patterns on UbiFloorII. Sensors 2011, 11, 2611–2639.

4. Teixido, M.; Palleja, T.; Tresanchez, M.; Nogues, M.; Palacin, J. Measuring oscillating walking paths with a LIDAR. Sensors 2011, 11, 5071–5086.

5. Zhang, B.; Jiang, S.; Wei, D.; Marschollek, M.; Zhang, W. State of the Art in Gait Analysis Using Wearable Sensors for Healthcare Applications. Proceedings of 2012 IEEE/ACIS 11th International Conference on the Computer and Information Science (ICIS), Shanghai, China, 30 May 2012; pp. 213–218.

6. Kappel, S.L.; Rathleff, M.S.; Hermann, D.; Simonsen, O.; Karstoft, H.; Ahrendt, P. A novel method for measuring in-shoe navicular drop during gait. Sensors 2012, 12, 11697–11711.

7. Tao, W.; Liu, T.; Zheng, R.; Feng, H. Gait analysis using wearable sensors. Sensors 2012, 12, 2255–2283.

8. Schepers, H.M.; Koopman, H.F.J.M.; Veltink, P.H. Ambulatory assessment of ankle and foot dynamics. IEEE Trans. Biomed. Eng. 2007, 54, 895–902.

9. Do, T.N.; Suh, Y.S. Gait analysis using floor markers and inertial sensors. Sensors 2012, 12, 1594–1611.

10. Titterton, D.H.; Weston, J.L. Strapdown Inertial Navigation Technology; Peter Peregrinus Ltd.: Reston, VA, USA, 1997.

11. Foxlin, E. Pedestrian tracking with shoe-mounted inertial sensors. IEEE Comput. Graph. Appl. 2005, 25, 38–46.

12. Ojeda, L.; Borenstein, J. Non-GPS navigation for security personnel and first responders. J. Navig. 2007, 60, 391–407.

13. Bebek, O.; Suster, M.A.; Rajgopal, S.; Fu, M.J.; Xuemei, H.; Cavusoglu, M.C.; Young, D.J.; Mehregany, M.; van den Bogert, A.J.; Mastrangelo, C.H. Personal navigation via high-resolution gait-corrected inertial measurement units. IEEE Trans. Instrum. Meas. 2010, 59, 3018–3027.

14. Kelly, A. Personal Navigation System based on Dual Shoe-Mounted IMUs and Intershoe Ranging. Proceedings of the Precision Personnel Locator Workshop 2011, Worcester, MA, USA, 1–2 August 2011.

15. Suh, Y.S.; Phuong, N.H.Q.; Kang, H.J. Distance estimation using inertial sensor and vision. Int. J. Control Autom. Syst. 2013, 11, 211–215.

16. Markley, F.L. Multiplicative vs. Additive Filtering for Spacecraft Attitude Determination. Proceedings of 6th Cranfield Conference on Dynamics and Control of Systems and Structures in Space, Riomaggiore, Italy, 18–22 July 2004; pp. 467–474.

17. Forsyth, D.A.; Ponce, J. Computer Vision: A Modern Approach; Prentice Hall: New York, NY, USA, 2003.

18. Lu, C.P.; Hager, G.D.; Mjolsness, E. Fast and globally convergent pose estimation from video images. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 610–622.

19. Peruzzi, A.; Croce, U.D.; Cereatti, A. Estimation of stride length in level walking using an inertial measurement unit attached to the foot: A validation of the zero velocity assumption during stance. J. Biomechan. 2011, 44, 1991–1994.

20. Suh, Y.S. A smoother for attitude and position estimation using inertial sensors with zero velocity intervals. IEEE Sens. J. 2012, 12, 1255–1262.

21. Brown, R.G.; Hwang, P.Y.C. Introduction to Random Signals and Applied Kalman Filtering; John Wiley & Sons: New York, NY, USA, 1997.

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license ( http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Hung, T.N.; Suh, Y.S. Inertial Sensor-Based Two Feet Motion Tracking for Gait Analysis. Sensors 2013, 13, 5614-5629. https://doi.org/10.3390/s130505614
