A Vehicle Steering Recognition System Based on Low-Cost Smartphone Sensors

Abstract

1. Introduction

- (1) Smartphone posture alignment: the smartphone’s posture must be determined before the gyroscope data are collected; otherwise, the vehicle’s state cannot be derived accurately;

- (2) Steering time detection: to start recognition automatically, it is critical to pinpoint the time at which a steering maneuver begins;

- (3) Vehicle steering maneuver recognition: the steering maneuver must be recognized from the vehicle’s angular velocity and the surrounding environmental conditions.

- We propose a smartphone-based system that recognizes vehicle steering maneuvers, including turns, lane changes and U-turns, and characterizes these maneuvers by the gyroscope data of a smartphone mounted in the vehicle.

- We design a new algorithm for recognizing vehicle steering maneuvers. It distinguishes different steering maneuvers by combining the vehicle’s angular velocity, the state of the turn signals, the change in heading angle and the weather conditions, which improves the recognition accuracy.

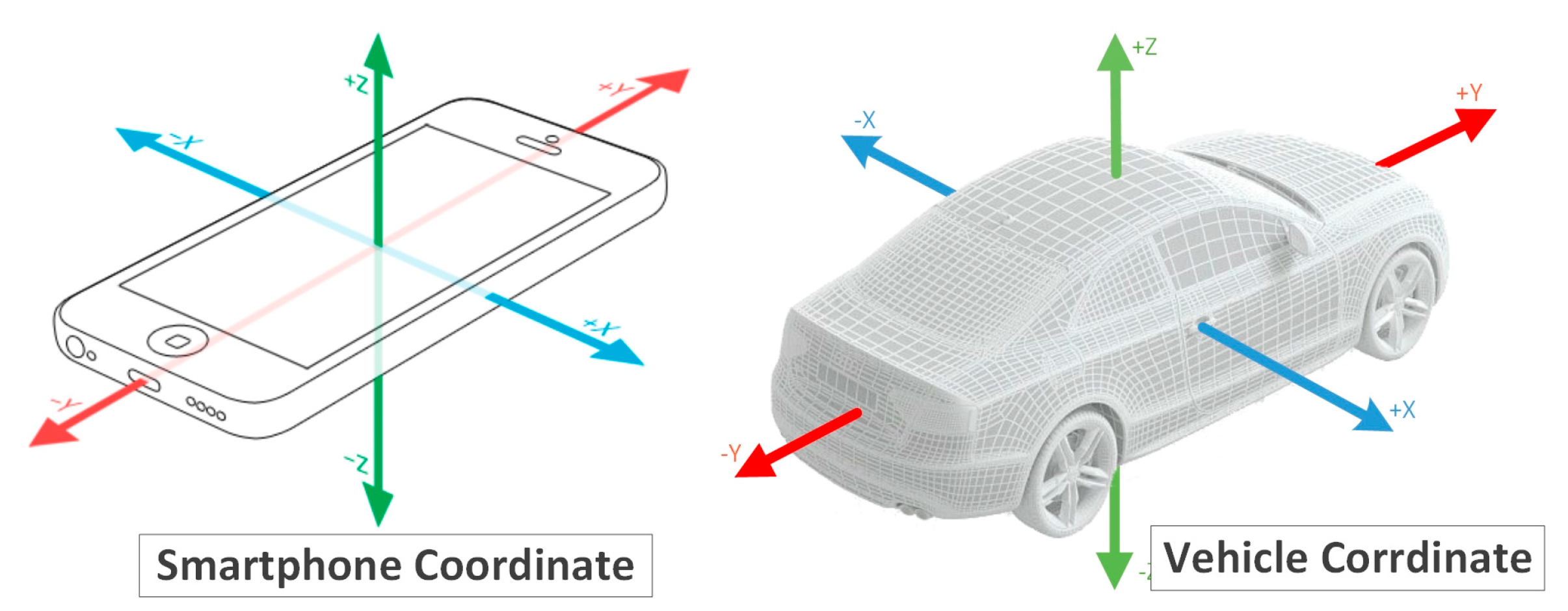

- We align the gyro’s coordinate system with the vehicle’s coordinate system by rotating the gyro’s coordinate system twice.

- Extensive experiments are conducted and the experimental results demonstrate the feasibility and efficiency of our recognition system.

2. Related Work

2.1. Based on Video

2.2. Based on Vehicle Sensors

2.3. Based on Smartphone Sensors

3. Vehicle Steering Recognition

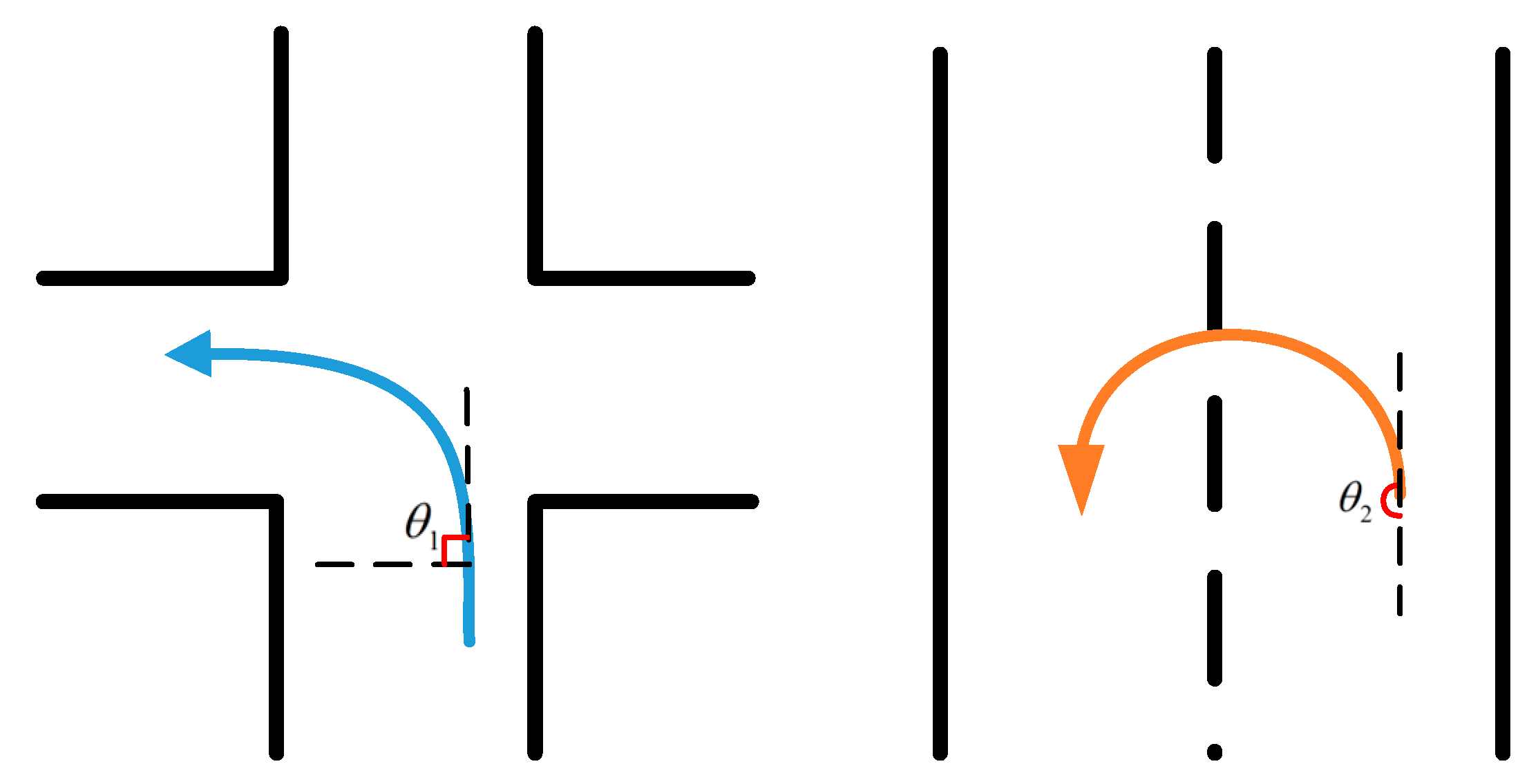

3.1. Steering Maneuver Modeling

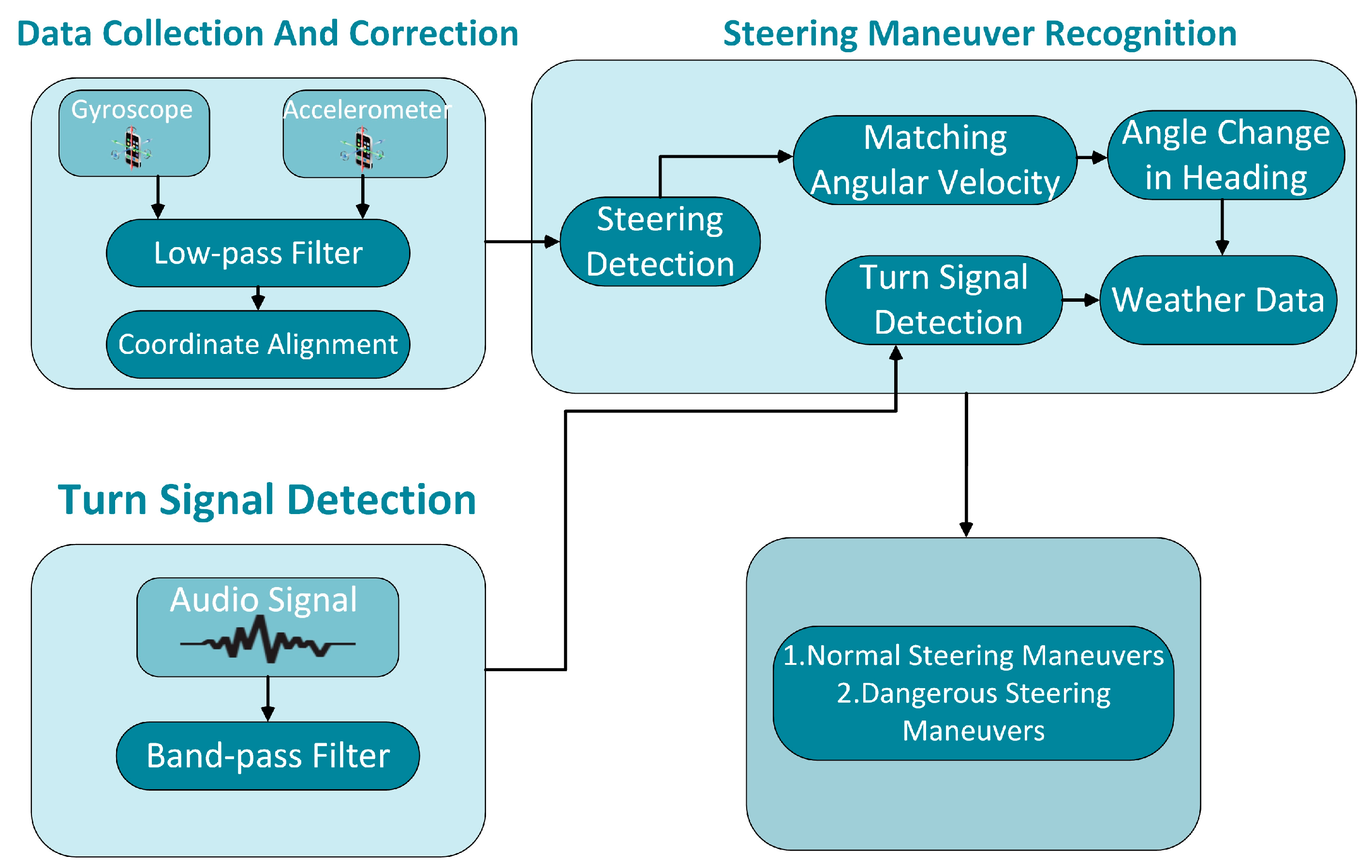

3.2. System Overview

- Data Collection and Correction Module: Because the smartphone is not fixed at a particular location in the vehicle, its inertial sensor data cannot directly reflect the vehicle’s angular velocity when the vehicle’s body rotates. We obtain the correct angular velocity by aligning the three-axis gyroscope’s coordinate system with the vehicle’s coordinate system with the help of the accelerometer data.

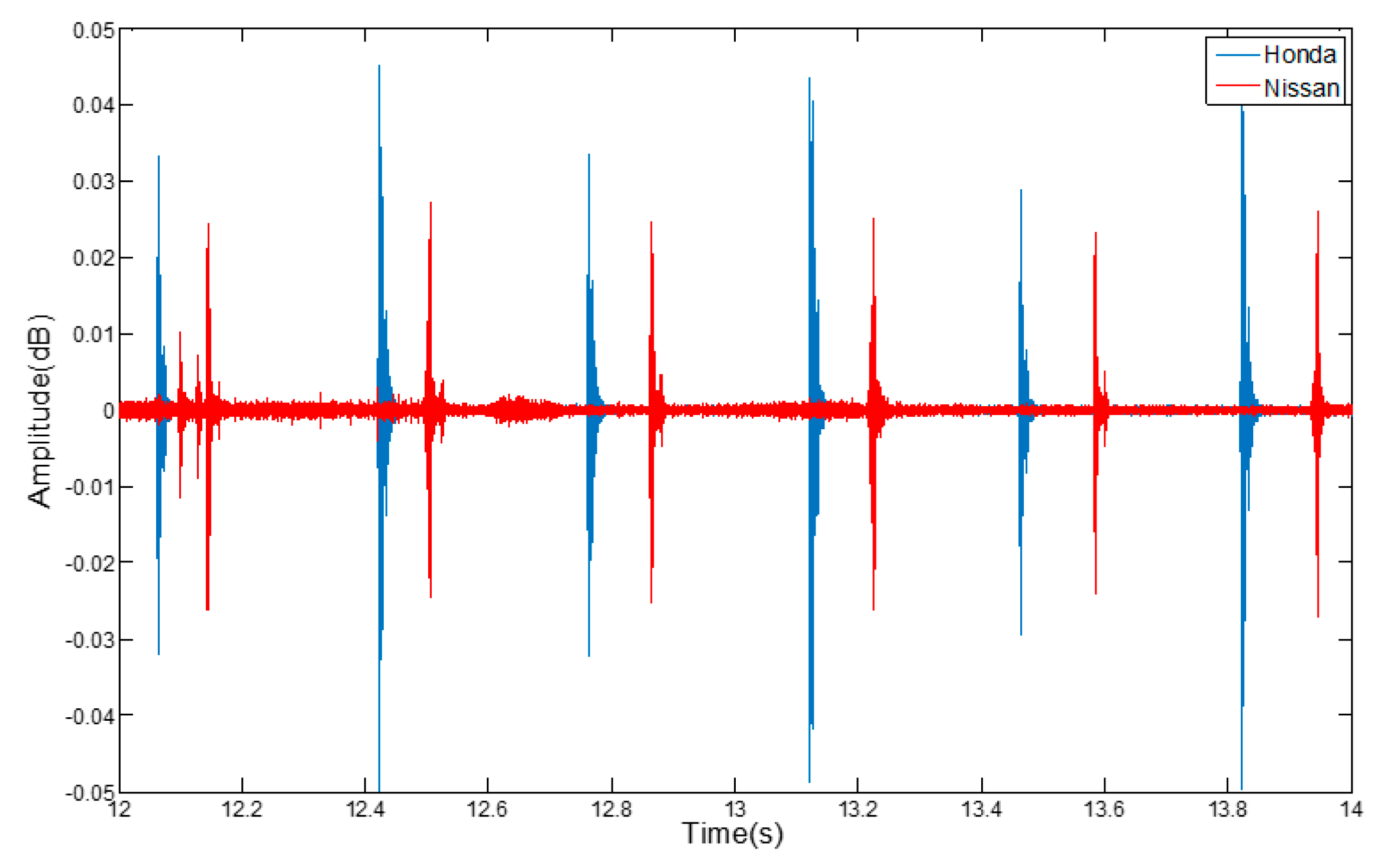

- Turn Signal Detection Module: Many traffic accidents are caused by drivers who do not use their turn signals when turning or changing lanes. We therefore use the smartphone’s microphone to determine whether the driver has turned on the turn signal by detecting the turn signal’s sound.

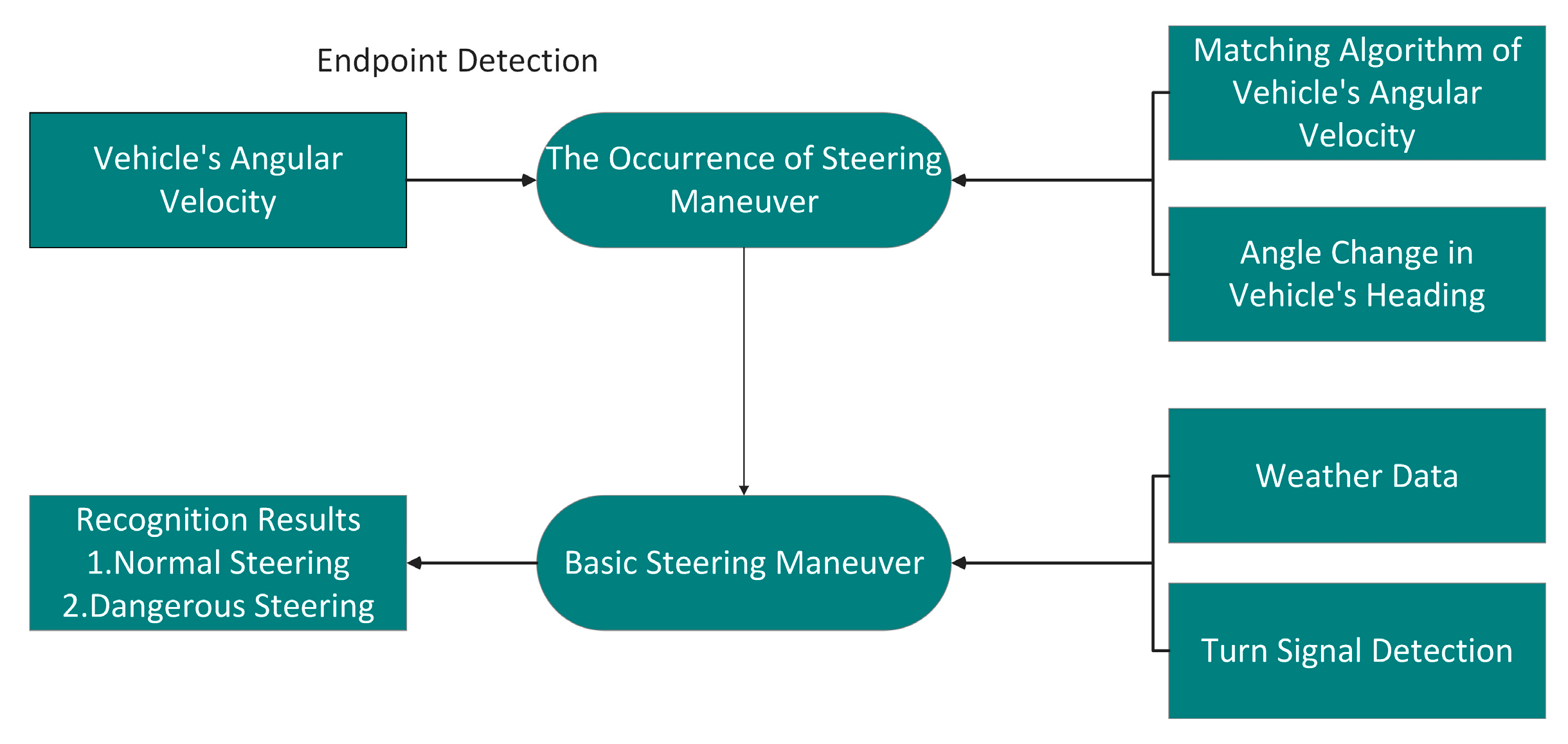

- Steering Recognition Module: Existing recognition algorithms for vehicle maneuvers are not comprehensive enough. In this module, we therefore first design an endpoint detection algorithm to determine when a steering maneuver occurs. We then build the recognition algorithm on waveform matching of the vehicle’s angular velocity, combined with the change in the vehicle’s heading angle, the audio frequency of the turn signals and the weather conditions.
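The change in heading angle used by the steering recognition module can be obtained by integrating the aligned yaw (z-axis) angular velocity over a detected steering segment. The sketch below uses the trapezoidal rule; the function name `heading_change`, the fixed sampling interval `dt` and the choice of integration rule are illustrative assumptions, not details given in the paper.

```python
import math


def heading_change(yaw_rate, dt):
    """Total heading change (rad) from yaw-rate samples (rad/s) taken every dt seconds.

    Uses the trapezoidal rule: each pair of neighbouring samples contributes
    the average of the two rates multiplied by the sampling interval.
    """
    return sum((a + b) * dt / 2.0 for a, b in zip(yaw_rate, yaw_rate[1:]))


# Hypothetical segment: a constant 0.5 rad/s yaw rate sampled at 10 Hz (32 samples),
# which integrates to roughly a 90-degree turn.
print(math.degrees(heading_change([0.5] * 32, 0.1)))
```

A lane change, whose yaw rate swings one way and then back, integrates to a net heading change near zero, whereas turns and U-turns accumulate a large net change; this is why the heading change complements waveform matching.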

3.3. Data Collection and Correction

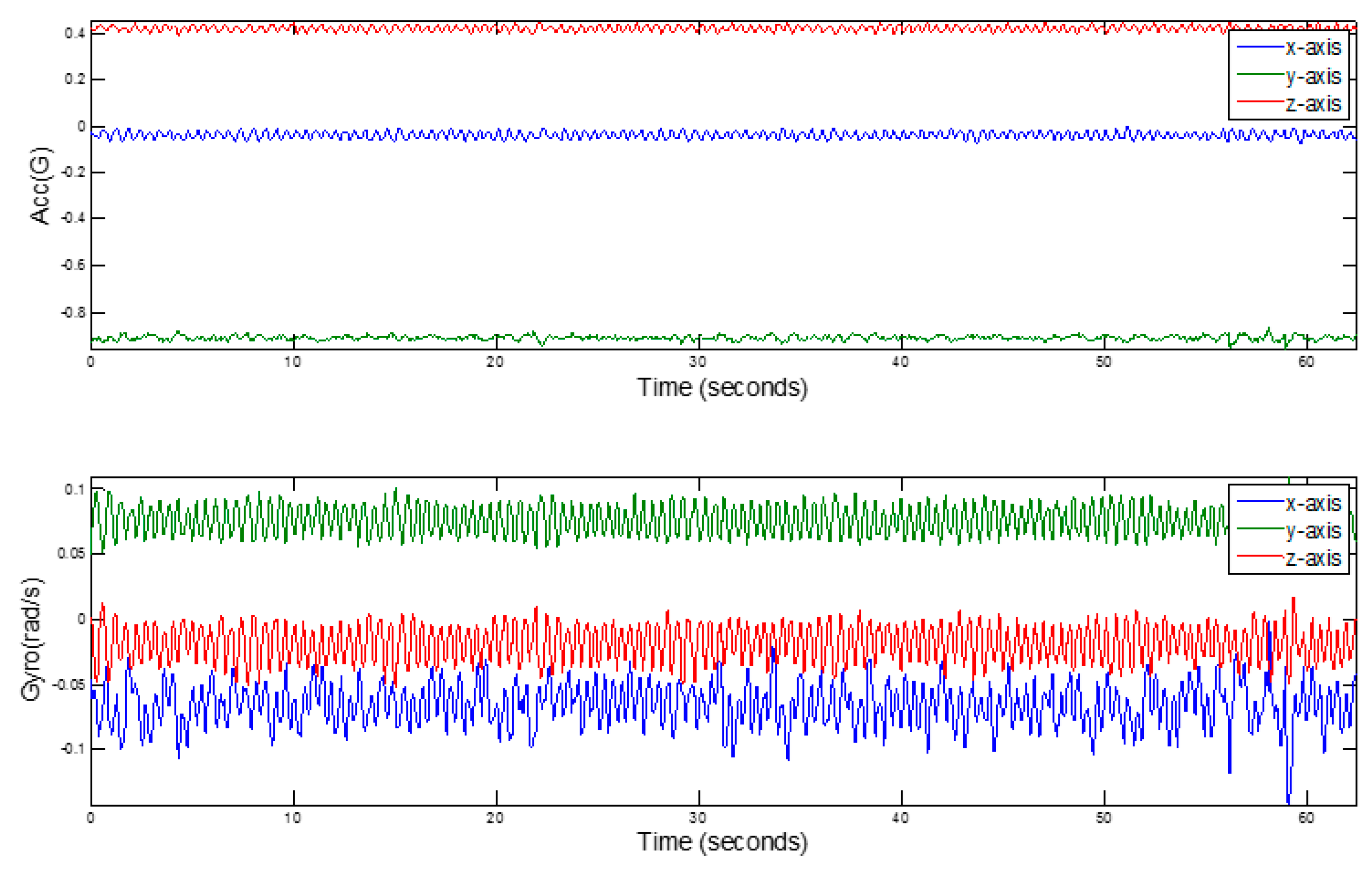

3.3.1. Data Collection of the Inertial Sensor

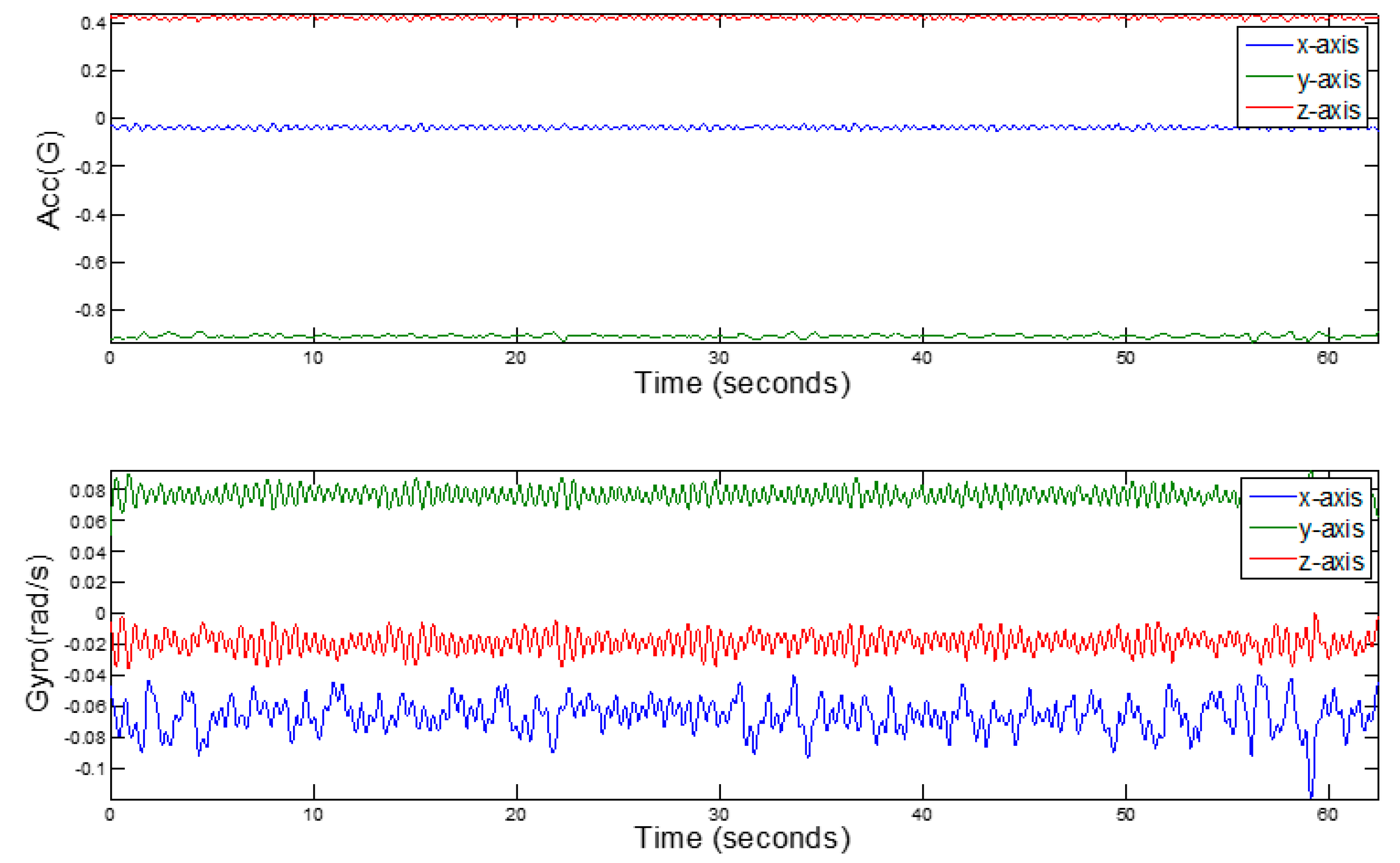

3.3.2. Data Filtering of the Inertial Sensor

3.3.3. Coordinate Alignment

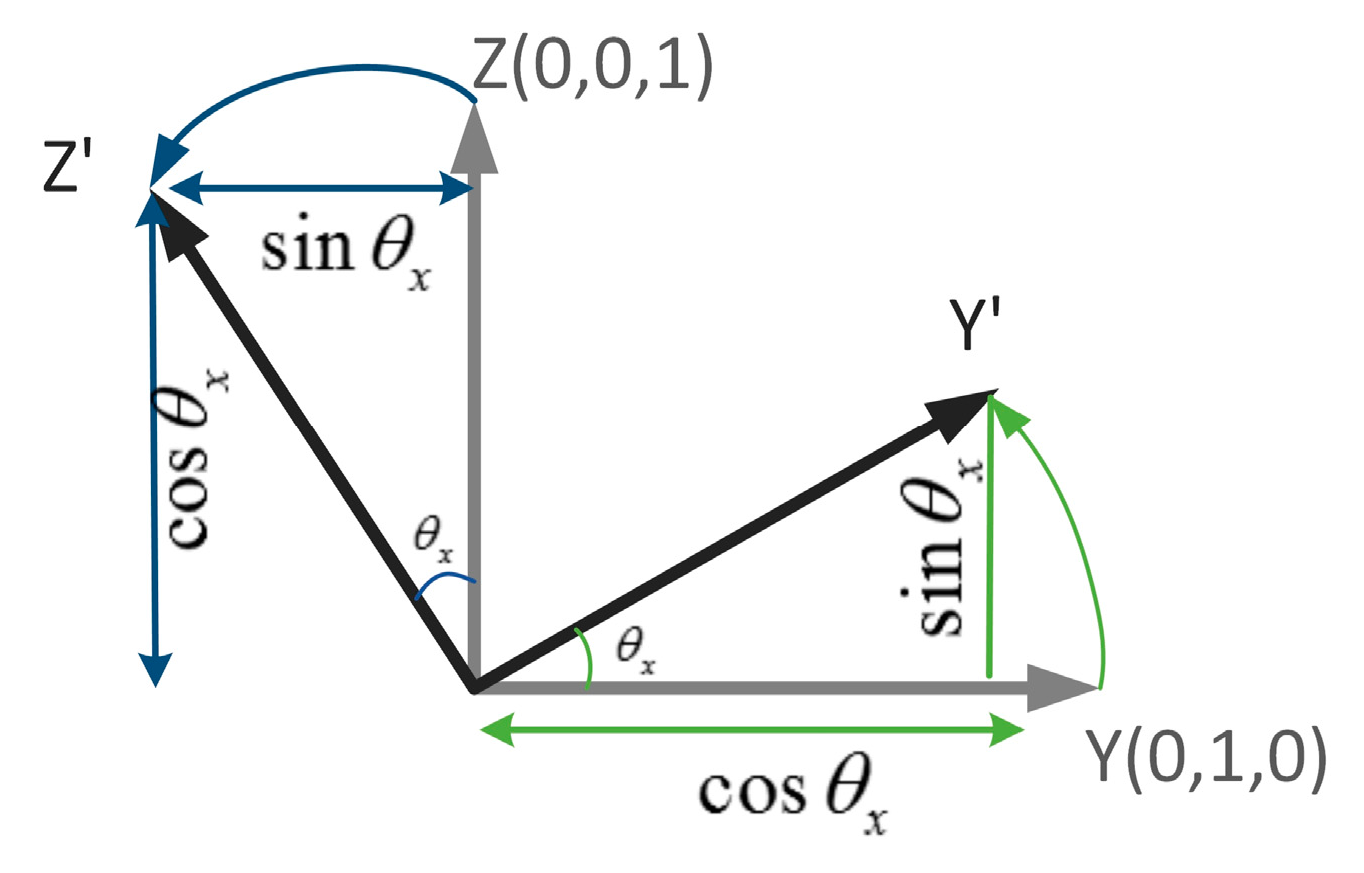

- The first rotation: the smartphone is first rotated from its current posture to the reference coordinate system by applying the inverse of the rotation matrix. Applying this rotation to the original readings of the accelerometer and the three-axis gyroscope yields the acceleration and angular velocity after the first rotation, as shown in Figure 2c:

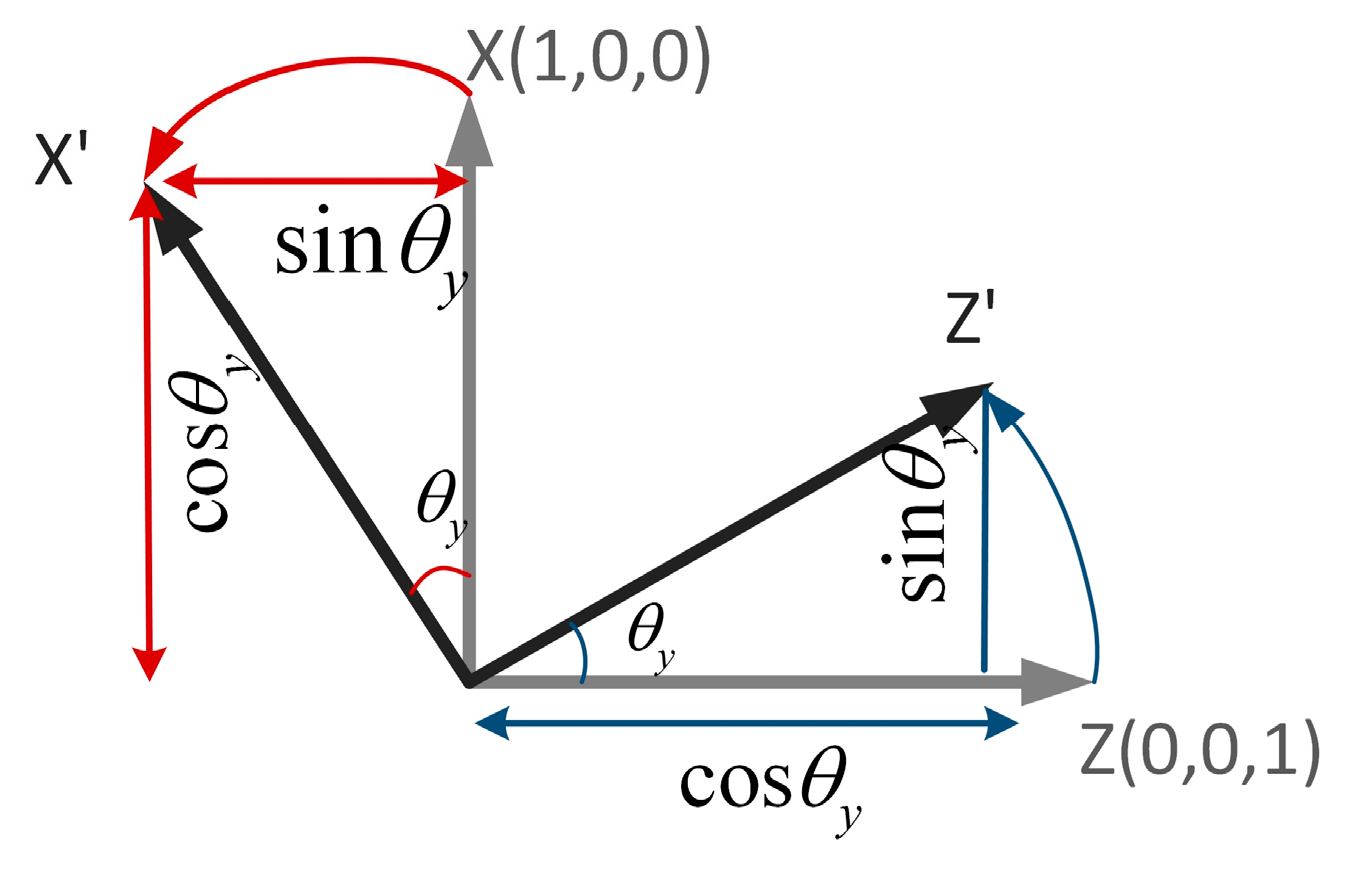

- The second rotation: the second alignment is achieved by obtaining the angle between the x- and y-axes of the smartphone’s coordinate system and those of the vehicle’s coordinate system. The rotation process is shown in Figure 9.
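The two rotations can be sketched as follows. This is a minimal illustration, assuming that the accelerometer reading used for the first rotation is dominated by gravity (vehicle at rest or moving at constant velocity), so that the first rotation can be built with Rodrigues’ formula to map gravity onto the +z axis, and assuming that the yaw offset `theta` between the phone’s x-axis and the vehicle’s forward axis has already been estimated. The function names are hypothetical, and the paper’s own rotation matrices may be parameterized differently.

```python
import math


def mat_mul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(m, v):
    """Apply a 3x3 matrix to a 3-vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def first_rotation(gravity):
    """Rotation mapping the measured gravity reading onto +z (Rodrigues' formula).

    Assumption: the accelerometer reading is dominated by gravity.
    """
    norm = math.sqrt(sum(c * c for c in gravity))
    ux, uy, uz = (c / norm for c in gravity)
    if uz > 1.0 - 1e-12:  # already aligned with +z
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    if uz < -1.0 + 1e-12:  # exactly opposite: rotate 180 degrees about x
        return [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
    # Skew-symmetric matrix of the (unnormalized) axis u x z = (uy, -ux, 0).
    k = [[0.0, 0.0, -ux], [0.0, 0.0, -uy], [ux, uy, 0.0]]
    k2 = mat_mul(k, k)
    s = 1.0 / (1.0 + uz)
    return [[(1.0 if i == j else 0.0) + k[i][j] + s * k2[i][j] for j in range(3)]
            for i in range(3)]


def second_rotation(theta):
    """Rotation about z by -theta.

    theta is the angle (rad) from the phone's x-axis to the vehicle's forward
    axis in the gravity-aligned x-y plane, so the forward axis maps onto +x.
    """
    c, s = math.cos(-theta), math.sin(-theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def align(reading, gravity, theta):
    """Express a raw accelerometer or gyroscope reading in the vehicle frame."""
    r = mat_mul(second_rotation(theta), first_rotation(gravity))
    return mat_vec(r, reading)
```

Under these assumptions, the z component of an aligned gyroscope reading is the vehicle’s yaw rate, which is the signal used by the later recognition steps.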

3.4. Turn Signal Detection

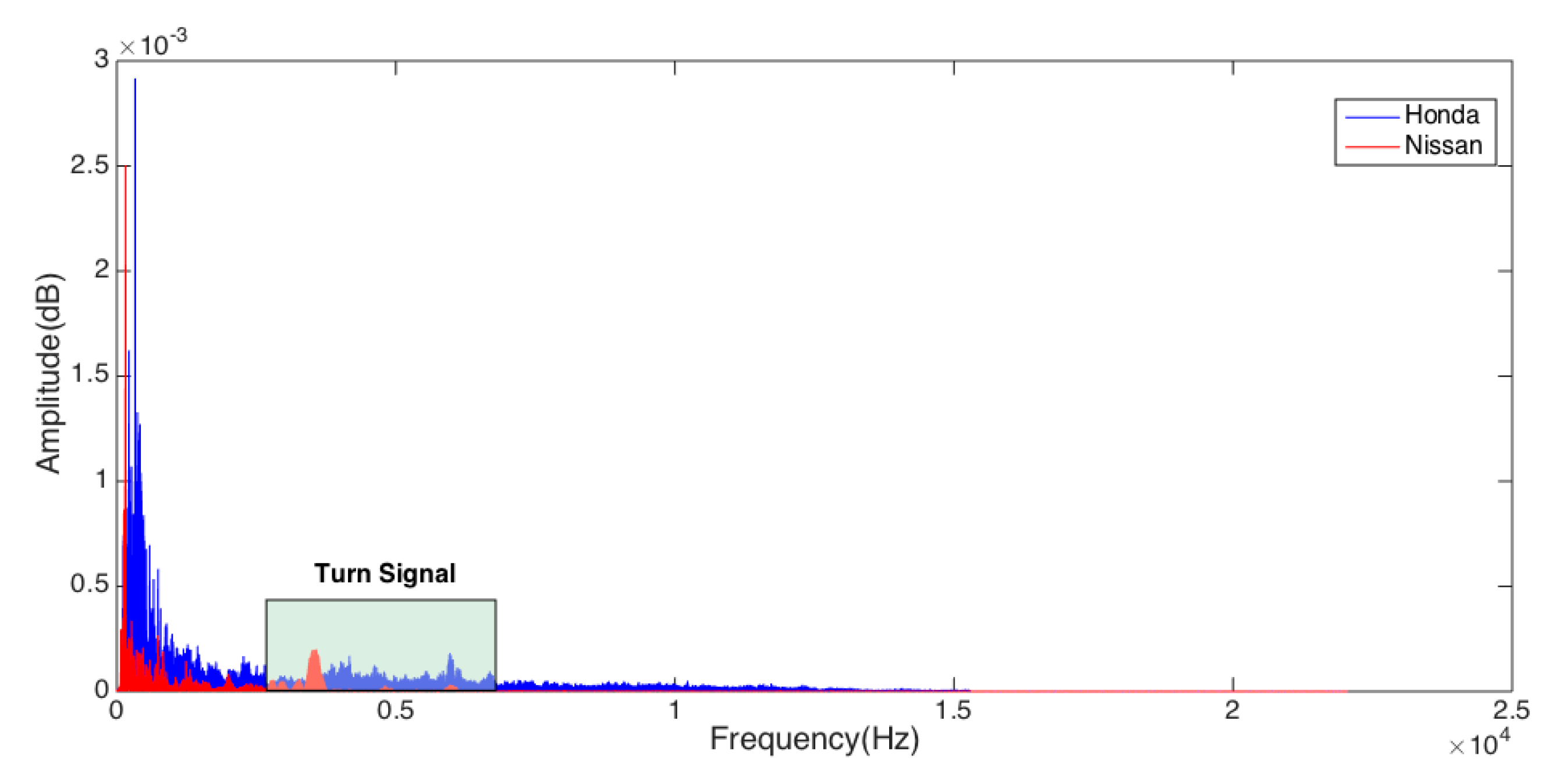

3.4.1. The Features of Turn Signals

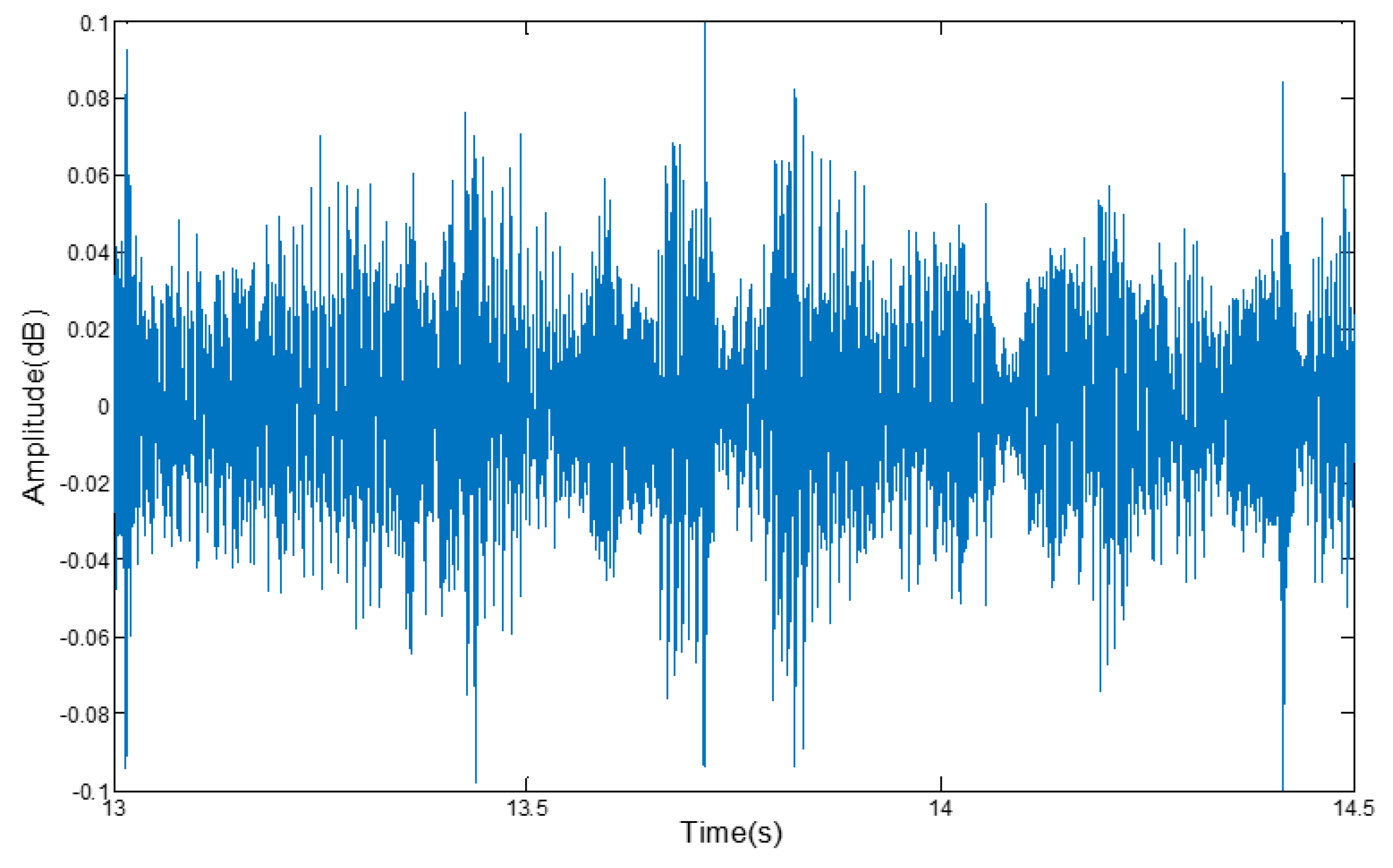

3.4.2. Collect the Audio Signal

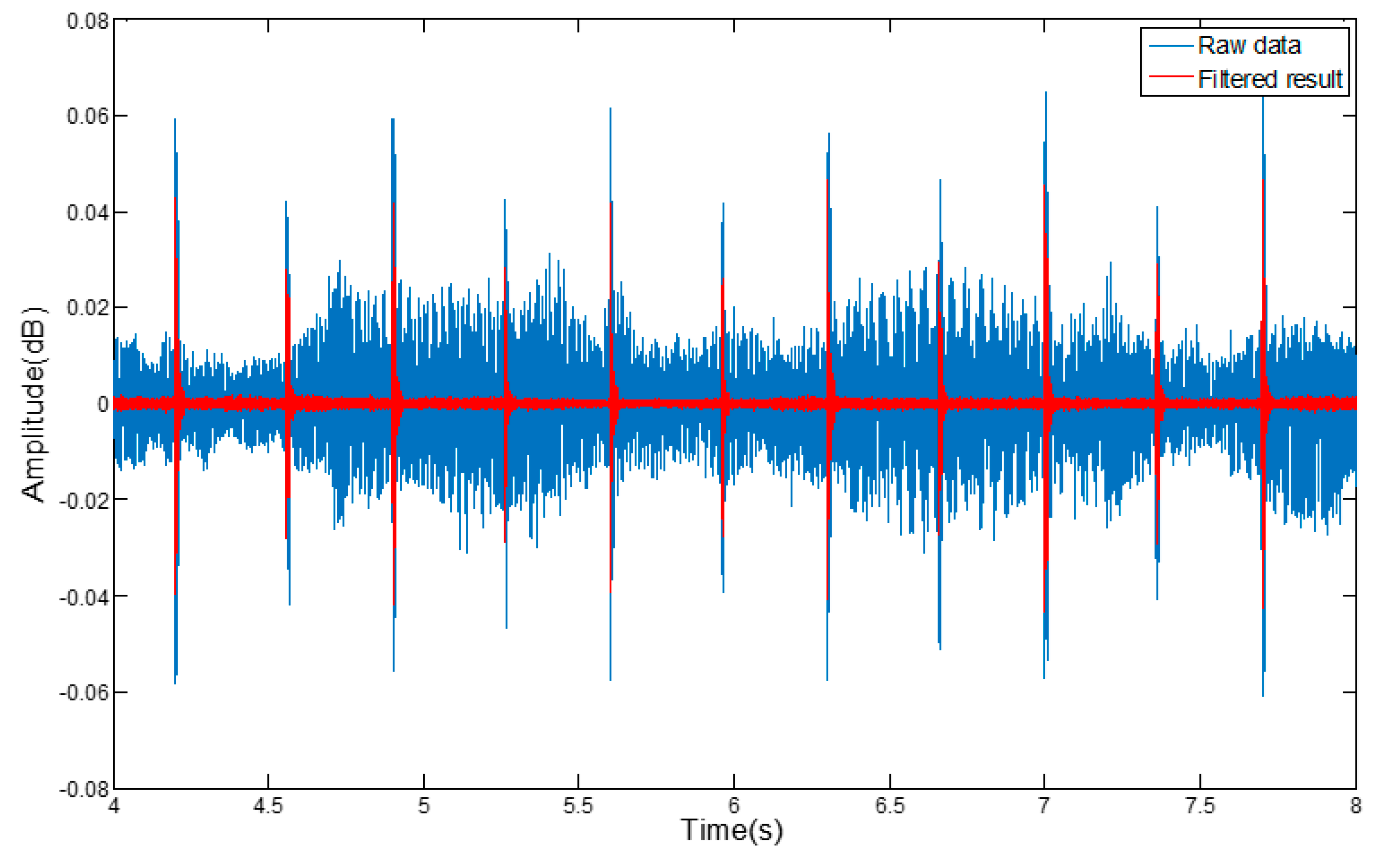

3.4.3. Extract the Audio Signal

3.4.4. Turn Signal Detection

3.5. Vehicle Steering Recognition

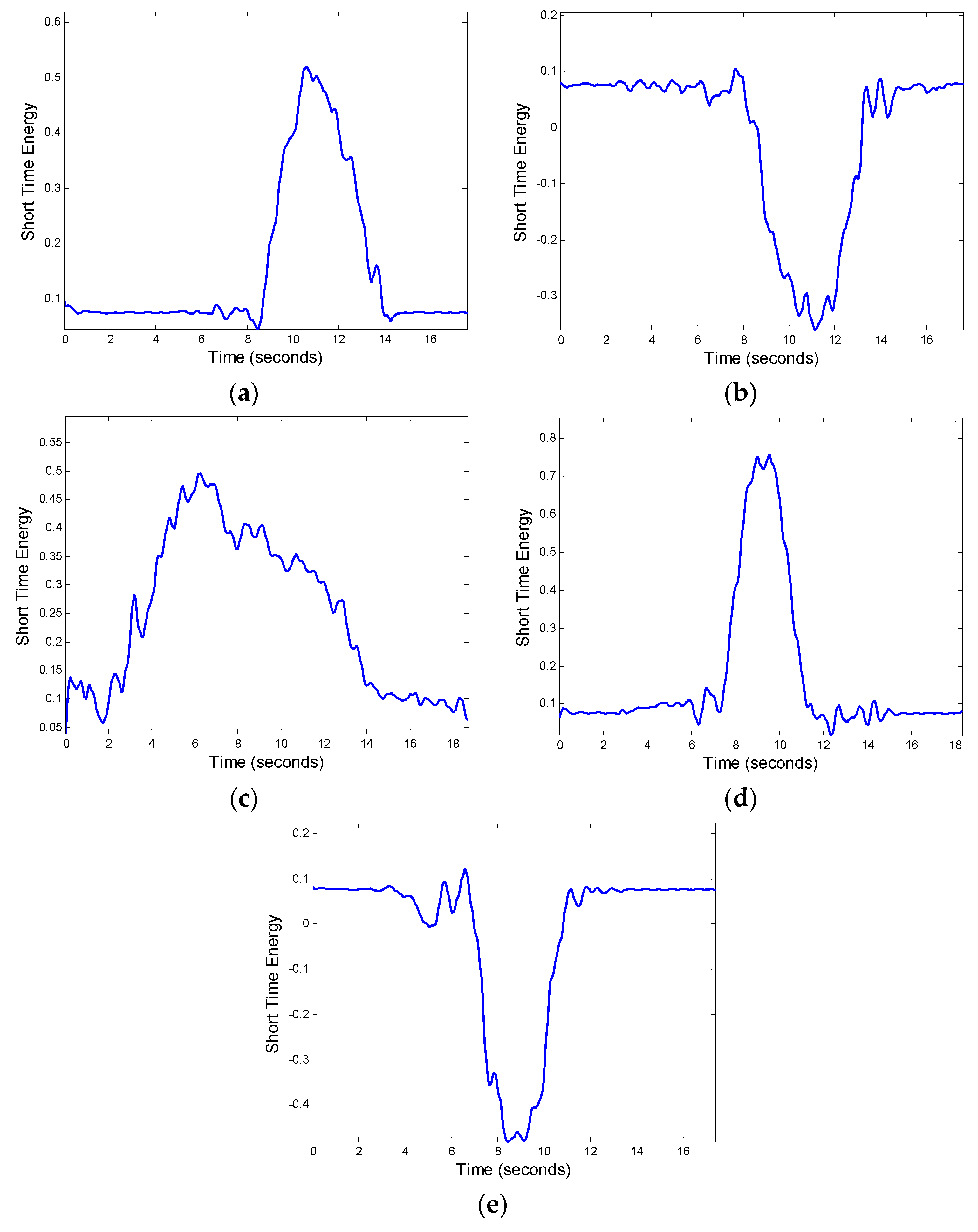

3.5.1. Steering Maneuver Detection

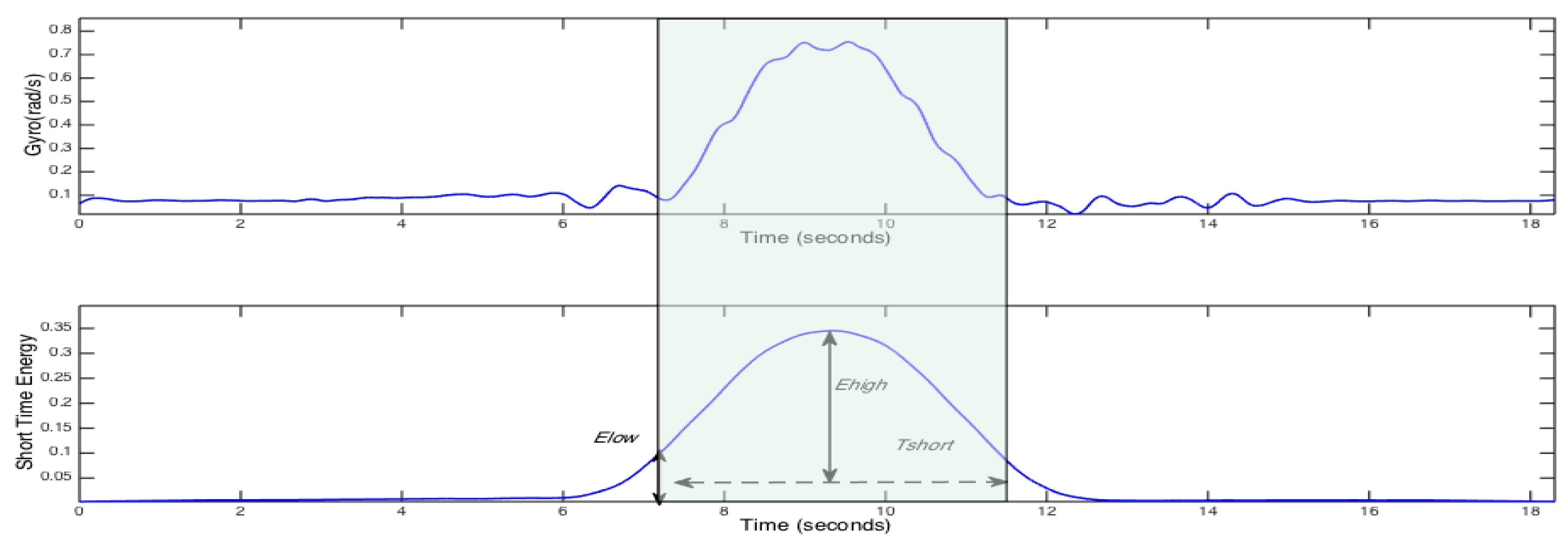

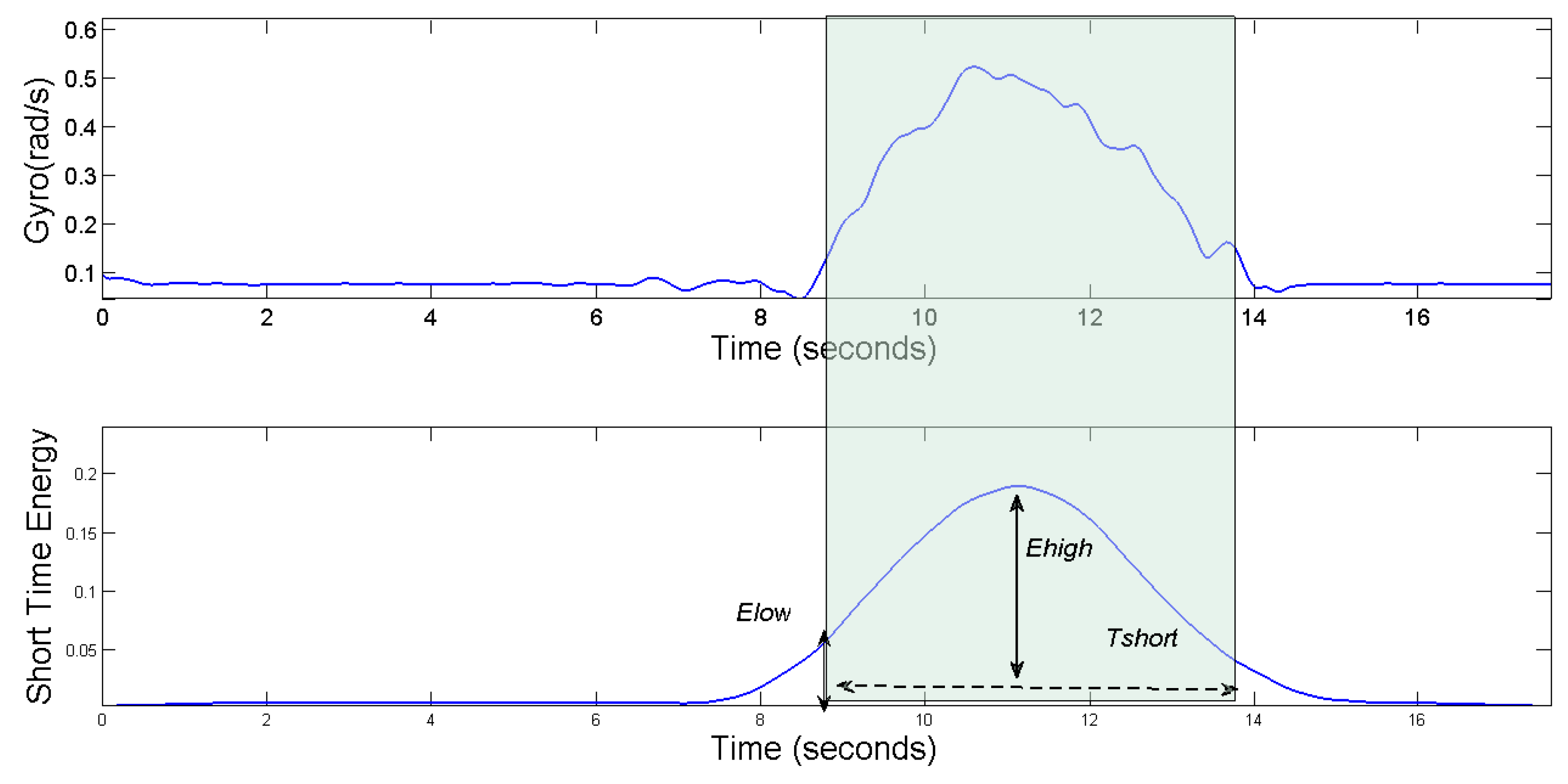

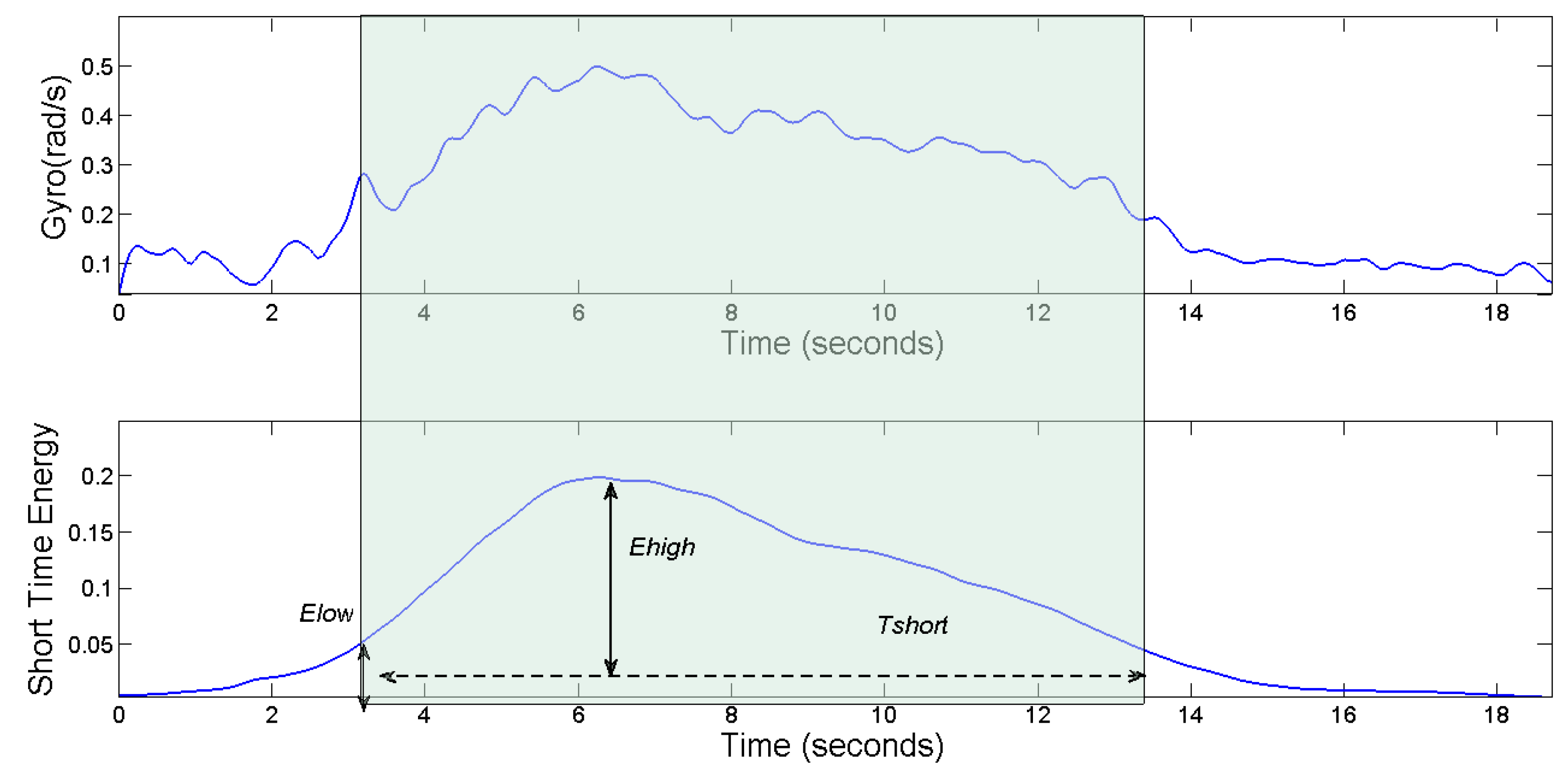

- Short-term energy: signal and noise differ mainly in their energy. The energy of a signal segment is the superposition of the effective signal energy and the noise energy, so it is greater than the energy of a noise-only segment [24,25]. Because the signal energy varies noticeably over time, the short-term energy of the input sequence is defined as:

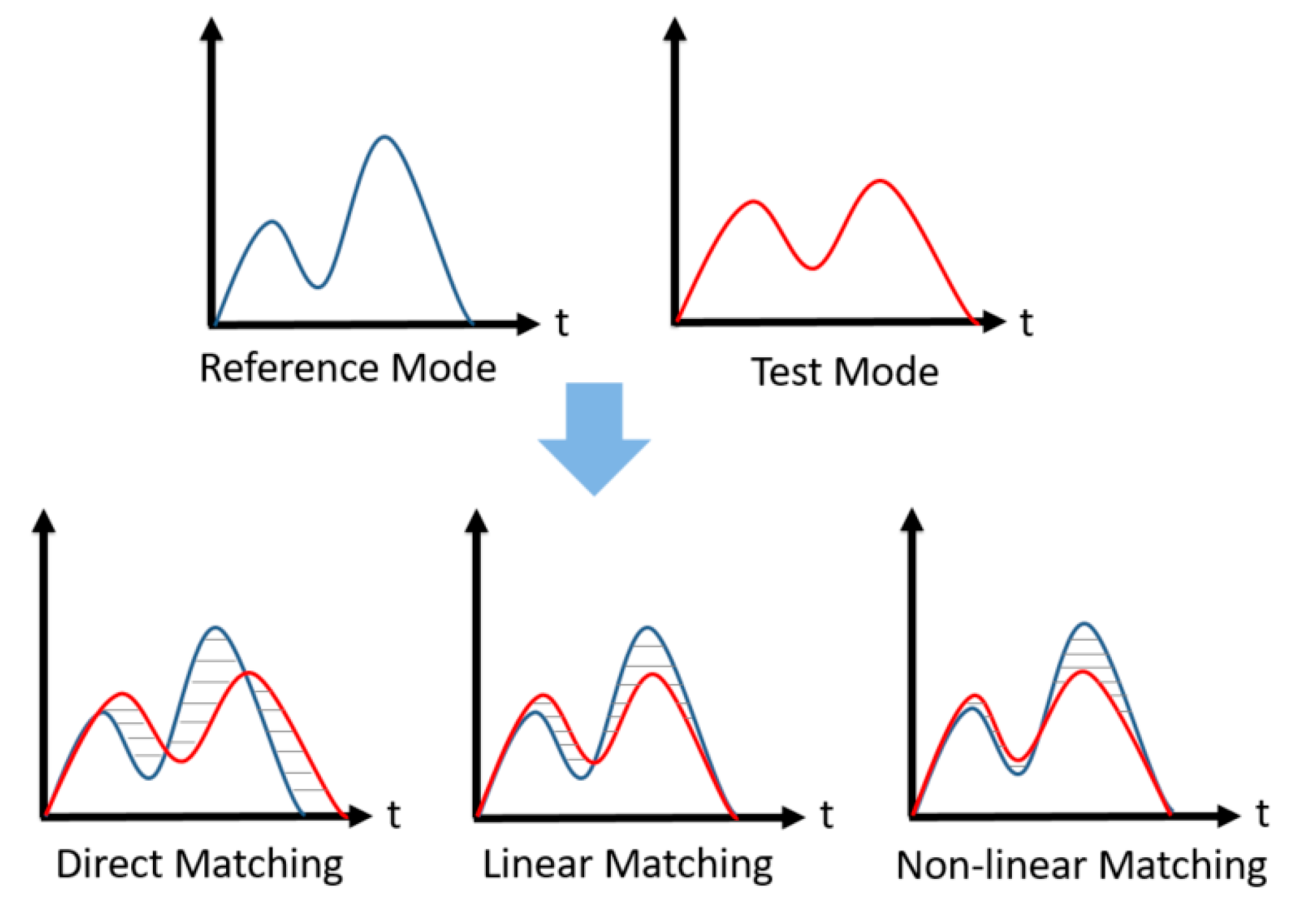

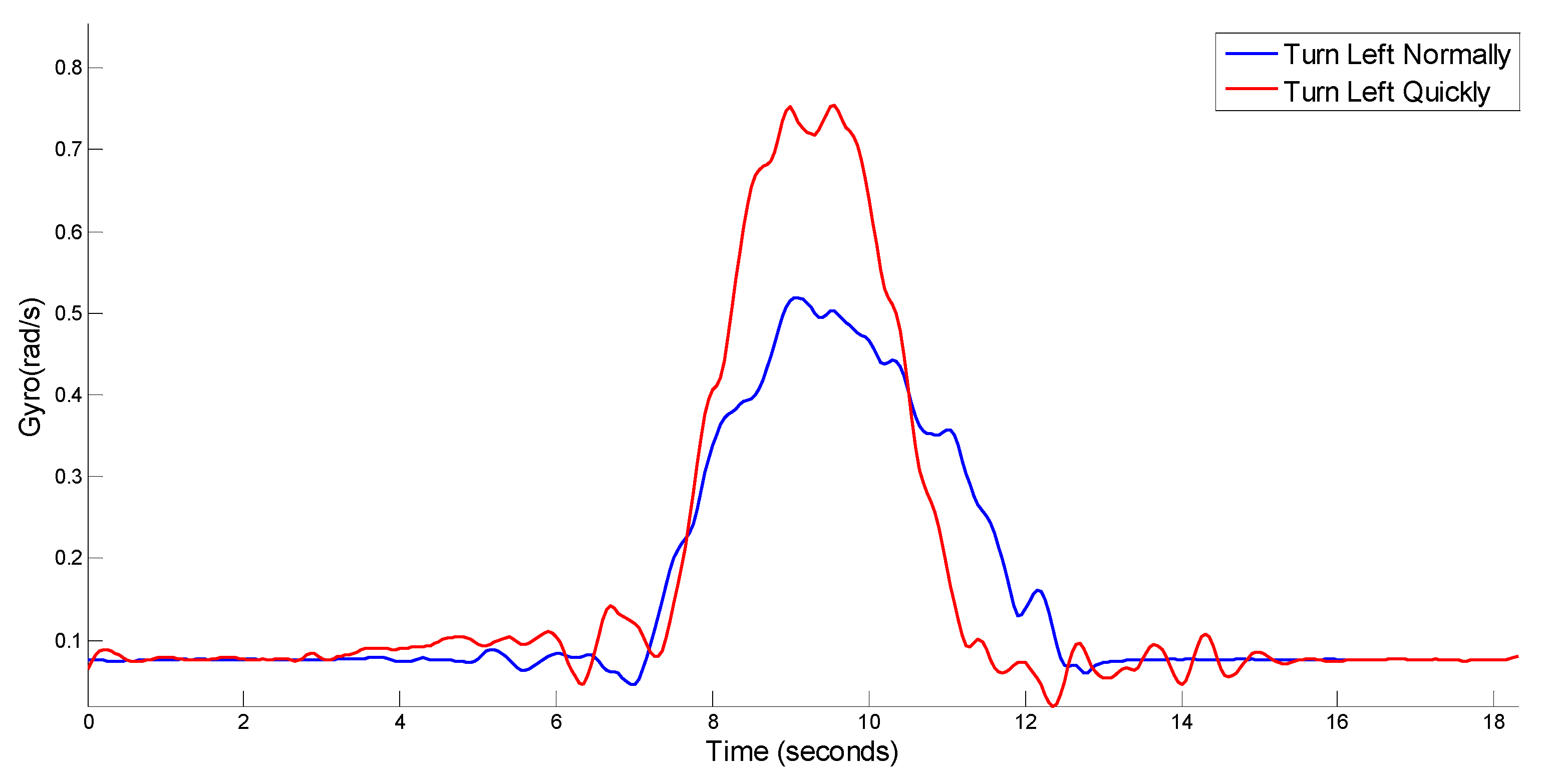
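A common form of short-term energy, as used in speech endpoint detection, sums the squared samples of each frame, $E_n = \sum_{m=n}^{n+N-1} x(m)^2$ for a frame of length $N$ starting at sample $n$. The sketch below computes it over possibly overlapping frames; the frame length `frame_len` and hop size `hop` are hypothetical parameters, since their values are not given in this section.

```python
def short_term_energy(x, frame_len, hop):
    """Short-term energy of each frame: the sum of squared samples.

    x         -- sequence of samples (e.g. aligned gyroscope z-axis readings)
    frame_len -- number of samples per frame (hypothetical choice)
    hop       -- step between the starts of consecutive frames
    Only complete frames are returned.
    """
    return [
        sum(v * v for v in x[start:start + frame_len])
        for start in range(0, len(x) - frame_len + 1, hop)
    ]


print(short_term_energy([0.0, 0.1, 0.6, 0.7, 0.1, 0.0], frame_len=2, hop=2))
```

Frames whose energy rises above the thresholds En_low and En_high in Algorithm 1 become candidate steering segments.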

3.5.2. Matching Vehicle’s Angular Velocity

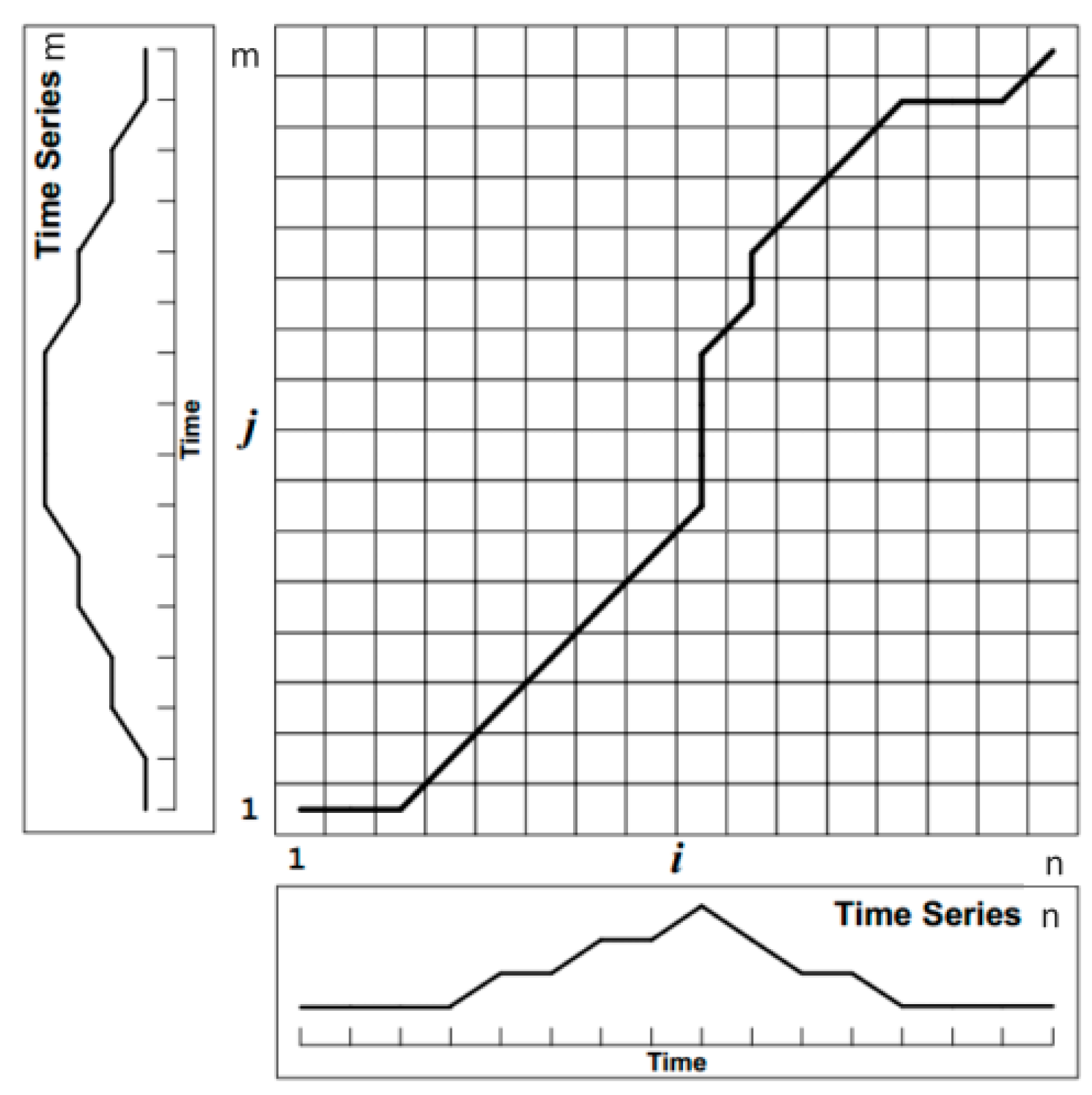

- Boundary constraint: because the order of the time series cannot change, the warping path must start at the first points of both series and end at their last points; in Figure 19 it therefore runs from the lower-left corner to the upper-right corner of the matrix.

- Continuity constraint: the search cannot skip any element when matching, which ensures that every index of the reference template R and of the test template T appears in the warping path W; each step of W therefore advances by at most one index in each series.

- Monotonicity constraint: the search must be monotonically non-decreasing in time, i.e., consecutive points of W must satisfy Equation (17).
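In the standard DTW formulation, these three constraints and the cumulative distance can be written as below. The notation ($R = (r_1, \ldots, r_m)$, $T = (t_1, \ldots, t_n)$, path points $w_k = (a_k, b_k)$, local distance $d$, cumulative distance $\gamma$) is assumed here for illustration and may differ from the paper’s own equations:

$$
\begin{aligned}
&\text{Boundary:} && w_1 = (1, 1), \quad w_K = (m, n) \\
&\text{Continuity:} && a_k - a_{k-1} \le 1, \quad b_k - b_{k-1} \le 1 \\
&\text{Monotonicity:} && a_k - a_{k-1} \ge 0, \quad b_k - b_{k-1} \ge 0 \\
&\text{Recurrence:} && \gamma(i, j) = d(r_i, t_j) + \min\{\gamma(i-1, j-1),\ \gamma(i-1, j),\ \gamma(i, j-1)\}
\end{aligned}
$$

with $\gamma(1, 1) = d(r_1, t_1)$, $\gamma = \infty$ outside the matrix, and $\mathrm{DTW}(R, T) = \gamma(m, n)$. Continuity and monotonicity together restrict every step of the path to $(1, 0)$, $(0, 1)$ or $(1, 1)$, which is exactly why the recurrence considers only those three predecessors.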

- (1) Reduce the resolution: shrink each time series so that as few data points as possible represent the original series; this reduces the dimensions of the template matrix, i.e., lowers its resolution.

- (2) Projection: run DTW on the lower-resolution template matrix to search for the optimal warping path, which then guides the search at the next higher resolution.

- (3) Increase the resolution: the template matrix cells traversed by the warping path obtained at low resolution are refined at the higher resolution. As shown in Figure 20, to find the optimal warping path, a search radius r is added at the higher resolution, i.e., the path is extended by r cells on both sides.
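The three steps above can be sketched as follows. This is a simplified re-implementation of the FastDTW idea of Salvador and Chan for one-dimensional series, not the authors’ code: it uses the absolute difference as the local distance, halves each series by averaging adjacent pairs (dropping a trailing odd sample), requires `radius >= 1` (the search radius r), and falls back to exact DTW once a series is short. All function names are illustrative.

```python
def dtw(x, y, window=None):
    """DTW between 1-D sequences x and y, restricted to the cells in `window`
    (all cells if None). Returns (distance, warping path)."""
    n, m = len(x), len(y)
    if window is None:
        window = {(i, j) for i in range(n) for j in range(m)}
    acc = {}  # cell -> (accumulated cost, predecessor cell)
    # Lexicographic order guarantees every predecessor is processed first.
    for i, j in sorted(window):
        d = abs(x[i] - y[j])
        if (i, j) == (0, 0):
            acc[(i, j)] = (d, None)  # boundary: path starts at (0, 0)
            continue
        # Continuity + monotonicity: only steps (1,1), (1,0), (0,1) are allowed.
        preds = [p for p in ((i - 1, j - 1), (i - 1, j), (i, j - 1)) if p in acc]
        if preds:
            best = min(preds, key=lambda p: acc[p][0])
            acc[(i, j)] = (acc[best][0] + d, best)
    # Boundary: path ends at (n - 1, m - 1); walk predecessors back to the start.
    path, cell = [], (n - 1, m - 1)
    while cell is not None:
        path.append(cell)
        cell = acc[cell][1]
    return acc[(n - 1, m - 1)][0], path[::-1]


def _halve(x):
    """Step (1): coarsen a series by averaging adjacent pairs."""
    return [(x[k] + x[k + 1]) / 2.0 for k in range(0, len(x) - 1, 2)]


def _expand_window(path, n, m, radius):
    """Steps (2)-(3): widen the coarse path by `radius` cells, then project each
    coarse cell onto the 2x2 block it covers at full resolution."""
    grown = {(i + a, j + b) for i, j in path
             for a in range(-radius, radius + 1)
             for b in range(-radius, radius + 1)}
    return {(2 * i + a, 2 * j + b) for i, j in grown for a in (0, 1) for b in (0, 1)
            if 0 <= 2 * i + a < n and 0 <= 2 * j + b < m}


def fast_dtw(x, y, radius=1):
    """Approximate DTW by recursive coarsening, projection and refinement."""
    if radius < 1:
        raise ValueError("radius must be at least 1")
    if len(x) <= radius + 2 or len(y) <= radius + 2:
        return dtw(x, y)  # small enough: solve exactly
    _, coarse_path = fast_dtw(_halve(x), _halve(y), radius)
    window = _expand_window(coarse_path, len(x), len(y), radius)
    return dtw(x, y, window)
```

Because the refined search only visits cells near the projected path, the result can be slightly larger than the exact DTW distance, but the number of evaluated cells grows roughly linearly with the series length instead of quadratically.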

3.5.3. Heading Angle Change

3.5.4. Determine the Dangerous Steering Maneuvers Based on Weather Conditions

3.6. Steering Maneuver Recognition Process

| Algorithm 1. Recognition Process Algorithm of Steering Maneuver | |
|---|---|
| 1: | Begin: |
| 2: | Inputs: State, Gyro, En, numsModule, Yaw, Gyro_t, Turn_flag |
| 3: | switch State |
| 4: | case Silence and Excess |
| 5: | if En > En_low |
| 6: | State ← Excess |
| 7: | Record the holdTime and startPoint |
| 8: | else if En < En_high |
| 9: | State ← Bump |
| 10: | Record the start time and holdTime |
| 11: | else |
| 12: | State ← Silence |
| 13: | Clear the holdTime |
| 14: | case Bump |
| 15: | if En > En_low |
| 16: | Record the holdTime |
| 17: | else |
| 18: | if holdTime < MinHoldTime |
| 19: | State ← Silence |
| 20: | Clear the holdTime |
| 21: | endPoint = startPoint + holdTime |
| 22: | |
| 23: | distArray[numsModule] |
| 24: | for (times = 0; times < numsModule; times++) |
| 25: | { |
| 26: | distArray[times] = fastDTW(Gyro[startPoint..endPoint], ref_Gyro[times]) |
| 27: | } |
| 28: | driveResult = min(distArray) and Yaw |
| 29: | Gyro_t = Weather() |
| 30: | if Turn_flag == No |
| 31: | if Gyro > Gyro_t |
| 32: | driveResult = Sharp_CarelessDriving |
| 33: | else |
| 34: | driveResult = CarelessDriving |
| 35: | else |
| 36: | if Gyro > Gyro_t |
| 37: | driveResult = SharpDriving |
| 38: | else |
| 39: | driveResult = NormalDriving |
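Steps 3 to 21 of Algorithm 1 describe a dual-threshold endpoint detector driven by the short-term energy En. One possible reading of these steps as runnable code follows. It assumes En_high > En_low and tests the higher threshold first, as in the classic dual-threshold endpoint detector for speech; the names `detect_segments` and `min_hold` (standing in for MinHoldTime, measured in frames) are illustrative, and end indices are exclusive.

```python
from enum import Enum


class State(Enum):
    SILENCE = 0  # energy below En_low
    EXCESS = 1   # energy above En_low but not yet above En_high
    BUMP = 2     # confirmed steering segment


def detect_segments(energy, en_low, en_high, min_hold):
    """Return (startPoint, endPoint) frame pairs of candidate steering segments."""
    state, start, segments = State.SILENCE, 0, []
    for t, en in enumerate(energy):
        if state in (State.SILENCE, State.EXCESS):
            if en > en_high:          # confirmed: enter the segment
                if state is State.SILENCE:
                    start = t
                state = State.BUMP
            elif en > en_low:         # possible start: remember where it began
                if state is State.SILENCE:
                    start = t
                state = State.EXCESS
            else:                     # false alarm: back to silence
                state = State.SILENCE
        elif en <= en_low:            # State.BUMP and energy has dropped
            if t - start >= min_hold:  # holdTime long enough to be a maneuver
                segments.append((start, t))
            state = State.SILENCE
    if state is State.BUMP and len(energy) - start >= min_hold:
        segments.append((start, len(energy)))  # segment still active at the end
    return segments


print(detect_segments([0, 0, 1, 3, 3, 3, 1, 0, 0, 3, 0], en_low=0.5, en_high=2, min_hold=3))
```

Each returned segment would then be matched against the reference templates with fastDTW, as in steps 23 to 28.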

4. Experimental Results

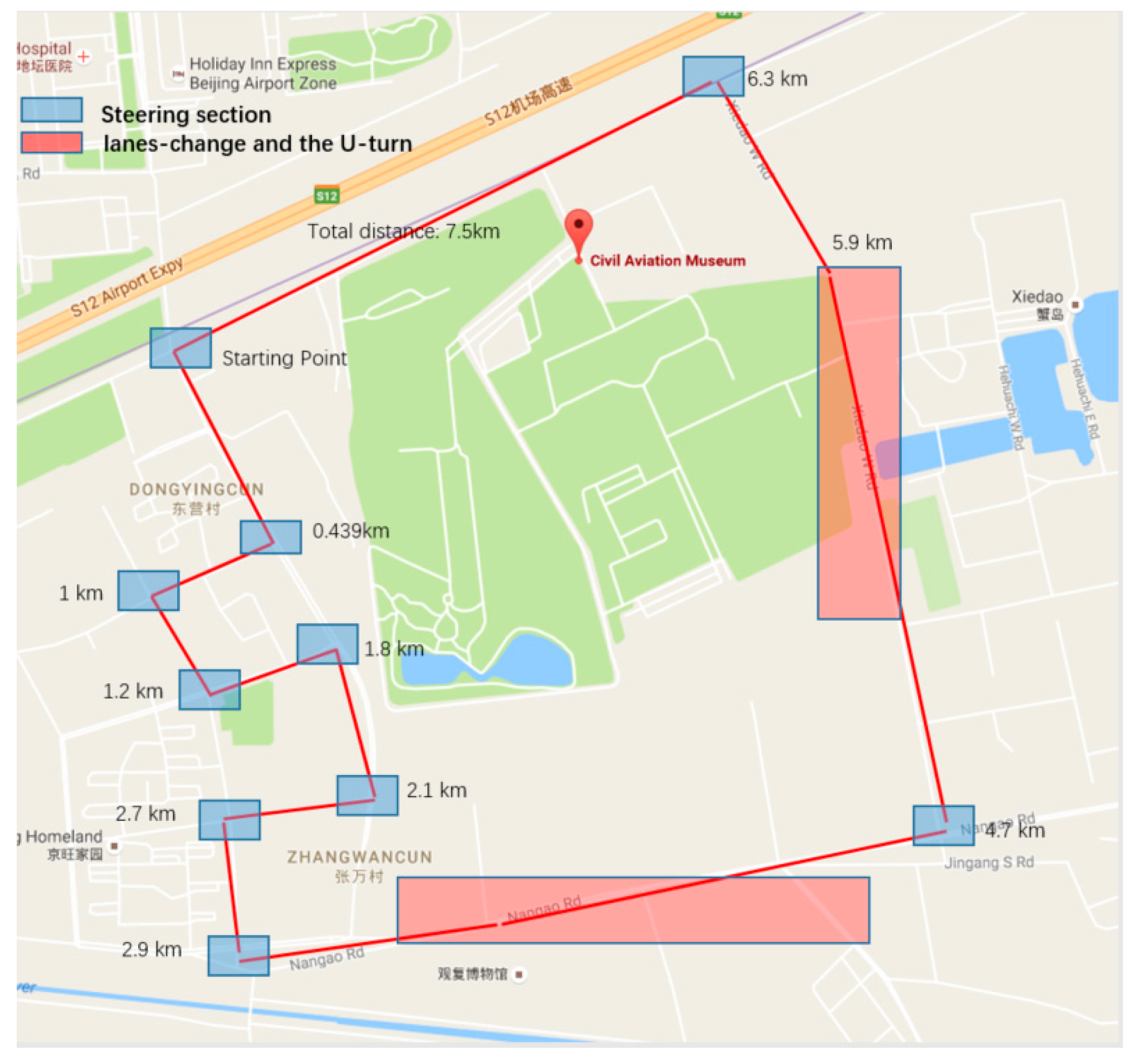

4.1. Experimental Design

4.2. Reference Template for Steering Maneuvers

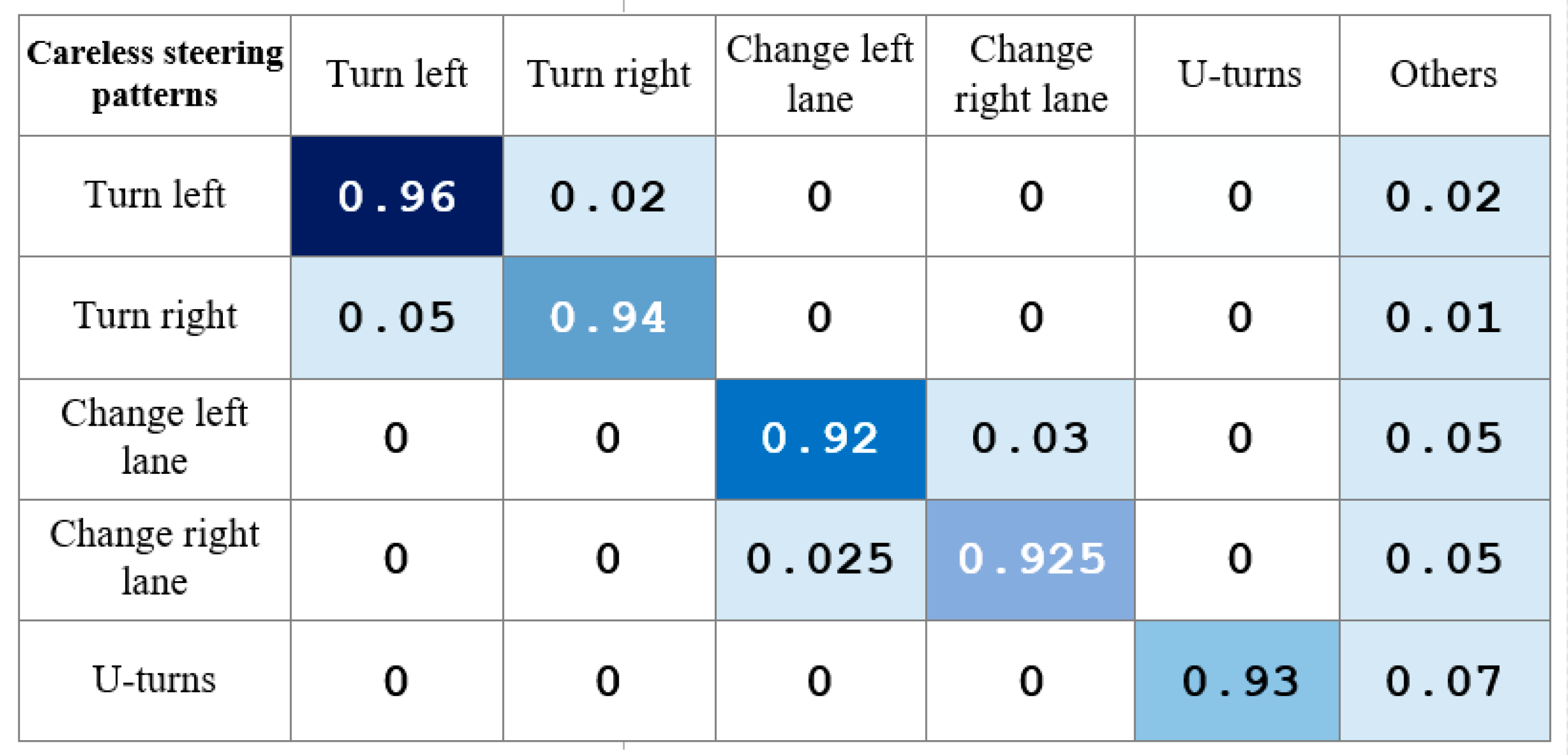

4.3. Analysis and Comparison of Experimental Results

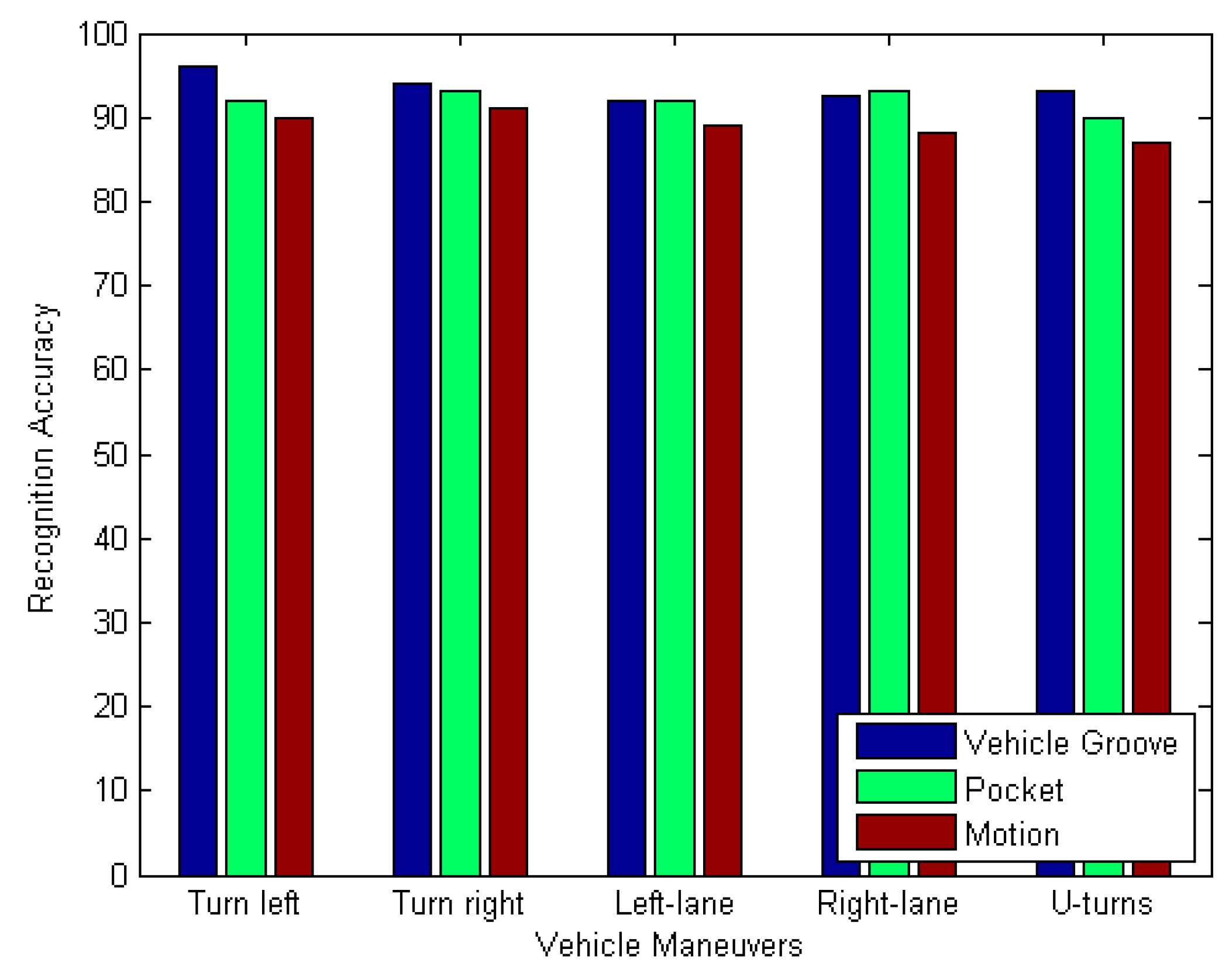

4.4. The Recognition Accuracy of Different Smartphone Positions

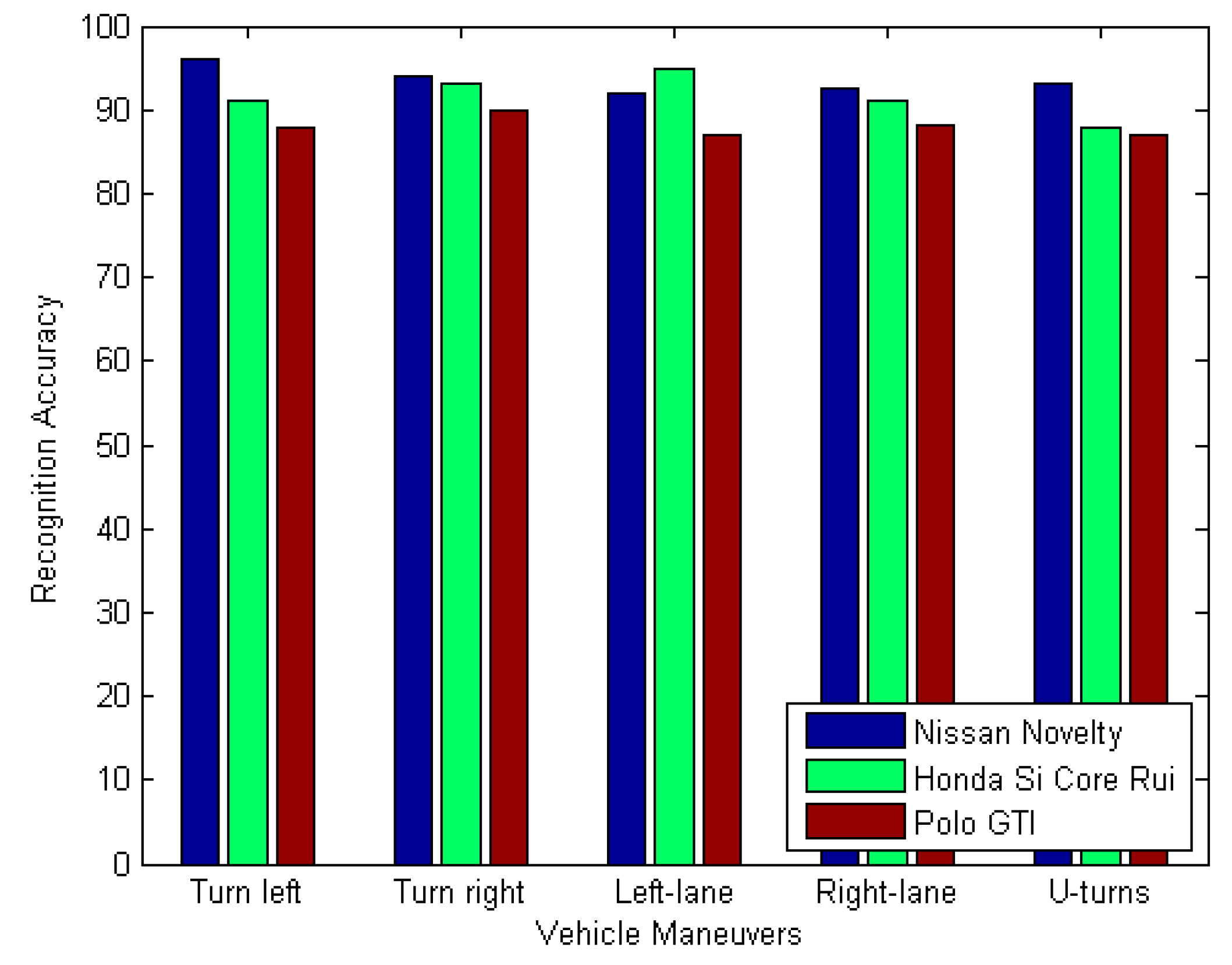

4.5. The Recognition Accuracy of Different Vehicles and Drivers

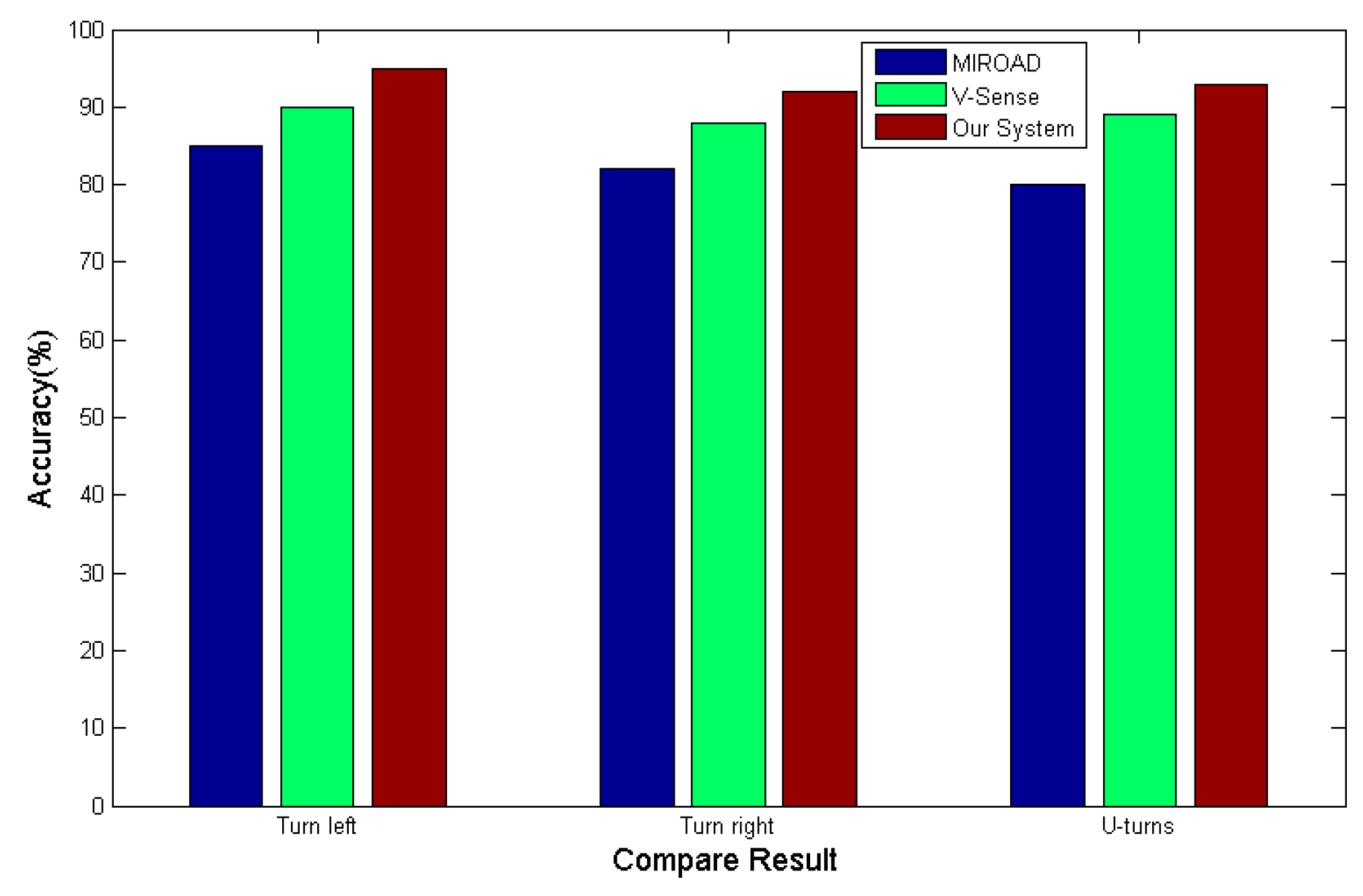

4.6. Comparison with Other Systems

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Tefft, B.C. Car Crashes Rank Among the Leading Causes of Death in the United States–Impact Speed and a Pedestrian’s Risk of Severe Injury or Death; Foundation for Traffic Safety: Washington, DC, USA, 2010.

- Jain, A.; Koppula, H.S.; Raghavan, B.; Soh, S.; Saxena, A. Car that knows before you do: Anticipating maneuvers via learning temporal driving models. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3182–3190.

- Jiang, Y.; Qiu, H.; McCartney, M.; Halfond, W.G.J.; Bai, F.; Grimm, D.; Govindan, R. Carlog: A platform for flexible and efficient automotive sensing. In Proceedings of the 12th ACM Conference on Embedded Network Sensor Systems, Memphis, TN, USA, 3–6 November 2014; pp. 221–235.

- Jiang, Y.; Qiu, H.; McCartney, M.; Sukhatme, G.; Gruteser, M.; Bai, F.; Grimm, D.; Govindan, R. CARLOC: Precisely Tracking Automobile Position. In Proceedings of the 13th ACM Conference on Embedded Networked Sensor Systems, Seoul, Korea, 1–4 November 2015; pp. 411–412.

- Mitrović, D. Reliable method for driving events recognition. IEEE Trans. Intell. Transp. Syst. 2005, 6, 198–205.

- Hanggoro, A.; Putra, M.A.; Reynaldo, R.; Sari, R.F. Green house monitoring and controlling using Android mobile application. In Proceedings of the 2013 International Conference on QiR (Quality in Research), Yogyakarta, Indonesia, 25–28 June 2013; pp. 79–85.

- Miranda-Moreno, L.F.; Chung, C.; Amyot, D.; Chapon, H. A System for Collecting and Mapping Traffic Congestion in a Network Using GPS Smartphones from Regular Drivers. In Proceedings of the Transportation Research Board 94th Annual Meeting, Washington, DC, USA, 11–15 January 2015.

- Incel, O.D.; Kose, M.; Ersoy, C. A Review and Taxonomy of Activity Recognition on Mobile Phones. BioNanoScience 2013, 3, 145–171.

- Tang, F.; You, I.; Tang, C.; Guo, M. An efficient classification approach for large-scale mobile ubiquitous computing. Inform. Sci. 2013, 232, 419–436.

- Rodrigues, J.J.P.C.; Lopes, I.M.C.; Silva, B.M.C.; de La Torre, I. A new mobile ubiquitous computing application to control obesity: SapoFit. Inform. Health Soc. Care 2013, 38, 37–53.

- Wang, Y.; Yang, J.; Liu, H.; Chen, Y.; Gruteser, M.; Martin, R.P. Sensing vehicle dynamics for determining driver phone use. In Proceedings of the 11th Annual International Conference on Mobile Systems, Applications, and Services, Taipei, Taiwan, 25–28 June 2013; pp. 41–54.

- Yang, J.; Sidhom, S.; Chandrasekaran, G.; Vu, T.; Liu, H.; Cecan, N.; Chen, Y.; Gruteser, M.; Martin, R.P. Detecting driver phone use leveraging car speakers. In Proceedings of the 17th Annual International Conference on Mobile Computing and Networking, Las Vegas, NV, USA, 19–23 September 2011; pp. 97–108.

- Dai, J.; Teng, J.; Bai, X.; Shen, Z.; Xuan, D. Mobile phone based drunk driving detection. In Proceedings of the 4th International Conference on Pervasive Computing Technologies for Healthcare, Munich, Germany, 22–25 March 2010; pp. 1–8.

- White, J.; Thompson, C.; Turner, H.; Dougherty, B.; Schmidt, D.C. WreckWatch: Automatic traffic accident detection and notification with smartphones. Mob. Netw. Appl. 2011, 16, 285–303.

- Johnson, D.A.; Trivedi, M.M. Driving style recognition using a smartphone as a sensor platform. In Proceedings of the 14th International IEEE Conference on Intelligent Transportation Systems (ITSC), Washington, DC, USA, 5–7 October 2011; pp. 1609–1615.

- Chen, D.; Cho, K.T.; Han, S.; Jin, Z.; Shin, K.G. Invisible sensing of vehicle steering with smartphones. In Proceedings of the 13th Annual International Conference on Mobile Systems, Applications, and Services, Florence, Italy, 18–22 May 2015; pp. 1–13.

- Eriksson, J.; Girod, L.; Hull, B.; Newton, R.; Madden, S.; Balakrishnan, H. The Pothole Patrol: Using a Mobile Sensor Network for Road Surface Monitoring; ACM MobiSys: Breckenridge, CO, USA, 2008.

- Mednis, A.; Strazdins, G.; Zviedris, R.; Kanonirs, G.; Selavo, L. Real Time Pothole Detection Using Android Smartphones with Accelerometers; IEEE DCOSS: Barcelona, Spain, 2011.

- Mohan, P.; Padmanabhan, V.; Ramjee, R. Nericell: Rich Monitoring of Road and Traffic Conditions Using Mobile Smartphones; ACM SenSys: Raleigh, NC, USA, 2008.

- NHTSA. Available online: http://www.nhtsa.gov/ (accessed on 18 March 2017).

- Zhou, P.; Li, M.; Shen, G. Use it free: Instantly knowing your phone attitude. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, Maui, HI, USA, 7–11 September 2014; pp. 605–616.

- MatrixTransforms. Available online: https://developer.apple.com/library/content/documentation/AudioVideo/Conceptual/HTML-canvas-guide/MatrixTransforms/MatrixTransforms.html (accessed on 18 March 2017).

- Gubner, J.A. Probability and Random Processes for Electrical and Computer Engineers; Cambridge University Press: Cambridge, UK, 2006.

- Lamel, L.F.; Rabiner, L.R.; Rosenberg, A.E.; Wilpon, J. An improved endpoint detector for isolated word recognition. IEEE Trans. Acoust. Speech Signal Proc. 1981, 29, 777–785.

- Jalil, M.; Butt, F.A.; Malik, A. Short-time energy, magnitude, zero crossing rate and autocorrelation measurement for discriminating voiced and unvoiced segments of speech signals. In Proceedings of the International Conference on Technological Advances in Electrical, Electronics and Computer Engineering, Konya, Turkey, 9–11 May 2013; pp. 208–212.

- Keogh, E.; Ratanamahatana, C.A. Exact indexing of dynamic time warping. Knowl. Inf. Syst. 2005, 7, 358–386.

- Berndt, D.J.; Clifford, J. Using Dynamic Time Warping to Find Patterns in Time Series. KDD Workshop 1994, 10, 359–370.

- Keogh, E.J.; Pazzani, M.J. Derivative Dynamic Time Warping. SDM 2001, 1, 5–7.

- Salvador, S.; Chan, P. Toward accurate dynamic time warping in linear time and space. Intell. Data Anal. 2007, 11, 561–580.

- Kim, J.; Kim, S.; Schaumburg, E.; Sims, C.A. Calculating and using second-order accurate solutions of discrete time dynamic equilibrium models. J. Econ. Dyn. Control 2008, 32, 3397–3414.

- Ajtay, D.; Weilenmann, M.; Soltic, P. Towards accurate instantaneous emission models. Atmos. Environ. 2005, 39, 2443–2449.

- Salvador, S.; Chan, P. FastDTW: Toward Accurate Dynamic Time Warping in Linear Time and Space. Presented at the 3rd Workshop on Mining Temporal and Sequential Data, Seattle, WA, USA, 22–25 August 2004.

| No. | Name | Description |
|---|---|---|
| 1 | Normal turns | Normal turning maneuvers |
| 2 | Normal lane-changes | Normal lane-changing maneuvers |
| 3 | Normal U-turns | Normal U-turn maneuvers |
| 4 | Sudden turns | Sudden turns with the turn signal on |
| 5 | Sudden lane-changes | Sudden lane changes with the turn signal on |
| 6 | Sudden U-turns | Sudden U-turns with the turn signal on |
| 7 | Turns without scruple | Normal or sudden turns with the turn signal off |
| 8 | Lane-changes without scruple | Normal or sudden lane changes with the turn signal off |
| 9 | U-turns without scruple | Normal or sudden U-turns with the turn signal off |

| Driving Behaviors | Sunny Days | Rainy Days | Snowy Days | Foggy Days |
|---|---|---|---|---|
| Sudden turns | 0.65 rad/s | 0.5 rad/s | 0.35 rad/s | 0.45 rad/s |
| Sudden lane changes | 0.45 rad/s | 0.35 rad/s | 0.15 rad/s | 0.3 rad/s |
| Sudden U-turns | 0.75 rad/s | 0.4 rad/s | 0.2 rad/s | 0.35 rad/s |
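Steps 29 to 39 of Algorithm 1 combine the turn-signal flag with a weather-dependent angular-velocity threshold Gyro_t. A minimal sketch using the thresholds from the table above follows; comparing the peak absolute yaw rate of the segment against the threshold, and the function and key names, are assumptions made for illustration.

```python
# Sudden-maneuver thresholds in rad/s, copied from the weather table above.
THRESHOLDS = {
    "turn":        {"sunny": 0.65, "rainy": 0.50, "snowy": 0.35, "foggy": 0.45},
    "lane_change": {"sunny": 0.45, "rainy": 0.35, "snowy": 0.15, "foggy": 0.30},
    "u_turn":      {"sunny": 0.75, "rainy": 0.40, "snowy": 0.20, "foggy": 0.35},
}


def classify(maneuver, weather, gyro_samples, turn_signal_on):
    """Label a recognized maneuver following steps 29-39 of Algorithm 1.

    maneuver       -- "turn", "lane_change" or "u_turn" (from template matching)
    weather        -- "sunny", "rainy", "snowy" or "foggy"
    gyro_samples   -- yaw-rate samples (rad/s) of the detected segment
    turn_signal_on -- result of the turn-signal detection module
    """
    gyro_t = THRESHOLDS[maneuver][weather]
    sudden = max(abs(w) for w in gyro_samples) > gyro_t  # assumed: peak |yaw rate|
    if not turn_signal_on:
        return "Sharp_CarelessDriving" if sudden else "CarelessDriving"
    return "SharpDriving" if sudden else "NormalDriving"


print(classify("turn", "rainy", [0.1, 0.55, 0.3], turn_signal_on=True))
```

Lower thresholds on rainy, snowy and foggy days mean that the same yaw rate can be normal in sunny weather but flagged as sharp when road conditions are worse.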

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license ( http://creativecommons.org/licenses/by/4.0/).


Liu, X.; Mei, H.; Lu, H.; Kuang, H.; Ma, X. A Vehicle Steering Recognition System Based on Low-Cost Smartphone Sensors. Sensors 2017, 17, 633. https://doi.org/10.3390/s17030633


Chicago/Turabian Style: Liu, Xinhua, Huafeng Mei, Huachang Lu, Hailan Kuang, and Xiaolin Ma. 2017. "A Vehicle Steering Recognition System Based on Low-Cost Smartphone Sensors" Sensors 17, no. 3: 633. https://doi.org/10.3390/s17030633