A Framework for the Multi-Level Fusion of Electronic Nose and Electronic Tongue for Tea Quality Assessment

Abstract

1. Introduction

2. Materials and Methods

2.1. Sample Collection

2.2. Electronic Nose Measurement

2.3. Electronic Tongue Measurement

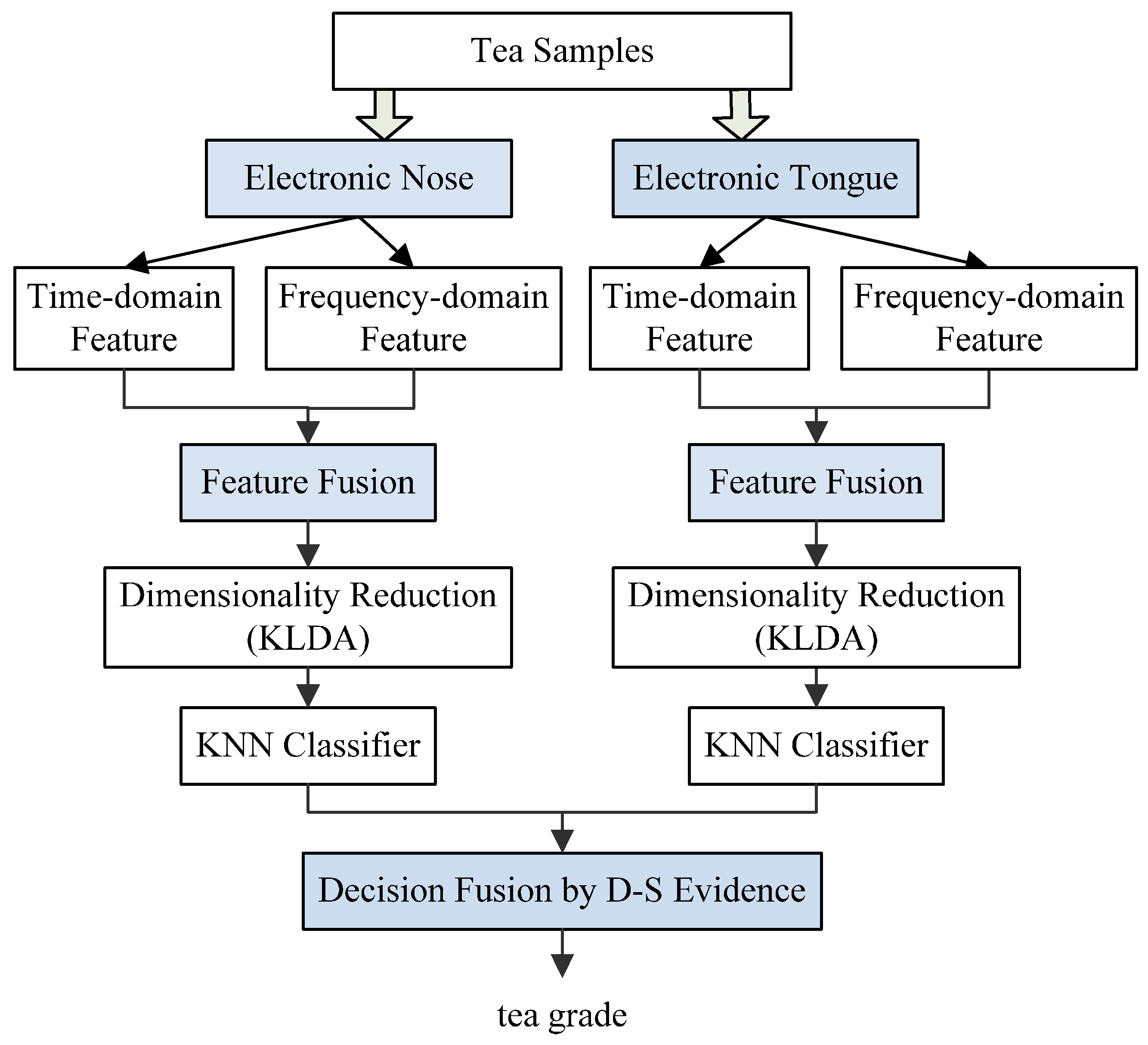

2.4. Multi-Level Fusion System

2.4.1. Feature Extraction and Fusion

2.4.2. Nonlinear Subspace Embedding

2.4.3. Classification

2.4.4. Decision Fusion Based on D-S Evidence

2.4.5. Decision Fusion for the E-Nose and E-Tongue

3. Results and Discussion

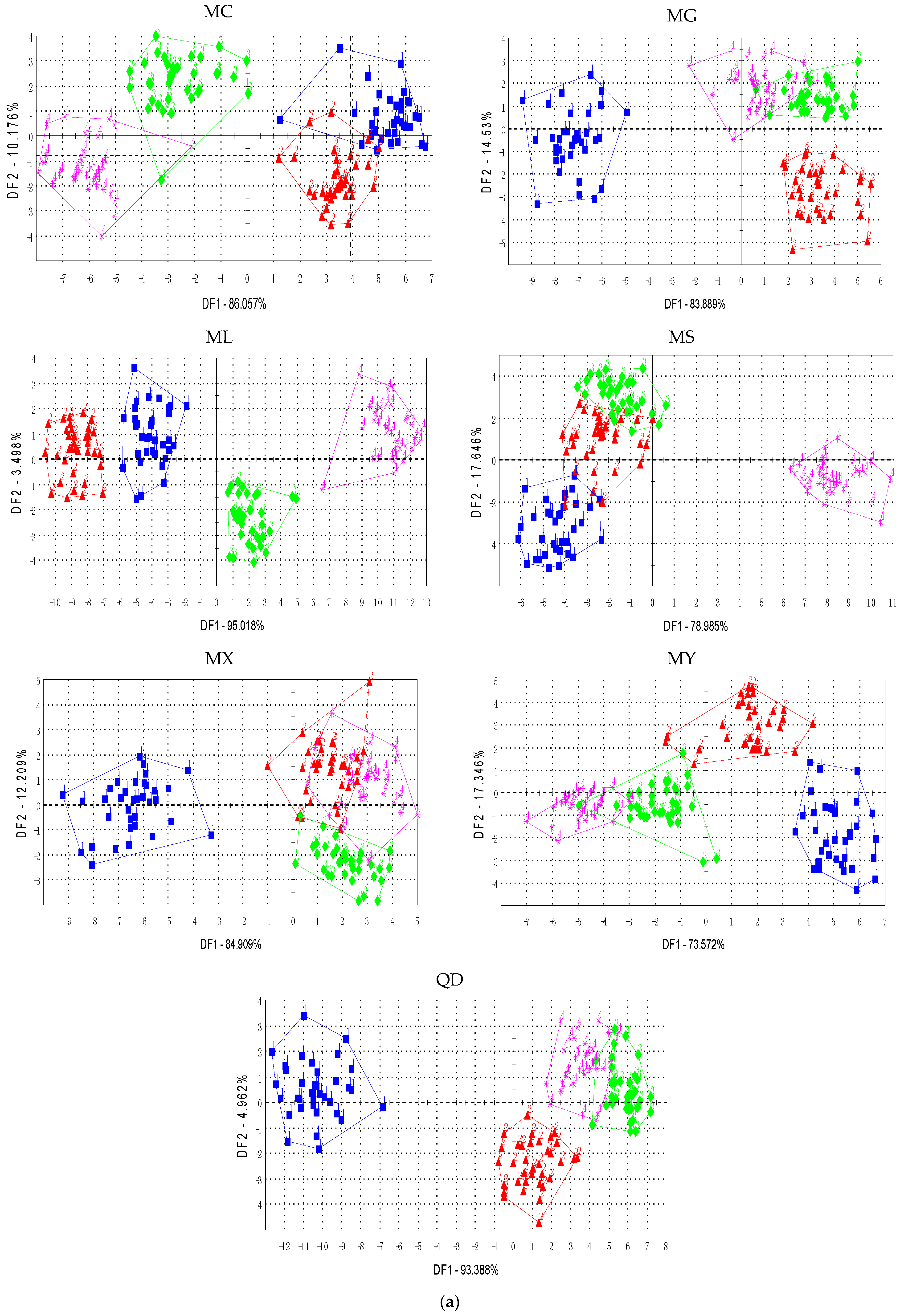

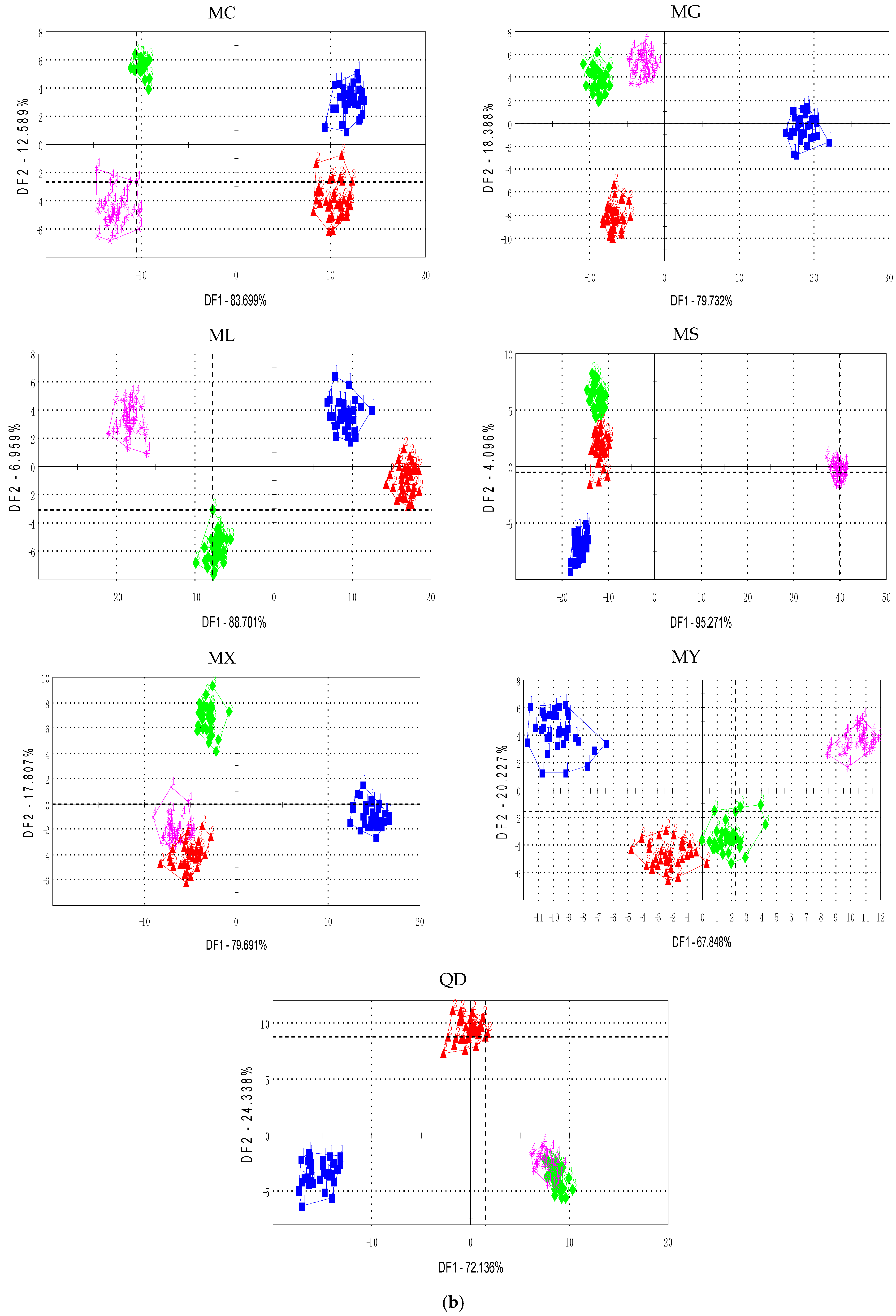

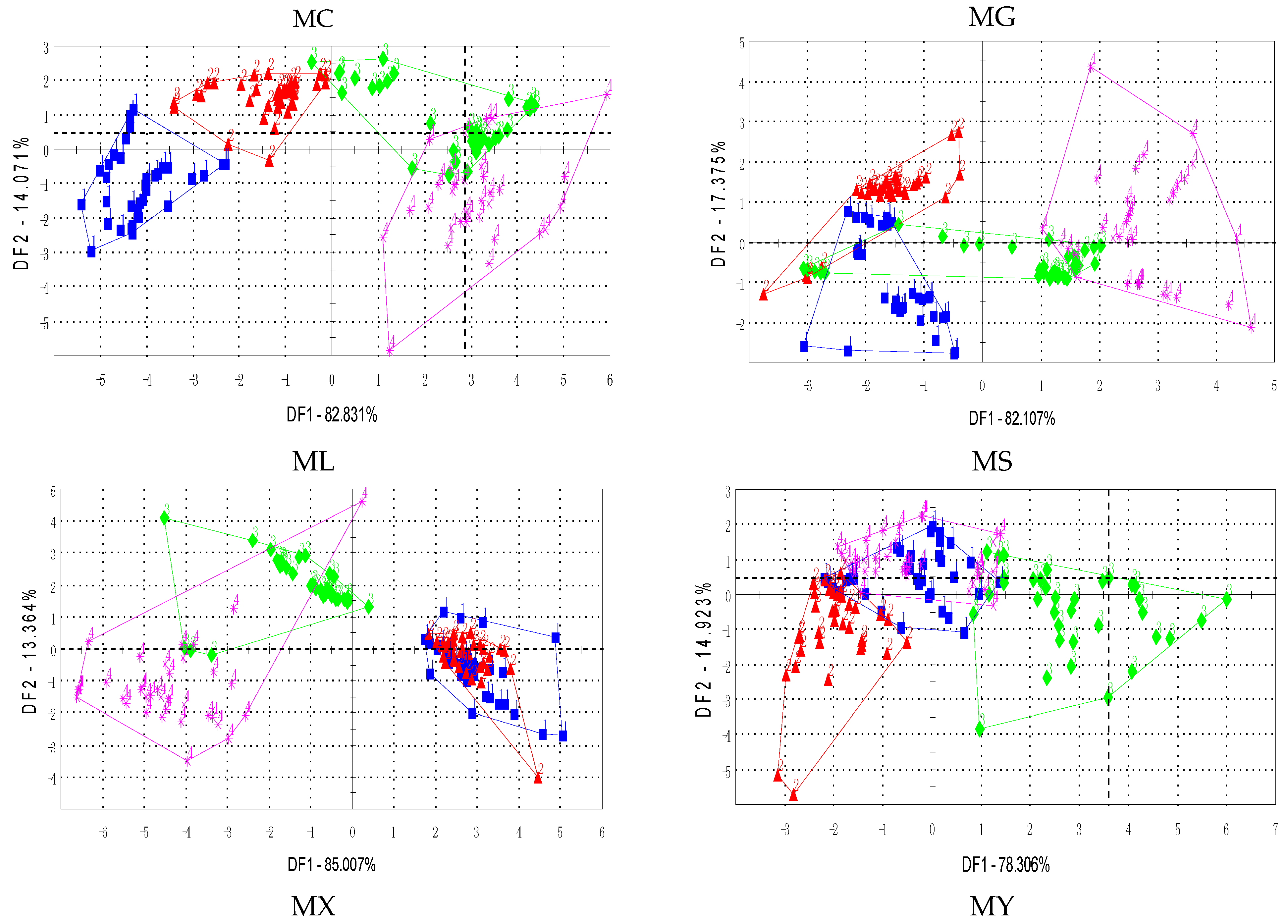

3.1. Feature Representation from Sensors

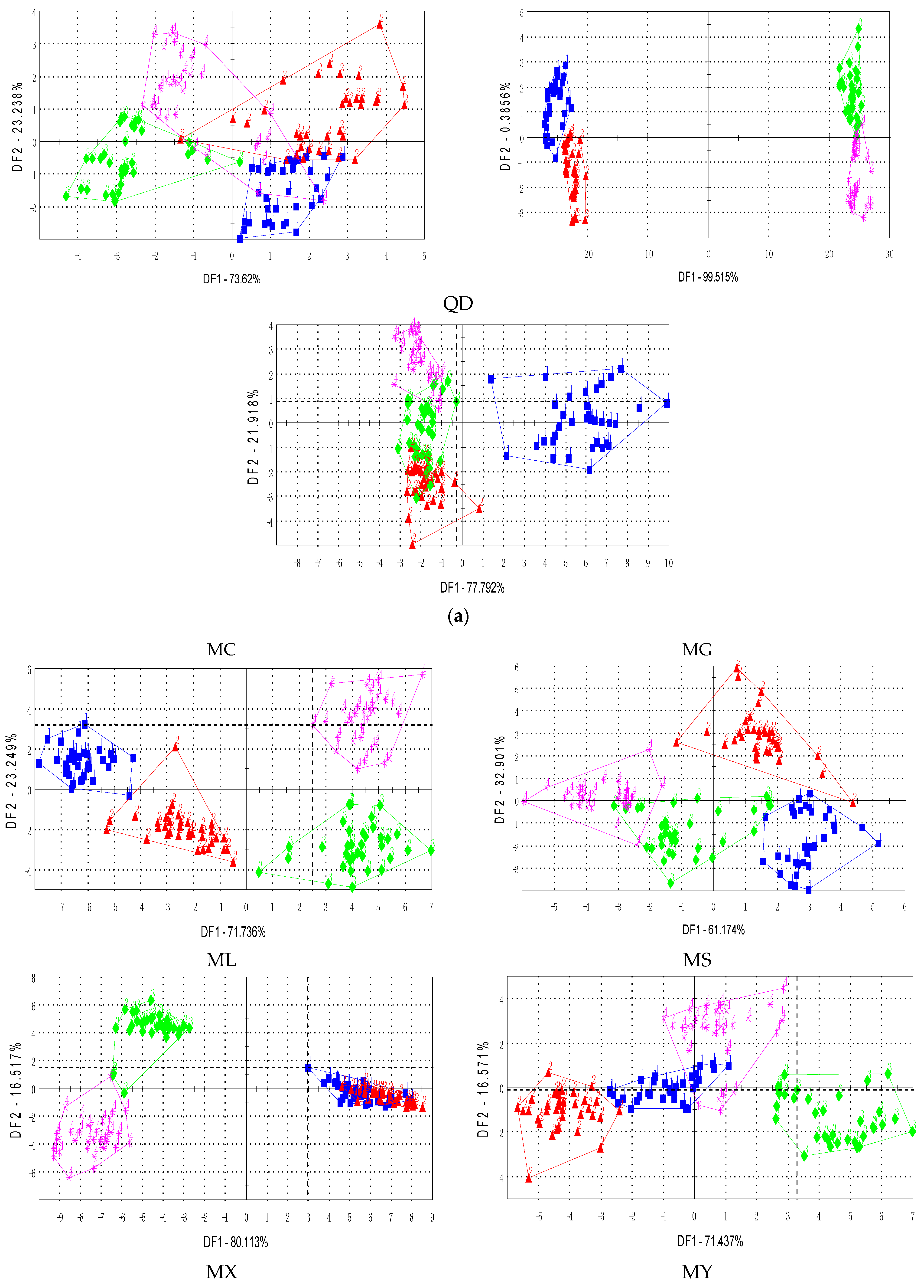

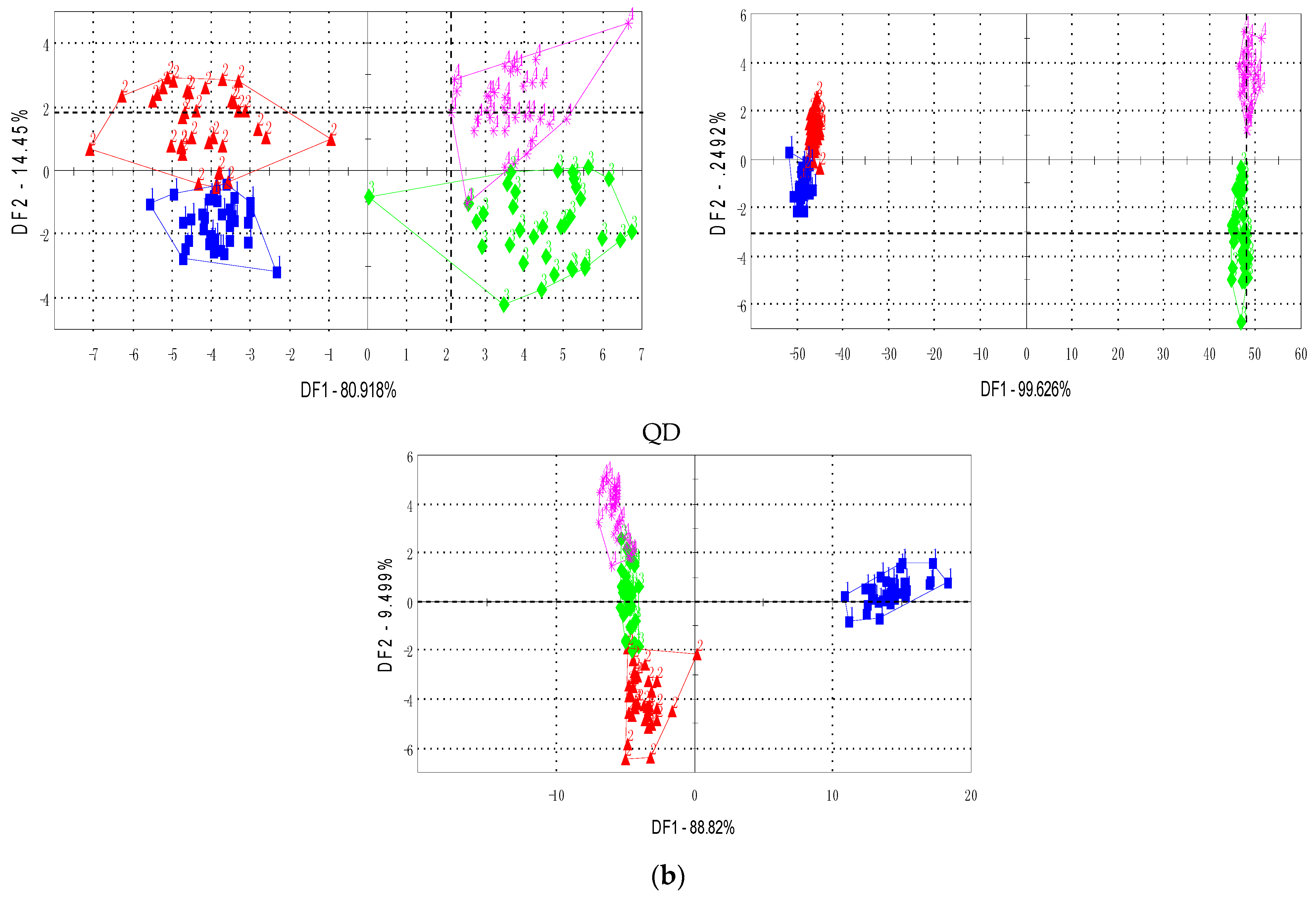

3.2. Dimensionality Reduction of Fused Feature

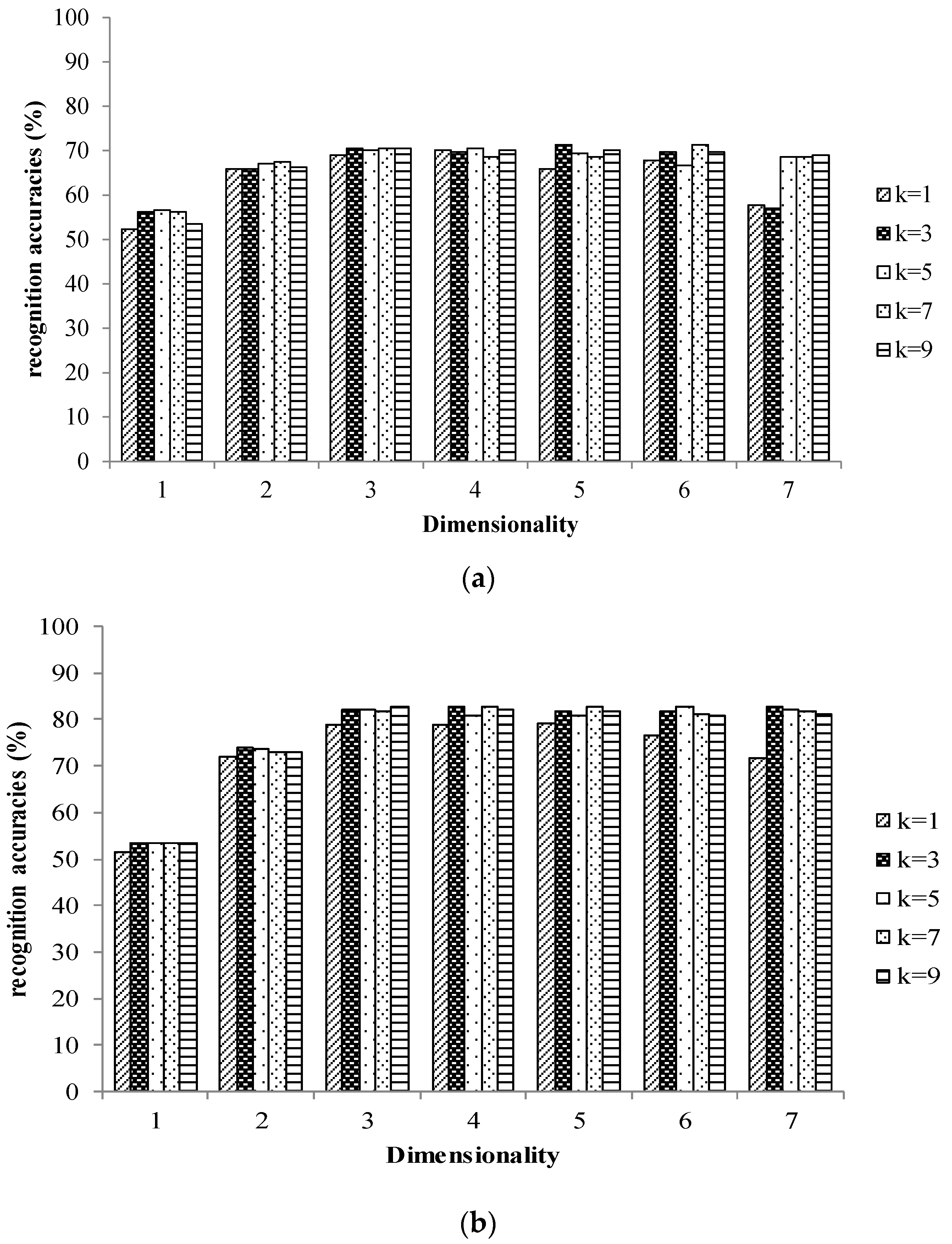

3.3. Decision Level Fusion for Tea Quality Identification

4. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest


| Class | E-Nose Feature: T | E-Nose Feature: Y | E-Nose Feature: E | E-Nose Feature: S | E-Tongue Feature: T | E-Tongue Feature: Y | E-Tongue Feature: E | E-Tongue Feature: S | Decision Fusion: T | Decision Fusion: Y | Decision Fusion: E | Decision Fusion: S |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| T | 92.0 | 8.0 | 0 | 0 | 78.7 | 16.0 | 5.3 | 0 | 93.3 | 2.7 | 4.0 | 0 |
| Y | 19.0 | 78.6 | 1.2 | 1.2 | 8.3 | 85.7 | 4.8 | 1.2 | 1.2 | 94.0 | 4.8 | 0 |
| E | 2.5 | 26.6 | 58.2 | 12.7 | 1.3 | 8.9 | 82.3 | 7.6 | 1.3 | 3.8 | 86.1 | 8.9 |
| S | 4.9 | 18.3 | 20.7 | 56.1 | 2.4 | 4.9 | 8.5 | 84.1 | 0 | 0 | 8.5 | 91.5 |
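To make the comparison in the table easier to read, the short Python sketch below averages the diagonal entries of each block. It is an illustration only and rests on two assumptions that this excerpt does not state: that rows are the actual class and columns the predicted class (the rows of each block sum to roughly 100, which suggests row-normalized percentages), and that an unweighted mean over the four classes T, Y, E, S is a fair summary (the per-class sample counts are not given here, so a sample-weighted accuracy cannot be computed). The names `CONFUSION` and `mean_per_class_rate` are hypothetical helpers, not part of the original work.

```python
# Confusion matrices (percent) copied from the table above.
# Assumption: rows = actual class, columns = predicted class, both ordered T, Y, E, S.
CONFUSION = {
    "E-Nose Feature": [
        [92.0, 8.0, 0.0, 0.0],
        [19.0, 78.6, 1.2, 1.2],
        [2.5, 26.6, 58.2, 12.7],
        [4.9, 18.3, 20.7, 56.1],
    ],
    "E-Tongue Feature": [
        [78.7, 16.0, 5.3, 0.0],
        [8.3, 85.7, 4.8, 1.2],
        [1.3, 8.9, 82.3, 7.6],
        [2.4, 4.9, 8.5, 84.1],
    ],
    "Decision Fusion": [
        [93.3, 2.7, 4.0, 0.0],
        [1.2, 94.0, 4.8, 0.0],
        [1.3, 3.8, 86.1, 8.9],
        [0.0, 0.0, 8.5, 91.5],
    ],
}


def mean_per_class_rate(matrix):
    """Return the unweighted mean of the diagonal (per-class correct rates)."""
    diagonal = [matrix[i][i] for i in range(len(matrix))]
    return sum(diagonal) / len(diagonal)


if __name__ == "__main__":
    for name, matrix in CONFUSION.items():
        print(f"{name}: {mean_per_class_rate(matrix):.1f}%")
```

Under these assumptions the decision-fusion block has the highest diagonal value for every class, and the largest gains over the E-nose block appear in classes E and S.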

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhi, R.; Zhao, L.; Zhang, D. A Framework for the Multi-Level Fusion of Electronic Nose and Electronic Tongue for Tea Quality Assessment. Sensors 2017, 17, 1007. https://doi.org/10.3390/s17051007
