New Keypoint Matching Method Using Local Convolutional Features for Power Transmission Line Icing Monitoring

Abstract

1. Introduction

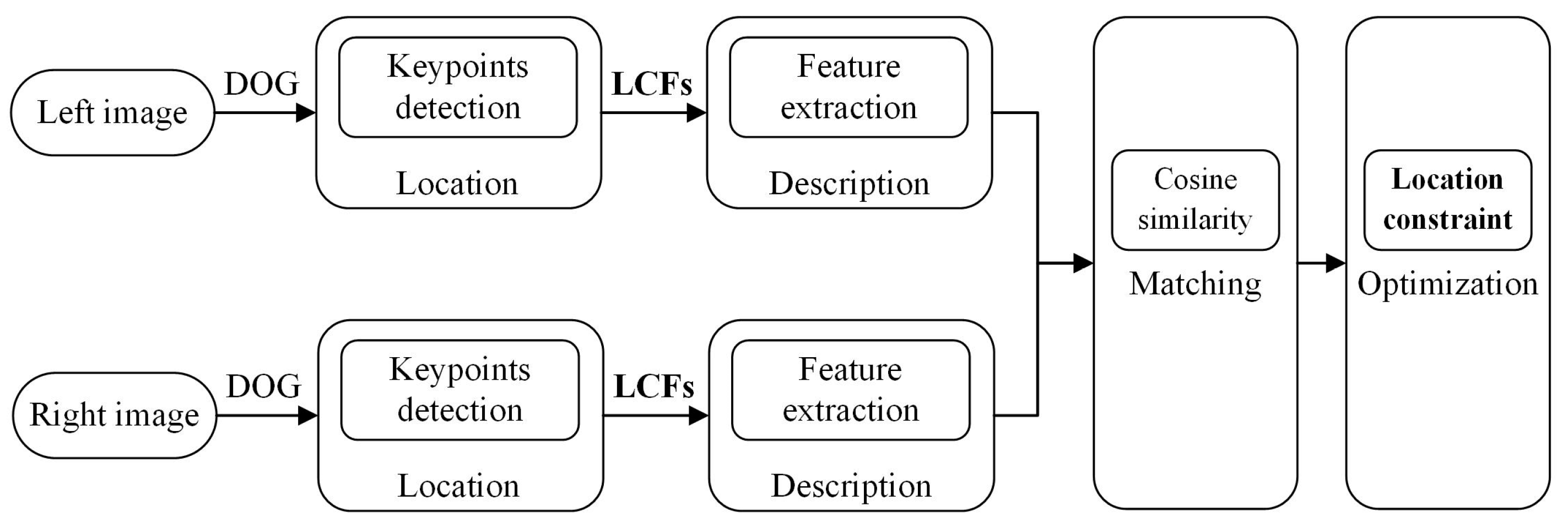

- A keypoint description method based on CNN deep features is proposed to extract discriminative features.

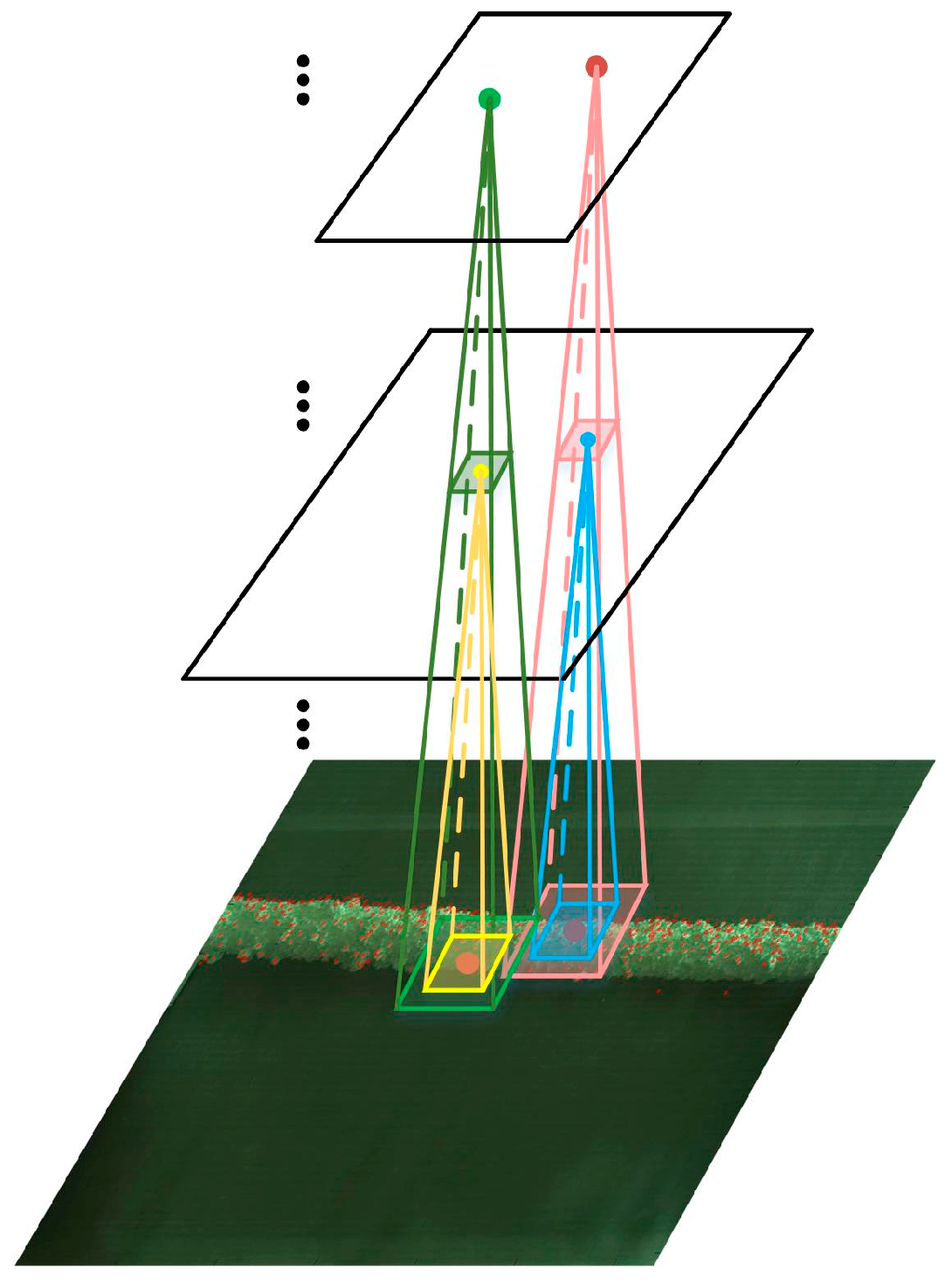

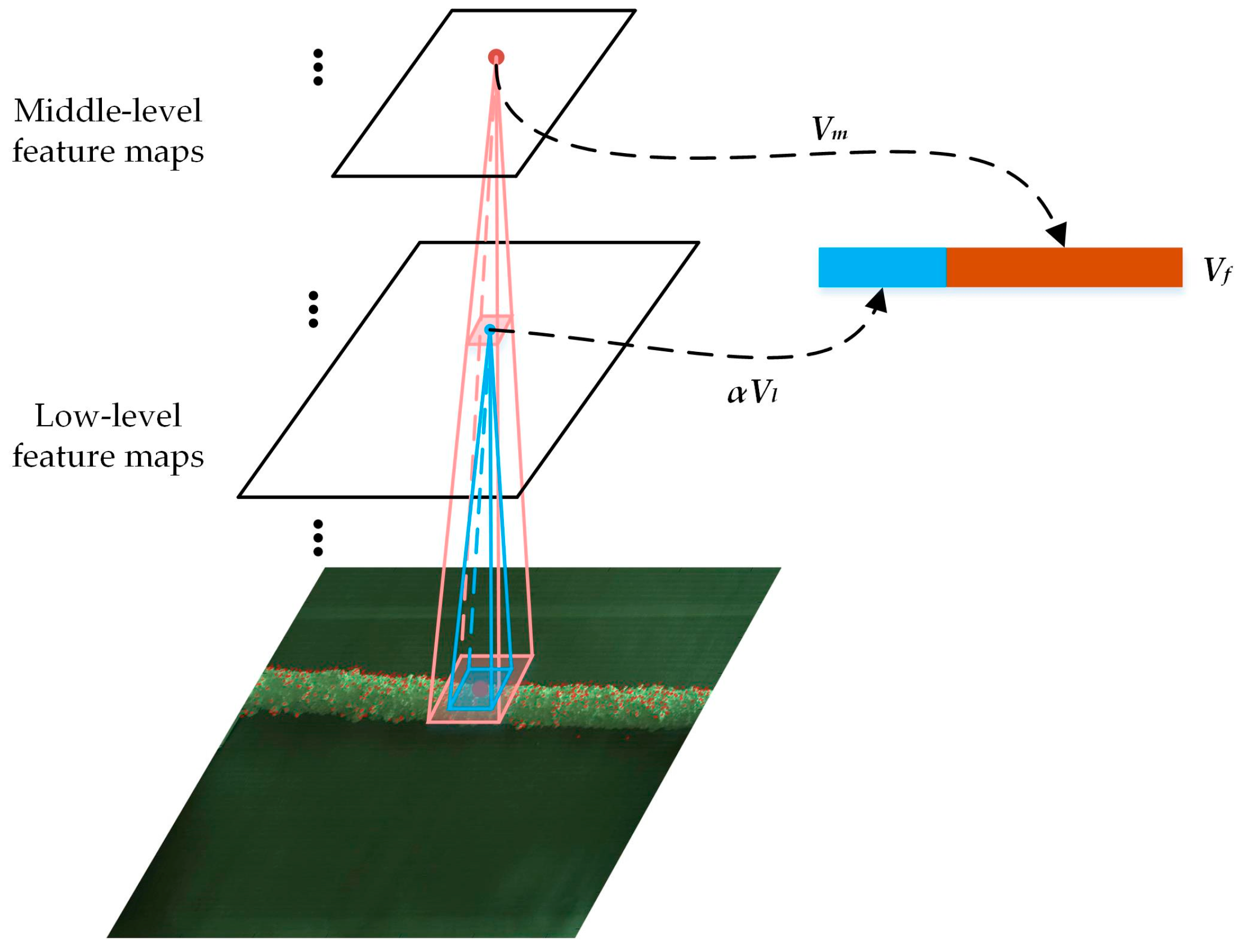

- A multi-layer feature fusion scheme is used to further improve the discriminative power of the features.

- A location constraint method is deployed to refine the matching performance of neighboring keypoints.
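As a rough illustration of the first two contributions, the sketch below samples a feature vector at a keypoint from several convolutional feature maps and concatenates the normalized vectors. It is a minimal sketch under stated assumptions, not the authors' implementation: the helper names `describe_keypoint` and `fuse_descriptors`, the nearest-cell sampling, the L2 normalization, and fusion by concatenation are all hypothetical choices made for illustration, and random arrays stand in for real CNN activations.

```python
import numpy as np


def describe_keypoint(feature_map, x, y, stride):
    """Return the L2-normalized channel vector at the cell covering pixel (x, y).

    feature_map has shape (C, H, W); stride is the number of input pixels per
    feature-map cell. Nearest-cell sampling is an assumption of this sketch.
    """
    _, height, width = feature_map.shape
    col = min(int(x // stride), width - 1)
    row = min(int(y // stride), height - 1)
    vec = feature_map[:, row, col].astype(np.float64)
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec


def fuse_descriptors(feature_maps, strides, x, y):
    """Concatenate per-layer descriptors (hypothetical fusion rule)."""
    parts = [describe_keypoint(fm, x, y, s) for fm, s in zip(feature_maps, strides)]
    return np.concatenate(parts)


# Stand-in activations: 256 channels at stride 4 and 512 channels at stride 8,
# as a 224 x 224 input would give for two intermediate VGG-style layers.
rng = np.random.default_rng(0)
maps = [rng.random((256, 56, 56)), rng.random((512, 28, 28))]
strides = [4, 8]

desc = fuse_descriptors(maps, strides, x=100, y=60)
print(desc.shape)  # (768,)
```

Normalizing each layer's vector before concatenation keeps one layer from dominating the distance simply because its activations are larger; whether the paper weights layers this way is not stated here.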

2. Feature Extraction Based on Convolutional Neural Networks

3. Proposed Approach

3.1. Keypoints Detection

3.2. Local Convolutional Features

3.2.1. Keypoints Description Using CNN Features

3.2.2. Multi-Layer Features Fusion

3.3. Feature Matching

3.4. Matching Optimization Based on Location Constraint

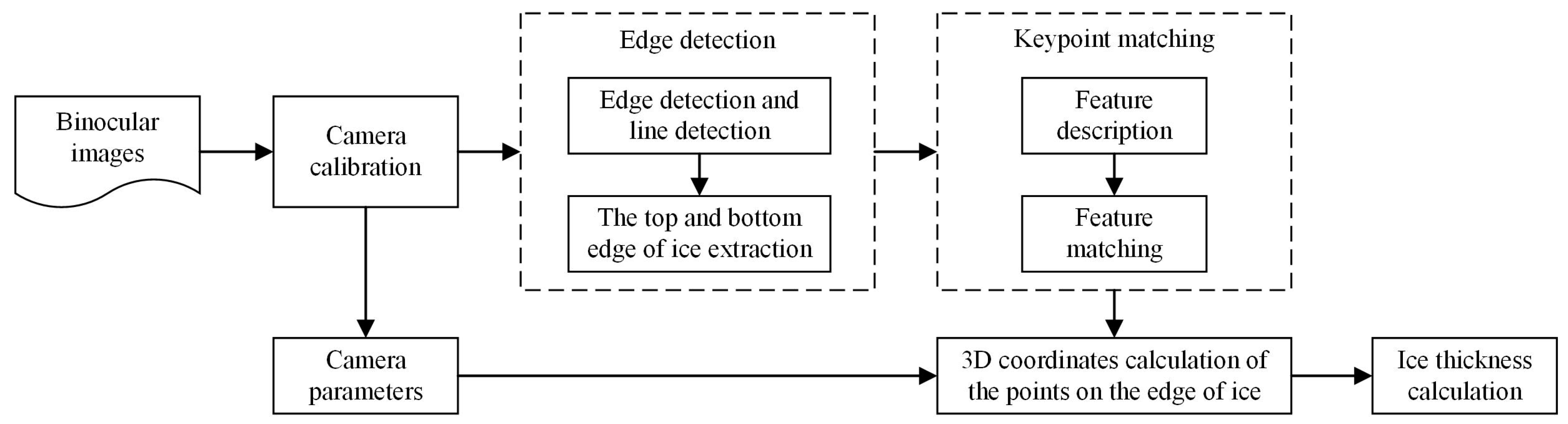

4. Ice Thickness Calculation Using 3D Measurement

5. Experiments and Evaluation

5.1. Datasets and Evaluation Metrics

5.1.1. Datasets

5.1.2. Evaluation Metrics

5.2. Performance of the Proposed Method

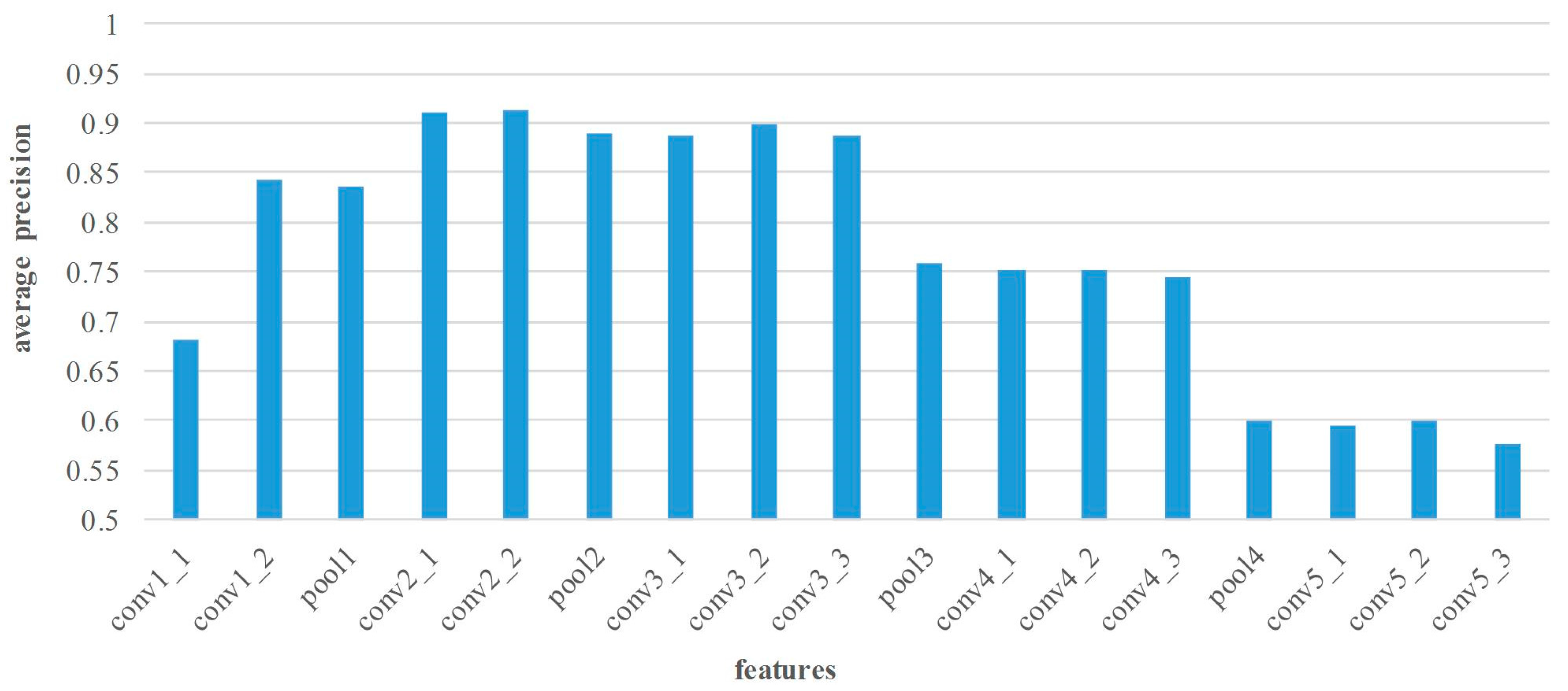

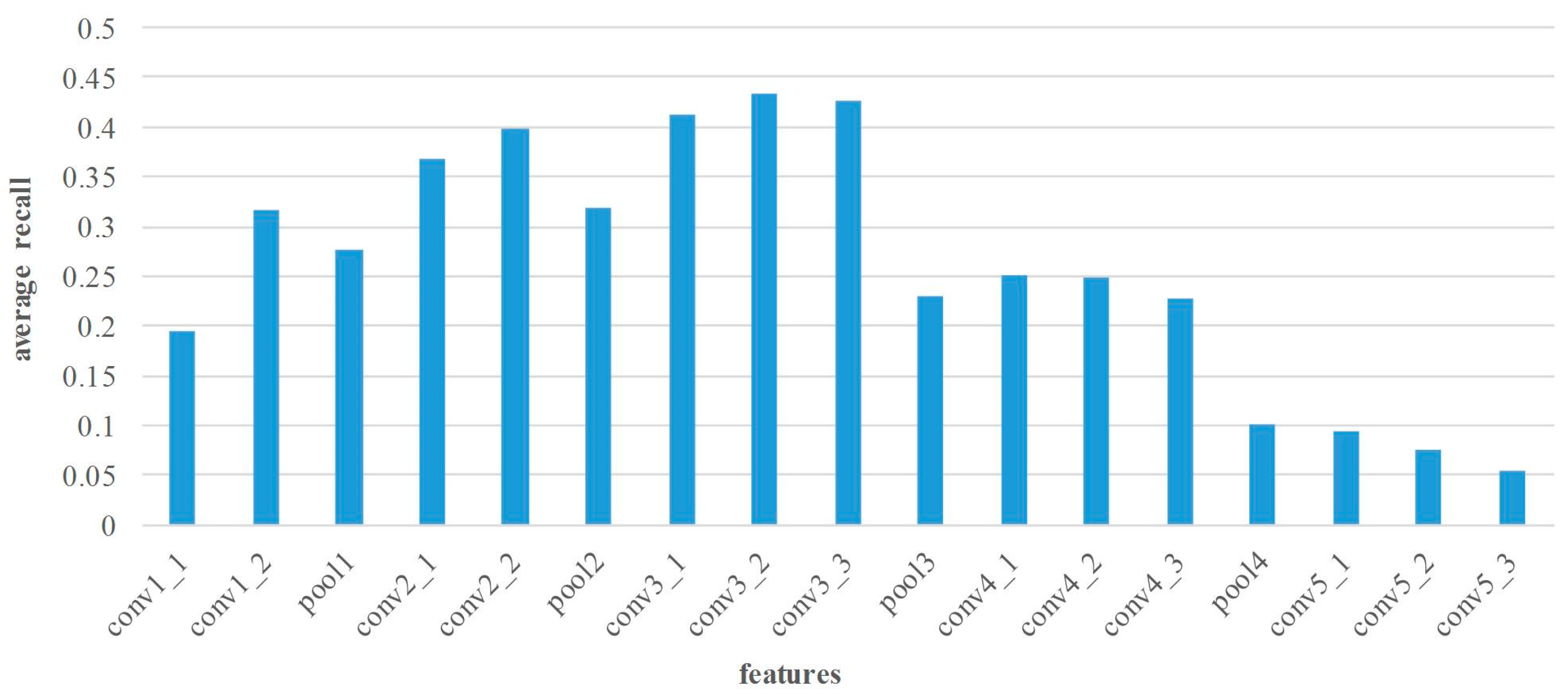

5.2.1. Experiments Using Various Layers’ Features

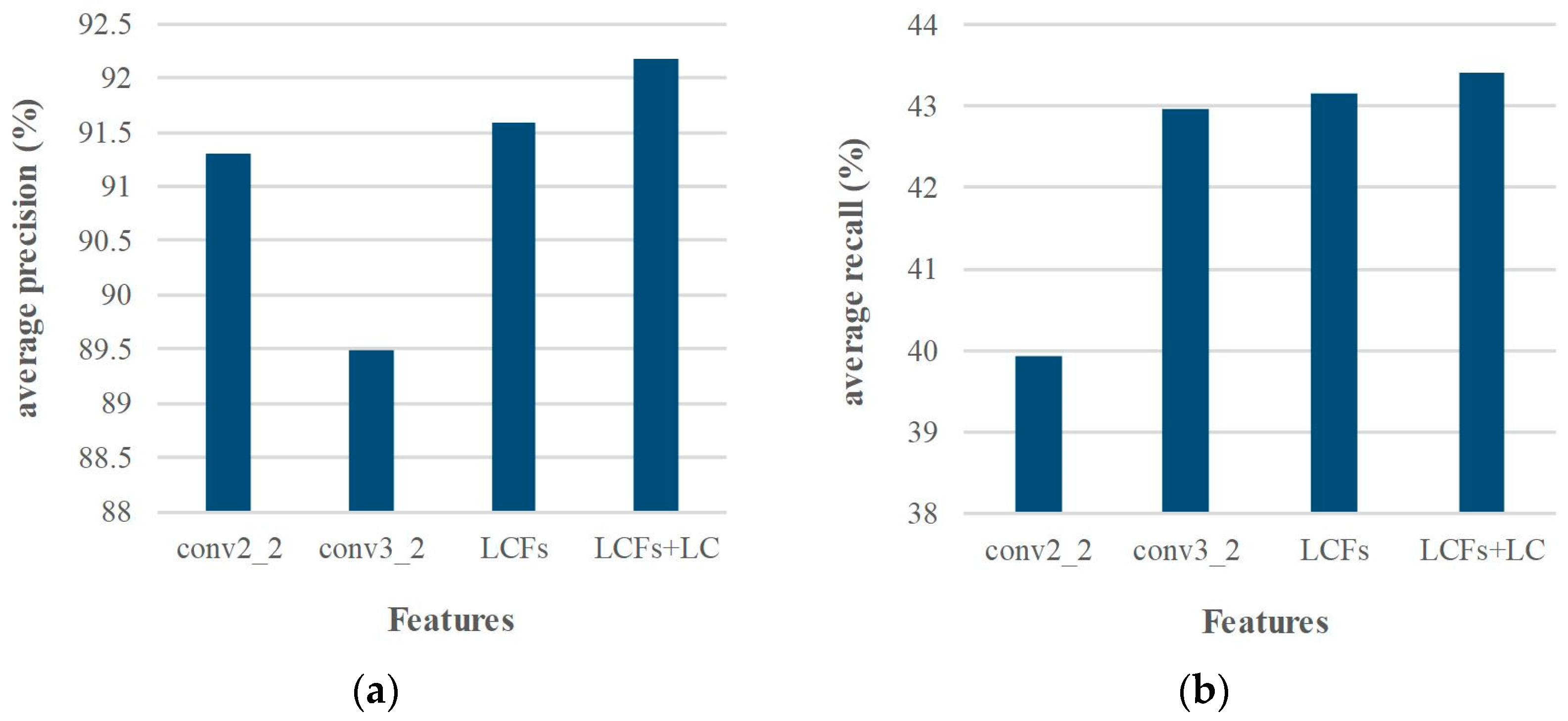

5.2.2. Importance of the Proposed Feature Fusion Scheme and Location Constraint Method

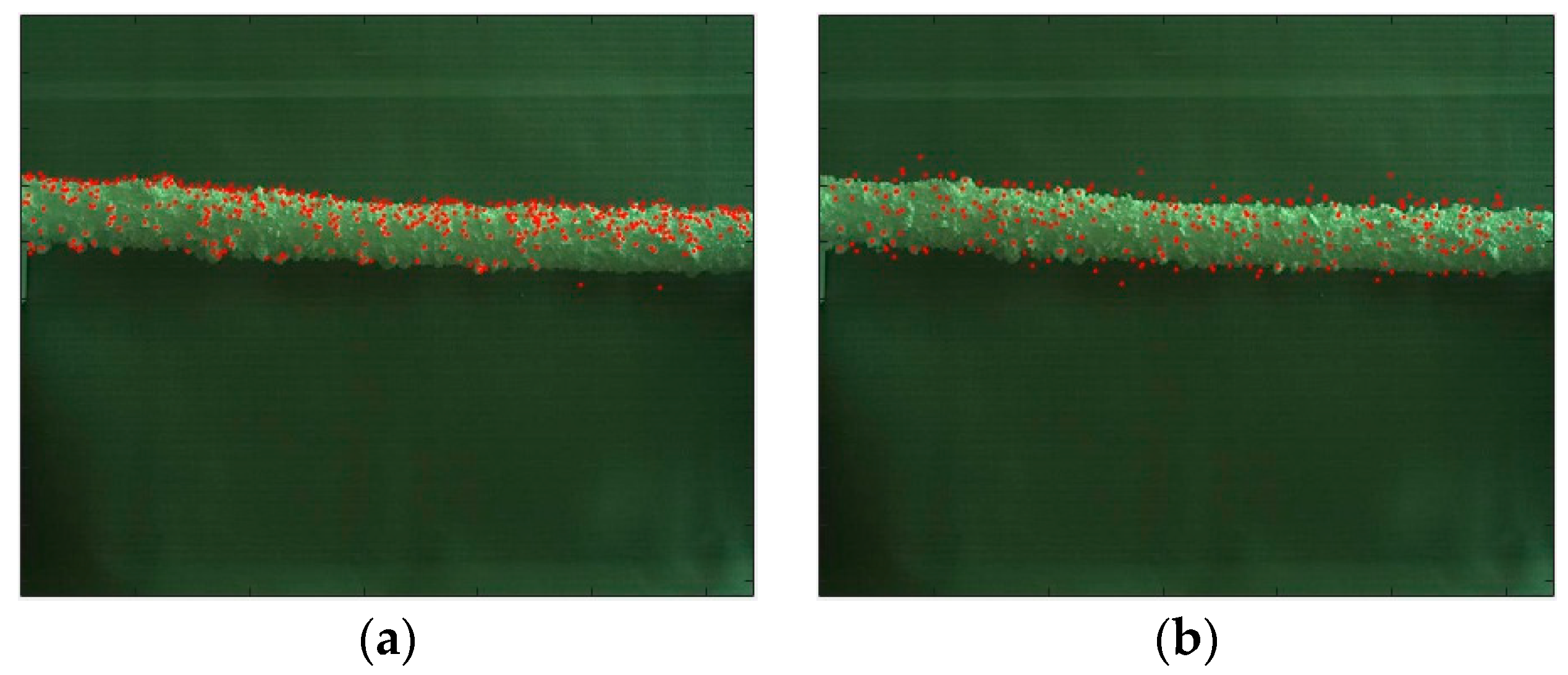

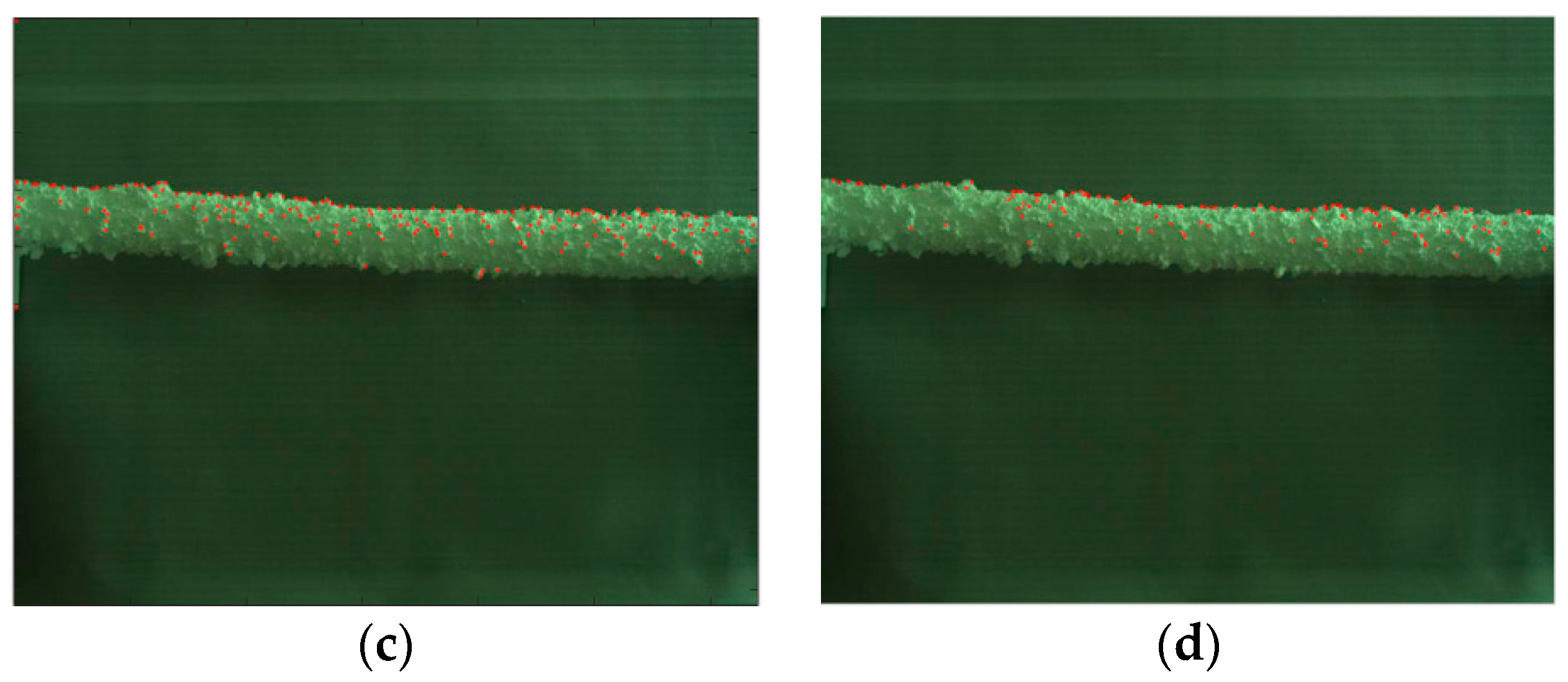

5.3. Ice Thickness Measurement

5.3.1. Keypoint Detection with Different Operators

5.3.2. Results of Ice Thickness Measurement

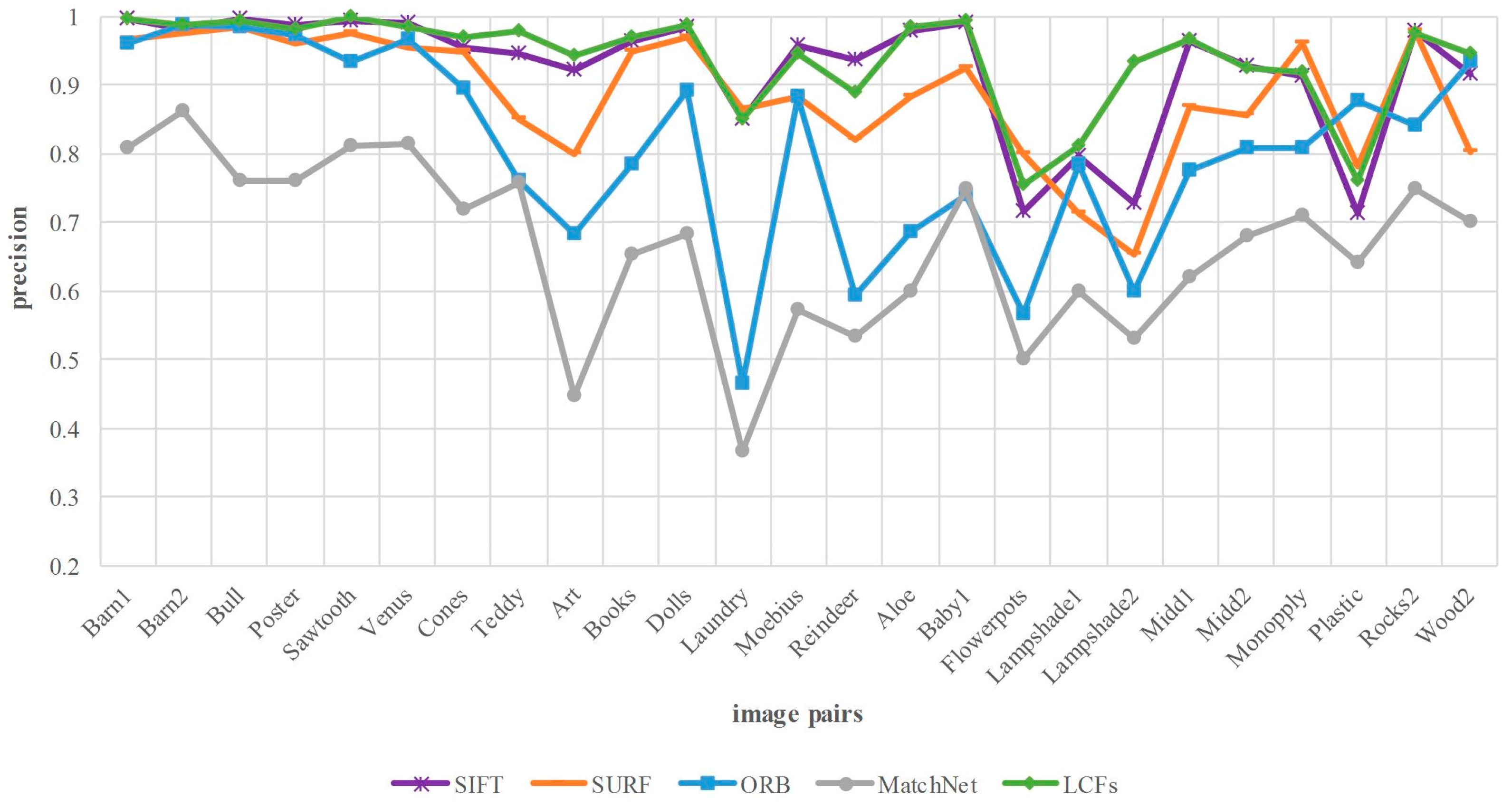

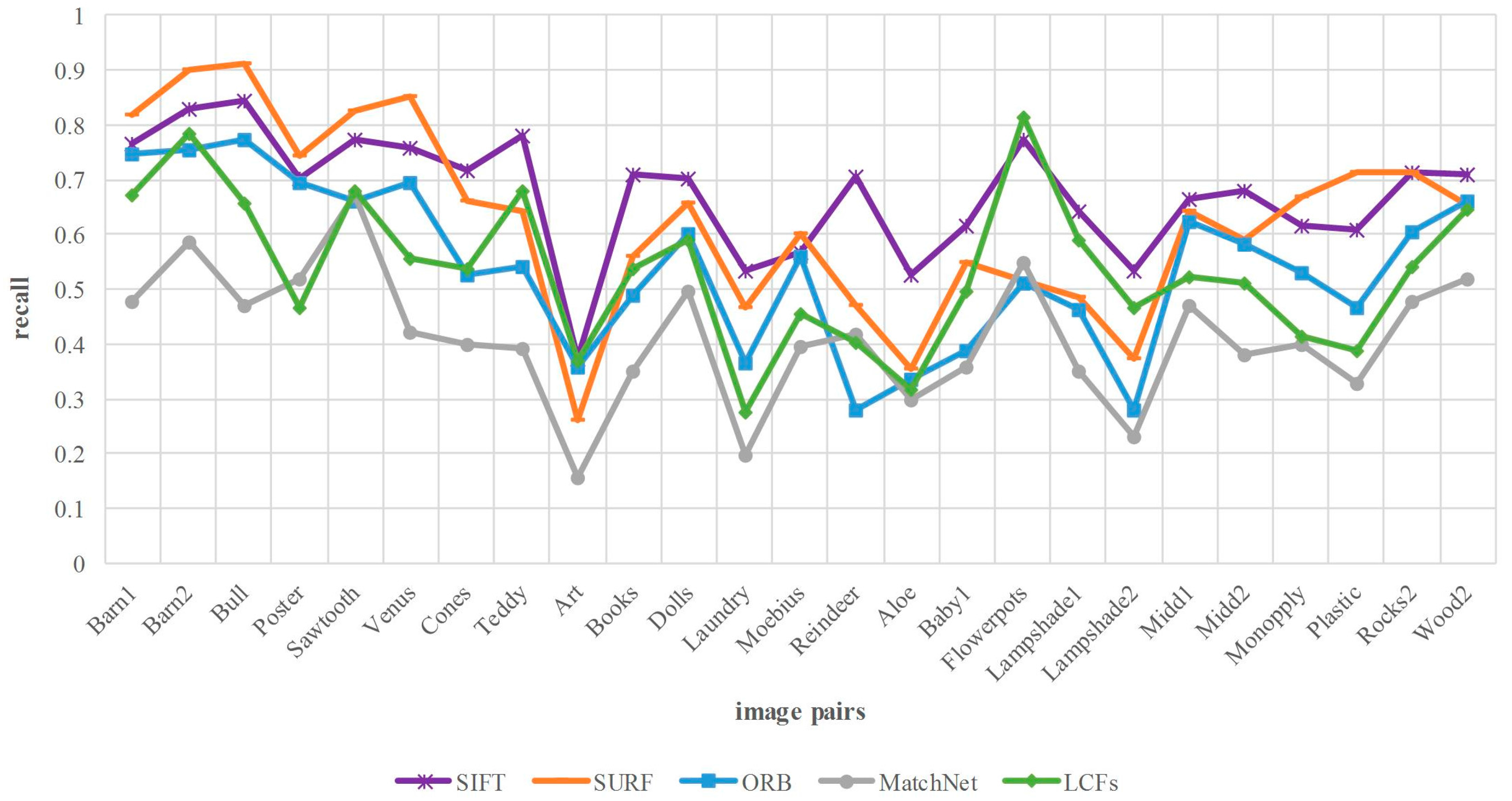

5.4. Benchmark Comparisons

5.4.1. Comparison with Other Feature Description Methods

5.4.2. Comparison with Outlier Removal Method

6. Conclusions

Author Contributions

Conflicts of Interest

References

- Jiang, X.; Xiang, Z.; Zhang, Z.; Hu, J.; Hu, Q.; Shu, L. Predictive model for equivalent ice thickness load on overhead transmission lines based on measured insulator string deviations. IEEE Trans. Power Deliv. 2014, 29, 1659–1665.

- Ma, G.M.; Li, C.R.; Quan, J.T.; Jiang, J.; Cheng, Y.C. A fiber Bragg grating tension and tilt sensor applied to icing monitoring on overhead transmission lines. IEEE Trans. Power Deliv. 2011, 26, 2163–2170.

- Zarnani, A.; Musilek, P.; Shi, X.; Ke, X.; He, H.; Greiner, R. Learning to predict ice accretion on electric power lines. Eng. Appl. Artif. Intell. 2012, 25, 609–617.

- Farzaneh, M.; Savadjiev, K. Statistical analysis of field data for precipitation icing accretion on overhead power lines. IEEE Trans. Power Deliv. 2005, 20, 1080–1087.

- Lu, J.Z.; Zhang, H.X.; Fang, Z.; Li, B. Application of self-adaptive segmental threshold to ice thickness identification. High Volt. Eng. 2009, 3, 563–567.

- Gu, I.Y.H.; Berlijn, S.; Gutman, I.; Bollen, M.H.J. Practical applications of automatic image analysis for overhead lines. In Proceedings of the 22nd International Conference and Exhibition on Electricity Distribution (CIRED 2013), Stockholm, Sweden, 10–13 June 2013; pp. 1–4.

- Hao, Y.; Liu, G.; Xue, Y.; Zhu, J.; Shi, Z.; Li, L. Wavelet image recognition of ice thickness on transmission lines. High Volt. Eng. 2014, 40, 368–373.

- Yu, C.; Peng, Q.; Wachal, R.; Wang, P. An Image-Based 3D Acquisition of Ice Accretions on Power Transmission Lines. In Proceedings of the CCECE’06 Canadian Conference on Electrical and Computer Engineering, Ottawa, ON, Canada, 7–10 May 2006; pp. 2005–2008.

- Wachal, R.; Stoezel, J.; Peckover, M.; Godkin, D. A computer vision early-warning ice detection system for the Smart Grid. In Proceedings of the Transmission and Distribution Conference and Exposition, Orlando, FL, USA, 7–10 May 2012; pp. 1–6.

- Yang, H.; Wu, W. On-line monitoring method of icing transmission lines based on 3D reconstruction. Autom. Electr. Power Syst. 2012, 36, 103–108.

- Ma, J.; Zhao, J.; Yuille, A.L. Non-rigid point set registration by preserving global and local structures. IEEE Trans. Image Process. 2016, 25, 53–64.

- Ma, J.; Zhou, H.; Zhao, J.; Gao, Y.; Jiang, J.; Tian, J. Robust feature matching for remote sensing image registration via locally linear transforming. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6469–6481.

- Ma, J.; Qiu, W.; Zhao, J.; Ma, Y.; Yuille, A.L.; Tu, Z. Robust L2E Estimation of Transformation for Non-Rigid Registration. IEEE Trans. Signal Process. 2015, 63, 1115–1129.

- Ma, J.; Zhao, J.; Tian, J.; Yuille, A.L.; Tu, Z. Robust point matching via vector field consensus. IEEE Trans. Image Process. 2014, 23, 1706–1721.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Proceedings of the 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Zhang, B.; Yang, Y.; Chen, C.; Yang, L.; Han, J.; Shao, L. Action recognition using 3D histograms of texture and a multi-class boosting classifier. IEEE Trans. Image Process. 2017, 26, 4648–4660.

- Fischer, P.; Dosovitskiy, A.; Brox, T. Descriptor matching with convolutional neural networks: A comparison to SIFT. arXiv 2014. Available online: https://arxiv.org/abs/1405.5769 (accessed on 1 June 2016).

- Zagoruyko, S.; Komodakis, N. Learning to compare image patches via convolutional neural networks. Comput. Vis. Pattern Recogn. 2015, 4353–4361.

- Han, X.; Leung, T.; Jia, Y.; Sukthankar, R.; Berg, A.C. MatchNet: Unifying feature and metric learning for patch-based matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3279–3286.

- Simo-Serra, E.; Trulls, E.; Ferraz, L.; Kokkinos, I.; Fua, P.; Moreno-Noguer, F. Discriminative Learning of Deep Convolutional Feature Point Descriptors. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 118–126.

- Zbontar, J.; LeCun, Y. Stereo matching by training a convolutional neural network to compare image patches. J. Mach. Learn. Res. 2016, 17, 1–32.

- Luo, W.; Schwing, A.G.; Urtasun, R. Efficient Deep Learning for Stereo Matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5695–5703.

- Wang, L.; Zhang, B.; Han, J.; Shen, L.; Qian, C.S. Robust object representation by boosting-like deep learning architecture. Signal Process. Image Commun. 2016, 47, 490–499.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Li, D.; Zhou, H.; Lam, K.M. High-resolution face verification using pore-scale facial features. IEEE Trans. Image Process. 2015, 24, 2317–2327.

- Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

- Scharstein, D.; Szeliski, R. High-Accuracy Stereo Depth Maps Using Structured Light. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003.

- Scharstein, D.; Pal, C. Learning Conditional Random Fields for Stereo. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Hirschmüller, H.; Scharstein, D. Evaluation of Cost Functions for Stereo Matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Scharstein, D.; Hirschmüller, H.; Kitajima, Y.; Krathwohl, G.; Nešić, N.; Wang, X.; Westling, P. High-Resolution Stereo Datasets with Subpixel-Accurate Ground Truth. In Pattern Recognition; Lecture Notes in Computer Science; Jiang, X., Hornegger, J., Koch, R., Eds.; Springer: Cham, Switzerland, 2014; Volume 8753, pp. 31–42.

- Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Li, Z.; Liu, Y.; Walker, R.; Hayward, R.; Zhang, J. Towards automatic power line detection for a UAV surveillance system using pulse coupled neural filter and an improved Hough transform. Mach. Vis. Appl. 2010, 21, 677–686.

- Zhang, B.; Liu, W.; Mao, Z.; Liu, J.; Shen, L. Cooperative and geometric learning algorithm (CGLA) for path planning of UAVs with limited information. Automatica 2014, 50, 809–820.

- Zhang, B.; Luan, S.; Chen, C.; Han, J.; Wang, W.; Perina, A.; Shao, L. Latent Constrained Correlation Filter. IEEE Trans. Image Process. 2018, 27, 1038–1048.

| Name | Type (C = convolution, MP = max pooling) | Output Dim. | Kernel Size | Stride |
|---|---|---|---|---|
| conv1_1 | C | 64 | 3 × 3 | 1 |
| conv1_2 | C | 64 | 3 × 3 | 1 |
| pool1 | MP | 64 | 2 × 2 | 2 |
| conv2_1 | C | 128 | 3 × 3 | 1 |
| conv2_2 | C | 128 | 3 × 3 | 1 |
| pool2 | MP | 128 | 2 × 2 | 2 |
| conv3_1 | C | 256 | 3 × 3 | 1 |
| conv3_2 | C | 256 | 3 × 3 | 1 |
| conv3_3 | C | 256 | 3 × 3 | 1 |
| pool3 | MP | 256 | 2 × 2 | 2 |
| conv4_1 | C | 512 | 3 × 3 | 1 |
| conv4_2 | C | 512 | 3 × 3 | 1 |
| conv4_3 | C | 512 | 3 × 3 | 1 |
| pool4 | MP | 512 | 2 × 2 | 2 |
| conv5_1 | C | 512 | 3 × 3 | 1 |
| conv5_2 | C | 512 | 3 × 3 | 1 |
| conv5_3 | C | 512 | 3 × 3 | 1 |
| pool5 | MP | 512 | 2 × 2 | 2 |
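The strides in the layer listing above determine how coarsely each layer samples the input image. A small sketch of that arithmetic follows; the tuple layout and the helper name `cumulative_strides` are illustrative, while the layer names, output dimensions, and strides are copied from the table.

```python
# (name, output channels, stride) for each layer, copied from the table.
LAYERS = [
    ("conv1_1", 64, 1), ("conv1_2", 64, 1), ("pool1", 64, 2),
    ("conv2_1", 128, 1), ("conv2_2", 128, 1), ("pool2", 128, 2),
    ("conv3_1", 256, 1), ("conv3_2", 256, 1), ("conv3_3", 256, 1),
    ("pool3", 256, 2),
    ("conv4_1", 512, 1), ("conv4_2", 512, 1), ("conv4_3", 512, 1),
    ("pool4", 512, 2),
    ("conv5_1", 512, 1), ("conv5_2", 512, 1), ("conv5_3", 512, 1),
    ("pool5", 512, 2),
]


def cumulative_strides(layers):
    """Map each layer name to the product of all strides up to and including it."""
    total = 1
    result = {}
    for name, _channels, stride in layers:
        total *= stride
        result[name] = total
    return result


strides = cumulative_strides(LAYERS)
for name in ("conv3_3", "conv4_3", "conv5_3", "pool5"):
    print(name, strides[name])
# conv3_3 4
# conv4_3 8
# conv5_3 16
# pool5 32
```

One cell of `conv4_3`, for example, advances 8 input pixels per step, so deeper layers offer higher-dimensional features at the cost of coarser keypoint localization.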

| Number | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Manual measurement | 7.12 | 10.10 | 13.98 | 8.92 | 11.85 | 10.60 | 5.85 | 10.50 | 8.34 | 9.80 |
| LCFs | 6.83 | 11.26 | 12.85 | 8.60 | 10.98 | 11.32 | 7.46 | 11.31 | 9.26 | 9.49 |
| Absolute error | 0.29 | 1.16 | 1.13 | 0.32 | 0.87 | 0.72 | 1.61 | 0.81 | 0.92 | 0.31 |
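The absolute-error row can be recomputed from the two measurement rows, which also gives summary figures the table does not list. The snippet below is only a check on the tabulated values, in whatever unit the table uses; the variable names are illustrative.

```python
# Values copied from the table (samples 1 to 10).
manual = [7.12, 10.10, 13.98, 8.92, 11.85, 10.60, 5.85, 10.50, 8.34, 9.80]
lcfs = [6.83, 11.26, 12.85, 8.60, 10.98, 11.32, 7.46, 11.31, 9.26, 9.49]

# Per-sample absolute error, rounded to the table's two decimals.
abs_err = [round(abs(m - e), 2) for m, e in zip(manual, lcfs)]

mean_abs_err = sum(abs_err) / len(abs_err)
worst_sample = max(range(len(abs_err)), key=abs_err.__getitem__) + 1  # 1-based

print(abs_err)
print(round(mean_abs_err, 3))  # 0.814
print(worst_sample)            # 7
```

The recomputed errors agree with the tabulated row; the mean absolute error is about 0.81, and the largest error (1.61) occurs on sample 7, which is also the thinnest manually measured sample.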

| Method | Precision (Average) | Recall (Average) |
|---|---|---|
| SIFT | 92.3% | 67.4% |
| LCFs | 93.8% | 53.6% |
| SIFT + [14] | 98.1% | 66.9% |
| LCFs + [14] | 99.6% | 53.1% |
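For readers unfamiliar with the two metrics, the sketch below computes them under the usual definitions: precision is the fraction of returned matches that are correct, and recall is the fraction of ground-truth correspondences that were recovered. The function name `precision_recall` and the toy index pairs are hypothetical, and the paper's exact criterion for counting a match as correct is not reproduced here.

```python
def precision_recall(matches, ground_truth):
    """Compute precision and recall for a set of keypoint matches.

    Both arguments are iterables of (index_in_image_1, index_in_image_2) pairs.
    A match counts as correct when the same pair appears in ground_truth.
    """
    matches = set(matches)
    ground_truth = set(ground_truth)
    correct = matches & ground_truth
    precision = len(correct) / len(matches) if matches else 0.0
    recall = len(correct) / len(ground_truth) if ground_truth else 0.0
    return precision, recall


# Toy example: four true correspondences, three returned matches, two correct.
truth = {(0, 0), (1, 1), (2, 2), (3, 3)}
found = {(0, 0), (1, 1), (2, 5)}

p, r = precision_recall(found, truth)
print(p, r)
```

Read this way, the table shows LCFs trading recall (53.6% against 67.4% for SIFT) for precision (93.8% against 92.3%), and the outlier removal of [14] raising precision for both descriptors while leaving recall nearly unchanged.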

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guo, Q.; Xiao, J.; Hu, X. New Keypoint Matching Method Using Local Convolutional Features for Power Transmission Line Icing Monitoring. Sensors 2018, 18, 698. https://doi.org/10.3390/s18030698
