Object-Based Land-Cover Mapping with High Resolution Aerial Photography at a County Scale in Midwestern USA

Abstract

1. Introduction

2. Data and Study Area

3. Methods

3.1. Spectral Information of Land-Cover Maps and Image Pre-Processing

3.2. Object-Based Image Analysis

3.2.1. Image Segmentation

| Part (Bands Included) | Segmentation Method | Domain | Scale | Band Weight | Threshold |
|---|---|---|---|---|---|
| Vegetation part (PCA2; PCA3) | MT1 | All pixels | 50 | PCA2: 1 | non-vegetation ≤ 0 |
| | QT1 | All pixels | 100 | PCA2: 1 | -- |
| | MT2 | Unclassified | 25 | PCA3: 1 | 0 < grass ≤ 18 |
| | QT2 | Unclassified | 25 | PCA2: 0.5; PCA3: 0.5 | -- |
| Non-vegetation part (PCA1; DOQ4; NDSM) | MT1 | All pixels | 50 | DOQ4: 1 | vegetation ≤ 0; 15 < water ≤ 80 |
| | QT | All pixels | 250 | PCA1: 0.5; DOQ4: 0.5 | -- |
| | MT2 | All pixels | 100 | NDSM: 1 | non-elevated ≤ 0 |

3.2.2. Vegetation Classification

3.2.3. Non-Vegetation Classification

| Class | Classification Criteria |
|---|---|
| Road Class 1 | Mean PCA1 ≥ 320; Density ≤ 1.5; Mean NDSM ≤ 5; 100 ≤ Area ≤ 10,000 pixels |
| Road Class 2 | Mean PCA1 ≥ 350; Mean NDSM ≤ 5; Rel. border to road1 > 0; Shape index ≤ 2 |
| Road Class 3 | Density Mean ≤ 1.5; Mean PCA1 ≥ 100 |
| Road Class 4 | Mean NDSM ≤ 5; Rel. border to road3 ≥ 0.25; Mean absolute difference of Mean PCA1 compared to road1 ≤ 50 |
| Road Class 5 | Area ≤ 2000 pixels; Mean PCA1 ≥ 300 |
| Road Class 6 | Mean NDSM ≤ 5; Rel. border to road5 ≥ 0.4 |
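Threshold criteria of this kind translate directly into a rule-based classifier. The following is a minimal sketch for Road Class 1 only, and not the authors' rule set as implemented in their software. It assumes that the listed criteria are combined with a logical AND, which the table does not state explicitly. The `ImageObject` type, its field names, and the function name are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass
class ImageObject:
    """Per-object features (hypothetical container, names are assumptions)."""
    mean_pca1: float   # mean value of the PCA1 band within the object
    density: float     # density shape feature of the object
    mean_ndsm: float   # mean NDSM (height) value within the object
    area_px: int       # object area in pixels


def is_road_class_1(obj: ImageObject) -> bool:
    """Apply the Road Class 1 thresholds from the table.

    Assumption: all criteria must hold at once (logical AND).
    """
    return (
        obj.mean_pca1 >= 320
        and obj.density <= 1.5
        and obj.mean_ndsm <= 5
        and 100 <= obj.area_px <= 10_000
    )


# A bright, compact, low, mid-sized object satisfies every threshold.
candidate = ImageObject(mean_pca1=400.0, density=1.2, mean_ndsm=2.0, area_px=500)
print(is_road_class_1(candidate))
```

The remaining road classes add contextual features such as relative border to an already classified class, so a fuller version would need neighbourhood information in addition to per-object statistics.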

| Class | Classification Criteria |
|---|---|
| Building Class 1 | NDSM > 0 (elevated); Mean absolute difference of Mean NDSM compared to elevated ≤ 50; Density > 1; Area ≥ 64 pixels |
| Building Class 2 | NDSM > 10; Rel. border to Building1 ≥ 0.5 |
| Building Class 3 | Rel. border to Building1 > 0; Mean absolute difference of Mean PCA1 compared to Buildings 1 and 2 ≤ 20 |
| Building Class 4 | Mean PCA1 > 300; Density ≥ 1.8 |

| From | To Road Class 1 | To Road Class 2 | To Road Class 3 | To Road Class 4 | To Road Class 5 |
|---|---|---|---|---|---|
| Building Class | Density < 1.2 | Rel. border to Road > 0.75 | | | |
| | Area ≤ 100 pixels | Mean NDSM < 8 | | | |
| Water Class | Mean NDSM > 0 | Area ≤ 500 pixels | Area ≤ 1000 pixels | Area ≤ 2000 pixels | Area ≤ 5000 pixels |
| | Mean PCA1 ≥ 200 | Rel. border to Road ≥ 0.5 | Rel. border to Road > 0.75 | Rel. border to Road > 0.25 | |

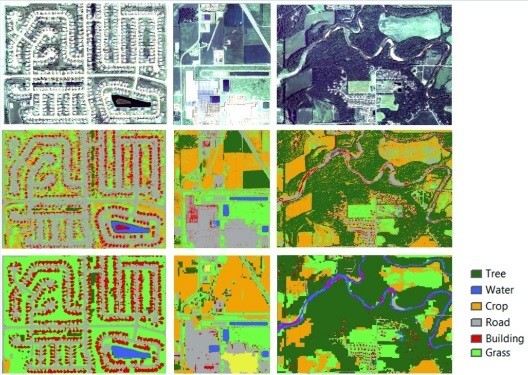

4. Results and Discussion

| Class | Kappa % | Reference Total Count | Map Total Count | Number of Correct | Producer’s Accuracy (PA) % | User’s Accuracy (UA) % |
|---|---|---|---|---|---|---|
| Building | 84.51 | 62 | 72 | 62 | 100.00 | 86.11 |
| Road | 95.40 | 78 | 75 | 72 | 92.31 | 96.00 |
| Tree/Forest | 91.81 | 89 | 86 | 80 | 89.89 | 93.02 |
| Grass | 87.71 | 77 | 84 | 75 | 97.40 | 89.29 |
| Cropland | 96.11 | 159 | 140 | 136 | 85.53 | 97.14 |
| Water | 88.83 | 63 | 70 | 63 | 100.00 | 90.00 |
| Openland/Bare soil | 96.89 | 72 | 73 | 71 | 98.61 | 97.26 |

Overall accuracy = 93.17%; Overall Kappa statistics = 91.9%
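The summary figures for this table can be reproduced from the per-class counts alone. The sketch below is an illustration under stated assumptions, not the authors' procedure: it assumes the standard definitions (producer's accuracy = correct / reference total, user's accuracy = correct / map total, and Cohen's kappa with chance agreement computed from the row and column totals). The function and variable names are hypothetical.

```python
# Per-class counts copied from the table above, in row order.
classes = ["Building", "Road", "Tree/Forest", "Grass",
           "Cropland", "Water", "Openland/Bare soil"]
reference = [62, 78, 89, 77, 159, 63, 72]   # reference total count
mapped = [72, 75, 86, 84, 140, 70, 73]      # map total count
correct = [62, 72, 80, 75, 136, 63, 71]     # number of correct


def accuracy_summary(reference, mapped, correct):
    """Return (overall accuracy, overall kappa, PA list, UA list) as fractions."""
    n = sum(reference)
    observed = sum(correct) / n
    # Chance agreement from the marginal totals of the error matrix.
    expected = sum(r * m for r, m in zip(reference, mapped)) / n ** 2
    kappa = (observed - expected) / (1 - expected)
    producers = [c / r for c, r in zip(correct, reference)]
    users = [c / m for c, m in zip(correct, mapped)]
    return observed, kappa, producers, users


overall, kappa, pa, ua = accuracy_summary(reference, mapped, correct)
print(f"Overall accuracy = {overall:.2%}, overall kappa = {kappa:.2%}")
for name, p, u in zip(classes, pa, ua):
    print(f"{name}: PA = {p:.2%}, UA = {u:.2%}")
```

Only the diagonal and the marginal totals of the error matrix are needed for these statistics, which is why the full matrix does not have to be known to check the reported values.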

| Class | Kappa % | Reference Total Count | Map Total Count | Number of Correct | Producer’s Accuracy (PA) % | User’s Accuracy (UA) % |
|---|---|---|---|---|---|---|
| Building | 60.33 | 67 | 82 | 53 | 79.10 | 64.63 |
| Road | 76.50 | 92 | 80 | 64 | 69.57 | 80.00 |
| Tree/Forest | 76.31 | 96 | 110 | 88 | 91.67 | 80.00 |
| Grass | 75.97 | 98 | 94 | 75 | 76.53 | 79.79 |
| Cropland | 82.49 | 108 | 90 | 77 | 71.30 | 85.56 |
| Water | 87.07 | 80 | 80 | 71 | 88.75 | 88.75 |
| Openland/Bare soil | 73.25 | 76 | 81 | 62 | 81.58 | 76.54 |

Overall accuracy = 79.42%; Overall Kappa statistics = 75.95%
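The per-class Kappa column in this table appears consistent with the conditional kappa computed from the map (user's) perspective, although the formula is not stated here, so treating it as such is an assumption. A minimal sketch, with a hypothetical function name, using the Building, Road and Water rows:

```python
def conditional_kappa(n_total, reference_count, map_count, n_correct):
    """Conditional kappa for one class from the map (user's) perspective.

    kappa_i = (N * n_ii - n_i+ * n_+i) / (N * n_i+ - n_i+ * n_+i)
    where n_ii is the number correct, n_i+ the map total for the class,
    n_+i the reference total for the class, and N the sample size.
    """
    chance = map_count * reference_count
    return (n_total * n_correct - chance) / (n_total * map_count - chance)


# Total sample size: sum of the Reference Total Count column.
N = 67 + 92 + 96 + 98 + 108 + 80 + 76

building = conditional_kappa(N, reference_count=67, map_count=82, n_correct=53)
road = conditional_kappa(N, reference_count=92, map_count=80, n_correct=64)
water = conditional_kappa(N, reference_count=80, map_count=80, n_correct=71)
print(f"Building: {building:.2%}, Road: {road:.2%}, Water: {water:.2%}")
```

Under this definition, a class with many commission errors (map total well above the number correct, as for Building) receives a markedly lower kappa even when its producer's accuracy is moderate.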

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, X.; Shao, G. Object-Based Land-Cover Mapping with High Resolution Aerial Photography at a County Scale in Midwestern USA. Remote Sens. 2014, 6, 11372-11390. https://doi.org/10.3390/rs61111372
