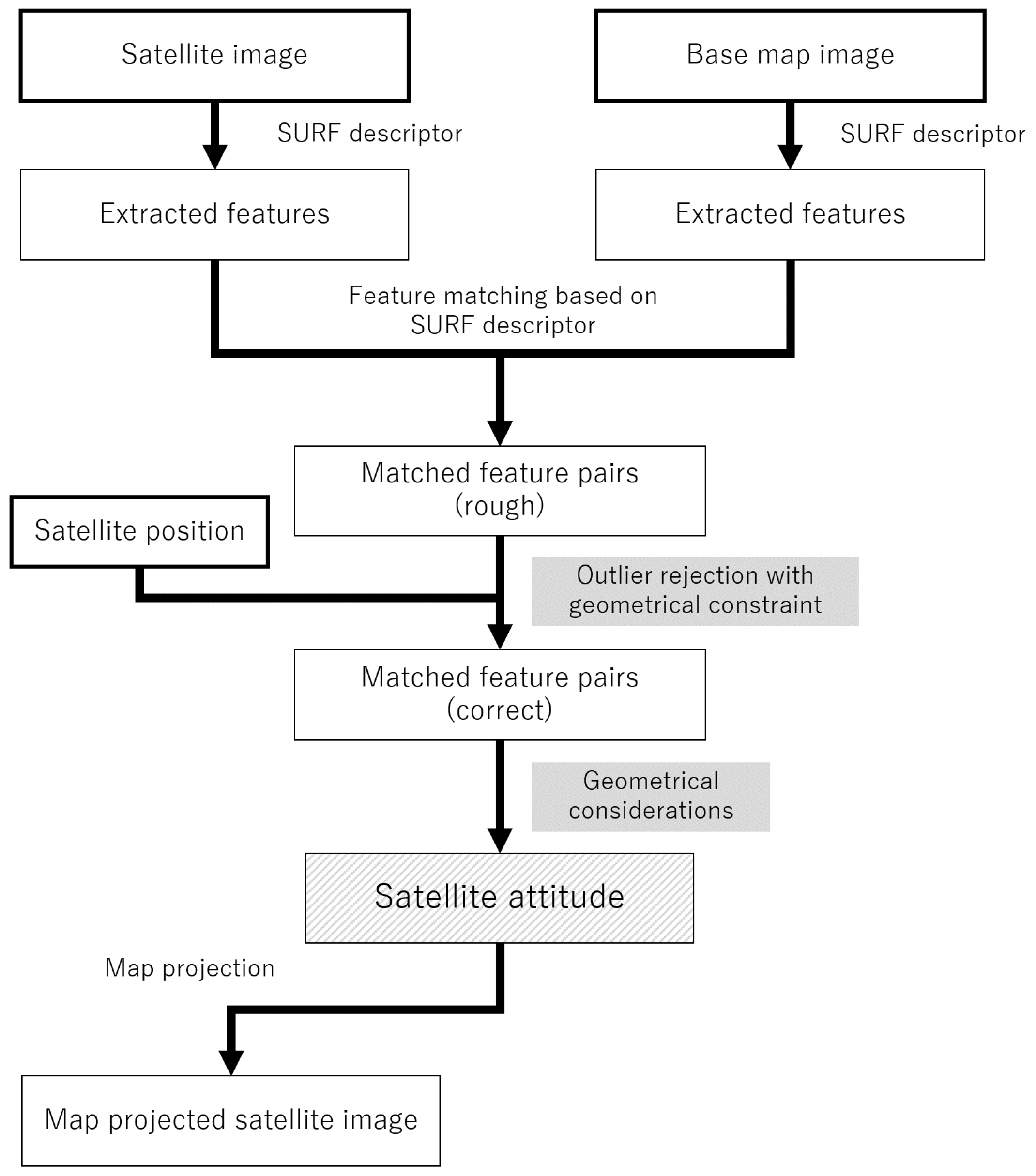

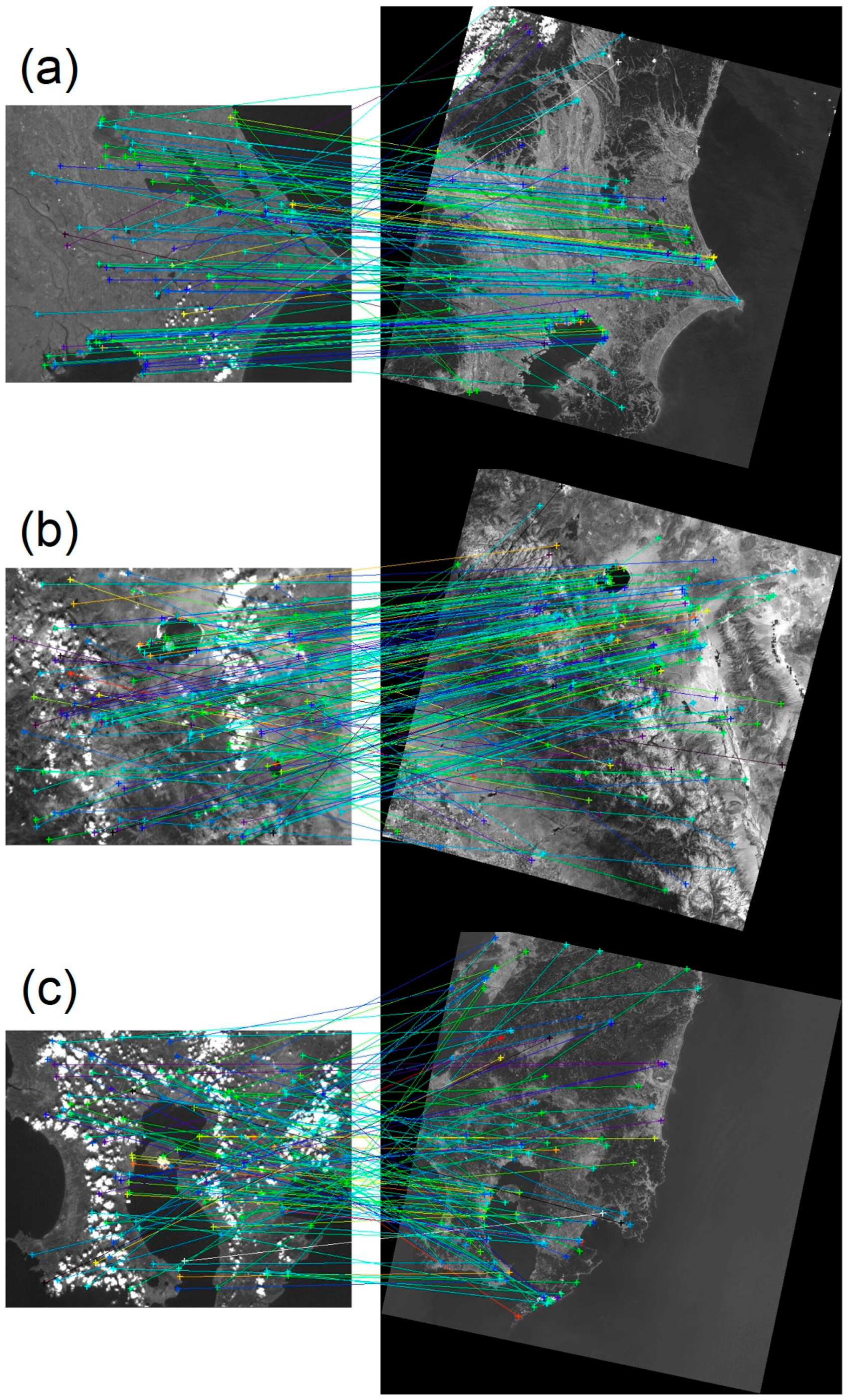

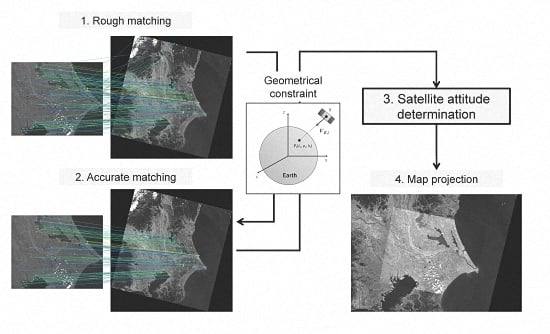

The proposed method consists of four steps: (1) extracting feature points with SURF from a base map with known latitude and longitude and from a satellite image with unknown attitude parameters; (2) roughly matching pairs of these feature points based on their SURF descriptors; (3) eliminating incorrect feature pairs (outliers) with RANSAC or one of its variants, which exploit geometric constraints; and (4) deriving the satellite attitude from the remaining inliers.

Figure 2 shows a flowchart of the proposed scheme. In this section, we first provide the mathematical basis for determining the satellite attitude from an observed image when the satellite position is known, then describe how we extract and match feature pairs from the satellite and base map images, and finally explain how we complete the map projection. As outlier rejection techniques, we examine four algorithms from the RANSAC family. In this study, the satellite position is obtained from the two-line elements (TLEs) distributed by the North American Aerospace Defense Command (NORAD).
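As an illustration of step (3), the following is a minimal sketch of a plain RANSAC loop that rejects mismatched pairs of direction vectors. It is not one of the four variants examined in this study. The function names, the angular threshold, and the iteration count are assumptions chosen for illustration, and the candidate rotation is fitted with an SVD-based (Kabsch) solution rather than the least squares and cross-product procedure of Section 3.1.

```python
import numpy as np


def fit_rotation(v_e, v_c):
    """Rotation R minimizing sum |v_c_i - R v_e_i|^2 (SVD/Kabsch solution).

    v_e, v_c: arrays of shape (n, 3) holding matched unit vectors as rows.
    """
    h = v_c.T @ v_e                      # 3x3 correlation matrix of the pairs
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(u @ vt))   # +1 or -1; forces det(R) = +1
    return u @ np.diag([1.0, 1.0, d]) @ vt


def ransac_rotation(v_e, v_c, n_iter=200, max_angle_deg=0.5, seed=0):
    """Return a boolean inlier mask over the matched pairs (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    cos_thresh = np.cos(np.radians(max_angle_deg))
    best_mask = np.zeros(len(v_e), dtype=bool)
    for _ in range(n_iter):
        # Minimal sample: three pairs, as in the three-point argument of Section 3.1
        idx = rng.choice(len(v_e), size=3, replace=False)
        r = fit_rotation(v_e[idx], v_c[idx])
        # Cosine of the angle between each rotated Earth vector and its camera vector
        cos_err = np.sum((v_e @ r.T) * v_c, axis=1)
        mask = cos_err > cos_thresh
        if mask.sum() > best_mask.sum():
            best_mask = mask
    return best_mask
```

The pairs flagged as inliers would then be passed to the attitude estimation of step (4). The threshold should reflect the expected pointing noise of the feature detector.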

3.1. Satellite Attitude Determination from Images

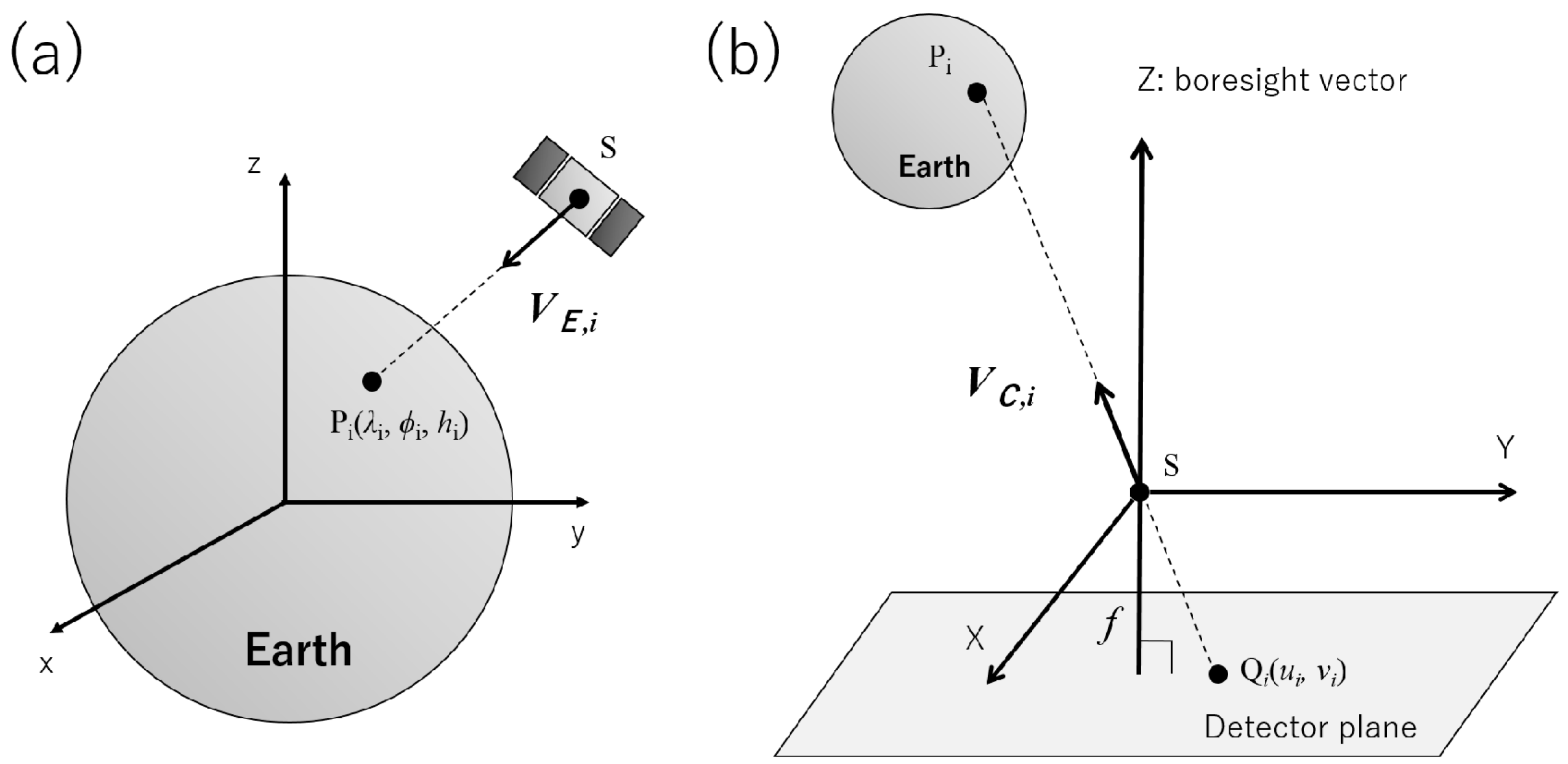

Given the position (latitude, longitude, and altitude) of a satellite and its attitude, we can uniquely determine the appearance of Earth from the satellite. This geometric fact indicates that, if the satellite position at the time of an observation is well determined, the satellite attitude can be measured by matching a satellite image to the expected appearance of Earth. In this matching, we identify the locations of land features in the satellite image by comparing them with feature points on Earth, which are extracted from base map images containing known geographical information. In this subsection, we formulate the geometrical relationship between the feature points extracted from a satellite image and their corresponding positions on Earth. Here, we assume that the images are already well matched; finding correct matches is deferred to the next subsection (Section 3.2).

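We first define a feature-pointing vector representing the direction of each extracted land feature. We assume that all land features in a single image are observed at the same time. This assumption is realistic for observations by 2-D array detectors, which we target in this paper. For the $i$-th land feature extracted from a satellite image, we specify a unit vector $\mathbf{V}_{E,i}$ pointing from the satellite position $\mathbf{S}$ to a land position $\mathbf{P}_i$. Given the planetographic longitude, latitude, and altitude of $\mathbf{P}_i$, denoted by $\lambda_i$, $\phi_i$, and $h_i$, respectively, $\mathbf{P}_i$ is described in Earth-centered coordinates (Figure 3a) as

$$\mathbf{P}_i = \begin{pmatrix} (N_i + h_i)\cos\phi_i\cos\lambda_i \\ (N_i + h_i)\cos\phi_i\sin\lambda_i \\ \left(\dfrac{R_p^2}{R_e^2}N_i + h_i\right)\sin\phi_i \end{pmatrix}, \quad (1)$$

where $R_e$ is the equatorial radius of Earth, $R_p$ is the pole radius, and $N_i$ is a parameter defined in terms of $R_e$, $\phi_i$, and the eccentricity of Earth ($e$),

$$N_i = \frac{R_e}{\sqrt{1 - e^2\sin^2\phi_i}}. \quad (2)$$

In this study, the Earth shape constants are set following [18].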
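The conversion from planetographic longitude, latitude, and altitude to Earth-centered coordinates, and the resulting unit pointing vector, can be sketched as follows. This is a minimal illustration using the standard ellipsoidal formula. The WGS84 radii and the function names are assumptions made for the example and are not necessarily the constants adopted in this study.

```python
import numpy as np

R_E = 6378.137  # equatorial radius [km]; WGS84 value, assumed for this sketch
R_P = 6356.752  # polar radius [km]; WGS84 value, assumed for this sketch


def geodetic_to_ecef(lon_deg, lat_deg, alt_km):
    """Earth-centered coordinates [km] of a point given lon/lat [deg] and altitude [km]."""
    lon, lat = np.radians(lon_deg), np.radians(lat_deg)
    e2 = 1.0 - (R_P / R_E) ** 2                      # squared eccentricity
    n = R_E / np.sqrt(1.0 - e2 * np.sin(lat) ** 2)   # latitude-dependent radius parameter
    x = (n + alt_km) * np.cos(lat) * np.cos(lon)
    y = (n + alt_km) * np.cos(lat) * np.sin(lon)
    z = ((R_P / R_E) ** 2 * n + alt_km) * np.sin(lat)
    return np.array([x, y, z])


def pointing_vector(sat_pos, land_pos):
    """Unit vector from the satellite position to the land position."""
    d = np.asarray(land_pos, dtype=float) - np.asarray(sat_pos, dtype=float)
    return d / np.linalg.norm(d)
```

For example, `pointing_vector(geodetic_to_ecef(0, 0, 500), geodetic_to_ecef(0, 0, 0))` gives the nadir direction for a hypothetical satellite 500 km above the equator at zero longitude.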

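Next, we represent the same vector in the camera-centered coordinate system, whose origin is at the pinhole position of the camera onboard the satellite (Figure 3b). In this coordinate system, the feature-pointing vector $\mathbf{V}_{E,i}$ is represented by a vector $\mathbf{V}_{C,i}$, which is expressed in terms of the projected position of $\mathbf{P}_i$ on the detector plane, $(x_i, y_i)$, as

$$\mathbf{V}_{C,i} = \frac{1}{\sqrt{x_i^2 + y_i^2 + f^2}}\begin{pmatrix} x_i \\ y_i \\ f \end{pmatrix}, \quad (3)$$

where $f$ is the focal length of the camera.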
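A minimal sketch of this pinhole relation is given below. It assumes that the detector-plane axes are aligned with the camera x and y axes and that the optical axis is the camera z axis; the actual sign convention depends on the camera definition. The pixel pitch and principal point parameters of `pixel_to_detector` are hypothetical and serve only to show how pixel indices might be converted to detector-plane coordinates.

```python
import numpy as np


def pixel_to_detector(col, row, pitch, col0, row0):
    """Detector-plane coordinates (same unit as pitch) of a pixel.

    pitch: pixel size; (col0, row0): pixel indices of the optical axis.
    All three are hypothetical camera parameters for this sketch.
    """
    return (col - col0) * pitch, (row - row0) * pitch


def camera_vector(x, y, f):
    """Unit vector in camera coordinates toward detector position (x, y).

    x, y, and the focal length f must share the same length unit.
    """
    v = np.array([x, y, f], dtype=float)
    return v / np.linalg.norm(v)
```

A feature on the optical axis maps to the camera z axis, and the angle from that axis grows as arctan of the off-axis distance divided by `f`.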

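As the vectors $\mathbf{V}_{E,i}$ and $\mathbf{V}_{C,i}$ point in the same direction but are expressed in different coordinate systems, we can rigorously describe their relationship. Given a rotation matrix that connects the Earth-centered and camera-centered coordinate systems,

$$\mathbf{M} = \begin{pmatrix} m_{00} & m_{01} & m_{02} \\ m_{10} & m_{11} & m_{12} \\ m_{20} & m_{21} & m_{22} \end{pmatrix}, \quad (4)$$

we can connect $\mathbf{V}_{E,i}$ and $\mathbf{V}_{C,i}$ directly through $\mathbf{M}$ as follows:

$$\mathbf{V}_{C,i} = \mathbf{M}\,\mathbf{V}_{E,i}. \quad (5)$$

Finding the rotation matrix $\mathbf{M}$ is equivalent to finding the satellite attitude, because $\mathbf{M}$ rotates the Earth-centered coordinates onto the camera coordinates attached to the satellite.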

Theoretically, the parameters for solving Equation (5) could be determined from just three points. However, to improve the statistical accuracy of $\mathbf{M}$, our scheme utilizes many (more than ten) matched pairs of vectors $\mathbf{V}_{E,i}$ and $\mathbf{V}_{C,i}$ generated by the feature detection and robust matching procedure described in Section 3.2. As Equation (5) is a linear system, the nine parameters $m_{00}$ to $m_{22}$ of $\mathbf{M}$ are easily estimated by the least squares method,

$$\hat{\mathbf{M}} = \underset{\mathbf{M}}{\arg\min} \sum_i \left\| \mathbf{V}_{C,i} - \mathbf{M}\,\mathbf{V}_{E,i} \right\|^2. \quad (6)$$

The estimates from the least squares method do not necessarily form a rotation matrix, because the orthogonality and unit-norm conditions might be violated by errors in the measurements $\mathbf{V}_{E,i}$ and $\mathbf{V}_{C,i}$. Therefore, we constrain the matrix to be rotational by the following procedure:

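1. Calculate the cross product of the row vectors $\mathbf{m}_0$ and $\mathbf{m}_1$ of the estimated matrix to form an orthogonal vector $\mathbf{m}_2'$.
2. Calculate the cross product of $\mathbf{m}_2'$ and $\mathbf{m}_0$ to form an orthogonal vector $\mathbf{m}_1'$.
3. Normalize $\mathbf{m}_0$, $\mathbf{m}_1'$, and $\mathbf{m}_2'$ to unit vectors.
4. Construct $\mathbf{M}'$ with the normalized $\mathbf{m}_0$, $\mathbf{m}_1'$, and $\mathbf{m}_2'$ as its rows.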

The new matrix $\mathbf{M}'$ satisfies the mathematical requirements of a rotation matrix. The accuracy of the estimated rotation matrices is experimentally verified in Section 4.
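The least squares estimate and the subsequent orthonormalization can be sketched as follows. This is an illustration under stated assumptions rather than the implementation used in this study: the matched vectors are stored as rows of `(n, 3)` arrays, the cross products are applied to the rows of the estimated matrix with the first row kept as the reference direction, and the function name is hypothetical.

```python
import numpy as np


def estimate_rotation(v_e, v_c):
    """Estimate M with v_c_i ~= M @ v_e_i, then force M to be a rotation.

    v_e, v_c: arrays of shape (n, 3); row i holds the i-th matched unit vector
    in Earth-centered and camera-centered coordinates, respectively.
    """
    # Unconstrained least squares: solve v_e @ M.T ~= v_c for the nine entries
    m_t, *_ = np.linalg.lstsq(v_e, v_c, rcond=None)
    m = m_t.T

    # Orthogonalize the rows with two cross products (assumed row convention)
    m0 = m[0]
    m2 = np.cross(m0, m[1])   # orthogonal to rows 0 and 1
    m1 = np.cross(m2, m0)     # orthogonal to m2 and m0, completes a right-handed set

    # Normalize each row to unit length
    rows = np.stack([m0, m1, m2])
    return rows / np.linalg.norm(rows, axis=1, keepdims=True)
```

Because the first row is kept and the other two are rebuilt from it, measurement errors are not distributed evenly across the three axes. An SVD-based projection onto the nearest rotation matrix is a common alternative when that matters.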

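When mapping a satellite image onto latitude–longitude coordinates, we need to find the corresponding pixel position in the satellite image for each latitude–longitude position, following [19]. To find the pixel position for a point $\mathbf{P}$ on Earth’s surface, we compute the projected position on the detector plane, $(x, y)$, as follows:

$$(x, y) = \left( f\,\frac{X_C}{Z_C},\; f\,\frac{Y_C}{Z_C} \right), \quad (7)$$

where $\mathbf{P}_C = (X_C, Y_C, Z_C)^T$ is the coordinates of the latitude–longitude position in the camera-centered coordinate system, calculated from the satellite position $\mathbf{S}$ and the rotation matrix $\mathbf{M}$ as

$$\mathbf{P}_C = \mathbf{M}\,(\mathbf{P} - \mathbf{S}). \quad (8)$$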

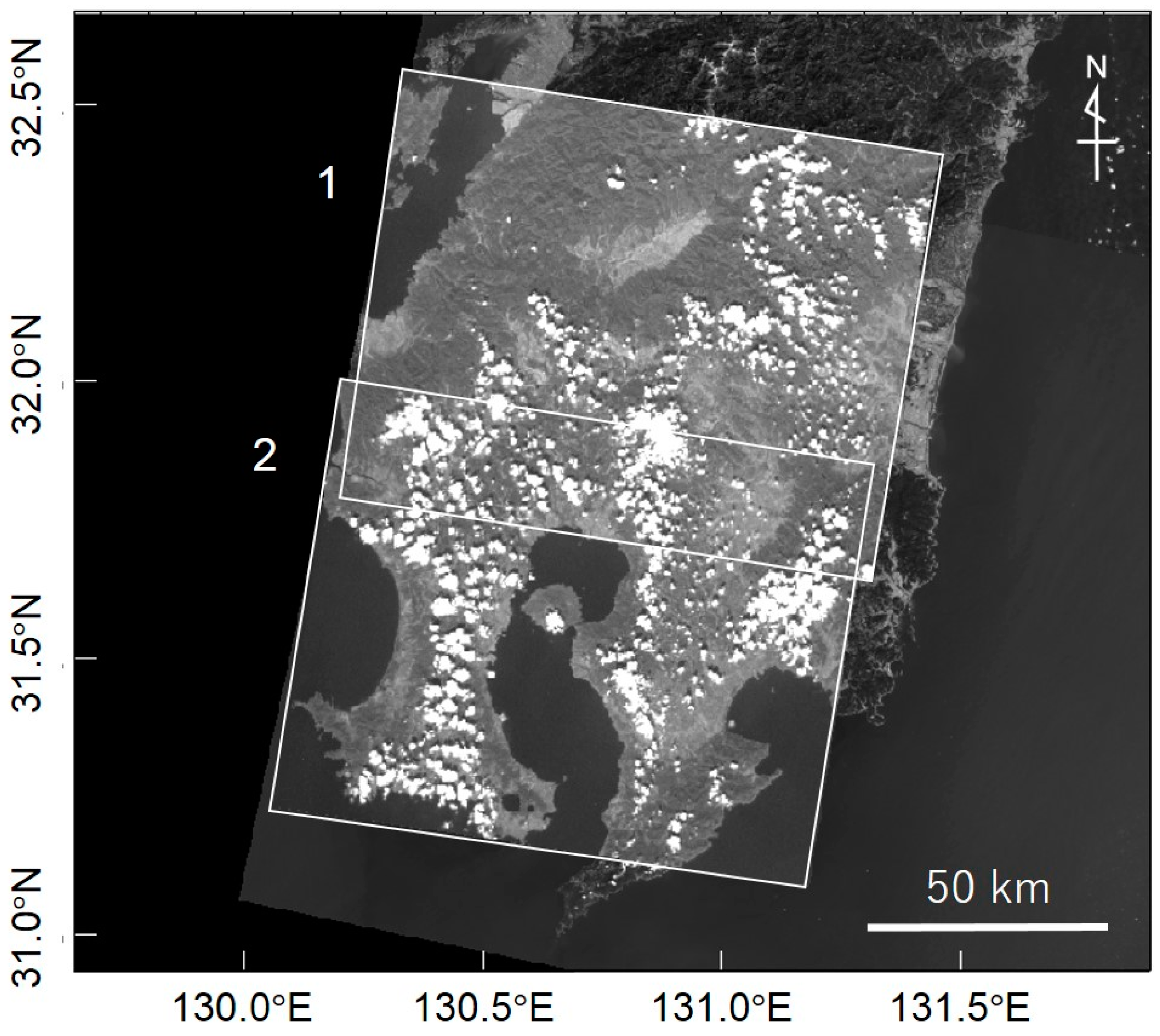

Based on Equations (7) and (8), we determine the brightness at $\mathbf{P}$ by interpolating between the values of the pixels neighboring $(x, y)$. Here, we adopt simple bilinear interpolation. We can then draw a map-projected version of the satellite image.
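The projection of a ground point onto the detector plane and the bilinear sampling of the image can be sketched as follows. The function names are hypothetical, the detector-plane sign convention is an assumption that places the optical axis along the camera z axis, and the sketch omits the visibility check (points behind the camera or beyond the limb) that a complete map projection would need.

```python
import numpy as np


def project_to_detector(p, sat_pos, m, f):
    """Detector-plane position (x, y) of an Earth-centered point p.

    m: rotation matrix from Earth-centered to camera coordinates; f: focal length.
    """
    xc, yc, zc = m @ (np.asarray(p, dtype=float) - np.asarray(sat_pos, dtype=float))
    return f * xc / zc, f * yc / zc


def bilinear(image, col, row):
    """Bilinearly interpolated brightness at fractional pixel position (col, row).

    Requires 0 <= col < width - 1 and 0 <= row < height - 1.
    """
    c0, r0 = int(np.floor(col)), int(np.floor(row))
    dc, dr = col - c0, row - r0
    return ((1 - dc) * (1 - dr) * image[r0, c0]
            + dc * (1 - dr) * image[r0, c0 + 1]
            + (1 - dc) * dr * image[r0 + 1, c0]
            + dc * dr * image[r0 + 1, c0 + 1])
```

A map-projected image would be filled by looping over the latitude–longitude grid, converting each grid point to Earth-centered coordinates, projecting it, converting `(x, y)` to pixel indices, and sampling with `bilinear`.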

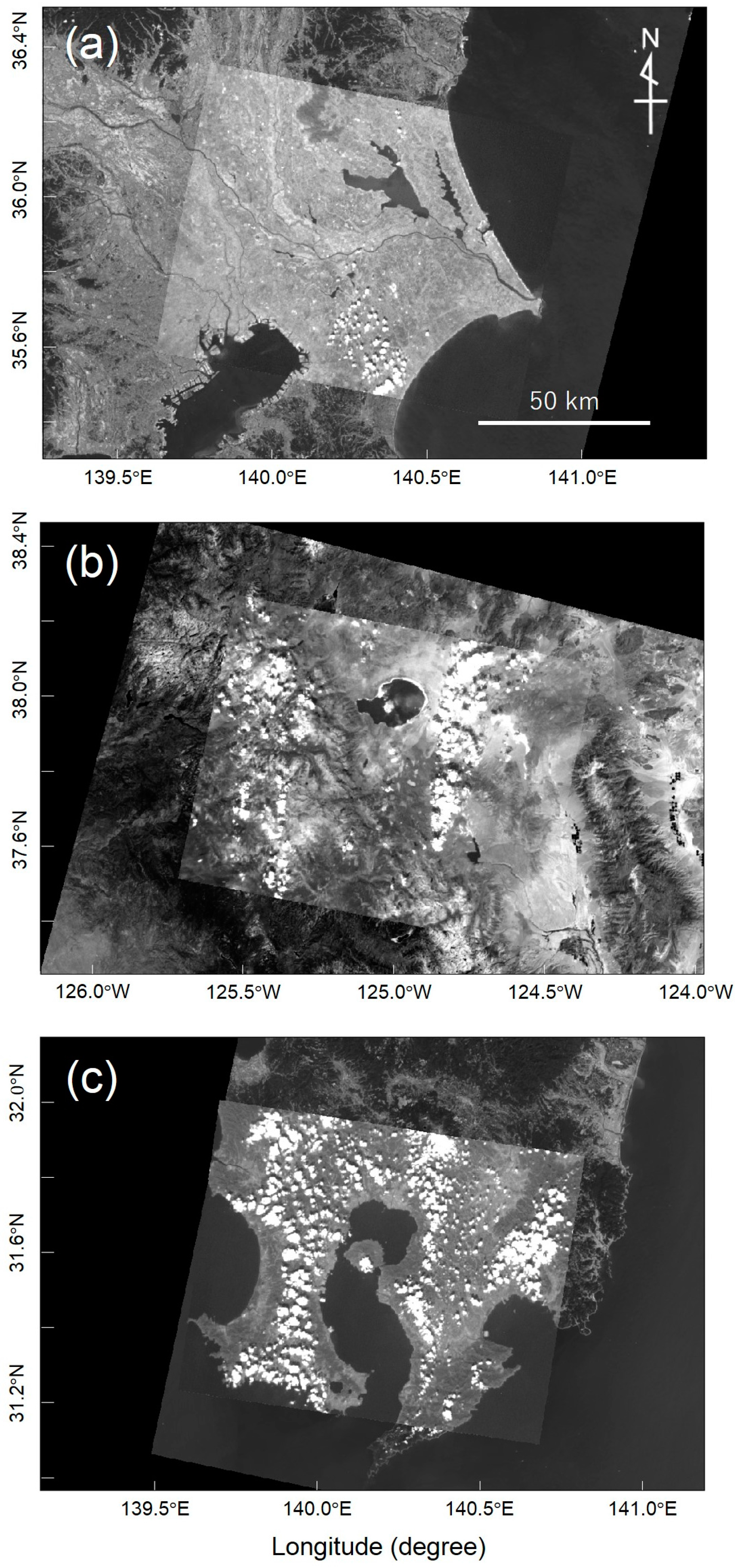

Above, we supposed that the satellite position at each observation is accurately determined. In practice, we can use signals from the global positioning system (GPS) and/or trajectory predictions based on the TLEs distributed by NORAD. For each VIS image, we determined the observation time by GPS and the satellite position from the TLE using the SPICE Toolkit distributed by NASA [20]. The accuracy of the satellite position determined from the TLE is reported to be 1 km [21].

It should be noted that optical distortion can affect the accuracy of satellite attitude estimation, because it changes the projected location of an object on the detector plane and thus changes $\mathbf{V}_C$. Since optical distortion can be calibrated by tracking land features, observing star positions, and observing planet limbs [22], incorporating such calibration techniques into satellite operations can be important for improving the accuracy of the derived satellite attitudes.