Enhanced Resolution of Microwave Sounder Imagery through Fusion with Infrared Sensor Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Mathematical Notation

2.2. Data Fusion

2.3. Data

2.4. Infrared Precipitation Retrieval

- (1) Collocating IR (~11 µm) brightness temperatures from the Visible and Infrared Scanner (VIRS) with precipitation rates from the Precipitation Radar (PR), both aboard the Tropical Rainfall Measuring Mission (TRMM) satellite. The collocated data were obtained from the University of Utah TRMM precipitation and cloud feature database [28] and used as our training dataset.

- (2) Establishing an empirical relationship between the collocated IR brightness temperatures and PR precipitation estimates to map IR imagery to surface rainfall rates. This was done with probability (histogram) matching, in which the cumulative distribution functions of precipitation rate and IR brightness temperature are matched to yield IR-to-rain-rate equations, under the general assumption that colder clouds statistically produce more intense rainfall [29,30,31].

- (3) The established relationship between IR brightness temperature and precipitation rate was then used to retrieve precipitation intensity from AVHRR IR images, which provide IR data (~11 µm) similar to those of VIRS.
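The probability-matching idea behind steps (2) and (3) can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the collocated VIRS/PR training samples are available as 1-D arrays of brightness temperature (K) and rain rate (mm/h), and the helper names `fit_ir_rain_table` and `apply_ir_rain_table`, the 101-point quantile table, and the synthetic data are all hypothetical. The matching rule equates the fraction of pixels colder than a temperature $T_b$ with the fraction of pixels whose rain rate exceeds the mapped value, i.e. $R(T_b) = F_R^{-1}\big(1 - F_T(T_b)\big)$.

```python
import numpy as np


def fit_ir_rain_table(tb_train, rain_train, n_quantiles=101):
    """Build a monotone Tb -> rain-rate lookup table by probability matching.

    Hypothetical helper: pairs the p-th quantile of brightness temperature
    (cold tail first) with the (1 - p)-th quantile of rain rate, so that
    colder pixels are assigned higher rain rates.
    """
    tb = np.asarray(tb_train, dtype=float)
    rain = np.asarray(rain_train, dtype=float)
    probs = np.linspace(0.0, 1.0, n_quantiles)
    tb_q = np.quantile(tb, probs)            # increasing temperatures
    rain_q = np.quantile(rain, 1.0 - probs)  # non-increasing rain rates
    return tb_q, rain_q


def apply_ir_rain_table(tb_image, tb_q, rain_q):
    """Map an IR brightness-temperature field (K) to rain rate (mm/h).

    Temperatures outside the training range are clamped to the table
    end points, which is how np.interp treats out-of-range inputs.
    """
    return np.interp(np.asarray(tb_image, dtype=float), tb_q, rain_q)


# Synthetic stand-in for collocated VIRS Tb and PR rain (assumption):
# rain rate rises linearly as clouds get colder than 250 K.
tb_train = np.linspace(200.0, 300.0, 1001)
rain_train = np.maximum(0.0, 0.5 * (250.0 - tb_train))

tb_q, rain_q = fit_ir_rain_table(tb_train, rain_train)

# Stand-in for an AVHRR 11-micron brightness-temperature field.
avhrr_tb = np.array([[205.0, 230.0], [260.0, 290.0]])
print(apply_ir_rain_table(avhrr_tb, tb_q, rain_q))
```

On this synthetic example the fitted table approximately recovers the generating relation (about 22.5 and 10 mm/h for the two cold pixels, zero for the warm ones). Because only the two marginal distributions are matched, the method does not need pixel-exact agreement between the IR and radar observations, which is one reason histogram matching is commonly used for IR rain retrievals.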

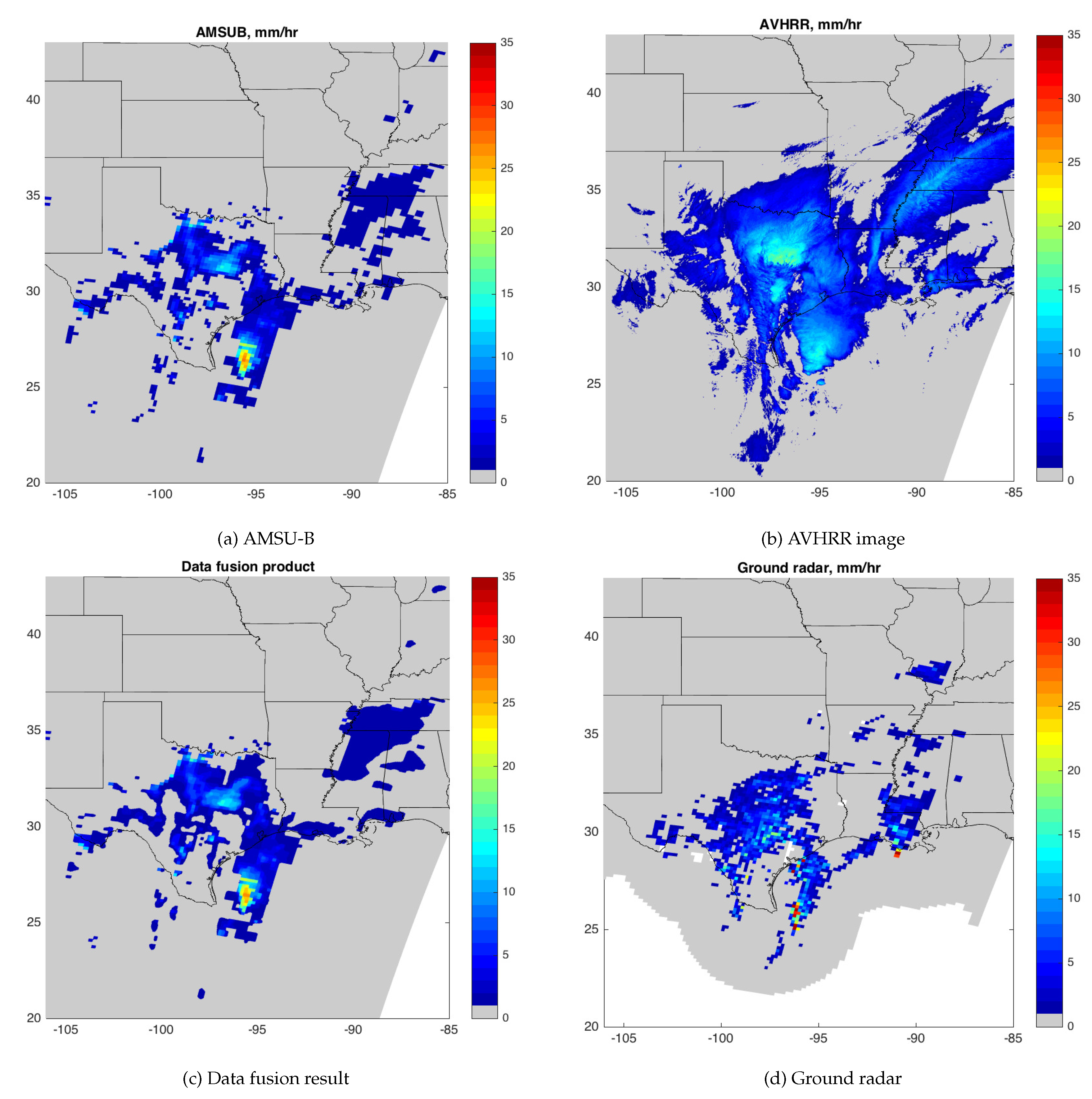

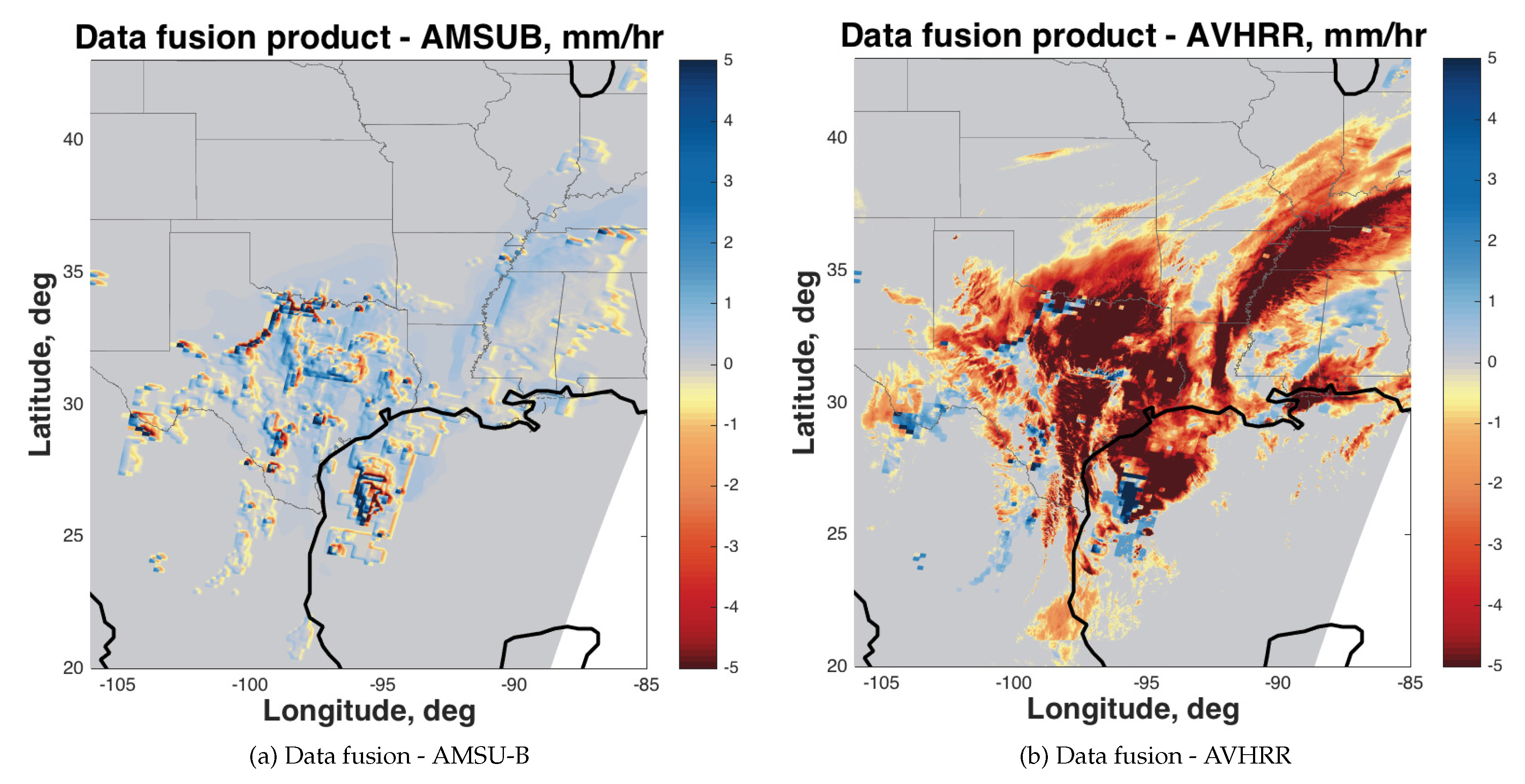

3. Results

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADMM | Alternating Direction Method of Multipliers |
| AMSU-B | Advanced Microwave Sounding Unit-B |
| AVHRR | Advanced Very High Resolution Radiometer |
| CC | Correlation Coefficient |
| FoV | Field of View |
| FRAC | Full-Resolution Area Coverage |
| GAC | Global Area Coverage |
| GPM | Global Precipitation Measurement |
| IR | Infrared |
| MAE | Mean Absolute Error |
| MRMS | Multi-Radar/Multi-Sensor |
| MW | Microwave |
| PR | Precipitation Radar |
| QPE | Quantitative Precipitation Estimation |
| RMSE | Root-Mean-Square Error |
| TRMM | Tropical Rainfall Measuring Mission |
| TV | Total Variation |
| VIRS | Visible and Infrared Scanner |

References

1. Yanovsky, I.; Lambrigtsen, B.; Tanner, A.; Vese, L. Efficient deconvolution and super-resolution methods in microwave imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4273–4283.

2. Rudin, L.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Physica D 1992, 60, 259–268.

3. Glowinski, R.; Marrocco, A. Sur l’approximation, par éléments finis d’ordre un, et la résolution, par pénalisation-dualité, d’une classe de problèmes de Dirichlet non linéaires. Revue Française d’Automatique, Informatique, Recherche Opérationnelle. Analyse Numérique 1975, 9, 41–76.

4. Gabay, D.; Mercier, B. A dual algorithm for the solution of nonlinear variational problems via finite element approximations. Comput. Math. Appl. 1976, 2, 17–40.

5. Glowinski, R.; Lions, J.L.; Tremolieres, R. Numerical Analysis of Variational Inequalities; North-Holland: Amsterdam, The Netherlands; New York, NY, USA; Oxford, UK, 1981.

6. Wang, Y.; Yang, J.; Yin, W.; Zhang, Y. A new alternating minimization algorithm for total variation image reconstruction. SIAM J. Imaging Sci. 2008, 1, 248–272.

7. Yang, J.; Yin, W.; Zhang, Y.; Wang, Y. A fast algorithm for edge-preserving variational multichannel image restoration. SIAM J. Imaging Sci. 2009, 2, 569–592.

8. Yang, J.; Zhang, Y.; Yin, W. An efficient TVL1 algorithm for deblurring multichannel images corrupted by impulsive noise. SIAM J. Sci. Comput. 2009, 31, 2842–2865.

9. Goldstein, T.; Osher, S. The split Bregman method for L1-regularized problems. SIAM J. Imaging Sci. 2009, 2, 323–343.

10. Yang, J.; Zhang, Y.; Yin, W. A fast alternating direction method for TVL1-L2 signal reconstruction from partial Fourier data. IEEE J. Sel. Top. Signal Process. 2010, 4, 288–297.

11. Guo, W.; Yin, W. Edge guided reconstruction for compressive imaging. SIAM J. Imaging Sci. 2012, 5, 809–834.

12. Khaleghi, B.; Khamis, A.; Karray, F.; Razavi, S. Multisensor data fusion: A review of the state-of-the-art. Inf. Fusion 2013, 14, 28–44.

13. Atrey, P.K.; Hossain, M.A.; El Saddik, A.; Kankanhalli, M.S. Multimodal fusion for multimedia analysis: A survey. Multimedia Syst. 2010, 16, 345–379.

14. Yuksel, S.E.; Wilson, J.N.; Gader, P.D. Twenty years of mixture of experts. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1177–1193.

15. Kittler, J.; Hatef, M.; Duin, R.P.W.; Matas, J. On combining classifiers. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 226–239.

16. Vergara, L.; Soriano, A.; Safont, G.; Salazar, A. On the fusion of non-independent detectors. Digit. Signal Process. 2016, 50, 24–33.

17. Rodger, J.A. Toward reducing failure risk in an integrated vehicle health maintenance system: A fuzzy multi-sensor data fusion Kalman filter approach for IVHMS. Expert Syst. Appl. 2012, 39, 9821–9836.

18. Berliner, L.; Wikle, C.; Milliff, R. Multiresolution wavelet analyses in hierarchical Bayesian turbulence models. In Bayesian Inference in Wavelet-Based Models; Müller, P., Vidakovic, B., Eds.; Springer: New York, NY, USA, 1999; Volume 141.

19. Wikle, C.K.; Milliff, R.F.; Nychka, D.; Berliner, L.M. Spatiotemporal hierarchical Bayesian modeling: Tropical ocean surface winds. J. Am. Stat. Assoc. 2001, 96, 382–397.

20. Banerjee, S.; Gelfand, A.E.; Finley, A.O.; Sang, H. Gaussian predictive process models for large spatial data sets. J. R. Stat. Soc. Ser. B 2008, 70, 825–848.

21. Cressie, N.; Johannesson, G. Fixed rank kriging for very large spatial data sets. J. R. Stat. Soc. Ser. B 2008, 70, 209–226.

22. Cressie, N.; Shi, T.; Kang, E.L. Fixed rank filtering for spatio-temporal data. J. Comput. Graph. Stat. 2010, 19, 724–745.

23. Nguyen, H.; Katzfuss, M.; Cressie, N.; Braverman, A. Spatio-temporal data fusion for very large remote sensing datasets. Technometrics 2014, 56, 174–185.

24. Yin, W.; Osher, S.; Goldfarb, D.; Darbon, J. Bregman iterative algorithms for L1-minimization with applications to compressed sensing. SIAM J. Imaging Sci. 2008, 1, 143–168.

25. Osher, S.; Burger, M.; Goldfarb, D.; Xu, J.; Yin, W. An iterative regularization method for total variation-based image restoration. Multiscale Model. Simul. 2005, 4, 460–489.

26. Donoho, D.L.; Johnstone, I.M. Adapting to unknown smoothness via wavelet shrinkage. J. Am. Stat. Assoc. 1995, 90, 1200–1224.

27. Wang, Y.; Yin, W.; Zhang, Y. A Fast Algorithm for Image Deblurring with Total Variation Regularization; CAAM Technical Report TR07-10; Rice University: Houston, TX, USA, 2007.

28. Liu, C.; Zipser, E.J.; Cecil, D.J.; Nesbitt, S.W.; Sherwood, S. A cloud and precipitation feature database from nine years of TRMM observations. J. Appl. Meteorol. Climatol. 2008, 47, 2712–2728.

29. Huffman, G.J.; Adler, R.F.; Bolvin, D.T.; Gu, G.J.; Nelkin, E.J.; Bowman, K.P.; Hong, Y.; Stocker, E.F.; Wolff, D.B. The TRMM multisatellite precipitation analysis (TMPA): Quasi-global, multiyear, combined-sensor precipitation estimates at fine scales. J. Hydrometeorol. 2007, 8, 38–55.

30. Kidd, C.; Kniveton, D.R.; Todd, M.C.; Bellerby, T.J. Satellite rainfall estimation using combined passive microwave and infrared algorithms. J. Hydrometeorol. 2003, 4, 1088–1104.

31. Behrangi, A.; Hsu, K.; Imam, B.; Sorooshian, S.; Huffman, G.J.; Kuligowski, R.J. PERSIANN-MSA: A precipitation estimation method from satellite-based multispectral analysis. J. Hydrometeorol. 2009, 10, 1414–1429.

32. Zhang, J.; Howard, K.; Langston, C.; Kaney, B.; Qi, Y.; Tang, L.; Grams, H.; Wang, Y.; Cocks, S.; Martinaitis, S.; et al. Multi-radar multi-sensor (MRMS) quantitative precipitation estimation: Initial operating capabilities. Bull. Am. Meteorol. Soc. 2016, 97, 621–638.

| Product | Relative Bias (%) | CC | MAE (mm/h) | RMSE (mm/h) |
|---|---|---|---|---|
| AMSU-B | 2.58 | 0.20 | 2.25 | 4.11 |
| AVHRR | 185.95 | 0.26 | 3.72 | 5.03 |
| Fusion product | −1.78 | 0.29 | 1.44 | 3.21 |
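The four scores in the table can be computed along the lines sketched below. The excerpt does not state the exact definitions, the reference dataset, or the pixel-screening rules behind the table, so this is a minimal sketch under common conventions (an assumption): relative bias as 100 × (Σ estimate − Σ reference) / Σ reference, Pearson correlation coefficient, and pixel-wise MAE and RMSE in mm/h. The helper name `evaluation_metrics` and the toy fields are hypothetical.

```python
import numpy as np


def evaluation_metrics(estimate, reference):
    """Relative bias (%), Pearson CC, MAE and RMSE of an estimate vs. a reference.

    Hypothetical helper using common definitions; both inputs are rain-rate
    fields in mm/h on the same grid. Pixels that are non-finite (e.g. NaN)
    in either field are dropped from every score.
    """
    est = np.asarray(estimate, dtype=float).ravel()
    ref = np.asarray(reference, dtype=float).ravel()
    valid = np.isfinite(est) & np.isfinite(ref)
    est, ref = est[valid], ref[valid]
    diff = est - ref
    return {
        # Total (volume) bias relative to the reference total, in percent.
        "relative_bias_pct": float(100.0 * diff.sum() / ref.sum()),
        # Pearson correlation between the two fields.
        "cc": float(np.corrcoef(est, ref)[0, 1]),
        "mae_mm_h": float(np.mean(np.abs(diff))),
        "rmse_mm_h": float(np.sqrt(np.mean(diff ** 2))),
    }


# Toy fields (mm/h); the last pixel is missing in the estimate.
estimate = np.array([1.0, 2.0, 3.0, 4.0, np.nan])
reference = np.array([1.0, 2.0, 3.0, 2.0, 5.0])
print(evaluation_metrics(estimate, reference))
```

For the toy fields this gives a relative bias of 25%, a CC of about 0.63, an MAE of 0.5 mm/h and an RMSE of 1.0 mm/h. Evaluating each of the AMSU-B, AVHRR and fusion fields against the same reference and valid-pixel mask keeps the rows of such a table comparable.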

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yanovsky, I.; Behrangi, A.; Wen, Y.; Schreier, M.; Dang, V.; Lambrigtsen, B. Enhanced Resolution of Microwave Sounder Imagery through Fusion with Infrared Sensor Data. Remote Sens. 2017, 9, 1097. https://doi.org/10.3390/rs9111097
