1. Introduction

Hyperspectral images (HSI) have become a well-established source of information in remote sensing and have been used in various applications such as environmental monitoring, agriculture and military applications, among others. Typically, however, HSI have a limited spatial resolution, while many practical applications require images with both a high spectral and a high spatial resolution. To enhance the spatial resolution of HSI, different techniques have recently been introduced [1,2,3]. Most techniques are based on the fusion of the HSI with a multispectral image (MSI) of the same scene that has a higher spatial resolution. These fusion methods can generally be divided into two main groups: spectral unmixing based and sparse representation based approaches.

In spectral unmixing (SU) based fusion methods, the original images are decomposed into endmember and abundance fraction matrices [4,5]. The endmember matrix is extracted from the available low resolution HSI (LRHSI), and the abundance fractions are estimated from the MSI. For example, in [5], the endmember matrix is first extracted from the HSI using vertex component analysis (VCA) [6], after which coupled non-negative matrix factorization (CNMF) is applied to alternately update the abundance fractions from the MSI and the endmember spectra from the HSI. In [7], the abundance fractions are estimated by formulating a convex subspace-based regularization problem. Another method based on spectral unmixing is introduced in [8]. In this approach, the endmember signatures and abundances are jointly estimated from the observed images, and the optimization with respect to the endmember signatures and the abundances is solved using the alternating direction method of multipliers (ADMM).
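To make the linear mixing model behind these methods concrete, the following is a minimal NumPy sketch, assuming that the endmember matrix `E` has already been extracted from the LRHSI (e.g., by VCA) and that the spectral response `R` of the MSI sensor is known. It estimates nonnegative abundances from the MSI with Lee-Seung multiplicative updates while `E` is held fixed. This is a simplified stand-in for one half of an alternating scheme such as CNMF [5], not a reimplementation of it; the function name and its parameters are hypothetical.

```python
import numpy as np

def estimate_abundances_from_msi(M, E, R, n_iter=500, eps=1e-12, seed=0):
    """Nonnegative abundances A such that M is approximately (R @ E) @ A.

    M : (m_bands, n_pixels) high resolution MSI pixels
    E : (h_bands, p) endmember spectra extracted from the LRHSI (kept fixed)
    R : (m_bands, h_bands) assumed spectral response of the MSI sensor
    """
    rng = np.random.default_rng(seed)
    ER = R @ E                                   # endmembers as seen in the MSI bands
    A = rng.random((E.shape[1], M.shape[1]))     # nonnegative random initialization
    for _ in range(n_iter):
        # Multiplicative update for min ||M - ER A||_F^2 subject to A >= 0;
        # it keeps A nonnegative and does not increase the residual.
        A *= (ER.T @ M) / (ER.T @ ER @ A + eps)
    return A
```

Once `A` is available, the fused image is simply `E @ A`: the endmember spectra of the HSI placed at the spatial resolution of the MSI.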

Recently, a spectral resolution enhancement method (SREM) for remotely sensed MSI has been introduced that uses auxiliary multispectral/hyperspectral data [9]. In this method, the spectra of a number of different materials are extracted from both the MSI and the HSI data. Then, a set of transformation matrices is generated based on the linear relationships between the MSI and HSI spectra of specific materials. In [10], a computationally efficient algorithm for the fusion of HSI and MSI based on spectral unmixing (CoEf-MHI) is described. The CoEf-MHI algorithm incorporates the spatial details of the MSI into the HSI without introducing spectral distortions. To achieve this, it first spatially upsamples the input HSI to the spatial resolution of the input MSI by bilinear interpolation, and then independently refines each pixel of the resulting image by linearly combining the MSI and HSI pixels in its neighborhood. Similarly, based on the fact that neighboring pixels normally share fractions of the same underlying materials, Bieniarz et al. [11] employed a jointly sparse model to perform the unmixing of these neighboring pixels. In general, the use of spectral unmixing reduces the spectral distortion in the reconstructed images. However, the gain in spatial resolution is rather limited in comparison with sparse representation based methods.
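The first step of CoEf-MHI, bilinear upsampling of the HSI to the MSI grid, can be sketched as follows. This is only an illustration of that step under an assumed pixel-centre alignment between the two grids and an integer resolution ratio `factor`; the neighborhood refinement of [10] is not reproduced because its weights are not specified here, and the function name is hypothetical.

```python
import numpy as np

def bilinear_upsample(cube, factor):
    """Bilinearly upsample a (bands, h, w) cube by an integer factor.

    Output pixel centres are mapped back onto the input grid (pixel-centre
    alignment, an assumption) and clipped at the image borders.
    """
    b, h, w = cube.shape
    H, W = h * factor, w * factor
    y = np.clip((np.arange(H) + 0.5) / factor - 0.5, 0, h - 1)
    x = np.clip((np.arange(W) + 0.5) / factor - 0.5, 0, w - 1)
    y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy = (y - y0)[None, :, None]          # vertical interpolation weights
    wx = (x - x0)[None, None, :]          # horizontal interpolation weights
    top = cube[:, y0][:, :, x0] * (1 - wx) + cube[:, y0][:, :, x1] * wx
    bottom = cube[:, y1][:, :, x0] * (1 - wx) + cube[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bottom * wy
```

Every output value is a convex combination of four input pixels of the same band, so the upsampled spectra contain no new spectral information; this is why a subsequent refinement step with the MSI is needed.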

In sparse representation (SR) based fusion methods, the self-similarity property of natural images is exploited to reconstruct a high resolution HSI (HRHSI). These methods involve the construction of a dictionary from the available data and a sparse coding step, in which the reconstruction is represented as a (linear) combination of a small number of dictionary atoms. Different SR-based methods for the fusion of HSI and MSI have recently been introduced. In [12], a sparse pansharpening method for the fusion of remote sensing images is introduced. This method uses two training dictionaries: one contains patches from a high spatial resolution image, while the other consists of patches from a lower-resolution image. In [13], a dictionary is constructed from the HSI to create a spectral basis, after which a greedy pursuit algorithm is applied to compute a sparse code from the MSI. In [14], the spectral basis is obtained from a singular value decomposition of the HSI, and the sparse code is estimated from the MSI using orthogonal matching pursuit (OMP). Another efficient SR-based method, a Bayesian sparse (BS) approach, is introduced in [15]. First, principal component analysis (PCA) is applied to the LRHSI to reduce the spectral dimensionality. Then, a dictionary is constructed from the LRHSI and the MSI, and the fusion problem is solved via an alternating optimization method. Typically, SR-based methods generate an HRHSI with a higher spatial resolution than spectral unmixing based methods, but with more spectral distortion. In a recent experimental study, ten state-of-the-art HSI-MSI fusion methods were compared by assessing their fusion performance quantitatively and visually, as well as indirectly by performing classification on the fused results [16]. The method that performed best most consistently across all tests was HySure [7].
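The spectral-basis-plus-OMP idea described for [14] can be sketched as below. The sketch assumes a known MSI spectral response `R`, takes the basis as the leading left singular vectors of the LRHSI pixel matrix, codes one MSI pixel over the basis projected into the MSI bands, and maps the code back to the full spectrum. The names `spectral_basis`, `omp` and `fuse_pixel` are hypothetical, and this is not the exact algorithm of [14].

```python
import numpy as np

def spectral_basis(lr_pixels, k):
    """Leading k left singular vectors of the (h_bands, n) LRHSI pixel matrix."""
    U, _, _ = np.linalg.svd(lr_pixels, full_matrices=False)
    return U[:, :k]

def omp(D, y, n_nonzero, tol=1e-10):
    """Orthogonal matching pursuit of y over the unit-norm columns of D."""
    coef = np.zeros(D.shape[1])
    residual, support, sol = y.astype(float), [], None
    for _ in range(n_nonzero):
        if np.linalg.norm(residual) <= tol * np.linalg.norm(y):
            break                                        # y already represented
        k = int(np.argmax(np.abs(D.T @ residual)))       # most correlated atom
        if k in support:
            break
        support.append(k)
        sol, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ sol               # orthogonal to chosen atoms
    if support:
        coef[support] = sol
    return coef

def fuse_pixel(msi_pixel, U, R, n_nonzero):
    """Code an MSI pixel over R @ U, then rebuild the full spectrum with U."""
    D = R @ U
    norms = np.linalg.norm(D, axis=0)
    c = omp(D / norms, msi_pixel, n_nonzero) / norms     # undo atom normalization
    return U @ c
```

When the high resolution spectra lie in the subspace spanned by the LRHSI, and `R @ U` has full column rank, the fused pixel reproduces the true spectrum; in practice both conditions hold only approximately.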

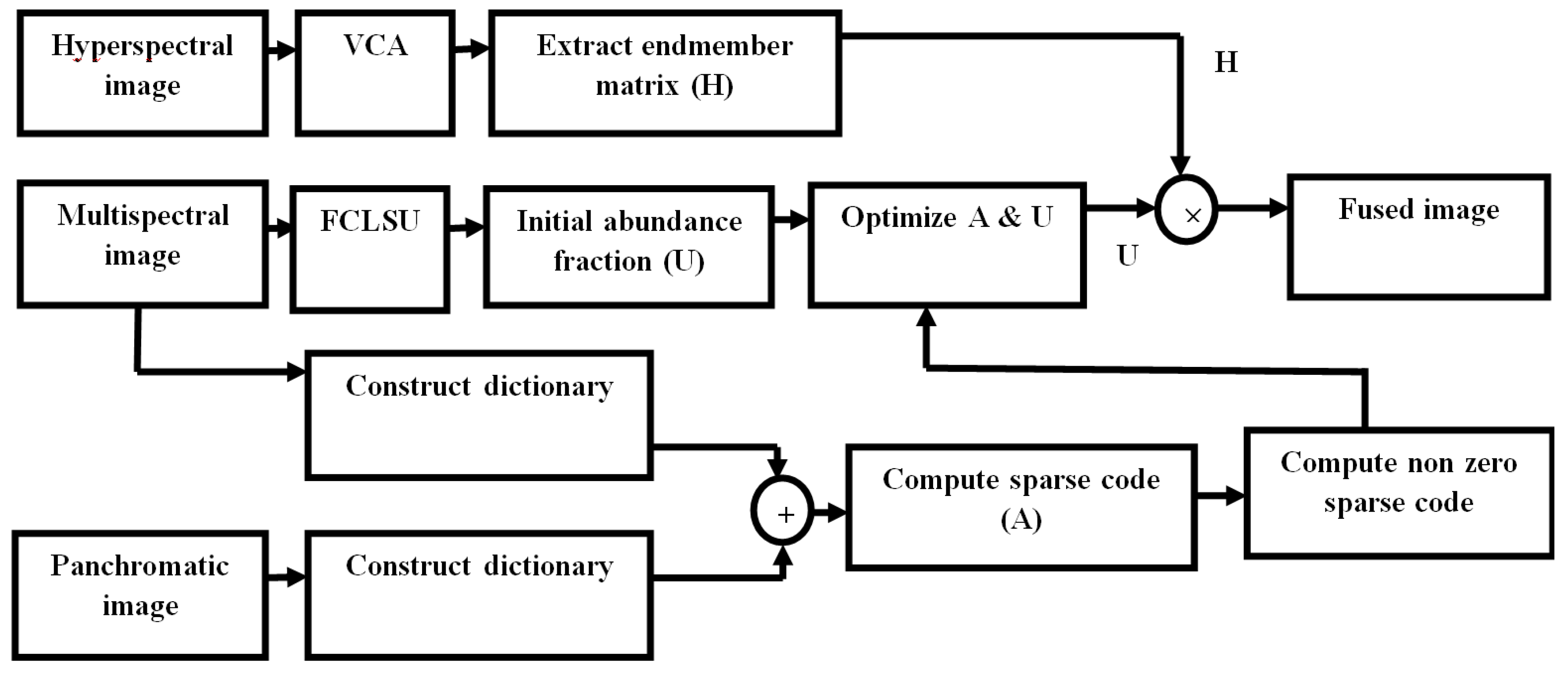

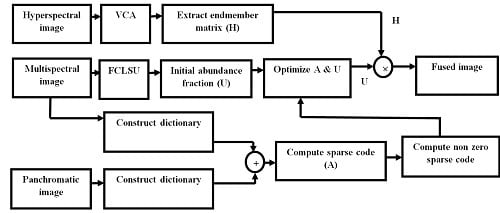

Recently, we introduced a fusion method based on the combination of spectral unmixing and sparse coding (SUSC) [17]. This method showed better performance than the spectral unmixing [18] and sparse coding [13] methods. In this paper, we improve on this method through the following contributions:

Rather than using the sparse representation method of [13], we use the BS method [15]. In [19], BS has been shown to be superior to popular fusion techniques such as the modulation transfer function generalized Laplacian pyramid (MTF-GLP) [20], the modulation transfer function generalized Laplacian pyramid with high-pass modulation (MTF-GLP-HPM) [21] and guided filter PCA (GFPCA) [22].

Spectral unmixing is introduced in the BS procedure. In particular, SU is applied and the endmember matrix is directly extracted from the LRHSI. The abundance fractions are then estimated using BS. In fact, the SU procedure replaces the PCA dimensionality reduction step of the original BS.

Another modification concerns the dictionary used for the sparse representation. In the original BS method, this dictionary is constructed from the MSI and HSI. In the proposed method, we use two weighted dictionaries as a sparse regularizer, constructed from the MSI and from high resolution panchromatic (PAN) or synthetic aperture radar (SAR) images. The extra dictionary improves the spatial resolution of the reconstruction.

Compared to the SUSC method, where a single dictionary is estimated for the whole abundance matrix, the proposed method estimates a dictionary for each endmember separately. In addition, the proposed method takes into account the Gaussian noise in the HSI and MSI, thereby reducing the effect of noise on the fusion result.
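A common way to express these ingredients is a linear observation model in which the HRHSI is written as endmembers times abundances, the LRHSI is a spatially degraded version of it and the MSI a spectrally degraded version, each with additive Gaussian noise. The sketch below is written under that assumption and is not necessarily the paper's own formulation: spatial degradation is simplified to block averaging without blur, `R` is an assumed spectral response, and all names are hypothetical. It shows how known noise levels `sigma_h` and `sigma_m` enter the Gaussian data-fit term as inverse-variance weights.

```python
import numpy as np

def spatial_degrade(X, factor):
    """Block-average a (bands, H, W) cube by an integer factor (no blur, an assumption)."""
    b, H, W = X.shape
    return X.reshape(b, H // factor, factor, W // factor, factor).mean(axis=(2, 4))

def simulate_observations(A, E, R, factor, sigma_h, sigma_m, rng):
    """LRHSI and MSI generated from abundances A (p, H, W) with Gaussian noise."""
    X = np.tensordot(E, A, axes=1)                 # HRHSI, (h_bands, H, W)
    Yh = spatial_degrade(X, factor)
    Ym = np.tensordot(R, X, axes=1)                # MSI, (m_bands, H, W)
    return (Yh + sigma_h * rng.standard_normal(Yh.shape),
            Ym + sigma_m * rng.standard_normal(Ym.shape))

def data_term(A, E, R, Yh, Ym, factor, sigma_h, sigma_m):
    """Gaussian negative log-likelihood (up to constants) of both observations."""
    X = np.tensordot(E, A, axes=1)
    rh = Yh - spatial_degrade(X, factor)
    rm = Ym - np.tensordot(R, X, axes=1)
    # The noisier observation receives the smaller weight 1 / (2 sigma^2).
    return 0.5 * np.sum(rh ** 2) / sigma_h ** 2 + 0.5 * np.sum(rm ** 2) / sigma_m ** 2
```

In a full method, this data term would be minimized over the abundances together with the dictionary-based sparse regularizer; the sketch covers only the noise-weighted fit.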

By combining SU and BS, the proposed method (SUBS) simultaneously increases the spatial resolution and decreases the spectral distortion. The proposed method is applied to real datasets and compared with the spectral unmixing based method CNMF [5], the sparse representation methods SC [13] and BS [15], and the combined method SUSC [17]. The remainder of the paper is organized as follows. The proposed method is explained in Section 2. Experimental results are shown in Section 3. Section 4 presents a discussion, and Section 5 gives some concluding remarks.

4. Discussion

In this paper, a new method for the spatial resolution enhancement of HSI is introduced. The method is based on the fusion of the HSI with an MSI, combining spectral unmixing (SU) and sparse coding, thereby combining the advantages of SU methods (i.e., reduced spectral distortion) and sparse coding (i.e., improved spatial resolution). The proposed method (SUBS) is based on a spectral unmixing procedure in which the endmember matrix and the abundance fractions are estimated from the HSI and from an MSI of the same scene, respectively. The unmixing procedure is merged with a Bayesian sparse (BS) coding method, similar to the one used in [15]. In the proposed method, two weighted dictionaries are constructed from the MSI and from a number of high resolution PAN or SAR images.

In the experimental section, the proposed method has been compared with the spectral unmixing based fusion method CNMF [5], the sparse coding based fusion methods SC [13] and Bayesian sparse coding [15], and an earlier developed method (SUSC) that combines spectral unmixing and sparse coding [17].

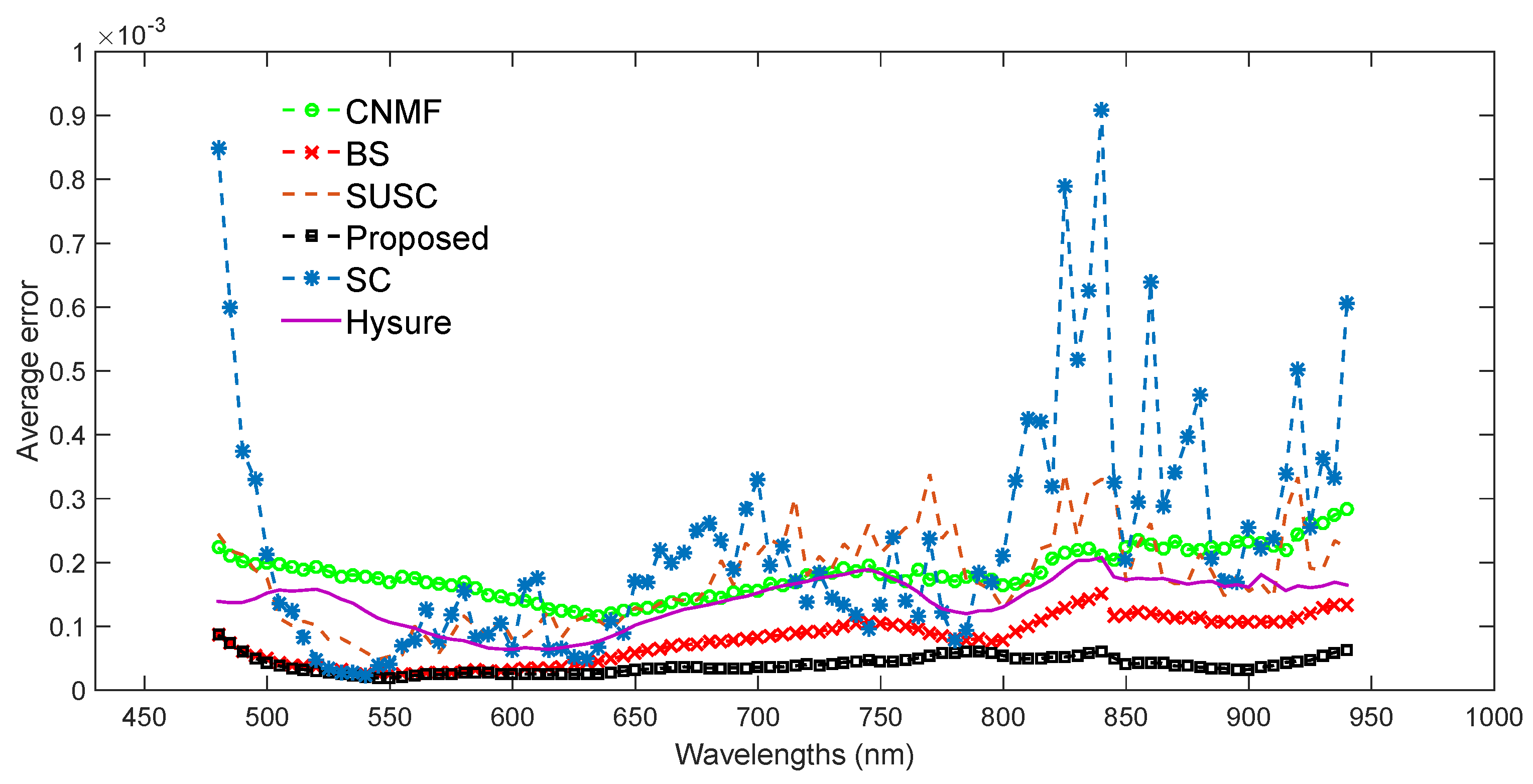

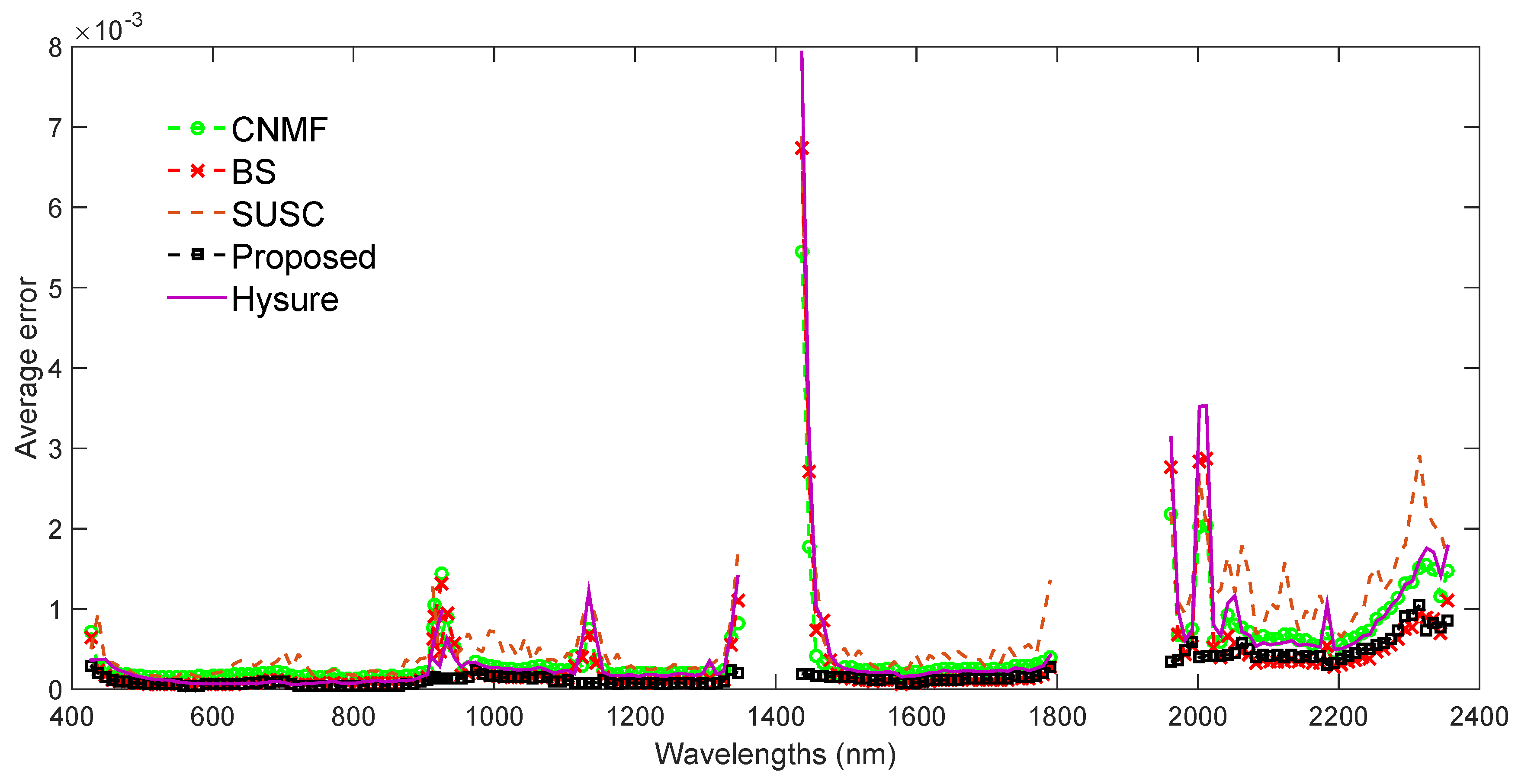

From the experimental comparison on two datasets, it can be concluded that the proposed approach outperforms the other fusion methods. Its superior performance can be attributed to an improved spatial resolution (as measured by SNR and UIQI) compared to the unmixing-based method (CNMF), and a reduced spectral distortion (as measured by SAM, ERGAS and DD) compared to the sparse coding based methods (SC and BS).
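For reference, some of the quality indices named above can be sketched with their commonly used definitions; the paper's exact conventions may differ. Images are assumed to be `(bands, H, W)` arrays, `scale` in ERGAS is the assumed resolution ratio between the LRHSI and the HRHSI (e.g., 4), and UIQI is computed globally per band and then averaged, a simplification of the original sliding-window definition. DD is omitted. All function names are hypothetical.

```python
import numpy as np

def sam_degrees(ref, est, eps=1e-12):
    """Mean spectral angle (degrees) between reference and estimated pixels."""
    r = ref.reshape(ref.shape[0], -1)
    e = est.reshape(est.shape[0], -1)
    cos = np.sum(r * e, axis=0) / (np.linalg.norm(r, axis=0) * np.linalg.norm(e, axis=0) + eps)
    return float(np.degrees(np.mean(np.arccos(np.clip(cos, -1.0, 1.0)))))

def ergas(ref, est, scale):
    """ERGAS = 100 / scale * sqrt(mean over bands of (RMSE_b / mean_b)^2)."""
    rmse = np.sqrt(np.mean((ref - est) ** 2, axis=(1, 2)))
    mu = np.mean(ref, axis=(1, 2))
    return float(100.0 / scale * np.sqrt(np.mean((rmse / mu) ** 2)))

def snr_db(ref, est):
    """Reconstruction SNR in dB: signal energy over error energy."""
    return float(10.0 * np.log10(np.sum(ref ** 2) / np.sum((ref - est) ** 2)))

def uiqi(ref, est):
    """Universal image quality index, computed per band over the whole image."""
    q = []
    for r, e in zip(ref, est):
        r, e = r.ravel(), e.ravel()
        mr, me = r.mean(), e.mean()
        cov = np.mean((r - mr) * (e - me))
        q.append(4 * cov * mr * me / ((r.var() + e.var()) * (mr ** 2 + me ** 2)))
    return float(np.mean(q))
```

Lower SAM and ERGAS, and higher SNR and UIQI (at most 1), indicate a better reconstruction. Note that SAM ignores a per-pixel scaling of the spectrum, whereas ERGAS and SNR do not.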

In general, the unmixing based CNMF performs better than the sparse coding based SC. The combined method SUSC produces images of higher quality than SC because of the combined use of sparse coding and spectral unmixing. The sparse coding based BS, however, performs better than SUSC. We see two reasons for this. First, the SC-based methods do not take into account the Gaussian noise model in the fusion process (see Equation (6)); second, they use two-dimensional dictionaries, i.e., the dictionaries are the same for all bands [17].

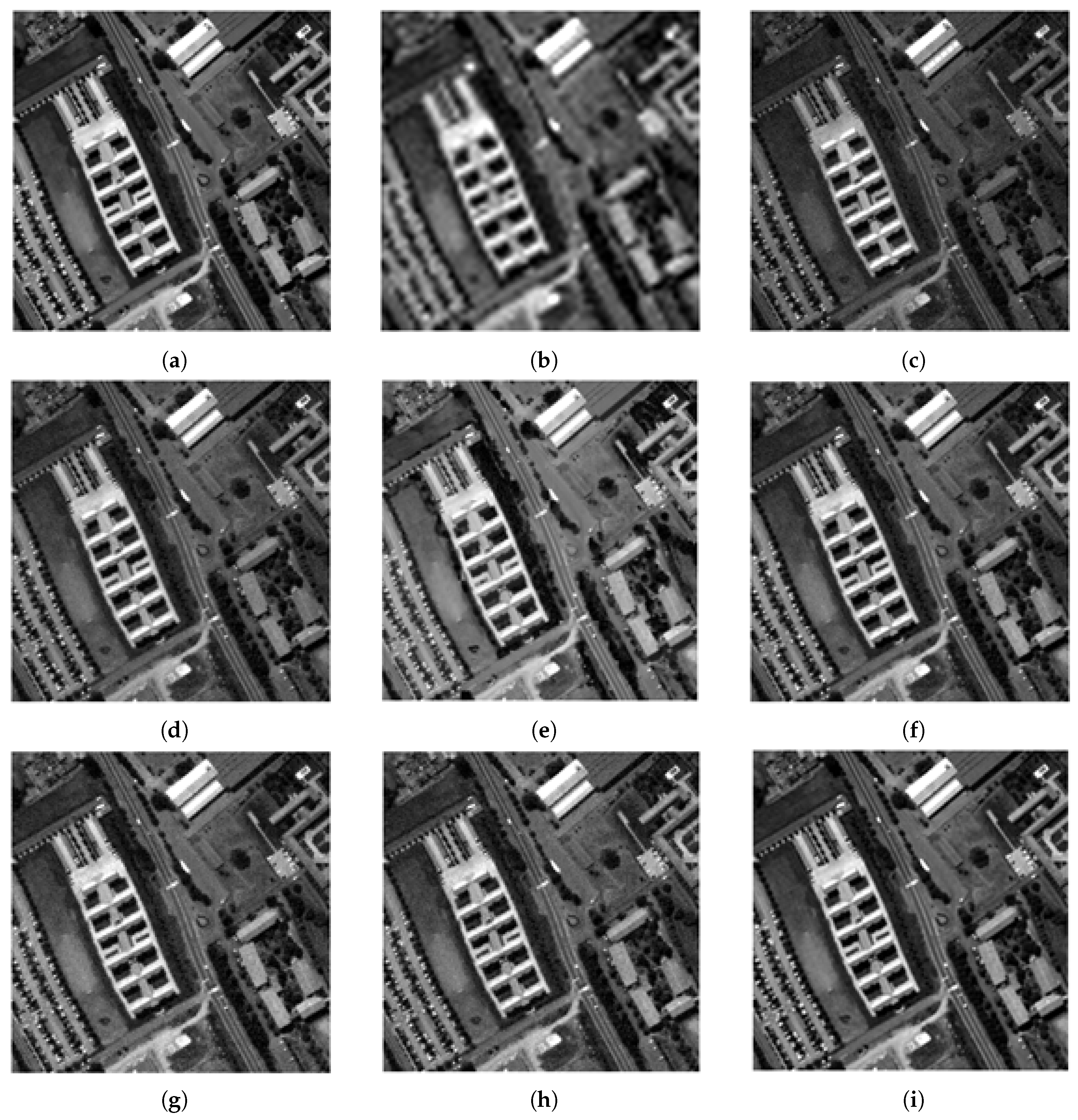

The proposed method (SUBS) shows the best performance, with reduced spectral distortion owing to the spectral unmixing procedure, and improved spatial resolution owing to the modified BS procedure. In fact, the images reconstructed by the proposed method are visually very close to the ground truth image. The squared errors between the ground truth and the reconstructed spectra of the Pavia and Shiraz datasets are also plotted. The average and max-min errors show that the proposed method has the lowest spectral errors of all methods, and that its errors are the least sensitive to the spectral band region. The spectral error of the SC method is very high because, in this method, the dictionary does not account for the spectral correlation of the HSI, which induces spectral distortion in the reconstructed images.
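The average and max-min error curves can be obtained per band as sketched below, assuming the per-pixel squared error is summarized over all pixels of each band; the function name and this particular summary are our assumptions, not necessarily how the plotted curves were produced.

```python
import numpy as np

def band_error_profile(ref, est):
    """Per-band mean, minimum and maximum squared error over all pixels.

    ref, est : (bands, H, W) ground truth and reconstructed images
    """
    sq = ((ref - est) ** 2).reshape(ref.shape[0], -1)   # (bands, pixels)
    return sq.mean(axis=1), sq.min(axis=1), sq.max(axis=1)
```

Plotting the three returned arrays against the band index gives an average curve with a min-max envelope; a flat, low curve indicates errors that are small and insensitive to the band region.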

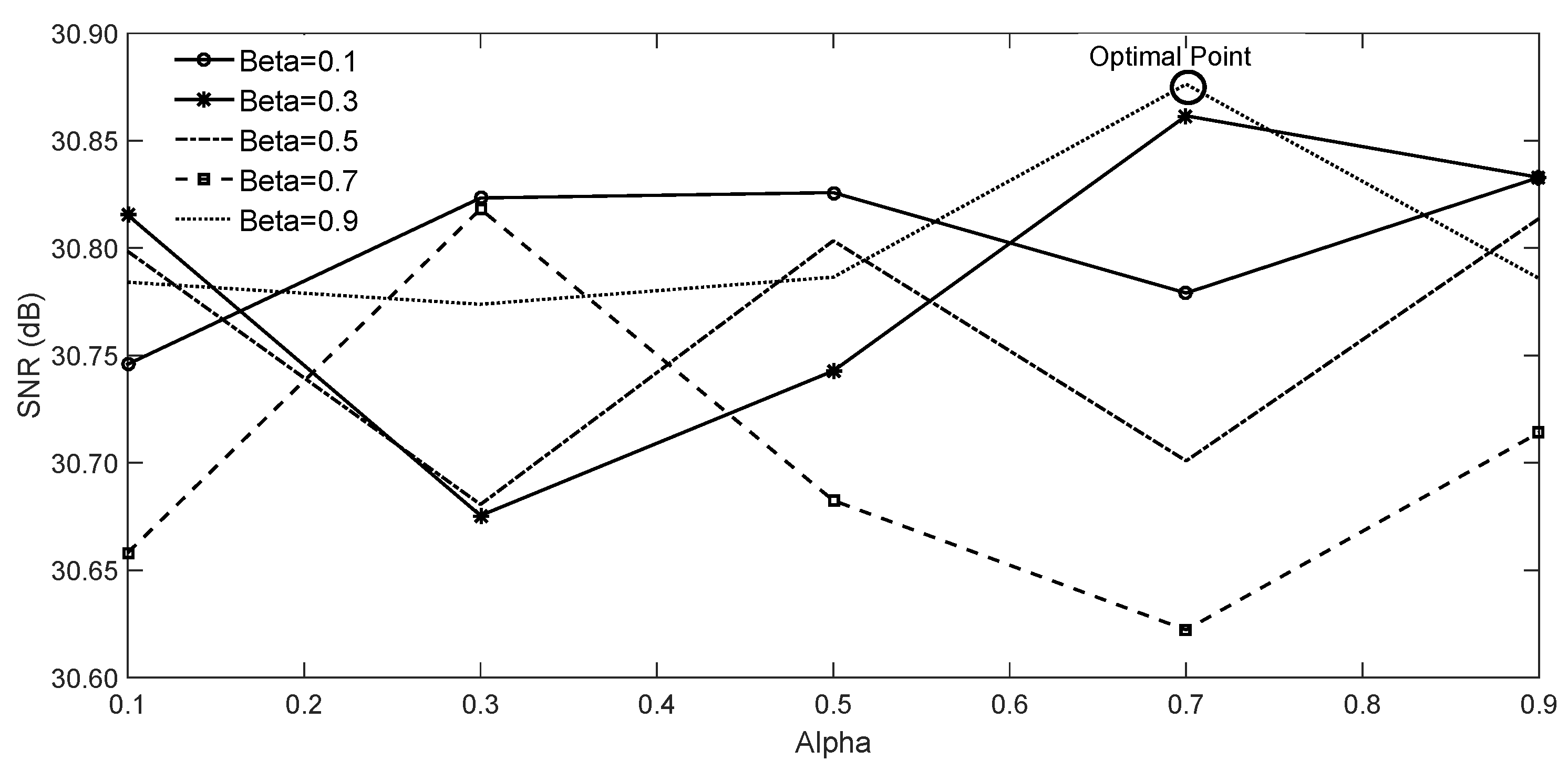

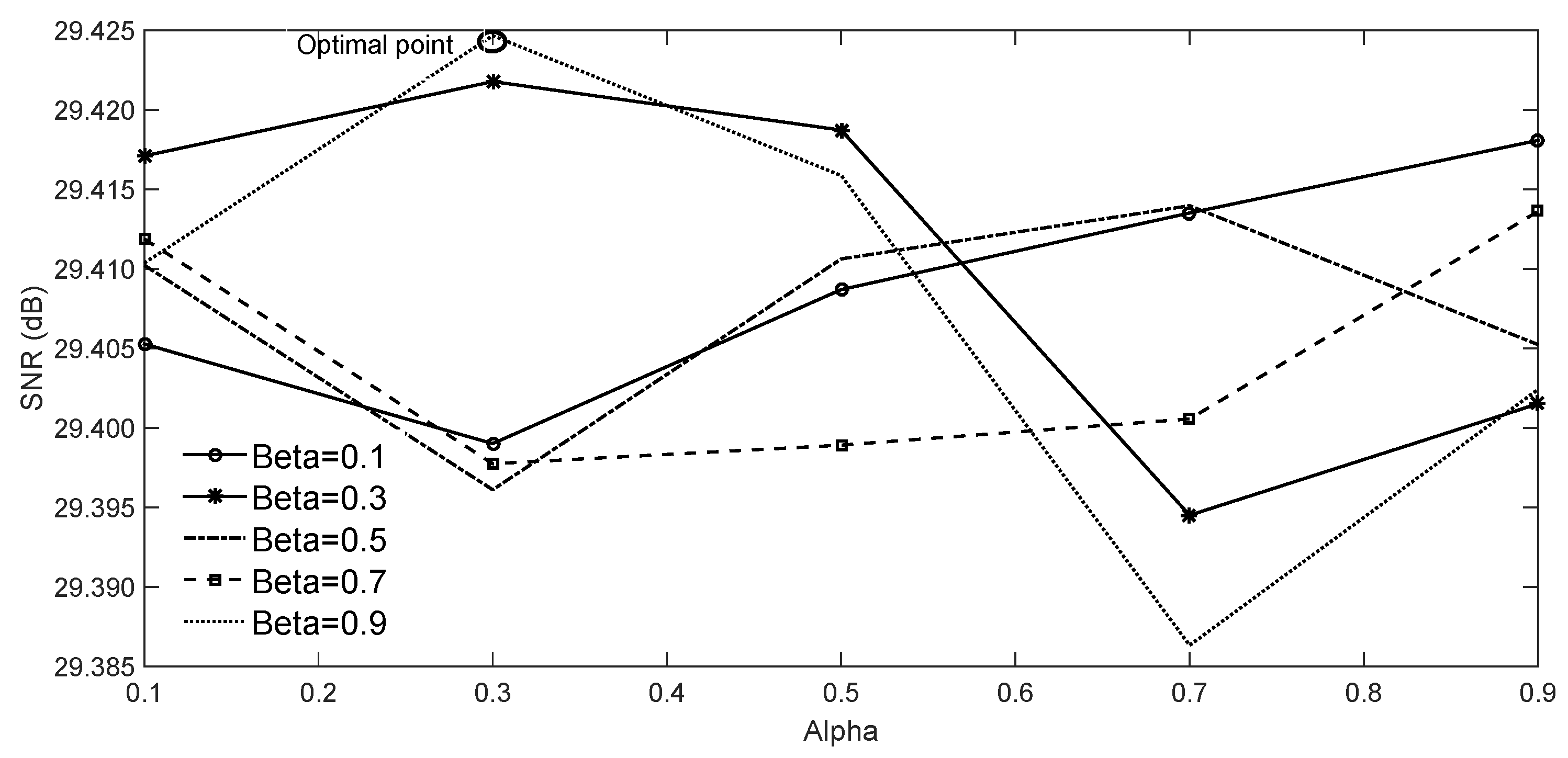

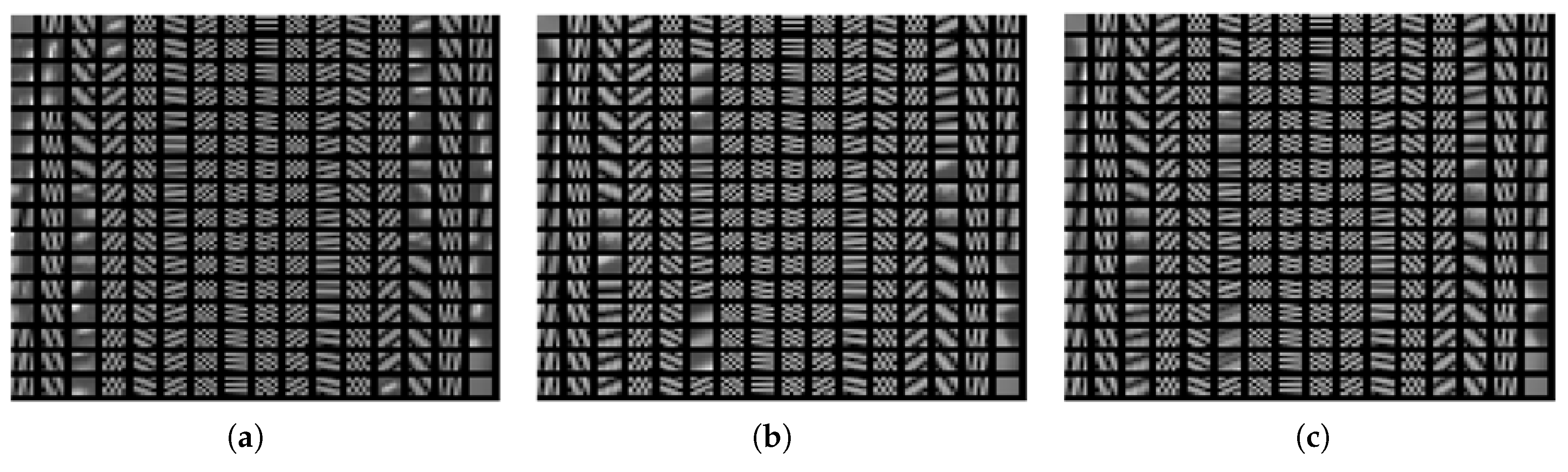

In general, there is no unique rule for selecting the dictionary size, i.e., the patch size and the number of atoms. The smaller the patches, the more objects the atoms can approximate. However, patches that are too small cannot properly capture textures, edges, etc. With a larger patch size, a larger number of atoms is required to guarantee overcompleteness, which increases the computational cost. In practice, the patch size is selected empirically. The figures of the learned dictionaries clearly illustrate the sparsity: some atoms are frequently present and represent quite common spatial details, while other atoms represent details that are characteristic of specific patches.
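The trade-off between patch size and number of atoms can be made concrete with a small sketch. A dictionary of `K` atoms for `p x p` patches is overcomplete when `K > p * p`, so the number of atoms needed for a fixed redundancy grows quadratically with the patch size. The helper names below, the non-overlapping `stride` in the usage, and the atom-usage statistic (the fraction of patches whose sparse code uses each atom) are illustrative assumptions.

```python
import numpy as np

def extract_patches(img, p, stride):
    """Vectorized p x p patches of a 2-D image, one patch per column."""
    H, W = img.shape
    cols = [img[i:i + p, j:j + p].ravel()
            for i in range(0, H - p + 1, stride)
            for j in range(0, W - p + 1, stride)]
    return np.array(cols).T                        # (p * p, n_patches)

def atoms_for_redundancy(p, redundancy):
    """Number of atoms K with K / (p * p) >= redundancy (overcomplete if > 1)."""
    return int(np.ceil(redundancy * p * p))

def atom_usage(codes):
    """Fraction of patches whose sparse code uses each atom; codes is (K, n)."""
    return np.count_nonzero(codes, axis=1) / codes.shape[1]
```

For example, a redundancy of 2 requires 50 atoms for 5 x 5 patches but 128 atoms for 8 x 8 patches. Sorting `atom_usage` of the learned codes separates the frequently used, generic atoms from the rarely used, patch-specific ones.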

Although image content can vary greatly from image to image, all images can be represented by a small number of structural primitives (e.g., edges, line segments and other elementary features). These microstructures are the same for all images. Dictionary learning and sparse coding rely on this observation by constructing a dictionary of such primitives from a number of high quality images (such as PAN and multispectral images) and using this dictionary to reconstruct a particular image from the smallest possible number of dictionary atoms. For the construction of the dictionary, the most important point is to select high quality images that represent the structural primitives well.
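As an illustration of learning such a dictionary of primitives from training patches, here is a minimal sketch using the method of optimal directions (MOD) with OMP sparse coding. MOD is chosen here only because it is short; it is not claimed to be the dictionary learning algorithm used in the proposed method. The initialization from random training patches and the re-seeding of unused atoms are our assumptions, and the function names are hypothetical.

```python
import numpy as np

def omp(D, y, n_nonzero, tol=1e-10):
    """Orthogonal matching pursuit of y over the unit-norm columns of D."""
    coef = np.zeros(D.shape[1])
    residual, support, sol = y.astype(float), [], None
    for _ in range(n_nonzero):
        if np.linalg.norm(residual) <= tol * np.linalg.norm(y):
            break
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:
            break
        support.append(k)
        sol, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ sol
    if support:
        coef[support] = sol
    return coef

def learn_dictionary_mod(Y, n_atoms, n_nonzero, n_iter=10, seed=0):
    """Learn unit-norm atoms D (m, n_atoms) and sparse codes C for patches Y (m, n)."""
    rng = np.random.default_rng(seed)
    D = Y[:, rng.choice(Y.shape[1], n_atoms, replace=False)].astype(float)
    D /= np.linalg.norm(D, axis=0, keepdims=True) + 1e-12
    for _ in range(n_iter):
        # Sparse coding step: each patch uses at most n_nonzero atoms.
        C = np.column_stack([omp(D, y, n_nonzero) for y in Y.T])
        # MOD update: least-squares dictionary for the current codes.
        D = Y @ np.linalg.pinv(C)
        dead = np.linalg.norm(D, axis=0) < 1e-12
        D[:, dead] = Y[:, rng.choice(Y.shape[1], int(dead.sum()))]  # re-seed unused atoms
        D /= np.linalg.norm(D, axis=0, keepdims=True) + 1e-12
    C = np.column_stack([omp(D, y, n_nonzero) for y in Y.T])
    return D, C
```

In practice, `Y` would hold vectorized (often mean-removed) patches from the high quality PAN, MSI or SAR training images, and `n_atoms` would exceed the patch dimension so that the dictionary is overcomplete.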

We have studied the effect of different types of high quality images, such as IKONOS, ALI and QuickBird PAN, MSI and SAR images and their combinations, on the fusion performance. The results show that the proposed method performs well in all cases, even when only one of the dictionaries is applied. Therefore, we conclude that, besides the MSI of the same scene, any of these unrelated images and their combinations can successfully be used for dictionary construction and leads to acceptable results, as long as the images are of sufficiently high spatial resolution. It should be emphasized that the PAN or SAR dictionaries can be learned offline, which is an important merit of the proposed method. We nevertheless suggest QuickBird as a viable option because it has the highest spatial resolution of the images considered (IKONOS, ALI and SAR).

It is worth noting that the output of the proposed method is a fused HSI, not an unmixing result. Although the endmembers are inferred from the LRHSI using VCA, the final HRHSI is obtained by multiplying the endmembers with the abundances estimated by the method. Since VCA is not robust and returns a different set of endmembers on each run, we ran the fusion algorithms 10 times. The fusion results changed only slightly, and the variance was small. Therefore, we conclude that if one or more endmembers are not inferred well by VCA, the effect on the fusion results will be minimal.
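One possible reason why the fused result is less sensitive to the endmembers than the unmixing itself is that only the product of endmembers and abundances is evaluated. The sketch below illustrates this under our own simplifying assumptions: a reordering of the endmembers, or more generally any invertible change of basis of the endmember subspace, can be absorbed by the abundances (the latter ignoring nonnegativity and sum-to-one constraints, which may prevent exact compensation in practice). It also shows how a score could be summarized over repeated runs. The data are random stand-ins and the function names are hypothetical.

```python
import numpy as np

def fused_from_unmixing(E, A):
    """HRHSI pixels: endmembers (bands, p) times abundances (p, pixels)."""
    return E @ A

def run_summary(scores):
    """Mean and sample standard deviation of a quality score over repeated runs."""
    s = np.asarray(scores, dtype=float)
    return float(s.mean()), float(s.std(ddof=1))

rng = np.random.default_rng(0)
E = rng.random((50, 4))                     # stand-in endmembers
A = rng.random((4, 100))                    # stand-in abundances
X = fused_from_unmixing(E, A)

# Same endmembers returned in another order: permuting the abundance rows
# accordingly leaves the fused image unchanged.
perm = rng.permutation(4)
X_perm = fused_from_unmixing(E[:, perm], A[perm])

# An invertible change of basis T of the endmember subspace is absorbed by
# the abundances when the constraints are ignored.
T = rng.random((4, 4)) + 4 * np.eye(4)      # diagonally dominant, hence invertible
X_T = fused_from_unmixing(E @ T, np.linalg.solve(T, A))
```

A small standard deviation from `run_summary` over the scores of the 10 runs is what a statement such as "the variance was small" would correspond to.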

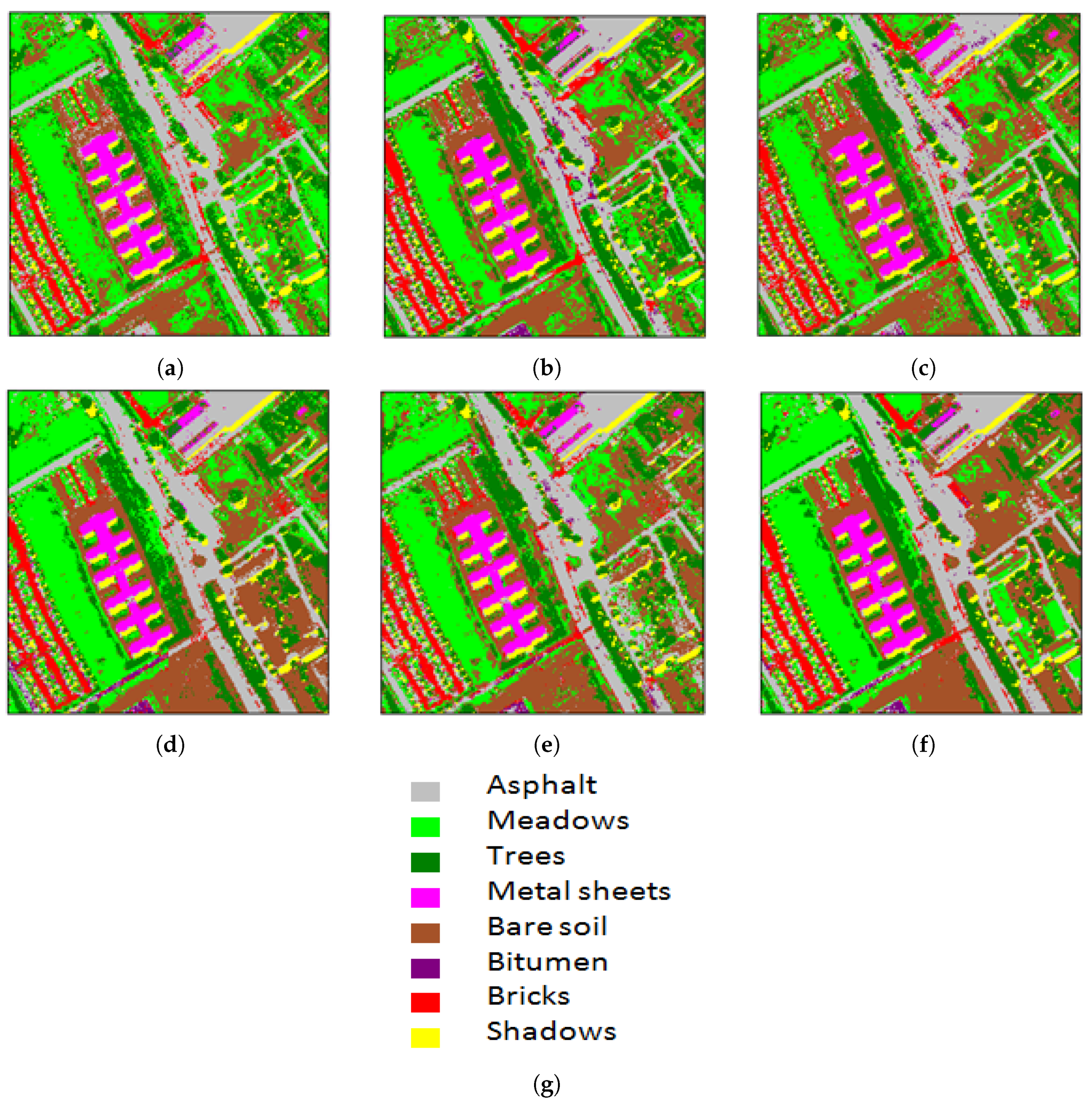

For the Pavia dataset, the various fusion methods are also compared by classifying the obtained fused results. The proposed method obtained the highest overall accuracy and kappa coefficient.
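Overall accuracy and the kappa coefficient are both computed from the confusion matrix of the classification map against the reference labels. A minimal sketch follows, assuming integer class labels `0..n_classes-1`; the function names are hypothetical.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows: reference class, columns: predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm

def overall_accuracy_and_kappa(cm):
    """Overall accuracy (observed agreement) and Cohen's kappa."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    p_o = np.trace(cm) / n                                   # overall accuracy
    p_e = (cm.sum(axis=1) @ cm.sum(axis=0)) / n ** 2         # chance agreement
    return float(p_o), float((p_o - p_e) / (1.0 - p_e))
```

For example, with reference labels `[0, 0, 1, 1]` and predictions `[0, 1, 1, 1]`, the overall accuracy is 0.75 and the chance agreement is 0.5, giving a kappa of 0.5. Kappa therefore discounts the agreement that would be expected by chance.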

All the algorithms have been implemented in MATLAB (Version R2014b, MathWorks, USA) on a computer with an Intel Core i7 central processor (ASUS, China) of 3.1 GHz, 8 GB RAM and a 64-bit operating system. Computing times for the proposed algorithm and the other algorithms are reported in Table 1 and Table 4. For the sparse coding based methods, the construction of the dictionaries and the estimation of the sparse codes take more time than the CNMF method. Moreover, in the proposed method, the abundance fractions and the sparse codes are iteratively updated, which makes the proposed method time-consuming.