Assessing Lodging Severity over an Experimental Maize (Zea mays L.) Field Using UAS Images †

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

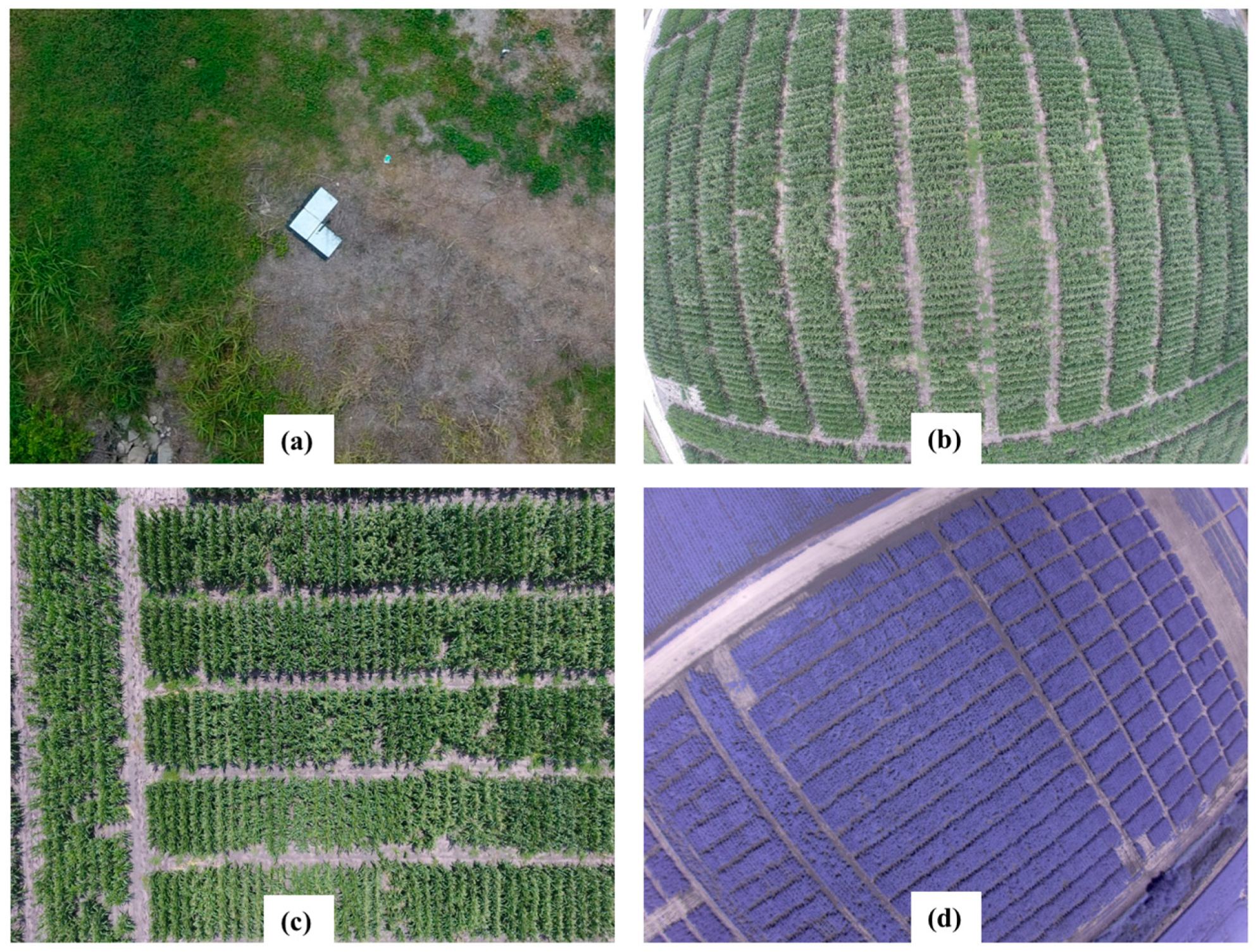

2.2. Image Collection from UAS Platforms

2.3. Field Data Collection

- (1) Plant stand counts. These were necessary to calculate the lodging rate. Stand counts are not constant within fields because of germination differences across varieties and field positions.

- (2) Number of lodged maize plants. Any maize stalks that had lain over due to environmental factors, with an approximate inclination of 60° from vertical, and that would not likely be processed by the combine were deemed lodged plants; this includes both root and stalk lodging.

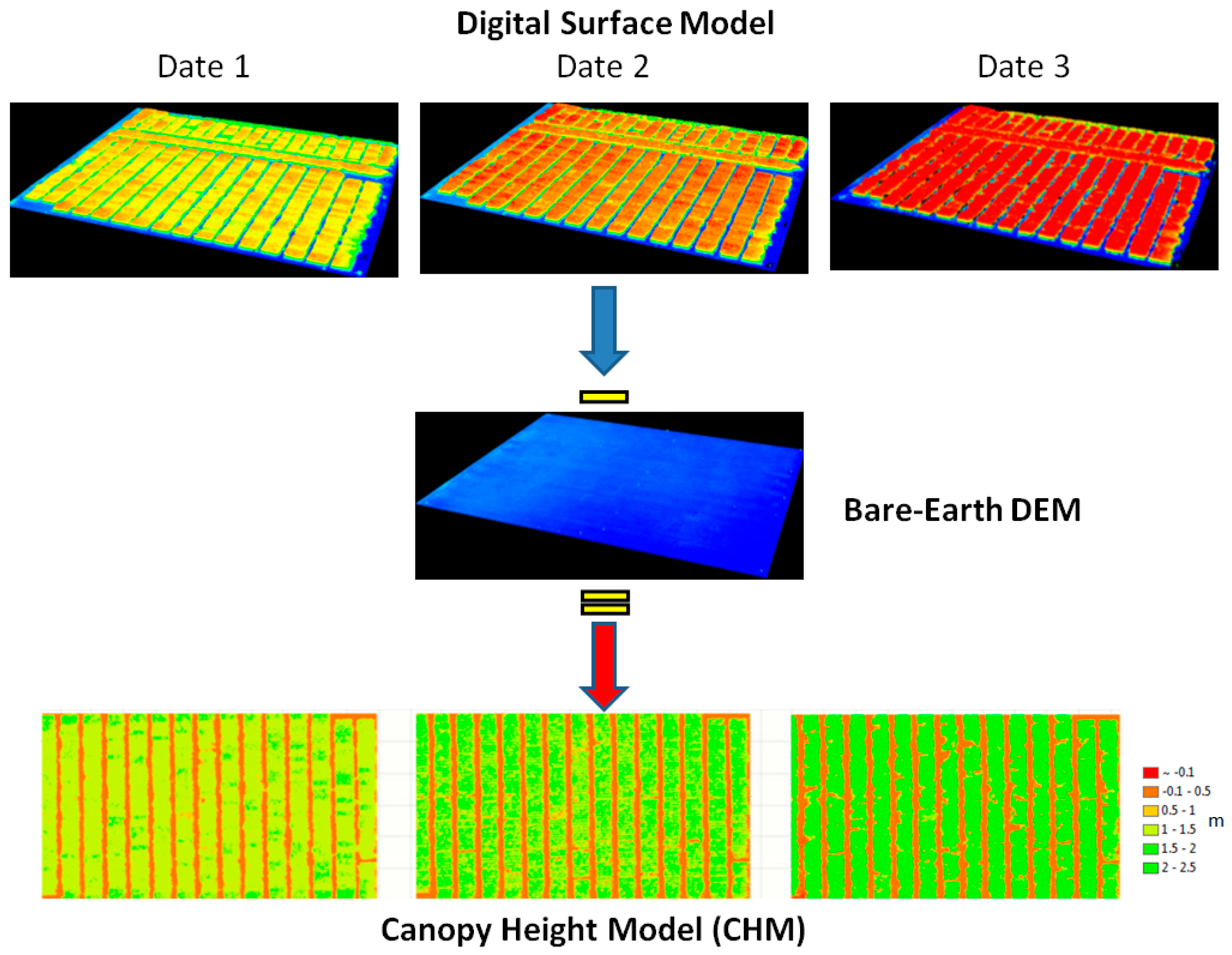
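
The two field measurements above combine into a per-row lodging rate. The sketch below assumes the rate is simply the number of lodged plants divided by the stand count; the function name and the example counts are hypothetical and are not taken from the field data.

```python
def lodging_rate(lodged_plants: int, stand_count: int) -> float:
    """Return the fraction of plants in a row that are lodged (0.0 to 1.0).

    Assumption: lodging rate = lodged plants / stand count for the same row.
    """
    if stand_count <= 0:
        raise ValueError("stand_count must be a positive integer")
    if not 0 <= lodged_plants <= stand_count:
        raise ValueError("lodged_plants must lie between 0 and stand_count")
    return lodged_plants / stand_count


# Hypothetical (lodged, stand count) pairs for two rows; illustrative only.
field_counts = {"row_a": (3, 24), "row_b": (0, 20)}
rates = {row: lodging_rate(lodged, stand)
         for row, (lodged, stand) in field_counts.items()}
```

Dividing by the per-row stand count rather than a nominal planting density matters here because, as noted above, stand counts vary with variety and field position.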

2.4. Canopy Height Model Generation

2.5. Plant Height Extraction from DSM

- (1)

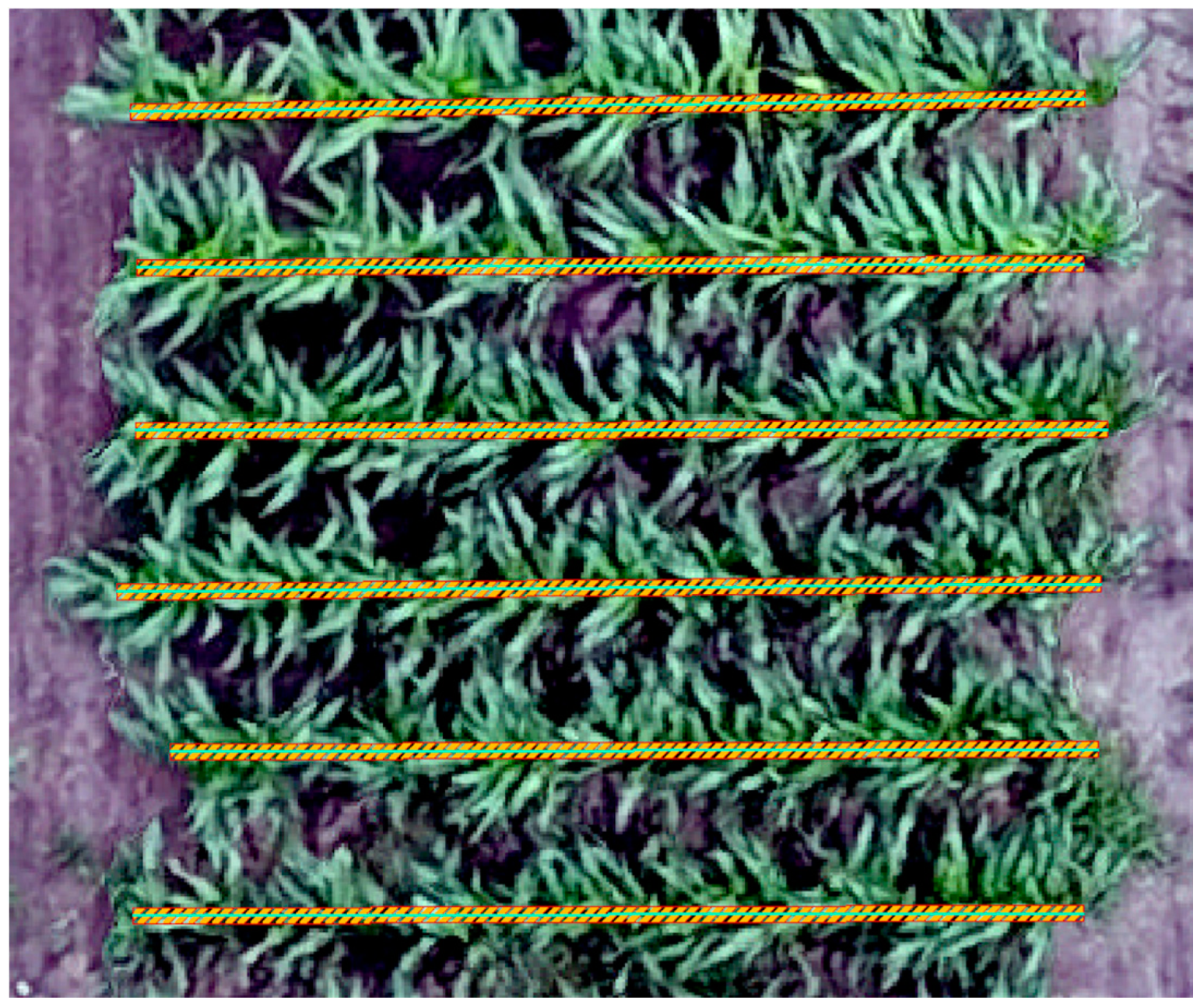

- Determination of the centerline on the georeferenced orthoimage or CHM raster for each row. In this step, two endpoints for each of the crop rows are manually selected and the centerline is drawn along the row. As demonstrated in Figure 4, the cyan solid line on each row represents the centerline. The lengths of the row centerlines may slightly differ, and a centerline is supposed to reasonably cover an entire row.

- (2)

- Drawing row polygons according to the centerlines. The polygons are regular rectangles with the long edges being the centerlines while the short edges being adjustable. Specifically, a centerline width of 10 cm was determined to filter out as many pixels as possible representing soil and lower leaves.

- (3)

- Computation of height information. Height is estimated on a per-row (or per-plot) basis using CHM values in the polygons painted with stripes along the row centerlines, as illustrated in Figure 4.

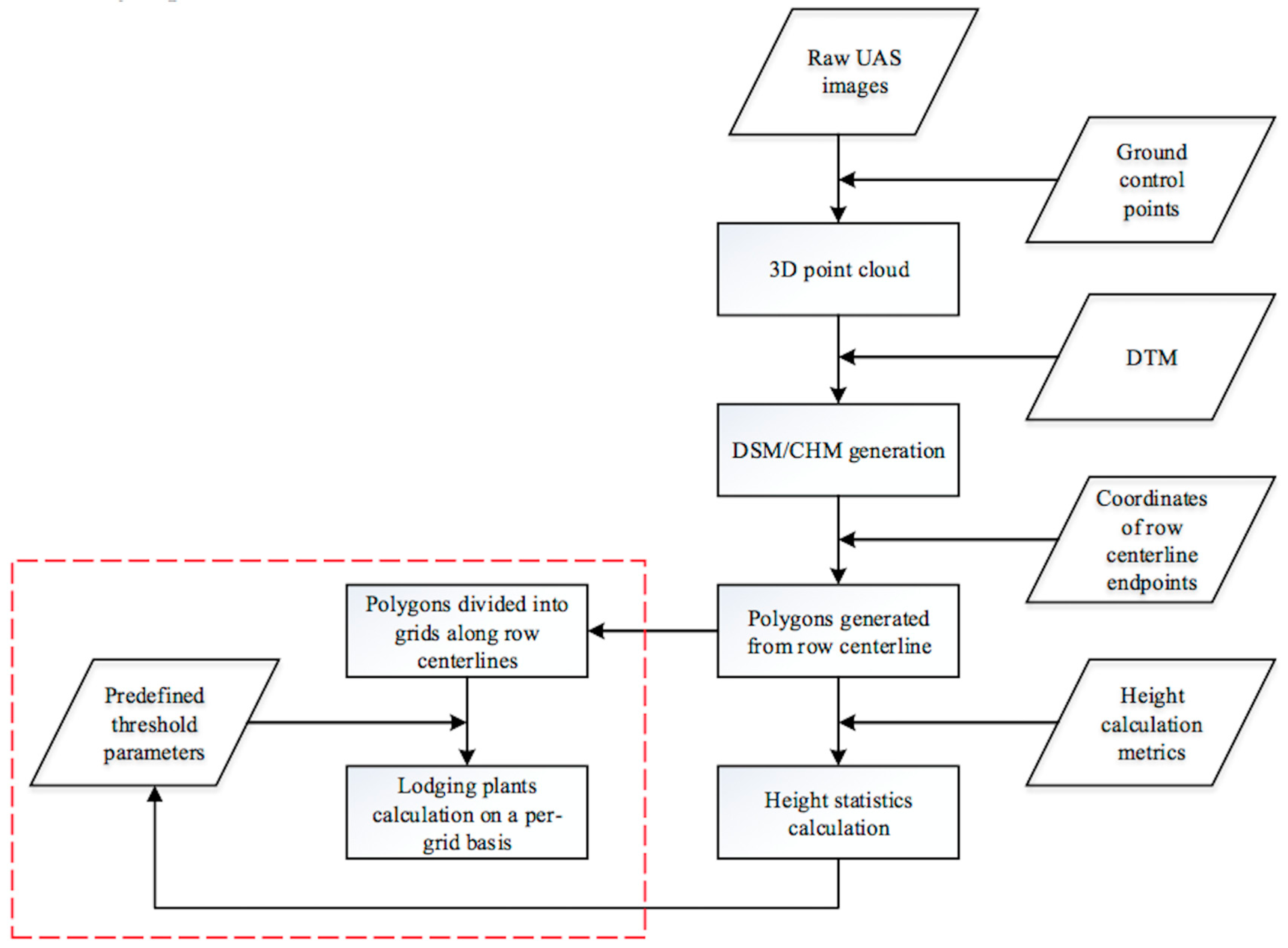
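
Step (3) can be sketched as a summary of the CHM pixel values that fall inside one row polygon. The example below assumes those values have already been extracted into an array; the metric names mirror the column labels of the correlation table later in the article, but their definitions here (percentiles for H50, H80 and H99, an elevation-relief ratio for Herr, and a coefficient of variation for Hcv) are assumptions, and `row_height_metrics` is a hypothetical helper.

```python
import numpy as np


def row_height_metrics(chm_values):
    """Summarize CHM heights (in metres) sampled inside one row polygon."""
    h = np.asarray(chm_values, dtype=float)
    h = h[np.isfinite(h)]  # drop no-data pixels stored as NaN or inf
    if h.size == 0:
        raise ValueError("no valid CHM pixels in this polygon")

    h_min, h_max, h_mean = h.min(), h.max(), h.mean()
    h_std = h.std()  # population standard deviation (ddof=0)
    relief = h_max - h_min

    return {
        "Hmin": float(h_min),
        "Hmax": float(h_max),
        "Hmean": float(h_mean),
        "Hstd": float(h_std),
        "H50": float(np.percentile(h, 50)),
        "H80": float(np.percentile(h, 80)),
        "H99": float(np.percentile(h, 99)),
        # Assumed definition: (mean - min) / (max - min)
        "Herr": float((h_mean - h_min) / relief) if relief > 0 else float("nan"),
        # Assumed definition: std / mean
        "Hcv": float(h_std / h_mean) if h_mean != 0 else float("nan"),
    }


# Illustrative pixel values only; NaN stands in for a no-data pixel.
metrics = row_height_metrics([1.0, 2.0, 3.0, float("nan")])
```

Restricting the input to the narrow polygon around the centerline is what keeps soil and lower-leaf pixels from dragging the statistics down, so the extraction step matters as much as the summary itself.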

2.6. Lodging Detection Method

THEN non-lodging

ELSE

THEN lodging

3. Results and Discussion

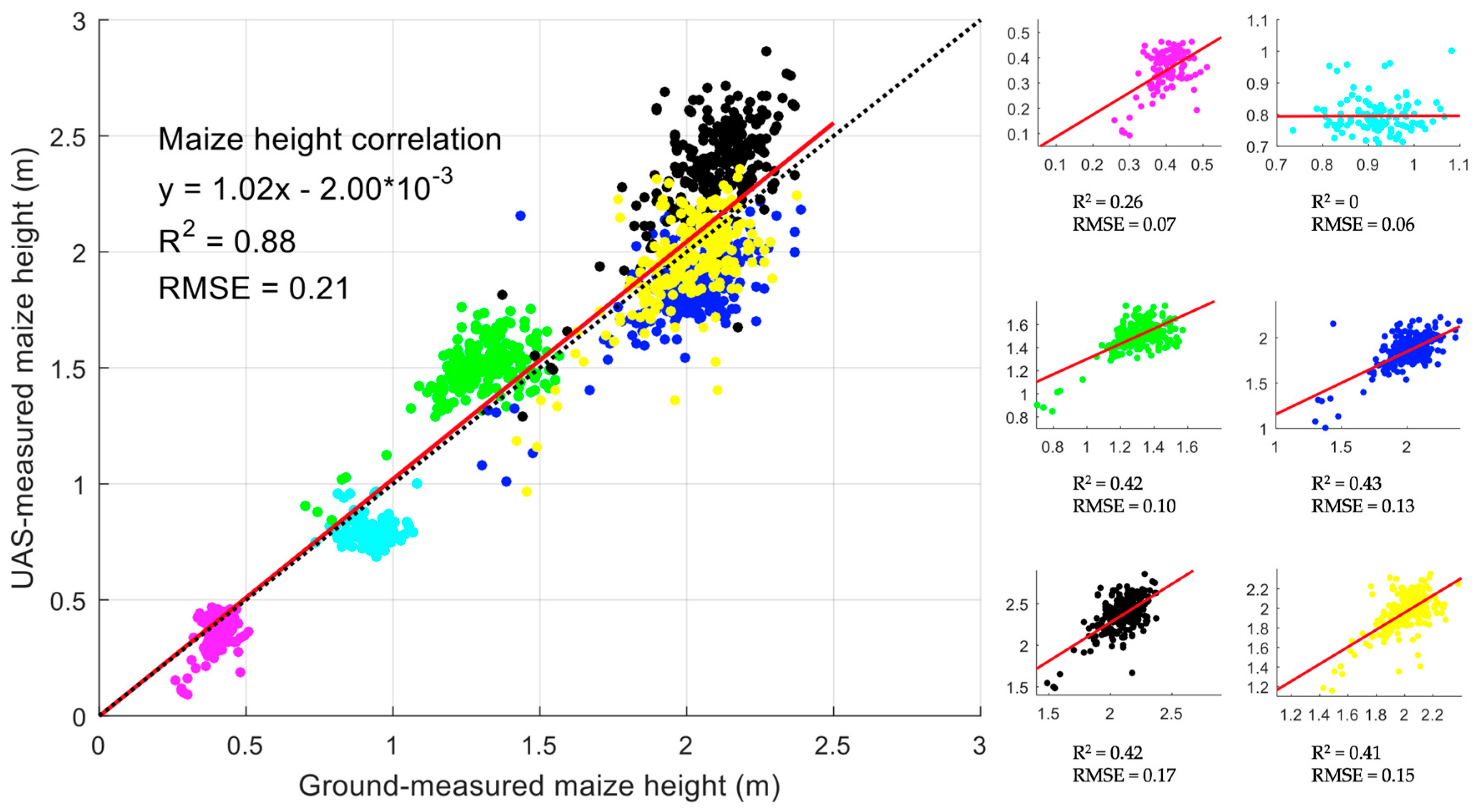

3.1. Plant Height Validation

3.2. Lodging and Non-lodging Comparison over the Growing Season

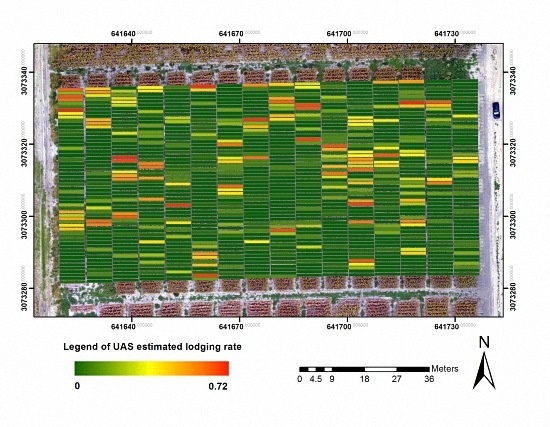

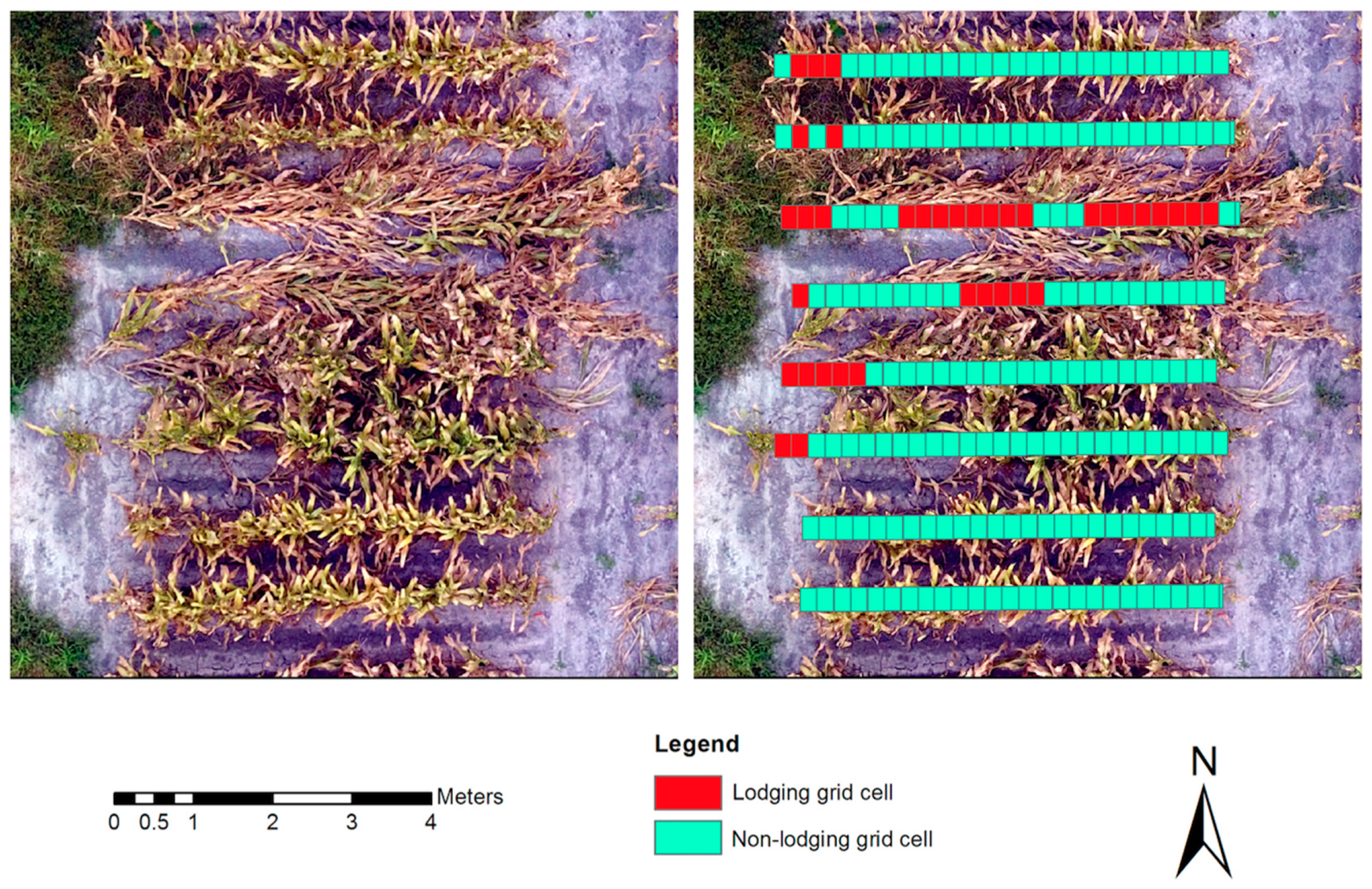

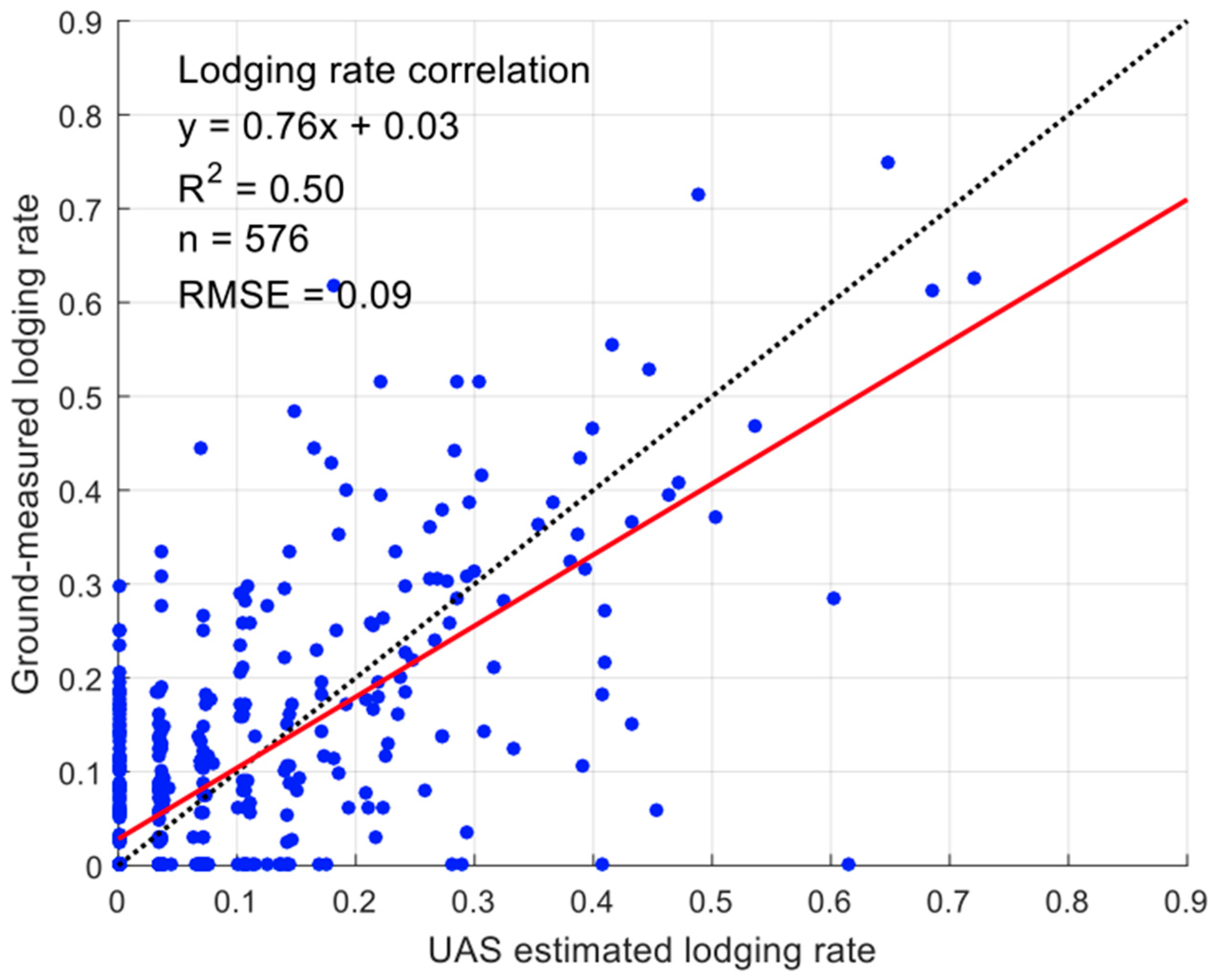

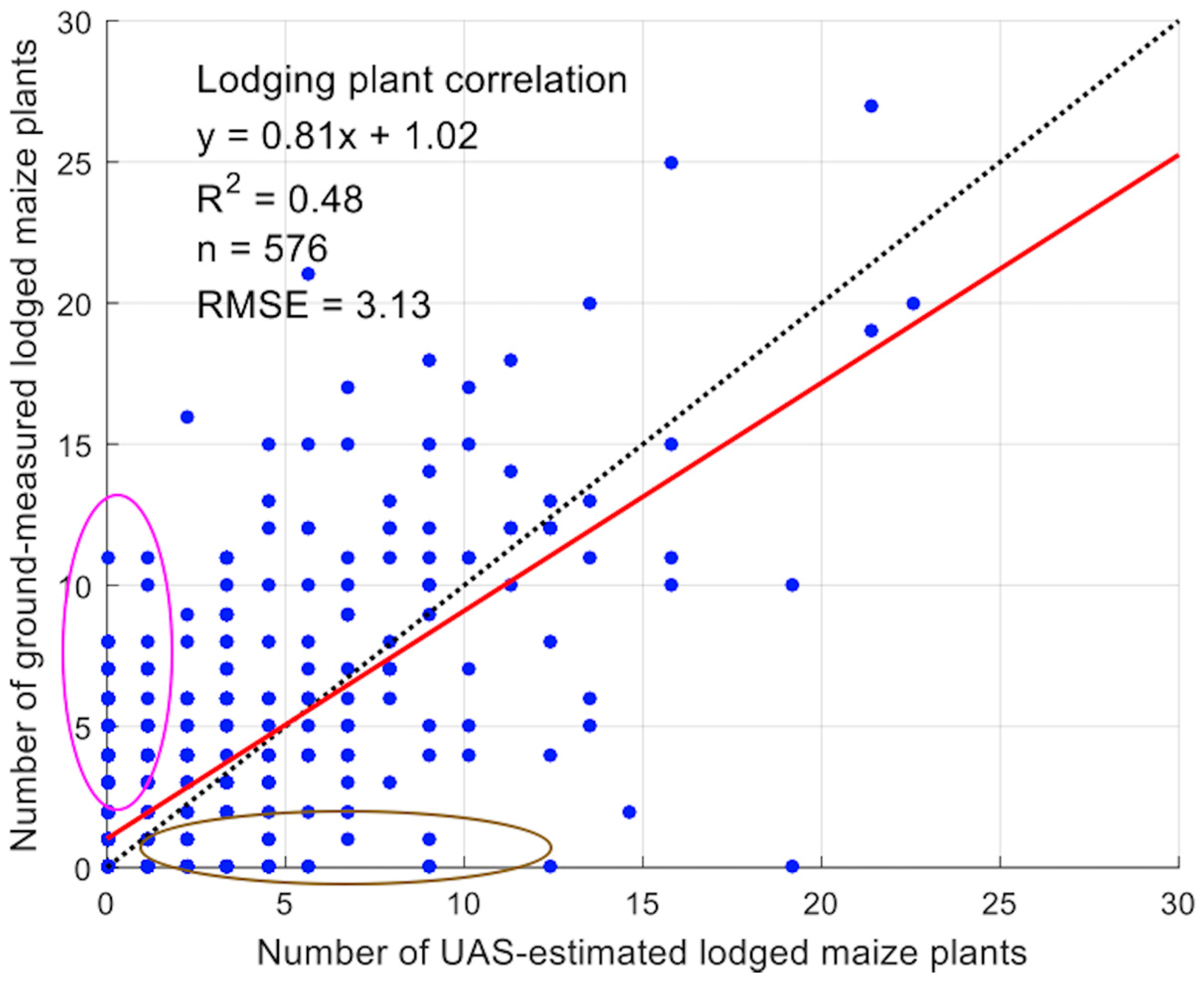

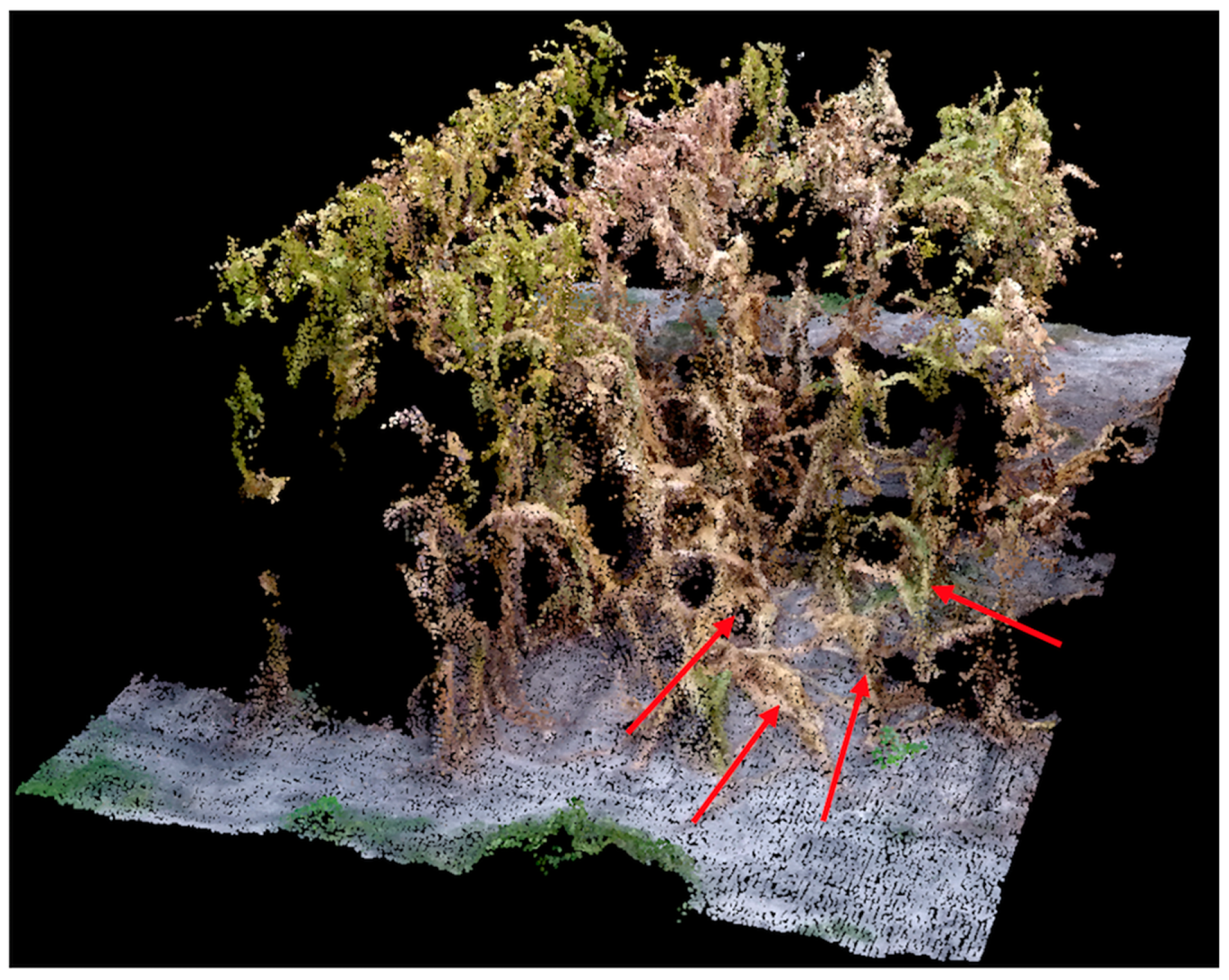

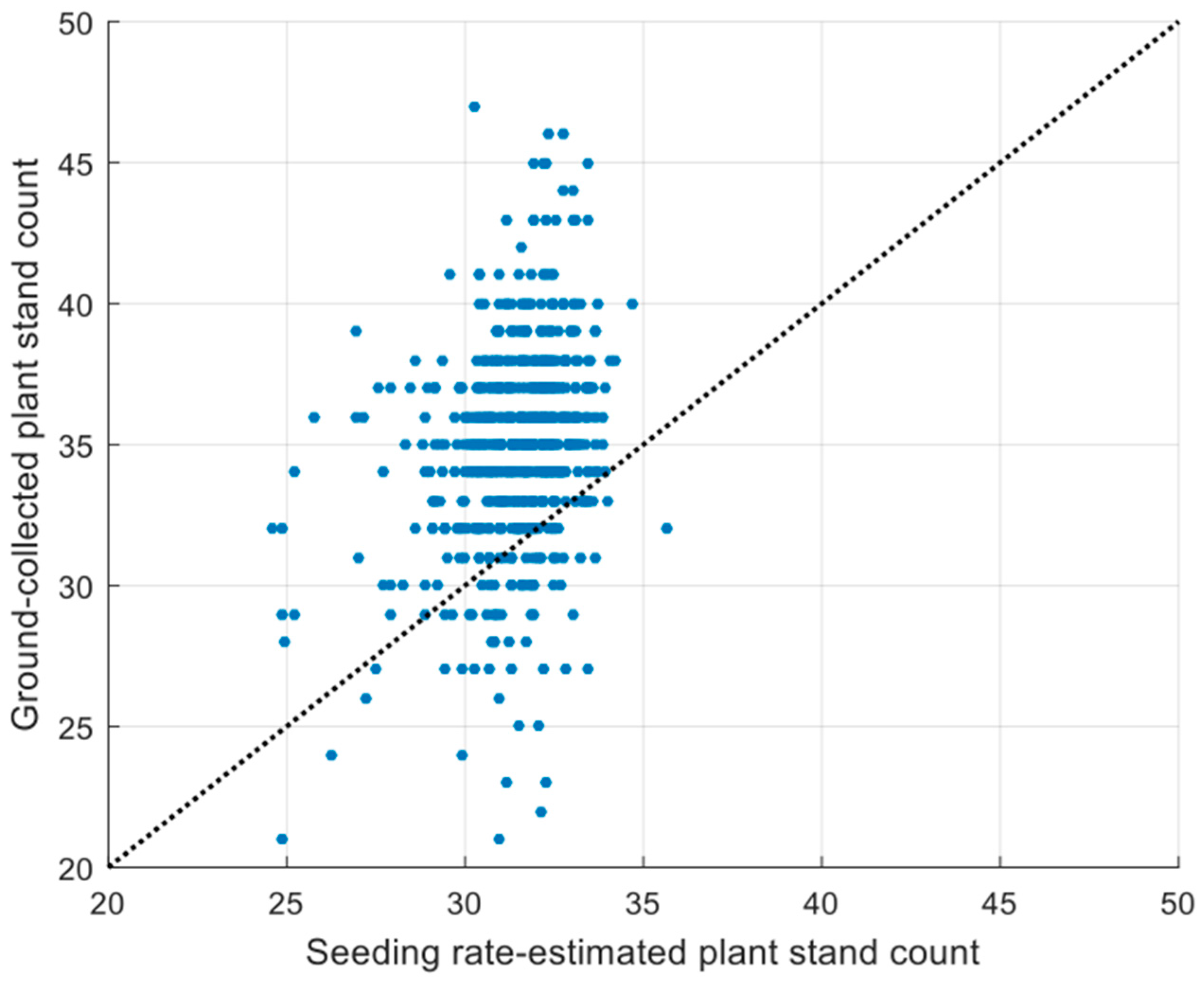

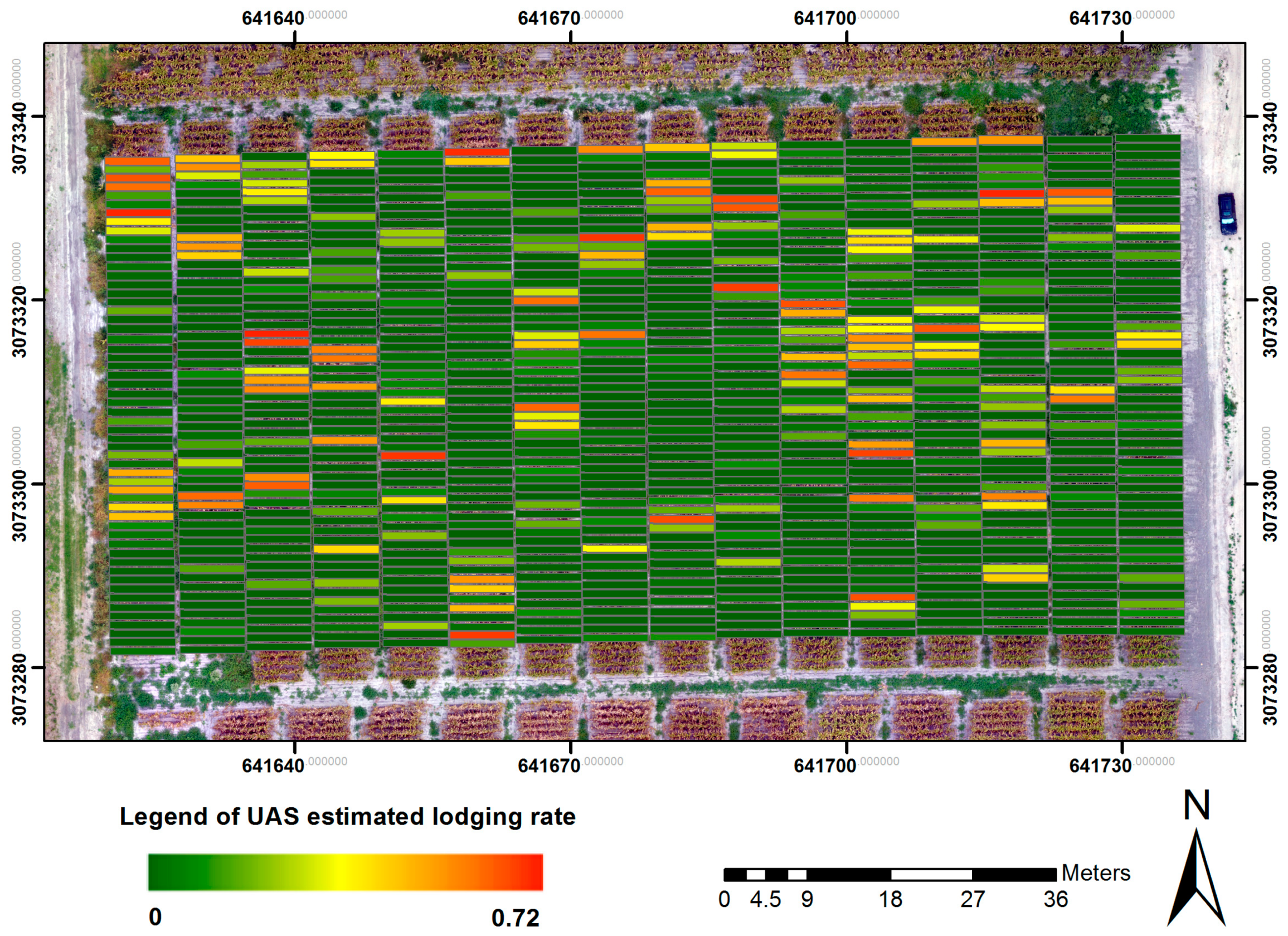

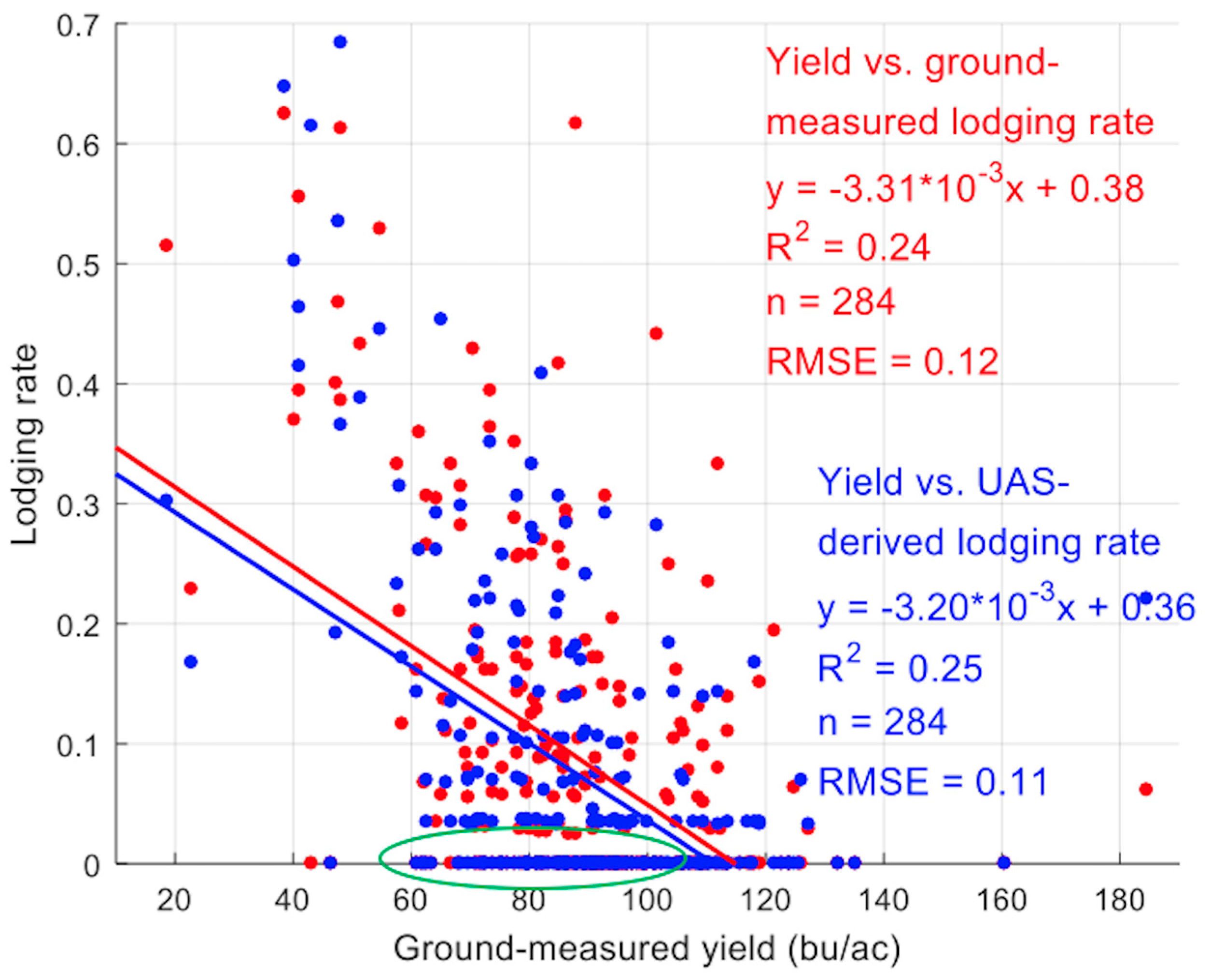

3.3. Lodging Assessment

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| | Canon PowerShot S110 | GoPro HERO3+ Black Edition | DJI FC330 | DJI FC200 |
|---|---|---|---|---|
| UAS platform | senseFly eBee fixed-wing | 3DR Solo quadcopter | DJI Phantom 4 quadcopter | DJI Phantom 2 Vision+ quadcopter |
| Camera band | R-G-NIR * | R-G-B | R-G-B | R-G-B |
| Lens type | Perspective | Fisheye | Perspective | Fisheye |
| Array (pixels) | 4048 × 3048 | 4000 × 3000 | 4000 × 3000 | 4608 × 3456 |
| Sensor size (mm × mm) | 7.4 × 5.6 | 6.2 × 4.7 | 6.3 × 4.7 | 6.2 × 4.6 |
| Focal length (mm) ** | 24 | 15 | 20 | 30 |
| Exposure time (s) | 1/2000 | Auto | Auto | Auto |
| F-stop *** | f/2 | f/2.8 | f/2.8 | f/2.8 |
| ISO | 80 | 100 | 100 | 100 |
| Image format | TIFF | JPEG | JPEG | JPEG |

| Flight Date | Platform | Sensor Type | Flight Height (m) | Number of Images | GSD (mm/pixel) | Point Density (points/m²) |
|---|---|---|---|---|---|---|
| 12 April 2016 | DJI Phantom 2 Vision+ | RGB | 40 | 84 | 17.1 | 1548.9 |
| 22 April 2016 | DJI Phantom 4 | RGB | 50 | 89 | 21.8 | 649.6 |
| 27 April 2016 | DJI Phantom 4 | RGB | 50 | 84 | 23.3 | 764.5 |
| 6 May 2016 | DJI Phantom 4 | RGB | 30 | 540 | 13.7 | 1333.4 |
| 17 May 2016 | DJI Phantom 4 | RGB | 40 | 276 | 19.1 | 686.1 |
| 20 May 2016 | DJI Phantom 4 | RGB | 30 | 418 | 13.5 | 3145.1 |
| 26 May 2016 | eBee | NIR | 101 | 179 | 38.4 | 81.6 |
| 31 May 2016 | DJI Phantom 4 | RGB | 40 | 414 | 19.0 | 1545.1 |
| 8 June 2016 | 3DR Solo | RGB | 30 | 691 | 20.0 | 865.1 |
| 9 June 2016 | eBee | NIR | 101 | 178 | 40.7 | 76.2 |
| 10 June 2016 | 3DR Solo | RGB | 30 | 674 | 20.7 | 748.8 |
| 14 June 2016 | 3DR Solo | RGB | 30 | 685 | 21.4 | 869.2 |
| 23 June 2016 | 3DR Solo | RGB | 30 | 674 | 19.7 | 868.1 |
| 23 June 2016 | eBee | NIR | 101 | 200 | 39.4 | 59.6 |
| 28 June 2016 | 3DR Solo | RGB | 30 | 657 | 21.0 | 794.9 |
| 30 June 2016 | DJI Phantom 4 | RGB | 20 | 656 | 7.7 | 5995.1 |
| 12 July 2016 | 3DR Solo | RGB | 30 | 676 | 19.3 | 989.5 |
| 13 July 2016 | DJI Phantom 4 | RGB | 20 | 585 | 10.2 | 1540.3 |
| 15 July 2016 | eBee | NIR | 101 | 168 | 40.1 | 51.2 |
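
The relationship between flight height and GSD in the flight table can be approximated with the standard nadir pinhole relation. The sketch below rests on two assumptions that the text does not confirm: that the focal lengths in the sensor table are 35 mm equivalents (so a 36 mm frame width applies), and that the camera behaves as a perspective lens pointing straight down. It therefore does not apply to the fisheye sensors, and GSD values reported by photogrammetric software can differ from this nominal figure. The function name is hypothetical.

```python
def nominal_gsd_mm(flight_height_m: float,
                   focal_length_35mm_equiv_mm: float,
                   image_width_px: int,
                   full_frame_width_mm: float = 36.0) -> float:
    """Nominal ground sampling distance (mm/pixel) for a nadir perspective image.

    Assumption: the focal length is a 35 mm equivalent, so the effective
    sensor width is the 36 mm full-frame width.
    """
    if min(flight_height_m, focal_length_35mm_equiv_mm, image_width_px) <= 0:
        raise ValueError("all inputs must be positive")
    flight_height_mm = flight_height_m * 1000.0
    return (flight_height_mm * full_frame_width_mm
            / (focal_length_35mm_equiv_mm * image_width_px))


# DJI FC330 (Phantom 4): 4000 px image width, 20 mm focal length, flown at 30 m.
gsd_phantom4_30m = nominal_gsd_mm(30.0, 20.0, 4000)
```

Under these assumptions the 30 m Phantom 4 case works out to 13.5 mm/pixel, in line with the 13.5 to 13.7 mm/pixel listed for the 30 m Phantom 4 flights; other flights deviate more, which is expected given terrain, actual flight height, and lens effects.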

| Dot Color in Figure 6 | Date Ground Height Collected | Flight Experiment Date Closest to the Ground Data Collection Date | UAS Platform | Portion of the Field Trial Included |
|---|---|---|---|---|
| Magenta | 26 April 2016 | 27 April 2016 | DJI Phantom 4 | Upper |
| Cyan | 6 May 2016 | 6 May 2016 | DJI Phantom 4 | Lower |
| Green | 13 May 2016 | 17 May 2016 | DJI Phantom 4 | Upper and lower |
| Blue | 27 May 2016 | 26 May 2016 | eBee | Upper and lower |
| Black | 6 June 2016 | 8 June 2016 | 3DR Solo | Upper and lower |
| Yellow | 1 July 2016 | 30 June 2016 | DJI Phantom 4 | Upper and lower |

| | GLR | Hmin | Hmax | Hmean | Hstd | H50 | H80 | H99 | Herr | Hcv | Yield | ULR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GLR | 1.00 | | | | | | | | | | | |
| Hmin | −0.05 | 1.00 | | | | | | | | | | |
| Hmax | −0.08 | 0.06 | 1.00 | | | | | | | | | |
| Hmean | −0.59 * | 0.22 * | 0.29 * | 1.00 | | | | | | | | |
| Hstd | 0.33 * | −0.30 * | 0.33 * | −0.47 * | 1.00 | | | | | | | |
| H50 | −0.58 * | 0.17 * | 0.25 * | 0.96 * | −0.35 * | 1.00 | | | | | | |
| H80 | −0.48 | 0.12 * | 0.52 * | 0.83 * | 0.04 | 0.82 * | 1.00 | | | | | |
| H99 | −0.12 * | 0.08 | 0.86 * | 0.43 * | 0.31 * | 0.38 * | 0.68 * | 1.00 | | | | |
| Herr | −0.60 * | 0.14 * | −0.04 | 0.94 * | −0.58 * | 0.92 * | 0.70 * | 0.16 * | 1.00 | | | |
| Hcv | 0.64 * | −0.21 * | −0.08 ** | −0.92 * | 0.57 * | −0.90 * | −0.72 * | −0.19 * | −0.94 * | 1.00 | | |
| Yield | −0.49 * | −0.53 * | 0.27 * | 0.62 * | −0.17 * | 0.60 * | 0.59 * | 0.36 * | 0.55 * | −0.54 * | 1.00 | |
| ULR | 0.71 * | −0.11 | −0.14 * | −0.74 * | 0.44 * | −0.74 * | −0.60 * | −0.21 * | −0.75 * | 0.83 * | −0.50 * | 1.00 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chu, T.; Starek, M.J.; Brewer, M.J.; Murray, S.C.; Pruter, L.S. Assessing Lodging Severity over an Experimental Maize (Zea mays L.) Field Using UAS Images. Remote Sens. 2017, 9, 923. https://doi.org/10.3390/rs9090923
