The Fusion of an Ultrasonic and Spatially Aware System in a Mobile-Interaction Device

Abstract

1. Introduction

2. Related Work

- Paper systems: Many interactive paper systems have been developed to combine the benefits of paper and digital media. The Anoto technology [10] is a pen-based interaction system that tracks handwriting on physical paper and augments paper documents with digital information. Liao and Guimbretière [11] designed a pen-based command system for interactive paper and proposed pen-top multimodal feedback, which combines visual, tactile, and auditory feedback to support paper-computer interaction. Hotpaper [12] augments paper documents with multimedia annotations such as video or audio. The system analyzes a captured document patch image or video frame to identify the corresponding digital information, such as the electronic document, the page number, and the location on the page. PaperComposer [13] is an interactive paper interface for music composition that supports composers in expressing and exploring musical ideas in a music book with computer-aided composition tools. S-Notebook [14] is a mobile application that connects mobile devices with interactive paper using an Anoto pen. It allows users to attach annotations or drawings to anchors in digital images without learning predefined pen gestures and commands. These paper systems combine traditional paper with digital information; however, most of them rely on computers or special pens. Our approach improves the portability of interactive paper systems by using touch-based mobile devices.

- Spatially aware computing: Spatially aware computing has been applied in interactive paper systems. MouseLight [15] is one example: a spatially aware projector is built from a mobile laser projector, and the system can detect the position of a digital pen and track the user's handwriting. However, MouseLight requires bimanual interaction, so it is hard for users to write and operate the system at the same time. In this paper, we present a new spatially aware interactive system that can be operated with one hand.

- Augmented reality (camera-based approaches): Camera-based augmented reality techniques are currently widely applied to interaction between digital images and traditional paper documents. SESIL [16], an augmented reality environment for students' improved learning, is a novel approach to setting up a digital environment that recognizes physical book pages and specific elements of interest within those pages. Book pages are captured by a camera; the system recognizes the camera images and produces an electronic page that enables interaction with actual books and pens or pencils. Jee et al. [17] designed an e-learning system that allows users to view 3D virtual content from traditional textbooks; the system creates a 3D modeling environment based on the content of the physical book. To improve portability, cameras integrated into handheld devices have been adopted for camera-based augmented reality. Hansen et al. [18] used the integrated cameras of mobile devices to address how mixed interaction spaces can have different identities, can be mapped to applications, and can be visualized. By applying image analysis algorithms to the camera images, movements or actions such as rotation and marking can be determined. These camera-based approaches, however, require high computing power to identify objects in a real environment.

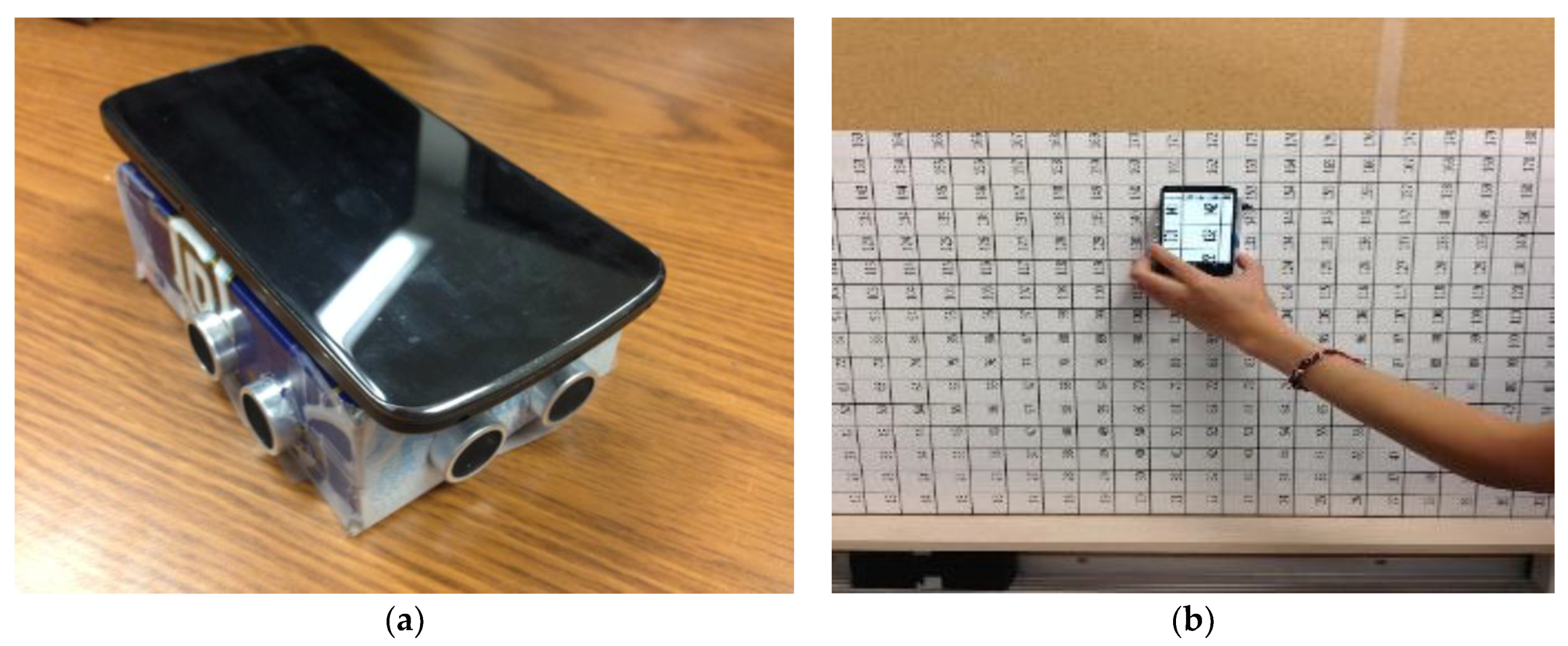

3. Ultrasonic PhoneLens

3.1. Hardware Components

- An Arduino board with ultrasonic sensors is physically compact, which makes Ultrasonic PhoneLens easy to use and carry.

- Ultrasonic sensors and Arduino boards (<$30) are inexpensive. Furthermore, smartphones and paper documents are common in daily life.
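Ultrasonic range sensors of the kind listed above generally measure distance by time of flight: the sensor emits a pulse and times its echo, so the one-way distance is half the round-trip time multiplied by the speed of sound. The minimal sketch below assumes that the sensor reports the round-trip echo time in microseconds; the function names, the 20 °C default, and the linear speed-of-sound approximation are illustrative assumptions, not details taken from Ultrasonic PhoneLens.

```python
def speed_of_sound_cm_per_us(temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air, converted from m/s to cm/us.

    Assumption: the common linear model v = 331.3 + 0.606 * T (m/s, T in deg C).
    1 m/s = 100 cm / 1,000,000 us = 1e-4 cm/us.
    """
    return (331.3 + 0.606 * temp_c) * 1e-4


def echo_time_to_distance_cm(echo_us: float, temp_c: float = 20.0) -> float:
    """Convert a round-trip echo time (microseconds) into a one-way distance (cm).

    The pulse travels to the target and back, so the distance is half the path.
    """
    if echo_us < 0:
        raise ValueError("echo time must be non-negative")
    return echo_us * speed_of_sound_cm_per_us(temp_c) / 2


# A hypothetical 583 us echo at 20 deg C corresponds to roughly 10 cm.
print(round(echo_time_to_distance_cm(583), 1))
```

Passing the ambient temperature explicitly keeps the conversion honest when the device is used in rooms that are noticeably warmer or colder than the default.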

3.2. System Architecture Overview

3.3. Data Preprocessing

1. Previous data comparison;
2. Average distance calculation;
3. Linear regression; and
4. Noise elimination.
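The four preprocessing steps can be illustrated with a minimal Python sketch. This section does not give the rule behind each step, so every rule here is an assumption: previous data comparison is modeled as rejecting a reading that jumps more than a threshold away from the last accepted reading, average distance calculation as the mean of a window of readings, linear regression as an ordinary least-squares calibration from averaged readings to actual distances, and noise elimination as dropping values more than k standard deviations from the mean. The function names and the constants `JUMP_THRESHOLD_CM` and `NOISE_K` are hypothetical.

```python
from statistics import mean, pstdev

# Hypothetical parameters; the thresholds used by Ultrasonic PhoneLens are not given here.
JUMP_THRESHOLD_CM = 5.0   # max allowed jump from the last accepted reading
NOISE_K = 2.0             # values beyond NOISE_K standard deviations count as noise


def compare_with_previous(readings, threshold=JUMP_THRESHOLD_CM):
    """Step 1 (assumed rule): keep a reading only if it lies within `threshold` cm
    of the last accepted reading. The first reading is always accepted."""
    accepted = []
    for r in readings:
        if not accepted or abs(r - accepted[-1]) <= threshold:
            accepted.append(r)
    return accepted


def average_distance(readings):
    """Step 2: average the accepted readings of one window."""
    return mean(readings)


def fit_linear(measured, actual):
    """Step 3 (assumed use): least-squares fit of actual = slope * measured + intercept."""
    mx, my = mean(measured), mean(actual)
    sxx = sum((x - mx) ** 2 for x in measured)
    sxy = sum((x - mx) * (y - my) for x, y in zip(measured, actual))
    slope = sxy / sxx
    return slope, my - slope * mx


def eliminate_noise(values, k=NOISE_K):
    """Step 4 (assumed rule): drop values more than k population std devs from the mean."""
    if len(values) < 3:
        return list(values)  # too few points to judge outliers
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return list(values)
    return [v for v in values if abs(v - mu) <= k * sigma]


def preprocess(windows, slope, intercept):
    """Run steps 1-4 over a sequence of reading windows (each a list of cm values)."""
    averaged = [average_distance(compare_with_previous(w)) for w in windows if w]
    calibrated = [slope * d + intercept for d in averaged]
    return eliminate_noise(calibrated)


# Calibration pairs from the "Data Processed by Average Distance Calculation" table.
measured = [10.04, 20.24, 30.10, 39.70, 50.28]
actual = [10, 20, 30, 40, 50]
slope, intercept = fit_linear(measured, actual)
print(round(slope, 3), round(intercept, 2))  # slope close to 1, intercept close to 0
```

Because the calibration pairs come from averaged readings, the fitted slope and intercept stay close to the identity mapping here; the sketch only shows where a regression step could sit in such a pipeline.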

3.3.1. Previous Data Comparison

3.3.2. Average Distance Calculation

3.3.3. Linear Regression

3.3.4. Noise Elimination

3.3.5. Accuracy Evaluation

3.4. Multi-User Communication

4. Empirical Evaluation

4.1. Methodology

4.2. Subjects and Evaluation Document

4.3. Tasks

4.3.1. Navigation Task

a. Browse the audio plan and find the position of the junction box on the plan.

b. Browse the electric power plan and find the position of a circuit breaker on the plan.

4.3.2. Multi-User Communication Task

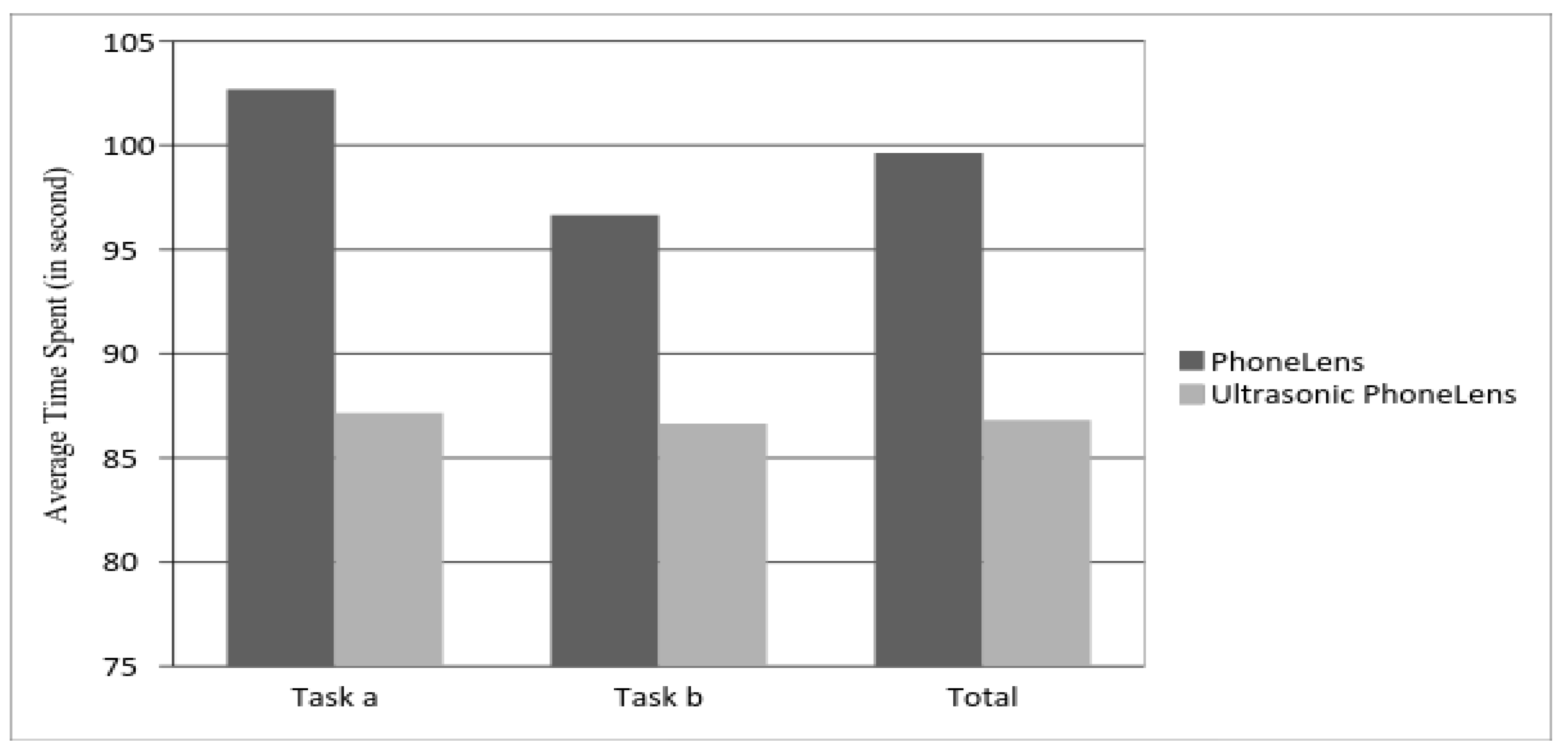

5. Results and Analysis

5.1. Comparison of Ultrasonic PhoneLens and Wiimote PhoneLens

5.1.1. Browsing Efficiency

5.1.2. Subjective Feedback

5.2. Evaluation of Multi-User Communication Performance

6. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Gherghina, A.; Olteanu, A.; Tapus, N. A marker-based augmented reality system for mobile devices. In Proceedings of the 11th IEEE Roedunet International Conference (RoEduNet), Sinaia, Romania, 17–19 January 2013; pp. 1–6.

2. Rohs, M. Marker-based embodied interaction for handheld augmented reality games. J. Virtual Real. Broadcast. 2007, 4, 1860–2037.

3. Nishino, H. A shape-free, designable 6-DoF marker tracking method for camera-based interaction in mobile environment. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; pp. 1055–1058.

4. Sun, K.; Yu, J. Video affective content recognition based on genetic algorithm combined HMM. In Proceedings of the 2007 International Conference on Entertainment Computing, Berlin/Heidelberg, Germany, 2007; pp. 249–254.

5. Arif, T.; Singh, T.; Bose, J. A system for intelligent context based content mode in camera applications. In Proceedings of the 2014 International Conference on Advances in Computing, Communications and Informatics (ICACCI), New Delhi, India, 24–27 September 2014; pp. 1504–1508.

6. Mottola, L.; Cugola, G.; Picco, G.P. A self-repairing tree topology enabling content-based routing in mobile ad hoc networks. IEEE Trans. Mob. Comput. 2008, 7, 946–960.

7. Reilly, D.; Rodgers, M.; Argue, R.; Nunes, M.; Inkpen, K. Marked-up maps: Combining paper maps and electronic information resources. Pers. Ubiquit. Comput. 2006, 10, 215–226.

8. Lee, J.C. Hacking the Nintendo Wii Remote. IEEE Pervasive Comput. 2008, 39–45.

9. Roudaki, A.; Kong, J.; Walia, G.S. PhoneLens: A low-cost, spatially aware, mobile-interaction device. IEEE Trans. Hum. Mach. Syst. 2014, 44, 301–314.

10. Anoto AB. Development Guide for Services Enabled by Anoto Functionality. 2002. Available online: http://www.citeulike.org/user/johnsogg/article/4294876 (accessed on 4 June 2017).

11. Liao, C.; Guimbretière, F. Evaluating and understanding the usability of a pen-based command system for interactive paper. ACM Trans. Comput. Hum. Interact. 2012, 19.

12. Erol, B.; Antunez, E.; Hull, J.J. Hotpaper: Multimedia interaction with paper using mobile phones. Proc. Multimed. 2008, 399–408.

13. Garcia, J.; Tsandilas, T.; Agon, C.; Mackay, W. PaperComposer: Creating interactive paper interfaces for music composition. In Proceedings of the 26th Conference l’Interaction Homme-Machine, Lille, France, 28–31 October 2014; pp. 1–8.

14. Pietrzak, T.; Malacria, S.; Lecolinet, E. S-Notebook: Augmenting Mobile Devices with Interactive Paper for Data Management. In Proceedings of the International Working Conference on Advanced Visual Interfaces, Capri Island, Italy, 21–25 May 2012; pp. 733–736.

15. Song, H.; Guimbretière, F.; Grossman, T.; Fitzmaurice, G. MouseLight: Bimanual interactions on digital paper using a pen and a spatially-aware mobile projector. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 10–15 April 2010; pp. 2451–2460.

16. Margetis, G.; Zabulis, X.; Koutlemanis, P.; Antona, M.; Stephanidis, C. Augmented interaction with physical books in an Ambient Intelligence learning environment. Multimed. Tools Appl. 2013, 67, 473–495.

17. Jee, H.K.; Lim, S.; Youn, J.; Lee, J. An augmented reality-based authoring tool for E-learning applications. Multimed. Tools Appl. 2014, 68, 225–235.

18. Hansen, T.R.; Eriksson, E.; Lykke-Olesen, A. Mixed interaction space: Designing for camera based interaction with mobile devices. In Proceedings of the CHI’05 Extended Abstracts on Human Factors in Computing Systems, Portland, OR, USA, 2–7 April 2005; pp. 1933–1936.

19. Masutani, Y.; Dohi, T.; Yamane, F.; Iseki, H.; Takakura, K. Augmented reality visualization system for intravascular neurosurgery. Comput. Aided Surg. 1998, 3, 239–247.

20. Klemmer, S.R.; Graham, J.; Wolff, G.J.; Landay, J.A. Books with voices: Paper transcripts as a physical interface to oral histories. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’03), Ft. Lauderdale, FL, USA, 5–10 April 2003; pp. 89–96.

21. Rekimoto, J. CyberCode: Designing Augmented Reality Environments. In Proceedings of the DARE: Designing Augmented Reality Environments, Elsinore, Denmark, 12–14 April 2000; pp. 1–10.

22. Rohs, M. Visual code widgets for marker-based interaction. In Proceedings of the 25th IEEE International Conference Distributed Computing Systems Workshops, Columbus, OH, USA, 6–10 June 2005; pp. 506–513.

23. Wen, D.; Huang, Y.; Liu, Y.; Wang, Y. Study on an indoor tracking system with infrared projected markers for large-area applications. In Proceedings of the 8th International Conference Virtual Reality Continuum and its Applications in Industry, Yokohama, Japan, 14–15 December 2009; pp. 245–249.

24. Kuantama, E.; Setyawan, L.; Darma, J. Early flood alerts using short message service (SMS). In Proceedings of the International Conference System Engineering and Technology (ICSET), Bandung, Indonesia, 11–12 September 2012; pp. 1–5.

25. Freedman, D.A. Statistical Models: Theory and Practice; Cambridge University Press: Cambridge, UK, 2009; p. 26.

26. Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions With Formulas, Graphs, And Mathematical Tables, 9th Printing; Dover: New York, NY, USA, 1972; p. 14.

27. Basili, V.R.; Caldiera, G.; Rombach, H.D. The Goal Question Metric Approach; Technical Report; Department of Computer Science, University of Maryland: College Park, MD, USA, 1994.

28. Cozby, P. Methods in Behavioral Research, 10th ed.; McGraw Hill: New York, NY, USA, 2009.

29. Winkler, C.; Seifert, J.; Reinartz, C.; Krahmer, P.; Rukzio, E. Penbook: Bringing pen+paper interaction to a tablet device to facilitate paper-based workflows in the hospital domain. In Proceedings of the 2013 ACM International Conference on Interactive Tabletops and Surfaces, St. Andrews, Scotland, UK, 6–9 October 2013; pp. 283–286.

Data Processed by Previous Data Comparison

| Predefined Point | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Actual Distance (cm) | 10 | 20 | 30 | 40 | 50 |
| Average Distance (cm) | 10.02 | 20.44 | 30.33 | 39.25 | 50.26 |
| Standard Deviation (cm) | 0.09 | 0.10 | 0.06 | 0.12 | 0.15 |

Data Processed by Average Distance Calculation

| Predefined Point | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Actual Distance (cm) | 10 | 20 | 30 | 40 | 50 |
| Average Distance (cm) | 10.04 | 20.24 | 30.10 | 39.70 | 50.28 |
| Standard Deviation (cm) | 0 | 0.09 | 0.10 | 0 | 0.12 |

| Point | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Actual Distance (cm) | 10 | 20 | 30 | 40 | 50 |
| Raw Data Error Rate | 0.56% | 1.68% | 1.13% | 0.27% | 0.57% |
| Processed Data Error Rate | 0.01% | 2.14% | 1.17% | 0.02% | 0.01% |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, D.; Zhao, C.; Kong, J. The Fusion of an Ultrasonic and Spatially Aware System in a Mobile-Interaction Device. Symmetry 2017, 9, 137. https://doi.org/10.3390/sym9080137
