1. Introduction

Crowdsourcing techniques have recently gained broad popularity in the research domain of software engineering [1]. One of the key advantages of crowdsourcing techniques is that they provide engineers with information on the operations of real users, and those users provide data from tasks performed on real, diverse software and hardware platforms. For example, crowdsourced testing (e.g., GUI testing [2]) provides user experience results for a large population of widely varying users, hardware, and operating systems and versions.

The Android bug tracker system [3] is a crowdsourced testing tool that manages test reports collected from various sources, including development teams, testing teams, and end users, who are regarded as crowdsourced workers. Then, the Android development team manually analyzes the test reports and assigns a priority to each test report to represent how urgent it is from a business perspective that the bug gets fixed. This test report priority is an important assessment that depends on the severity of the test report, namely, the severity of impact of the bug on the successful execution of the software system. Some test reports are labeled as severe test reports (i.e., “severe” in testing parlance), whose associated bugs are found to be severe problems. Severe test reports generally have a higher fix priority than non-severe test reports (i.e., “non-severe”), the subset of test reports that are believed not to have any severe impact. In this way, crowdsourced workers help the centralized developers to reveal faults. However, the Android bug tracker system does not maintain severity labels for test reports. Because of the large number of test reports generated in crowdsourced testing, manually marking the severity of test reports can be a time-consuming and tedious task. Thus, the ability to automatically classify the severity of large numbers of test reports would significantly facilitate this process.

Several previous studies have been conducted to investigate the classification of issue reports for open-source projects using supervised machine learning algorithms [4,5,6,7]. Feng et al. [8,9] proposed test report prioritization methods for use in crowdsourced testing. They designed strategies for dynamically selecting the riskiest and most diversified test reports for inspection in each iteration. Wang et al. [10,11] proposed a cluster-based classification approach for effective classification of crowdsourced reports that addresses the local bias problem. Unfortunately, the Android bug reports do not have severity labels for use as training data, and these approaches often require users to manually label a large amount of training data, which is both time-consuming and labor-intensive in practice. Therefore, it is crucial to reduce the onerous burden of manual labeling while still achieving good performance.

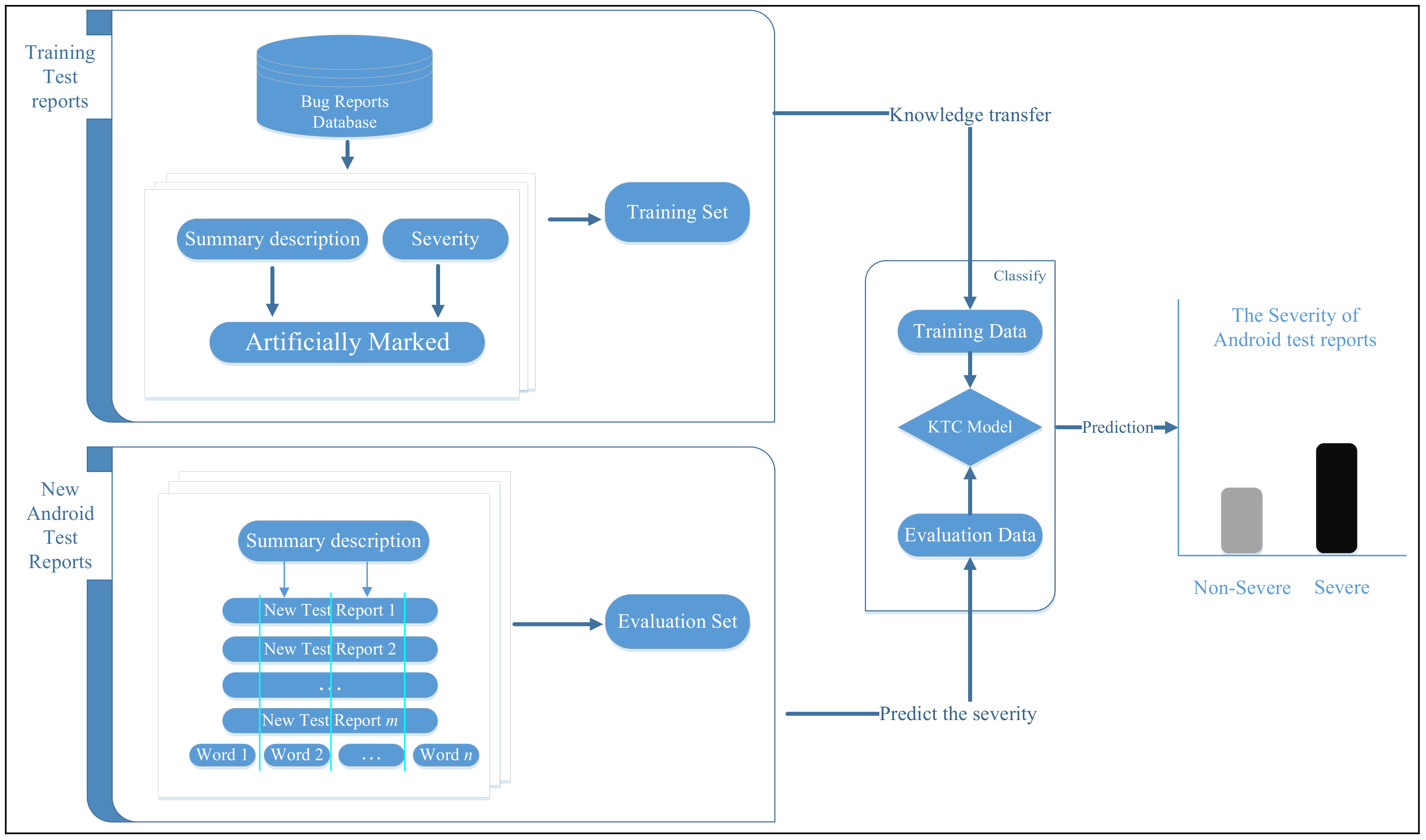

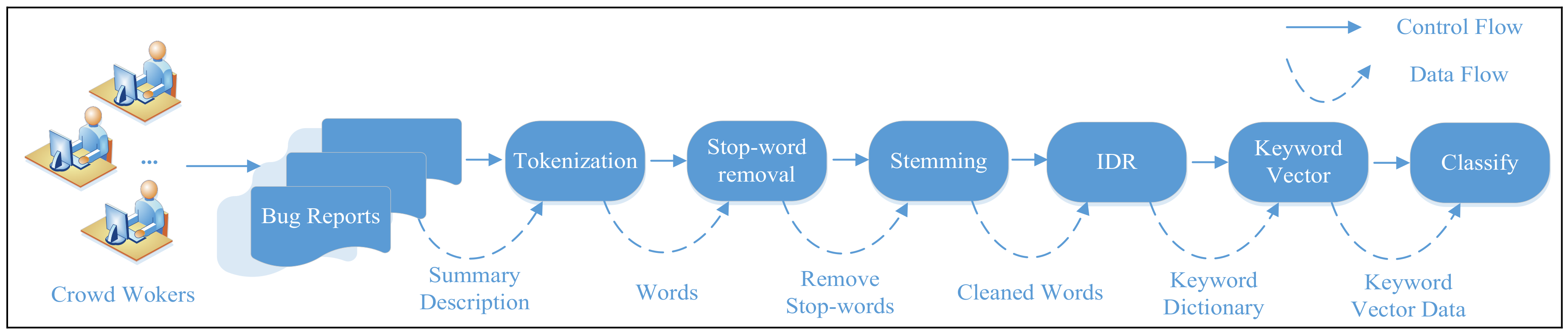

In this paper, we propose a Knowledge Transfer Classification (KTC) approach based on text mining and machine learning methods for predicting the severity of test reports generated in crowdsourced testing. To address the lack of severity-labeled training data available for Android test reports, our approach obtains labeled training data from bug repositories and uses knowledge transfer to predict the severity of Android test reports. We apply natural language processing (NLP) techniques, namely, tokenization, stop-word removal, and stemming [12], to extract keywords from the test reports. These keywords are used to predict the severity of the test reports. Although consensus methods are effective in practice, it cannot be denied that a level of noise still exists in the set of keyword labels. In this study, an Importance Degree Reduction (IDR) strategy based on rough set theory is used to extract characteristic keywords, reduce the noise in the integrated labels, and, consequently, enhance the training data and model quality. Several experiments are designed and performed to demonstrate that the presented approach can be used to effectively predict the severity of Android test reports. We evaluate the performance of the proposed KTC method in a crowdsourced environment based on the measures of accuracy, precision, and recall.
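The three preprocessing steps named above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the stop-word list is a tiny hypothetical sample, the suffix-stripping `stem` function is a simplified stand-in for a full stemming algorithm, and the function names are not taken from the paper.

```python
import re

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"a", "an", "the", "is", "are", "to", "of", "in", "on", "when", "and", "it"}

# Suffixes stripped by the toy stemmer, checked in this order.
SUFFIXES = ("ing", "ed", "es", "s")


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def stem(token):
    """Strip one common suffix, keeping at least three leading characters.

    This is a simplified stand-in for a real stemmer, not the method
    used in the paper.
    """
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def extract_keywords(summary):
    """Tokenize, drop stop words, and stem the remaining tokens."""
    return [stem(t) for t in tokenize(summary) if t not in STOP_WORDS]


keywords = extract_keywords("The browser crashes when loading images")
print(keywords)  # ['browser', 'crash', 'load', 'imag']
```

The resulting stems, rather than the raw words, would then serve as the keyword features of a report.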

The main contributions of this paper are as follows:

We propose a KTC approach based on text mining and machine learning methods to predict the severity of test reports from crowdsourced testing. Our approach obtains labeled training data from bug repositories and uses knowledge transfer to predict the severity of Android test reports.

We use an IDR strategy based on rough set theory to extract characteristic keywords, reduce the noise in the integrated labels, and, consequently, enhance the training data and model quality.

We use two bug repository datasets (Eclipse, Mozilla) for knowledge transfer to predict the severity of Android test reports. Several experiments demonstrate the prediction accuracy of our approach in various cases.

The remainder of this paper is organized as follows: the design of our proposed approach is discussed in Section 2, the experimental design and results are presented in Section 3 and Section 4, related studies are discussed in Section 5, the shortcomings of the IDR strategy are illustrated in Section 6, and conclusions and plans for future studies are discussed in Section 7.

4. Experimental Results

In this section, the experimental results are discussed in relation to each research question.

RQ1: Can IDR improve the accuracy of predicting the severity of Android test reports?

In the first experiment, we compare the accuracy obtained with each of the selected components from Eclipse and Mozilla when predicting the severity of Android bug reports, as shown in Table 4. The best results in the table are highlighted in bold. The Project and Product + Component columns show the products and components that we selected from each project to predict the severity of Android bug reports; the remaining columns show the accuracy of predicting the severity of Android test reports with the four classifiers alone and with the four classifiers combined with our approach.

Table 4 shows the accuracy of using the Mozilla and Eclipse components to predict the severity of Android bug reports. For example, for the UI component of the JDT product from the Eclipse project, the accuracy of NB classification in predicting the severity of Android test reports is 0.632, and the accuracy of NB classification with our approach (IDR + NB) is 0.683. In addition, the average accuracy of NB classification for Eclipse in predicting the severity of Android test reports is 0.725, and the average accuracy of NB classification with our approach (IDR + NB) for Eclipse is 0.758.

These results show that, in most cases, a classifier combined with our approach predicts the severity of Android bug reports more accurately than the standard classifier alone. The average accuracy of the classifiers with our approach is also higher than that of the standard classifiers. In addition, the table shows that NB classification with our approach (IDR + NB) achieves the highest accuracy when using Eclipse and Mozilla to predict the severity of Android test reports. The SVM and KNN classifiers with our approach are nearly as accurate as the NB classifier with our approach, whereas the J48 classifier is less accurate.

We use two bug repository datasets (Eclipse, Mozilla) for knowledge transfer to predict the severity of Android test reports. However, test reports are expressed differently in natural language and crowdsourced workers differ in reliability, both of which may introduce noise into the prediction of test report severity. In this case, the number of high-quality bug reports available for training is rather low, so the classifier is insufficiently trained and its accuracy is naturally poor. To solve this problem, our approach uses an IDR strategy based on rough set theory to extract characteristic keywords and reduce the noise. First, we apply natural language processing (NLP) techniques to obtain feature keywords from the bug reports, and we use a set of feature keywords to represent each bug report and its severity. Second, we use the feature keywords to build a decision information table. Finally, we apply IDR to the decision information table to reduce the keywords, which removes redundant keywords and yields classification rules without affecting the classification ability. The results show that NB classification with our approach (IDR + NB) is suitable for using two bug repository datasets (Eclipse, Mozilla) for knowledge transfer to predict the severity of Android test reports.
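To make the idea of keyword reduction on a decision information table concrete, the following Python sketch applies a generic rough-set reduction: a keyword is dropped whenever the dependency degree of the severity label on the remaining keywords is unchanged. This is an illustrative assumption, not the IDR algorithm itself, whose details are given elsewhere in the paper; the toy table, the keyword names, and the function names are hypothetical.

```python
from collections import defaultdict


def dependency(rows, labels, attrs):
    """Fraction of rows whose equivalence class (on attrs) has a single label."""
    seen_labels = defaultdict(set)
    counts = defaultdict(int)
    for row, label in zip(rows, labels):
        key = tuple(row[a] for a in attrs)
        seen_labels[key].add(label)
        counts[key] += 1
    consistent = sum(counts[k] for k, seen in seen_labels.items() if len(seen) == 1)
    return consistent / len(rows)


def reduce_keywords(rows, labels, attrs):
    """Drop keywords one at a time while the dependency degree is preserved."""
    full = dependency(rows, labels, attrs)
    kept = list(attrs)
    for a in attrs:
        trial = [k for k in kept if k != a]
        if trial and dependency(rows, labels, trial) == full:
            kept = trial
    return kept


# Toy decision table: 1 means the keyword occurs in the report summary.
attrs = ["button", "crash", "freeze"]
rows = [
    {"button": 1, "crash": 1, "freeze": 0},
    {"button": 0, "crash": 0, "freeze": 1},
    {"button": 1, "crash": 0, "freeze": 0},
    {"button": 0, "crash": 0, "freeze": 0},
]
labels = ["severe", "severe", "non-severe", "non-severe"]

reduct = reduce_keywords(rows, labels, attrs)
print(reduct)  # ['crash', 'freeze']
```

In this toy table the keyword "button" is redundant: the remaining two keywords still separate severe from non-severe reports, so the classification ability of the table is preserved. The greedy order of removal is a design simplification, and a different order can yield a different reduct.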

RQ2: What is the performance of our approach (IDR)?

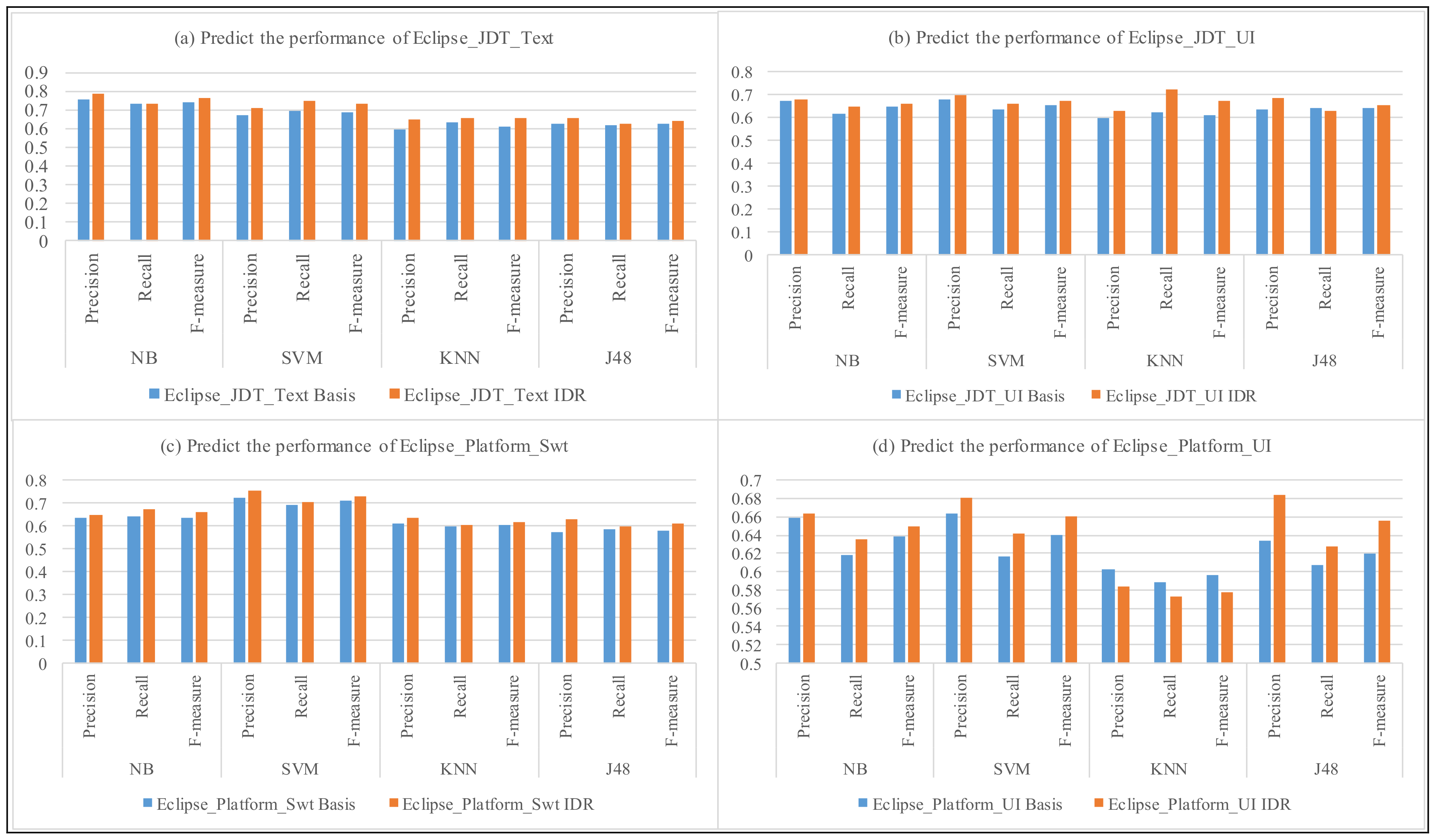

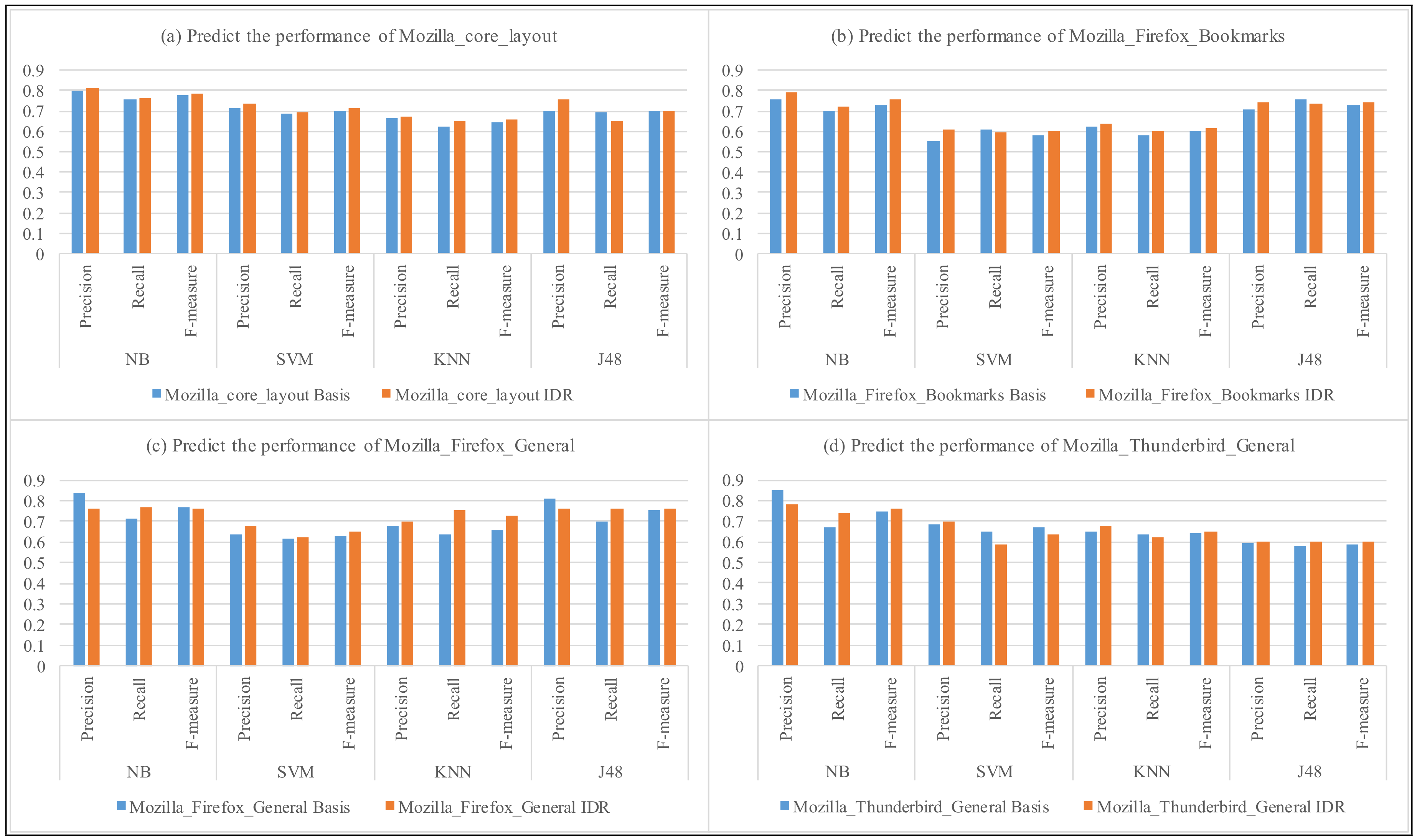

In Figure 3 and Figure 4, we compare the precision and recall obtained with each of the selected components from Eclipse and Mozilla when predicting the severity of Android bug reports. The vertical axis represents the precision and recall of predicting the severity of Android bug reports by the four classifiers and by the four classifiers with our approach (IDR). The horizontal axis represents the results of the four classifiers (Basic) and of the four classifiers with our approach (IDR), respectively.

As shown in Figure 3 and Figure 4, the precision and recall obtained with the Mozilla and Eclipse components when predicting the severity of Android bug reports are similar: for both the four classifiers and the four classifiers with our approach, the values vary between 0.58 and 0.85. For example, for the Layout component of the Core product in Figure 4a, the precision and recall of NB classification when predicting the severity of Android test reports are 0.801 and 0.755, respectively, and the precision and recall of NB classification with our approach (IDR + NB) are 0.812 and 0.763, respectively. In addition, the F-measure of NB classification for Eclipse to predict the severity of Android test reports is 0.777, and the F-measure of NB classification with our approach (IDR + NB) for Eclipse to predict the severity of Android bug reports is 0.787. The average F-measure of the NB, SVM, KNN, and J48 classifiers when predicting the severity of Android bug reports is 0.712, 0.661, 0.621, and 0.654, respectively, and the average F-measure of the four classifiers with our approach is 0.725, 0.674, 0.648, and 0.671, respectively.
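The relationship between these measures can be checked with a short Python sketch. It assumes that the F-measure is the balanced harmonic mean of precision and recall, which this section does not state explicitly; under that assumption, the F-measure values 0.777 and 0.787 reported above are consistent with the precision and recall quoted for the Layout component. The function name `f_measure` is hypothetical.

```python
def f_measure(precision, recall):
    """Balanced F-measure: the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall quoted in the text for the Layout component.
nb = f_measure(0.801, 0.755)
idr_nb = f_measure(0.812, 0.763)

print(round(nb, 3), round(idr_nb, 3))  # 0.777 0.787
```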

These results show that a classifier with our approach is more effective than the standard classifier in most cases. Moreover, the average performance of each of the four classifiers with our approach is higher than that of the corresponding standard classifier. The results show that our approach can reduce the noise in the bug reports and, consequently, enhance the training data and model quality.

Accuracy is generally calculated as the percentage of bug reports in the evaluation set that are correctly classified. Similarly, precision and recall are widely used as evaluation measures.

However, these measures are not suitable for data with an unbalanced category distribution because of the dominating effect of the major category. Furthermore, most classifiers also produce probability estimates for their classifications. These estimates contain useful evaluation information but are ignored when using the standard accuracy, precision, and recall measures [18].
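The dominating effect of the major category can be illustrated with a small Python sketch. The data are hypothetical (one severe report among ten), and the function names are assumptions introduced only for this example: a classifier that always predicts "non-severe" reaches high accuracy while never finding a severe report.

```python
def confusion(y_true, y_pred, positive="severe"):
    """Count true/false positives and negatives for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return tp, fp, fn, tn


def metrics(y_true, y_pred, positive="severe"):
    """Return (accuracy, precision, recall); undefined ratios are reported as 0.0."""
    tp, fp, fn, tn = confusion(y_true, y_pred, positive)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


# Hypothetical unbalanced evaluation set: 1 severe report, 9 non-severe.
y_true = ["severe"] + ["non-severe"] * 9
# A trivial classifier that always predicts the major category.
y_pred = ["non-severe"] * 10

accuracy, precision, recall = metrics(y_true, y_pred)
print(accuracy, precision, recall)  # 0.9 0.0 0.0
```

An accuracy of 0.9 here says nothing about the ability to detect severe reports, which is why a threshold-independent measure is also considered.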

The Receiver Operating Characteristic (ROC) curve was used as an evaluation method because it is a better way not only of evaluating classifier accuracy but also of comparing different classification algorithms [19]. However, comparing curves visually can be cumbersome, especially when the curves are close together. Therefore, the area beneath the ROC curve is calculated, which serves as a single number expressing the accuracy. If the Area Under Curve (AUC) is close to 0.5, the classifier is practically random, whereas a value close to 1.0 means that the classifier makes practically perfect predictions. This number allows more rational discussions when comparing the accuracy of different classifiers [20].
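As a minimal sketch of how such a number can be obtained, the AUC can be computed directly from the probability estimates as the fraction of (severe, non-severe) report pairs in which the severe report receives the higher score, with ties counted as one half. This pairwise formulation is equivalent to the area under the ROC curve; the scores and labels below are hypothetical, and the function name `auc` is an assumption.

```python
def auc(scores, labels, positive="severe"):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for s, l in zip(scores, labels) if l == positive]
    neg = [s for s, l in zip(scores, labels) if l != positive]
    if not pos or not neg:
        raise ValueError("need at least one report of each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


# Hypothetical probability estimates that a report is severe.
labels = ["severe", "severe", "non-severe", "severe", "non-severe", "non-severe"]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

value = auc(scores, labels)
print(round(value, 3))  # 0.889

# A classifier that gives every report the same score is no better than random.
random_like = auc([0.5, 0.5, 0.5, 0.5], ["severe", "non-severe", "severe", "non-severe"])
print(random_like)  # 0.5
```

The quadratic pairwise loop is chosen for clarity; for large evaluation sets a rank-based computation would be preferable.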

The same conclusions can also be drawn from the other selected cases based on an analysis of the Area Under Curve measures in Table 5, where the best results are highlighted in bold. These results show that the NB classifier with our approach (IDR + NB) is the most accurate at predicting the severity of Android test reports.

In addition, Table 5 shows that the AUC values of IDR + NB using the Eclipse components to predict the severity of Android test reports are 0.758, 0.739, 0.647, and 0.66, respectively, and the average AUC over the Eclipse components is approximately 0.701. The AUC values of IDR + NB using the Mozilla components to predict the severity of Android test reports are 0.778, 0.725, 0.647, and 0.621, respectively. We notice an improvement with the Mozilla components, where we observe an AUC of approximately 0.72. In this case, our approach performs around 22 percentage points better than randomly guessing the severity of each bug, which corresponds to an AUC of 0.5.

Therefore, we conclude that our approach can obtain training data from bug repositories and use knowledge transfer to predict the severity of Android test reports from the information provided in the reports, particularly the one-line summary, when the NB classifier is combined with our approach (IDR + NB). The accuracy of the approach is reasonable, although it depends on the case.

7. Conclusions and Future Work

A critical item of a bug report is the so-called “severity”, and consequently tool support for the person reporting the bug, in the form of a recommender or verification system, is desirable. In this paper, we propose a Knowledge Transfer Classification (KTC) approach based on text mining and machine learning methods. Our approach obtains training data from bug repositories and uses knowledge transfer to predict the severity of Android test reports. Keywords are extracted from the test reports using NLP techniques. Thus, KV may contain a large number of keyword dimensions and considerable noise. To solve this problem, we propose an Importance Degree Reduction (IDR) strategy based on rough set theory for the extraction of characteristic keywords to obtain more accurate reduction results. Experimental results indicate that the proposed KTC method can be used to accurately predict the severity of Android test reports.

This paper compares four well-known document classification algorithms (namely, Naïve Bayes (NB), K-Nearest Neighbor (KNN), Decision Tree (J48), and Support Vector Machines (SVM)) to find out which algorithm is best suited for classifying Android bug reports into either a “severe” or a “non-severe” category. We found that, for the cases under investigation, the average accuracy of predicting Android bug report severity with the classifiers is 0.715, 0.716, 0.721, and 0.659, respectively. However, the average accuracy of predicting Android bug report severity with the classifiers combined with our approach (IDR) is 0.735, 0.731, 0.734, and 0.663, respectively. The results show that our approach (IDR) can be beneficial for predicting the severity of Android bug reports. Therefore, the NB classifier with our approach (IDR + NB) is the most suitable for predicting Android bug report severity.

Future work is aimed at including additional sources of data to support our predictions. Information from the (longer) description will be more thoroughly preprocessed so that it can be used for the predictions. We will also investigate other cases in which fewer bug reports are submitted but the bug reports are reviewed carefully. In addition, we may use a topic model to label the severity of Android bug reports.