3D Point Clouds in Archaeology: Advances in Acquisition, Processing and Knowledge Integration Applied to Quasi-Planar Objects

Abstract

1. Introduction

2. Digital Reconstruction in Archaeology: A Review

2.1. Archaeological Field Work

2.2. Integration of 3D Data

3. Materials and Methods

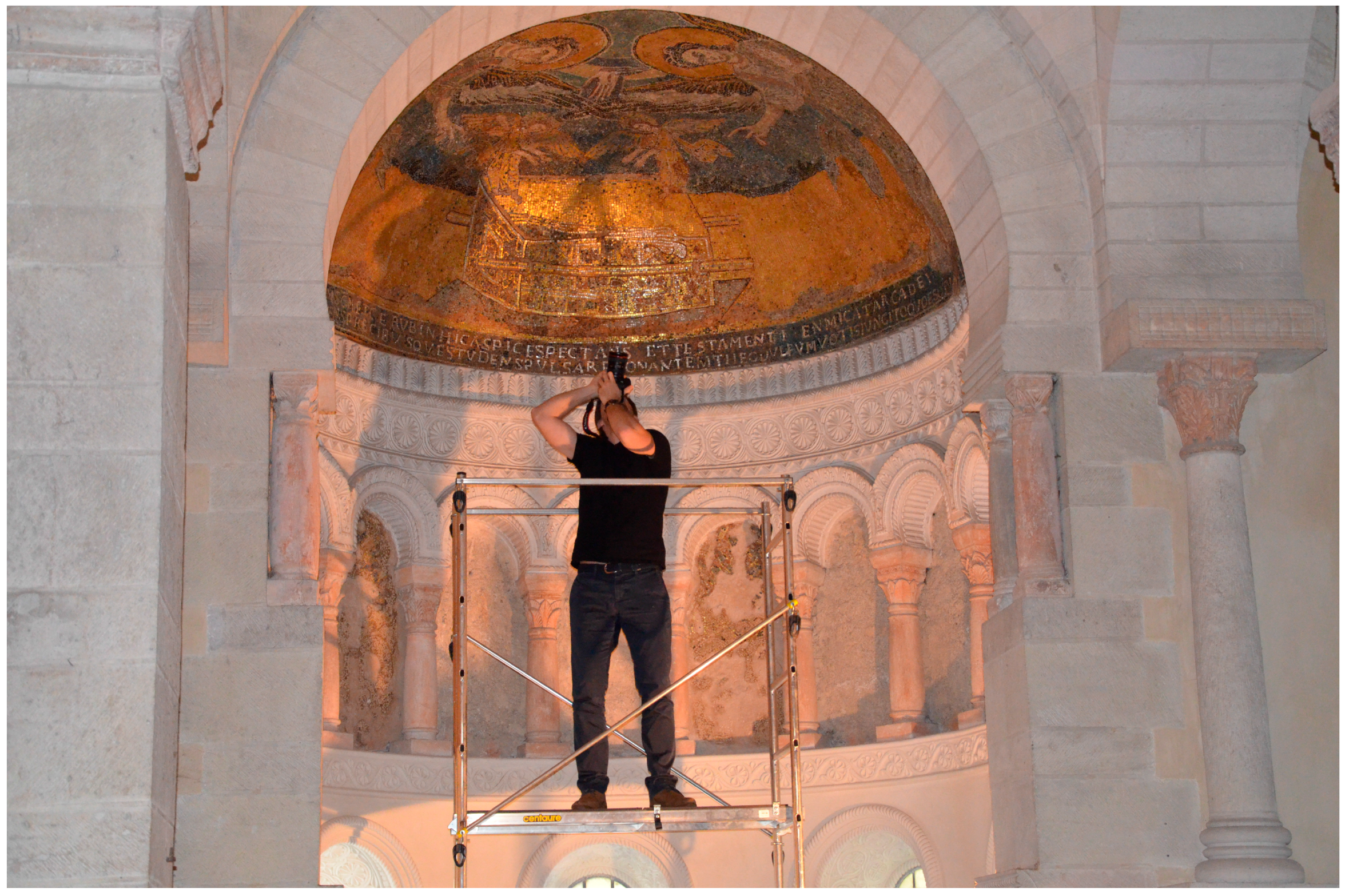

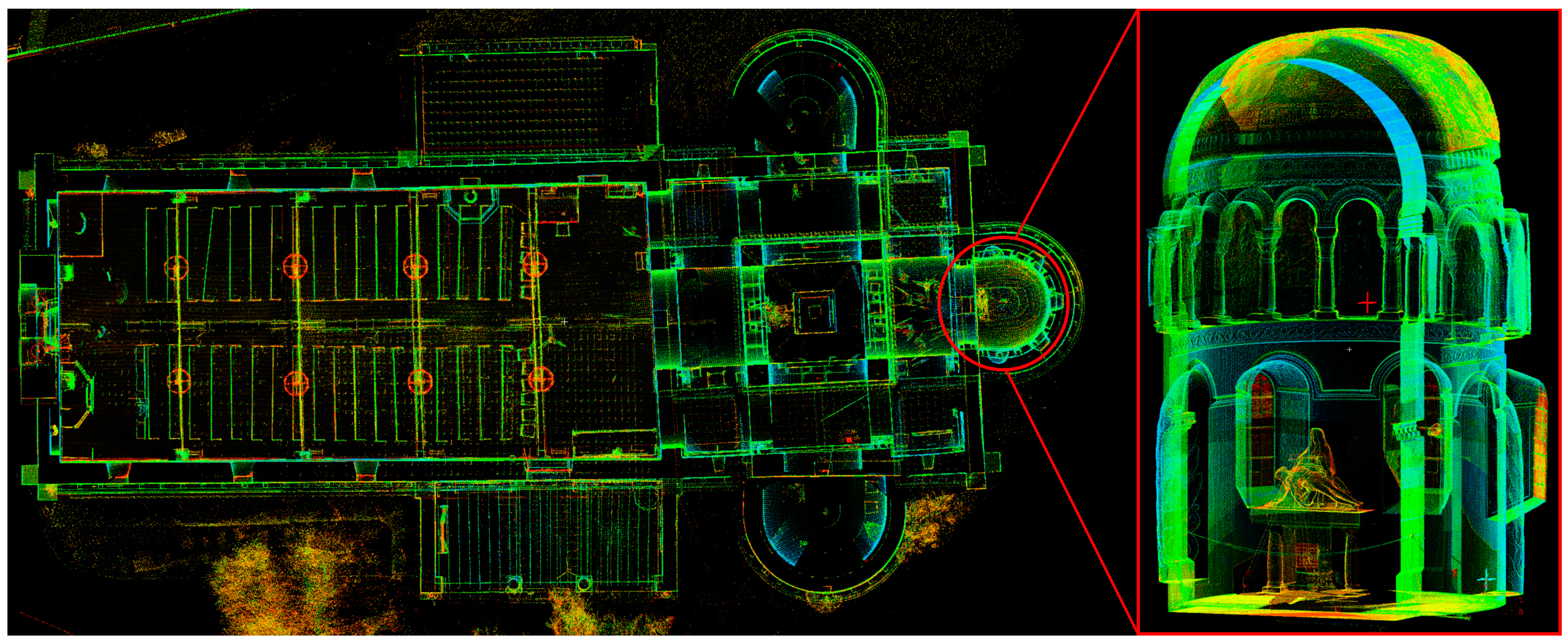

3.1. Point Cloud Data Acquisition and Pre-Processing

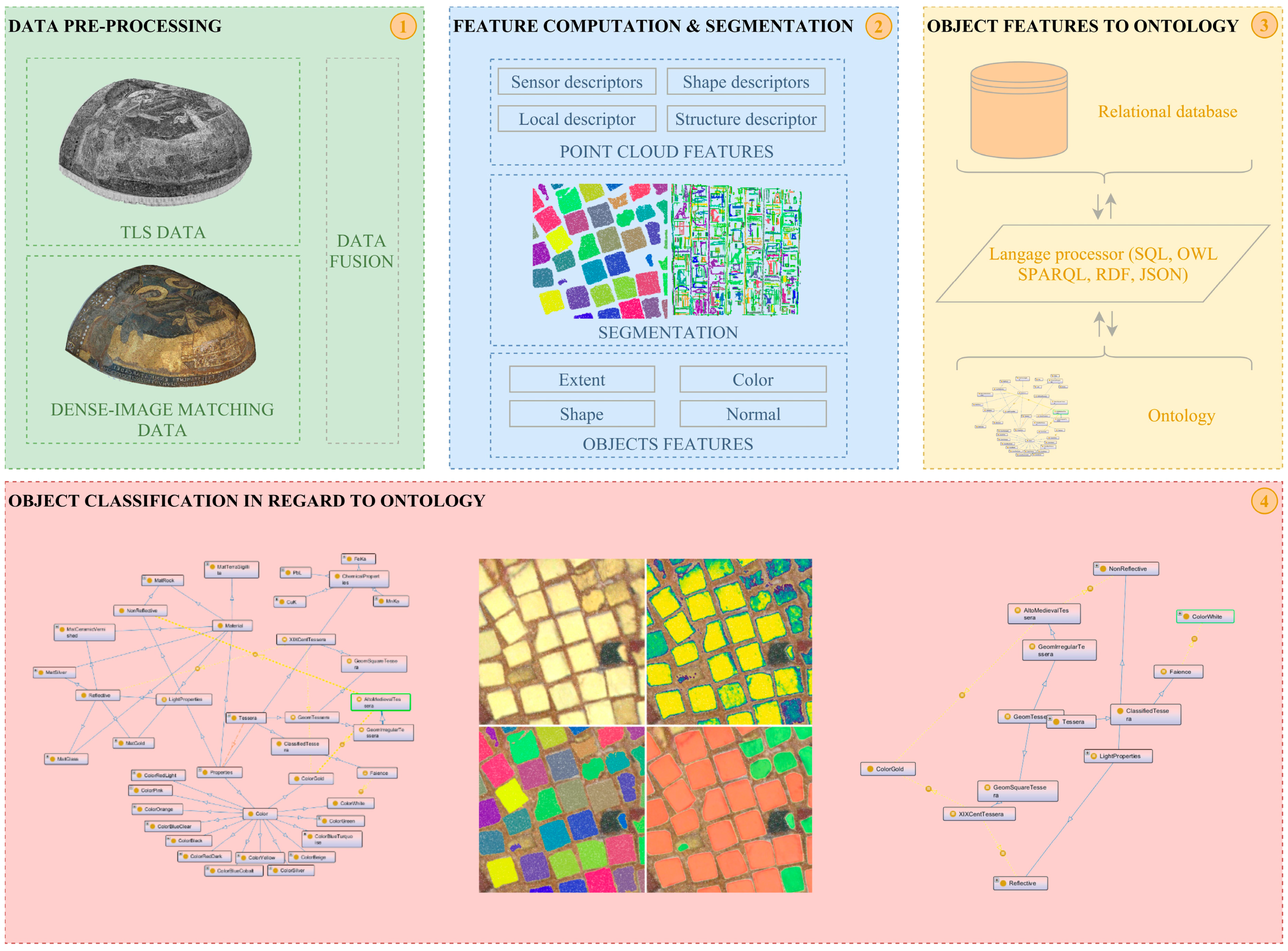

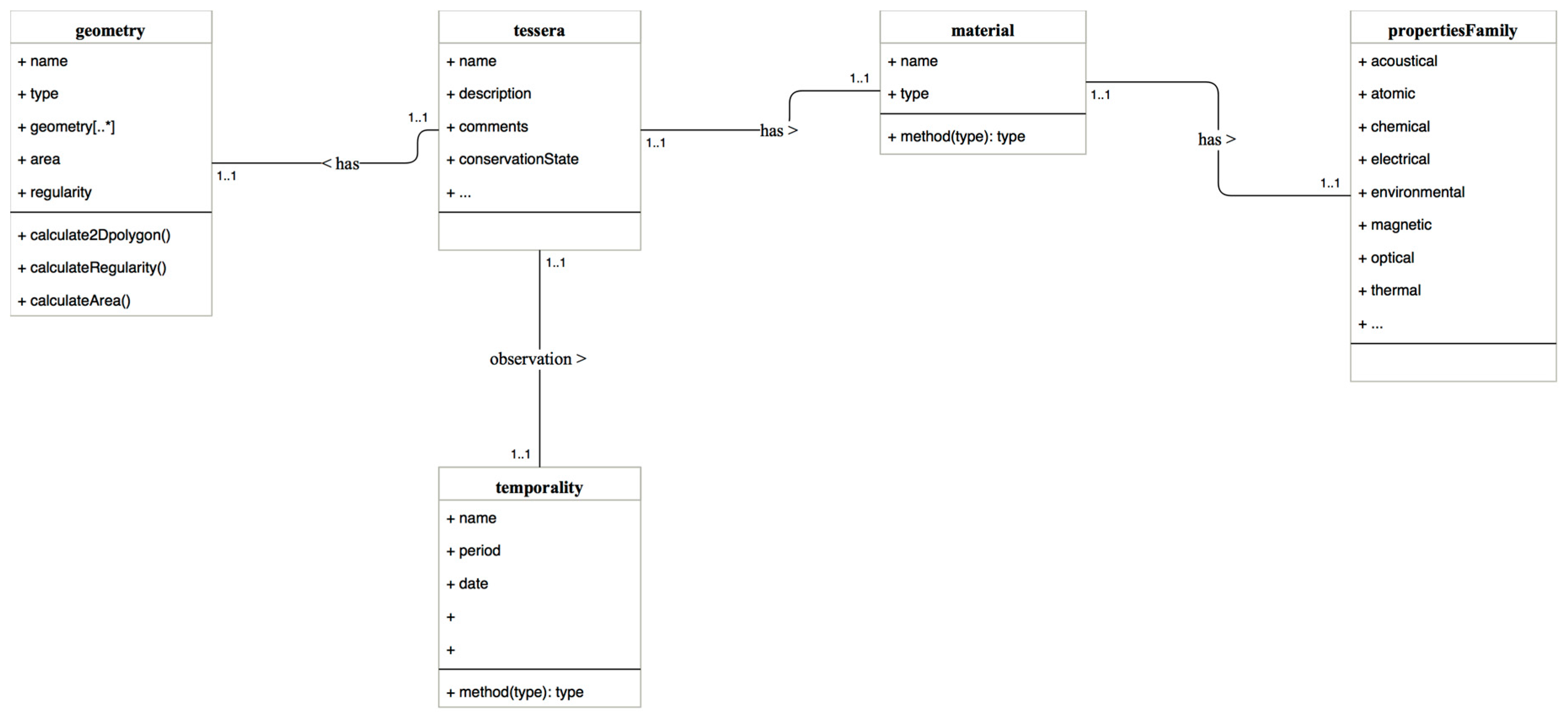

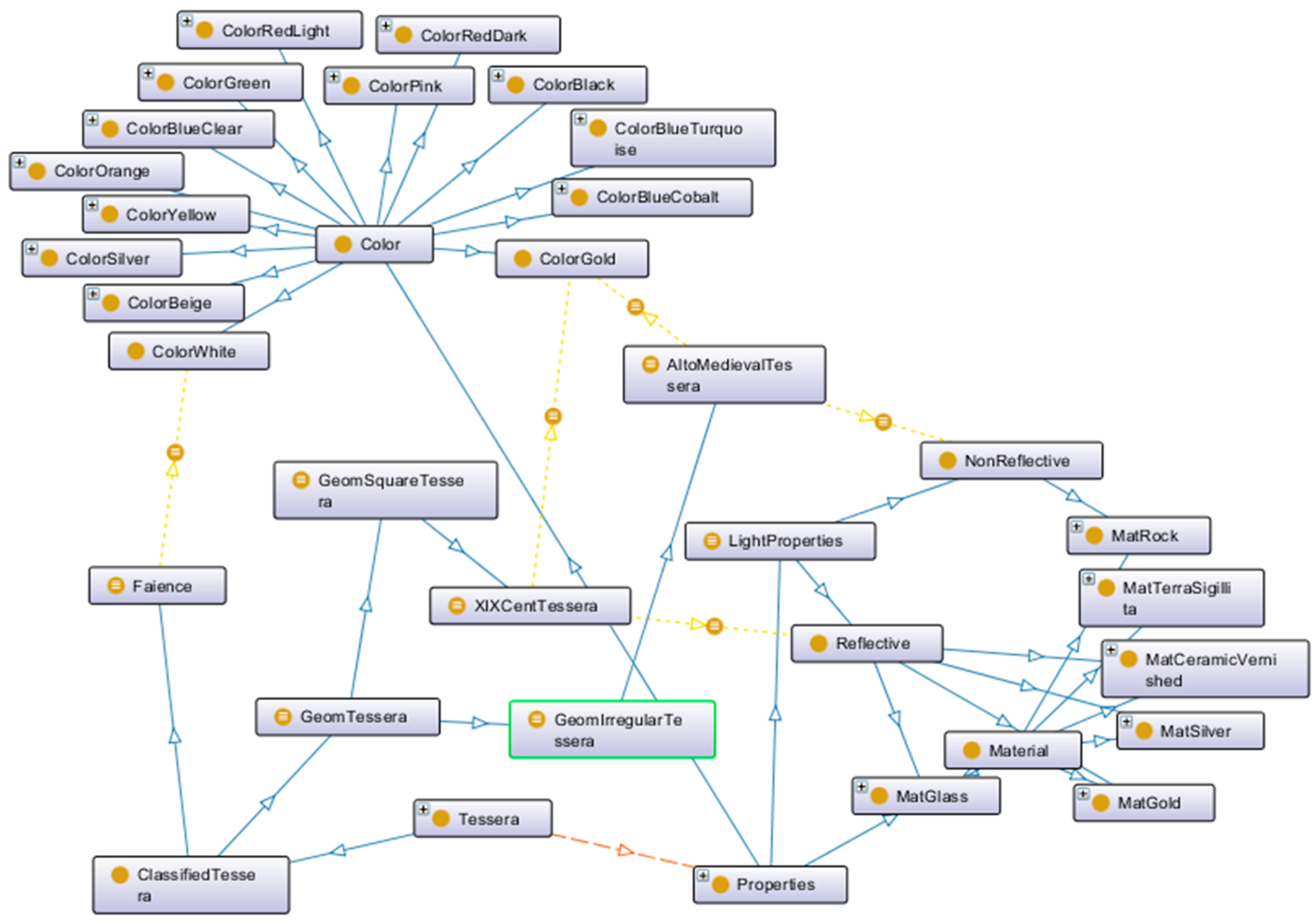

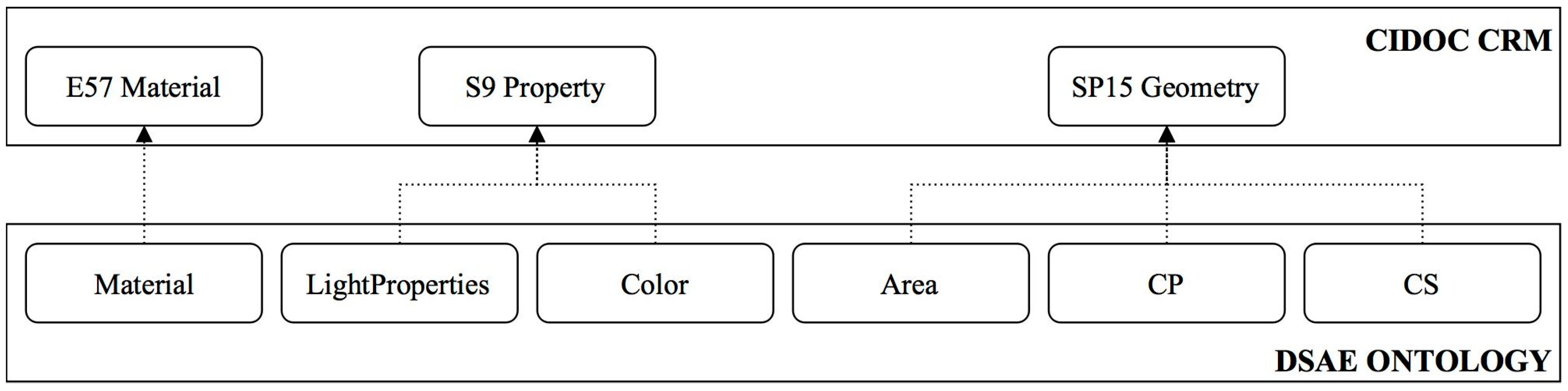

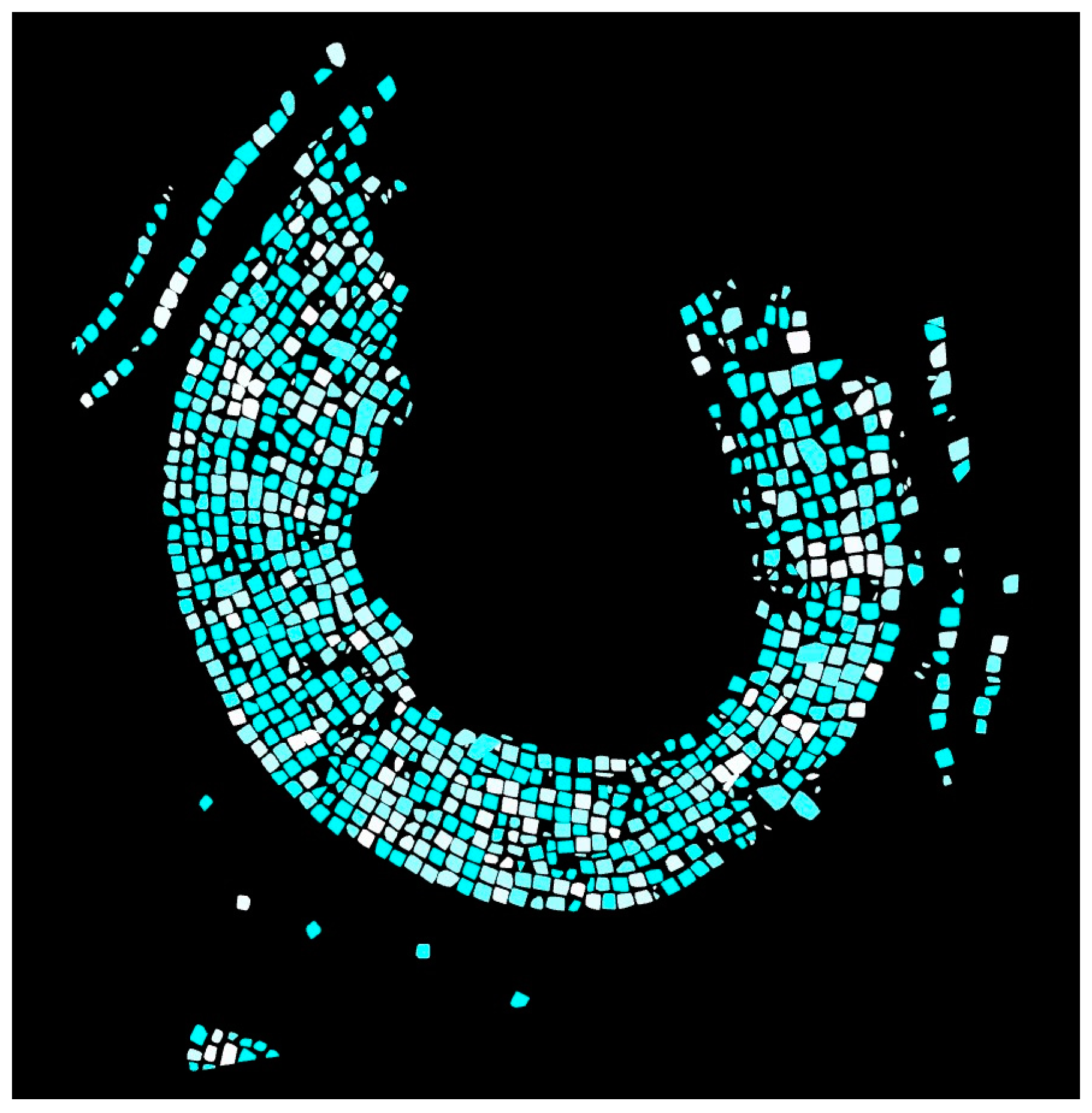

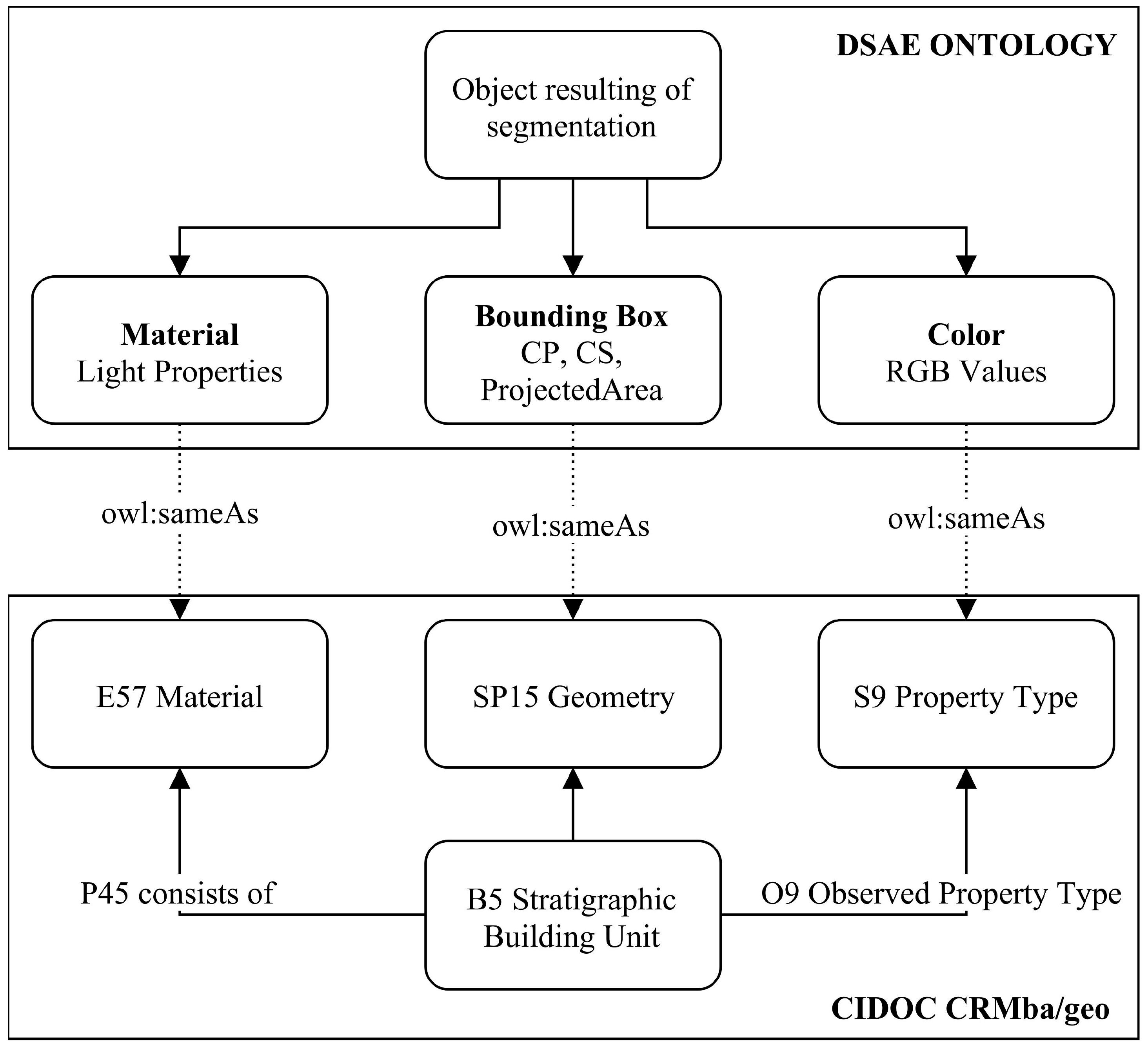

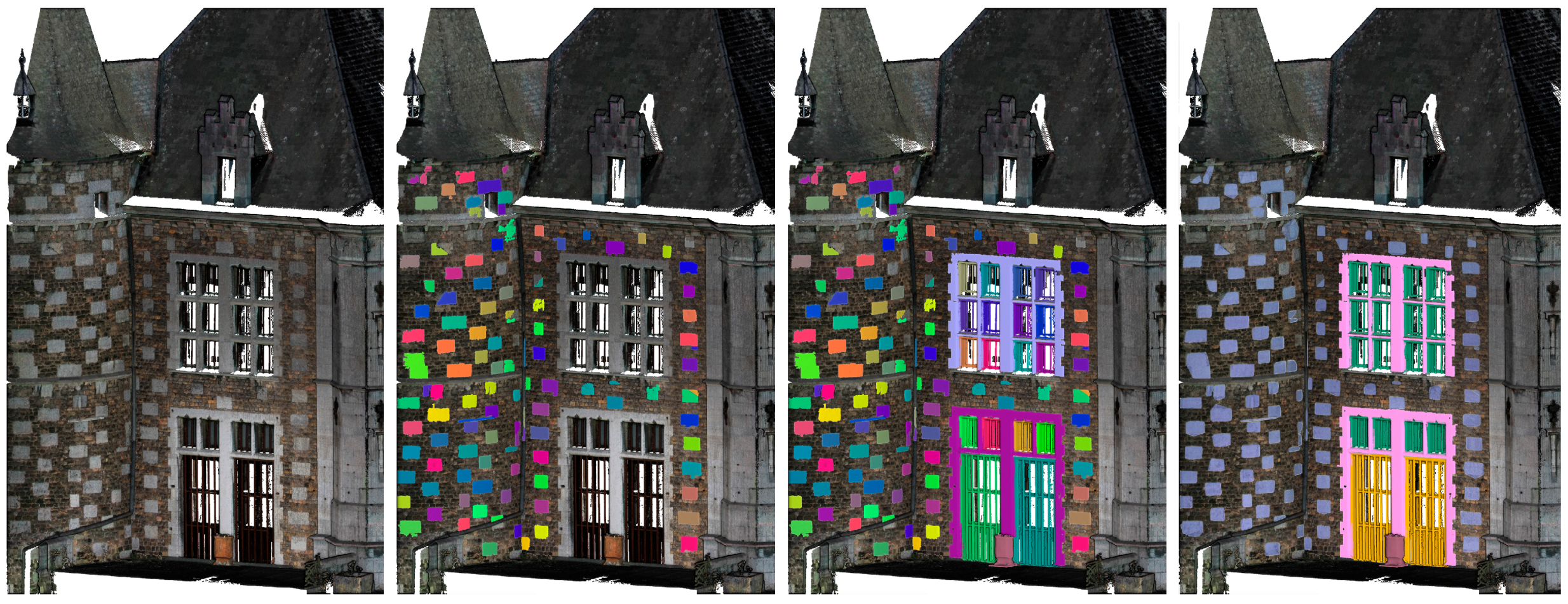

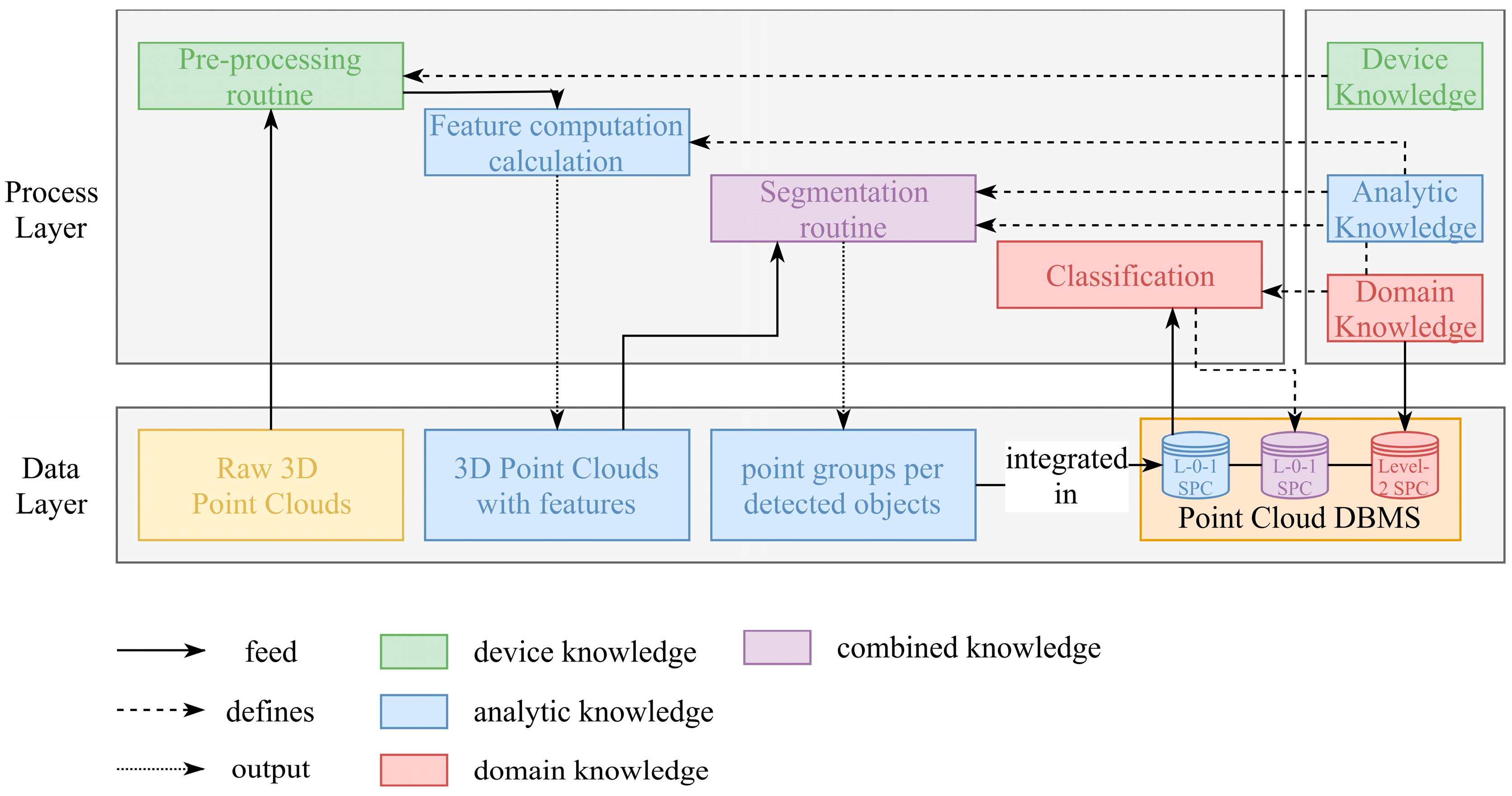

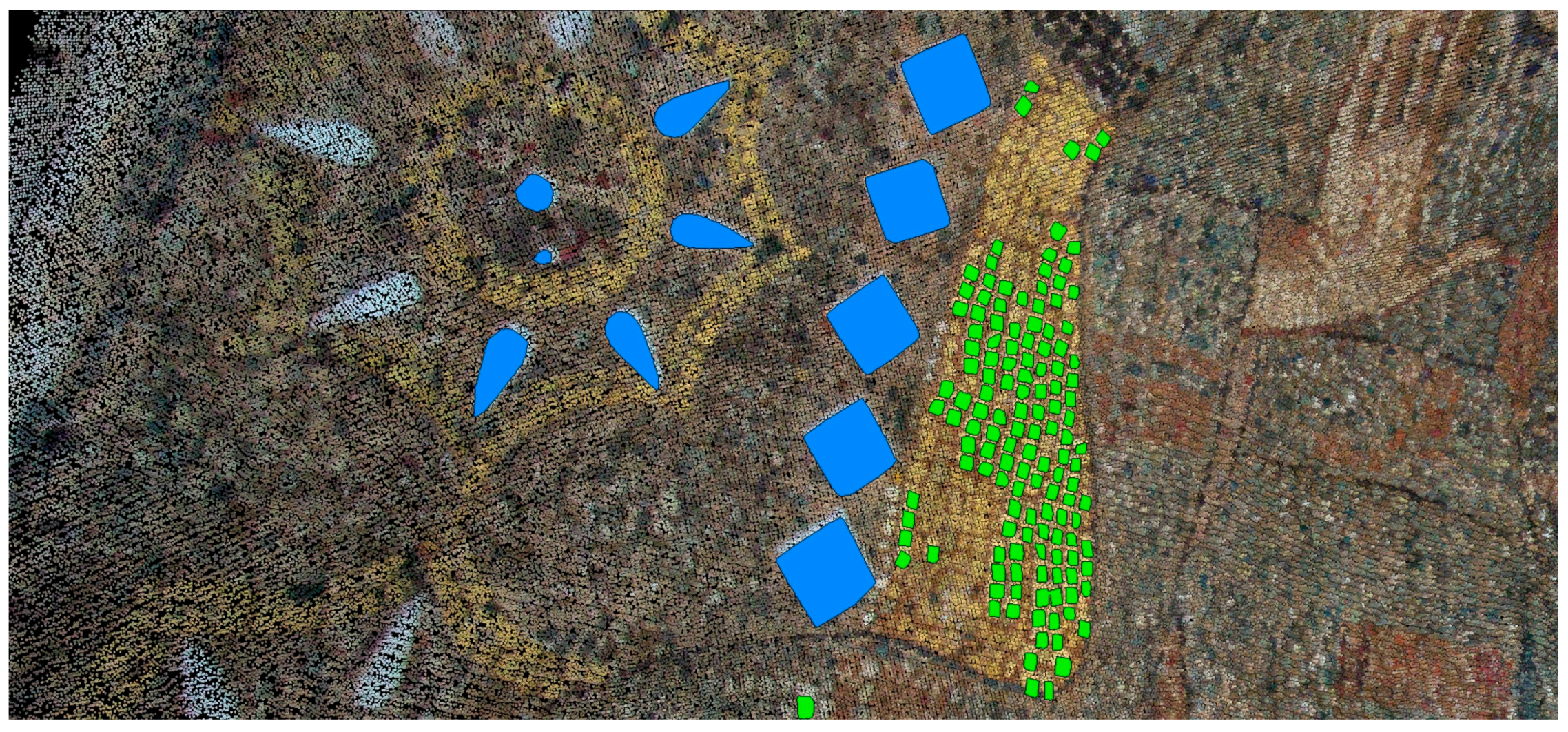

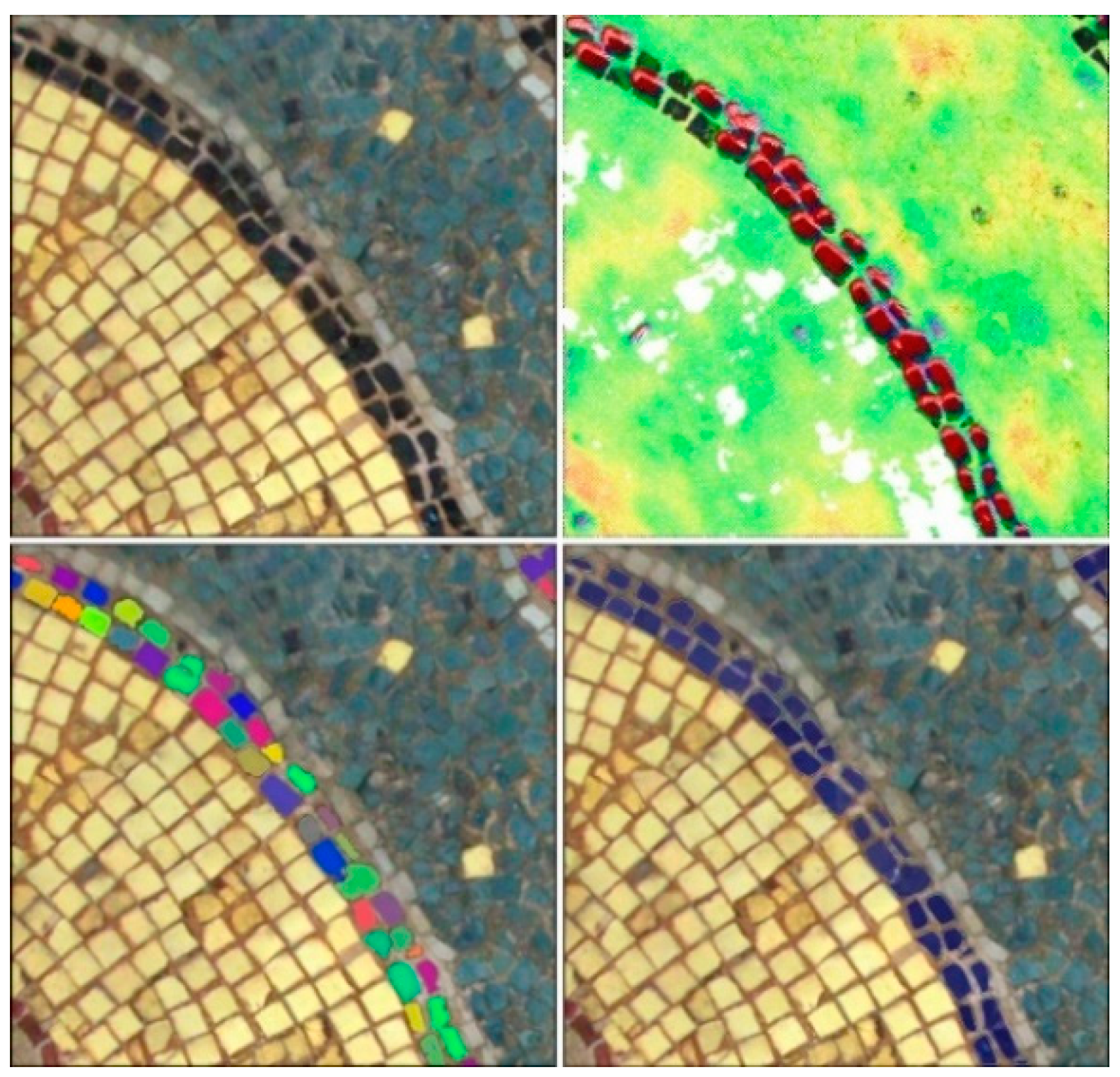

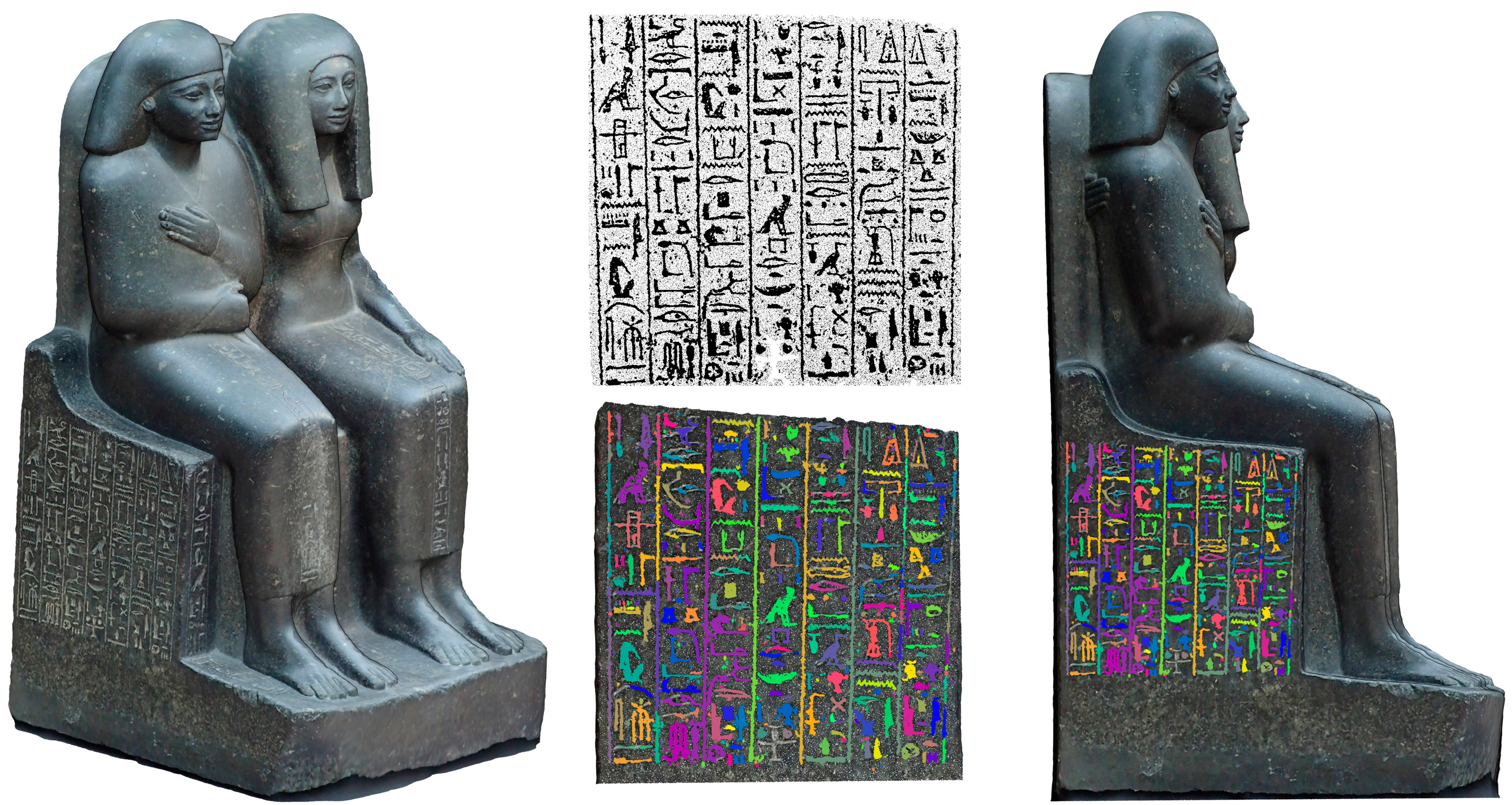

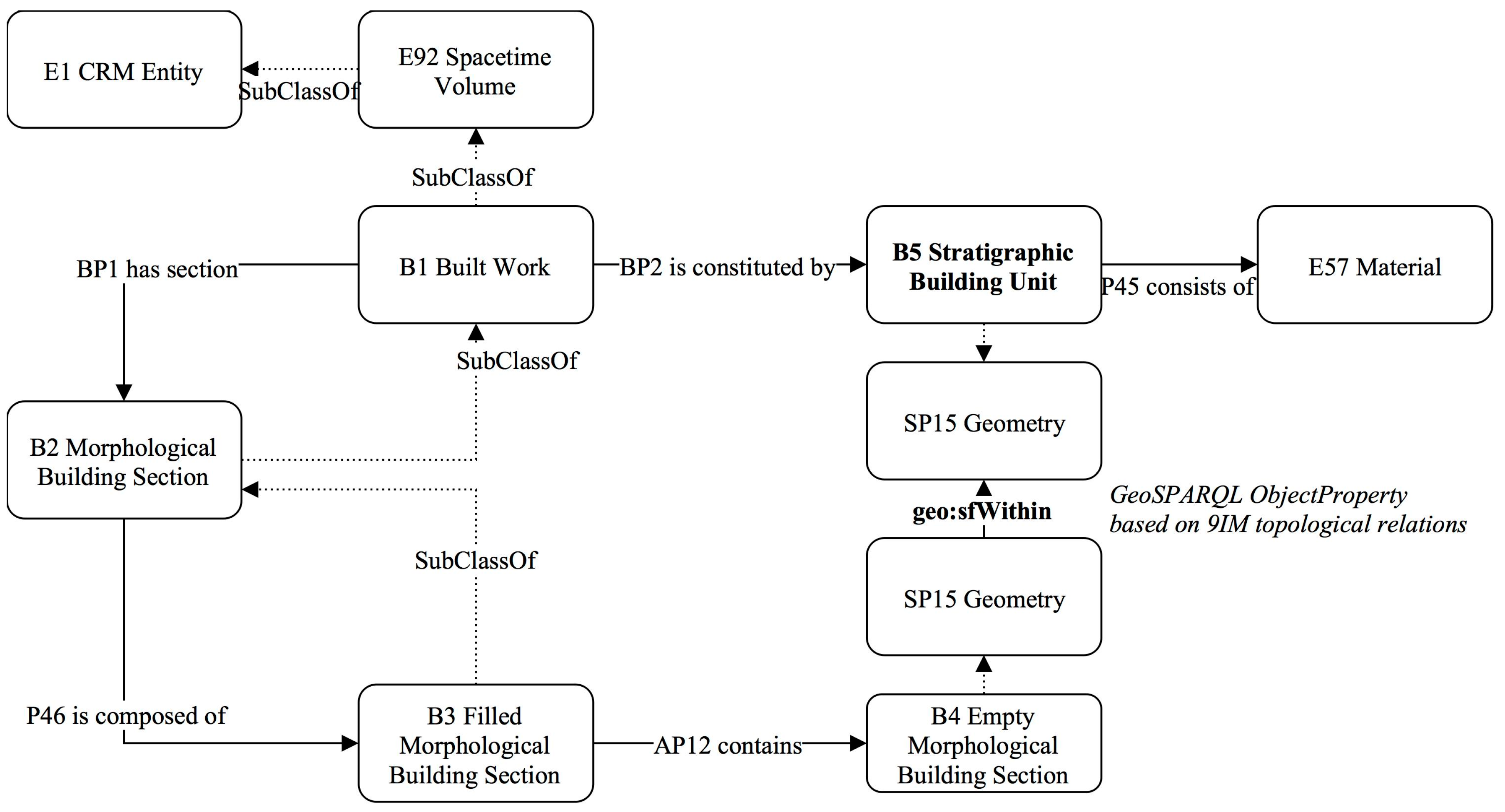

3.2. Knowledge-Based Detection and Classification

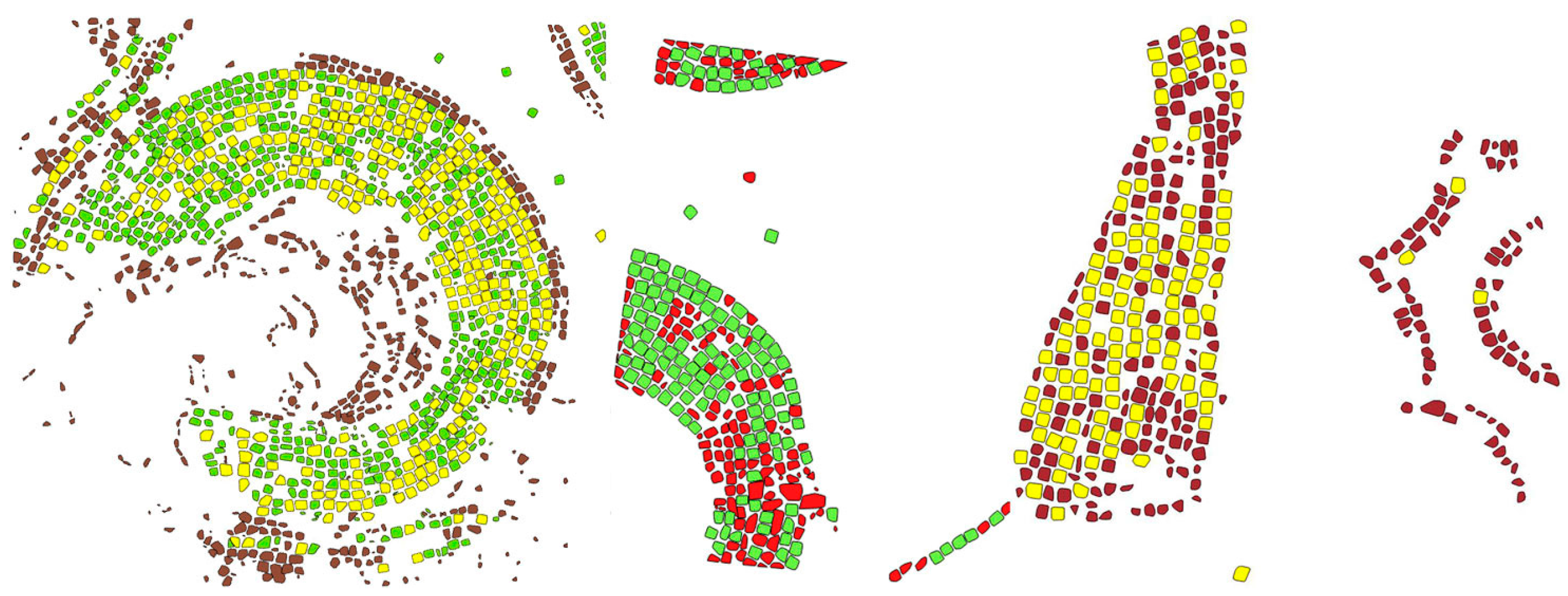

4. Results

5. Discussion

6. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest


| Type | Point Features | Range | Explanation |
|---|---|---|---|
| Sensor desc. | X, Y, Z | Bounding-box | Limits the study of points to the zone of interest |
| | R, G, B ¹ | Material Colour | Limited to the colour range that domain knowledge specifies |
| | I | | Clear noise and weight low intensity values for signal representativity |
| Shape desc. | RANSAC ² | - | Used to provide estimator of planarity |
| Local desc. | Nx, Ny, Nz ³ | [−1, 1] | Normalized normal to provide insight on point and object orientation |
| | Density ⁴ | - | Used to provide insights on noise level and point grouping into one object |
| | Curvature | [0, 1] | Used to provide insight for edge extraction and break lines |
| | KB ⁵ Distance map | | Amplitude of the spatial error between the raw measurements and the final dataset |
| Structure desc. ⁶ | Voxels | - | Used to infer initial spatial connectivity |
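The two sensor-level constraints in the table above (a bounding box on X, Y, Z and a colour range on R, G, B) can be illustrated with a minimal sketch. The point layout, the function name `crop_points` and every numeric bound below are hypothetical values chosen for illustration; the source does not give the actual ranges.

```python
from typing import List, Sequence, Tuple

# One point as (x, y, z, r, g, b). This layout is an assumption of the sketch.
Point = Tuple[float, float, float, int, int, int]


def crop_points(
    points: Sequence[Point],
    box_min: Sequence[float],
    box_max: Sequence[float],
    rgb_min: Sequence[int],
    rgb_max: Sequence[int],
) -> List[Point]:
    """Keep points inside an axis-aligned box and inside a colour range."""
    kept = []
    for p in points:
        xyz, rgb = p[:3], p[3:]
        inside = all(lo <= v <= hi for v, lo, hi in zip(xyz, box_min, box_max))
        in_colour = all(lo <= c <= hi for c, lo, hi in zip(rgb, rgb_min, rgb_max))
        if inside and in_colour:
            kept.append(p)
    return kept


# Hypothetical sample data and bounds, not taken from the source.
sample = [
    (0.2, 0.3, 0.1, 200, 170, 60),  # inside the box, colour within range
    (5.0, 0.3, 0.1, 200, 170, 60),  # outside the box
    (0.4, 0.1, 0.2, 20, 20, 200),   # inside the box, colour out of range
]
gold_like = crop_points(sample, (0, 0, 0), (1, 1, 1), (150, 120, 0), (255, 220, 120))
```

Both tests are inclusive at the bounds; a real pipeline would take the box from the zone of interest and the colour range from domain knowledge, as the table states.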

| Type | Point Features | Range | Explanation |
|---|---|---|---|
| Sensor generalization | Xb, Yb, Zb | barycentre | Coordinates of the barycentre |
| | Rg, Gg, Bg ¹ | - | Material unique colour from statistical generalization |
| | I | - | Intensity unique value from statistical generalization |
| Shape desc. | CV ² | - | Convex Hull, used to provide a 2D shape generalization of the underlying points |
| | Area | - | Area of the 2D shape, used as a reference for knowledge-based comparison |
| | CS, CP | [0, 1] | Used to provide insight on the regularity of the shape envelope |
| Local generalization | Nx, Ny, Nz ³ | [−1, 1] | Normalized normal of the 2D shape to provide insight on the object orientation |
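Three of the segment-level features in the table above (barycentre, convex hull and hull area) can be sketched in a few lines. This is an illustration under stated assumptions, not the authors' implementation: the points are assumed to be already projected onto the segment's best-fit plane and expressed as 2D coordinates, and the hull algorithm (Andrew's monotone chain) is a choice made here, since the source does not name one. CS and CP are omitted because this section does not define them.

```python
from typing import List, Sequence, Tuple

Point2D = Tuple[float, float]


def barycentre(points: Sequence[Point2D]) -> Point2D:
    """Arithmetic mean of the point coordinates."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def convex_hull(points: Sequence[Point2D]) -> List[Point2D]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o: Point2D, a: Point2D, b: Point2D) -> float:
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower: List[Point2D] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point2D] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]


def polygon_area(polygon: Sequence[Point2D]) -> float:
    """Shoelace formula for a simple polygon."""
    twice_area = 0.0
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


# Hypothetical projected points: a 2 x 2 square with one interior point.
segment = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0), (1.0, 1.0)]
hull = convex_hull(segment)
area = polygon_area(hull)
centre = barycentre(segment)
```

The interior point does not appear in the hull, so the area is that of the enclosing square; the barycentre, by contrast, is computed from all points.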

| RDF Triple Store | Effect |
|---|---|
| ((CS some xsd:double[> “1.1”^^xsd:double]) or (CS some xsd:double[< “1.05”^^xsd:double])) and (CP some xsd:double[> “4.0E-4”^^xsd:double])* | Tessera is irregular (1) |
| (CP some xsd:double[<= “4.0E-4”^^xsd:double]) and (CS some xsd:double[>= “1.05”^^xsd:double, <= “1.1”^^xsd:double]) | Tessera is square |
| (1) and (hasProperty some ColorGold) and (hasProperty some NonReflective) and (Area some xsd:double[<= “1.2”^^xsd:double]) | Tessera is alto-medieval |
| (hasProperty some ColorWhite) and (Area some xsd:double[>= “16.0”^^xsd:double, <= “24.0”^^xsd:double]) | Material is Faience |
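To make the thresholds in the table above easier to read, the four class expressions can be transcribed into plain Python predicates. This is only a closed-world paraphrase for illustration: the source expresses the rules as OWL class expressions evaluated by a reasoner, and the `Tessera` record and function names below are hypothetical. The threshold values and property names are copied from the table.

```python
from dataclasses import dataclass, field
from typing import Set

# Thresholds copied from the class expressions in the table.
CS_MIN, CS_MAX = 1.05, 1.1
CP_MAX = 4.0e-4


@dataclass
class Tessera:
    """Hypothetical record holding the features the rules refer to."""
    cs: float
    cp: float
    area: float
    properties: Set[str] = field(default_factory=set)


def is_irregular(t: Tessera) -> bool:
    return (t.cs > CS_MAX or t.cs < CS_MIN) and t.cp > CP_MAX


def is_square(t: Tessera) -> bool:
    return t.cp <= CP_MAX and CS_MIN <= t.cs <= CS_MAX


def is_alto_medieval(t: Tessera) -> bool:
    # Rule (1) in the table is the "irregular" definition.
    return (
        is_irregular(t)
        and {"ColorGold", "NonReflective"} <= t.properties
        and t.area <= 1.2
    )


def is_faience(t: Tessera) -> bool:
    return "ColorWhite" in t.properties and 16.0 <= t.area <= 24.0


# Hypothetical feature values, chosen only to exercise each rule.
gold = Tessera(cs=1.3, cp=9.0e-4, area=0.9, properties={"ColorGold", "NonReflective"})
white = Tessera(cs=1.07, cp=2.0e-4, area=20.0, properties={"ColorWhite"})
```

As written in the table, the irregular and square definitions are not complementary: a tessera with CS inside [1.05, 1.1] but CP above 4.0E-4 satisfies neither, so a caller should allow for an unclassified outcome.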

| Tesserae | Segmentation, number of points: ground truth | Segmentation, number of points: Tesserae C. | Accuracy |
|---|---|---|---|
| Gold | | | |
| Sample No. 1 | 10,891 | 10,801 | 99% |
| Sample No. 2 | 10,123 | 11,048 | 91% |
| Sample No. 3 | 10,272 | 10,648 | 96% |
| Sample No. 4 | 11,778 | 12,440 | 94% |
| Faience | | | |
| Sample No. 1 | 27,204 | 28,570 | 95% |
| Sample No. 2 | 23,264 | 22,978 | 99% |
| Sample No. 3 | 23,851 | 24,440 | 98% |
| Sample No. 4 | 22,238 | 22,985 | 97% |
| Silver | | | |
| Sample No. 1 | 1364 | 1373 | 99% |
| Sample No. 2 | 876 | 931 | 94% |
| Sample No. 3 | 3783 | 3312 | 88% |
| Sample No. 4 | 1137 | 1098 | 97% |
| C. Glass | | | |
| Sample No. 1 | 1139 | 1283 | 87% |
| Sample No. 2 | 936 | 1029 | 90% |
| Sample No. 3 | 821 | 736 | 90% |
| Sample No. 4 | 598 | 625 | 95% |
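The accuracy column above is not accompanied by a formula in this section. The figures are, however, consistent with one minus the relative difference in point count between the segmented tessera and the ground truth, rounded to the nearest percent. The sketch below checks that reading against the table; the formula is an inference from the numbers, not a definition given by the source, and the function name is hypothetical.

```python
from typing import Dict, List, Tuple


def count_accuracy(ground_truth: int, segmented: int) -> float:
    """Assumed metric: 1 - |segmented - ground_truth| / ground_truth."""
    return 1.0 - abs(segmented - ground_truth) / ground_truth


# (ground truth points, segmented points, reported accuracy in percent),
# copied from the table.
table: Dict[str, List[Tuple[int, int, int]]] = {
    "Gold": [(10891, 10801, 99), (10123, 11048, 91), (10272, 10648, 96), (11778, 12440, 94)],
    "Faience": [(27204, 28570, 95), (23264, 22978, 99), (23851, 24440, 98), (22238, 22985, 97)],
    "Silver": [(1364, 1373, 99), (876, 931, 94), (3783, 3312, 88), (1137, 1098, 97)],
    "C. Glass": [(1139, 1283, 87), (936, 1029, 90), (821, 736, 90), (598, 625, 95)],
}

# Compare the recomputed, rounded value with the reported one for every row.
mismatches = [
    (material, gt, seg, reported)
    for material, rows in table.items()
    for gt, seg, reported in rows
    if round(100 * count_accuracy(gt, seg)) != reported
]
```

A point-count comparison of this kind does not show whether the same points were selected, only how close the totals are, so it should be read as a coarse indicator.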

| ID | Type | Tesserae (Nb) | Segmentation (Nb) | Segmentation (%) | Classification (Nb) | Classification (%) | Res. (Nb) |
|---|---|---|---|---|---|---|---|
| 1 | NG | 138 | 331 (NG + AG) | 88% | 131 | 98% | 7 |
| | AG | 239 | | | 196 | 99% | 43 |
| | FT | 11 | 11 | 100% | 11 | 100% | 0 |
| 2 | NG | 155 | 284 (NG + AG) | 91% | 128 | 93% | 27 |
| | AG | 158 | | | 139 | 95% | 19 |
| | ST | 269 | 249 | 93% | 216 | 87% | 53 |
| 3 | NG | 396 | 839 (NG + AG) | 89% | 297 | 86% | 99 |
| | AG | 549 | | | 471 | 95% | 78 |
| | CG | 695 | 494 | 71% | 486 | 98% | 209 |
| Total | | 2610 | 2208 | 85% | 2075 | 94% | 535 |

| Elements | Segmentation, number of points: ground truth | Segmentation, number of points: method | Accuracy |
|---|---|---|---|
| Calcareous Stones | | | |
| Sample No. 1 | 37,057 | 35,668 | 96% |
| Sample No. 2 | 30,610 | 27,100 | 88% |
| Sample No. 3 | 34,087 | 32,200 | 99% |
| Sample No. 4 | 35,197 | 30,459 | 86% |

| Language | RDF Triple Store | Effect |
|---|---|---|
| SPARQL | `PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> PREFIX npt: <http://www.geo.ulg.ac.be/nyspoux/> SELECT ?ind WHERE { ?ind rdf:type npt:AltoMedievalTessera } ORDER BY ?ind` | Returns all alto-medieval tesserae (with respect to the initial data input) |
| SQL | `SELECT name, area FROM worldObject WHERE ST_3DIntersects(geomWo::geometry, polygonZ::geometry);` | Returns all tesserae that lie in the region defined by a selection polygon, together with their area |
| SPARQL & SQL | `SELECT geomWo FROM worldObject WHERE ST_3DIntersects(geomWo::geometry, polygon2Z::geometry) AND area > 0.0001;` `PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> PREFIX npt: <http://www.geo.ulg.ac.be/nyspoux/> SELECT ?ind WHERE { ?ind rdf:type npt:XIXCentTessera } ORDER BY ?ind` | Returns all renovated tesserae in region 2 whose area is greater than 1 cm² |

| Language | Equivalent To Definition | Effect |
|---|---|---|
| OWL (Protégé) | (hasProperty some ColorLimestone) and (hasProperty some NonReflective) and (CP some xsd:double[<= “4.0E-4”^^xsd:double]) and (CS some xsd:double[>= “1.05”^^xsd:double, <= “9.0”^^xsd:double]) and (ProjectedArea some xsd:double[>= “0.05”^^xsd:double, <= “0.4”^^xsd:double]) | Defines an element as a BayFrame |
| OWL (Protégé) | (not (hasProperty some ColorLimestone)) and (sfWithin some BayFrame) and (BoundingBox some xsd:double[>= “2.9”^^xsd:double, <= “3.5”^^xsd:double]) | Defines an element as a DoorSection |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Poux, F.; Neuville, R.; Van Wersch, L.; Nys, G.-A.; Billen, R. 3D Point Clouds in Archaeology: Advances in Acquisition, Processing and Knowledge Integration Applied to Quasi-Planar Objects. Geosciences 2017, 7, 96. https://doi.org/10.3390/geosciences7040096
