CEPP: Perceiving the Emotional State of the User Based on Body Posture

Abstract

1. Introduction

2. Related Work

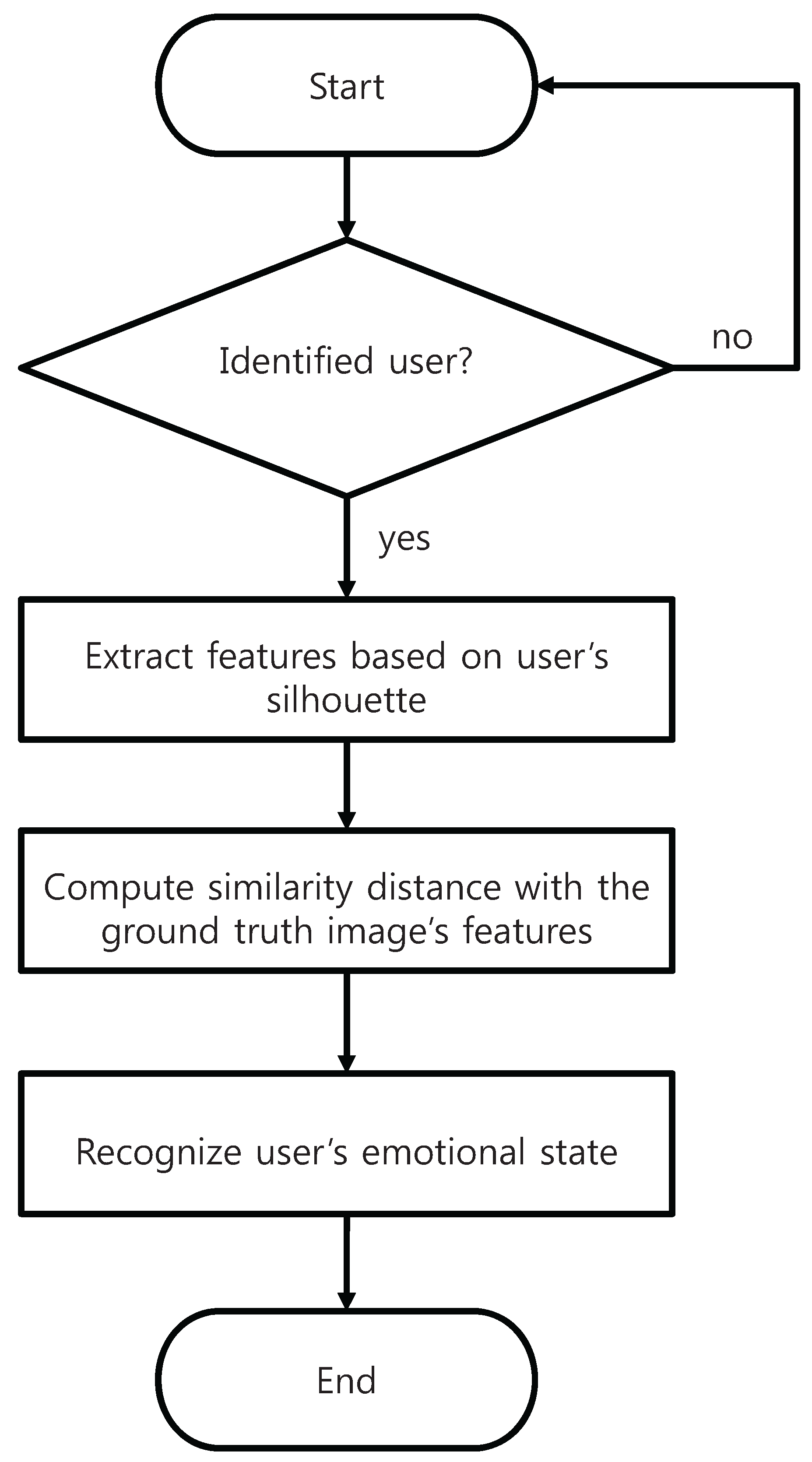

3. Overview of CEPP

3.1. Classifying Emotional State

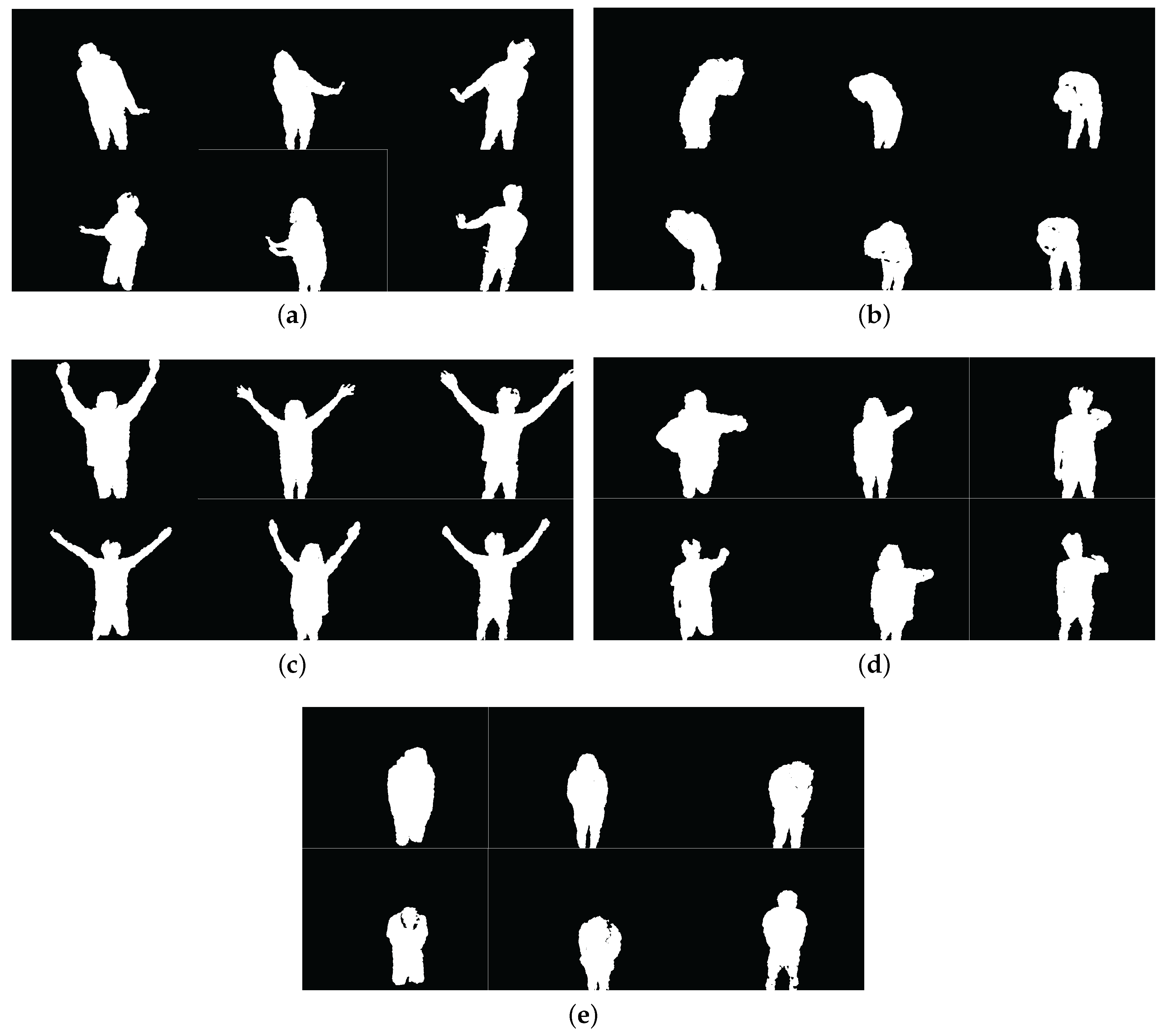

3.2. Recognizing the User’s Emotional State

Algorithm 1. Pseudocode of CEPP.

4. Performance Evaluation

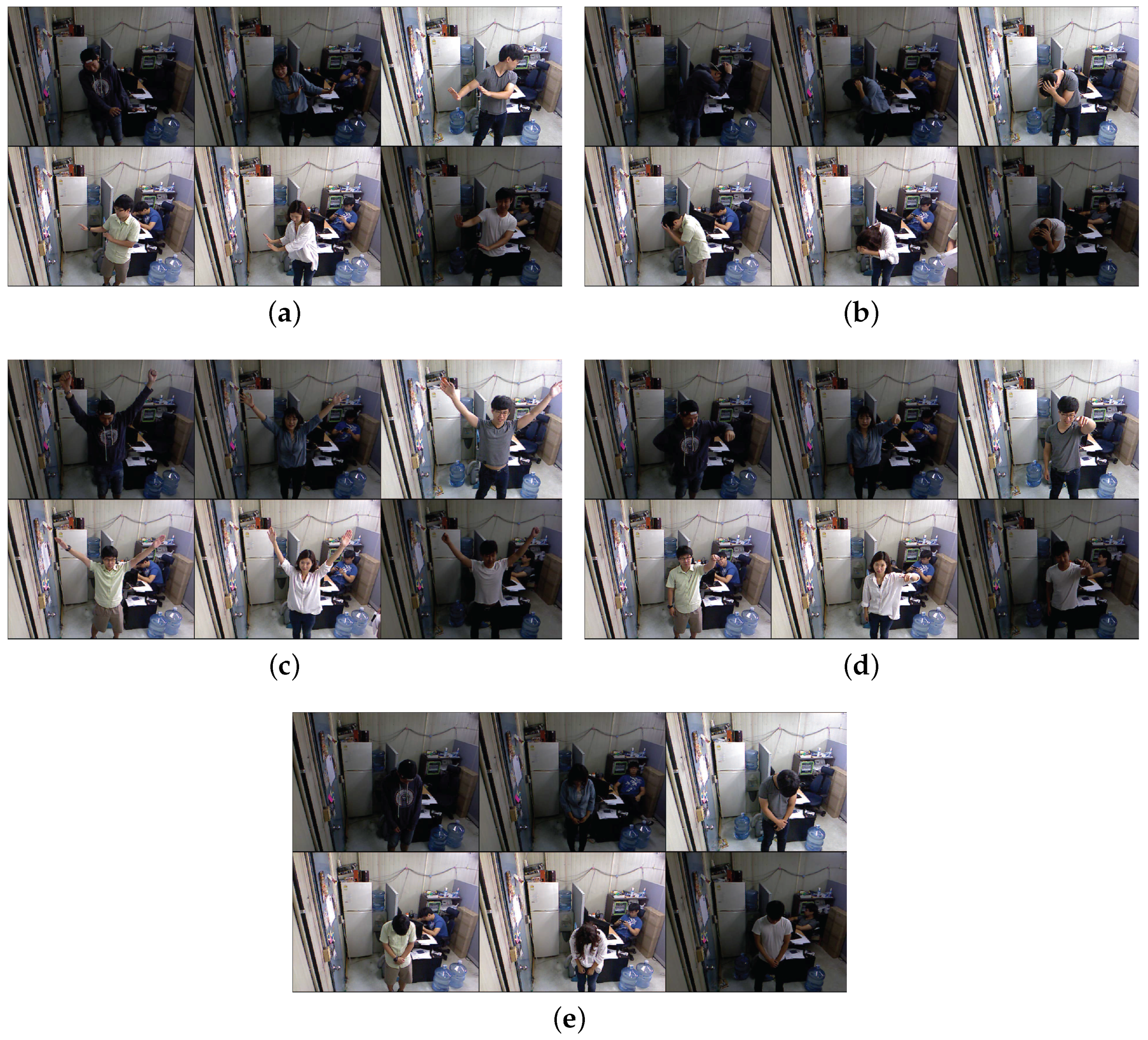

4.1. Experimental Configuration

4.1.1. Details about the Participants

4.1.2. Details on the Experimental Setup

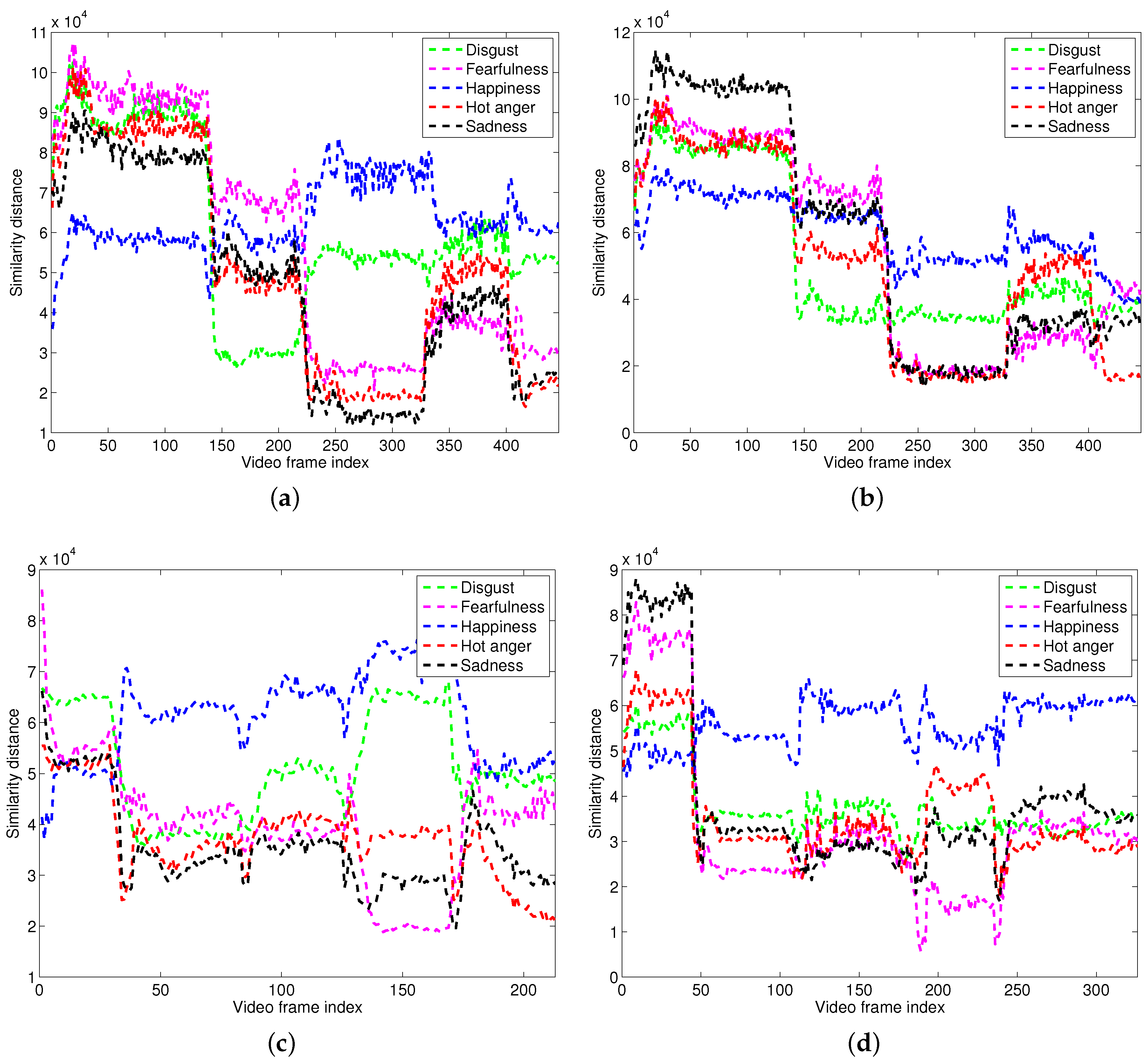

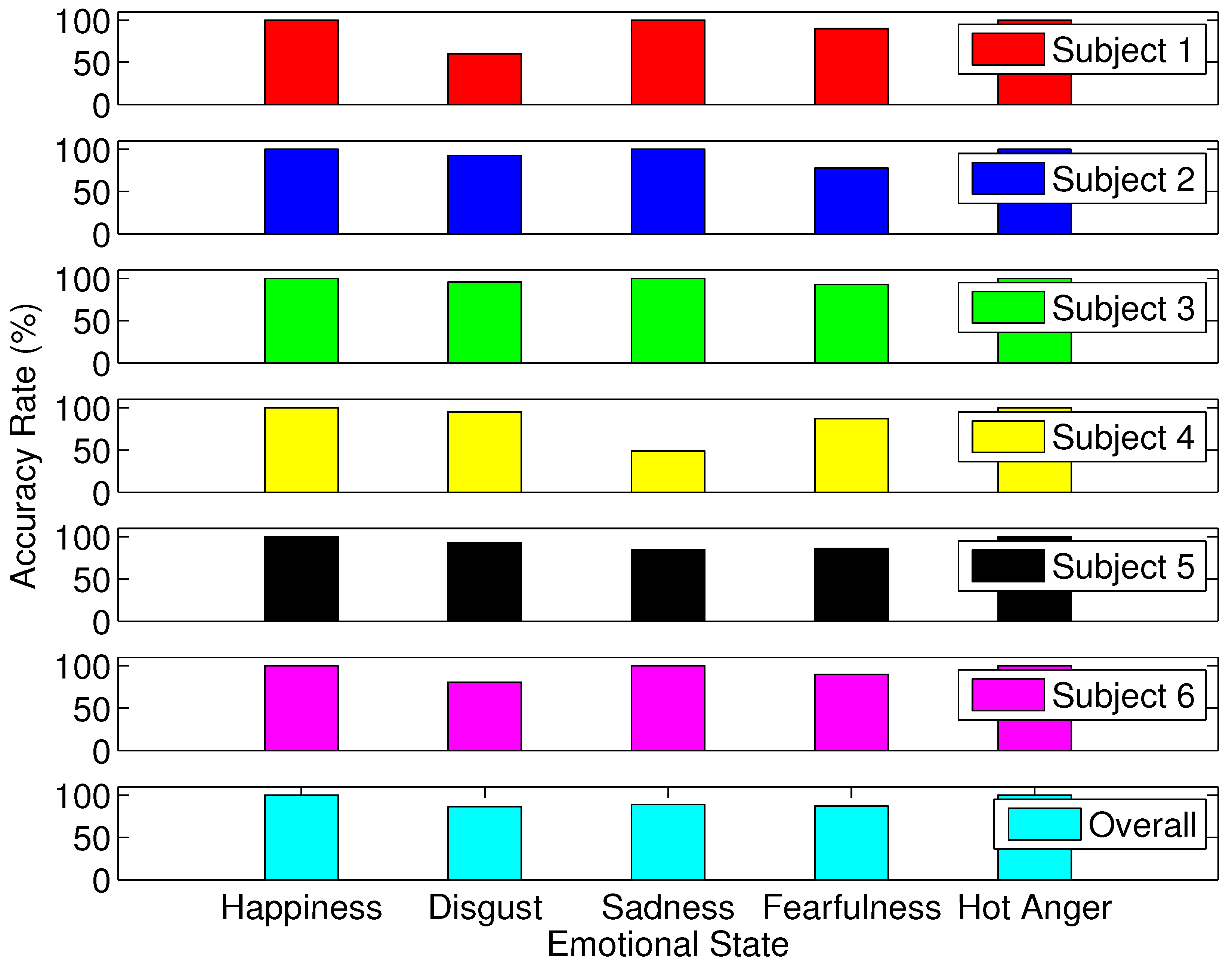

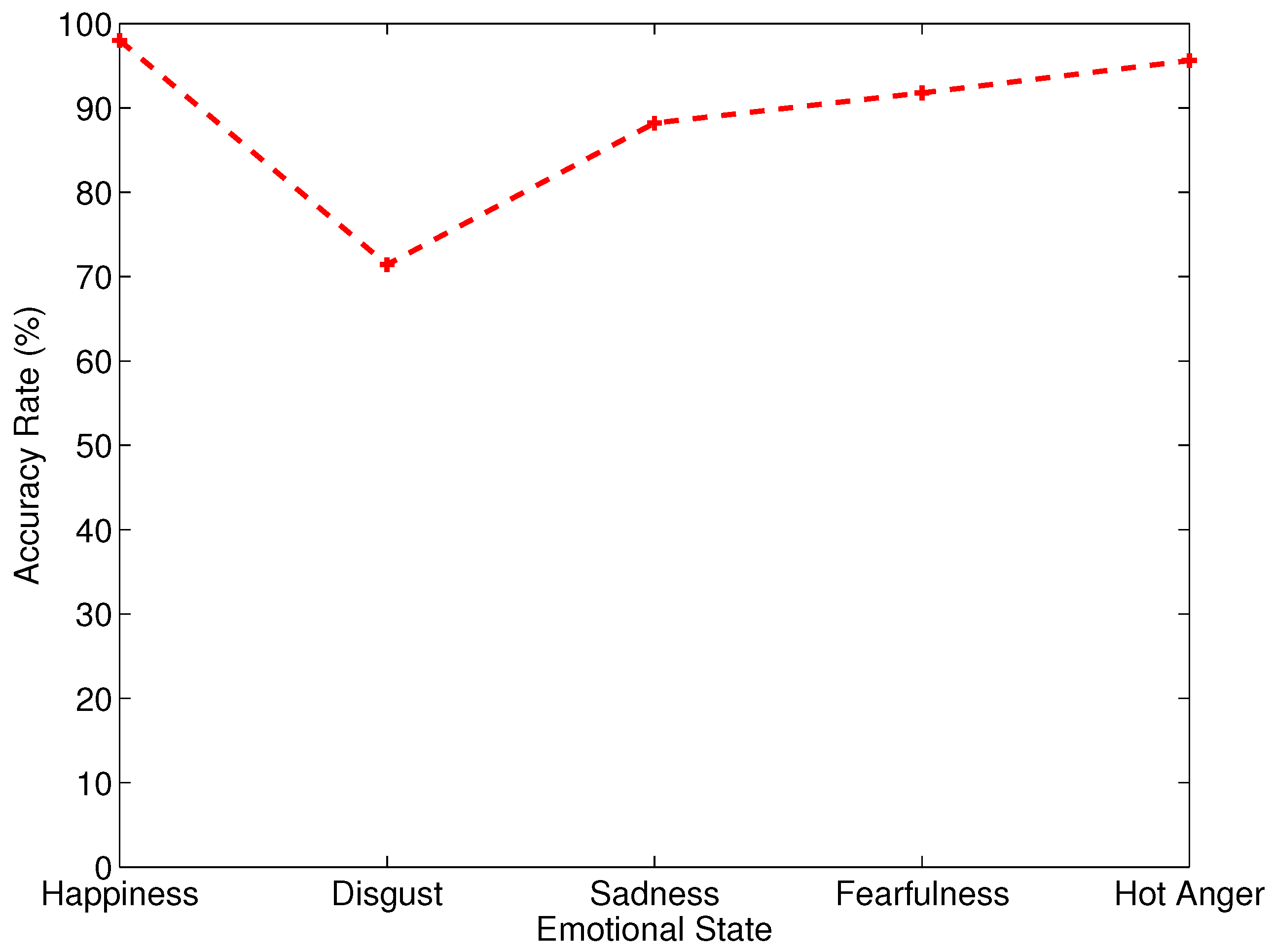

4.2. Evaluation with Respect to Accuracy

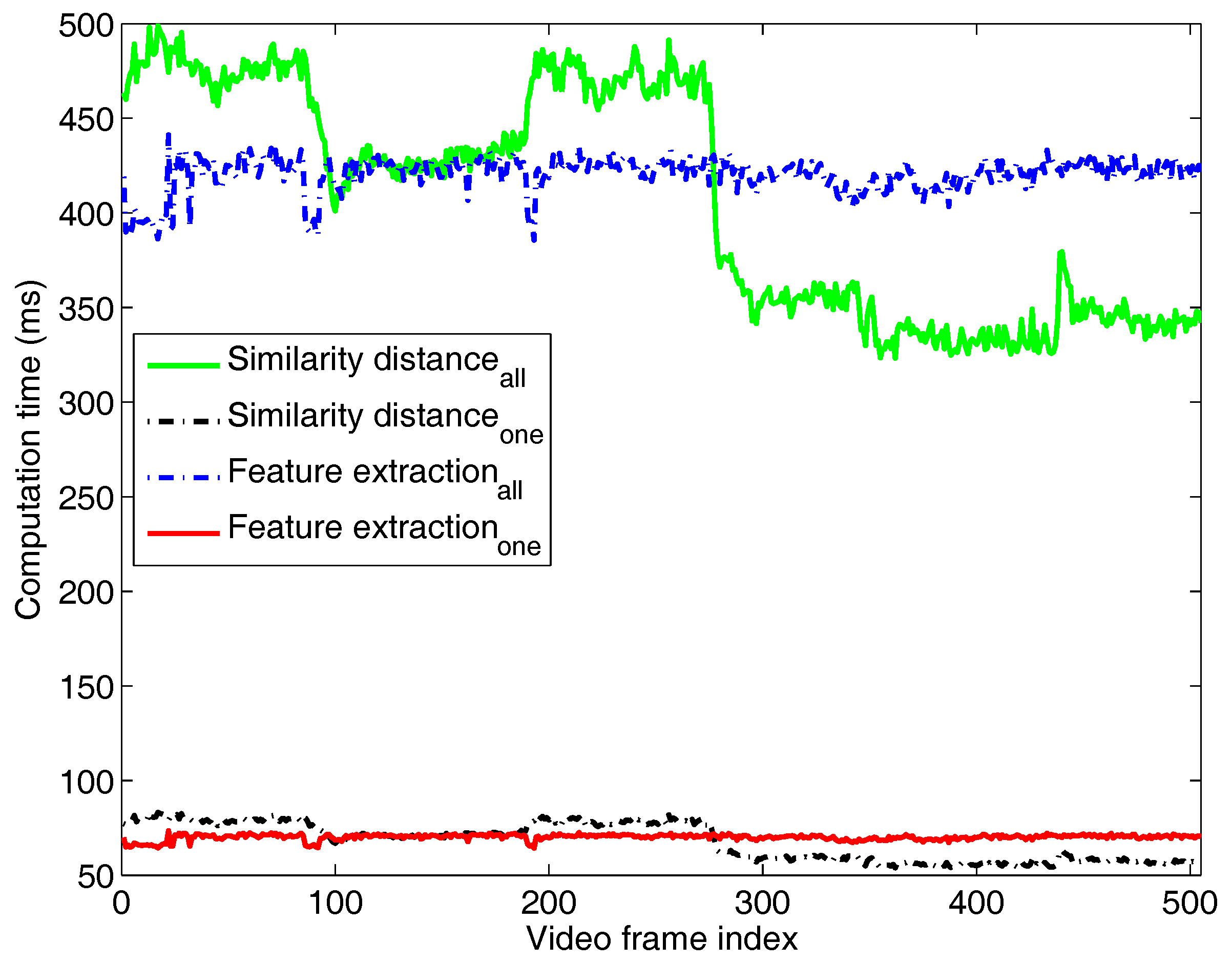

4.3. Evaluation with Respect to Computation Time

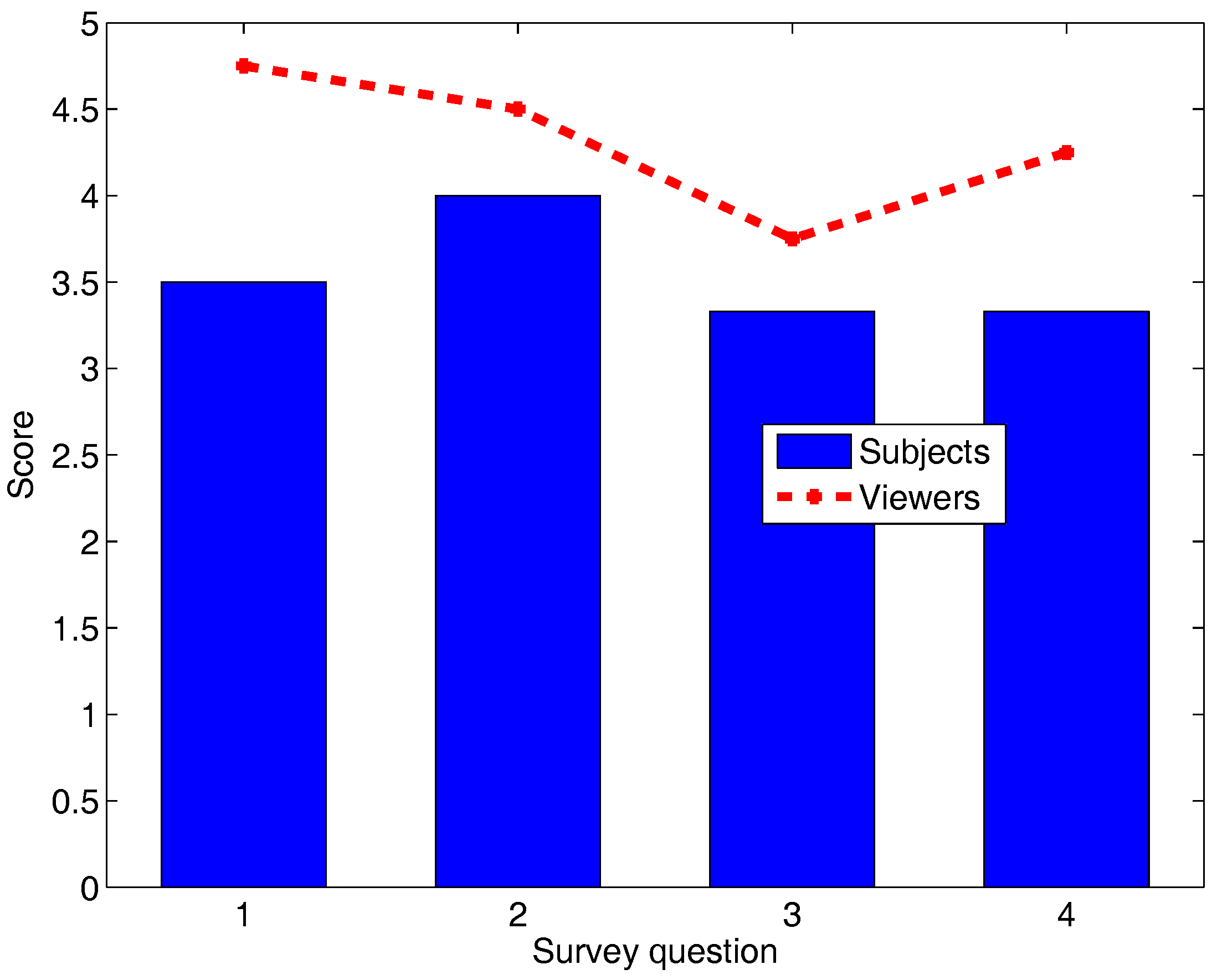

4.4. User Study

- “The body posture within the experiment is the most representative body posture to express one’s emotional state”.

- “There is a body posture that I used to express my emotional feeling”.

- “The body postures that I expressed within the experiment are body postures that I use within my daily life”.

- “The body posture that I formulated within the experiment is accurate in terms of expressing my emotional feeling”.

- “The body postures that the subjects expressed within the experiment are body postures that I use within my daily life”.

- “The body postures that the subjects formulated within the experiment are accurate in terms of expressing my emotional feeling”.

“The motions chosen in the experiment seem to be somewhat exaggerated. My actions are not so extravagant”.

4.5. Remark

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Emotional State | Body Posture |
|---|---|
| Happiness | Shoulders up |
| Interest | Lateral hand and arm movement and arm stretched out frontal |
| Boredom | Raising the chin (moving the head backward), collapsed body posture, and head bent sideways |
| Disgust | Shoulders forward, head downward and upper body collapsed, and arms crossed in front of the chest |
| Hot anger | Lifting the shoulder, opening and closing hand, arms stretched out frontal, pointing, and shoulders squared |

| Video Scene | Emotional States |
|---|---|
| All of the emotions | (1) Happiness, (2) Disgust, (3) Sadness, (4) Fearfulness, (5) Hot anger |

Recognized emotional state, by subject:

| Emotion | Subject 1 | Subject 2 | Subject 3 | Subject 4 | Subject 5 | Subject 6 | Overall |
|---|---|---|---|---|---|---|---|
| Happiness | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
| Disgust | 60.32% | 92.68% | 95.45% | 95.08% | 92.86% | 80.70% | 86.18% |
| Sadness | 100% | 100% | 100% | 48.57% | 84.62% | 100% | 88.86% |
| Fearfulness | 89.83% | 77.78% | 92.59% | 86.79% | 86% | 89.87% | 87.14% |
| Hot anger | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
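
The Overall column appears to be the unweighted mean of the six per-subject values. The source does not state how it was computed, so that reading is an assumption; the sketch below simply checks it against the reported figures. The names `accuracy`, `reported_overall`, and `unweighted_mean` are illustrative and not from the paper.

```python
# Per-subject recognition results (%) copied from the table above.
accuracy = {
    "Happiness":   [100, 100, 100, 100, 100, 100],
    "Disgust":     [60.32, 92.68, 95.45, 95.08, 92.86, 80.70],
    "Sadness":     [100, 100, 100, 48.57, 84.62, 100],
    "Fearfulness": [89.83, 77.78, 92.59, 86.79, 86, 89.87],
    "Hot anger":   [100, 100, 100, 100, 100, 100],
}

# Overall column as reported in the table.
reported_overall = {
    "Happiness": 100.0,
    "Disgust": 86.18,
    "Sadness": 88.86,
    "Fearfulness": 87.14,
    "Hot anger": 100.0,
}


def unweighted_mean(values):
    """Plain arithmetic mean; assumes every subject counts equally."""
    return sum(values) / len(values)


# Assumption: Overall = mean over subjects (not weighted by trial count).
overall = {emotion: unweighted_mean(vals) for emotion, vals in accuracy.items()}

for emotion, mean in overall.items():
    gap = abs(mean - reported_overall[emotion])
    print(f"{emotion}: computed {mean:.3f}%, reported {reported_overall[emotion]}%, gap {gap:.3f}")
```

Under this assumption every computed mean falls within 0.01 percentage points of the reported value, which is consistent with rounding or truncation to two decimals.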

| Category | Computation Time |
|---|---|
| Computing all similarity distances | 406.58 ms |
| Computing one similarity distance | 67.76 ms |
| Extracting all features | 420.50 ms |
| Extracting one feature | 70.08 ms |
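
In both pairs of rows, the "all" time is almost exactly six times the "one" time. The short check below makes that ratio explicit. The item count of six is inferred from the numbers alone and is not stated in the table, so treat `ASSUMED_ITEM_COUNT` as a hypothesis; all names in the snippet are illustrative.

```python
# Timings (ms) copied from the table above.
timings_ms = {
    "similarity distance": {"all": 406.58, "one": 67.76},
    "feature":             {"all": 420.50, "one": 70.08},
}

# Assumption: six items per pass, inferred from the all/one ratio only.
ASSUMED_ITEM_COUNT = 6

ratios = {}
per_item_ms = {}
for name, t in timings_ms.items():
    # How many "one" operations fit into the "all" time.
    ratios[name] = t["all"] / t["one"]
    # Per-item time implied by the assumed count.
    per_item_ms[name] = t["all"] / ASSUMED_ITEM_COUNT
    print(
        f"{name}: all/one ratio = {ratios[name]:.4f}, "
        f"all/{ASSUMED_ITEM_COUNT} = {per_item_ms[name]:.2f} ms "
        f"(reported {t['one']} ms)"
    )
```

If the assumption holds, the per-item rows are simply the totals divided evenly, which suggests the cost scales roughly linearly with the number of items processed.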

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, S.K.; Bae, M.; Lee, W.; Kim, H. CEPP: Perceiving the Emotional State of the User Based on Body Posture. Appl. Sci. 2017, 7, 978. https://doi.org/10.3390/app7100978
