1. Introduction

Software systems have become an essential part of our lives. These systems are important because their reliability and stability allow them to provide high-quality services to customers. However, software development is a difficult and complex process, so software companies focus on improving the reliability and stability of their systems. This has prompted research in software reliability engineering, and many software reliability growth models (SRGMs) have been proposed over the past decades. Many existing non-homogeneous Poisson process (NHPP) software reliability models have been developed through the fault intensity rate function and the mean value function within a controlled testing environment to estimate reliability metrics such as the number of residual faults, the failure rate, and the reliability of software. Generally, reliability increases quickly at first and the improvement later slows down. Software reliability models are used to estimate and predict the reliability, number of remaining faults, failure intensity, total software development cost, and so forth. Various software reliability models and application studies have been developed to date.

Confidence intervals of software reliability are also estimated in this field because they can improve software release decisions and help control the expenditures for software testing [1]. Yamada and Osaki [2] first considered maximum likelihood estimation of the confidence interval of the mean value function. Yin and Trivedi [3] presented confidence bounds for the model parameters via the Bayesian approach. Huang [4] also presented a graph to illustrate the confidence interval of the mean value function. Gonzalez et al. [5] presented a general methodology for the analysis of repairable systems using the non-homogeneous Poisson process that includes several system conditions at the same time; they applied it to a power distribution test system, considering the effect of weather conditions and component aging on the system reliability indexes. Nagaraju and Fiondella [6] presented an adaptive expectation-maximization algorithm for non-homogeneous Poisson process software reliability growth models and illustrated the steps of this adaptive approach through a detailed example, which demonstrates improved flexibility over the standard expectation-maximization (EM) algorithm. Srivastava and Mondal [7] proposed a predictive maintenance model for an N-component repairable system by integrating NHPP models and the system availability concept, such that the use of costly predictive maintenance technology is minimized. Kim et al. [8] described the application of a software reliability model to a target system to increase its software reliability, and presented some analytical methods as well as prediction and estimation results.

Chatterjee and Singh [9] proposed an NHPP-based software reliability model that incorporates a logistic-exponential testing coverage function with imperfect debugging. In addition, Chatterjee and Shukla [10] developed a software reliability model that considers different types of faults and incorporates both imperfect debugging and a change point. Yamada et al. [11] developed a software reliability growth model incorporating the amount of test effort expended during the software testing phase. Joh et al. [12] proposed a new vulnerability discovery model based on the Weibull distribution. Sagar et al. [13] presented a software reliability growth model that combines features of the Weibull distribution and the inflection S-shaped SRGM to estimate the defects of a software system and to help researchers and the software industry develop highly reliable software products.

Generally, existing models are applied to software testing data and then used to make predictions of software failures and reliability in the field. The important point is that the test environment and the operational environment differ from each other. Once software systems are introduced, the systems used in the field are the same as or close to those used in the development-testing environment; however, they may be used in many different locations. Several researchers have therefore started to account for the operational environment. Yang and Xie, Huang et al., and Zhang et al. [14,15,16] proposed methods of predicting the fault detection rate to reflect changes in operating environments, modifying the software reliability model for the operating environment by introducing a calibration factor. Teng and Pham [17] discussed a generalized model that captures the uncertainty of the environment and its effects upon the software failure rate. Pham [18,19] and Chang et al. [20] developed software reliability models incorporating the uncertainty of the system fault detection rate per unit of time subject to the operating environment. Honda et al. [21] proposed a generalized software reliability model (GSRM) based on a stochastic process and simulated developments that include uncertainties and dynamics. Pham [22] recently presented a new generalized software reliability model subject to the uncertainty of operating environments. In addition, Song et al. [23] presented a new model that considers a three-parameter fault detection rate in the software development process and relates it to the error detection rate function with consideration of the uncertainty of operating environments.

In this paper, we discuss a new model that considers the Weibull function in the software development process and relates it to the error detection rate function with consideration of the uncertainty of operating environments. We examine the goodness of fit of the proposed fault detection rate software reliability model and other existing NHPP models based on several sets of software testing data. The explicit solution of the mean value function for the new model is derived in Section 2. Criteria for model comparisons and confidence intervals for selection of the best model are discussed in Section 3. Model analysis and results are discussed in Section 4. Section 5 presents the conclusions and remarks.

3. Model Comparisons

In this section, we present a set of comparison criteria for best model selection, quantitatively compare the models using these comparison criteria, and obtain the confidence intervals of the NHPP software reliability model.

3.1. Criteria for Model Comparisons

Once the analytical expression for the mean value function $m(t)$ is derived, the model parameters in the mean value function can be estimated with the help of a Matlab program based on the least-squares estimate (LSE) method. Five common criteria [27,28], namely the mean squared error (MSE), the sum absolute error (SAE), the predictive ratio risk (PRR), the predictive power (PP), and Akaike’s information criterion (AIC), are used to assess goodness of fit and to compare the proposed model with the other existing models listed in Table 1. Table 1 summarizes the proposed model and several well-known existing NHPP models with different mean value functions. Note that models 9 and 10 in Table 1 consider environmental uncertainty.
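As a rough illustration of LSE parameter estimation, the sketch below fits a mean value function to cumulative failure counts by minimizing the sum of squared errors. It is not the authors' Matlab program. It assumes the well-known Goel-Okumoto form $m(t) = a(1 - e^{-bt})$ purely as a stand-in model, uses synthetic noise-free data rather than the paper's datasets, and the function names `goel_okumoto` and `fit_lse` are hypothetical. Because $a$ enters linearly, it is solved in closed form for each candidate $b$, and $b$ is found by a grid search followed by golden-section refinement.

```python
import math


def goel_okumoto(t, a, b):
    """Mean value function m(t) = a * (1 - exp(-b * t)) (stand-in model)."""
    return a * (1.0 - math.exp(-b * t))


def fit_lse(times, failures, b_lo=1e-4, b_hi=5.0, grid=200, iters=60):
    """Least-squares fit of (a, b); the search range for b is an assumption."""

    def best_a_and_sse(b):
        # For fixed b the model is linear in a, so the optimal a is closed-form.
        g = [1.0 - math.exp(-b * t) for t in times]
        a = sum(y * gi for y, gi in zip(failures, g)) / sum(gi * gi for gi in g)
        sse = sum((a * gi - y) ** 2 for y, gi in zip(failures, g))
        return a, sse

    # Coarse grid over b to locate the neighbourhood of the minimum.
    step = (b_hi - b_lo) / grid
    candidates = [b_lo + k * step for k in range(grid + 1)]
    k_best = min(range(grid + 1), key=lambda k: best_a_and_sse(candidates[k])[1])
    lo = candidates[max(k_best - 1, 0)]
    hi = candidates[min(k_best + 1, grid)]

    # Golden-section refinement inside the bracketing grid cells.
    phi = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(iters):
        x1 = hi - phi * (hi - lo)
        x2 = lo + phi * (hi - lo)
        if best_a_and_sse(x1)[1] < best_a_and_sse(x2)[1]:
            hi = x2
        else:
            lo = x1
    b = (lo + hi) / 2.0
    a, sse = best_a_and_sse(b)
    return a, b, sse


# Synthetic, noise-free cumulative counts (NOT data from the paper).
times = list(range(1, 14))
failures = [goel_okumoto(t, 120.0, 0.25) for t in times]
a_hat, b_hat, sse = fit_lse(times, failures)
print(f"a = {a_hat:.3f}, b = {b_hat:.4f}, SSE = {sse:.3e}")
```

Models with more than one nonlinear parameter would typically need a general-purpose nonlinear least-squares routine instead of this one-dimensional search.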

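The mean squared error is given by

$$\mathrm{MSE} = \frac{\sum_{i=1}^{n}\left(\hat{m}(t_i) - y_i\right)^2}{n - m}$$

The sum absolute error is given by

$$\mathrm{SAE} = \sum_{i=1}^{n}\left|\hat{m}(t_i) - y_i\right|$$

The predictive ratio risk and the predictive power are given as follows:

$$\mathrm{PRR} = \sum_{i=1}^{n}\left(\frac{\hat{m}(t_i) - y_i}{\hat{m}(t_i)}\right)^2, \qquad \mathrm{PP} = \sum_{i=1}^{n}\left(\frac{\hat{m}(t_i) - y_i}{y_i}\right)^2$$

To compare the ability of all the models to maximize the likelihood function $L$ while considering the degrees of freedom, Akaike’s information criterion (AIC) is applied:

$$\mathrm{AIC} = -2\log L + 2m$$

where $y_i$ is the total number of failures observed at time $t_i$; $m$ is the number of unknown parameters in the model; and $\hat{m}(t_i)$ is the estimated cumulative number of failures at $t_i$ for $i = 1, 2, \ldots, n$.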

The mean squared error measures the distance of a model estimate from the actual data, taking into account the number of observations, n, and the number of unknown parameters in the model, m. The sum absolute error is similar to the sum squared error, but it measures the deviation using absolute values: it sums the absolute value of the deviation between the actual data and the estimated curve. The predictive ratio risk measures the distance of the model estimates from the actual data relative to the model estimate. The predictive power measures the distance of the model estimates from the actual data relative to the actual data. MSE, SAE, PRR, and PP are criteria that measure the difference between the actual and predicted values. AIC is a measure of the goodness of fit of an estimated statistical model; it can be used to rank models, and it penalizes models with more parameters. For all five criteria (MSE, SAE, PRR, PP, and AIC), the smaller the value, the better the model fits relative to other models run on the same data set.
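The verbal definitions above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the sample numbers are made up, and the AIC helper assumes the usual grouped-data NHPP log-likelihood (independent Poisson increments between observation times), which the text does not spell out.

```python
import math


def mse(y, m_hat, n_params):
    """Squared deviation normalised by (observations - parameters)."""
    return sum((mh - yi) ** 2 for yi, mh in zip(y, m_hat)) / (len(y) - n_params)


def sae(y, m_hat):
    """Sum of absolute deviations between estimate and data."""
    return sum(abs(mh - yi) for yi, mh in zip(y, m_hat))


def prr(y, m_hat):
    """Deviation measured relative to the model estimate."""
    return sum(((mh - yi) / mh) ** 2 for yi, mh in zip(y, m_hat))


def pp(y, m_hat):
    """Deviation measured relative to the actual data."""
    return sum(((mh - yi) / yi) ** 2 for yi, mh in zip(y, m_hat))


def aic(y, m_hat, n_params):
    """AIC = -2 log L + 2 * (number of parameters).

    Assumption: L is the grouped-data NHPP likelihood, so m_hat must be
    strictly increasing and y must hold integer cumulative counts.
    """
    log_l, prev_y, prev_m = 0.0, 0, 0.0
    for yi, mh in zip(y, m_hat):
        dy, dm = yi - prev_y, mh - prev_m
        log_l += dy * math.log(dm) - dm - math.lgamma(dy + 1)
        prev_y, prev_m = yi, mh
    return -2.0 * log_l + 2.0 * n_params


# Made-up cumulative failures and fitted values for a 2-parameter model.
y = [5, 9, 12, 14]
m_hat = [4.0, 8.0, 12.0, 16.0]
print(mse(y, m_hat, 2))   # 3.0
print(sae(y, m_hat))      # 4.0
print(prr(y, m_hat))      # 0.09375
print(pp(y, m_hat))
print(aic(y, m_hat, 2))
```

Note that PRR divides by the estimate and PP by the observation, so PRR penalises a model more heavily where its estimate is small, and PP where the observed count is small.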

3.2. Estimation of the Confidence Intervals

In this section, we use Equation (7) to obtain the confidence intervals [27] of the software reliability models in Table 1. The confidence interval is given by

$$\hat{m}(t) \pm z_{\alpha/2}\sqrt{\hat{m}(t)} \tag{7}$$

where $z_{\alpha}$ is the $100(1-\alpha)$ percentile of the standard normal distribution.
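A minimal sketch of such an interval is given below. It assumes the interval takes the common normal-approximation form $\hat{m}(t) \pm z_{\alpha/2}\sqrt{\hat{m}(t)}$, which follows from the Poisson property that the variance of the failure count equals its mean. The function name `nhpp_confidence_interval` and the input value are hypothetical.

```python
import math
from statistics import NormalDist


def nhpp_confidence_interval(m_hat, alpha=0.05):
    """Return (lower, upper) bounds m_hat -/+ z_{alpha/2} * sqrt(m_hat).

    Assumes the normal approximation to a Poisson count with mean m_hat.
    """
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # upper alpha/2 quantile
    half_width = z * math.sqrt(m_hat)
    return m_hat - half_width, m_hat + half_width


# Hypothetical estimate of 100 cumulative failures, 95% interval.
lower, upper = nhpp_confidence_interval(100.0, alpha=0.05)
print(f"{lower:.2f} .. {upper:.2f}")  # 80.40 .. 119.60
```

For small values of $\hat{m}(t)$ the lower bound can fall below zero, so the approximation is most useful once a reasonable number of failures has accumulated.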

4. Numerical Examples

Wireless base stations provide the interface between mobile phone users and the conventional telephone network. It can take hundreds of wireless base stations to provide adequate coverage for users within a moderately sized metropolitan area. Controlling the cost of an individual base station is therefore an important objective. On the other hand, the availability of a base station is also an important consideration, since wireless users expect the system availability to be comparable to the high availability they experience with the conventional telephone network. The software in this numerical example runs on an element within a wireless network switching center. Its main functions include routing voice channels and signaling messages to relevant radio resources and processing entities [35]. Dataset #1, field failure data for Release 1 listed in Table 2, was reported by Jeske and Zhang [35]. Release 1 included Year 2000 compatibility modifications, an operating system upgrade, and some new features pertaining to signaling message processing. Release 1 had a life cycle of 13 months in the field. The cumulative field exposure time of the software was 167,900 system days, and a total of 115 failures were observed in the field.

Table 2 shows the field failure data for Release 1 for each of the 13 months. Software failure data is available from the field for Release 1. Dataset #2, test data for Release 2 listed in Table 3, was also reported by Jeske and Zhang [35]. The test data is the set of failures that were observed during a combination of feature testing and load testing. The test interval that was used in this analysis was a 36-week period between. At times, as many as 11 different base station controller frames (BCFs) were being used in parallel to test the software. Thus, to obtain an overall number of days spent testing the software, we aggregated the number of days spent testing the software on each frame. The 36 weeks of Release 2 testing accumulated 1001 days of exposure time. Dataset #2 also shows the cumulative software failures and the cumulative exposure time for the software on a weekly basis during the test interval.
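The aggregation of exposure time across frames tested in parallel can be sketched as follows. The per-frame numbers are hypothetical, since the source reports only the aggregated totals, and the function name `cumulative_exposure` is an assumption for illustration.

```python
from itertools import accumulate


def cumulative_exposure(days_per_frame_by_week):
    """Sum testing days over all frames in each week, then accumulate."""
    weekly_totals = [sum(frame_days) for frame_days in days_per_frame_by_week]
    return list(accumulate(weekly_totals))


# Hypothetical testing days per frame for three weeks (not the paper's data).
# The number of frames in use may differ from week to week.
weeks = [
    [5, 5, 3],      # week 1: three frames in parallel
    [7, 7, 7, 2],   # week 2: four frames
    [7, 6],         # week 3: two frames
]
exposure = cumulative_exposure(weeks)
print(exposure)  # [13, 36, 49]
```

Using cumulative exposure days rather than calendar weeks as the time axis keeps weeks with many parallel frames from being treated the same as weeks with few.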

Table 4 and Table 5 summarize the estimated parameters of all 11 models in Table 1, obtained using the least-squares estimation (LSE) technique, together with the values of the five common criteria (MSE, SAE, PRR, PP, and AIC).

We computed the five criteria over the 13 monthly observations of Dataset #1 (Table 2) and over the 36 weekly observations of Dataset #2 (Table 3), using exposure time (cumulative system days) for the latter. As can be seen from Table 4, the MSE, SAE, PRR, PP, and AIC values for the proposed new model are the lowest among all models. The MSE, SAE, and AIC values of the proposed new model are 11.2281, 26.5568, and 79.3459, respectively, which are significantly smaller than those of the other software reliability models; its PRR and PP values are 0.2042 and 0.1558, respectively. As can be seen from Table 5, the MSE, SAE, and PRR values for the proposed new model are the lowest, and its PP and AIC values are the second lowest among all models. The MSE and SAE values of the proposed new model are 9.8789 and 90.3633, respectively, which are significantly smaller than those of the other software reliability models; its PRR, PP, and AIC values are 0.2944, 0.5159, and 187.4204, respectively. These results show that the difference between the actual and predicted values is smaller for the new model than for the other models and that, in Table 4, its AIC value, a measure of the goodness of fit of an estimated statistical model, is much smaller than those of the other software reliability models.

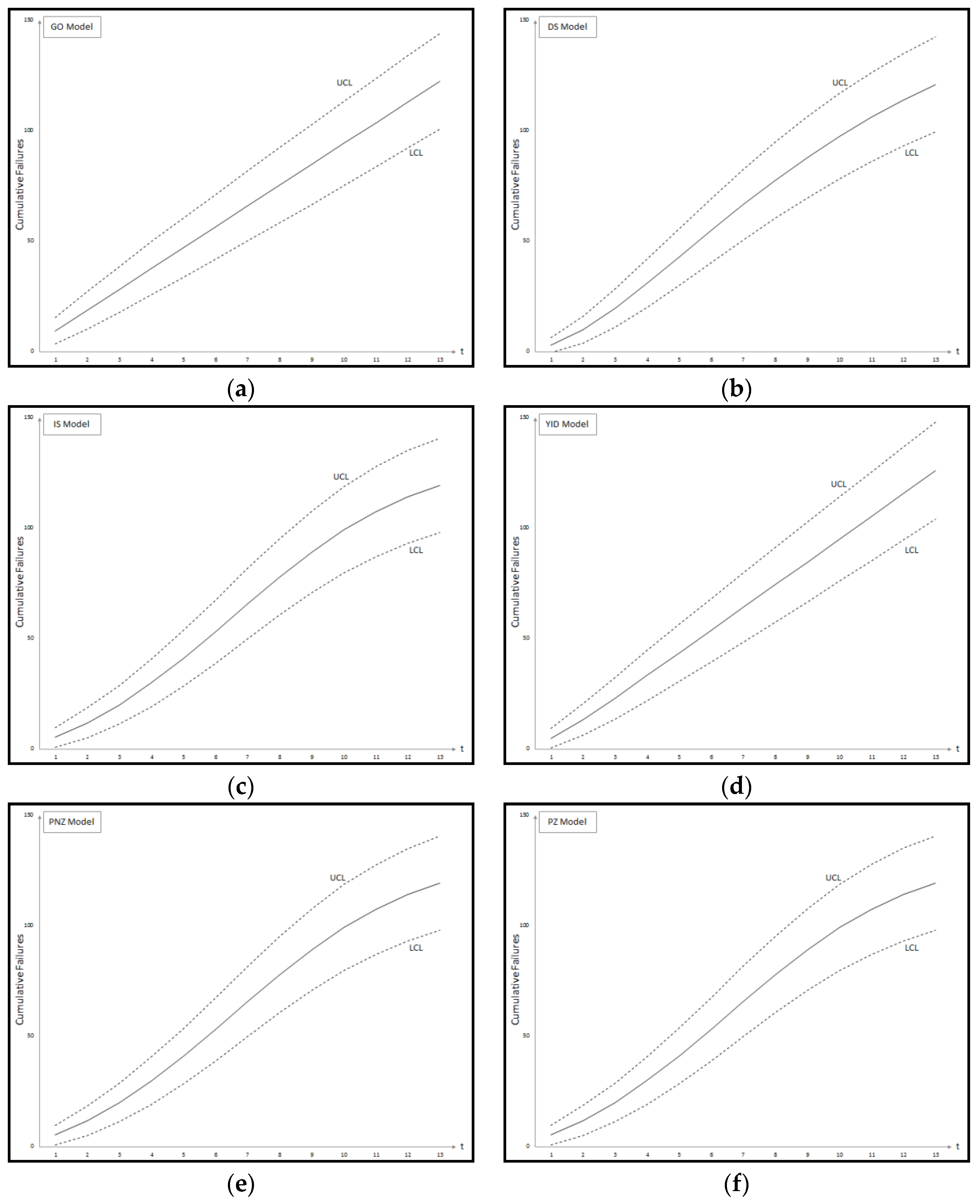

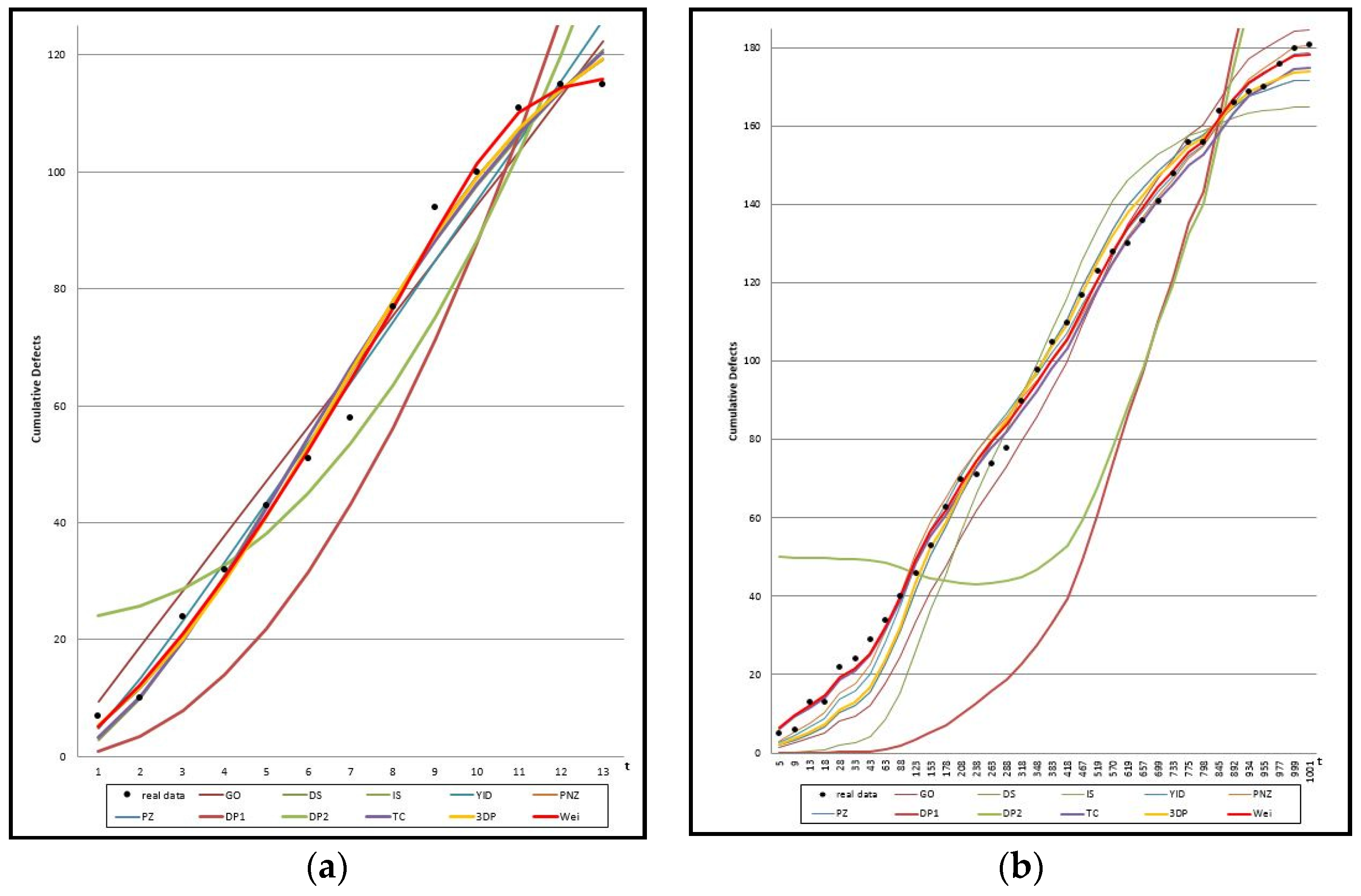

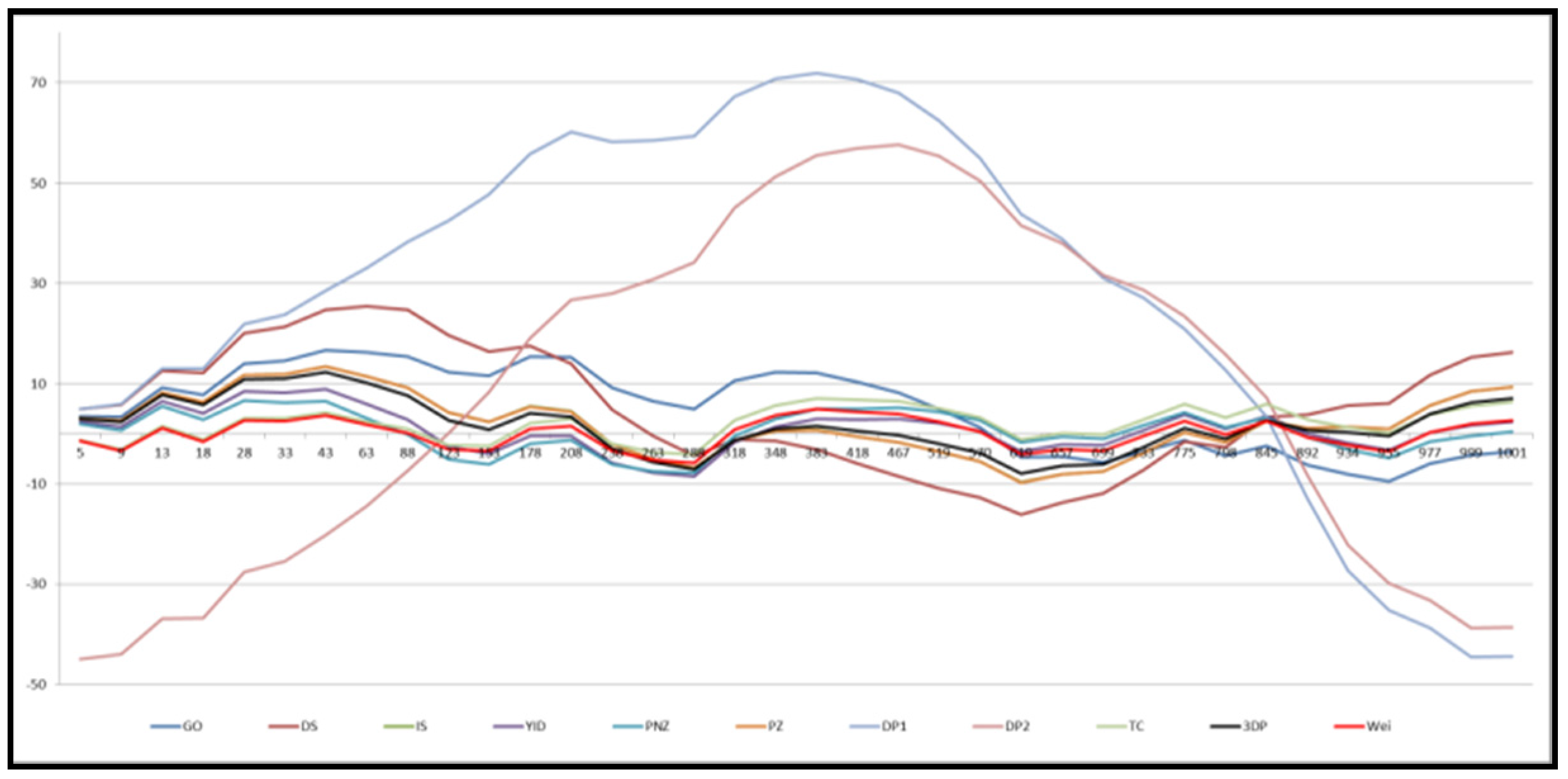

Figure 1 shows the graph of the mean value functions for all 11 models for Datasets #1 and #2, respectively.

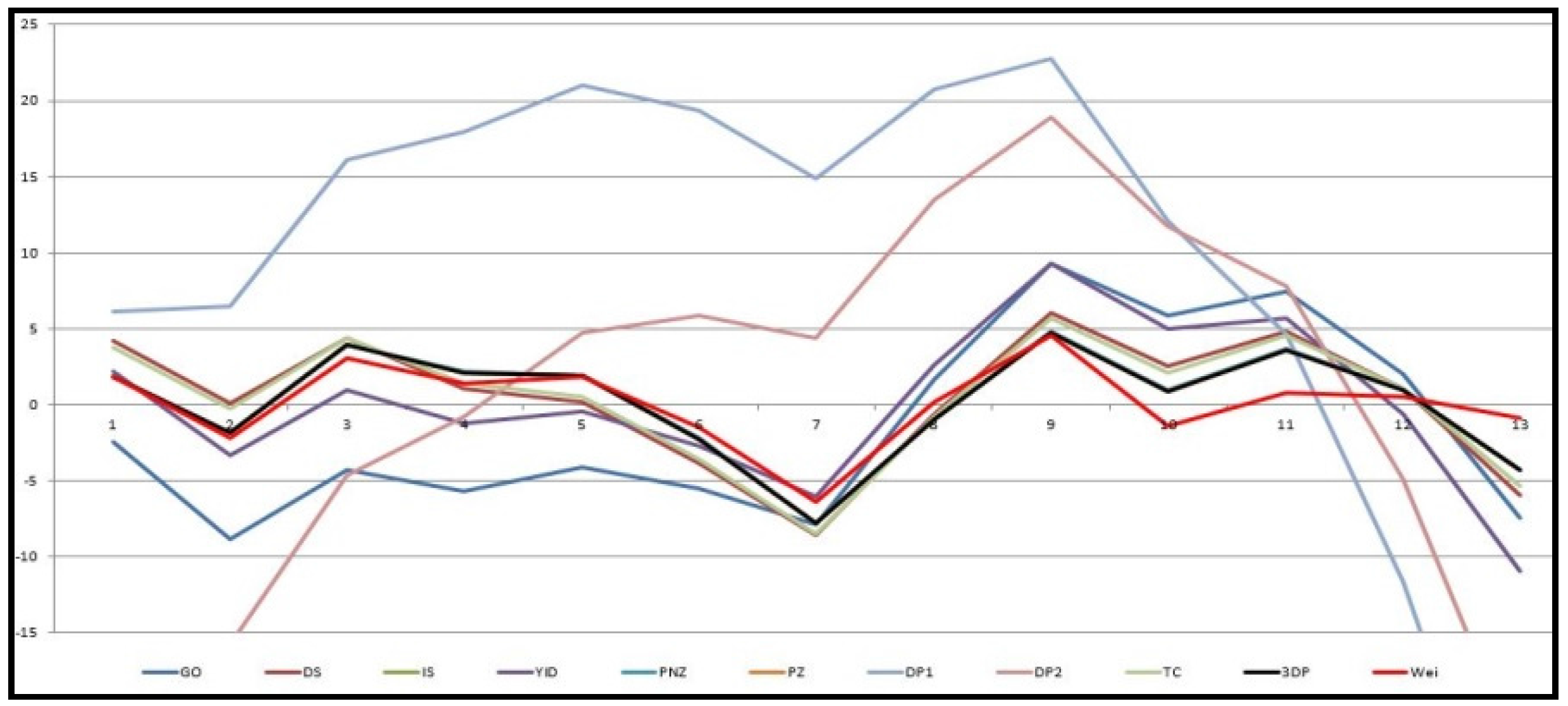

Figure 2 and Figure 3 show that the relative error of the proposed software reliability model approaches zero more quickly than that of the other models, confirming its ability to provide more accurate predictions.

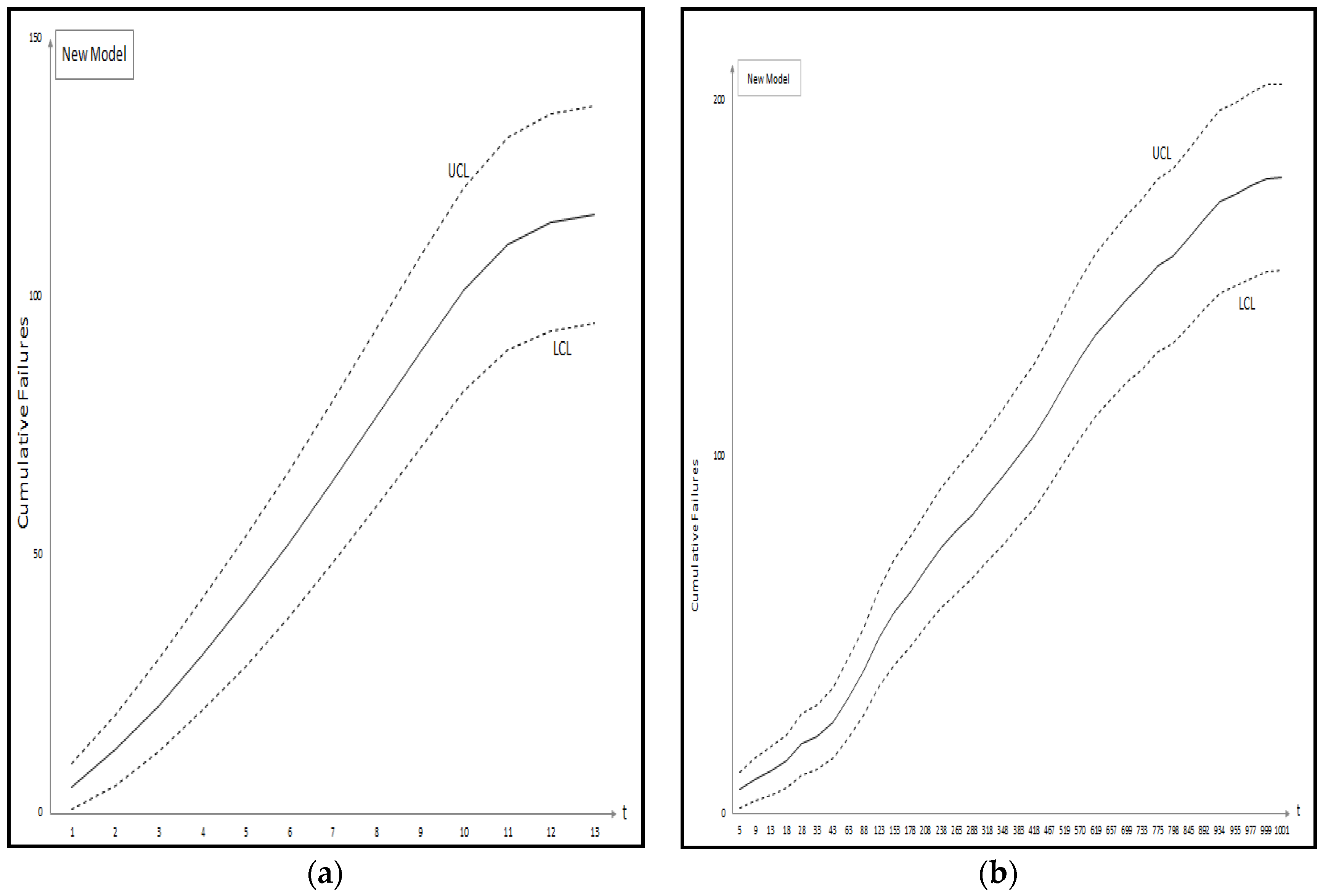

Figure 4 and

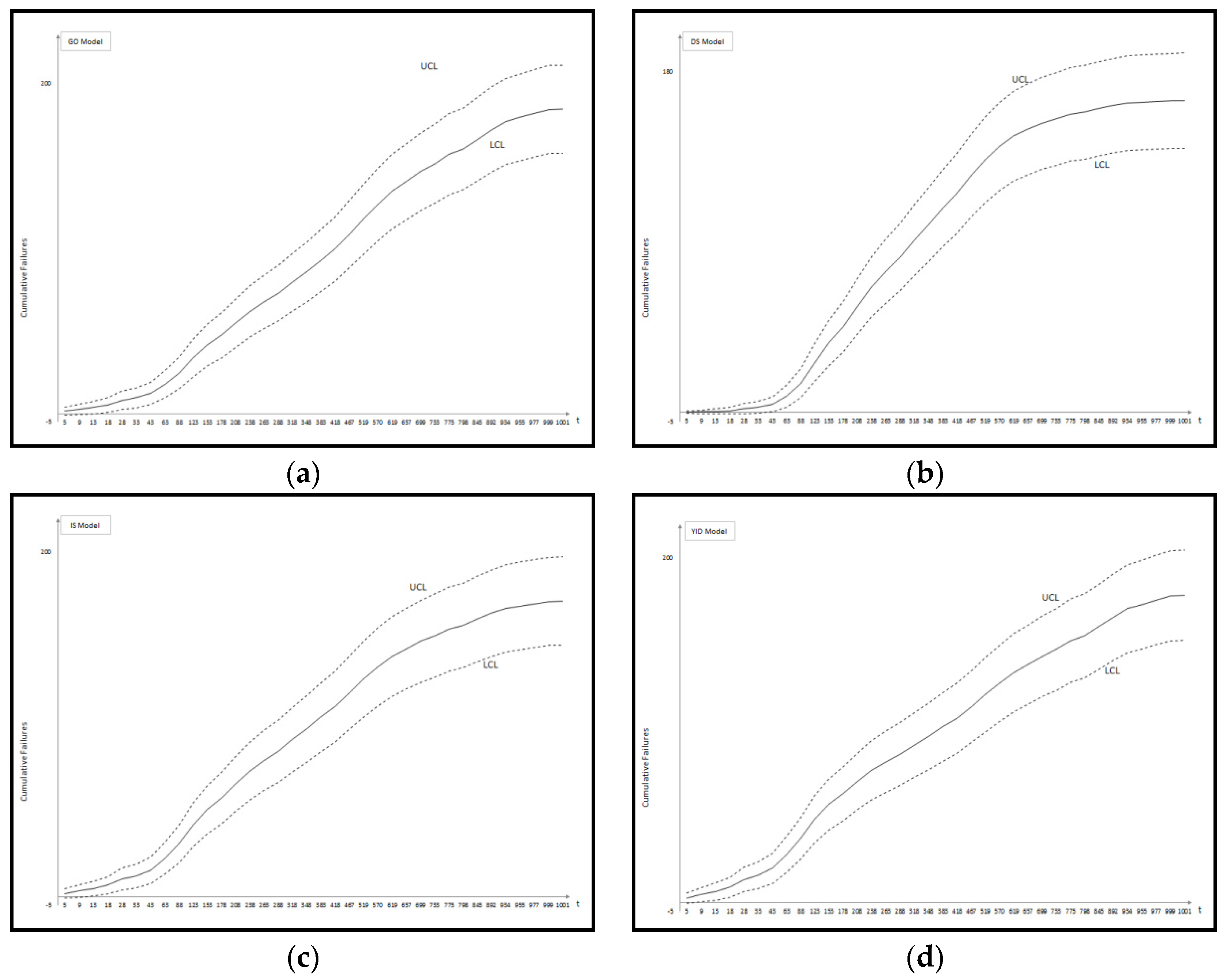

Figure 5 show the graph of the mean value function and confidence interval each of the proposed new model for Datasets #1 and #2, respectively. Refer to the

Appendix A for confidence intervals for the other software reliability models.

5. Conclusions

Generally, existing models are applied to software testing data and then used to make predictions of software failures and reliability in the field. The important point is that the test environment and the operational environment differ from each other, and we do not know in which operating environment the software will be used. Therefore, a software reliability model needs to account for the uncertainty of the operating environment. In this paper, we discussed a new software reliability model, subject to the uncertainty of operating environments, that is based on a Weibull fault detection rate function; the Weibull distribution is among the most commonly used distributions for modeling irregular data.

Table 4 and Table 5 summarized the estimated parameters of all 11 models in Table 1, obtained using the LSE technique, and the values of the five common criteria (MSE, SAE, PRR, PP, and AIC) for two data sets. As can be seen from Table 4, the MSE, SAE, PRR, PP, and AIC values for the proposed new model are the lowest among all models. As can be seen from Table 5, the MSE, SAE, and PRR values for the proposed new model are the lowest among all models. The results show that the difference between the actual and predicted values is smaller for the new model than for the other models and that, in Table 4, the AIC value, which is a measure of the goodness of fit of an estimated statistical model, is much smaller than those of the other models. Finally, we showed the confidence intervals of all 11 models for Datasets #1 and #2. Estimating the confidence interval helps in finding the optimal software reliability model at different confidence levels. Future work will involve broader validation of this conclusion based on recent data sets.