The Deep Belief and Self-Organizing Neural Network as a Semi-Supervised Classification Method for Hyperspectral Data

Abstract

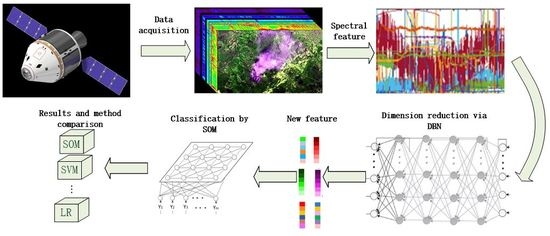

1. Introduction

2. Algorithm Description

2.1. Algorithm Procedure

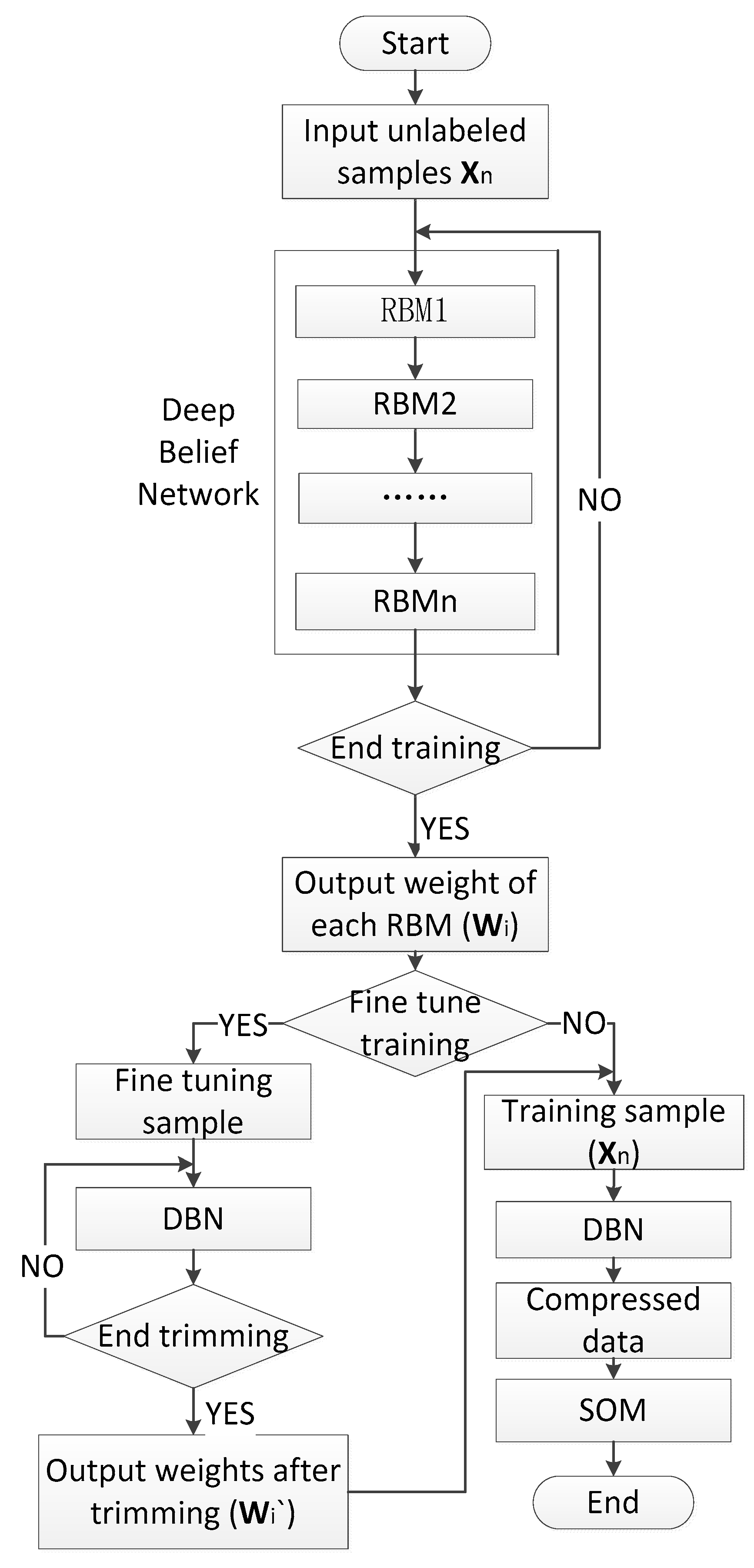

2.2. Network Training

2.2.1. Deep Belief Network

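| Algorithm 1 |
|---|
| 1. Initialize the parameters of each layer |
| 2. Input the training data |
| 3. For each layer |
| 4. Train the layer as an RBM |
| 5. |
| 6. End for |
| 7. If fine-tuning is performed |
| 8. Input the fine-tuning samples and update the network using the gradient descent method |
| 9. End if |
| 10. Output the trained parameters of each layer |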
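Algorithm 1 follows the usual greedy layer-wise scheme for deep belief networks: each layer is trained as a restricted Boltzmann machine (RBM) on the outputs of the layer below, and the stack can then be fine-tuned with gradient descent. The sketch below illustrates only the unsupervised pretraining loop, assuming binary RBMs trained with one step of contrastive divergence (CD-1). The update rule, layer sizes, learning rate, epoch count, toy data, and the names `RBM` and `pretrain_dbn` are illustrative assumptions rather than the paper's settings, and the supervised fine-tuning of steps 7 to 9 is omitted.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


class RBM:
    """Hypothetical binary RBM; CD-1 training is an assumption, not the paper's rule."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        # Small random weights, zero biases.
        self.W = [[rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        """p(h_j = 1 | v) for every hidden unit j."""
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def visible_probs(self, h):
        """p(v_i = 1 | h) for every visible unit i."""
        return [sigmoid(self.b[i] + sum(h[j] * self.W[i][j] for j in range(len(h))))
                for i in range(len(self.b))]

    def cd1_update(self, v0, lr):
        """One contrastive-divergence step (CD-1) on a single sample."""
        ph0 = self.hidden_probs(v0)
        h0 = [1.0 if self.rng.random() < p else 0.0 for p in ph0]  # sample hiddens
        v1 = self.visible_probs(h0)  # mean-field reconstruction
        ph1 = self.hidden_probs(v1)
        for i in range(len(v0)):
            self.b[i] += lr * (v0[i] - v1[i])
            for j in range(len(ph0)):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        for j in range(len(ph0)):
            self.c[j] += lr * (ph0[j] - ph1[j])


def pretrain_dbn(data, layer_sizes, epochs=30, lr=0.1, seed=0):
    """Greedy layer-wise pretraining: each RBM learns from the layer below."""
    rng = random.Random(seed)
    rbms, inputs, n_in = [], data, len(data[0])
    for n_hidden in layer_sizes:
        rbm = RBM(n_in, n_hidden, rng)
        for _ in range(epochs):
            for v in inputs:
                rbm.cd1_update(v, lr)
        # Hidden probabilities become the next layer's input (dimension reduction).
        inputs = [rbm.hidden_probs(v) for v in inputs]
        rbms.append(rbm)
        n_in = n_hidden
    return rbms, inputs


# Hypothetical toy input: 4 pixels, 6 binarized "bands" (illustration only).
data = [[1, 1, 1, 1, 0, 0], [1, 1, 1, 0, 0, 0],
        [1, 1, 1, 1, 0, 0], [1, 1, 0, 1, 0, 0]]
rbms, features = pretrain_dbn(data, layer_sizes=[4, 2])
```

Here `features` holds a 2-dimensional representation of each 6-band input, which corresponds to the dimension-reduction role of the DBN; a classifier is then applied to these features rather than to the raw spectra.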

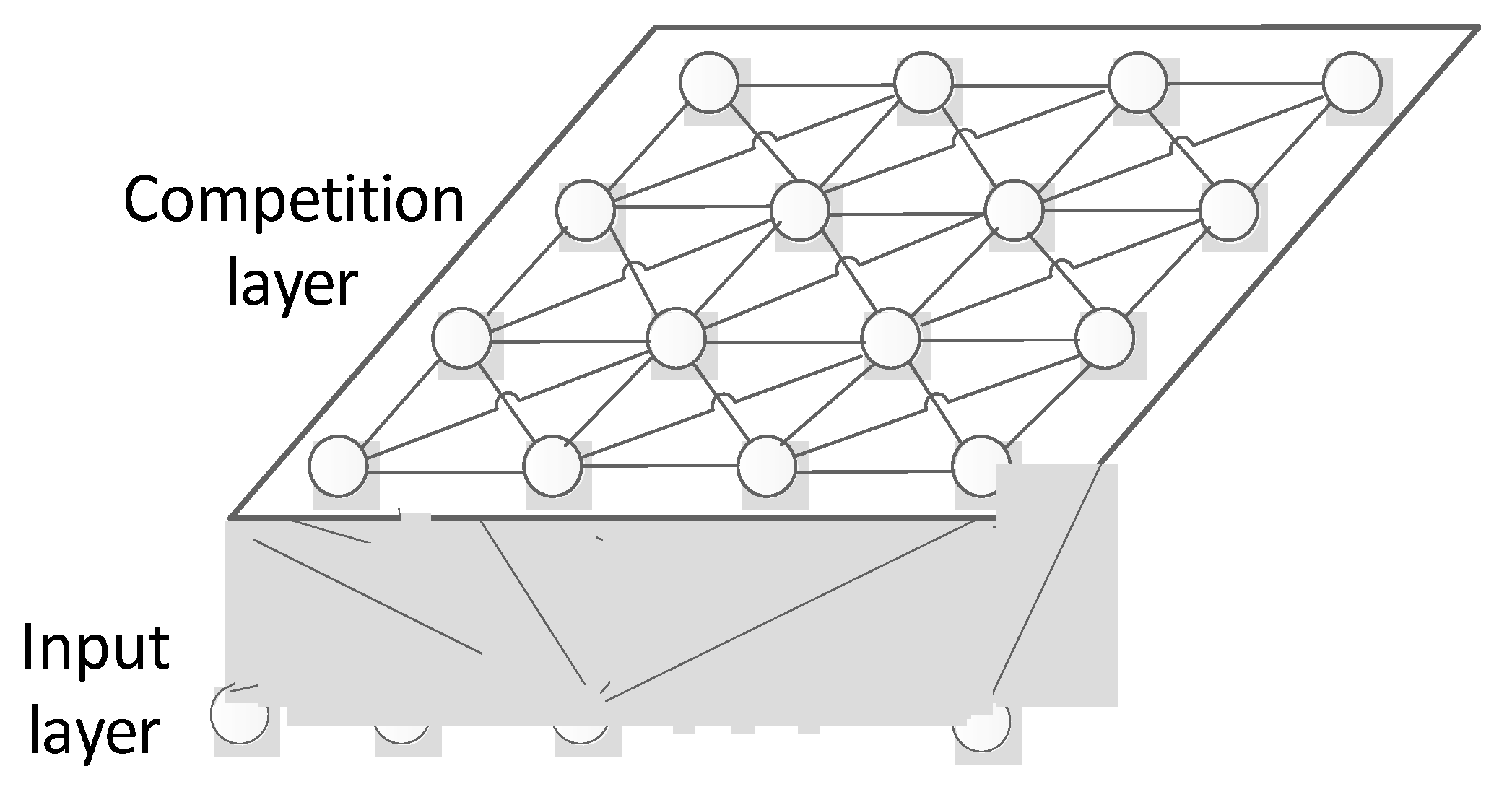

2.2.2. Training of the Self-Organizing Competitive Neural Network

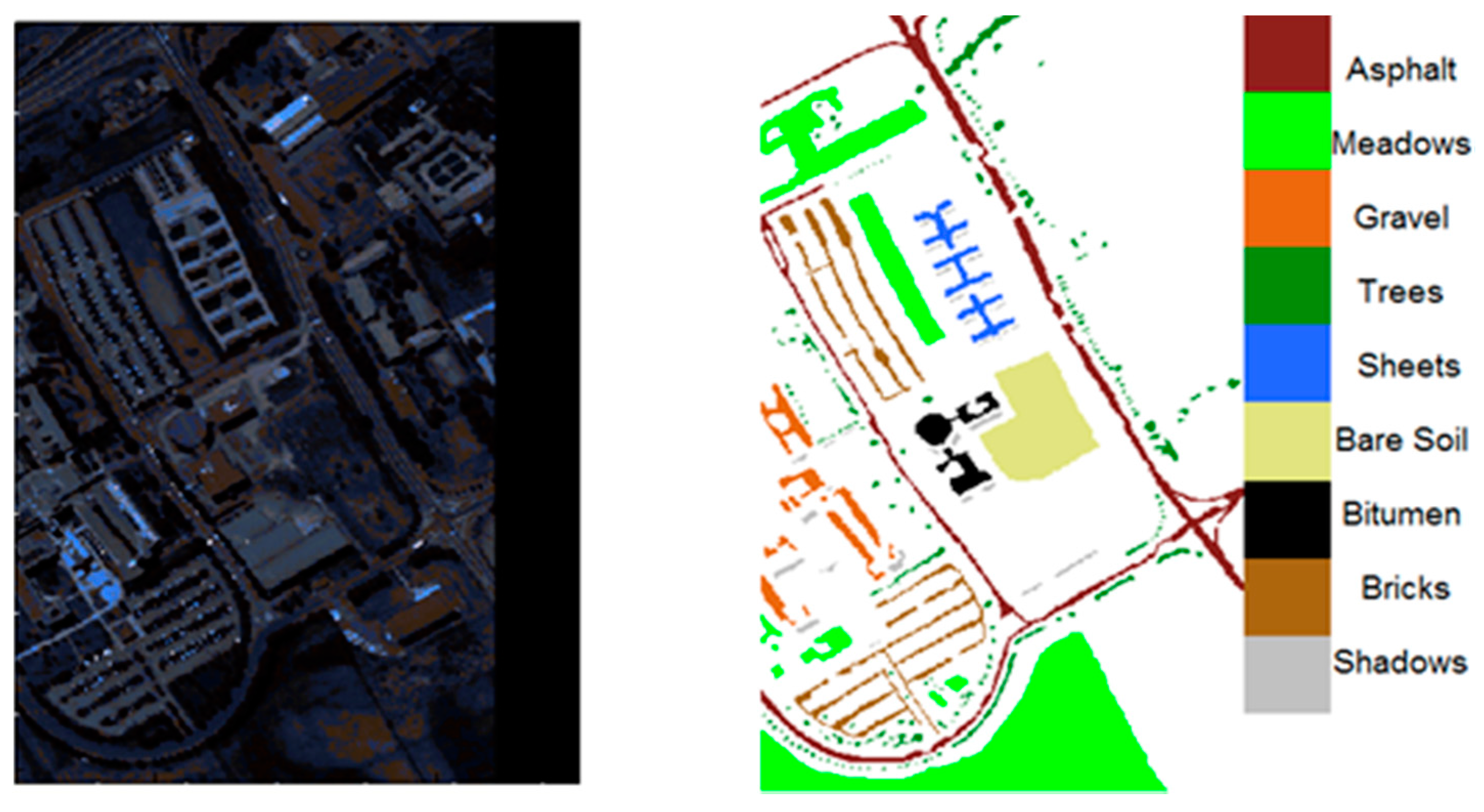
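A self-organizing competitive network of the kind named in this section can be sketched as a winner-take-all layer: for each input, the neuron whose weight vector is closest wins, and only its weights move toward the input (the Kohonen rule). The minimal sketch below assumes squared Euclidean distance, a fixed learning rate, farthest-point initialization (chosen here to avoid dead neurons), and, as the semi-supervised step, labeling each neuron by majority vote over a few labeled samples. These choices, the toy data, and the names `train_competitive`, `label_neurons`, and `classify` are hypothetical and are not taken from the paper.

```python
from collections import Counter


def dist2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def winner(weights, x):
    """Index of the neuron whose weight vector is closest to x (winner-take-all)."""
    return min(range(len(weights)), key=lambda k: dist2(weights[k], x))


def train_competitive(samples, n_neurons, epochs=50, lr=0.1):
    # Assumption: farthest-point initialization. Start from the first sample,
    # then repeatedly add the sample farthest from all neurons chosen so far.
    weights = [list(samples[0])]
    while len(weights) < n_neurons:
        far = max(samples, key=lambda x: min(dist2(w, x) for w in weights))
        weights.append(list(far))
    for _ in range(epochs):
        for x in samples:
            k = winner(weights, x)
            # Kohonen rule: move only the winning neuron toward the input.
            weights[k] = [w + lr * (xi - w) for w, xi in zip(weights[k], x)]
    return weights


def label_neurons(weights, labeled):
    """Assumed semi-supervised step: each neuron takes the majority label it wins."""
    votes = [Counter() for _ in weights]
    for x, y in labeled:
        votes[winner(weights, x)][y] += 1
    return [v.most_common(1)[0][0] if v else None for v in votes]


def classify(weights, neuron_labels, x):
    """Label of the winning neuron."""
    return neuron_labels[winner(weights, x)]


# Hypothetical 2-D features (e.g. DBN outputs) forming two clusters.
samples = [[0.1, 0.2], [0.15, 0.1], [0.2, 0.15], [0.85, 0.9], [0.9, 0.8], [0.8, 0.85]]
labeled = [([0.1, 0.2], "A"), ([0.9, 0.8], "B")]  # one labeled sample per class
weights = train_competitive(samples, n_neurons=2)
neuron_labels = label_neurons(weights, labeled)
print(classify(weights, neuron_labels, [0.12, 0.12]))  # prints A
```

The unlabeled samples shape the clusters, and the labeled samples are used only to name them, which is why a handful of labels can suffice when the clusters are well separated.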

3. Test Experiment

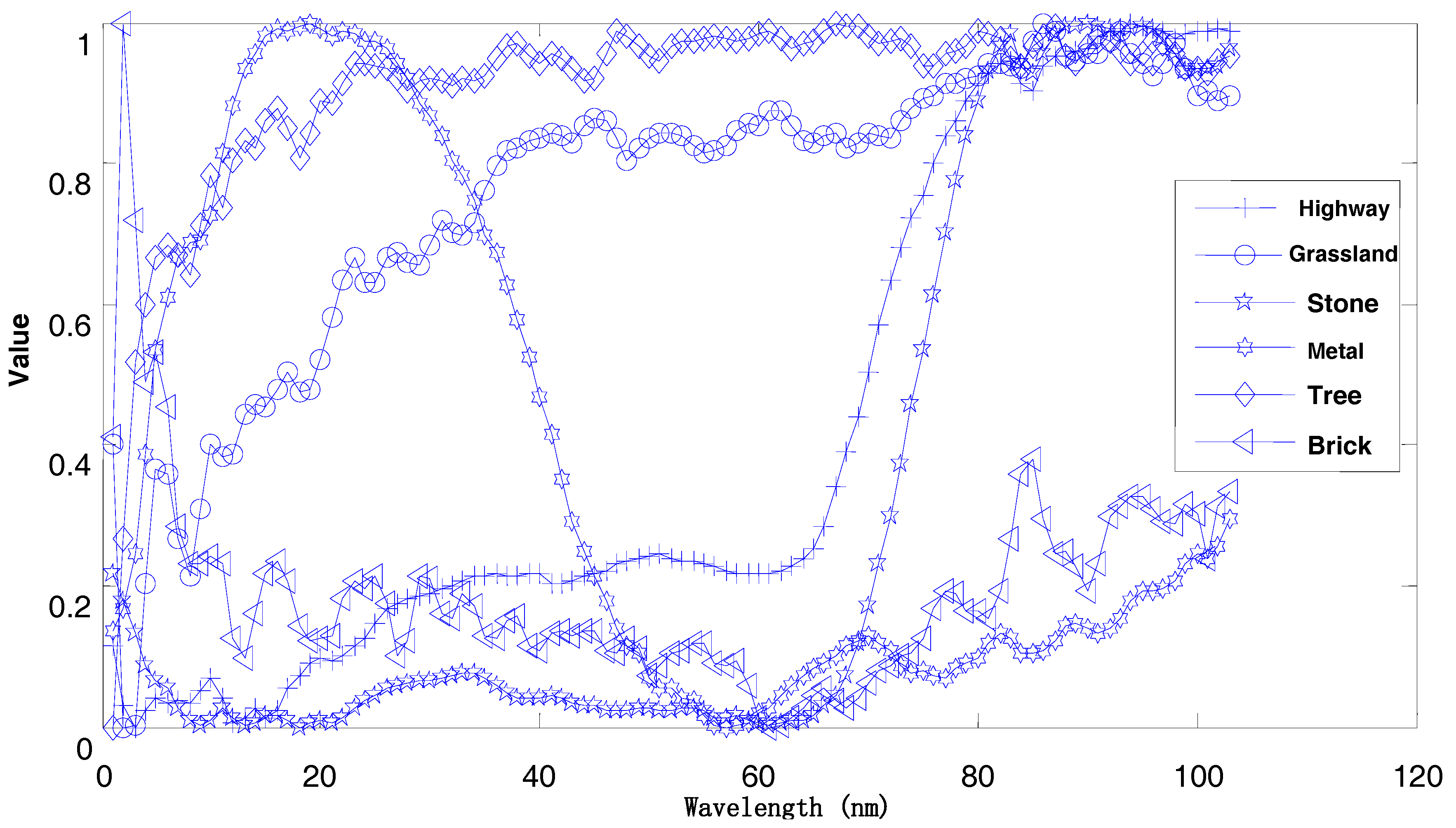

3.1. Data Sources

3.2. Dimension Reduction with the Deep Belief Network

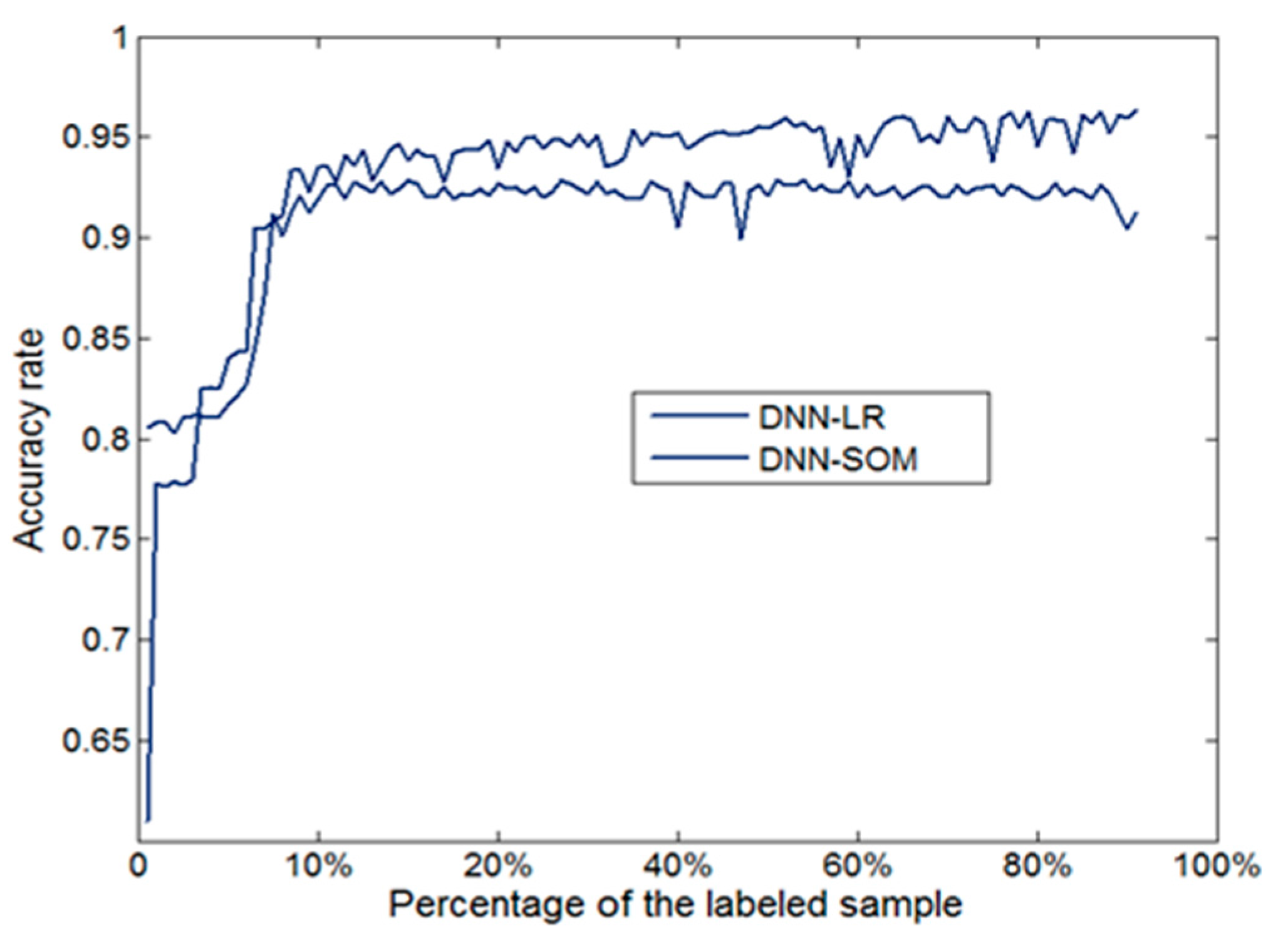

3.3. Labeled Sample Size and Classification Accuracy

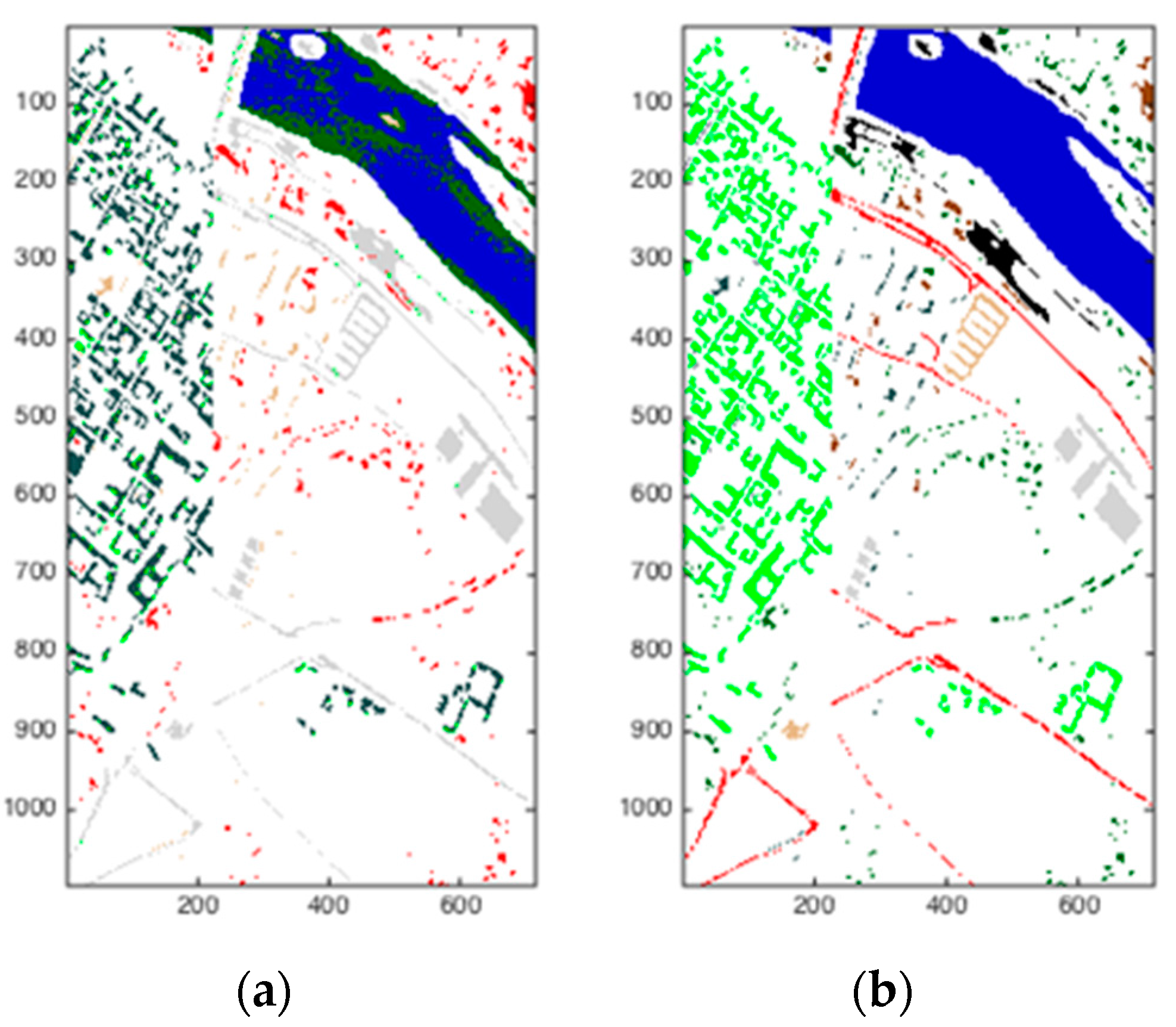

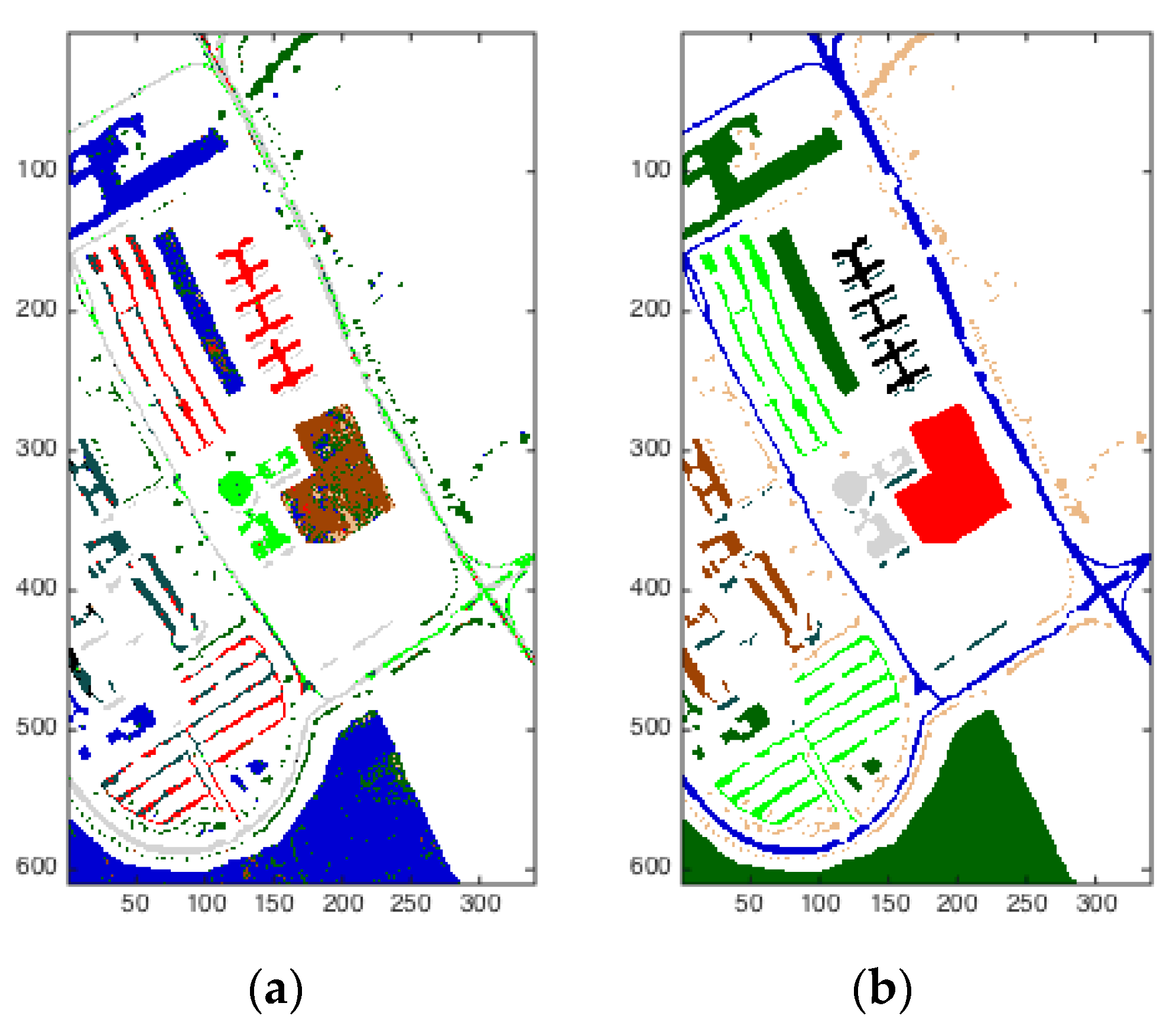

3.4. Classification Results of Public Hyperspectral Databases

4. Comparative Experiments

4.1. Simulation

4.1.1. Simulation Data Source

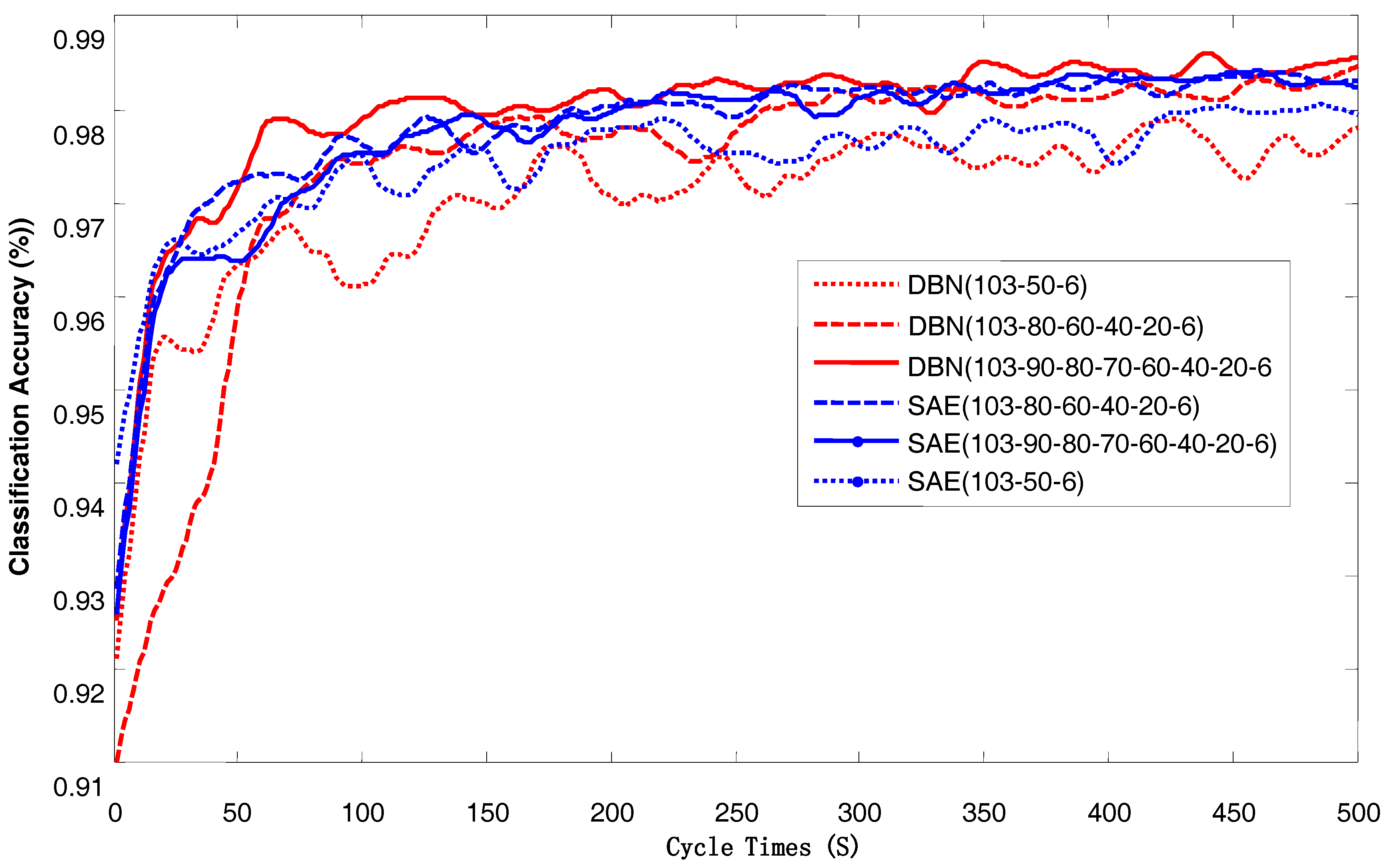

4.1.2. Depth of the Deep Learning Network

4.1.3. Cycle Number Selection

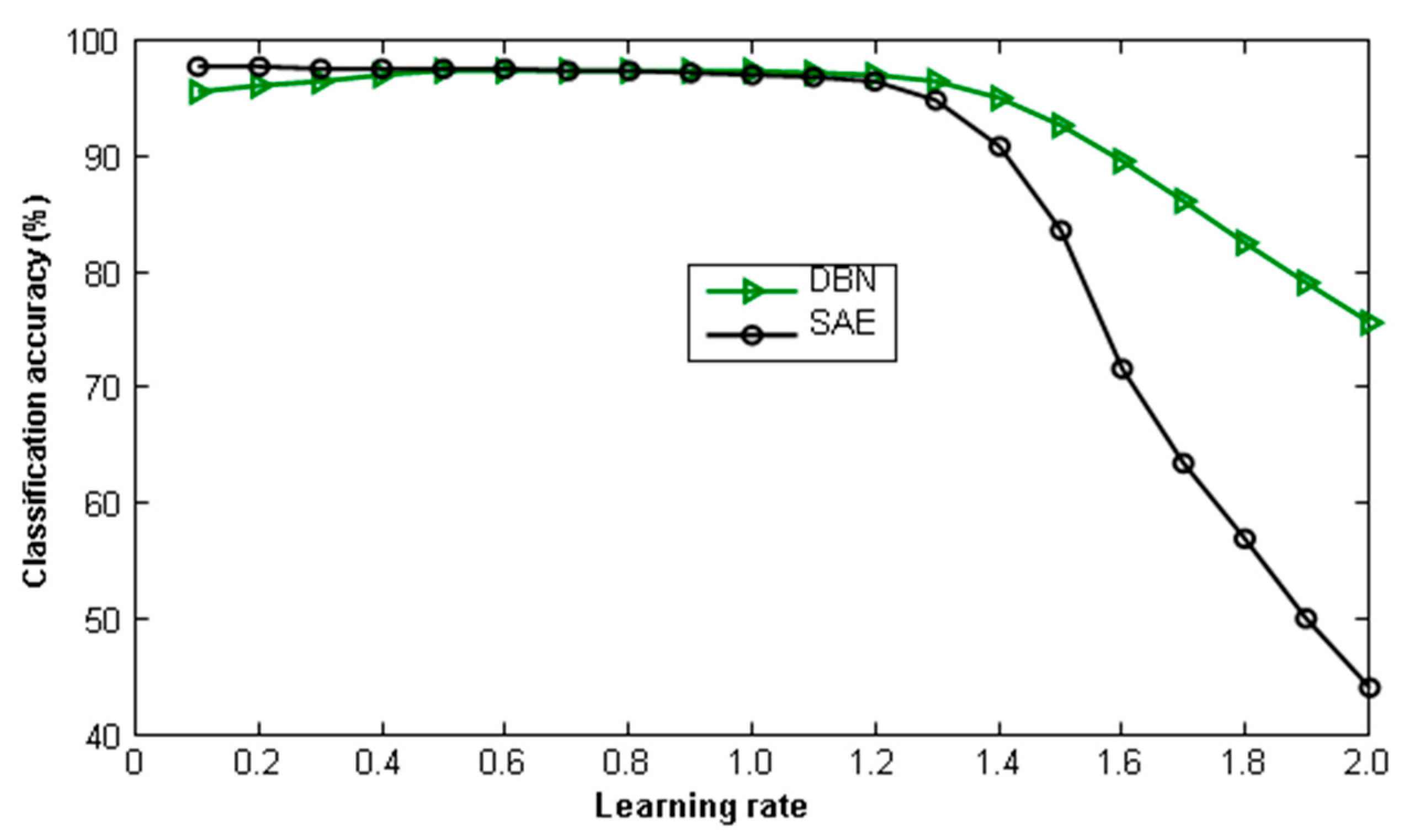

4.1.4. Learning Rate Choice

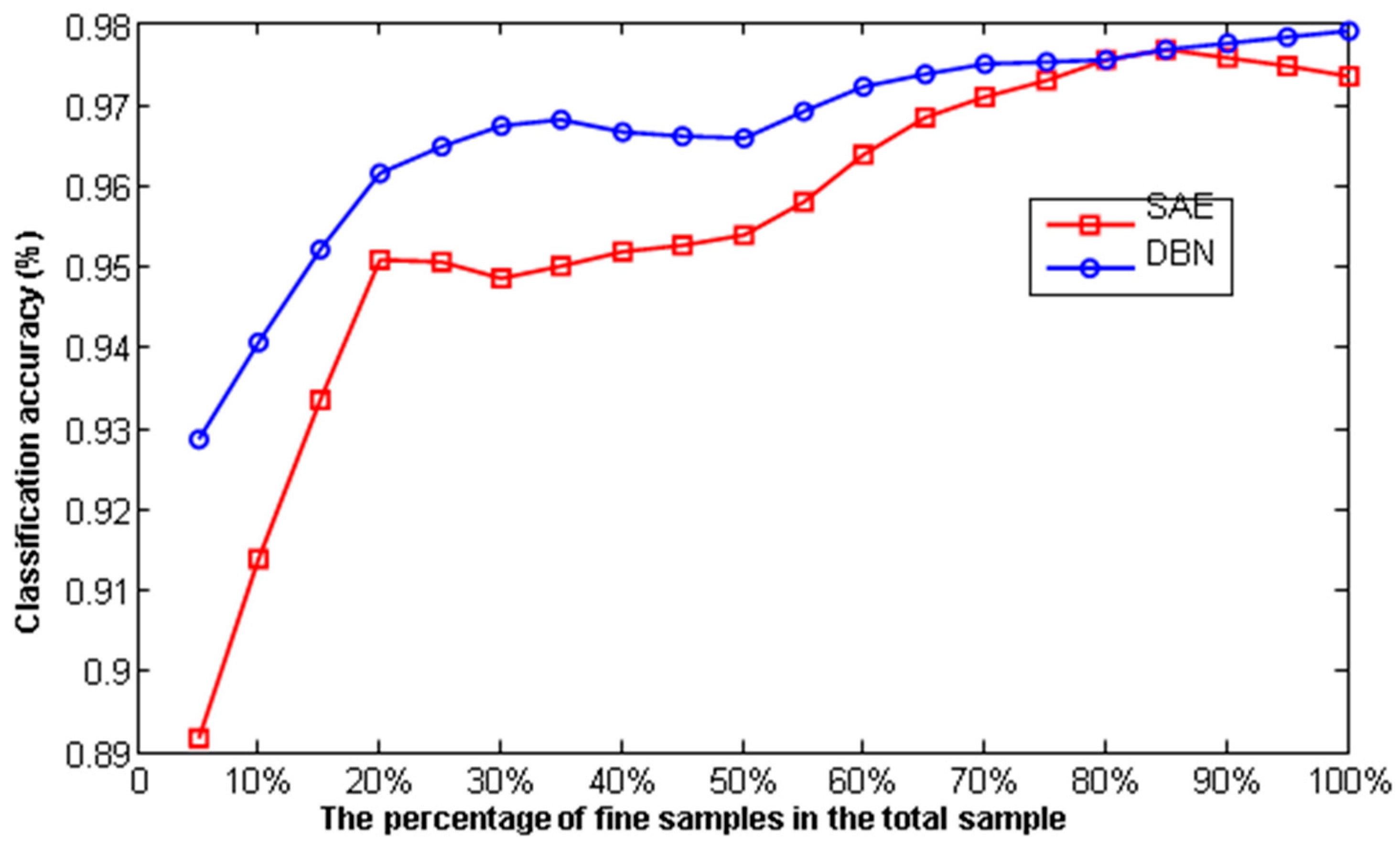

4.1.5. Relationship between Classification Accuracy and Fine-Tuning Sample Size

4.2. Deep Learning Combined with Other Algorithms

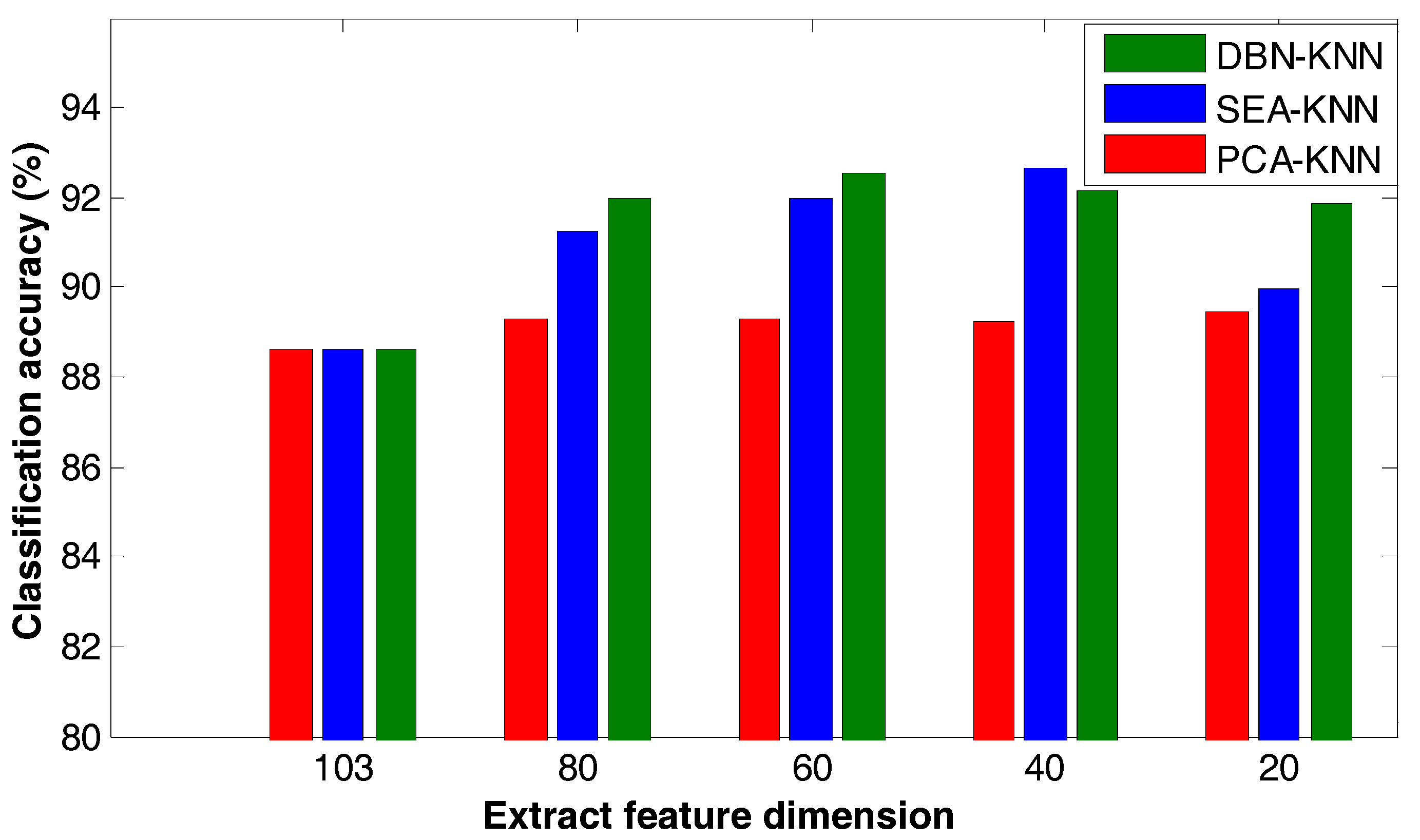

4.2.1. Contrast between Deep Learning and Principal Component Analysis

4.2.2. Comparison of Classification Results of Deep Learning and Other Algorithms

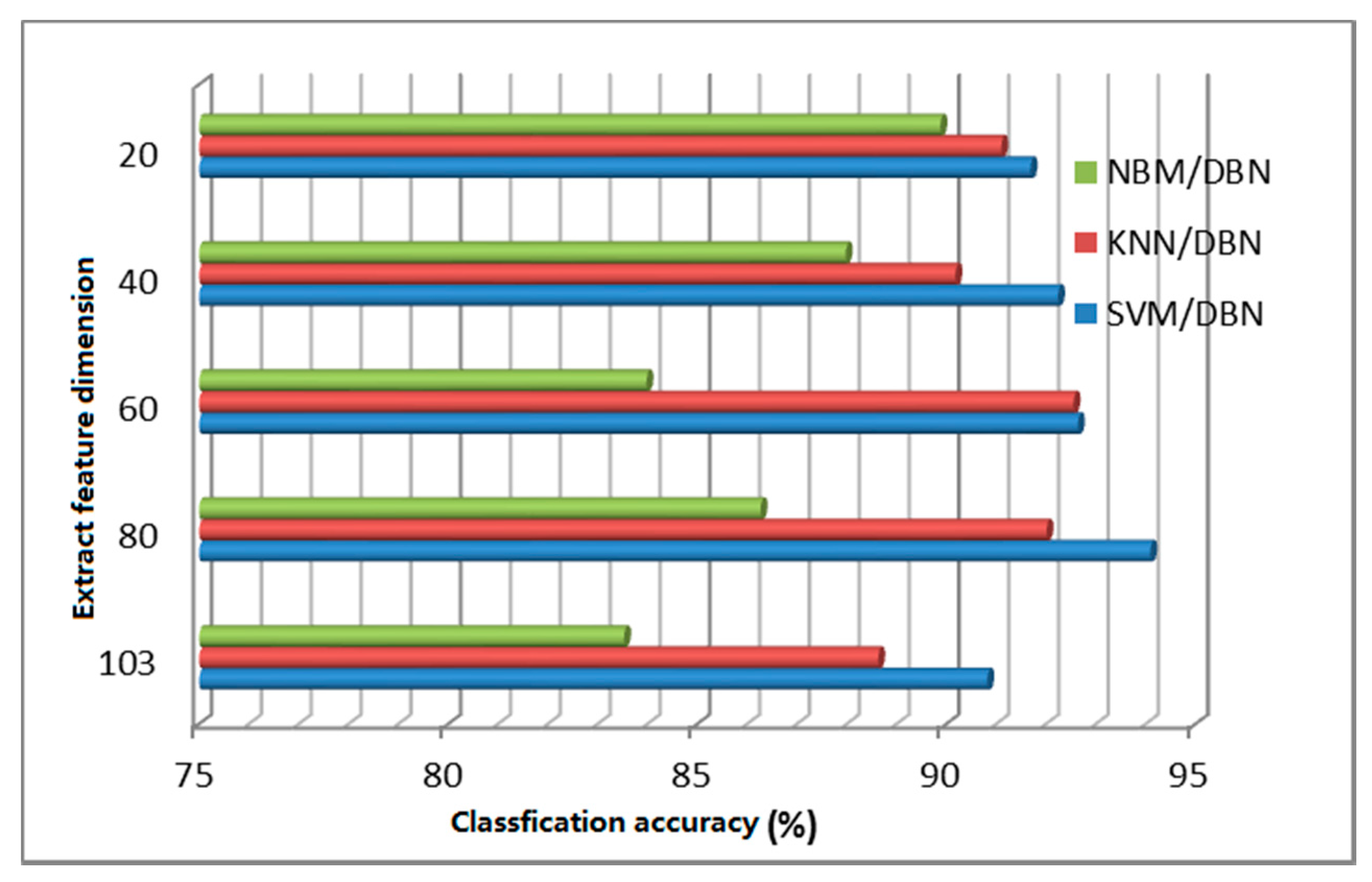

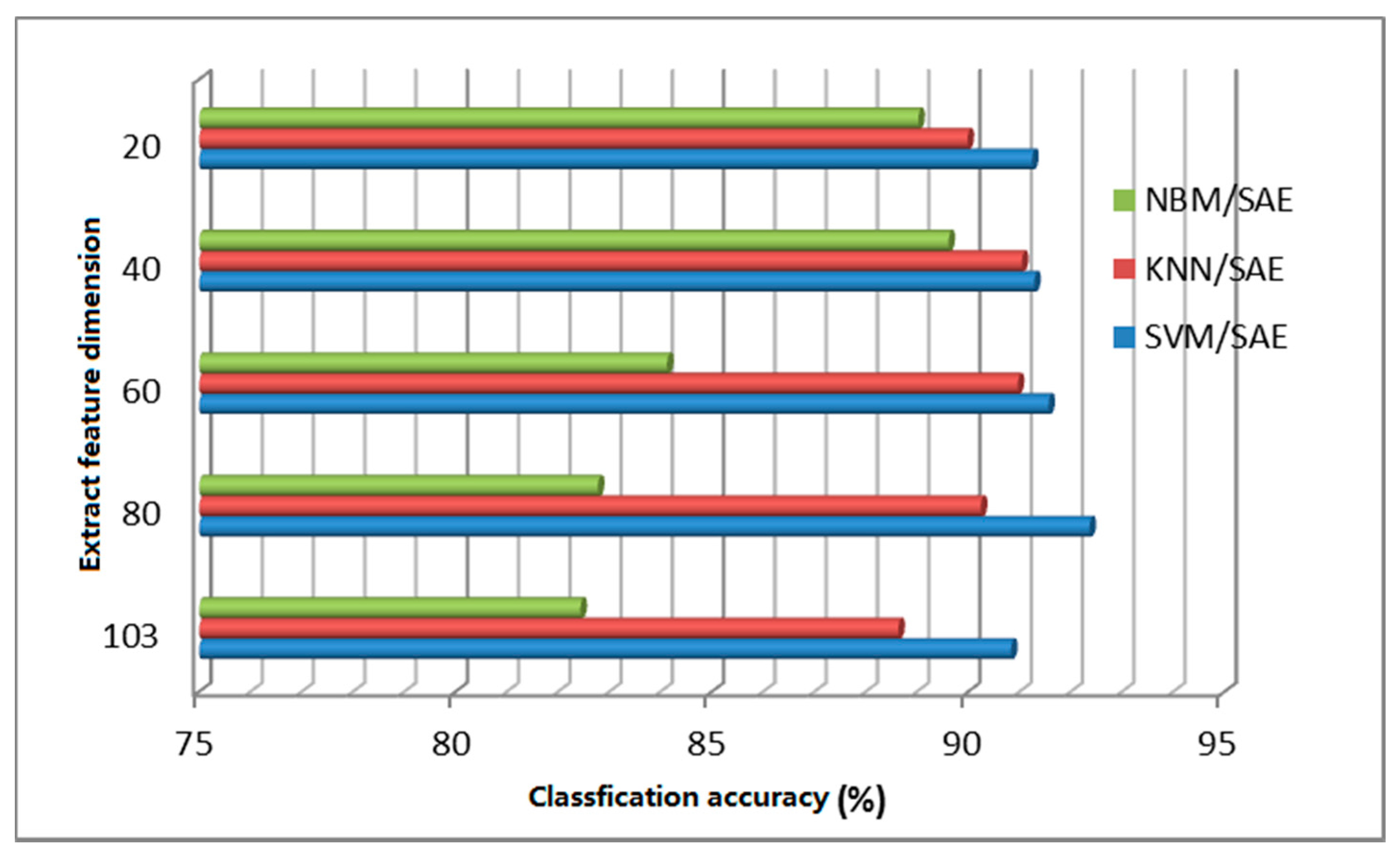

4.2.3. Classification with Deep-Learning Features Compared with Other Algorithms

4.3. Algorithm Verification

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Classification Method | Classification Accuracy (%) | Memory Usage (MB) | Time Spent (s) |
|---|---|---|---|
| SAE-LR | 93.69 | 37 | 0.79 |
| DBN-LR | 92.87 | 35 | 0.53 |
| PCA-NBM | 88.08 | 39 | 28.054 |
| KNN | 88.66 | 126 | 3.713 |
| SVM | 90.84 | 76 | 5.99 |

| Method | Accuracy on Compressed Data (%) | Classification Time, Raw Data (s) | Classification Time, Compressed Data (s) |
|---|---|---|---|
| KNN | 89.53 | 40.825 | 20.097 |
| NBM | 86.45 | 115.11 | 60.11 |
| SVM | 89.7 | 30.297 | 10.513 |
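The run-time effect of compression in the KNN/NBM/SVM timing table can be summarized as a speedup ratio, the raw-data classification time divided by the compressed-data classification time. The values below are computed directly from the listed times and add no new measurements.

```latex
S = \frac{t_{\text{raw}}}{t_{\text{compressed}}}, \qquad
S_{\text{KNN}} = \frac{40.825}{20.097} \approx 2.03, \quad
S_{\text{NBM}} = \frac{115.11}{60.11} \approx 1.92, \quad
S_{\text{SVM}} = \frac{30.297}{10.513} \approx 2.88
```

In other words, compression roughly halves classification time for KNN and NBM and reduces it to about one third for SVM.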

| Method | 12 Labeled Samples | 24 Labeled Samples | 36 Labeled Samples | 50 Labeled Samples |
|---|---|---|---|---|
| KNN | 73.50% | 82.71% | 84.50% | 85.38% |
| NBM | 71.21% | 79.95% | 81.13% | 86.32% |
| SVM | 76.76% | 81.92% | 85.45% | 85.72% |
| DNN-LR | 78.04% | 80.80% | 88.18% | 89.99% |
| DNN-SOM | 83.60% | 84.13% | 85.31% | 86.43% |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lan, W.; Li, Q.; Yu, N.; Wang, Q.; Jia, S.; Li, K. The Deep Belief and Self-Organizing Neural Network as a Semi-Supervised Classification Method for Hyperspectral Data. Appl. Sci. 2017, 7, 1212. https://doi.org/10.3390/app7121212


Chicago/Turabian style: Lan, Wei, Qingjian Li, Nan Yu, Quanxin Wang, Suling Jia, and Ke Li. 2017. "The Deep Belief and Self-Organizing Neural Network as a Semi-Supervised Classification Method for Hyperspectral Data." Applied Sciences 7, no. 12: 1212. https://doi.org/10.3390/app7121212