An Efficient Compensation Method for Limited-View Photoacoustic Imaging Reconstruction Based on Gerchberg–Papoulis Extrapolation

Abstract

1. Introduction

2. Theory and Methods

2.1. Model-Based Photoacoustic

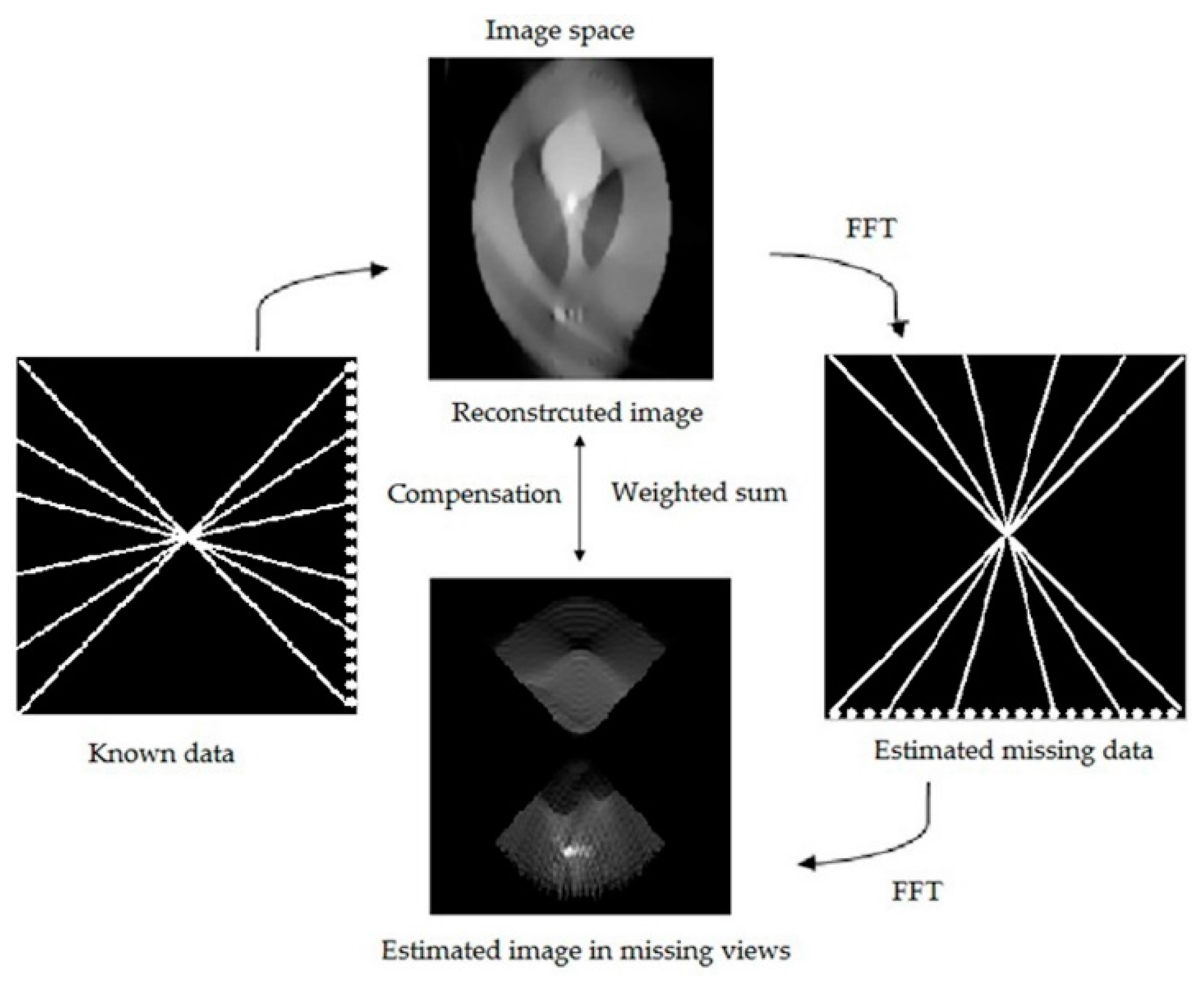

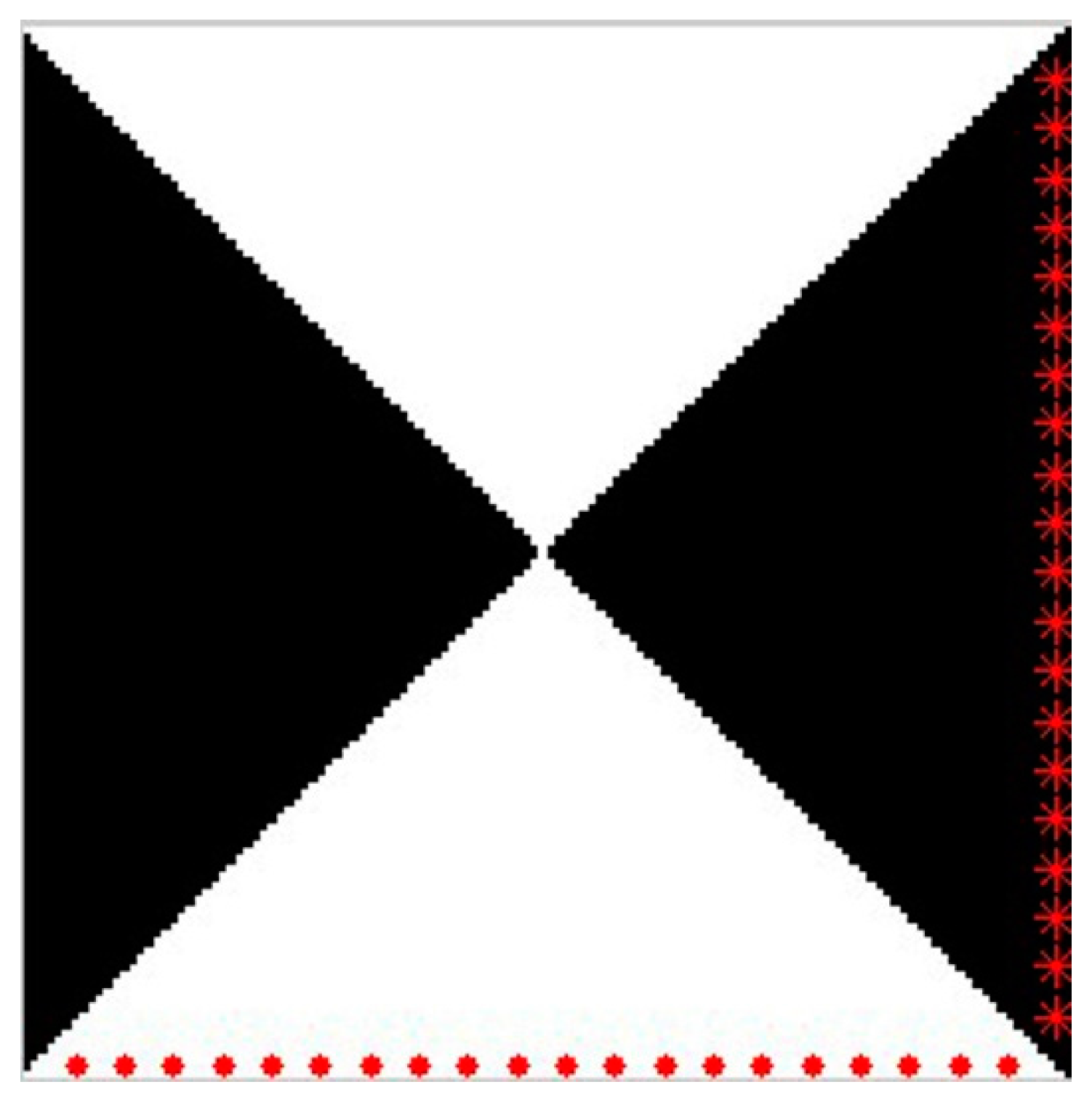

2.2. GPEF Compensation Method

2.3. TV-GPEF Algorithm

- Step 1 (initialization): Input $A$, $\alpha$, $\lambda$, and $\eta$. Set $\delta_0 = 1$ and $b_0 = 0$. Determine $M_S$ and $M_I$ from the scan pattern of the reconstruction, following the rule in [38] mentioned above.

- Step 2: Update $u_n$ using Equation (18) for the given $A'_{n-1}$, then update $A_n$ using Equation (19) for the given $u_n$. Update $b_n$ and $\delta_n$ using Equation (17).

- Step 3: Apply Equations (10) and (11) to the image $A_n$ to obtain the compensated image $A'_n$ of the $n$th iteration.

- Step 4: If the termination condition is met, stop. Otherwise, set $n = n + 1$ and return to Steps 2 and 3. The termination condition is as follows:

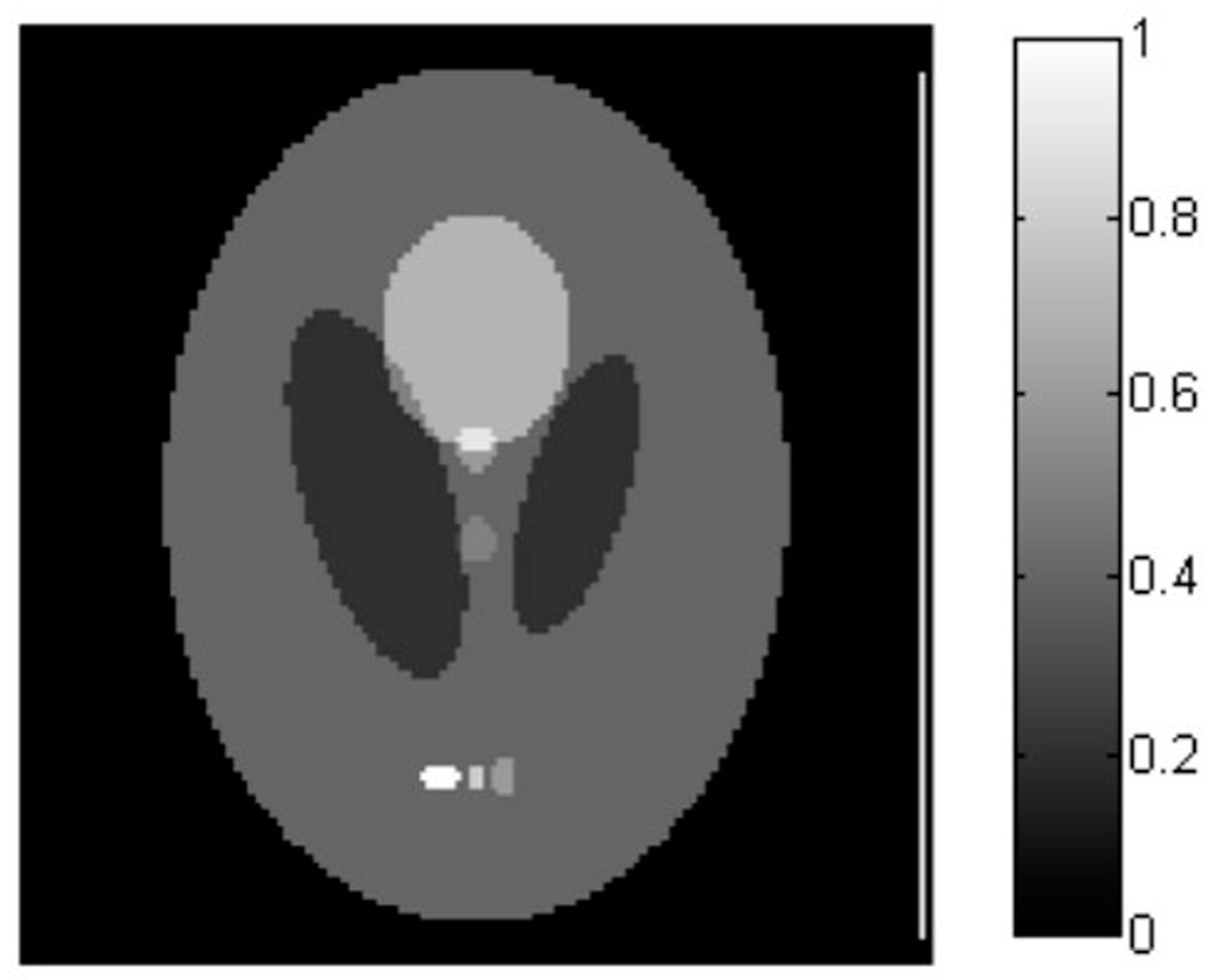
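The loop above alternates a TV-regularized update (Step 2) with a Gerchberg–Papoulis compensation (Step 3). Because Equations (10), (11), and (17)–(19) and the exit condition appear only as images in this copy, the Python sketch below uses generic stand-ins rather than the paper's formulas: a gradient step on a smoothed TV penalty replaces Equations (17)–(19), a textbook Gerchberg–Papoulis projection (re-impose the known k-space samples on a "visible" mask, then enforce an image-domain support and nonnegativity) replaces Equations (10) and (11), and a relative-change threshold replaces the exit condition. The wedge-shaped visible mask (loosely mimicking a detector line parallel to the x axis), the support disk, the phantom, and the names `tv_gp_sketch`, `lam`, `eta`, and `tol` are all hypothetical; `lam` and `eta` only borrow the names of λ and η, and their roles here are assumptions.

```python
import numpy as np


def tv_gradient(a, eps=1e-3):
    """Gradient of the smoothed isotropic TV penalty sum(sqrt(|grad a|^2 + eps^2))."""
    # Forward differences; the last column/row difference is 0 (Neumann boundary).
    gx = np.diff(a, axis=1, append=a[:, -1:])
    gy = np.diff(a, axis=0, append=a[-1:, :])
    mag = np.sqrt(gx**2 + gy**2 + eps**2)
    px, py = gx / mag, gy / mag
    # Apply the adjoint of the forward difference (i.e. minus the divergence).
    return -(np.diff(px, axis=1, prepend=0.0) + np.diff(py, axis=0, prepend=0.0))


def gp_compensate(a, known_spec, visible, support):
    """Generic Gerchberg-Papoulis step (stand-in for Eqs. (10)-(11), not the paper's form)."""
    spec = np.fft.fft2(a)
    spec[visible] = known_spec[visible]  # frequency domain: restore the measured (visible) data
    a = np.real(np.fft.ifft2(spec))
    return np.where(support, np.maximum(a, 0.0), 0.0)  # image domain: support + nonnegativity


def tv_gp_sketch(known_spec, visible, support, lam=0.05, eta=0.5, tol=1e-4, max_iter=300):
    """Hypothetical TV + GP loop mirroring Steps 1-4; returns (image, iterations used)."""
    # Step 1 stand-in: start from the GP-projected zero-filled (limited-view) estimate.
    a = gp_compensate(np.zeros(support.shape), known_spec, visible, support)
    n = 0
    for n in range(1, max_iter + 1):
        a_prev = a
        a = a - eta * lam * tv_gradient(a)                  # Step 2 stand-in: TV step
        a = gp_compensate(a, known_spec, visible, support)  # Step 3 stand-in: compensation
        # Step 4 stand-in: stop when the relative change falls below tol.
        change = np.linalg.norm(a - a_prev) / max(np.linalg.norm(a_prev), 1e-12)
        if change < tol:
            break
    return a, n


def make_problem(n=64):
    """Toy phantom, its spectrum, a wedge-shaped 'visible' k-space mask, and a support disk."""
    y, x = np.mgrid[0:n, 0:n] - n / 2
    truth = ((x**2 + y**2) < (n / 6) ** 2).astype(float)
    truth[(np.abs(x - 8) < 4) & (np.abs(y + 12) < 4)] = 0.5
    kx = np.fft.fftfreq(n)[None, :]
    ky = np.fft.fftfreq(n)[:, None]
    visible = np.abs(kx) <= np.abs(ky)  # symmetric under k -> -k, so GP output stays real
    support = (x**2 + y**2) < (n / 3) ** 2
    return truth, np.fft.fft2(truth), visible, support


def rel_err(image, truth):
    return np.linalg.norm(image - truth) / np.linalg.norm(truth)


if __name__ == "__main__":
    truth, known, visible, support = make_problem()
    zero_filled = np.real(np.fft.ifft2(np.where(visible, known, 0)))
    rec, iters = tv_gp_sketch(known, visible, support)
    print(f"zero-filled: {rel_err(zero_filled, truth):.3f}  "
          f"TV+GP ({iters} it): {rel_err(rec, truth):.3f}")
```

With `lam=0` the loop reduces to alternating projections onto two convex sets that both contain the true image, so the error to that image cannot increase from one iteration to the next; the TV step gives up that guarantee in exchange for a penalty that is meant to suppress artifacts in the invisible region.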

3. Simulation Results

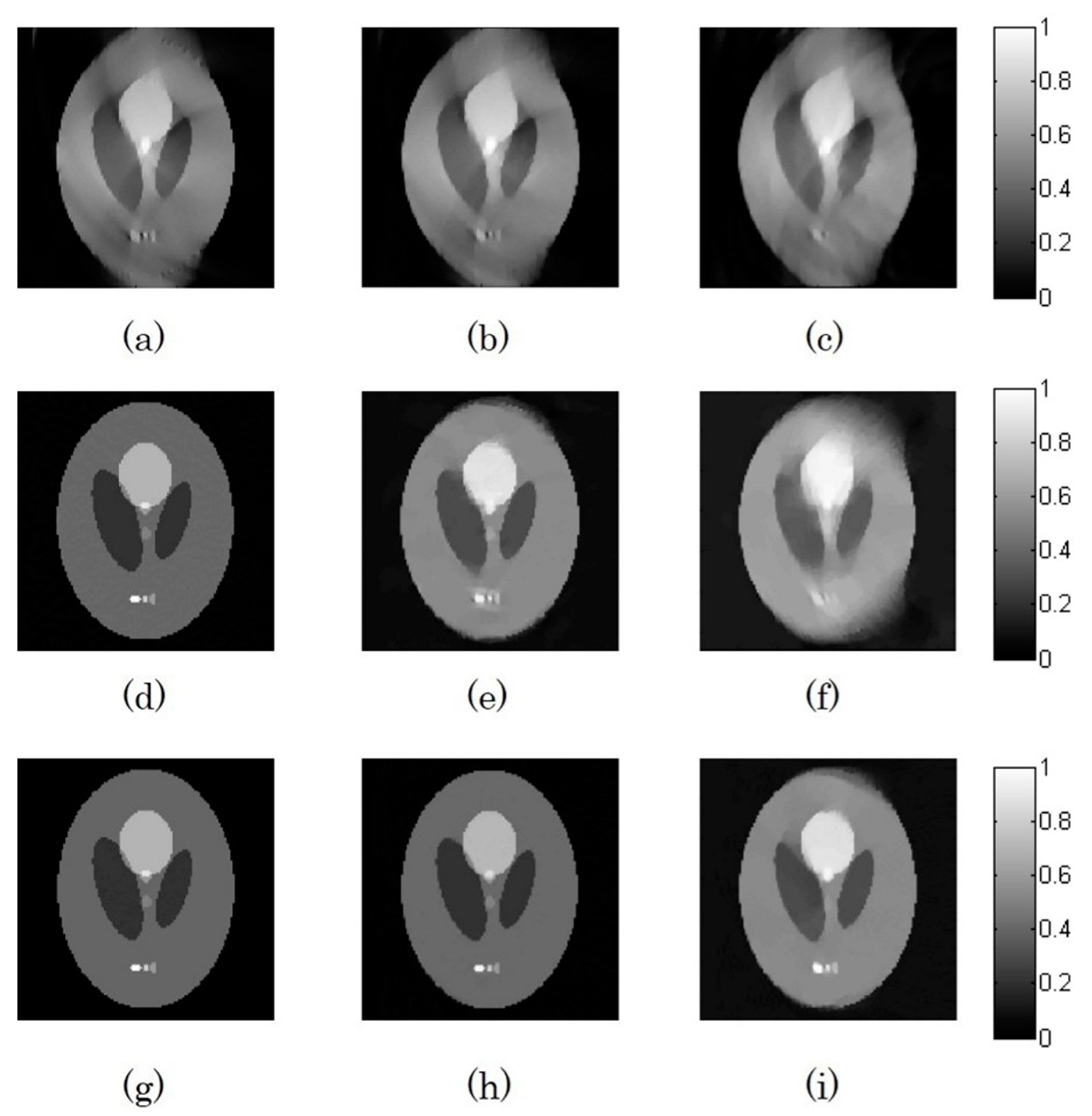

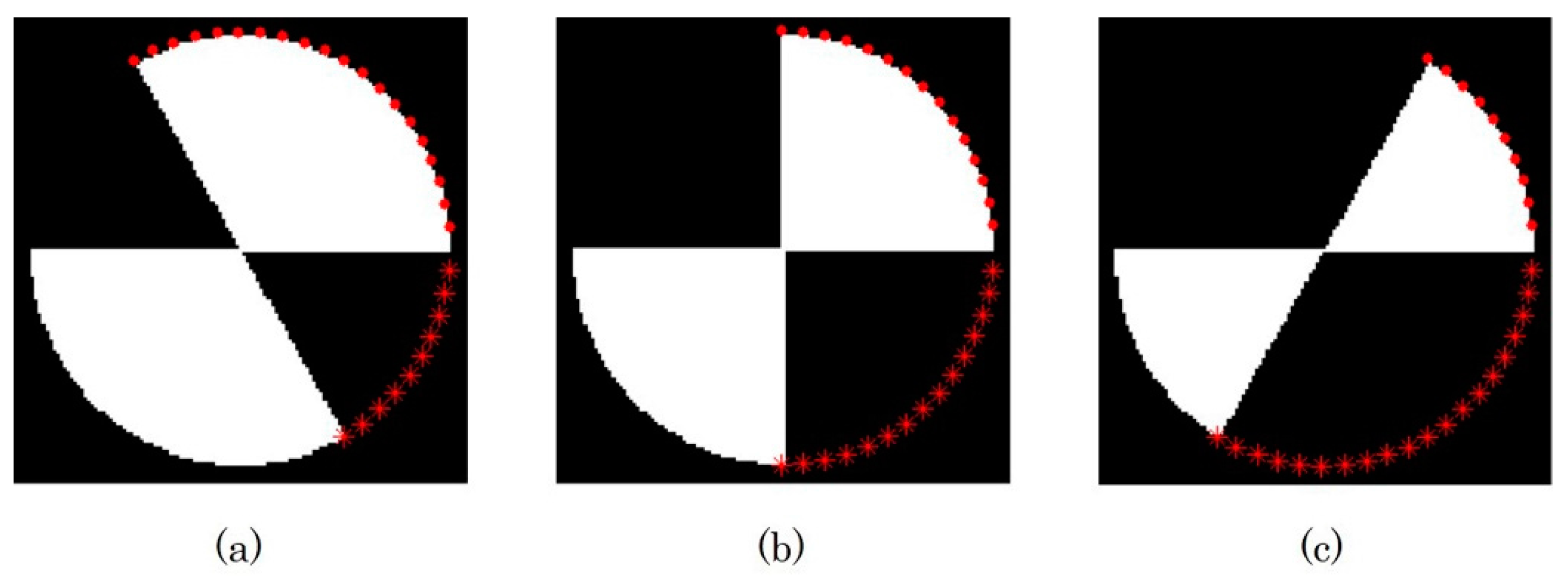

3.1. Straight-Line Scanning

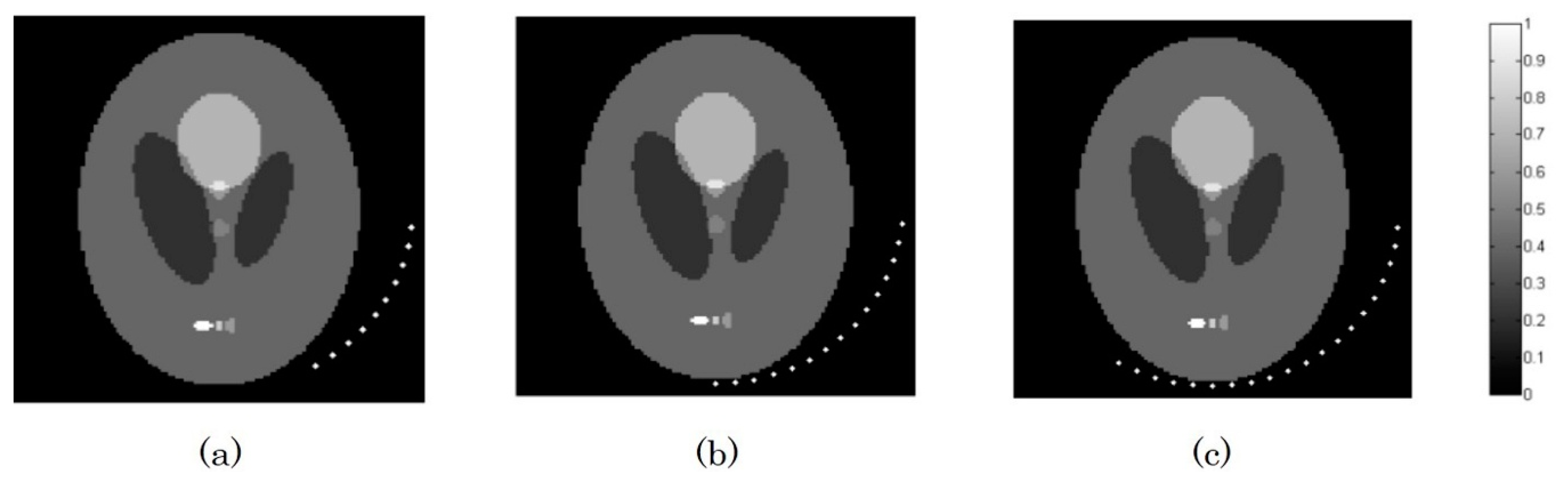

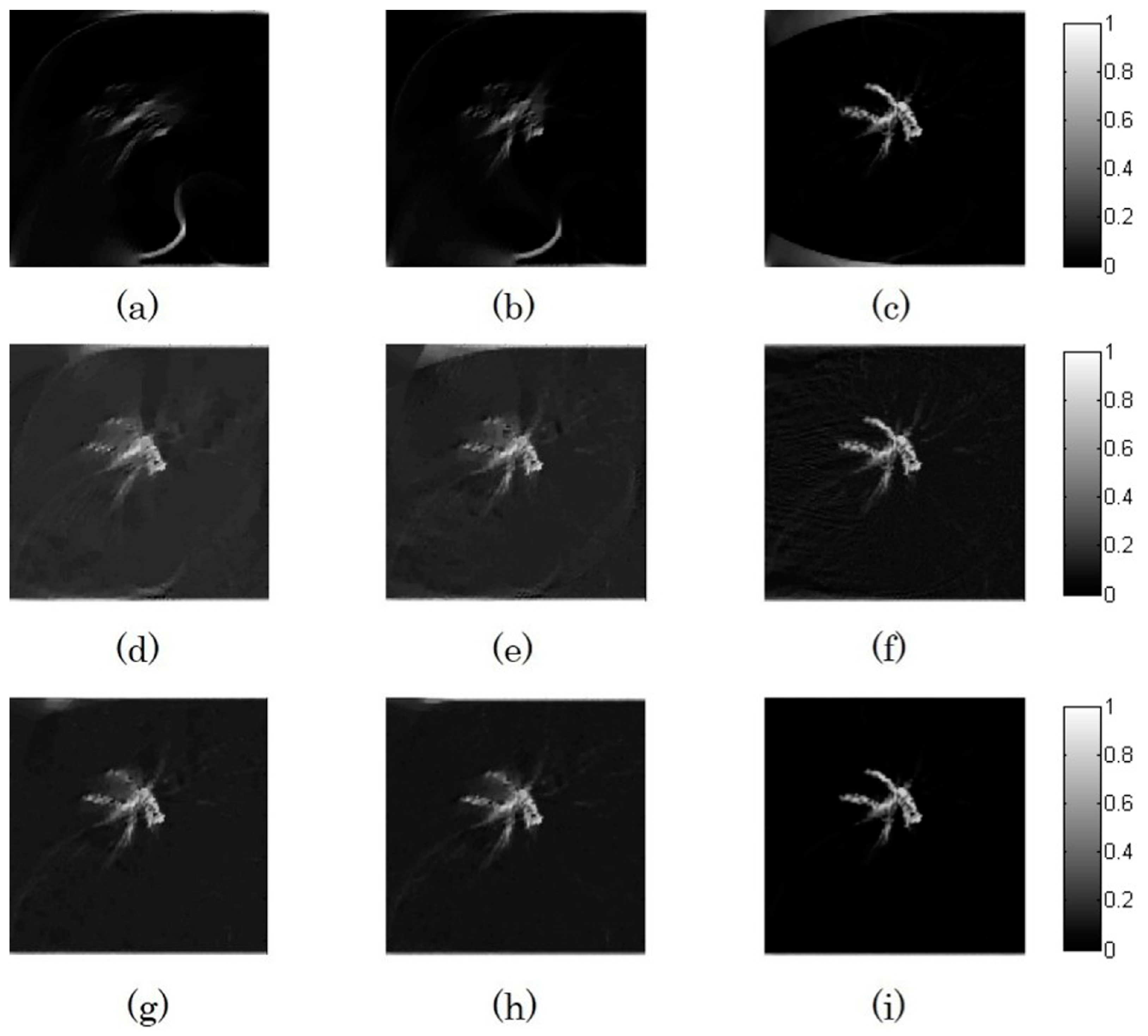

3.2. Limited-Angle Circular Scanning

3.3. Noise Robustness

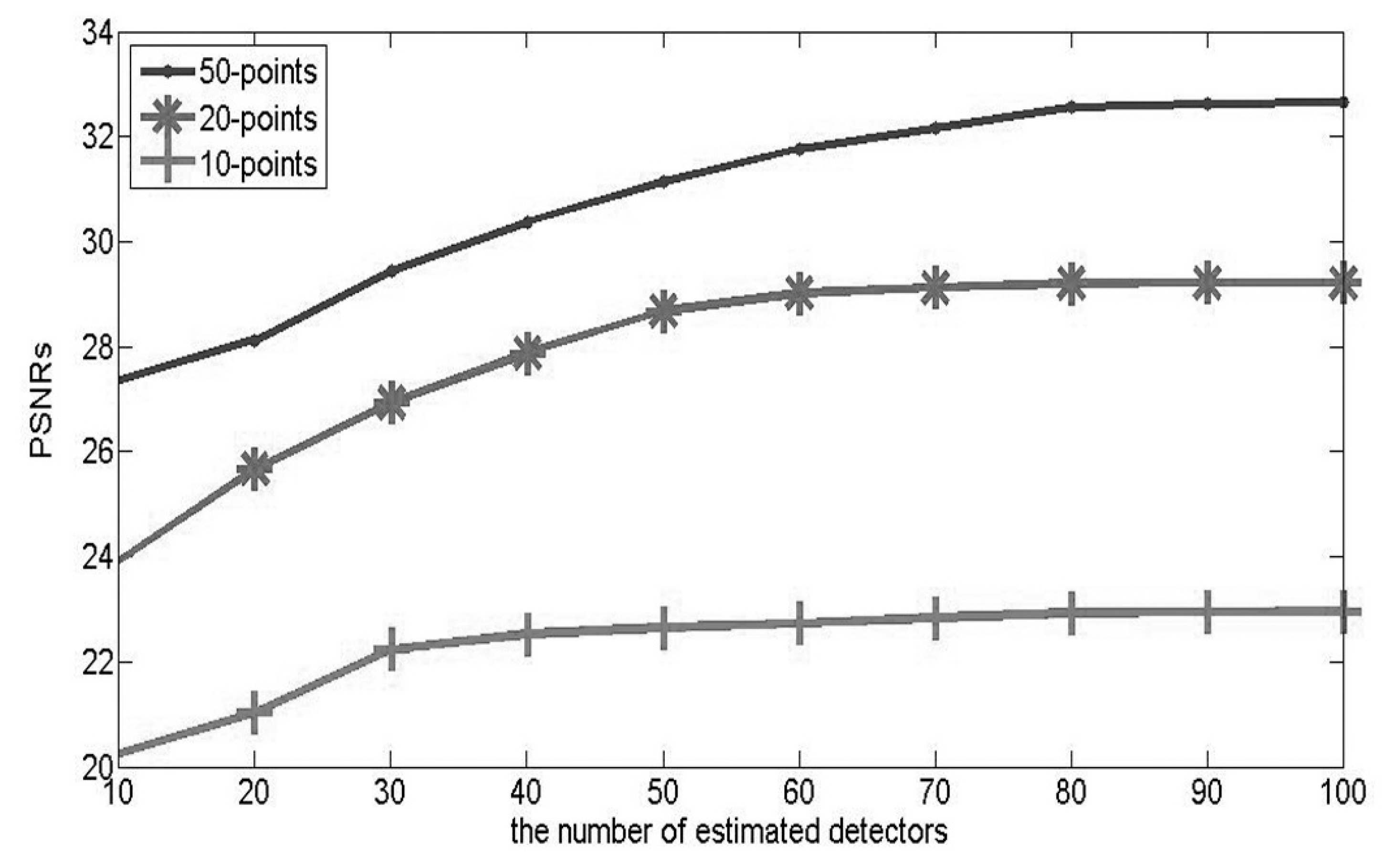

3.4. Convergence Speed

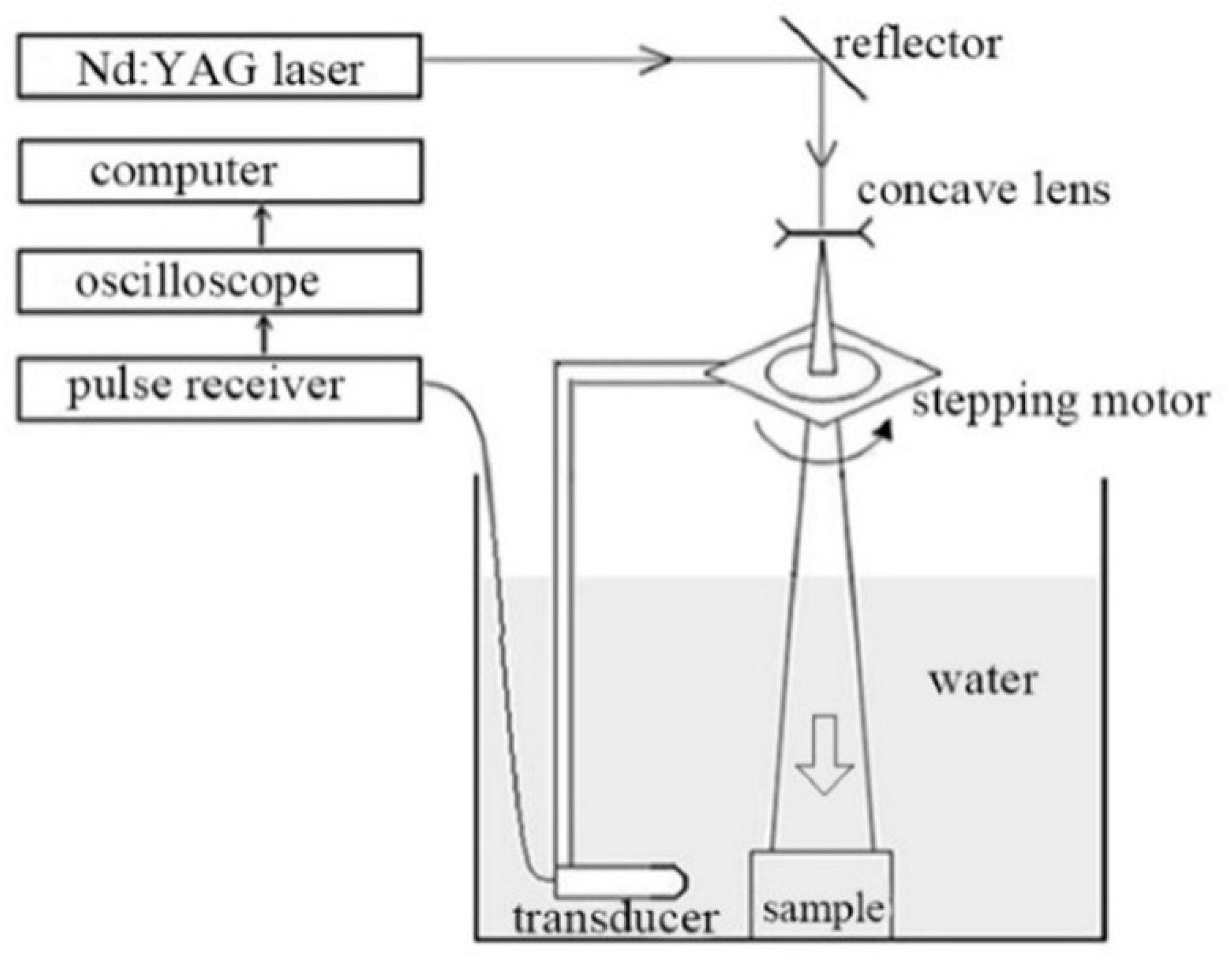

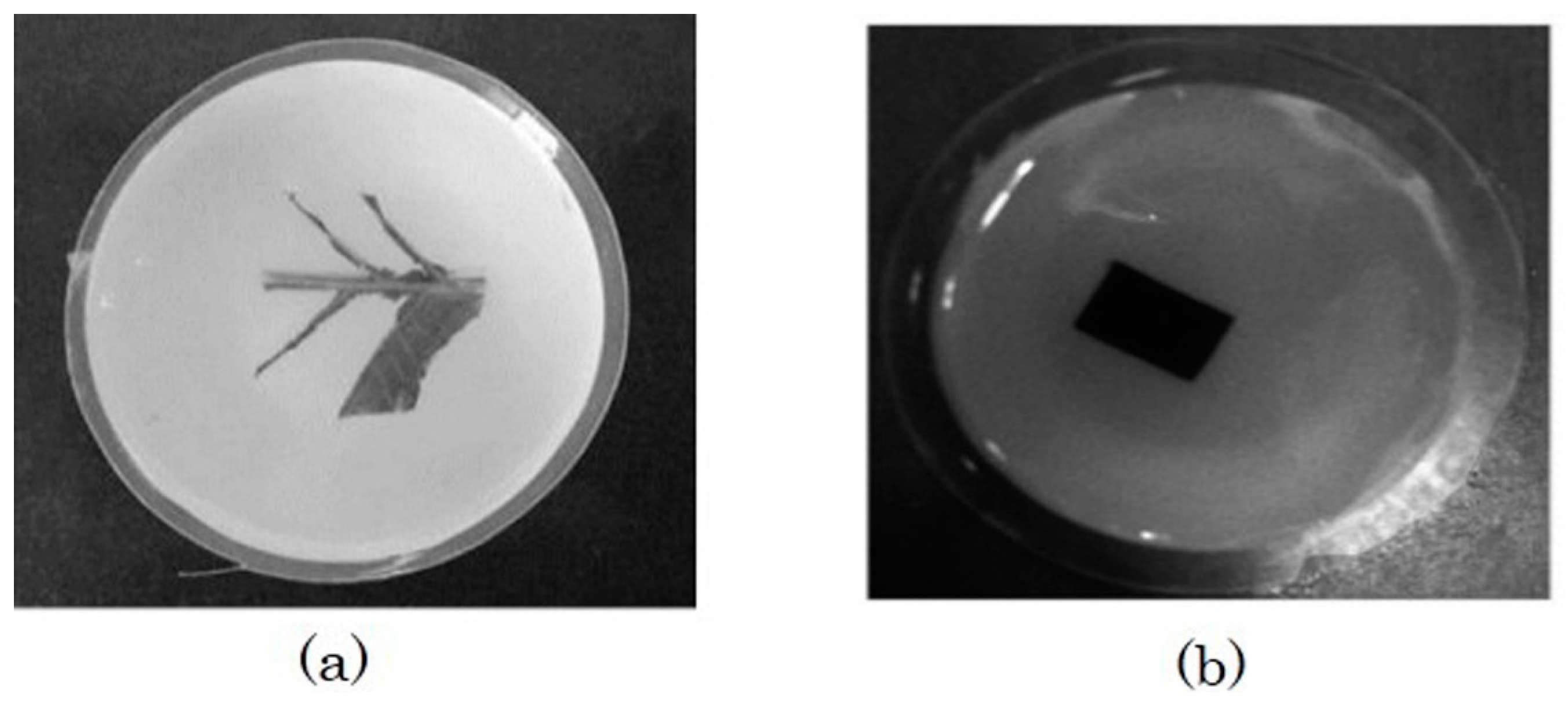

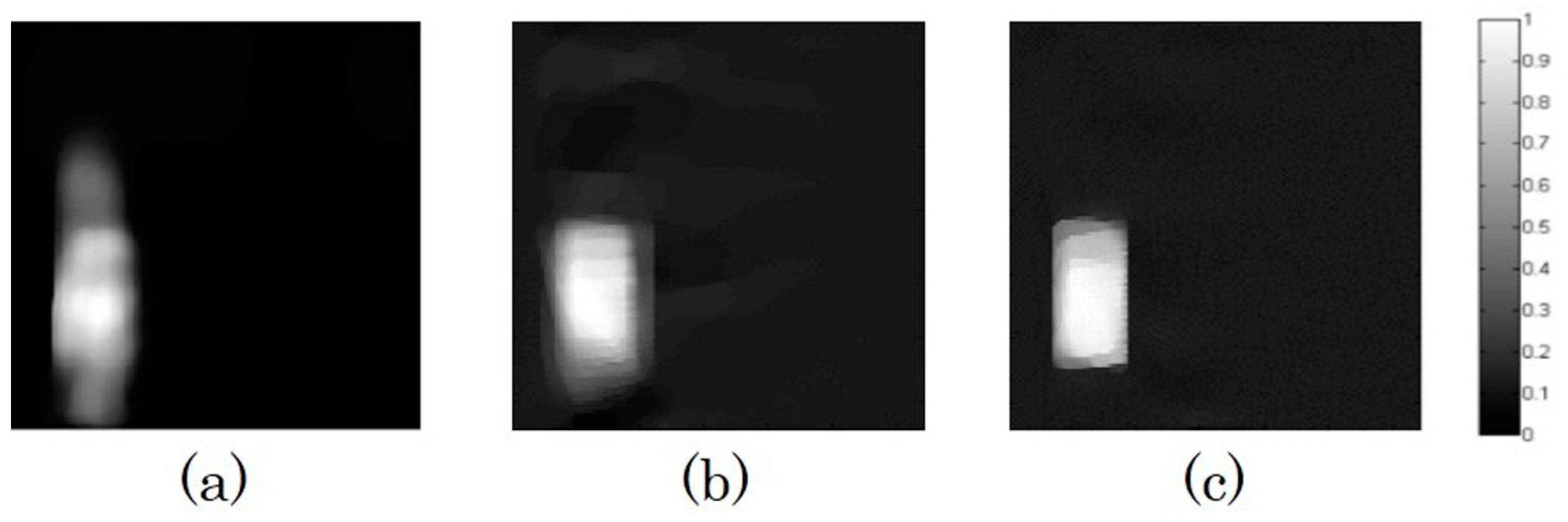

4. In Vitro Experiment

5. Discussion and Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Li, C.; Wang, L.V. Photoacoustic tomography and sensing in biomedicine. Phys. Med. Biol. 2009, 54, R59–R97.

- Wang, L.V. Prospects of photoacoustic tomography. Med. Phys. 2008, 35, 5758–5767.

- Xu, M.; Wang, L.V. Photoacoustic imaging in biomedicine. Rev. Sci. Instrum. 2006, 77, 041101.

- Kim, C.; Favazza, C.; Wang, L.V. In vivo photoacoustic tomography of chemicals: High-resolution functional and molecular optical imaging at new depths. Chem. Rev. 2010, 110, 2756–2782.

- Li, J.; Xiao, H.; Yoon, S.J.; Liu, C.; Matsuura, D.; Tai, W.; Song, L.; O’Donnell, M.; Cheng, D.; Gao, X. Functional photoacoustic imaging of gastric acid secretion using pH-responsive polyaniline nanoprobes. Small 2016, 12, 4690–4696.

- Stein, E.W.; Maslov, K.; Wang, L.V. Noninvasive, in vivo imaging of blood-oxygenation dynamics within the mouse brain using photoacoustic microscopy. J. Biomed. Opt. 2009, 14, 020502.

- Wang, D.; Wu, Y.; Xia, J. Review on photoacoustic imaging of the brain using nanoprobes. Neurophotonics 2016, 3, 010901.

- Yang, X.; Wang, L.V. Monkey brain cortex imaging by photoacoustic tomography. J. Biomed. Opt. 2008, 13, 044009.

- Lu, J.; Gao, Y.; Ma, Z.; Zhou, H.; Wang, R.K.; Wang, Y. In vivo photoacoustic imaging of blood vessels using a homodyne interferometer with zero-crossing triggering. J. Biomed. Opt. 2017, 22, 036002.

- Zhong, J.; Wen, L.; Yang, S.; Xiang, L.; Chen, Q.; Xing, D. Imaging-guided high-efficient photoacoustic tumor therapy with targeting gold nanorods. Nanomed. Nanotechnol. Biol. Med. 2015, 11, 1499–1509.

- Pu, K.; Shuhendler, A.J.; Jokerst, J.V.; Mei, J.; Gambhir, S.S.; Bao, Z.; Rao, J. Semiconducting polymer nanoparticles as photoacoustic molecular imaging probes in living mice. Nat. Nanotechnol. 2014, 9, 233–239.

- Jose, J.; Willemink, R.G.; Resink, S.; Piras, D.; van Hespen, J.G.; Slump, C.H.; Steenbergen, W.; van Leeuwen, T.G.; Manohar, S. Passive element enriched photoacoustic computed tomography (PER PACT) for simultaneous imaging of acoustic propagation properties and light absorption. Opt. Express 2011, 19, 2093–2104.

- Yao, J.; Xia, J.; Maslov, K.I.; Nasiriavanaki, M.; Tsytsarev, V.; Demchenko, A.V.; Wang, L.V. Noninvasive photoacoustic computed tomography of mouse brain metabolism in vivo. Neuroimage 2013, 64, 257–266.

- Strohm, E.M.; Moore, M.J.; Kolios, M.C. Single cell photoacoustic microscopy: A review. IEEE J. Sel. Top. Quantum Electron. 2016, 22, 137–151.

- Zhang, C.; Maslov, K.; Wang, L.V. Subwavelength-resolution label-free photoacoustic microscopy of optical absorption in vivo. Opt. Lett. 2010, 35, 3195–3197.

- Kruger, R.A.; Liu, P.; Fang, Y.; Appledorn, C.R. Photoacoustic ultrasound (PAUS)—Reconstruction tomography. Med. Phys. 1995, 22, 1605–1609.

- Mohajerani, P.; Kellnberger, S.; Ntziachristos, V. Fast Fourier backprojection for frequency-domain optoacoustic tomography. Opt. Lett. 2014, 39, 5455–5458.

- Xu, M.; Xu, Y.; Wang, L.V. Time-domain reconstruction algorithms and numerical simulations for thermoacoustic tomography in various geometries. IEEE Trans. Biomed. Eng. 2003, 50, 1086–1099.

- Xu, Y.; Feng, D.; Wang, L.V. Exact frequency-domain reconstruction for thermoacoustic tomography. I. Planar geometry. IEEE Trans. Med. Imaging 2002, 21, 823–828.

- Zhang, C.; Wang, Y. Deconvolution reconstruction of full-view and limited-view photoacoustic tomography: A simulation study. J. Opt. Soc. Am. A 2008, 25, 2436–2443.

- Finch, D.; Patch, S.K. Determining a function from its mean values over a family of spheres. SIAM J. Math. Anal. 2004, 35, 1213–1240.

- Finch, D.; Haltmeier, M.; Rakesh. Inversion of spherical means and the wave equation in even dimensions. SIAM J. Appl. Math. 2007, 68, 392–412.

- Kunyansky, L.A. Explicit inversion formulae for the spherical mean Radon transform. Inverse Probl. 2007, 23, 373–383.

- Haltmeier, M. Universal inversion formulas for recovering a function from spherical means. SIAM J. Math. Anal. 2014, 46, 214–232.

- Huang, C.; Wang, K.; Nie, L.; Wang, L.V.; Anastasio, M.A. Full-wave iterative image reconstruction in photoacoustic tomography with acoustically inhomogeneous media. IEEE Trans. Med. Imaging 2013, 32, 1097–1110.

- Paltauf, G.; Viator, J.; Prahl, S.; Jacques, S. Iterative reconstruction algorithm for optoacoustic imaging. J. Acoust. Soc. Am. 2002, 112, 1536–1544.

- Rosenthal, A.; Jetzfellner, T.; Razansky, D.; Ntziachristos, V. Efficient framework for model-based tomographic image reconstruction using wavelet packets. IEEE Trans. Med. Imaging 2012, 31, 1346–1357.

- Ding, L.; Deán-Ben, X.L.; Lutzweiler, C.; Razansky, D.; Ntziachristos, V. Efficient non-negative constrained model-based inversion in optoacoustic tomography. Phys. Med. Biol. 2015, 60, 6733–6750.

- Meng, J.; Wang, L.V.; Ying, L.; Liang, D.; Song, L. Compressed-sensing photoacoustic computed tomography in vivo with partially known support. Opt. Express 2012, 20, 16510–16523.

- Haltmeier, M.; Berer, T.; Moon, S.; Burgholzer, P. Compressed sensing and sparsity in photoacoustic tomography. J. Opt. 2016, 18, 114004.

- Betcke, M.M.; Cox, B.T.; Huynh, N.; Zhang, E.Z.; Beard, P.C.; Arridge, S.R. Acoustic wave field reconstruction from compressed measurements with application in photoacoustic tomography. arXiv, 2016; arXiv:1609.02763.

- Arridge, S.; Beard, P.; Betcke, M.; Cox, B.; Huynh, N.; Lucka, F.; Ogunlade, O.; Zhang, E. Accelerated high-resolution photoacoustic tomography via compressed sensing. Phys. Med. Biol. 2016, 61, 8908–8940.

- Wang, K.; Su, R.; Oraevsky, A.A.; Anastasio, M.A. Investigation of iterative image reconstruction in three-dimensional optoacoustic tomography. Phys. Med. Biol. 2012, 57, 5399–5423.

- Zhang, Y.; Wang, Y.; Zhang, C. Total variation based gradient descent algorithm for sparse-view photoacoustic image reconstruction. Ultrasonics 2012, 52, 1046–1055.

- Gamelin, J.K.; Aguirre, A.; Zhu, Q. Fast, limited-data photoacoustic imaging for multiplexed systems using a frequency-domain estimation technique. Med. Phys. 2011, 38, 1503–1518.

- Wu, D.; Tao, C.; Liu, X. Photoacoustic tomography in scattering biological tissue by using virtual time reversal mirror. J. Appl. Phys. 2011, 109, 084702.

- Yao, L.; Jiang, H. Photoacoustic image reconstruction from few-detector and limited-angle data. Biomed. Opt. Express 2011, 2, 2649–2654.

- Xu, Y.; Wang, L.V.; Ambartsoumian, G.; Kuchment, P. Reconstructions in limited-view thermoacoustic tomography. Med. Phys. 2004, 31, 724–733.

- Xiang, L.Z.; Xing, D.; Gu, H.M.; Yang, S.H.; Zeng, L.M. Photoacoustic imaging of blood vessels based on modified simultaneous iterative reconstruction technique. Acta Phys. Sin. 2007, 56, 3911–3916.

- Tao, C.; Liu, X. Reconstruction of high quality photoacoustic tomography with a limited-view scanning. Opt. Express 2010, 18, 2760–2766.

- Zhang, C.; Zhang, Y.; Wang, Y. A photoacoustic image reconstruction method using total variation and nonconvex optimization. Biomed. Eng. Online 2014, 13, 117.

- Liu, X.; Peng, D.; Ma, X.; Guo, W.; Liu, Z.; Han, D.; Yang, X.; Tian, J. Limited-view photoacoustic imaging based on an iterative adaptive weighted filtered backprojection approach. Appl. Opt. 2013, 52, 3477–3483.

- Modgil, D.; La Rivière, P.J. Implementation and comparison of reconstruction algorithms for 2D optoacoustic tomography using a linear array. Proc. SPIE 2008, 6856, 68561D-1–68561D-12.

- Gao, H.; Feng, J.; Song, L. Limited-view multi-source quantitative photoacoustic tomography. Inverse Probl. 2015, 31, 065004.

- Huang, B.; Xia, J.; Maslov, K.; Wang, L.V. Improving limited-view photoacoustic tomography with an acoustic reflector. J. Biomed. Opt. 2013, 18, 110505.

- Feng, J.; Zhou, W.; Gao, H. Multi-source Quantitative Photoacoustic Tomography with Detector Response Function and Limited-view Scanning. J. Comput. Math. 2016, 34, 588–607.

- Gerchberg, R. Super-resolution through error energy reduction. J. Mod. Opt. 1974, 21, 709–720.

- Papoulis, A. A new algorithm in spectral analysis and band-limited extrapolation. IEEE Trans. Circuits Syst. 1975, 22, 735–742.

- Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Physica D 1992, 60, 259–268.

- Gao, H.; Zhang, L.; Chen, Z.; Xing, Y.; Xue, H.; Cheng, J. Straight-line-trajectory-based x-ray tomographic imaging for security inspections: system design, image reconstruction and preliminary results. IEEE Trans. Nucl. Sci. 2013, 60, 3955–3968.

- Köstli, K.P.; Beard, P.C. Two-dimensional photoacoustic imaging by use of Fourier-transform image reconstruction and a detector with an anisotropic response. Appl. Opt. 2003, 42, 1899–1908.

- Ye, X.; Chen, Y.; Huang, F. Computational acceleration for MR image reconstruction in partially parallel imaging. IEEE Trans. Med. Imaging 2011, 30, 1055–1063.

- Afonso, M.V.; Bioucas-Dias, J.M.; Figueiredo, M.A. An augmented Lagrangian approach to the constrained optimization formulation of imaging inverse problems. IEEE Trans. Image Process. 2011, 20, 681–695.

| PSNR (dB) | 50 points | 20 points | 10 points |
|---|---|---|---|
| TV-GD | 17.58 | 16.46 | 14.35 |
| TV-VB | 26.58 | 19.34 | 15.26 |
| TV-GPEF | 32.56 | 28.67 | 22.23 |

| PSNR (dB) | 60 views | 90 views | 120 views |
|---|---|---|---|
| TV-GD | 12.23 | 14.49 | 18.78 |
| TV-VB | 14.41 | 18.03 | 22.47 |
| TV-GPEF | 21.89 | 26.71 | 33.74 |

| PSNR (dB) | SNR = 0 dB | SNR = 5 dB | SNR = 10 dB |
|---|---|---|---|
| TV-GD | 12.59 | 13.47 | 14.02 |
| TV-VB | 14.97 | 16.49 | 18.55 |
| TV-GPEF | 21.38 | 25.32 | 27.39 |
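The PSNR tables report values in dB, but this copy does not define the metric. Under the common definition PSNR = 10 log10(peak²/MSE), which is assumed here with the peak taken as the maximum absolute value of the reference image (the paper may normalize differently), a PSNR gap of Δ dB between two reconstructions of the same phantom corresponds to an MSE ratio of 10^(Δ/10). A minimal Python sketch; `psnr` and `mse_ratio` are hypothetical helper names:

```python
import numpy as np


def psnr(reference, estimate, peak=None):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak**2 / MSE).

    `peak` defaults to max(|reference|); this normalization is an assumption,
    since the paper's exact definition is not reproduced in this copy.
    """
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    if peak is None:
        peak = np.max(np.abs(reference))
    return float(10.0 * np.log10(peak**2 / mse))


def mse_ratio(psnr_a_db, psnr_b_db):
    """MSE(b) / MSE(a) implied by two PSNRs computed with the same peak value."""
    return 10.0 ** ((psnr_a_db - psnr_b_db) / 10.0)


if __name__ == "__main__":
    ref = np.ones((4, 4))
    print(psnr(ref, 0.9 * ref))     # about 20 dB (MSE = 0.01, peak = 1)
    print(mse_ratio(32.56, 26.58))  # TV-GPEF vs TV-VB at 50 points: about 3.96
```

For example, the 5.98 dB gap between TV-GPEF (32.56 dB) and TV-VB (26.58 dB) at 50 points corresponds to an MSE roughly four times lower, provided both values were computed with the same peak.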

| Time (s) | 180 views | 90 views | 60 views |
|---|---|---|---|
| TV-GD | 15.68 | 13.73 | 10.32 |
| TV-VB | 9.87 | 8.37 | 6.14 |
| TV-GPEF | 16.43 | 14.46 | 11.28 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, J.; Wang, Y. An Efficient Compensation Method for Limited-View Photoacoustic Imaging Reconstruction Based on Gerchberg–Papoulis Extrapolation. Appl. Sci. 2017, 7, 505. https://doi.org/10.3390/app7050505


Chicago/Turabian style: Wang, Jin, and Yuanyuan Wang. 2017. "An Efficient Compensation Method for Limited-View Photoacoustic Imaging Reconstruction Based on Gerchberg–Papoulis Extrapolation" Applied Sciences 7, no. 5: 505. https://doi.org/10.3390/app7050505