In our research design, we explored the possibilities of using a robot as a teaching assistant by using the Engagement Profile to vary six distinct capabilities, which were evaluated against user feedback on satisfaction and several engagement factors. The Engagement Profile and this methodology have previously been applied to evaluating exhibits in science centres and museums. Using this design methodology, one performs several iterations in which the design of the robot teaching assistant is altered by changing its capabilities, each followed by an evaluation step that provides evidence on how to make further changes.

In our research, we used the Engagement Profile as an evaluation platform. For this purpose, we adapted the Engagement Profile to the use case of robots in a teaching context. The transition from installations in science centres and museums, for which the Engagement Profile was originally designed, to robots in a teaching context seemed straightforward. Further, the categories in the Engagement Profile, i.e., competition, narrative, interactivity, physical user control, social, achievements, and exploration, appeared to be suitable for the analysis. The use of questionnaires that combine the Engagement Profile, the user satisfaction questions, and the capability questions also appeared to be a suitable procedure for analysis.

5.1. Limitations

The current work has various limitations regarding the study design, our approach to designing for engagement, and the robot’s capabilities. We discuss these limitations and their potential impact below.

Our study was conducted with young adults of various nationalities in an engineering master’s course, but many variables, including age, culture, gender, and expertise, can affect the perception of robots. For example, age in adult classrooms can differ substantially. Young adults can be uncertain about their status as adults, with low rates of marriage, parenthood, and occupational experience [61], and can also have different media preferences than older adults [62]. In addition, university classes in Sweden exhibit high cultural diversity, especially at the Master’s level [63], and cultural differences in attitudes toward robots have been noted [64]. Moreover, men have expressed more positive attitudes toward robots than women [65].

In regard to the Engagement Profile, the online questionnaires were convenient for collecting statistics but alone did not provide a way to find out why the students thought the way they did (i.e., the kind of probing which interviews allow for), which complicated efforts to improve the system.

Also, the students had a week to answer the questionnaires, which was not optimal. It would have been better if the responses had been given immediately after the learning experience, as there is evidence in the literature that results can be biased when the memory is not fresh [66]. However, there were practical reasons for performing the surveys as described previously.

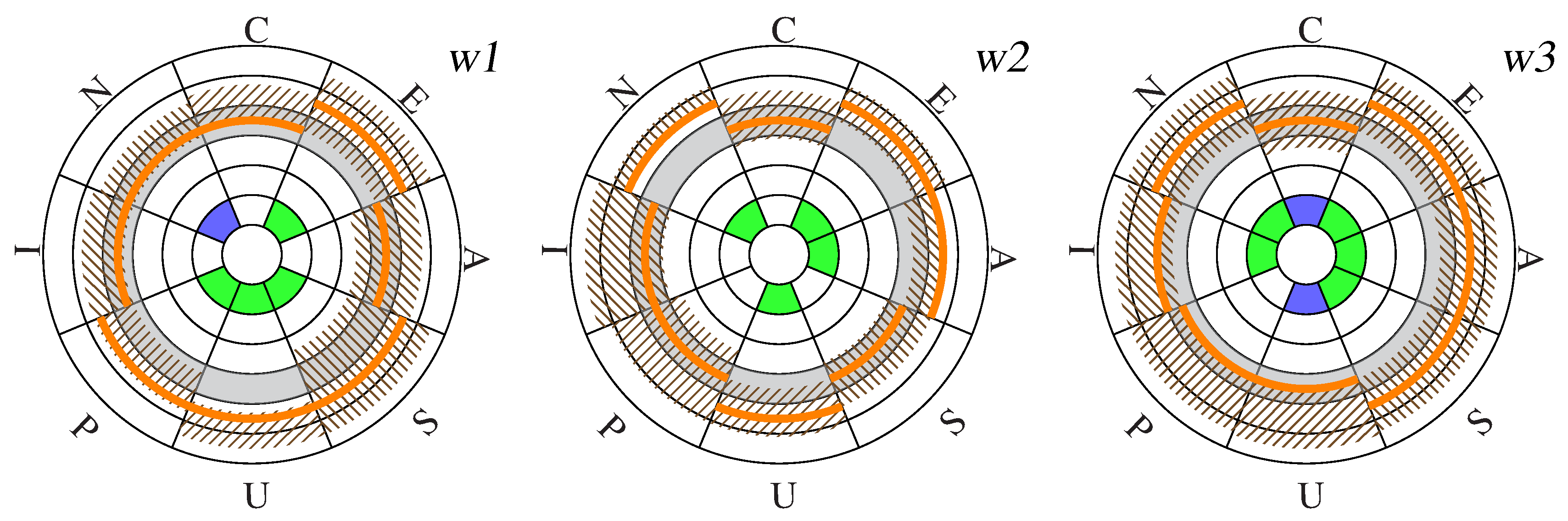

Additionally, participation in the questionnaires was low, possibly because the questions were the same each week, we did not give incentives to the students, and the questionnaires were to be completed outside of class time. For this reason, the responses might have been biased and not representative of the class as a whole. Thus, the low number of responses limited our analysis and led to some outcomes that we could only use as indications. The number of responses was particularly low in week 2, when only five students responded. Therefore, the statistical analyses might not be conclusive for such low numbers of responses. However, the results still give some indication that the participants reacted positively to the experiments, as shown in Table 9.

Also, the impact of changes made in the design was not as large as expected. Possibly, the introduction of a new artifact such as a robot teaching assistant had a greater influence on satisfaction and engagement than adjusting the robot’s capabilities. In hindsight, we recognize that the changes to the capabilities might not have been large enough between the iterations to show an impact in the Engagement Profile and satisfaction ratings. Probably, more iterations would have been useful, so that the novelty factor of using a robotic teaching assistant would be reduced, giving the changes between the iterations more room for comparison by the users.

Some limitations also apply to the mid-fidelity prototyping approach we employed, where capabilities were implemented only as prototypes or mock-ups. Because the capabilities were not fully autonomous, the teacher needed to construct situations and trigger some of the robot’s actions manually. Having to press buttons and cause certain trigger events required the teacher to concentrate on the robot instead of on teaching. We assume that this had an impact on the students’ experience and satisfaction. Further, content for the robot such as quizzes and gestures needed to be prepared in advance. As there is no authoring system available, this preparation can require time and resources, and the resulting content is inflexible.

Moreover, the six capabilities we investigated were derived from functionality requirements, and their usefulness was considered from a theoretical perspective. We did not explicitly consider the abstract property of a robotic teaching assistant being recognized as a tangible entity that one can communicate with, or the degree to which the robot being evaluated is actually perceived as possessing the different capabilities. For future studies, we suggest that the impact of such properties be included in the research design.

In conducting our study, some unexpected behaviors were also observed, as described earlier, such as a mistake in the timing for the robot to hand out an attendance sheet. Such events can influence how the students thought about the robot, but we think this was not a critical problem for our work, for the following reasons. First, the contribution of the work lies elsewhere, in reporting how an iterative design tool (the Engagement Profile) from a different domain can be applied to designing a robot teaching assistant, based on requirements we identified from teachers. Second, the students reported a positive general impression of the robot despite the few failures which occurred, and the literature reports that mistakes from a robot can actually have some positive effects in helping students to feel less self-conscious about performing perfectly [35]. Third, it was known from the start that some failures could be expected due to the challenging setting: the paper explores testing “in the wild” in a real-world setting where there is no way to prevent all problems from occurring [67,68]. There is no robot we know of which already has the capabilities we described, and even human teachers can make mistakes in real class settings, as our teachers described. Finally, the students were aware from the start, from the robot’s self-introduction, that the robot was a work in progress.

Conversely, we think it is a strength of the adopted approach that we report on such observations, because our exploratory experiment (based on accepted methodology such as grounded theory and prototyping approaches [69]) is also designed to expose such failures early on. It is known that publication bias and the file-drawer effect are serious problems which severely impede the scientific community’s understanding [70], and hiding or designing around failures runs the risk that others will make similar mistakes, which would be desirable to avoid. Since there is increasing interest in this area, with many courses around the world starting to use robots, we think it is useful to identify such potential failure points early on by adopting such a research design.

5.2. Future Work

As described previously, various past studies have already reported good results using robots with people of different ages and cultures, and with non-engineering students (e.g., in language classes) as well as engineering students, but more work is needed. Future work will involve comparing different demographics (e.g., young adults versus the elderly, different cultures, and different fields of study).

For the Engagement Profile, we shall use the questions we conceived to further develop this analysis and design methodology for artifacts in a teaching context. We will also explore the possibility of dedicating time at the end of classes to answering questionnaires in order to gain more responses, as well as how to conduct interviews to gain insight into why the students thought the way they did.

Regarding capabilities for a robot teaching assistant, we focused on six capabilities which our teachers indicated as desirable, but there could be various other qualities which could be useful.

Also, in general, we used buttons and timers to trigger robot behaviors such as quizzes and reminders, but the robot itself should be able to flexibly determine the right time to act; this includes recognizing when students are listening.

As well, content such as quizzes or suggestions for extra reading had to be prepared ahead of time manually by the teacher, and it was not possible to take into account what each student knew; future work will explore how the robot itself can construct or select content, which will have benefits such as allowing for continuous evaluation. A fundamental related problem is the analysis of student behaviors and responses, which can be addressed by approaches such as educational data mining (EDM) and learning analytics (LA) [71]. For example, text mining can be conducted on a student’s verbal or written responses; the abstract structure underlying an answer can be represented using a tree or, in the case of programming, in terms of how input data are transformed [72]. Some other typical applications of such approaches include characterizing students by learning behaviors, knowledge levels, or personalities [73].

Such analysis should be utilized to plan a robot’s behaviors. For example, some work has already explored how deep learning can be used for an intelligent tutoring system (ITS) to select quiz questions in an optimal way for a single student based on knowledge tracing [74]. Further work is required to explore how to do so for groups of students in a class. This is not a simple question, as decisions must be made about which students, if any, to prioritize: weaker students who require help to meet minimum learning requirements, or stronger students who are trying hard to learn. Another attractive prospect would be for a robot to learn to improve its own knowledge base, e.g., from YouTube videos [75]; domain-specific learning has been demonstrated in various studies, but general learning across various topics and machine creativity for generating new content are still desirable goals for future work.

As well, insight from various previous studies could be used to take these capabilities to a higher level of technological readiness. Greetings, a useful way to enhance engagement [76], could be more effective if personalized. For example, a system was developed which could greet people personally, and its authors proposed that robots can remember thousands of faces and theoretically surpass humans in the ability to tailor interactions toward specific individuals [77]. However, much future work remains to be done in this area in regard to how content can be personalized, e.g., by recognizing features such as clothing or hairstyle, or leveraging prior knowledge of human social conventions.

As well, for the alerting capability, adaptive reminding systems have been developed which seek to avoid annoying the person being reminded or making them overly reliant on the system, such as for the nurse robot PEARL [78]. We expect that this challenge will become more difficult when dealing with a group of people, where some times and actions might be good for some students but not for others. For example, some students might finish an exercise faster than others; should a robot wait to initiate discussion until the majority of students have stopped making progress on the exercise, and if so, how can this be recognized? Possibly, a robot could detect, for each student, when the student’s attention is no longer directed toward a problem (e.g., by tracking eye gaze [79]), when nothing has been written for a while, when verbal behavior focuses on an unrelated topic, or when potentially informative emotions such as satisfaction, frustration, or defeat are communicated, although we expect accurate inference to be quite challenging given the complexity of human behavior.
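As a purely illustrative sketch of the majority rule raised above, the following Python fragment decides when to initiate discussion from per-student cues. It is not part of the system we evaluated: the `StudentState` fields, the 60-second idle threshold, and the 50% majority fraction are all hypothetical assumptions, and the sketch presumes that gaze and writing activity have already been estimated by some upstream perception component, which is the difficult part in practice.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class StudentState:
    """Hypothetical per-student cues, assumed to come from upstream sensing."""
    seconds_since_last_writing: float
    gaze_on_task: bool


def is_idle(state: StudentState, idle_after_s: float = 60.0) -> bool:
    """Treat a student as idle if they look away AND have not written recently."""
    return (not state.gaze_on_task
            and state.seconds_since_last_writing >= idle_after_s)


def should_start_discussion(states: Sequence[StudentState],
                            idle_after_s: float = 60.0,
                            majority: float = 0.5) -> bool:
    """Return True when strictly more than `majority` of students appear idle."""
    if not states:
        return False  # no observations, so do not interrupt
    idle_count = sum(is_idle(s, idle_after_s) for s in states)
    return idle_count / len(states) > majority


# Example: three of five students appear to have stopped working.
classroom = [
    StudentState(120.0, False),
    StudentState(90.0, False),
    StudentState(75.0, False),
    StudentState(5.0, True),
    StudentState(200.0, True),  # not writing, but still looking at the task
]
print(should_start_discussion(classroom))
```

Requiring both cues before counting a student as idle is a conservative choice that reduces the risk of interrupting students who are thinking without writing; other combinations of cues, or weighting by student need, would be equally plausible.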

Various interesting possibilities for improved remote operation are suggested in the literature. For example, a robot could be used by students with a physical disability to carry out physical tasks [36], or by students with cancer to remotely attend classes [19]. Moreover, it was suggested that robots’ limited capabilities actually facilitate collaboration, e.g., by increasing participation, proactiveness, and stimulation for operators [18,21]. Future work could consider how to share time on robots between multiple students, and how to provide enhanced sensing capabilities which human students might not have, like the ability to zoom in on slides. Moreover, ethical concerns should be investigated, such as whether students using robots to attend classes could be vulnerable to being hassled or feeling stigmatized, or conversely, who is accountable if a student uses a robot to cause harm.

Clarification and automatic support for the teacher (e.g., by looking up and showing topics on a display while the teacher talks) could be improved by incorporating state-of-the-art speech recognition and the ability to conduct information retrieval with verbose natural language queries, such as is incorporated into IBM’s Watson, Apple’s Siri, Google Assistant, and Facebook Graph Search [80]. How to display such information in an optimally informative way is an interesting question for future work, both for individual students and groups of students.

Physical tasks such as handing out materials could also be improved by considering previous work: for example, approaches have been described for making hand-overs natural and effective [81], for handing materials to seated people [82], and for how to approach people to deliver handouts [83]. In addition, a robot’s velocity profile can be controlled to be distinctive and evoke trust [84] in students who might not be accustomed to interacting with robots. Mechanisms for dealing with complex and narrow human environments with obstacles can also be considered; for example, a drone could be used by a large robot to deliver handouts to students in locations which are difficult to directly access.

As well, the question of whether an embodiment is truly needed arises again. As noted, various studies have suggested the usefulness of robots as an engaging medium within certain contexts. For example, a human-like robot was considered more enjoyable than a human or a box with a speaker for reading poetry [85]; the authors speculated that the robot was easier to focus attention on than the box, and that the robot’s neutral delivery allowed more room for interpretation and for people to concentrate and immerse themselves more. However, five of the six capabilities we explored (all except physical tasks) do not, strictly speaking, require an embodiment. Not using an embodiment could be cheaper; also, using speakers and screens distributed through a classroom (e.g., as desks) could make it easier for all students to see and hear to the same degree. This suggests the usefulness of future work to examine cost/value trade-offs for having an embodiment within various contexts. Furthermore, additional mechanisms such as emotional speech synthesis could be used to give capabilities such as reading, greeting, and alerting a more engaging delivery.