1. Introduction

Finding effective ways to measure student learning has been an enduring issue across the higher education sector. The benefits to students of a learn-by-design approach have been well researched across a broad range of research streams. For example, one stream of studies has focused on the benefits of student learning through design across the education sector; as Kafai states, “The greatest learning benefit remains reserved for those engaged in the design process…and not those at the receiving end” [1] (p. 39). Another stream of research has investigated how to structure the design experience to support learning, focusing more on the design of learning tasks and activities [2]. A third stream has focused on teachers as designers [3]. Numerous studies on cooperative and collaborative learning have focused on benefits such as motivation, social cohesion and higher levels of learning [4,5]. Other studies have examined the role of formative assessment in supporting creative design [6]. One issue raised by Goodyear et al. in relation to research on teachers as designers is that it may be “all too rare for university teachers to have timely, valid and reliable data on student achievement. This is a major problem in the assessment process itself, but also handicaps any attempts at evidence-driven iterative design” [3] (p. 15). In this paper, we discuss how students interacted during a subject whose assessment design centred on two collaborative learn-technology-by-design tasks. The assessments were conducted in an Information and Communication Technologies (ICT) unit of study (subject) as part of a bachelor of education degree program. The rationale for the collaborative assessment approach was that, by understanding how students collaborate and engage in design processes, we as educators could provide authentic learning experiences and formative feedback to support the acquisition of pedagogical and technological knowledge.

The focus of the study was to examine small-group design processes in order to better understand how to design long-term assessments that support the development of pre-service teachers’ technological, pedagogical and content knowledge. This study forms part of the growing body of research on teachers as design researchers [7].

3. Methodology and Process

This emphasis on design to solve problems is not a new notion in educational research. As Schön [25] noted, educators are designers who design artefacts to solve problems, which implies that design is at the core of education. A design-based research approach underpins the study: each semester, the unit is modified on the basis of feedback and analysis from previous studies, in a system of continual renewal and improvement that considers not only changing student needs but also the evolution of technologies. Design-based research supports the exploration of educational problems and the refinement of theory and practice by defining a pedagogical outcome and then focusing on how to create a learning environment that supports that outcome [26,27]. Micro (or prototyping) phases are used in design-based research as a strategy to ensure the reliability of the design before the final fieldwork study. As design-based research aims to ascertain whether and why a particular intervention works in a certain context, micro research phases give researchers an opportunity to refine the design and to gain a more informed understanding of why an intervention may (or may not) work in that context [28]. Micro phases involve a series of small-scale design studies, each followed by re-evaluation of the materials, before the final product is used in a school-based study. The use of micro phases is part of what Plomp refers to as the prototyping stage: “each cycle in the study is a piece of research in itself (i.e., having its research or evaluation question to be addressed with a proper research design)” [28] (p. 25). Each phase should be presented as a separate study, as phases may differ in research questions, population groups, data samples and methods of data analysis. Notably, over the past few years there has been a growing awareness of the need to investigate design-based research itself. This growth in the literature has also produced commentary on the benefits and challenges of the approach, as seen in the papers by Anderson and Shattuck [29] and McKenney and Reeves [30]. McKenney and Reeves [30] pointed out that one of the main aims of a design research approach is to generate theoretical understanding that can be of value to others; this premise can also be extended to how the methodology is applied. The authors acknowledge that this paper does not focus on the theoretical aspects of the study, as it centres on understanding how pre-service teachers collaborate to plan and develop ICT resources.

3.1. Overview of Research Design

We used a collaborative approach both in the design of the tasks and in the critical feedback, through a peer review process. Design-based learning activities have been shown to benefit students learning how to use technology effectively in a range of educational contexts [13,16,31]. Research findings have indicated that pre-service teachers develop deeper understanding through both dialogue and reflection-in-action [13]. Learn-technology-by-design tasks are carried out in environments where students (in this instance, pre-service teachers) are encouraged to use ICT tools to build a learning environment. In earlier iterations of the unit of study discussed in this paper, a range of technologies was used. We found that the more complicated the technology, the more resistant the students were to using these new technologies and skills in an educational setting. For example, in a study on the use of virtual worlds in science education, we found that, given the amount of time it would take to master the virtual world, pre-service teachers did not want to risk using the technology in their own teaching [32]. However, they would feel comfortable using an online point-and-click game that required no skills training [33]. On the basis of these findings and studies such as that by Choy et al. [34], we adopted web-supported tools so that the pre-service teachers would have sufficient exposure to the website platform to feel comfortable using this technology in the classroom. Over the past three years, significant changes have been made to the 10-week (20 h) unit. The revised curriculum attempts to adhere to four principles: (1) the learning tasks are problem-centred [35]; (2) skills are developed via a learn-technology-by-design approach [13]; (3) design tasks are accomplished collaboratively (socio-cultural theory); and (4) learners are encouraged to engage in reflective practice [25]. Attempts were made to ensure that each component of technological pedagogical content knowledge (TPACK) was adequately addressed as a separate facet (Table 1). Providing opportunities for the students to engage with each component of the TPACK model informed the design of the two assessments.

3.2. Assessment Design

The assessments comprised two collaborative design tasks: (1) to design and create an Interactive Whiteboard (IWB) teaching/learning resource; and (2) to design a web-based multimedia teaching/learning resource. Both design tasks required pre-service teachers to address a particular teaching and/or learning need, that is, a topic that is difficult for teachers to teach and for students to learn in traditional classrooms. To accomplish the design tasks, pre-service teachers first needed to become familiar with the school curriculum and their subject content areas. They then identified a specific topic or concept that they believed to be difficult for teachers to teach and/or for students to learn. Once a topic was chosen, the pre-service teachers explored which ICT tool(s) could address this need. Rather than providing a generic skills course, tutorial sessions were designed to adapt to the learning needs of the pre-service teachers. Here, we drew on the work of Rossett [36], who used the ADDIE process for training, and of Mayer and Moreno [37], who offered a series of principles on how people process multimedia and made nine recommendations for multimedia instruction based on those principles. This allowed the focus of each lesson to be on using innovative tools to model effective ICT integration in teaching. Students also submitted an individual critical reflection on the process of designing and building the website.

Before pre-service teachers started each design task, tutorial sessions were used to demonstrate the features of the software applications in use and to explain the steps involved in designing and creating a learning resource. During the design process, the second author discussed design ideas with the groups and provided comments and suggestions. In Week 8, collaborative student evaluations were conducted. In an earlier offering of the course, we used Camtasia screen capture software, which recorded the audio, webcam and onscreen movements of each peer’s review. The recordings were uploaded to EVA (a video annotation platform) for peer review, and a peer review list was provided to ensure that each resource was reviewed by at least three peers. We modified this process because converting the files for use in EVA took too much time and made accessibility an issue. Instead, we used a paper-based scaffold for the feedback, followed by a face-to-face debrief session in which groups provided feedback to their peers. The scaffold guided the pre-service teachers in providing meaningful and effective feedback to their peers on their ICT resources. Peer review has been shown to allow students to integrate vertically with more advanced students and to learn from exposure to other students’ designs and ideas [11]. Following the peer review, students critically reflected on their peers’ comments and then finalised their resources in Week 10. For each design task, pre-service teachers were required to write a reflection documenting their learning experiences and discussing the possibility of transferring such an experience to classroom contexts.

3.3. Data Collection and Analysis

The study was conducted over one semester. There were 15 participants in the study, five of whom were male. All of the students were completing an undergraduate degree in secondary education, and this was a compulsory, fourth-year course. The students worked in seven self-selected groups of two to three students, formed on the basis of their teaching areas. The two collaborative assessment tasks were observed over a period of five weeks, from Week 2 through to Week 6. Week 1 was not observed, as it was an introductory session that gave students an overview of the underpinning theories of design and collaboration, as well as information about the assessment tasks and the timetable for each component of the design and development process. The observations were conducted by the first author, who was not a member of the university and was not known to the students. The students were advised that the first author would be making observations of the collaborative process, taking notes, taking photos and making videos. They were advised that these data would be used to build reliability into the observations and would not be disseminated.

The tutorial sessions were two hours in length and occurred weekly; there was no lecture for this subject. The task in the first two sessions (Weeks 2 and 3) was to build an interactive whiteboard resource. Week 4 was an assessment, and in the remaining three sessions (Weeks 5, 6 and 7), the task was to design and build a website on a particular curriculum area. The low-weighted task in Week 4 gave each group the opportunity to develop collaborative work strategies, as well as the opportunity to work together on their curriculum areas. In these early weeks there was a degree of fluidity in the groups, with groups changing seats. After the first assessment, the groups had established permanent seating arrangements (i.e., each student sat in the same seat each tutorial). Note that Group 2 ceased to function after the first assessment in Week 4, and its members joined other groups. The group split so that its members could collaborate more effectively through their other curriculum subject majors. Although both were languages teachers (one Japanese and one Spanish), collaboration on the first task was difficult because they struggled to find common subject content: each language had unique features for learning, and building a generic IWB resource that dealt with both languages posed challenges for the group. Working in their other subject majors (English and History) allowed better negotiation of subject content within the newly formed groups, which in turn allowed the students to engage more fully with the task.

This article reports on the observational data. The observations were time-based and were conducted at 15-minute intervals throughout the class, with each group observed during these intervals. Time-based observations were deemed the most appropriate way to capture the groups’ processes. For each observation, an observation form was completed that recorded students’ seating arrangements, computer use and the activity of each group member. The observations focused specifically on the group processes, and each timed observation period lasted approximately five minutes. The first author took notes, made videos and took photos of each session in order to develop a detailed narrative of each group’s interactions during the tutorials. Persistent observation, the ongoing observation of participants in a study, allows the researcher to identify what is and is not relevant to the study [38]. Through persistent observation, a researcher can also see how students and teachers function, which groups are motivated, which groups struggle and how the teachers interact with the students. The observational data were collected over the course of the semester by the first author, who attended all of the classes and observed the students while they designed and developed the resources. The second author, who was the teacher, did not engage in data collection during class time in order to avoid perceived coercion to participate in class or the Hawthorne Effect. The Hawthorne Effect was described by Mayo [39] as a form of reactivity whereby participants modify their behaviour or outputs purely in response to knowing that they are being studied. The observations were conducted over the course of the semester to ensure that sufficient data were collected through prolonged engagement with the groups. According to Lincoln and Guba [38], prolonged engagement is crucial to supporting credibility in qualitative research because it assists the researcher in testing for misinformation and building trust with the participants.
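The time-based sampling described above can be pictured as a fixed schedule of short observation windows. The sketch below is illustrative only and assumes a 120-minute tutorial, a 15-minute interval and a five-minute window that opens at the start of each interval; the function name `observation_windows` and the choice to start at minute 0 are hypothetical, not details of the study's protocol.

```python
def observation_windows(session_minutes=120, interval=15, window=5):
    """Return (start, end) minute offsets for each timed observation in one tutorial.

    Hypothetical sketch: assumes each observation window opens at the start of an
    interval and lasts `window` minutes, clipped to the length of the session.
    """
    if interval <= 0 or window <= 0:
        raise ValueError("interval and window must be positive")
    return [
        (start, min(start + window, session_minutes))
        for start in range(0, session_minutes, interval)
    ]


# Print the assumed schedule for a two-hour tutorial.
for start, end in observation_windows():
    print(f"minute {start:3d} to {end:3d}")
```

Under these assumptions a two-hour tutorial yields eight observation windows. The sketch does not model how the observer's attention was divided among the groups within each window.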

To validate the observations, peer debriefing was used at the conclusion of each session and at the conclusion of the semester, with the first and second authors comparing their observations of the class. Peer debriefing is a process in which the investigator discusses the investigation with peers. Through peer debriefing, a research team can explore aspects of the research that might otherwise remain only implicit [38]. Peer debriefing can encourage researchers to search for biases, scrutinise their hypotheses and the justification for their research, discuss the direction of their research and methodological design, and explore their feelings and emotions towards their research so that they can assess how their experience might affect their interpretation of the data [38].

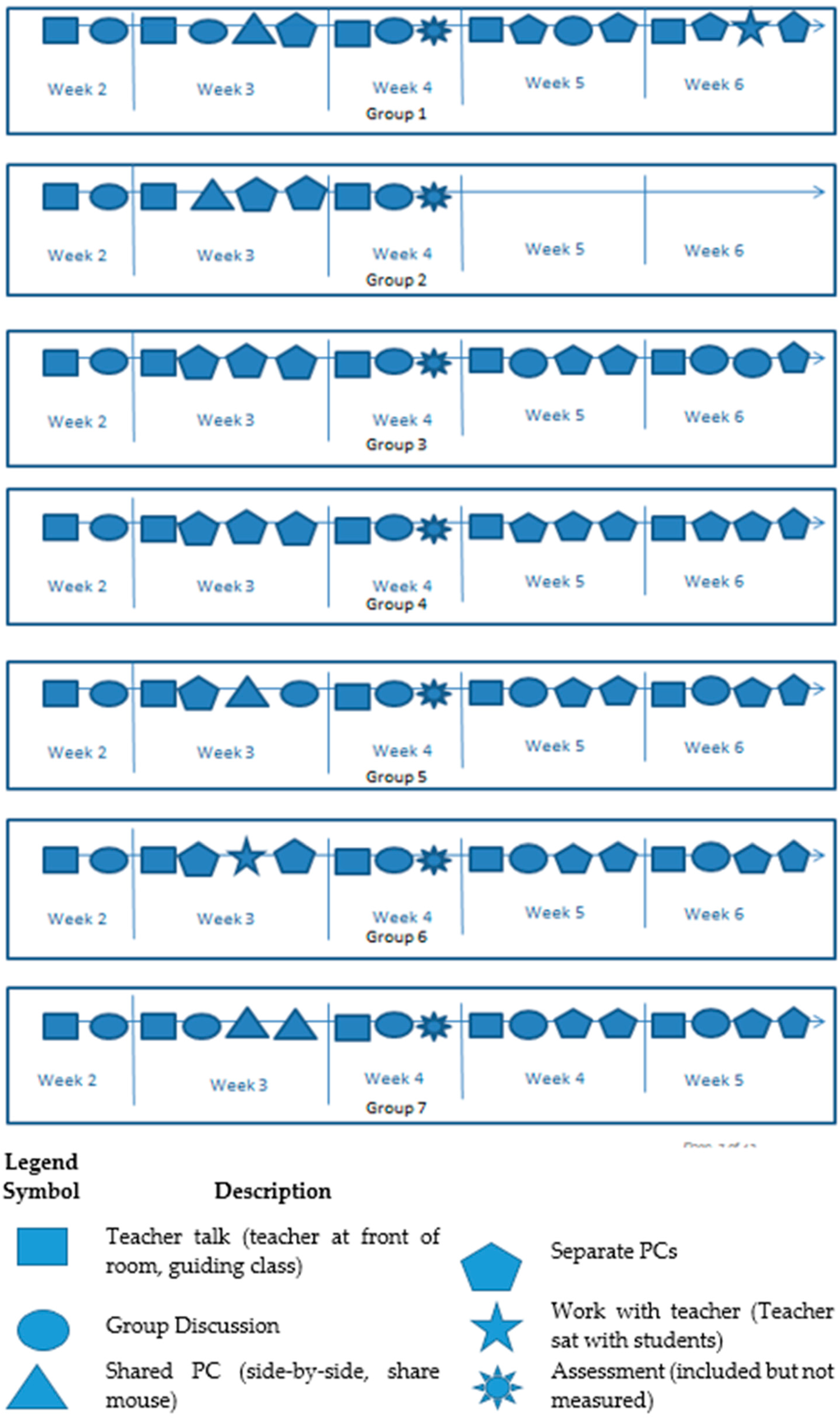

The analysis of the observational data was undertaken in several stages. Firstly, the individual group collaborations were identified for each tutorial; in this way, each group’s pattern of collaboration was isolated from the whole-class narrative. After this initial analysis, the individual segments of collaboration were coded. The original codes were teacher talk, small-group discussion, individual work (side-by-side on separate computers), shared work (group working on one computer), work with teacher, and assessment. The coded interactions were then developed into a visualisation. After analysing the visualisation, the research team re-coded the data to remove the assessment code, as it produced a skewed pattern of collaboration that did not reflect the actual class interactions. Next, the coded data were developed into a series of visualisations, and the research team discussed which visualisation best represented the collaborations. Each action was allocated a symbol to build up the pattern; whilst this may seem fairly simplistic, it does provide a visual representation of each group’s collaboration. The results of the observations were compiled and analysed for patterns of collaboration and are presented in Section 4.
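The coding, re-coding and symbol-mapping steps above can be illustrated with a short sketch. It assumes that each timed observation is stored as a hypothetical `Observation(group, week, slot, code)` record, uses the codes described in the analysis, drops the `assessment` code as in the re-coding step, and assigns one letter per code to build a symbol string for each group and week. The letter symbols, field names, group labels and sample records are illustrative assumptions, not the study's data or notation.

```python
from collections import defaultdict
from typing import NamedTuple


class Observation(NamedTuple):
    group: str  # hypothetical group label
    week: int
    slot: int   # position of the timed observation within the tutorial
    code: str


# Hypothetical one-letter symbols for the codes retained after re-coding.
SYMBOLS = {
    "teacher_talk": "T",
    "group_discussion": "D",
    "individual_work": "I",    # side by side on separate computers
    "shared_work": "S",        # group working on one computer
    "work_with_teacher": "W",
}


def recode(observations, dropped=("assessment",)):
    """Remove observations whose code was dropped during re-coding."""
    return [obs for obs in observations if obs.code not in dropped]


def process_maps(observations):
    """Return {(group, week): symbol string}, ordered by observation slot."""
    maps = defaultdict(list)
    for obs in sorted(observations):  # NamedTuples sort by group, week, slot
        maps[(obs.group, obs.week)].append(SYMBOLS[obs.code])
    return {key: "".join(symbols) for key, symbols in maps.items()}


# Illustrative records only (not data from the study).
sample = [
    Observation("A", 5, 0, "teacher_talk"),
    Observation("A", 5, 1, "individual_work"),
    Observation("A", 5, 2, "individual_work"),
    Observation("B", 5, 0, "teacher_talk"),
    Observation("B", 5, 1, "group_discussion"),
    Observation("B", 5, 2, "assessment"),
]
print(process_maps(recode(sample)))
# {('A', 5): 'TII', ('B', 5): 'TD'}
```

Because `sorted` orders the records by group, week and slot, each string reads left to right in the order the observations were made, so the strings can be laid out as rows of symbols in a process map.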

4. Results

A frequency graph was developed to identify any trends in collaborative behaviour. In their research into collaborative design processes, Kali, Markauskaite, Goodyear and Ward [40] found four distinctive characteristics of the teams: multi-dimensional exploration; balanced process; mutual respect; and crossed domain expertise. The authors suggest that these principles can be used to guide the design process. Building on these principles, we examined how the groups actually organised their collaborations. As can be seen in Figure 1, teacher-led activities accounted for the largest share of recorded activities (34 per cent). These occurred at the start of each class.

The next most frequent activity was working on separate computers (32%), followed by group discussion (27%). The least frequent activity was working with the teacher (2%); this code was applied only when, during a timed observation, the teacher was actually sitting and working with the group. This was a positive result for the researchers, as it meant that the groups were able to work effectively without sustained support from the teacher. In this respect, the level of skill required to master the two tools (interactive whiteboard and web resource) was within the range of the class members. This suggests that, in comparison with our earlier studies, the students were not struggling with the technology.

The frequency data do suggest that group discussion and individual work were the main task-related activities. Sharing a computer was infrequent, with only five per cent of all recorded observations coded as shared work. It was also observed that sharing became less frequent as the groups progressed through the collaboration. In fact, after the first assessment (developing an interactive whiteboard resource), none of the groups shared a computer. So, although they sat next to each other, the group members were developing separate aspects of the shared design. That is, while the groups had shared goals, each member had individual roles and tasks to complete.
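The percentages reported above are shares of all recorded observations. A minimal sketch of that calculation follows, assuming the coded observations are available as a flat list of code labels (a hypothetical representation); the toy input is invented for illustration and is not the study's data.

```python
from collections import Counter


def code_frequencies(codes):
    """Return each code's share of all recorded observations in per cent,
    most frequent first (ties keep the order in which codes first appear)."""
    counts = Counter(codes)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {code: round(100 * n / total, 1) for code, n in counts.most_common()}


# Toy input for illustration only.
toy = ["teacher_talk", "individual_work", "teacher_talk", "group_discussion"]
print(code_frequencies(toy))
# {'teacher_talk': 50.0, 'individual_work': 25.0, 'group_discussion': 25.0}
```

Applying the same function separately to the observations made before and after the first assessment would show whether a code such as shared work becomes less frequent over time, which is the kind of trend described above.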

While the frequency analysis may show the broad trends of the class collaboration, it does not show the actual patterns of collaboration for each group. Accordingly, the research team developed a process map for each of the seven groups. These maps present the patterns of group design processes (Figure 2). At the start of each week, the teacher led the class in discussion and worked through the content for the course; hence the high frequency of teacher-led activities. Note that the teacher walked around and talked to each group in each session; only the cases where the teacher remained with a group for a considerable period of time, and not just for troubleshooting, are recorded on the maps. Another consideration is that fewer observations were included in the maps for Weeks 2 and 4 because of the class activities in those weeks. In Week 2, the teacher talked for most of the class, and in Week 4 the final two observations were coded as assessment. In terms of whole-class behaviour, the patterns of collaboration in Weeks 2 and 4 show that all of the groups followed the same pattern of behaviour. In Week 2, the teacher led the activity and then the groups discussed (planned) their shared interactive whiteboard resources. In Week 4, the teacher started the class and then each group had the opportunity to prepare for the interactive whiteboard assessment. All of the groups used this time for a small-group discussion.

With regard to the development of a regular pattern of activity, some groups, such as Groups 4, 5 and 7, established fairly routine patterns of behaviour as the collaboration progressed. For example, Group 4 started each week with the teacher-led activity and then spent the rest of the tutorial working on separate computers. When asked about this behaviour, the two female students in this group indicated that they met during the week to plan their tasks for the tutorial so that they could use the tutorial time specifically to build the resources. Other groups did not establish routine patterns of behaviour; for example, Group 3 changed its pattern of collaboration each week. Establishing routines, however, is not necessarily required for achieving group consensus or enacting a shared goal. Poole and Holmes [41] found that the orderliness of groups had no clear relationship to consensus change in group decision-making. They established that while a general ordering of activities (good planning) may prove useful, tight micromanagement of the discussion was not necessarily advantageous. Our results appear to support this, and it may simply be that students need support in arriving at the pattern of collaboration that suits their particular group and their shared goals. This will require that students know how to identify a goal, negotiate a goal pathway, agree on tasks and evaluate the process in order to modify or continue with their current strategies in subsequent iterations of the activity.

The results suggest that the teacher-led activity at the start of each class was used to orient the groups. Some groups followed the teacher activity with a discussion; for instance, each week Group 7 had a discussion after the teacher activity. We argue that the issue is not whether to scaffold the collaboration and the task, but when and how to scaffold [42]. That is, a degree of scaffolding or guidance is needed for both aspects (collaboration and task), but allowing students to engage with a task without a guided step-by-step procedure may encourage the activation of non-domain-specific knowledge and the recognition of gaps in knowledge. This deeper understanding of the task may, in turn, increase domain knowledge and problem-solving skills. Educators often shy away from allowing students to struggle or make mistakes, but as Kolodner [43] suggested, timely feedback and opportunities to re-engage with the task can afford learners greater opportunities to learn and to interpret their experiences.

5. Discussion

As ICTs have increasingly found their way into curriculum materials across many domains, design projects have been shown not only to help teachers improve their own teaching but also to help them adapt technologies to better support their students’ learning, which together have been shown to enhance student outcomes [7,44]. In this paper, we examined the processes groups used to design their own resources rather than focusing on the output (i.e., the assessable product) of the collaborative process. Success in this sense means being able to communicate in order to achieve goals; hence, the focus is on negotiated meaning and a shared goal rather than on achieving high grades. The collaborative design assessment provided pre-service teachers with the opportunity to collaboratively build an Interactive Whiteboard (IWB) resource and a web-based teaching (website) resource. The resources were developed in a computer lab, and the interactive sessions were structured around the task requirements; hence, students were situated in the design experience. In this sense, an anchored instruction approach was used. The goal of anchored instruction is to engage intention and attention so that, through the setting of authentic tasks across multiple domains (in this case, technology, pedagogy and content), students create meaningful goals [10]. In their discussion of a student-driven curriculum, Barab and Roth further add that “The emphasis is on establishing rich contexts and then providing necessary scaffolds to support the learner in successfully enlisting meaningful trajectories” [18] (p. 9). This view of assessment as an ongoing collaborative process is supported by Diaz et al., who state that “assessment is seen as a progressive process that develops throughout the course, not just an accounting of outcomes at the end” [10]. This reflects a shift in the design of the subject and a move toward a process-driven curriculum.

We sought to examine how long-term collaborative assessments could be designed to provide pre-service teachers with the skills required to use technology in the classroom. We investigated how different groups formed to engage with the tasks and found that the majority of groups tended towards developing their own pattern of performing. In this sense, the team members were competent, autonomous and able to handle the decision-making process required to complete the assessments without constant teacher monitoring [45]. It was evident that the students mainly required assistance with technical troubleshooting. All of the groups were able to develop websites that could be used as teaching resources, and hence all passed the assessment task. The websites were relevant to the guiding syllabus documents and contained all of the requisite elements of the assessment task. The lack of teacher dependency and the success of the groups in achieving the task may also be attributed to the selection of the tools. It was evident from our earlier studies that pre-service teachers and classroom teachers found complicated technologies, such as virtual worlds, daunting because of factors such as the technical requirements and the amount of time it would take them to master the technology [46]. Hence, providing students with the opportunity to use an open-source Web 2.0 tool enabled them to develop both the skills and the confidence to use the technology in their particular content area. The need for pre-service teachers to gain successful experience in using technology for learning and teaching is supported by Teo, who reasons that there is:

A need for teacher educators to provide a conducive and non-threatening environment for pre-service teachers to experience success in using the computers, with a view to allowing pre-service teachers to gain competence and confidence in using computers for teaching and learning

This may be explained by the work of Kali et al. [16], who found that tool selection is of significant importance. They noted that tools that non-programmers can use to develop successful eLearning environments were becoming more accessible in higher education and schools. In this sense, if mastering the tool is no longer an issue, more attention can be placed on the design of the task.

We sought to understand how students actually interact with each other and with the environment so that strategies to support students can be embedded into the learning activity and assessment. As Clarke-Midura and Dede [48] explain, while an assessment can serve multiple purposes, it is simply not possible for one assessment to meet all purposes within the context of a student’s learning experience. Hence, the design and purpose of the assessment need to be carefully considered so that mastering the technology is not a barrier to undertaking the assessment. Innovative assessment formats can measure complex knowledge, professional skills and inquiry in ways that are not possible in paper-based formats [48]. We found that groups that were able to reflect and plan were better able to negotiate their way through the task, as they made space to discuss and organise their collaboration. This reflection and group organisation followed the teacher-led introduction to the class. The implication for assessment is that, to help students move beyond the environment to the task, they should be given the language of collaboration. Scaffolding formative tasks in which students need to reflect, evaluate and plan enables these stages to be built into the collaborative assessment task. Embedding reflective time may also provide a checkpoint for students who are having technical problems, as they have a joint space in which to reflect, evaluate and plan, and to identify gaps in technological, pedagogical and content knowledge that may otherwise prevent further progression through the task.

With regard to the actual task design, we argue that if groups engage only with highly scaffolded (guided or teacher-led) tasks, they are unlikely to delve deeper to gain a more comprehensive understanding of the task, the tool and the content, as they are unable to explore the task as a group. That is, if the collaborative task is too guided, the students may not develop the technological, pedagogical and content knowledge needed to evaluate, develop and plan to use ICTs in their own teaching practice across a range of contexts. As Bransford and Schwartz [49] (pp. 82–83) suggest, there is value in allowing learners to “bump up against the world” and to revise and rethink their initial actions if their first encounter is not successful; they indicate that this testing of thinking can lead to better learning. The value of having two assessments as part of the long-term collaboration is that the students had time to develop and reflect upon their collaborative approach before they engaged with the more complex second assessment of planning, designing and building a web resource. Bower and Richards clarify that:

No matter which approach to collaboration is adopted, it has become clear to us that success lies in the implementation, and not in the specific approach. However there are differences between approaches, and as such educators need to carefully match the collaborative approach to the learning requirements of the task.

What they are suggesting is that, in the design of a collaborative assessment task, as much attention needs to be placed on how the task is presented to the students as on the design of the task itself. They also note that building a conversational classroom environment supports successful student collaboration, as students feel comfortable sharing their ideas and designs with their peers. Overall, what we were trying to achieve in this phase of the research was a move away from teaching the tool, towards teaching with the tool and, ultimately, using ICT meaningfully [50]. The aim was to provide space for students to develop workplace-ready skills. We found that designing the unit of study as a long-term design project, together with the selection of easy-to-use tools, meant that students were able to successfully design and build their ICT resources.

6. Conclusions

We put forward here that, in an educational environment in which teachers are increasingly expected to adopt the role of ICT designer as part of their practice, it is imperative that pre-service teachers have exposure to long-term design projects during their degree programs. The purpose of this is for them to gain not only the skills to build effective resources but also professional practices that encourage them to work collaboratively and to reflect upon how their designs affect learners. We note that a limitation of this paper is that it relies largely on observational data and does not report on the students’ reflections. We also acknowledge that this paper focused on the process of the students’ engagement with the collaboration rather than on theory building, which is an expected output of a design-based research study. In this article, we have drawn attention to the design of a long-term collaborative assessment and to how students manage their group processes. The unit of study described in this paper aimed to prepare pre-service teachers to be fluent in both pedagogy and basic design theory so that they may use their newly acquired ICT skills to produce educationally sound web-based learning resources. We propose that, by using a methodological approach that investigates patterns of collaboration, we, as educators, may be able to use these patterns to create learning designs that can be applied to a range of collaborative learning situations. The investigation of learning processes can centre on both spoken interactions and interactions with ICTs. Such investigations may provide educators with a deeper understanding of how learners actually participate in the process of learning, rather than only of the assessed outcome.

Through an analysis of the patterns of collaboration, we were able to identify the most frequent activities of the collaboration; after teacher-led activity, the most frequent was students working on separate computers. We surmise that the groups’ success in completing both assessment tasks was due to the scaffolding and just-in-time support provided by the teacher. The lower-weighted assessment task in Week 4 gave the groups time to develop strategies that would enable a successful collaboration. That is not to say that all of the groups were successful: Group 2 separated after the first task and its members joined different groups. However, having time and appropriate guidance enabled the groups to develop both the collaborative skills and the ICT skills needed to produce interactive, content-based, syllabus-driven web resources. What we are offering here is a way to reconsider how ICT-in-education subjects are taught in higher education: rather than the subject learning being driven by the teacher and the tool, students drive their own learning process by engaging with long-term collaborative tasks that anchor the learning experience in a multidisciplinary context. Thus, the emphasis here is on shifting the focus of the subject from the development of a product to the process of learning and acquiring work-ready skills.