4.1. Evaluation of the System Accuracy

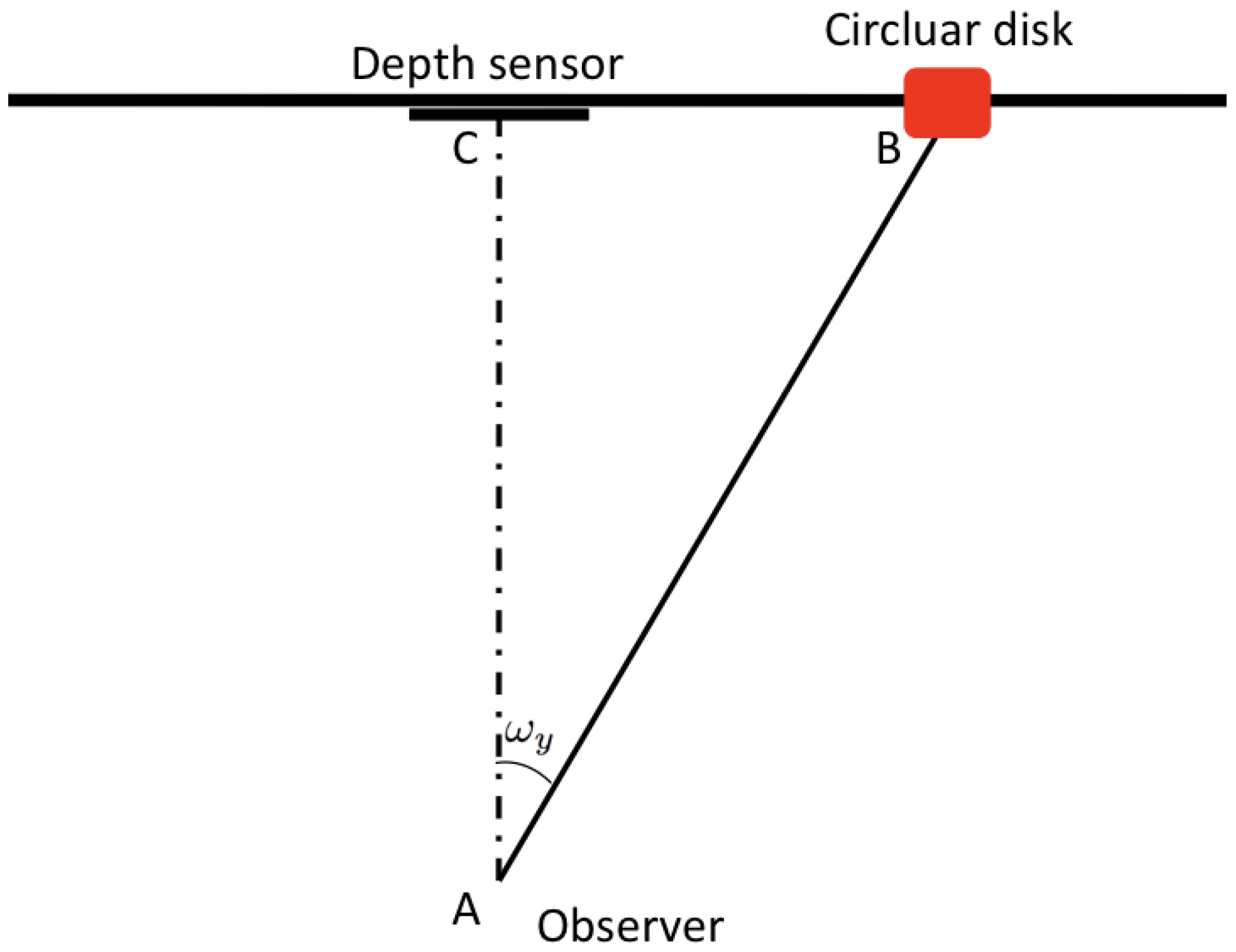

In this experimental phase, a Microsoft Kinect device, positioned 150 cm above the ground, was used as the depth sensor. A square panel (2 m per side) with nine circular markers attached to it was placed behind the sensor. The markers were arranged in three rows of three, 50 cm apart from each other. The technical setup is shown in Figure 8, where it can also be seen that the markers were divided into three subsets, P1–P3, while P0 corresponds to the depth sensor position. This breakdown groups together points at the same distance from the sensor along the x axis, the y axis or both axes. For example, P3 contains the points at a distance of 50 cm from the sensor along the x axis and aligned with it along the y axis; P2 contains the points at a distance of 50 cm from the sensor along the y axis and aligned with it along the x axis, and so on. This differentiation was kept to ensure a more complete evaluation that takes into account the x and y axes both separately and jointly.

The experiments in this phase were carried out as follows: eight typically developing participants (four adults and four children) were asked to stand about 70 cm from the panel and to look at each of the markers in a predefined order. Note that neither a training nor a calibration phase was performed. For each marker, the participant's oral confirmation that he or she was looking at it served as the trigger for collecting the corresponding gaze direction estimated by the system. The estimated gaze direction was then projected onto the panel and compared with the ground truth (known a priori, since the layout of the markers on the panel was fixed). To simulate different lighting conditions, a lamp was placed in three different positions with respect to the participants.

Table 1 reports the average errors obtained for the four adults under three different lighting conditions (artificial light source positioned in front of, to the right of and to the left of the participant, respectively).

Table 2 reports the average errors obtained for the four children involved (age range: 60–78 months), with trials run in the same way as for the adults. Reported errors (expressed in degrees) were computed from the spatial distance between each marker and the point where the estimated gaze ray intersects the panel. Errors are averaged over nine acquisition rounds per participant (three for each lighting condition). Table 1 and Table 2 show that the gaze estimation performance depends only slightly on the lighting conditions. Although errors occurred in the estimation of gaze directions, they remained within a limited range of degrees, which may be tolerated in a behavioral analysis context, given that real-world objects have a considerable visual size. Moreover, it is important that the errors remained low also when the experiments involved children, whose faces have different morphological features from adults' faces. In particular, the overall average error considering both axes and both groups (children and adults) is $2.88^\circ$.
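As an illustration of the error computation described above, the sketch below intersects a gaze ray with the panel plane and converts the offset from the marker into the angle it subtends at the eye. It is a minimal sketch under stated assumptions, not the authors' implementation: the eye position and gaze direction are assumed to be expressed in the same metric frame as the panel, the panel is modeled as an infinite plane, and the helper names (`intersect_ray_plane`, `angular_error_deg`) are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def intersect_ray_plane(origin: Vec3, direction: Vec3,
                        plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Point where the gaze ray meets the (infinite) panel plane, or None."""
    denom = _dot(direction, plane_normal)
    if abs(denom) < 1e-12:  # ray parallel to the panel: no intersection
        return None
    t = _dot(_sub(plane_point, origin), plane_normal) / denom
    if t < 0:  # intersection would lie behind the eye
        return None
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


def angular_error_deg(eye: Vec3, hit: Vec3, marker: Vec3) -> float:
    """Angle (degrees) at the eye between the directions to `hit` and `marker`."""
    a, b = _sub(hit, eye), _sub(marker, eye)
    cos_angle = _dot(a, b) / math.sqrt(_dot(a, a) * _dot(b, b))
    # Clamp to [-1, 1] to guard against rounding just outside acos's domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


if __name__ == "__main__":
    eye = (0.0, 0.0, 0.0)
    marker = (0.0, 0.0, 0.7)  # marker straight ahead, panel 0.7 m away
    gaze = (math.tan(math.radians(2.0)), 0.0, 1.0)  # 2 degrees off to the side
    hit = intersect_ray_plane(eye, gaze, (0.0, 0.0, 0.7), (0.0, 0.0, 1.0))
    print(angular_error_deg(eye, hit, marker))
```

With the eye at the origin, the panel 0.7 m away (roughly the distance used in the trials) and a ray deviating by 2° from the marker direction, the computed error is 2° up to floating-point rounding. Averaging such values over markers, rounds and participants would give per-table averages of the kind reported above.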

Note that the results tend to differ across the groups of markers, as a consequence of the head pose estimation, which becomes more sensitive as the yaw and pitch angles grow, and of the increased difficulty of detecting the eyes in non-frontal poses. In general, the system shows better accuracy along the x axis. This is also a consequence of the better precision of the eye detection stage along this axis, due to a more evident circularity. Indeed, it is quite common for the upper and lower parts of the border between the pupil and the sclera to be occluded when the eye is not wide open.

In light of these encouraging accuracy results, it is worth pointing out the differences and advantages of the proposed method, especially in critical application contexts, with respect to the solutions currently available on the market. Commercial non-intrusive eye-trackers are mainly designed to be used while watching a screen, i.e., with a limited observed area. Moreover, they require an initial calibration for each user, performed collaboratively through a specific procedure that shows calibration points on the screen. A further constraint is that they do not allow free head movement: the head must stay within a limited range (this is, for instance, the case of the products of Tobii (https://www.tobii.com/), the leading company in this business area). Hence, they are not suitable for operational setups (like the one considered here) where the observed area is larger than a screen and a calibration procedure cannot easily be designed and carried out (for example, when children are involved, regardless of any neurological deficits that may be a further obstacle). Concerning accuracy, it is undeniable that commercial products based on the detection of corneal reflections under infrared illumination perform very well. However, the performance of the proposed approach is not far from theirs. Indeed, considering the central part of the panel, i.e., point P0 (corresponding to the ideal conditions indicated by commercial producers), the proposed approach also achieved an accuracy of around one degree (see Table 1). Finally, it should be kept in mind that the proposed approach was designed for the specific task of helping to understand visual exploration in natural contexts, where the different areas of interest are usually not very close to each other in space and each has a size related to the objects in the scene. This implies that a slight loss in accuracy (as actually observed with respect to the data sheets of commercial eye-trackers) can only marginally affect the overall analysis of visual exploration behavior. On the other hand, the benefits of a calibration-free system are considerable in terms of technical setup, since they make the system suitable for use with non-collaborative individuals (e.g., children with ASD or, more generally, neurologically impaired persons). The practical and functional advantages of using the proposed method for the observation of behavior in a clinical context are illustrated further in the next subsection.

4.2. Exploitation of the System in a Real ASD Treatment Scenario

In this section, a demonstration trial of how the proposed gaze estimation system could be exploited in a real ASD treatment scenario is presented. Thanks to its main features (no invasive equipment to wear, and neither calibration nor training required), it was possible to introduce the system and collect its outputs while ASD therapy sessions based on the ESDM program were taking place. As explained in the introductory section, the ESDM program builds on the child's spontaneous interests and game choices in a natural setting. In the considered setup, a closet containing specific toys, neatly arranged by the therapist, is placed in the therapy room. When a child enters the room, the therapist helps him/her open the closet and invites him/her to choose a toy. It is important to highlight that, in this specific task of the therapeutic program, only an automatic system allows caregivers to capture the details of visual exploration and, as a consequence, to better understand the child's behavior in perception tasks. Indeed, health professionals have no chance to observe the child from a frontal point of view, and they also have to deal with practical issues, such as leading the child to the closet and encouraging him/her to pick a toy.

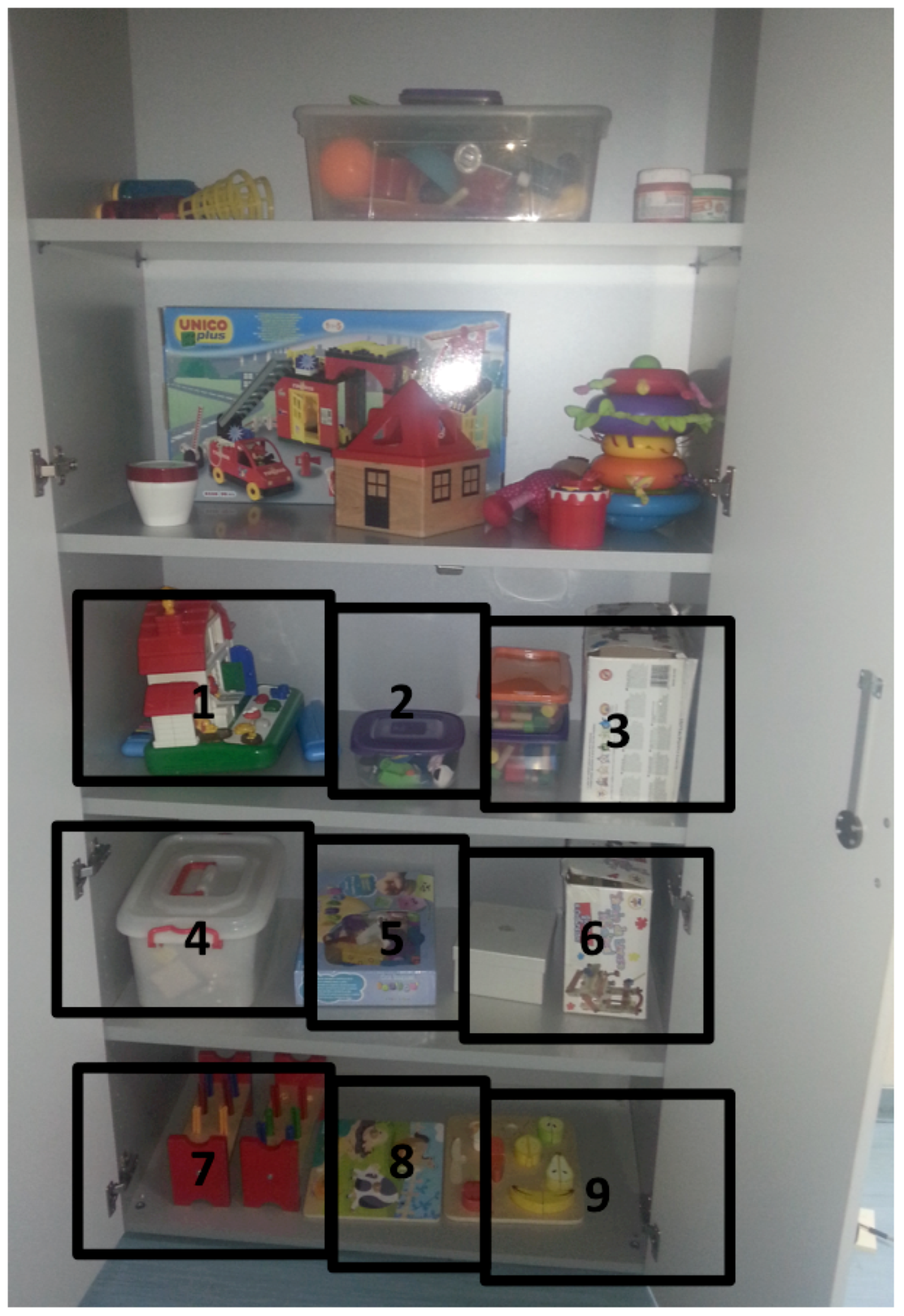

Figure 9 illustrates an example of the arrangement of the toys in the closet. The closet has a size of , but only three sectors (the bottommost ones) were used, considering the age and height of the involved children (see Table 3). Thus, the useful part has a size of . The approximate distance between the eyes and the targets varies in the range , and thus, the precision of the system can be assumed comparable with that reported in Table 1 and Table 2. In Figure 9, the nine 2D Areas of Interest (AOIs), corresponding to nine different portions of the closet where toys can be placed, are highlighted. The set of toys changes for each child, following the personal strategy and work methodology of the therapist. A Microsoft Kinect sensor was hidden in the area corresponding to Cell . A boxed toy was placed in this area as well; therefore, all nine cells are assessable areas of interest. Furthermore, the green LED of the sensor was darkened to avoid attracting the child's attention and thereby influencing his/her choice. During operation, the system collected gaze data as gaze hits on each AOI. Gaze hits were subsequently aggregated at different levels, yielding a set of psycho-physical measures. In particular, the following parameters were extracted by the system:

Fixation count: the number of fixations on a specific AOI. A fixation was counted when at least 15 consecutive frames presented a hit on the same AOI;

First fixation: the first AOI on which the system registered a fixation after the closet was opened;

Sequence: the ordered list of AOIs observed by the child in terms of fixations;

Most viewed toy: the AOI with the highest number of hits.
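The four measures above can be derived from the per-frame sequence of AOI hits. The sketch below is one possible implementation under stated assumptions, not the authors' code: AOI labels are integers, frames with no AOI hit are marked `None`, and the function names are hypothetical. Only the 15-frame fixation threshold comes from the text.

```python
from collections import Counter
from itertools import groupby
from typing import Dict, List, Optional, Sequence

# From the text: a fixation needs >= 15 consecutive frames on the same AOI.
MIN_FIXATION_FRAMES = 15


def fixation_sequence(frame_aois: Sequence[Optional[int]],
                      min_frames: int = MIN_FIXATION_FRAMES) -> List[int]:
    """Ordered AOIs on which a fixation was registered.

    `frame_aois` has one entry per processed frame: the AOI label hit by the
    gaze ray, or None when no AOI was hit. Runs are split by any change of
    label, including None, so two fixations on the same AOI separated by a
    gap are counted twice (an assumption of this sketch).
    """
    sequence = []
    for aoi, run in groupby(frame_aois):
        run_length = sum(1 for _ in run)
        if aoi is not None and run_length >= min_frames:
            sequence.append(aoi)
    return sequence


def exploration_measures(frame_aois: Sequence[Optional[int]]) -> Dict[str, object]:
    """Fixation count, first fixation, sequence and most viewed AOI."""
    sequence = fixation_sequence(frame_aois)
    hits = Counter(aoi for aoi in frame_aois if aoi is not None)
    return {
        "fixation_count": dict(Counter(sequence)),
        "first_fixation": sequence[0] if sequence else None,
        "sequence": sequence,
        # most_common breaks ties by first occurrence in the frame sequence.
        "most_viewed": hits.most_common(1)[0][0] if hits else None,
    }
```

Note that "most viewed" is computed on raw hits, as in the definition above, so an AOI can be the most viewed one even if its hits never form a 15-frame run.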

For each session, the extracted data were supplemented with the AOI containing the chosen toy, which was entered into the system by the therapist.

Data acquired by the depth sensor were processed by the gaze estimation algorithm running on an Ultrabook with an Intel i3 CPU @ 1.8 GHz and 4 GB of RAM. With this hardware and software configuration, an estimate was available on average every 0.11 s, i.e., a rate of 9.1 hits per second was achieved. A mainstream notebook was used in order to also test the feasibility of temporary installations (the notebook can easily be placed on the topmost shelf of the closet, and the cables can be concealed in a relatively straightforward way). To avoid missing information about visual exploration (i.e., to process every acquired frame regardless of the available computational resources), a large buffer was introduced. In this way, all acquired frames were processed, and the final output of the system was available with a variable delay that depended both on the duration of each acquisition session and on the processing resources. This means that consecutive gaze hits are, in any case, spaced about 33 ms apart in acquisition time (i.e., all frames, acquired at 30 fps, are processed). In light of this and of the above definition, a fixation occurs when the gaze track remains in the same AOI for about half a second (15 frames at 30 fps, i.e., 500 ms). It is well known that typical fixations last about 200–300 ms, but several studies [67, 68] report that the duration of a fixation can range from a hundred to several thousand milliseconds, since it is influenced by exogenous properties, such as object movement and luminance, as well as by endogenous properties, such as the participant's processing speed, vigilance level and interest in the information at the fixated point. In summary, the duration of each fixation depends on the complexity of the environment to be explored and on the mental functions and age of the individuals. Since evaluating fixation durations in the considered setup was beyond the scope of this paper (it might be addressed in future work), we set the minimum fixation length to half a second, as a trade-off between typical expected durations (as also suggested by a reviewer) and the longer durations reported in the aforementioned studies for infants, and even more so for infants affected by ASD.
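The timing figures above can be checked with simple arithmetic. The sketch below assumes, as a simplification not stated in the text, a constant processing time of 0.11 s per frame and back-to-back processing of the buffer starting with the first frame. Under these assumptions it derives the processing rate, the minimum fixation duration implied by 15 frames at 30 fps, and how long after the end of a session the buffer is fully drained; the 60 s session is a hypothetical example.

```python
ACQUISITION_FPS = 30.0        # frame rate stated in the text
SECONDS_PER_ESTIMATE = 0.11   # average processing time per frame stated in the text
MIN_FIXATION_FRAMES = 15      # fixation threshold stated in the text


def processing_rate_hz(seconds_per_estimate: float = SECONDS_PER_ESTIMATE) -> float:
    """Gaze estimates produced per second of processing."""
    return 1.0 / seconds_per_estimate


def min_fixation_ms(min_frames: int = MIN_FIXATION_FRAMES,
                    fps: float = ACQUISITION_FPS) -> float:
    """Acquisition time covered by the minimum number of fixation frames."""
    return 1000.0 * min_frames / fps


def drain_delay_s(session_s: float, fps: float = ACQUISITION_FPS,
                  seconds_per_estimate: float = SECONDS_PER_ESTIMATE) -> float:
    """Seconds after the session ends until every buffered frame is processed.

    Simplified model: processing starts with the first frame, never idles
    (frames arrive faster than they are processed) and costs a constant
    time per frame.
    """
    n_frames = session_s * fps
    finish_time = n_frames * seconds_per_estimate
    return max(0.0, finish_time - session_s)


print(round(processing_rate_hz(), 2))  # 9.09
print(min_fixation_ms())               # 500.0
print(round(drain_delay_s(60.0), 1))   # 138.0
```

The delay grows linearly with the session length because frames arrive about 3.3 times faster than they are processed, which is consistent with the variable, duration-dependent delay described above.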

Data acquisition was carried out in accordance with the Code of Ethics of the World Medical Association (Declaration of Helsinki) [69]. Informed consent for experimentation with human subjects was obtained from the children's caregivers.

The sample consisted of three children (mean age: 75.66 months, range: 68–80 months) recruited at the Pervasive Healthcare Center of the Institute of Applied Sciences and Intelligent Systems of the National Research Council of Italy in Messina. The diagnostic assessment was based on the Autism Diagnostic Observation Schedule, the Griffiths Developmental Scale, the Vineland Adaptive Scale, the MacArthur Language Test and the Child Behavior Checklist (CBCL).

Table 3 shows the gender, age and Griffiths Developmental Scale scores [70, 71] of each child involved in the experimental phase.

Children #1 and #2 took part in only one data acquisition session. Child #3, instead, took part in two sessions: the first took place on the same day as those of the other children, and the second exactly two weeks later.

This additional session was intended to verify in the field the ability of the proposed approach to objectively detect possible changes over time in the clinical picture of the involved child.

During sessions, data were continuously acquired: when the child opened the closet, his/her face was detected and the subsequent algorithmic pipeline started. The child's gaze track was then computed and projected onto the image plane, and its intersection with the toys' AOIs was computed. Thanks to the known geometry of the closet, these hits could be intersected with the regions enclosing the toys, and useful information about visual exploration was extracted and recorded for each child. Moreover, the resulting 2D visual exploration on the closet plane was tracked over time and, at the end, related to the chosen toy through the corresponding AOI. Once data acquisition started, data samples were processed immediately. However, the proposed framework also allows users to analyze the acquired data offline afterwards. This operating mode was implemented for debugging purposes, but it was also used by the caregivers (traditionally reluctant about technological tools), who could thus verify the correspondence between the extracted data and the actual behavior of the child.
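Once a gaze hit is expressed in metric coordinates on the closet plane, assigning it to an AOI reduces to a lookup. The sketch below is hypothetical: it assumes the used part of the closet is divided into a uniform 3 × 3 grid (three shelves by three columns), with the origin at the top-left corner, and that AOIs are numbered row by row starting from 1. The actual cell boundaries and labeling of Figure 9 may differ, so the dimensions and grid size are left as parameters.

```python
from typing import Optional


def aoi_from_hit(x: float, y: float, width: float, height: float,
                 rows: int = 3, cols: int = 3) -> Optional[int]:
    """Map a gaze hit (x, y) on the closet plane to a 1-based AOI label.

    Hypothetical layout: uniform rows x cols grid, origin at the top-left
    corner of the used area, x to the right, y downwards, labels row-major.
    Returns None when the hit falls outside the monitored area.
    """
    if not (0.0 <= x < width and 0.0 <= y < height):
        return None
    # min() guards against floating-point rounding pushing an index to `cols`/`rows`.
    col = min(int(x / width * cols), cols - 1)
    row = min(int(y / height * rows), rows - 1)
    return row * cols + col + 1


# Example with made-up dimensions (1.2 m x 0.9 m): a hit near the centre.
print(aoi_from_hit(0.5, 0.4, width=1.2, height=0.9))  # 5
```

A non-uniform layout could be handled in the same spirit by replacing the grid arithmetic with a list of per-AOI rectangles.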

Figure 10 shows the hit-maps computed for Children #1 and #2 in Table 3. The color scale encodes the time of the hits: the lightest colors refer to the beginning of the visual exploration session, whereas the darkest colors refer to its final part.

The qualitative analysis of the acquired gaze hits shows that Child #1 selected a toy after a very quick exploration; his first fixation was on AOI 5, and the most viewed toy was in AOI 2. There was no evident relation between his visual exploration and the selected toy, since he finally chose the one in AOI 7.

The second child produced more gaze hits than the first one. In the end, he selected the last observed toy (in AOI 4). Finally, for the third child, two acquisition sessions (Sessions A and B), two weeks apart, were carried out. The corresponding hit-maps automatically extracted by the system are reported in Figure 11.

In the first session, the system highlighted different behaviors during the child's visual exploration: at the beginning, a long exploration of the toys was observed; then the child focused on a specific point and finally took the corresponding toy (in AOI 1). This child had, in general, more gaze hits than the other two children. The selected toy was also by far the most observed one, since he spent much time looking at it. In the second session, the same child showed a very similar behavior: the session had a length similar to the previous one and, again, the chosen toy matched the AOI with the highest number of gaze hits. The selected toy was also the last one observed before making the decision, after a complete exploration of the closet content.

The number of hits on each area of interest of the closet (with the same labeling as in Figure 9) is reported in Table 4, where the most viewed toy in each session is highlighted in bold. Taking temporal information into account as well, it is possible to perform the more exhaustive behavioral analysis reported in Table 5, which can be considered the final output of the proposed system. Through the analysis of the content of Table 5, the therapists involved in the clinical sessions can draw more objective conclusions for the diagnosis or assessment of the disorder than without technological support. This is a considerable result, considering that wearable or calibration-dependent devices (such as commercial eye-trackers) cannot be used with children in the considered age group, which is the most relevant for a proper diagnosis or assessment of ASD.

For example, concerning Child #1, the collected data showed that the selected toy was not the most viewed one (AOI 2), but the last one viewed (AOI 7). This numerical evidence confirmed the therapist's expectations, since the child usually presented repetitive and stereotyped behaviors and, in addition, the grabbed toy was his preferred one, as he had already chosen it in previous clinical sessions. However, the system made it possible to discover additional, previously unknown behavioral features: the child also expressed a strong interest in two other toys (in AOI 5 and AOI 2) that he had never chosen, even in the previous sessions. In light of this newly acquired knowledge (which could not have been obtained without the proposed system), the therapist could decide to modify the arrangement of the toys, for example by placing Toys #2 and #5 next to the preferred one, in order to provide different stimuli to the child and encourage him to vary the way he plays. This would represent a significant advancement for him, in light of the well-known problem of repetitive behaviors that characterize autism [72].
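The comparison made above for Child #1 (chosen toy versus most viewed AOI and last observed AOI) can be expressed as a few boolean checks on the measures of a session. The function below is a hypothetical helper, not part of the described system. In the example, the fixation sequence is illustrative: its first element (AOI 5), its last element (AOI 7) and the most viewed AOI (AOI 2) follow what the text reports for Child #1, while the intermediate order is made up.

```python
from typing import Dict, List, Optional


def choice_relations(chosen: int, most_viewed: Optional[int],
                     sequence: List[int]) -> Dict[str, bool]:
    """Relate the chosen AOI to the exploration measures of one session."""
    return {
        "chosen_is_most_viewed": chosen == most_viewed,
        # bool(sequence) short-circuits to False when no fixation was registered.
        "chosen_is_first_fixated": bool(sequence) and sequence[0] == chosen,
        "chosen_is_last_fixated": bool(sequence) and sequence[-1] == chosen,
        "chosen_was_fixated": chosen in sequence,
    }


# Illustrative values loosely based on Child #1 (intermediate order made up).
print(choice_relations(chosen=7, most_viewed=2, sequence=[5, 2, 7]))
```

Flags of this kind are one possible way to summarize, per session, whether the final choice is explained by the amount of viewing or by recency.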

The second child showed another typical behavior that characterizes autism, i.e., the tendency to take unusual postures and to show overexcited behaviors [73], also indicating that he felt uncomfortable and agitated during social interactions. The use of the proposed system allowed healthcare personnel to understand the impact of this clinical profile on visual exploration behavior. The data extracted by the system revealed a series of large (horizontal) movements of the gaze direction and no correlation between observation and the final choice of the toy.

The case of Child #3 is even more interesting, since it showed the differences in visual exploration between two sessions, highlighting even better the potential of a system able to provide a quantitative evaluation of visual exploration behaviors. In particular, the system reported that in the first session the child performed a fairly complete exploration of the closet before choosing the toy. Therefore, to better verify his exploration ability, the therapist replaced the whole set of toys for the second session; in other words, the child faced a closet containing toys he had never seen before. The system provided numerical evidence that in this case the child showed a different exploration behavior: he took more time to make a decision and looked at the same toy many times. This visual exploration behavior indicated to the healthcare personnel that the child can successfully undertake a therapeutic path to improve his capability to interact with the surrounding environment.

The above results are admittedly preliminary, but they provide evidence that the proposed system can be an effective tool for obtaining detailed information about the exploration behaviors of children with ASD. As observed in practice, this additional information can help design more specific therapeutic paths (for example, by incrementally optimizing the arrangement of the toys in the closet) and better assess developmental disorders.

A final consideration concerns how the boundaries of the AOIs were handled. Discretizing the observed region (instead of modeling it as continuous through a regression scheme) can clearly result in a loss of accuracy. In other words, we are aware that the gaze estimation errors computed in Section 4.1 can misplace some hits lying close to the borders of the AOIs.

To quantify the misplaced hits, the offline operating mode of the system (described in Section 4.2, which allows the user to check the system outputs frame by frame and match them with the corresponding acquired images) was used, and the number of gaze hits lying in the uncertainty regions of the AOIs was computed for each experimental session. An uncertainty region was formally defined as the portion of an AOI whose distance from the adjacent AOI is less than the average estimated error on the observed plane. Note that uncertainty regions occurred only around the vertical borders of the AOIs: since the objects were placed on different shelves of the closet, horizontal borders of the AOIs were never adjacent. The average linear misplacement error $e$ on the observed plane was estimated with the chord-length formula:

$$e = 2D\sin\left(\frac{\theta}{2}\right),$$

where $D$ is the distance between the plane and the user and $\theta$ was set to $2.88^\circ$, i.e., the average angular error estimated during the preliminary tests reported in the previous subsection. In this way, an area having a width of cm was placed around each vertical border of the AOIs, and the gaze hits falling in those areas were counted. The resulting counts are reported in Table 6.
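The uncertainty-region count described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' procedure: it uses the standard chord-length formula $e = 2D\sin(\theta/2)$ for the linear misplacement at distance $D$, reduces each hit to its horizontal coordinate on the closet plane (only vertical borders matter here), and treats a hit as uncertain when it lies closer than $e$ to an interior vertical border. The border positions and the 1 m distance in the example are made up for illustration.

```python
import math
from typing import Iterable, Sequence


def chord_error(distance: float, theta_deg: float) -> float:
    """Linear misplacement on the plane: chord 2 * D * sin(theta / 2)."""
    return 2.0 * distance * math.sin(math.radians(theta_deg) / 2.0)


def in_uncertainty_region(x: float, borders: Sequence[float], half_width: float) -> bool:
    """True if x lies closer than half_width to any vertical AOI border."""
    return any(abs(x - b) < half_width for b in borders)


def uncertain_fraction(xs: Iterable[float], borders: Sequence[float],
                       half_width: float) -> float:
    """Fraction of gaze hits (horizontal coordinates) inside uncertainty regions."""
    xs = list(xs)
    if not xs:
        return 0.0
    return sum(in_uncertainty_region(x, borders, half_width) for x in xs) / len(xs)


# Hypothetical example: user 1 m from the closet, theta = 2.88 degrees.
e = chord_error(1.0, 2.88)
print(f"misplacement ~ {100 * e:.1f} cm")
print(uncertain_fraction([0.10, 0.39, 0.41, 0.60], borders=[0.4, 0.8], half_width=e))
```

For angles of a few degrees the chord is practically identical to the arc length $D\theta$ (with $\theta$ in radians), so the choice between the two has no practical effect on the resulting band width.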

Table 6 shows that the hits in the uncertainty regions are a small portion of the total number of counted hits (10.5% on average). Moreover, since gaze hits were subsequently aggregated into psycho-physical measures, the effect of misplaced hits is further reduced; thus, they can only marginally affect the overall evaluation of the visual behavior patterns. We are aware that, if a finer granularity were needed on the observed plane (i.e., a larger number of smaller targets), it would be necessary to model the uncertainty areas in the process of visual image reconstruction. However, this is beyond the scope of this paper.