On a First Evaluation of ROMOT—A RObotic 3D MOvie Theatre—For Driving Safety Awareness

Abstract

1. Introduction

2. Materials and Methods

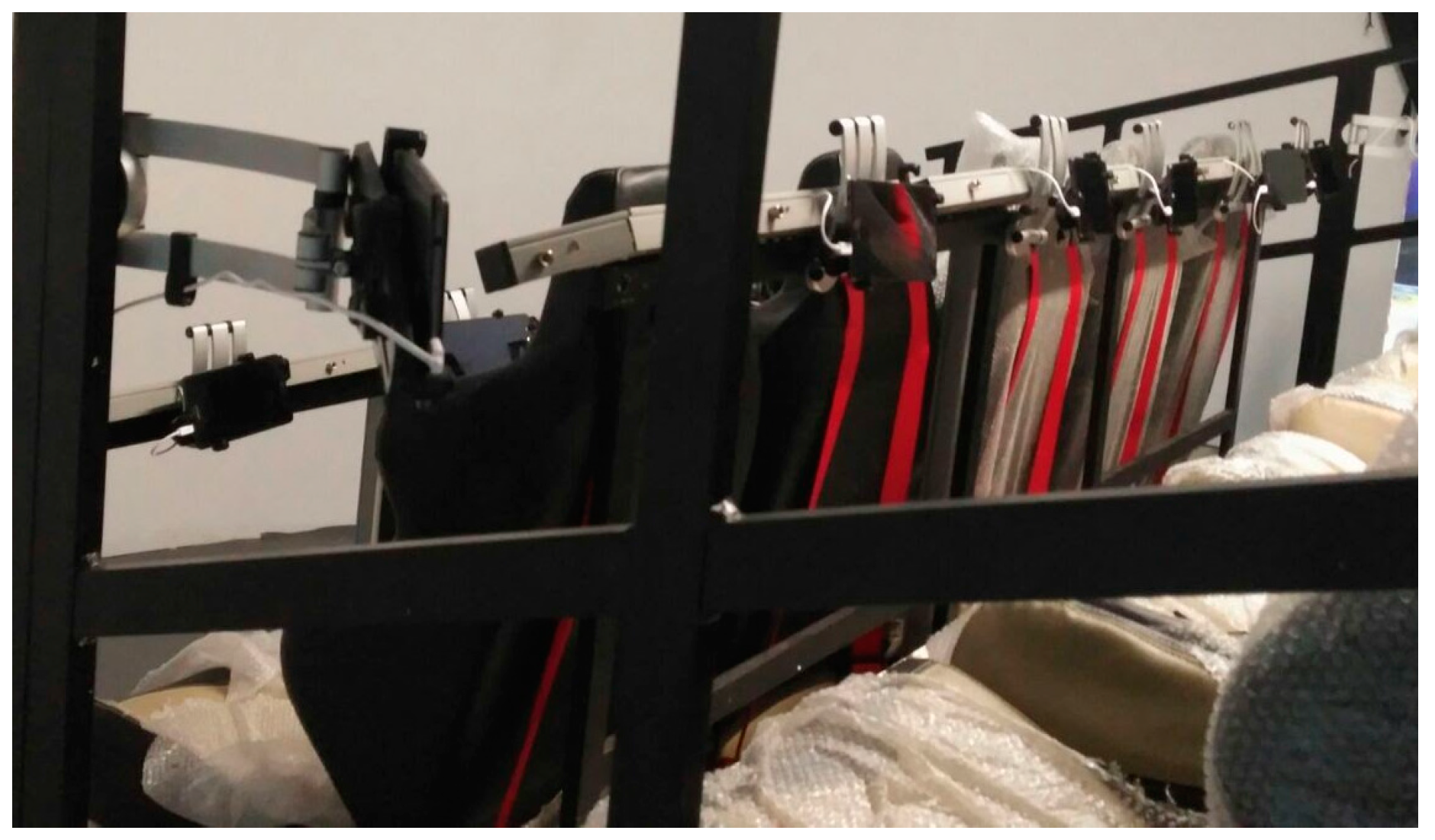

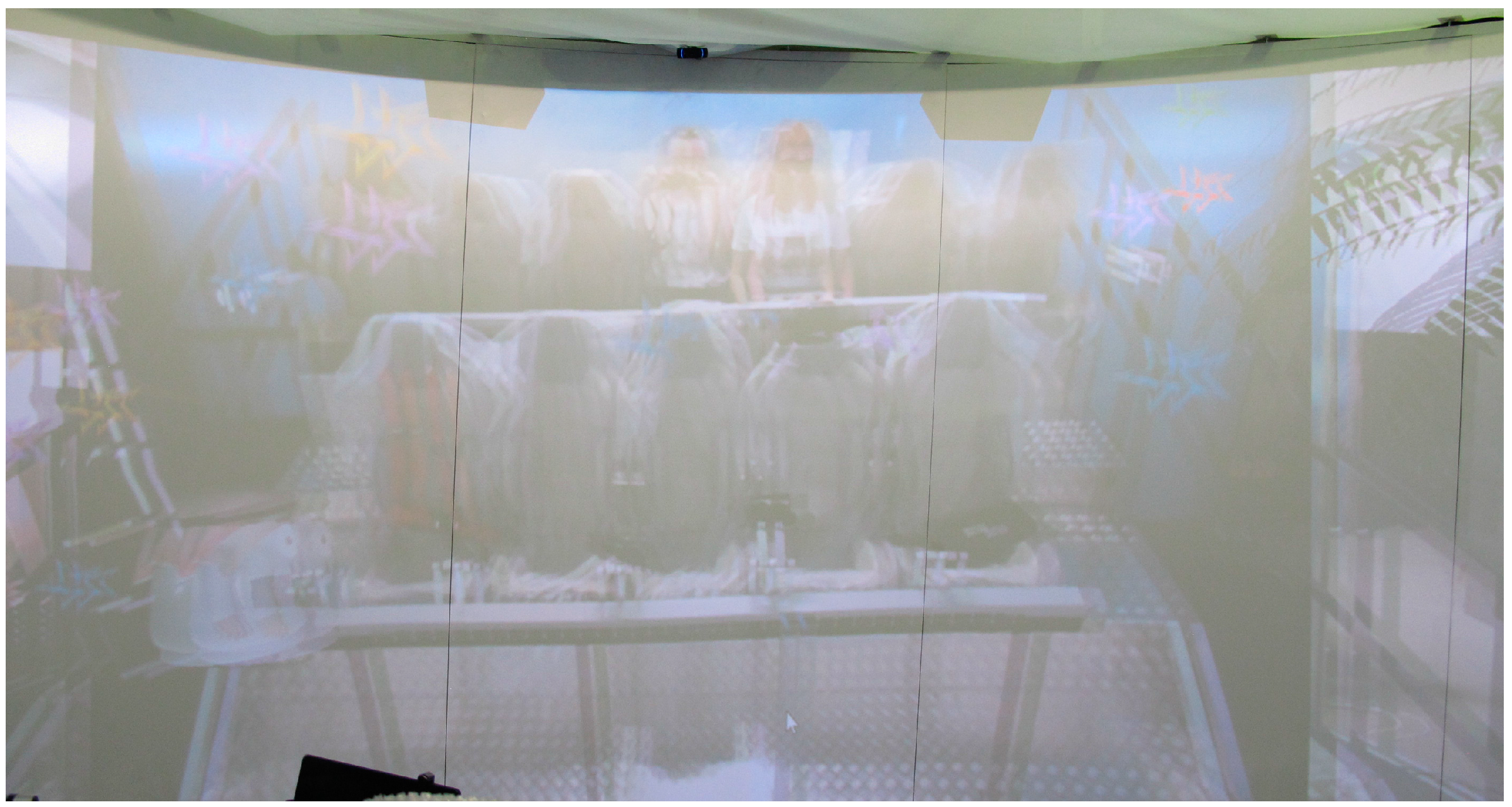

2.1. Construction of ROMOT

2.2. Multimodal Displays Integrated in ROMOT

- An olfactory display. We used the Olorama wireless aromatizer [23]. It holds 12 scents in 12 pre-charged channels, each of which can be selected and triggered by means of a UDP packet. The device is equipped with a programmable fan that spreads the scent around. Both the intensity of the chosen scent (the amount of time the scent valve stays open) and the fan run time can be programmed.

- A smoke generator. We used a Quarkpro QF-1200. It is equipped with a DMX interface, so the amount of smoke can be controlled and synchronized from a computer through a DMX-USB interface such as the Enttec Open DMX USB [24].

- Air and water dispensers. A total of 12 air and water dispensers (one for each seat). The water and air system was built using an air compressor, a water container, 12 air electro-valves, 12 water electro-valves, 24 electric relays and two Arduino Uno boards, so that the PC can switch the relays and open the electro-valves to spray water or release air.

- An electric fan. This fan is controlled by means of a frequency inverter connected to one of the Arduino Uno boards mentioned above.

- Projectors. A total of four full HD 3D projectors.

- Glasses. A total of 12 pairs of 3D glasses (one for each person).

- Loudspeaker. A 5.0 loudspeaker system to produce binaural sound.

- Tablets. A total of 12 individual tablets (one for each person).

- Webcam. A stereoscopic webcam, used to build an augmented reality mirror-based environment.

With these displays, the following senses are stimulated:

- Sight and stereoscopy: users can see a 3D representation of the scenes on the curved screen and through the 3D glasses; they can see additional interactive content on the tablets; they can see the smoke.

- Hearing: they can hear the sound synchronized with the 3D content.

- Smell: they can smell essences. For instance, when a car crashes, they can smell the smoke. In fact, they can even feel the smoke around them.

- Touch: they can feel the touch of air and water on their bodies; they can touch the tablets.

- Kinaesthetic: they can feel the movement of the 3-DOF platform.
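As an illustration of how a control PC might address two of the displays listed above, the following Python sketch encodes a scent request for transmission over UDP and builds a DMX512 frame for the smoke generator. The text payload format, the function names, and the DMX slot assignment are assumptions made for this example only: the source does not describe the Olorama packet layout or the smoke machine's channel map, and a real setup would still need the interface driver to put the frame on the wire.

```python
import socket
from typing import Mapping

SCENT_CHANNELS = 12  # the aromatizer holds 12 pre-charged scent channels
DMX_SLOTS = 512      # a DMX512 universe carries up to 512 data slots


def encode_scent_command(channel: int, valve_ms: int, fan_ms: int) -> bytes:
    """Encode a scent request as ASCII text.

    HYPOTHETICAL payload format, not the vendor's documented protocol.
    """
    if not 1 <= channel <= SCENT_CHANNELS:
        raise ValueError("scent channel must be in 1..12")
    if valve_ms < 0 or fan_ms < 0:
        raise ValueError("durations must be non-negative")
    return f"SCENT {channel} {valve_ms} {fan_ms}".encode("ascii")


def send_udp(payload: bytes, host: str, port: int) -> None:
    """Send one datagram; host and port are deployment-specific."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))


def build_dmx_frame(levels: Mapping[int, int]) -> bytes:
    """Build a DMX512 frame: start code 0x00 followed by 512 slot values."""
    frame = bytearray(1 + DMX_SLOTS)  # index 0 is the start code
    for slot, level in levels.items():
        if not 1 <= slot <= DMX_SLOTS:
            raise ValueError("DMX slot must be in 1..512")
        if not 0 <= level <= 255:
            raise ValueError("DMX level must be in 0..255")
        frame[slot] = level
    return bytes(frame)


if __name__ == "__main__":
    # Channel 3, valve open 500 ms, fan running 2000 ms (illustrative values).
    print(encode_scent_command(3, 500, 2000))
    # Assumes the smoke output level is mapped to DMX slot 1 (hypothetical).
    print(len(build_dmx_frame({1: 128})))
```

Keeping the encoding separate from the transport makes the timing-critical part (when to fire a cue) easy to test without any hardware attached.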

2.3. System Setups

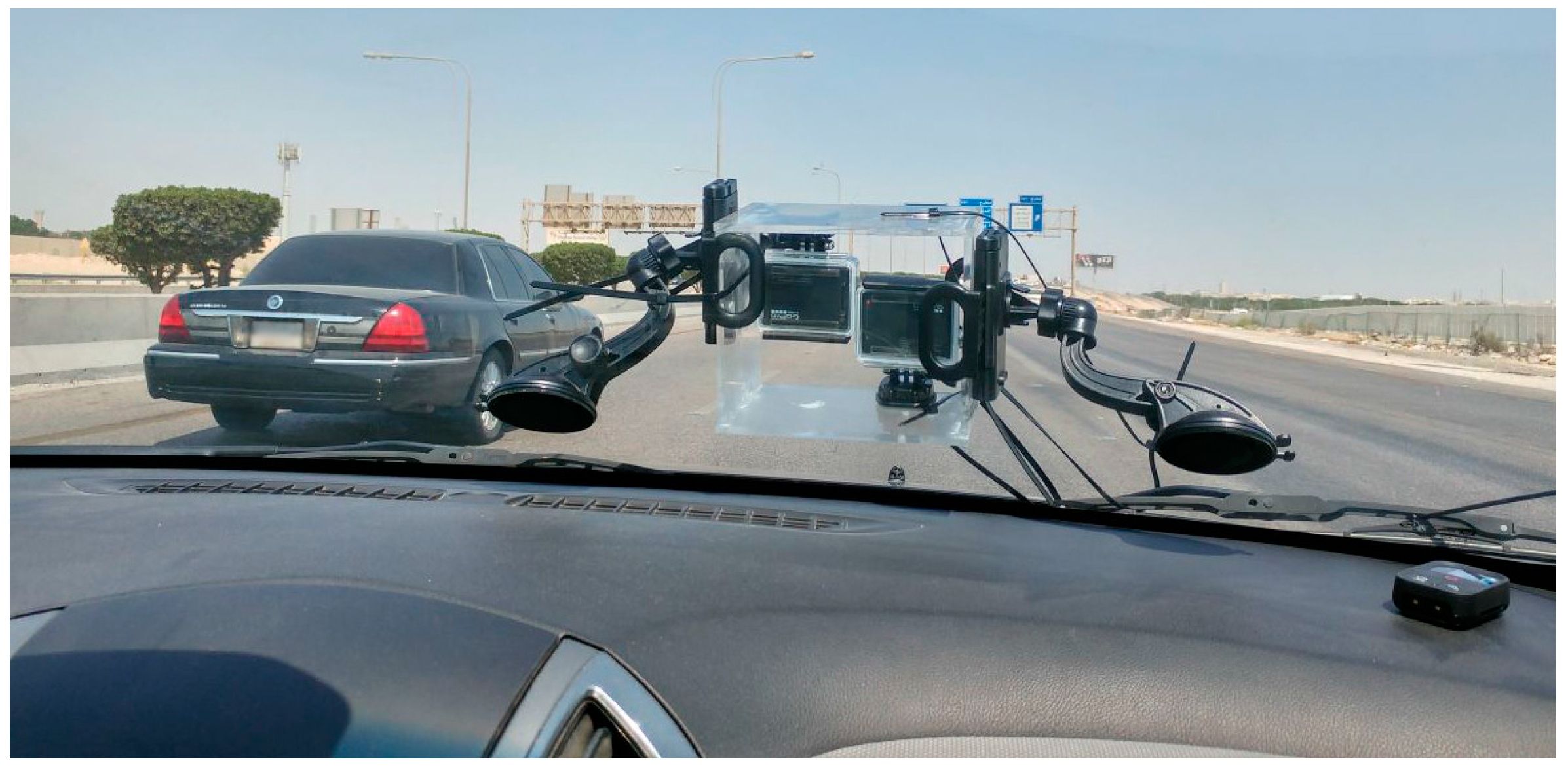

2.3.1. First-Person Movie

2.3.2. Mixed Reality Environment

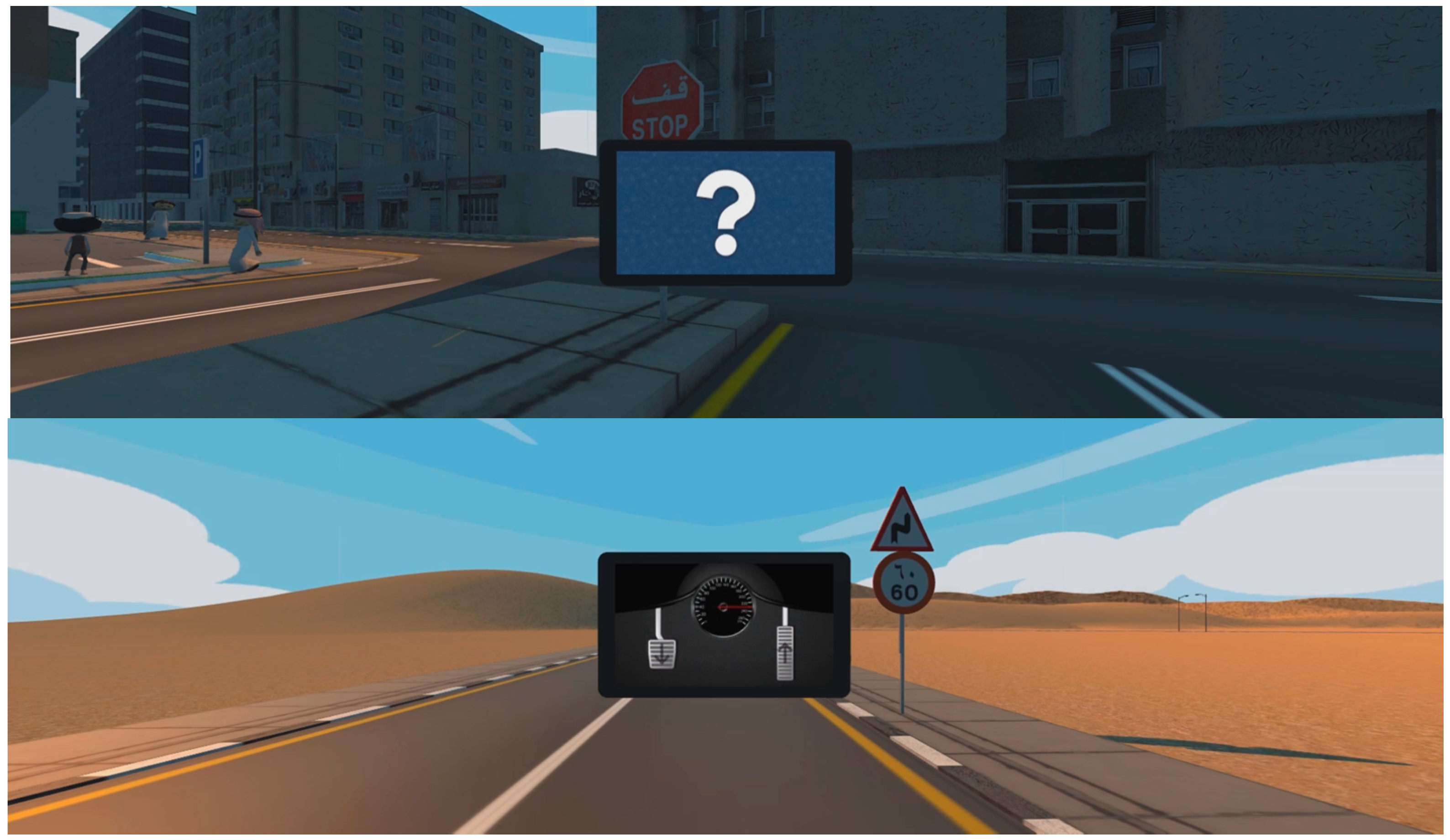

2.3.3. Virtual Reality Interactive Environment

2.3.4. Augmented Reality Mirror-Based Scene

3. Results

4. Discussion and Further Work

5. Conclusions

Author Contributions

Conflicts of Interest

References

- Yrurzum, S.C. Mejoras en la Generación de Claves Gravito-Inerciales en Simuladores de Vehículos no Aéreos; Universitat de València: València, Spain, 2014.

- Vallières, É.; Ruer, P.; Bergeron, J.; McDuff, P.; Gouin-Vallerand, C.; Ait-Seddik, K.; Mezghani, N. Perceived fatigue among aging drivers: An examination of the impact of age and duration of driving time on a simulator. In Proceedings of SOCIOINT15: 2nd International Conference on Education, Social Sciences and Humanities, Istanbul, Turkey, 8–10 June 2015; pp. 314–320.

- Cox, S.M.; Cox, D.J.; Kofler, M.J.; Moncrief, M.A.; Johnson, R.J.; Lambert, A.E.; Cain, S.A.; Reeve, R.E. Driving simulator performance in novice drivers with autism spectrum disorder: The role of executive functions and basic motor skills. J. Autism Dev. Disord. 2016, 46, 1379–1391.

- McManus, B.; Cox, M.K.; Vance, D.E.; Stavrinos, D. Predicting motor vehicle collisions in a driving simulator in young adults using the useful field of view assessment. Traffic Inj. Prev. 2015, 16, 818–823.

- Reymond, G.; Kemeny, A.; Droulez, J.; Berthoz, A. Role of lateral acceleration in curve driving: Driver model and experiments on a real vehicle and a driving simulator. Hum. Factors 2001, 43, 483–495.

- Dziuda, Ł.; Biernacki, M.P.; Baran, P.M.; Truszczyński, O.E. The effects of simulated fog and motion on simulator sickness in a driving simulator and the duration of after-effects. Appl. Ergon. 2014, 45, 406–412.

- Heilig, M.L. Sensorama Simulator. Available online: https://www.google.com/patents/US3050870 (accessed on 13 February 2017).

- Ikei, Y.; Okuya, Y.; Shimabukuro, S.; Abe, K.; Amemiya, T.; Hirota, K. To relive a valuable experience of the world at the digital museum. In Human Interface and the Management of Information, Proceedings of the Information and Knowledge in Applications and Services: 16th International Conference, Heraklion, Greece, 22–27 June 2014; Yamamoto, S., Ed.; Springer International Publishing: Cham, Switzerland, 2014; pp. 501–510.

- Matsukura, H.; Yoneda, T.; Ishida, H. Smelling screen: Development and evaluation of an olfactory display system for presenting a virtual odor source. IEEE Trans. Vis. Comput. Graph. 2013, 19, 606–615.

- CJ 4DPLEX. 4dx. Get into the Action. Available online: http://www.cj4dx.com/about/about.asp (accessed on 13 February 2017).

- Express Avenue. Pix 5d Cinema. Available online: http://expressavenue.in/?q=store/pix-5d-cinema (accessed on 13 February 2017).

- 5D Cinema Extreme. Fedezze fel Most a Mozi új Dimenzióját! Available online: http://www.5dcinema.hu/ (accessed on 13 February 2017).

- Yecies, B. Transnational collaboration of the multisensory kind: Exploiting Korean 4D cinema in China. Media Int. Aust. 2016, 159, 22–31.

- Tryon, C. Reboot cinema. Convergence 2013, 19, 432–437.

- Casas, S.; Portalés, C.; Vidal-González, M.; García-Pereira, I.; Fernández, M. Romot: A robotic 3D-movie theater allowing interaction and multimodal experiences. In Proceedings of the International Congress on Love and Sex with Robots, London, UK, 19–20 December 2016.

- Groen, E.L.; Bles, W. How to use body tilt for the simulation of linear self motion. J. Vestib. Res. 2004, 14, 375–385.

- Stewart, D. A platform with six degrees of freedom. Proc. Inst. Mech. Eng. 1965, 180, 371–386.

- Casas, S.; Coma, I.; Riera, J.V.; Fernández, M. Motion-cuing algorithms: Characterization of users’ perception. Hum. Factors 2015, 57, 144–162.

- Nahon, M.A.; Reid, L.D. Simulator motion-drive algorithms - A designer’s perspective. J. Guid. Control Dyn. 1990, 13, 356–362.

- Casas, S.; Coma, I.; Portalés, C.; Fernández, M. Towards a simulation-based tuning of motion cueing algorithms. Simul. Model. Pract. Theory 2016, 67, 137–154.

- Küçük, S. Serial and Parallel Robot Manipulators - Kinematics, Dynamics, Control and Optimization; InTech: Vienna, Austria, 2012; p. 468.

- Sinacori, J.B. The Determination of Some Requirements for a Helicopter Flight Research Simulation Facility; Moffett Field: Mountain View, CA, USA, 1977.

- Olorama Technology. Olorama. Available online: http://www.olorama.com/en/ (accessed on 13 February 2017).

- Enttec. Controls, Lights, Solutions. Available online: http://www.enttec.com/ (accessed on 13 February 2017).

- Portalés, C.; Gimeno, J.; Casas, S.; Olanda, R.; Giner, F. Interacting with augmented reality mirrors. In Handbook of Research on Human-Computer Interfaces, Developments, and Applications; Rodrigues, J., Cardoso, P., Monteiro, J., Figueiredo, M., Eds.; IGI-Global: Hershey, PA, USA, 2016; pp. 216–244.

- Giner Martínez, F.; Portalés Ricart, C. The augmented user: A wearable augmented reality interface. In Proceedings of the International Conference on Virtual Systems and Multimedia, Ghent, Belgium, 3–7 October 2005.

- Brooke, J. SUS: A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.

- Díaz, D.; Boj, C.; Portalés, C. Hybridplay: A new technology to foster outdoors physical activity, verbal communication and teamwork. Sensors 2016, 16, 586.

- Peruri, A.; Borchert, O.; Cox, K.; Hokanson, G.; Slator, B.M. Using the system usability scale in a classification learning environment. In Proceedings of the 19th Interactive Collaborative Learning Conference, Belfast, UK, 21–23 September 2016.

- Kortum, P.T.; Bangor, A. Usability ratings for everyday products measured with the system usability scale. Int. J. Hum. Comput. Interact. 2013, 29, 67–76.

- Bangor, A.; Kortum, P.; Miller, J. Determining what individual SUS scores mean: Adding an adjective rating scale. J. Usability Stud. 2009, 4, 114–123.

- Brooke, J. SUS: A retrospective. J. Usability Stud. 2013, 8, 29–40.

| | Heave (m) | Pitch (°) | Roll (°) |
|---|---|---|---|
| Minimum | −0.125 | −12.89 | −10.83 |
| Maximum | +0.125 | +12.89 | +10.83 |
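
Assuming the table above gives the excursion limits of the 3-DOF motion platform, a motion command can be saturated against those limits before it is sent to the actuators. The sketch below is only an illustration of that idea; the `Pose` type and the function names are hypothetical, and the source does not state how ROMOT's own software enforces the limits.

```python
from dataclasses import dataclass

# Symmetric limits taken from the table (minimum = -limit, maximum = +limit).
HEAVE_LIMIT_M = 0.125
PITCH_LIMIT_DEG = 12.89
ROLL_LIMIT_DEG = 10.83


@dataclass(frozen=True)
class Pose:
    """Commanded platform pose (hypothetical container for this example)."""
    heave_m: float
    pitch_deg: float
    roll_deg: float


def clamp(value: float, limit: float) -> float:
    """Saturate value to the closed interval [-limit, +limit]."""
    return max(-limit, min(limit, value))


def clamp_pose(pose: Pose) -> Pose:
    """Return a pose whose three degrees of freedom respect the table limits."""
    return Pose(
        heave_m=clamp(pose.heave_m, HEAVE_LIMIT_M),
        pitch_deg=clamp(pose.pitch_deg, PITCH_LIMIT_DEG),
        roll_deg=clamp(pose.roll_deg, ROLL_LIMIT_DEG),
    )


if __name__ == "__main__":
    # Heave and pitch exceed the limits; roll is already inside the range.
    print(clamp_pose(Pose(heave_m=0.2, pitch_deg=-15.0, roll_deg=5.0)))
```

Hard saturation is the simplest option; a motion-cueing pipeline would normally scale or filter the signal first so that the limits are rarely reached.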

| Questions | Mean | S.d. | Min | Max |
|---|---|---|---|---|
| 1. I think that I would like to use this system frequently | 3.00 | 0.89 | 1 | 4 |
| 2. I found the system unnecessarily complex | 0.70 | 0.78 | 0 | 2 |
| 3. I thought the system was easy to use | 3.50 | 0.50 | 3 | 4 |
| 4. I think that I would need the support of a technical person to be able to use this system | 1.30 | 1.00 | 0 | 3 |
| 5. I found the various functions in this system were well integrated | 3.60 | 0.66 | 2 | 4 |
| 6. I thought there was too much inconsistency in this system | 0.40 | 0.49 | 0 | 1 |
| 7. I would imagine that most people would learn to use this system very quickly | 3.60 | 0.49 | 3 | 4 |
| 8. I found the system very cumbersome to use | 0.60 | 0.80 | 0 | 2 |
| 9. I felt very confident using the system | 3.30 | 0.64 | 2 | 4 |
| 10. I needed to learn a lot of things before I could get going with this system | 0.30 | 0.46 | 0 | 1 |
| SUS score | 84.25 | | | |
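
The SUS score in the last row can be reproduced from the item means with the usual SUS scoring rule. The sketch below assumes, as the Min and Max columns suggest, that responses were recorded on a 0–4 scale: positively worded (odd) items contribute their value, negatively worded (even) items contribute 4 minus their value, and the sum is multiplied by 2.5. Because the rule is linear, applying it to the item means gives the same result as averaging the individual participants' scores. The function name is chosen for this example.

```python
from typing import Sequence

# Item means for questions 1..10, copied from the table above.
ITEM_MEANS = [3.00, 0.70, 3.50, 1.30, 3.60, 0.40, 3.60, 0.60, 3.30, 0.30]


def sus_score(responses: Sequence[float]) -> float:
    """Compute a SUS score (0-100) from ten responses on a 0-4 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")
    total = 0.0
    for number, value in enumerate(responses, start=1):
        if not 0 <= value <= 4:
            raise ValueError("responses must lie in the range 0..4")
        # Odd items are positively worded, even items negatively worded.
        total += value if number % 2 == 1 else 4 - value
    return total * 2.5


if __name__ == "__main__":
    # Rounds to the 84.25 reported in the table.
    print(round(sus_score(ITEM_MEANS), 2))
```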

| Questions | Mean | S.d. | Min | Max |
|---|---|---|---|---|
| 1. Overall, I liked very much using ROMOT | 3.14 | 0.74 | 2 | 4 |
| 2. I find it very easy to engage with the multimodal content | 3.29 | 0.80 | 2 | 4 |
| 3. I enjoyed watching the 3D movies | 3.29 | 0.80 | 1 | 4 |
| 4. The audio was very well integrated with the 3D movies | 3.43 | 0.73 | 2 | 4 |
| 5. The smoke was very well integrated in the virtual reality interactive environment | 2.71 | 0.88 | 1 | 4 |
| 6. The smell was very well integrated in the virtual reality interactive environment | 2.93 | 1.10 | 0 | 4 |
| 7. The air and water were very well integrated in the virtual reality interactive environment | 2.79 | 1.01 | 0 | 4 |
| 8. The movement of the platform was very well synchronized with the movies | 3.36 | 0.72 | 2 | 4 |
| 9. The interaction with the tablet was very intuitive | 3.07 | 0.80 | 2 | 4 |
| 10. I didn’t feel sick after using ROMOT | 2.64 | 1.23 | 0 | 4 |
| 11. I would like to use again ROMOT | 3.36 | 0.61 | 2 | 4 |
| 12. I would like to recommend others to use ROMOT | 3.64 | 0.48 | 3 | 4 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Casas, S.; Portalés, C.; García-Pereira, I.; Fernández, M. On a First Evaluation of ROMOT—A RObotic 3D MOvie Theatre—For Driving Safety Awareness. Multimodal Technol. Interact. 2017, 1, 6. https://doi.org/10.3390/mti1020006
