A Kernel Gabor-Based Weighted Region Covariance Matrix for Face Recognition

Abstract

This paper proposes a novel image region descriptor for face recognition, named the kernel Gabor-based weighted region covariance matrix (KGWRCM). Because different parts of a face differ in how effectively they characterize and recognize it, we construct a weighting matrix by computing the similarity of each pixel within a face sample to emphasize features. We then incorporate the weighting matrices into a region covariance matrix, named the weighted region covariance matrix (WRCM), to obtain the discriminative features of faces for recognition. Finally, to further preserve discriminative features in a higher dimensional space, we develop the kernel Gabor-based weighted region covariance matrix (KGWRCM). Experimental results show that the KGWRCM outperforms other algorithms, including the kernel Gabor-based region covariance matrix (KGRCM).

1. Introduction

Feature extraction from images or image regions is a key step in image recognition and video analysis. Recently, matrix-based feature representations [1–6] have been developed and employed for feature extraction. Tuzel et al. [3] introduced the region covariance matrix (RCM) as a new image region descriptor and applied it to object detection and texture classification. The RCM is a covariance matrix of basic features extracted from a region. The diagonal entries of the covariance matrix represent the variance of each feature, while the off-diagonal entries represent the respective correlations. Using the RCM as a region descriptor has several advantages. Firstly, the RCM provides a natural fusion method because it can fuse multiple basic features without any normalization or weighting operations. Secondly, the RCM can be invariant to rotations. Thirdly, its computational cost does not depend on the size of the region. Owing to these advantages, the RCM has been employed to detect and track objects [3,5] and has achieved promising results. The RCMs in [3] and [5] were constructed from basic features including pixel locations, color values, and the norms of the first- and second-order derivatives. However, directly employing the RCM for human face recognition does not achieve high recognition rates. To improve face recognition rates, Pang et al. [4] proposed the Gabor-based RCM (GRCM) method, which uses pixel locations and Gabor features to construct the region covariance. As Gabor features carry more discriminating information, GRCM displayed better performance. Subsequently, they also proposed a kernel Gabor RCM (KGRCM) method [7] to capture the higher-order statistics in the original low-dimensional space. Their experimental results demonstrated that the KGRCM can improve classification performance. Recently, KGRCM has been applied to object detection and tracking [6]. The nonlinear descriptor can capture nonlinear relationships within image regions owing to its use of a nonlinear region covariance matrix.

However, the previous RCM-based methods treat every pixel in the training image as contributing equally when constructing the RCM, i.e., the contribution of each pixel is usually set to 1/N², where N is the number of pixels in a local region. This assumption of equal contribution does not hold in real-world applications because pixels in different image parts may have different discriminative power. For example, pixels at important facial features such as the eyes, mouth, and nose should be emphasized, and others such as the cheeks and forehead should be deemphasized.

Motivated by the above, we propose in this paper a weighted region covariance matrix (WRCM) to explicitly exploit the different importance of each pixel of a sample. The WRCM can only extract linear face features, whereas nonlinear features can achieve higher performance in face recognition tasks [7–9]. To further preserve nonlinear features, we develop the kernel Gabor-based weighted region covariance matrix (KGWRCM). Experimental results on the ORL Face database [10], the Yale Face database [11] and the AR database [12] show that the KGWRCM algorithm outperforms the RCM, the WRCM, the RCM with Gabor features (GRCM) [4], the KRCM with Gabor features (KGRCM) [7], and the conventional KPCA [9], Gabor + PCA [13], and Gabor + LDA [13] algorithms in terms of recognition rate.

2. Region Covariance Matrix (RCM)

The RCM [3] is a covariance matrix of features computed inside a region of an image. Let F be a two-dimensional image region of size h × w, where h and w are the height and width of the image region. The number of pixels in the image region is N = h × w. Define a mapping ϕ that maps a pixel (k, l) of F onto the d-dimensional feature vector xi:

As a result, there are N d-dimensional feature vectors xi (i = 1,…,N). For the intensity image, the feature mapping function ϕ is defined by pixel locations, gray values, and the norms of the first- and second-order derivatives of the intensities with respect to k and l:

The image region can then be represented by a d × d covariance matrix of the basic feature vectors xi:

Equation (3) can also be expressed by the following equation:

The computation process is given in Appendix A.
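The construction described in this section can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the seven-component feature vector (row k, column l, intensity, and absolute first and second derivatives along each axis), the finite-difference derivatives from `np.gradient`, and the 1/N normalization (chosen to agree with the 1/N² pixel weighting mentioned in the Introduction) are all assumptions, and the function names are hypothetical.

```python
import numpy as np

def basic_features(image):
    """Map every pixel (k, l) to an assumed 7-D feature vector:
    [k, l, I, |dI/dk|, |dI/dl|, |d2I/dk2|, |d2I/dl2|]."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    k, l = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    d_k, d_l = np.gradient(img)        # first derivatives along rows and columns
    d_kk = np.gradient(d_k, axis=0)    # second derivative along rows
    d_ll = np.gradient(d_l, axis=1)    # second derivative along columns
    feats = np.stack(
        [k, l, img, np.abs(d_k), np.abs(d_l), np.abs(d_kk), np.abs(d_ll)],
        axis=-1,
    )
    return feats.reshape(h * w, -1)    # N x d matrix with N = h * w

def region_covariance(features):
    """d x d covariance of the N feature vectors (1/N normalization assumed)."""
    x = np.asarray(features, dtype=float)
    centered = x - x.mean(axis=0)
    return centered.T @ centered / x.shape[0]
```

The descriptor size depends only on the number of features d, not on the number of pixels N, which is why regions of different sizes can be compared directly.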

3. Weighted Region Covariance Matrix (WRCM)

Based on the feature vectors xi, the d × d weighted region covariance matrix of the image region is defined as follows:

Comparing Equations (5) and (6), we can see that the WRCM reduces to the RCM if Sij = 1/N², which implies that the RCM is a special case of the WRCM. However, as all the weights in the RCM are 1/N², the RCM cannot exploit the different importance of each pixel of a sample. The WRCM, on the other hand, can assign a different weight to each pixel of a sample, so it can preserve more discriminative information than the RCM.
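The special-case relationship can be checked numerically. The sketch below assumes the pairwise form C_W = ½ Σ_i Σ_j S_ij (x_i − x_j)(x_i − x_j)ᵀ, which for a symmetric weight matrix S equals Xᵀ(D − S)X with D the diagonal matrix of row sums of S. The heat-kernel weights with parameter `sigma` are a hypothetical choice of pixel similarity used only for illustration; the function names are likewise illustrative.

```python
import numpy as np

def heat_kernel_weights(features, sigma):
    """Hypothetical pixel-similarity weights S_ij = exp(-||x_i - x_j||^2 / sigma)."""
    x = np.asarray(features, dtype=float)
    sq = np.sum(x ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * x @ x.T, 0.0)
    return np.exp(-d2 / sigma)

def weighted_region_covariance(features, weights):
    """Assumed form: 0.5 * sum_ij S_ij (x_i - x_j)(x_i - x_j)^T = X^T (D - S) X."""
    x = np.asarray(features, dtype=float)
    s = np.asarray(weights, dtype=float)
    s = 0.5 * (s + s.T)                       # enforce symmetry of the weights
    laplacian = np.diag(s.sum(axis=1)) - s    # D - S
    return x.T @ laplacian @ x
```

With uniform weights S_ij = 1/N², `weighted_region_covariance` returns the ordinary covariance normalized by 1/N, which matches the special case stated above.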

As CW in Equation (6) is a matrix-form feature, commonly used distances cannot be applied directly. The generalized-eigenvalue-based distance proposed by Forstner [15] is hence used to measure the dissimilarity between two WRCMs:
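For reference, the Forstner metric [15] between two symmetric positive definite matrices is the square root of the sum of squared logarithms of their generalized eigenvalues. A minimal NumPy sketch follows; the function name is hypothetical, and the code assumes both inputs are strictly positive definite (no regularization is applied).

```python
import numpy as np

def forstner_distance(c1, c2):
    """sqrt(sum_i ln^2 lambda_i), where lambda_i solve c1 v = lambda c2 v.
    Assumes c1 and c2 are symmetric positive definite."""
    c1 = np.asarray(c1, dtype=float)
    c2 = np.asarray(c2, dtype=float)
    chol = np.linalg.cholesky(c2)          # c2 = L L^T
    inv = np.linalg.inv(chol)
    whitened = inv @ c1 @ inv.T            # same eigenvalues as the generalized problem
    lam = np.linalg.eigvalsh(whitened)
    return float(np.sqrt(np.sum(np.log(lam) ** 2)))
```

The distance is symmetric in its arguments and is zero when the two matrices coincide, because the generalized eigenvalues are then all equal to one.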

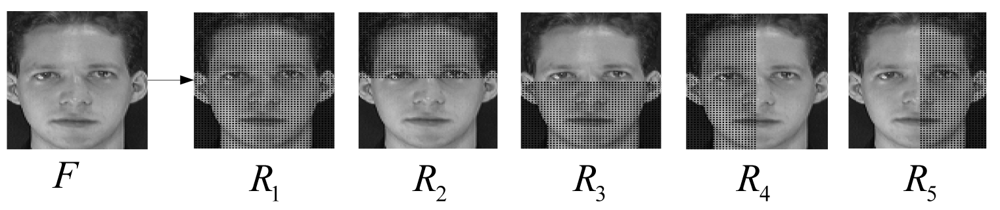

To preserve the local and global patterns, similar to [3,4], we represent a face image with five WRCMs from five different regions (R1, R2, R3, R4, and R5) (Figure 1). The five WRCMs (CW1, CW2, CW3, CW4, and CW5) are constructed from five different regions. As CW1 is the weighted region covariance matrix of the entire image region R1, it is a global representation of the face. The CW2, CW3, CW4, and CW5 are extracted from four local image regions (R2, R3, R4, and R5), so they are part-based representations of the face.

After obtaining the WRCMs of each region, it is necessary to measure the distance between the gallery and probe sets. Given the WRCMs of a gallery image and of a probe image, the distance between them is computed as follows:
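One way to combine the five per-region distances is the rule used for region covariance descriptors in [3]: sum the region distances and discard the largest one, so that a single badly matching region (for example, an occluded one) does not dominate. The sketch below assumes that rule, together with a nearest-neighbor decision over the gallery; it is not taken from the equation above, and all names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

def combined_region_distance(region_distances: Sequence[float]) -> float:
    """Sum of per-region distances minus the largest one (assumed rule, after [3])."""
    return sum(region_distances) - max(region_distances)

def nearest_neighbor_label(
    probe_regions: Sequence,
    gallery: List[Tuple[str, Sequence]],
    region_distance: Callable[[object, object], float],
) -> str:
    """Return the label of the gallery entry closest to the probe."""
    best_label, best_score = None, float("inf")
    for label, gallery_regions in gallery:
        dists = [region_distance(g, p) for g, p in zip(gallery_regions, probe_regions)]
        score = combined_region_distance(dists)
        if score < best_score:
            best_label, best_score = label, score
    return best_label
```

Here `region_distance` stands for whatever matrix distance is used between two region descriptors, and each gallery entry holds a label plus its list of five descriptors.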

4. Kernel Weighted Region Covariance Matrix (KWRCM)

To generalize the WRCM to the nonlinear case, we use a nonlinear kernel mapping x ∈ Φd → ϕ(x) ∈ Ω to map the feature data x ∈ Φd into a higher dimensional space Ω. A linear WRCM is then performed to preserve intrinsic geometric structures in Ω. Suppose that R1 and R2 are two rectangular regions in the gallery and probe set images, respectively. Let m and n be the numbers of pixels located in regions R1 and R2, respectively. The higher dimensional features extracted from regions R1 and R2 are ϕ(X) = [ϕ(x1), ϕ(x2),…, ϕ(xm)] and ϕ(Y) = [ϕ(y1), ϕ(y2),…, ϕ(yn)], respectively. The kernel weighted region covariance matrices of regions R1 and R2 are computed as follows:

Hence Equation (9) can be written as follows:

As any eigenvector can be expressed by a linear combination of the elements, there exist coefficients αi (i = 1,2,…,m) and βj (j = 1,2,…,n) such that:

Combining Equations (13) and (14), the generalized eigenvalue task in Equation (13) can be expressed in the form of block matrices:

The detailed derivation of Equation (15) is given in Appendix B.

We define the matrices U, A, and B as

Equation (15) can be rewritten as:

When A is positive definite, the generalized eigenvalues are obtained through solving the following eigenvalue problem:

However, in many cases A is a singular matrix. We hence incorporate a regularization parameter u > 0 on both sides:

Based on eigenvalues obtained by Equation (9) or Equation (22), we compute the distance between the two image regions R1 and R2 using Equation (8).
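The regularized solve can be illustrated with a small NumPy sketch. It assumes the generalized problem has the form B v = λ A v and that regularizing "both sides" means adding u·I to both A and B; the exact matrices A and B are those defined earlier in this section and are not reconstructed here. The function name and the default value of `u` are hypothetical.

```python
import numpy as np

def regularized_generalized_eigenvalues(a, b, u=1e-3):
    """Eigenvalues of (A + uI)^{-1} (B + uI), an assumed regularized form of
    the generalized problem B v = lambda A v for singular A."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    eye = np.eye(a.shape[0])
    m = np.linalg.solve(a + u * eye, b + u * eye)
    # Real parts are kept; eigenvalues are real for symmetric positive semidefinite inputs.
    return np.real(np.linalg.eigvals(m))
```

With A = B the regularized eigenvalues are all equal to one even when A is rank deficient, so a log-eigenvalue distance built on them stays finite and equals zero for identical regions.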

5. Kernel Gabor-Based Weighted Region Covariance Matrix (KGWRCM)

The features in Equation (2), namely pixel locations (k, l), intensity values, and the norms of the first- and second-order derivatives of the intensities with respect to k and l, are effective for tracking and detecting objects. However, their discriminating ability is not strong enough for face recognition [4]. To further improve the performance, Gabor features are added to the feature space. A 2-D Gabor wavelet kernel is the product of an elliptical Gaussian envelope and a complex plane wave, defined as:

Therefore, a feature mapping function based on Gabor features is obtained by:

As the Gabor wavelet representation can capture salient visual properties such as spatial localization, orientation selectivity, and spatial frequency characteristic, Gabor-based features can carry more important information. The proposed KGWRCM method can be briefly summarized as follows:

(1) Partition a face image into five regions (R1, R2, R3, R4, and R5), and extract the basic features of the five regions using Equation (26).

(2) Compute the two weight matrices L* and L# using Equations (11) and (12), and obtain the four kernel matrices K(X,X), K(X,Y), K(Y,X), and K(Y,Y) using Equations (30)–(33). Based on these matrices, compute the matrices A and B using Equations (17) and (18), respectively.

(3) With A and B, obtain the eigenvalues by Equation (20) or Equation (22) and substitute them into Equation (8) to calculate the distance.

(4) Based on the distance defined in Equation (10), employ the nearest-neighbor classifier to perform classification.
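As an illustration of the Gabor features used in the first step, the sketch below builds the widely used Gabor wavelet family (a Gaussian envelope multiplied by a DC-compensated complex plane wave) and computes magnitude responses by FFT-based convolution. The parameter values (k_max = π/2, spacing factor f = √2, σ = 2π, five scales, eight orientations, 31 × 31 support) are settings common in the Gabor face recognition literature such as [13] and are assumptions here, as are the function names.

```python
import numpy as np

def gabor_kernel(scale, orientation, size=31, k_max=np.pi / 2,
                 f=np.sqrt(2.0), sigma=2 * np.pi, n_orient=8):
    """Complex Gabor kernel on a size x size grid (size must be odd)."""
    k_mag = k_max / f ** scale
    phi = np.pi * orientation / n_orient
    kx, ky = k_mag * np.cos(phi), k_mag * np.sin(phi)
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    envelope = (k_mag ** 2 / sigma ** 2) * np.exp(
        -(k_mag ** 2) * (x ** 2 + y ** 2) / (2 * sigma ** 2))
    # Plane wave minus a constant term that removes the DC response.
    wave = np.exp(1j * (kx * x + ky * y)) - np.exp(-sigma ** 2 / 2)
    return envelope * wave

def gabor_magnitudes(image, n_scales=5, n_orient=8, size=31):
    """Magnitude responses, shape (h, w, n_scales * n_orient), same-size crop."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    shape = (h + size - 1, w + size - 1)     # zero-padding for linear convolution
    img_f = np.fft.fft2(img, s=shape)
    half = size // 2
    maps = []
    for v in range(n_scales):
        for u in range(n_orient):
            ker = gabor_kernel(v, u, size=size, n_orient=n_orient)
            full = np.fft.ifft2(img_f * np.fft.fft2(ker, s=shape))
            maps.append(np.abs(full[half:half + h, half:half + w]))
    return np.stack(maps, axis=-1)
```

Stacking the pixel coordinates with these magnitude maps gives one feature vector per pixel, in the spirit of the Gabor-based region covariance of [4].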

6. Experimental Results

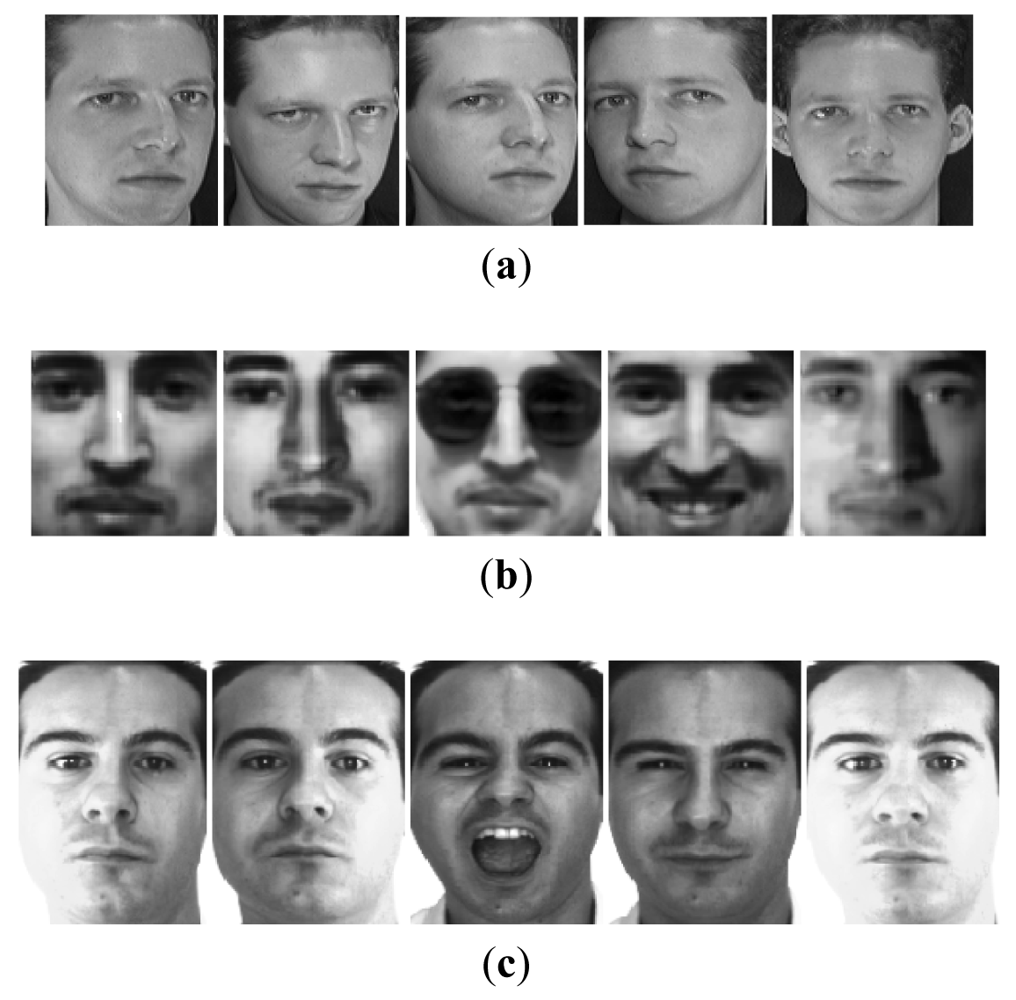

We tested the KGWRCM algorithm on the ORL Face database [10], the Yale Face database [11] and the AR Face database [12]. The ORL Face database comprises 400 different images of 40 distinct subjects. Each subject provides 10 images that include variations in pose and scale. To reduce the computational cost, each original image is resized to 56 × 46 by nearest-neighbor interpolation. A random subset with five labeled images per individual forms the training set, and the remaining images form the testing set. There are 252 different ways of selecting five images for training and five for testing. We select 20 random subsets with five images for training and five for testing.

The Yale face database contains 165 grayscale images, with 11 images for each of 15 individuals. These images are subject to expression and lighting variations. In this recognition experiment, all face images of size 80 × 80 were resized to 40 × 40. Five images of each subject were randomly chosen for training and the remaining six images were used for testing. There are hence 462 different ways of making this selection. We select 20 random subsets with five images for training and six for testing.

The AR database consists of over 4,000 images corresponding to 126 people's faces (70 men and 56 women). These images include more facial variations, including illumination changes and facial occlusions (sunglasses and scarf). For each individual, 26 pictures were taken in two separate sessions, and each session contains 13 images. In the experiment, we chose a subset of the data set consisting of 50 male subjects and 50 female subjects with seven images for each subject. The size of the images is 165 × 120. We select two images for training and five for testing from the seven images. There are 21 different ways of making this selection. Figure 2 shows some examples of the first subject in each database used here.
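The split counts quoted for the three databases are binomial coefficients and can be checked with the Python standard library. The helper names below are hypothetical, and the random split is only a sketch of how one training/testing partition per subject might be drawn.

```python
import math
import random

def count_splits(images_per_subject: int, n_train: int) -> int:
    """Number of ways to choose the training images for one subject."""
    return math.comb(images_per_subject, n_train)

def random_split(images_per_subject: int, n_train: int, rng: random.Random):
    """Draw one random train/test partition of image indices."""
    train = sorted(rng.sample(range(images_per_subject), n_train))
    chosen = set(train)
    test = [i for i in range(images_per_subject) if i not in chosen]
    return train, test

print(count_splits(10, 5))  # ORL: 252
print(count_splits(11, 5))  # Yale: 462
print(count_splits(7, 2))   # AR: 21
```

Because the AR setting has only 21 possible selections, all of them can be enumerated, whereas 20 random subsets are drawn for ORL and Yale.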

In our experiments, all images are normalized to zero mean and unit variance. We compare the developed WRCM and KGWRCM with the RCM, the RCM with Gabor features (GRCM) [4], the KGRCM [7], and conventional methods including KPCA [9], Gabor + PCA [13], and Gabor + LDA [13]. For GPCA and the PCA stage of KPCA, we keep 99% of the information to determine the number of principal components. For the PCA stage of Gabor + LDA, we select the number of principal components as M − c, where M is the number of training samples and c is the number of classes (M = 200 and c = 40 for the ORL database, M = 55 and c = 11 for the Yale database, and M = 200 and c = 100 for the AR database). For KPCA, KGRCM, and the proposed KGWRCM, a Gaussian kernel function is used as the kernel. The parameters of the following algorithms are selected by cross-validation: (1) the parameter σ for the WRCM and KGWRCM methods; (2) the kernel parameters for the KPCA, KGRCM, and KGWRCM methods. In all these experiments, nearest-neighbor classifiers are employed. The performance of all methods is evaluated by the mean and standard deviation (std) of the recognition accuracies on the 20 data sets for the ORL and Yale databases and the 21 data sets for the AR database.
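The 99% criterion can be illustrated as follows. The sketch interprets "99% of the information" as 99% of the cumulative variance of the centered training data, which is an assumption, and the function name is hypothetical.

```python
import numpy as np

def n_components_for_energy(data, energy=0.99):
    """Smallest number of principal components whose cumulative variance
    reaches the requested fraction (variance-based criterion assumed)."""
    x = np.asarray(data, dtype=float)
    x = x - x.mean(axis=0)
    singular_values = np.linalg.svd(x, compute_uv=False)
    variance = singular_values ** 2
    ratio = np.cumsum(variance) / variance.sum()
    index = int(np.searchsorted(ratio, energy)) + 1
    return min(index, len(ratio))    # guard against rounding at energy = 1.0
```

For the LDA pipeline the number of components is fixed instead at M − c, for example 200 − 40 = 160 for the ORL setting described above.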

The average recognition accuracies on the ORL, Yale and AR databases are shown in Tables 1, 2 and 3, respectively. For the ORL and Yale face databases, the proposed KGWRCM method achieves mean recognition accuracies of 99.21% and 79.20%, respectively, which are higher than those of the other methods. For the AR database, the proposed KGWRCM method achieves a mean recognition accuracy of 95.95%, which is 4.15 percentage points higher than that of KGRCM and much higher than those of the other methods.

These results show that the proposed KGWRCM method can capture more discriminative information than the other methods for face recognition. In particular, KGWRCM and WRCM outperform KGRCM and RCM, respectively, which implies that the weighted approaches better emphasize the more important parts of faces, deemphasize the less important parts, and preserve discriminative information for face recognition.

7. Conclusions

In this paper, an efficient image representation method for face recognition, called KGWRCM, is proposed. Considering that some pixels in a face image are more effective in representing and recognizing faces, we have constructed the KGWRCM based on a weighted score of each pixel within a sample to duly emphasize the face features. As the weighted matrix can carry more important information, the proposed method has shown good performance. Experimental results also show that the proposed KGWRCM method outperforms other approaches in terms of recognition accuracy. However, similar to KGRCM, the computational cost of KGWRCM is high due to the computation of the high dimensional matrix. In future work, an effective KGWRCM method with low computational complexity will be developed for face recognition.

Acknowledgments

This work is supported by the Research Fund for the Doctoral Program of Higher Education of China (No. 20100191110011) and the Fundamental Research Funds for the Central Universities (No. CDJXS11122220, CDJXS11121145).

Appendix A

Equation (3) can be formulated as

With some simple algebra, Equation (5) is expressed as

Based on Equations (27) and (28), the following equation is obtained
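For readers who want the intermediate step, the equivalence between the mean-centered and pairwise forms of the covariance can be worked out as below. The derivation assumes a covariance normalized by 1/N; the symbol μ for the mean feature vector is introduced here for illustration.

```latex
\begin{aligned}
\mu &= \frac{1}{N}\sum_{i=1}^{N} x_i, \\
\sum_{i=1}^{N}\sum_{j=1}^{N} (x_i - x_j)(x_i - x_j)^{T}
  &= 2N\sum_{i=1}^{N} x_i x_i^{T}
     - 2\Big(\sum_{i=1}^{N} x_i\Big)\Big(\sum_{j=1}^{N} x_j\Big)^{T} \\
  &= 2N\sum_{i=1}^{N} x_i x_i^{T} - 2N^{2}\,\mu\mu^{T} \\
  &= 2N\sum_{i=1}^{N} (x_i - \mu)(x_i - \mu)^{T}, \\
\text{hence}\quad
\frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)(x_i - \mu)^{T}
  &= \frac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N} \frac{1}{N^{2}}\,(x_i - x_j)(x_i - x_j)^{T}.
\end{aligned}
```

The last line shows every pixel pair carrying the same weight 1/N², which is the equal-contribution setting that the weighted formulation relaxes.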

Appendix B

Let k(xi, xj) = ϕ(xi)·ϕ(xj) be the kernel function. The four kernel matrices K(X,X), K(X,Y), K(Y,X), and K(Y,Y), with sizes m × m, m × n, n × m, and n × n, respectively, are then obtained by
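The four kernel matrices can be computed without ever forming ϕ explicitly. The sketch below uses a Gaussian kernel, as in the experiments; the parameterization exp(−‖x − y‖² / (2σ²)) and the function names are assumptions.

```python
import numpy as np

def gaussian_kernel_matrix(x, y, sigma):
    """K[i, j] = exp(-||x_i - y_j||^2 / (2 sigma^2)) for row-wise feature vectors."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    d2 = (np.sum(x ** 2, axis=1)[:, None]
          + np.sum(y ** 2, axis=1)[None, :]
          - 2.0 * x @ y.T)
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * sigma ** 2))

def kernel_blocks(x, y, sigma):
    """Return K(X,X), K(X,Y), K(Y,X), K(Y,Y) for regions with m and n pixels."""
    kxy = gaussian_kernel_matrix(x, y, sigma)
    return (gaussian_kernel_matrix(x, x, sigma), kxy, kxy.T,
            gaussian_kernel_matrix(y, y, sigma))
```

Since K(Y,X) is the transpose of K(X,Y), only three of the four blocks need to be evaluated.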

Substituting Equation (11) into Equation (10), we can obtain

Based on Equations (21)–(24), Equation (25) can be expressed as

To express Equation (26) in the form of a kernel function, we multiply both sides by ϕT(X). The following equation is obtained

Similarly, we multiply ϕT(Y) on both sides of Equation (26), and have

Equations (27) and (28) can be expressed using matrices and vectors

Combining Equations (29) and (30) yields

References

1. Jensen, A.C.; Loog, M.; Solberg, A.H.S. Using Multiscale Spectra in Regularizing Covariance Matrices for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1851–1859.

2. Porikli, F.; Kocak, T. Robust License Plate Detection Using Covariance Descriptor in a Neural Network Framework. Proceedings of 2006 IEEE International Conference on Advanced Video and Signal, Sydney, Australia, 22–24 November 2006; pp. 107–113.

3. Tuzel, O.; Porikli, F.; Meer, P. Region Covariance: A Fast Description for Detection and Classification. Proceedings of 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 589–600.

4. Pang, Y.W.; Yuan, Y.; Li, X.D. Gabor-Based Region Covariance Matrices for Face Recognition. IEEE Trans. Circ. Syst. Video Technol. 2008, 18, 989–993.

5. Tuzel, O.; Porikli, F.; Meer, P. Pedestrian Detection via Classification on Riemannian Manifolds. IEEE Trans. Patt. Anal. Mach. Intell. 2008, 30, 1713–1727.

6. Arif, O.; Vela, P.A. Kernel Covariance Image Region Description for Object Tracking. Proceedings of 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 865–868.

7. Pang, Y.W.; Yuan, Y.; Li, X.D. Effective Feature Extraction in High-Dimensional Space. IEEE Trans. Syst. Man Cyber. B Cybern. 2008, 38, 1652–1656.

8. Zhao, X.; Zhang, S. Facial Expression Recognition Based on Local Binary Patterns and Kernel Discriminant Isomap. Sensors 2011, 11, 9573–9588.

9. Scholkopf, B.; Smola, A.; Muller, K.R. Nonlinear Component Analysis as a Kernel Eigenvalue Problem. Neur. Comput. 1998, 10, 1299–1319.

10. The ORL Face Database. Available online: http://www.cam_orl.co.uk/ (accessed on 10 May 2012).

11. Yale University. Face Database. Available online: http://cvc.yale.edu/projects/yalefaces/yalefaces.html (accessed on 10 May 2012).

12. Martinez, A.M.; Kak, A.C. PCA versus LDA. IEEE Trans. Patt. Anal. Mach. Intell. 2001, 23, 228–233.

13. Liu, C.J.; Wechsler, H. Gabor Feature Based Classification Using the Enhanced Fisher Linear Discriminant Model for Face Recognition. IEEE Trans. Image Process. 2002, 11, 467–476.

14. He, X.F.; Yan, S.C.; Hu, Y.X.; Partha, N.Y.; Zhang, H.J. Face Recognition Using Laplacianfaces. IEEE Trans. Patt. Anal. Mach. Intell. 2005, 27, 328–340.

15. Forstner, W.; Moonen, B. A Metric for Covariance Matrices; Technical Report; Stuttgart University: Stuttgart, Germany, 1999.

16. Yang, X.; Zhou, Y.; Zhang, T.; Zheng, E.; Yang, J. Gabor Phase Based Gait Recognition. Electr. Lett. 2008, 44, 620–621.

Table 1. Mean recognition rates and standard deviations on the ORL database.

| Method | Mean recognition rate (%) | Standard deviation (%) |
|---|---|---|
| KGWRCM | 99.21 | 1.12 |
| KGRCM | 98.41 | 1.24 |
| GRCM | 97.06 | 1.28 |
| WRCM | 93.83 | 2.11 |
| RCM | 91.88 | 2.57 |
| GPCA | 89.78 | 2.43 |
| GLDA | 97.50 | 1.37 |
| KPCA | 94.43 | 1.55 |

Table 2. Mean recognition rates and standard deviations on the Yale database.

| Method | Mean recognition rate (%) | Standard deviation (%) |
|---|---|---|
| KGWRCM | 79.20 | 8.72 |
| KGRCM | 76.23 | 9.04 |
| GRCM | 72.00 | 10.58 |
| WRCM | 61.67 | 8.76 |
| RCM | 51.94 | 7.22 |
| GPCA | 67.94 | 9.36 |
| GLDA | 73.47 | 7.06 |
| KPCA | 73.28 | 8.11 |

Table 3. Mean recognition rates and standard deviations on the AR database.

| Method | Mean recognition rate (%) | Standard deviation (%) |
|---|---|---|
| KGWRCM | 95.95 | 1.46 |
| KGRCM | 91.80 | 2.58 |
| GRCM | 81.46 | 11.73 |
| WRCM | 48.56 | 11.08 |
| RCM | 41.31 | 12.54 |
| GPCA | 78.64 | 5.35 |
| GLDA | 88.99 | 4.18 |
| KPCA | 66.89 | 7.68 |

© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Qin, H.; Qin, L.; Xue, L.; Li, Y. A Kernel Gabor-Based Weighted Region Covariance Matrix for Face Recognition. Sensors 2012, 12, 7410-7422. https://doi.org/10.3390/s120607410
