Decoupling Intensity Radiated by the Emitter in Distance Estimation from Camera to IR Emitter

Abstract

Various models using a radiometric approach have been proposed to solve the problem of estimating the distance between a camera and an infrared emitter diode (IRED). They depend directly on the radiant intensity of the emitter, which is set by the IRED bias current. As is well known, this current drifts with temperature, and the drift is transferred to the distance estimation method. This paper proposes an alternative approach that removes the temperature drift from the distance estimation method by eliminating its dependence on radiant intensity. The main aim was to use the relative accumulated energy together with other defined models, namely the zeroth-frequency component of the FFT of the IRED image and the standard deviation of pixel gray level intensities in the region of interest containing the IRED image. Using these models, an expression free of IRED radiant intensity was obtained. Furthermore, the final model permits simultaneous estimation of the distance between the IRED and the camera and of the IRED orientation angle. The alternative presented in this paper gave a maximum relative error of 3% over a range of distances up to 3 m.

1. Introduction

The camera model used in computer vision involves a correspondence between real world 3-D coordinates and image sensor 2-D coordinates by modeling the projection of a 3-D space onto the image plane [1]. This model is commonly known as the projective model.

If a 3-D positioning system is implemented using a single camera described by the projective model, relating image coordinates to world coordinates leads to an ill-posed mathematical problem, because one of the three dimensions is lost. From a mathematical point of view, this means that although the positioning system can estimate a 2-D position of the subject, the distance between the camera and the subject cannot be calculated. In fact, the position of the subject is then only constrained to a line passing through the optical center and the 3-D world coordinates of the subject.

The main problem in this case can thus be defined: how can the distance between the camera and a subject be estimated efficiently? That is, how can depth be estimated?

Mathematically, the typical projective model can be used to estimate depth by including additional constraints in the mathematical equation system. These additional constraints could be incorporated, for example, by using another camera to form a stereo vision system [1–4], by camera or subject motion [5], by structured light patterns emitted to the environment [6], by focus-defocus information [7], and more recently, by a radiometric approach considering certain specific conditions [8–12].

The radiometric model and the alternative distance measurement methods proposed in [8–12] could provide a practical solution to depth estimation for 3-D positioning, because they are independent of image coordinates and use only information extracted from pixel gray level intensities, which is otherwise not used for absolute measurement.

1.1. Previous Distance Measurement Alternatives

In the image formation process, the camera accumulates incident light irradiance during the exposure time. The accumulated irradiance is converted into an electrical signal that is sampled, quantized and coded to form the pixel gray level intensity. By analyzing this process inversely, from pixel gray level intensity to the irradiance on the sensor surface, an inverse camera response function can be defined, and this constitutes the starting point for applying a radiometric model [13,14].

The function that relates light irradiance on the sensor surface to pixel gray level intensities is known as the camera radiometric response function [13,14], and it is commonly used to produce high dynamic range images.

However, in [8,9,11], the inverse camera radiometric response function was used to extract a measure of the irradiance accumulated by the camera in the image formation process, in order to then use this as an empirical parameter to extract depth information under specific conditions.

For example, if the positioning system is designed to estimate the 3-D position of a robot carrying an infrared emitter diode (IRED), the IRED can be considered a point source, and the irradiance on the sensor surface (camera) will decrease with the square of the distance [15]. This is the main hypothesis used in [8,9,11] to define a radiometric model oriented to distance estimation, which is based on the following conditions:

Images must be formed by the power emitted by the IRED. Background illumination must be eliminated using interference filters.

The IRED must be biased with a constant current to ensure a constant radiant intensity.

Focal length and aperture must be constant during the measurement process, as stated in projective models.

The camera and the IRED must be aligned. The orientation angles have not been considered yet.

The camera settings (such as gain, brightness, gamma) must be established in manual mode with a fixed value during the experiment. The goal is to disable any automatic process that could affect the output gray level.

Obviously, the power emitted by the IRED is affected by the azimuth and elevation angles, which can be modeled by the IRED emission pattern. As stated in the conditions above, the camera was aligned with the IRED in previous experiments. In this paper, however, the effect of the IRED emission pattern is considered.

Another way to reduce the effect of the IRED emission pattern would be to use an IRED source whose emission pattern is shaped by optical devices, so as to ensure a constant radiant intensity over an interval of orientation angles. In this case, the effect of the IRED emission pattern could be omitted from the model definition. However, this alternative would be impractical, because it would add a new constraint to the practical implementation of the proposed alternative.

Considering these conditions, the camera captures images of the IRED, and the relative accumulated image irradiance (Errel) is estimated from the analysis of these images. Errel is obtained by applying the inverse of the camera radiometric response function f to the normalized gray level intensities (Mi) of the pixels i, i = 1, …, A, where A is the number of pixels of the ROI containing the IRED image. Errel can be written as:

$$E_{r}^{rel} = \sum_{i=1}^{A} f^{-1}(M_i) \qquad (1)$$
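As an illustration of how Errel could be computed from an ROI, the sketch below assumes a hypothetical gamma-type inverse response f⁻¹(M) = M^γ with γ = 2.2; a real system would use the inverse response calibrated for the specific camera [13,14]. The function names and the toy ROI are illustrative only.

```python
import numpy as np


def inverse_response(m, gamma=2.2):
    """Hypothetical inverse camera response f^-1: normalized gray level -> relative irradiance.

    Assumption: a gamma curve stands in for a response calibrated for the real camera.
    """
    return np.power(m, gamma)


def relative_accumulated_irradiance(roi, max_gray=255, gamma=2.2):
    """Errel = sum over the A ROI pixels of f^-1(M_i), with M_i the normalized gray level."""
    m = np.asarray(roi, dtype=float) / max_gray  # M_i in [0, 1]
    return float(inverse_response(m, gamma).sum())


roi = np.array([[0, 128], [255, 255]], dtype=np.uint8)  # toy 2x2 ROI
print(relative_accumulated_irradiance(roi))
```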

Under the conditions stated above, Errel is related to the magnitudes that affect the quantity of light that falls on, and is accumulated by, the sensor surface: the intensity radiated by the IRED (I0), the camera exposure time (t), the emitter orientation angle (θ) and the distance between the camera and the IRED (d). Therefore, Errel is defined as:

$$E_{r}^{rel} = G(I_0, t, \theta, d) \qquad (2)$$

When this distance measurement alternative is compared with the typical pin-hole model, there is one parameter that could be regarded as unaccounted for: the real position of the image sensor inside the camera.

Errel is measured on the sensor surface, after the incoming light has traveled through the optical system of the camera. However, the distance used in the modeling process was measured using an external reference. Therefore, there is a discrepancy between the physical distance from the IRED to the image sensor and the distance between the camera and the IRED measured externally.

Mathematically, to convert the real distance into the physical distance traveled by the incoming light, an offset displacement (α) could be included in the modeling process, that is, D = d − α, where D represents the real distance measured by an external reference and d the physical distance traveled by the incoming light. Nevertheless, without loss of generality, the accuracy of the distance estimation alternative can be evaluated quantitatively with respect to an external reference, provided that this reference is kept throughout the whole implementation; that is, the same external reference must be used in both the calibration and the measurement processes.

All automatic processes of the camera were disabled. The gain of the camera was fixed to its minimum value, and the brightness and gamma were also fixed to constant values throughout the whole implementation.

The modeling process used to obtain the function G was carried out empirically by measuring the behavior of Errel with each of t, I0, θ and d individually. From these behaviors, G was defined as:

However, the behavior of Errel with θ could be an arbitrary function and had not yet been included; it was considered constant (K(θ)), because the camera and emitter were assumed to be aligned [8,9,11].

After expanding the products in Equation (3), the relative accumulated irradiance yields:

The acquired data were used to form a system of equations defined by:

Therefore, from Equation (5), k can be calculated by:

Once values for the model coefficients have been calculated, the distance between the camera and the IRED can be estimated directly from Equation (4). However, a differential methodology has also been proposed to estimate the distance [9]. This methodology uses at least two IRED images taken with different exposure times, and [9] demonstrated that it gives better distance estimates than direct estimation from Equation (4).
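The calibration and differential estimation steps can be sketched together. The sketch assumes, as stated for Equation (3), a product of linear factors in t, I0 and u = d⁻² (with K(θ) absorbed into the coefficients), so the expanded model has eight monomials whose coefficients k are fitted by linear least squares. Because only the four t-dependent terms survive the difference between two exposure times, ΔErrel = Δt(k1·I0·u + k2·I0 + k3·u + k4), which can be solved for d. The coefficient ordering, the synthetic calibration grid and the values in `k_true` are hypothetical. Note that I0 must be known in this formulation, which is precisely the dependence this paper sets out to remove.

```python
import itertools

import numpy as np


def design_row(t, i0, d):
    """Eight monomials of a product of linear factors in t, I0 and u = d^-2.

    The first four entries are the terms that depend on the exposure time t.
    """
    u = d ** -2
    return [t * i0 * u, t * i0, t * u, t, i0 * u, i0, u, 1.0]


def fit_coefficients(samples, errel):
    """Least-squares solution of M k = Errel (same minimizer as k = (M^T M)^-1 M^T Errel)."""
    m = np.array([design_row(t, i0, d) for t, i0, d in samples], dtype=float)
    k, *_ = np.linalg.lstsq(m, np.asarray(errel, dtype=float), rcond=None)
    return k


def distance_from_difference(k, delta_errel, delta_t, i0):
    """Solve delta_errel = delta_t * (k[0]*I0*u + k[1]*I0 + k[2]*u + k[3]) for u, return d."""
    u = (delta_errel / delta_t - k[1] * i0 - k[3]) / (k[0] * i0 + k[2])
    if u <= 0:
        raise ValueError("measurement outside the range covered by the model")
    return u ** -0.5


# Synthetic calibration (hypothetical values, not the measured data of the paper)
k_true = np.array([0.8, 0.05, 0.3, 0.01, 0.2, 0.02, 0.1, 0.005])
samples = list(itertools.product([10.0, 20.0, 30.0, 40.0], [0.9, 1.0, 1.1], [1.5, 2.0, 2.5, 3.0]))
errel = [float(np.dot(design_row(*s), k_true)) for s in samples]
k_hat = fit_coefficients(samples, errel)

# Differential estimate from two exposure times (tm = 30, tr = 8) at d = 2.0
d_errel = np.dot(design_row(30.0, 1.0, 2.0), k_true) - np.dot(design_row(8.0, 1.0, 2.0), k_true)
print(distance_from_difference(k_hat, d_errel, 30.0 - 8.0, 1.0))
```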

The results of the measurement process suggest that the relative accumulated irradiance Errel could be an alternative to obtain depth when a 3-D positioning system using one camera and one IRED onboard the robot is required [8,9,11].

1.2. New Parameter to Be Considered to Obtain Distance between a Camera and an IRED

The procedure described in Section 1.1 was generalized in order to use other parameters extracted from the IRED image by considering only the pixel gray level intensities.

Specifically, the additional proposed parameters were the zeroth-frequency component of the FFT of the IRED image [10] and the standard deviation of gray level intensities in the region of interest containing the IRED image [12]. These were defined as alternative models and were tested in distance estimations between the camera and the IRED.

The zeroth-frequency component of the FFT of the IRED image represents the average gray level intensity (up to a scale factor). Using the FFT only to obtain the average gray level would therefore be an inefficient procedure. However, the FFT of the IRED image is used strategically, because it can provide new parameters to be related to radiometric magnitudes, including the distance between the camera and the IRED.

Nevertheless, in this paper only the zeroth-frequency component of the FFT of the image of the IRED is used for distance estimation.
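The following sketch computes the two additional parameters from an ROI with NumPy. It relies on the fact that `np.fft.fft2` is unnormalized by default, so F(0,0) equals the sum of the A gray levels, that is, A times their average; it also shows why a full FFT is not needed just to obtain the mean. Using the population standard deviation (`ddof=0`) for Σ is an assumption.

```python
import numpy as np


def zeroth_frequency(roi):
    """F(0,0): DC term of the unnormalized 2-D DFT of the ROI (equals the sum of gray levels)."""
    return float(np.fft.fft2(np.asarray(roi, dtype=float))[0, 0].real)


def gray_level_std(roi):
    """Sigma: standard deviation of the ROI gray levels (population form, ddof=0, assumed)."""
    return float(np.std(np.asarray(roi, dtype=float)))


roi = np.array([[10, 20], [30, 40]])  # toy ROI
f00 = zeroth_frequency(roi)
print(f00, f00 / roi.size, gray_level_std(roi))  # DC term, mean gray level, Sigma
```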

In [10], it was demonstrated that the zeroth-frequency component (F(0, 0)) and Errel have similar models, since the measured behaviors of F(0, 0) with t, I0 and d were equivalent to those of Errel.

In other words, F(0, 0) was characterized in the same way as Errel, as explained in Section 1.1: its behaviors with t, d and I0 were measured experimentally, and a linear function was used to model its behavior with each of t, I0 and d−2.

Thus, considering a linear function to model the behaviors of F(0, 0) with t, I0 and d−2, the expression for F(0, 0) looks like Equation (3). In this case, the effect of the orientation angles was not included in the model yet, as was explained in Section 1.1 [10].

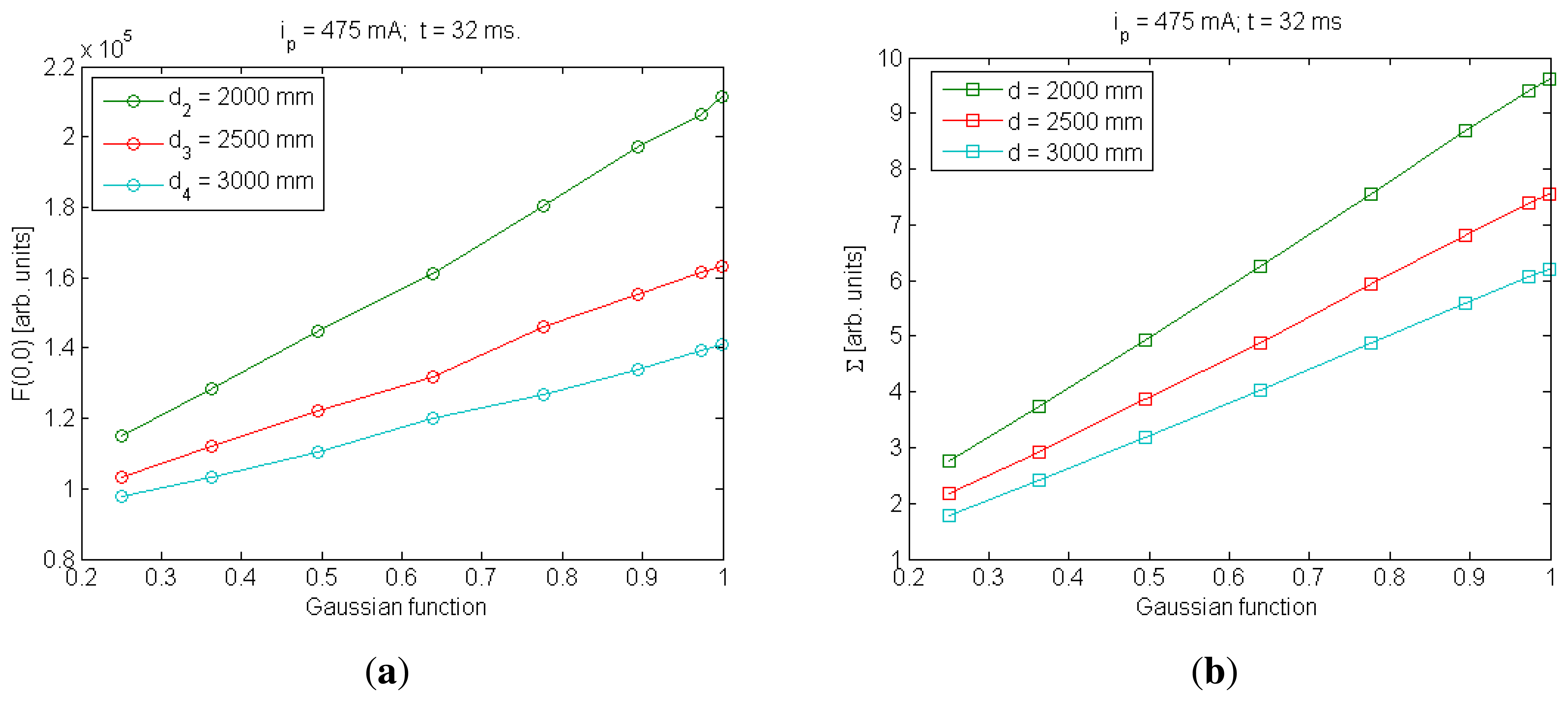

In the case of the standard deviation (Σ), the behaviors of Σ with t and I0 were similar to those of Errel and F(0, 0), so they were also modeled as linear. However, the behavior of Σ with distance differed slightly from that of Errel and F(0, 0), as shown in Figure 1 [12].

In the F(0, 0) and Errel models, linear functions of d−2 were used. In the case of Σ, as shown in Figure 1, a quadratic function models the distance behavior more accurately than a linear one [12].

Finally, the additional parameters were tested to estimate the distance between one camera and one IRED, considering that the camera was aligned with the IRED. In both cases, the relative error in distance estimation was lower than 3% in a range of distances from 4 to 8 m.

Therefore, three independent alternatives have been proposed to address the problem defined in Section 1, namely the estimation of the distance between one camera and one IRED onboard a robot; they are summarized in Table 1.

Nevertheless, some questions remain unanswered, for example:

What will happen when the camera and emitter are not aligned?

How can we ensure that the estimated distance is kept constant under different conditions, e.g., for different temperatures (knowing that the IRED radiant intensity depends on temperature)?

Can IRED radiant intensity be eliminated or estimated using the defined models?

This paper answers these questions. Its principal objective is to propose a distance measurement methodology that performs better in real environments by integrating the distance estimation alternatives summarized in Table 1.

The following sections describe the solution to the problems stated above. First, in Section 2, the effect of the IRED orientation angle is incorporated into the models summarized in Table 1, to ensure that the models can be used in non-aligned situations. Next, in Section 3, a study is presented on how the influence of IRED radiant intensity can be eliminated from distance estimation methodology. In Section 4, a distance estimation method is described, taking into account the results of the study carried out in Section 3. Section 5 describes the experiments conducted to validate the I0-free distance estimation method and the corresponding results. Finally, in Section 6, the conclusions and future trends are presented.

2. Introducing the Orientation Angle Effect

In the measurement alternatives proposed in [8–12], the camera and the IRED were considered aligned. In practice, the IRED onboard the robot could have any orientation angle with respect to the camera. In other words, the IRED could be placed in any position in the positioning cell, and consequently the IRED image coordinates would vary, implying that different incidence angles would be obtained. Figure 2 shows a simplified diagram of the orientation angles that must be considered in the problem of estimating the distance between a camera and an IRED.

As can be seen in Figure 2, three angles are involved in the problem of camera-to-emitter distance estimation. Two of them, ψ and θ, are related to the IRED and describe its emission pattern. ψ can be excluded because, in most cases, the emission pattern is a figure of revolution (rotationally symmetric). Thus, the θ angle and the incidence angle (φ) are the main orientation angles to be considered. The θ orientation angle is directly related to the quantity of light emitted toward the camera through the IRED emission pattern; therefore, this angle must be included in the distance estimation alternatives.

The effect of the incidence angle (φ) is related to the vignetting phenomenon, which reduces the gray level intensities of peripheral pixels compared with those at the center of the image. In addition, the incidence angle can be estimated from the geometric model when the focal distance and the optical center of the camera are known, that is, φ = arctan(‖r⃗‖/f), where ‖r⃗‖ represents the distance between the IRED image coordinates and the image coordinates of the optical center, and f is the focal distance. The vignetting effect can, moreover, be reduced by using a telecentric lens.
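A minimal sketch of this geometric relation, assuming the optical center and the focal distance (expressed in pixels) are known from a prior intrinsic calibration; the numeric values used below are hypothetical.

```python
import math


def incidence_angle(u, v, cx, cy, focal_px):
    """Incidence angle phi = arctan(||r|| / f), in radians.

    ||r|| is the distance (pixels) between the IRED image point (u, v) and the optical
    center (cx, cy); focal_px is the focal distance expressed in pixels (assumed units).
    """
    r = math.hypot(u - cx, v - cy)
    return math.atan2(r, focal_px)


# Hypothetical intrinsics: optical center (320, 240), focal distance 800 px
print(math.degrees(incidence_angle(1120, 240, 320, 240, 800)))
```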

From a radiometric point of view, the main angle to be considered in the distance estimation alternatives is the θ orientation angle, because, through the emission pattern, it weights the quantity of energy emitted toward the camera.

Although the effect of the IRED orientation angle (θ) was measured in [8–12], as can be seen in Table 1, it has not been included in mathematical models.

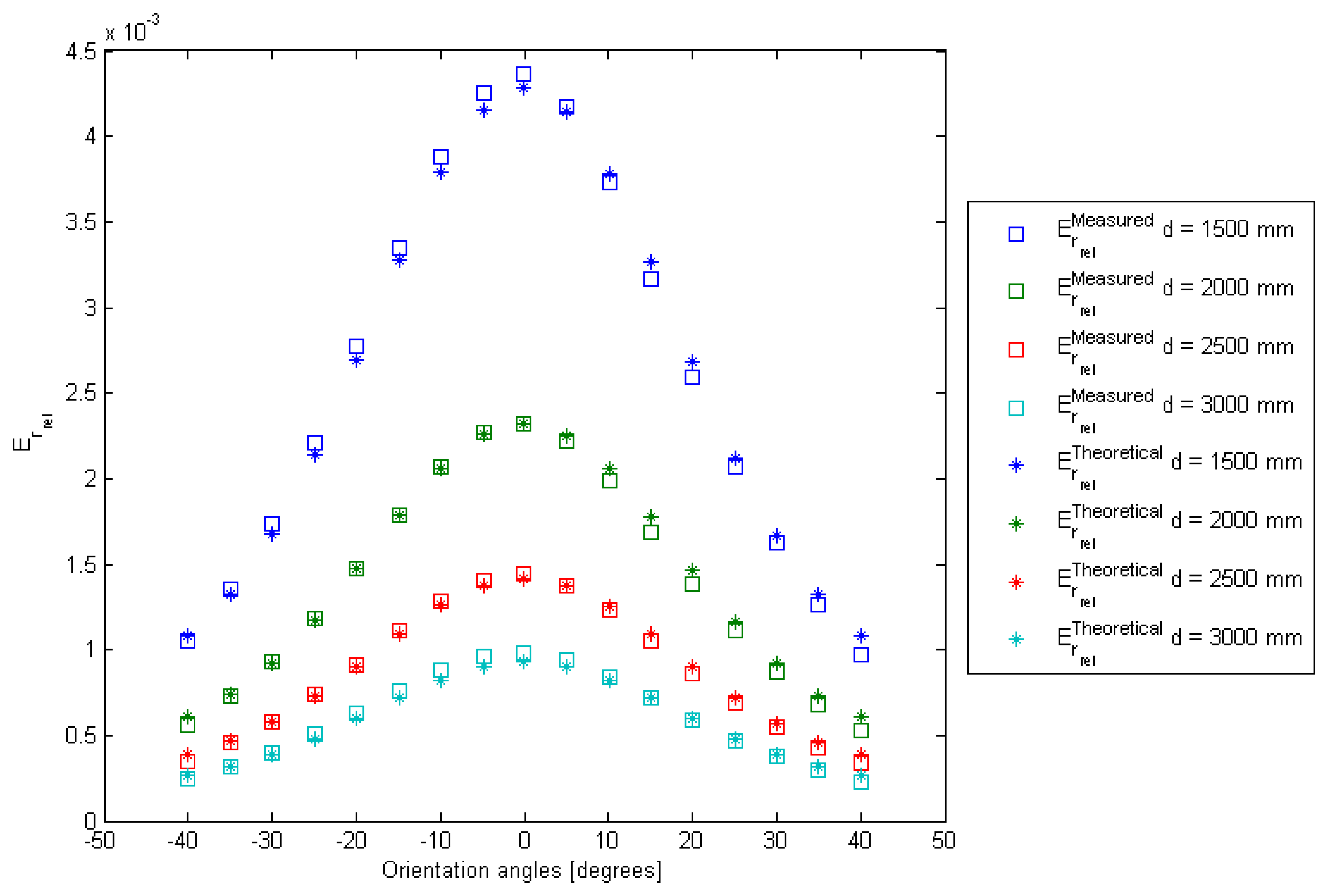

Starting with the relative accumulated image irradiance, Figure 3 depicts the behavior of Errel with the IRED orientation angle (θ). As can be seen, this behavior can be modeled by a Gaussian function [16].

Figure 3 confirms the statement summarized in Table 1, namely that Errel can be modeled as a linear function of the function used to fit the IRED emission pattern.

Mathematically, to take the θ orientation angle into account, an additional factor must be included in Equation (3). That is:

Once the products have been expanded, the relative accumulated image irradiance yields:

The values for κj with j = 1, …, 16 are those that minimize the error between theoretical and measured Errel. That is:

The result of this fitting process is shown in Figure 4.

Figure 4 gives the result of model fitting for different distances between the IRED and the camera and for different IRED orientation angles. The relative error in this model fitting process was lower than 4%.
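The sketch below shows one simple way to fit the sixteen coefficients κj while also treating the Gaussian dispersion as an unknown: for each candidate dispersion on a grid, the model is linear in κ and is solved by least squares, and the dispersion with the smallest residual is kept. This grid-plus-linear-solve strategy, the zero-mean Gaussian ϱ(θ), the synthetic data and all numeric values are assumptions for illustration, not the procedure or data of the paper.

```python
import itertools

import numpy as np


def emission_pattern(theta_deg, sigma_deg):
    """Zero-mean Gaussian model of the IRED emission pattern, rho(theta) (assumed form)."""
    return np.exp(-0.5 * (theta_deg / sigma_deg) ** 2)


def design_matrix(t, i0, d, theta, sigma):
    """16 monomials from expanding (t,1) x (I0,1) x (d^-2,1) x (rho(theta),1)."""
    rho = emission_pattern(theta, sigma)
    one = np.ones_like(t)
    factors = [(t, one), (i0, one), (d ** -2, one), (rho, one)]
    return np.column_stack([a * b * c * e for a, b, c, e in itertools.product(*factors)])


def fit_with_dispersion(t, i0, d, theta, errel, sigma_grid):
    """Assumed strategy: grid search over sigma; for each sigma, linear least squares in kappa."""
    best = None
    for sigma in sigma_grid:
        m = design_matrix(t, i0, d, theta, sigma)
        kappa, *_ = np.linalg.lstsq(m, errel, rcond=None)
        sse = float(np.sum((m @ kappa - errel) ** 2))
        if best is None or sse < best[0]:
            best = (sse, float(sigma), kappa)
    return best[1], best[2]


# Synthetic (hypothetical) grid: 4 exposure times x 2 intensities x 4 distances x 8 angles
grid = np.array(list(itertools.product([10.0, 20.0, 30.0, 40.0], [0.9, 1.1],
                                       [1.5, 2.0, 2.5, 3.0], np.arange(0.0, 40.0, 5.0))))
t, i0, d, theta = grid.T
kappa_true = np.random.default_rng(0).uniform(0.1, 1.0, 16)
errel = design_matrix(t, i0, d, theta, 20.0) @ kappa_true

sigma_hat, kappa_hat = fit_with_dispersion(t, i0, d, theta, errel, np.arange(10.0, 40.5, 0.5))
print(sigma_hat)
```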

Once the coefficient vector k has been calculated, the model defined in Equation (8) can be used for distance estimation. As in [9–12], a differential methodology can be used, but an estimate of θ is required to calculate the camera-to-emitter distance. Thus, a method to estimate the IRED orientation angle independently of the Errel model is needed.

In [17], a method to estimate the camera pan-tilt angles by detecting circular shapes was proposed, and this idea was used to estimate the IRED orientation angle. However, in contrast to [17], in our system the camera was static and placed in a fixed position. Thus, if a circular IRED is used, the orientation angles calculated by [17] can be used in the Errel alternative.

As was demonstrated in [17], the pan-tilt angle of the IRED can be estimated from the estimated ellipse and its parameters: the minor and major axes and the angle formed between the horizontal axis of the image and the major axis.
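To illustrate only the geometric idea: under an orthographic (weak-perspective) approximation, a circular emitter rotated by θ out of the image plane projects to an ellipse whose minor-to-major axis ratio is cos θ, so the magnitude of the tilt follows from the fitted axes. This simplification is an assumption made for the sketch; it does not give the direction of the rotation and is not the full method of [17], which also uses the angle between the horizontal image axis and the major axis.

```python
import math


def tilt_from_ellipse(major_axis, minor_axis):
    """Out-of-plane tilt (degrees) of a circular emitter from the axes of its image ellipse.

    Assumes orthographic projection, where minor / major = cos(theta).
    """
    if not 0 < minor_axis <= major_axis:
        raise ValueError("expected 0 < minor_axis <= major_axis")
    return math.degrees(math.acos(minor_axis / major_axis))


print(tilt_from_ellipse(40.0, 34.64))  # hypothetical axis lengths in pixels
```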

Figure 5 depicts a typical result for the IRED orientation angle. The figure shows the estimated ellipse, the center point and the points that belong to the IRED image. In Figure 5(a), the real pan-tilt angles were 20 and 30 degrees, respectively, and in Figure 5(b), the orientation angles were 0 and 30 degrees. The estimated IRED orientation angles were obtained with ±2 degrees of error and were subsequently used as the initial value in an optimization process, as will be explained in Section 4.

The methodology employed to include the orientation angle in the Errel alternative was also used for the F(0, 0) and Σ alternatives. In both cases, their behaviors with the IRED orientation angle were considered linear, so a linear factor was added to their respective expressions. Figure 6 shows the behavior of F(0, 0) and Σ against the Gaussian function used to model the IRED emission pattern. Qualitatively, the figure suggests that modeling the effect of the IRED emission pattern with linear functions is a valid assumption.

As in the case of the Errel model, the calibration process also considered the Gaussian dispersion as an unknown in the F(0, 0) and Σ models, respectively. Table 2 summarizes the equations for Errel, F(0, 0) and Σ in the general form.

3. Defining a Radiant Intensity-Free Model

The other principal problem to be analyzed in this paper is the variation in IRED characteristics under different conditions, for example, temperature. As stated earlier, radiant intensity can be set by the IRED bias current. As the IRED is a semiconductor diode, temperature variation produces a drift in bias current that is transferred to radiant intensity [18] and thus affects the distance estimation.

A priori, estimating the IRED radiant intensity would be a practical solution, but knowing I0 gives no useful information for the positioning system. The ideal solution would be to eliminate, at least in the mathematical procedure, the effect of IRED radiant intensity on the distance estimation method.

Three equations have been obtained, as shown in Table 2. If a system of equations is formed using these three parameters/equations, three unknowns can be calculated. As stated above, nothing is gained by estimating the value of I0; therefore, eliminating it is the best solution.

First, the equations summarized in Table 2 were written in differential form, as defined in [9,10,12], since those studies demonstrated that the differential form performs better than direct distance estimation.

The differential method analyzes two images captured with different exposure times, tm and tr. The difference of Errel between the two images, ΔErrel, can be defined as:

Analogously, for the F(0, 0) parameter:

Finally, for Σ:

Subsequently, from Equation (11), I0 can be written as:

To simplify the mathematical expressions, this notation was used:

The idea was to substitute I0 in the other equations, which would produce two I0-free equations.
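The substitution can be checked numerically under a simplified, hypothetical model in which both differences are linear in I0: ΔErrel = Δt(e1·I0 + e2)·gE(d, θ) and ΔF(0,0) = Δt(f1·I0 + f2)·gF(d, θ). Solving the second expression for I0 and substituting it into the first gives ΔErrel = (e1/f1)·ΔF(0,0)·gE/gF + Δt(e2 − e1·f2/f1)·gE, which contains no I0. The factors gE and gF and every coefficient value below are assumptions; the exact forms of Equations (10) to (18) are not reproduced here.

```python
import math

# Hypothetical coefficients of the I0-dependent factors
E1, E2 = 0.9, 0.05   # Delta Errel model
F1, F2 = 1.2, 0.08   # Delta F(0,0) model


def g_e(d, theta):
    """Hypothetical distance/angle factor of the Delta Errel model."""
    return (4.0 / d**2 + 0.02) * math.exp(-0.5 * (theta / 20.0) ** 2)


def g_f(d, theta):
    """Hypothetical distance/angle factor of the Delta F(0,0) model."""
    return (3.0 / d**2 + 0.01) * math.exp(-0.5 * (theta / 25.0) ** 2)


def delta_errel(dt, i0, d, theta):
    return dt * (E1 * i0 + E2) * g_e(d, theta)


def delta_f00(dt, i0, d, theta):
    return dt * (F1 * i0 + F2) * g_f(d, theta)


def delta_errel_i0_free(d_f00, dt, d, theta):
    """Delta Errel after substituting I0 = (dF / (dt * g_f) - F2) / F1; no I0 appears."""
    ge, gf = g_e(d, theta), g_f(d, theta)
    return (E1 / F1) * d_f00 * ge / gf + dt * (E2 - E1 * F2 / F1) * ge


# Whatever the (unknown) intensity, the I0-free form reproduces the direct model
for i0 in (0.95, 1.0, 1.05):
    measured_f = delta_f00(27.0, i0, 2.3, 15.0)
    print(i0, delta_errel(27.0, i0, 2.3, 15.0), delta_errel_i0_free(measured_f, 27.0, 2.3, 15.0))
```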

Thus, substituting I0 from Equation (13) in Equations (10) and (12) would yield:

Eliminating the parentheses in Equations (15) and (16), respectively, yields:

The function ϱE in Equation (17) is the product of ϱ, the Gaussian function used to model the IRED emission pattern in the Errel model, and ϱF, the equivalent function for the F(0, 0) model; the resulting function remains a Gaussian function, with a different dispersion. As explained in Section 2, the Gaussian dispersion parameter was treated as an additional unknown in the calibration process to guarantee the best possible model fitting.
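The statement that the product of the two Gaussian functions remains Gaussian follows directly if both are modeled as zero-mean Gaussians in θ (an assumption consistent with Section 2), with dispersions σ1 and σ2:

$$\exp\!\left(-\frac{\theta^{2}}{2\sigma_{1}^{2}}\right)\exp\!\left(-\frac{\theta^{2}}{2\sigma_{2}^{2}}\right)=\exp\!\left(-\frac{\theta^{2}}{2\sigma_{E}^{2}}\right),\qquad \frac{1}{\sigma_{E}^{2}}=\frac{1}{\sigma_{1}^{2}}+\frac{1}{\sigma_{2}^{2}}$$

Any amplitude factors of the two Gaussians multiply into a constant that can be absorbed into the model coefficients, which is why the resulting dispersion σE can be fitted as a single unknown.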

In the case of the standard deviation of pixel gray level intensities in the ROI containing the IRED image, the I0-free expression would yield:

Formally, the model fitting process for Equations (17) and (18) would be written as:

Equation (19) describes the calibration process for the ΔErrel model. In the case of ΔΣ, the model fitting process can be defined as:

The vectors q and p that minimize the errors ∊E and ∊Σ in Equations (19) and (20), respectively, were obtained with an optimization process based on the Levenberg-Marquardt algorithm.
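For readers unfamiliar with the Levenberg-Marquardt algorithm, the following is a minimal NumPy sketch of its basic loop: a damped Gauss-Newton step with Marquardt's diagonal scaling and a forward-difference Jacobian, where the damping is decreased after an accepted step and increased after a rejected one. Since Equations (19) and (20) are not reproduced here, it is exercised on a hypothetical three-parameter Gaussian-plus-offset model; in practice, a tested implementation such as SciPy's `least_squares(method='lm')` would normally be preferred.

```python
import numpy as np


def levenberg_marquardt(residual, x0, lam=1e-3, max_iter=200, tol=1e-14):
    """Minimize ||residual(x)||^2 with a basic Levenberg-Marquardt loop."""
    x = np.asarray(x0, dtype=float)
    r = residual(x)
    cost = float(r @ r)
    for _ in range(max_iter):
        # Forward-difference Jacobian of the residual vector
        jac = np.empty((r.size, x.size))
        for j in range(x.size):
            h = 1e-7 * max(1.0, abs(x[j]))
            xp = x.copy()
            xp[j] += h
            jac[:, j] = (residual(xp) - r) / h
        a = jac.T @ jac
        g = jac.T @ r
        # Damped normal equations with Marquardt's diagonal scaling
        step = np.linalg.solve(a + lam * np.diag(np.diag(a)), -g)
        r_new = residual(x + step)
        cost_new = float(r_new @ r_new)
        if cost_new < cost:          # accept: move towards Gauss-Newton
            improvement = cost - cost_new
            x, r, cost = x + step, r_new, cost_new
            lam *= 0.1
            if improvement < tol:
                break
        else:                        # reject: move towards gradient descent
            lam *= 10.0
            if lam > 1e12:
                break
    return x, cost


# Hypothetical model: Gaussian emission pattern with amplitude a, dispersion s and offset b
theta = np.linspace(-40.0, 40.0, 33)
true_params = np.array([2.0, 20.0, 0.5])


def model(p, th):
    a, s, b = p
    return a * np.exp(-0.5 * (th / s) ** 2) + b


y = model(true_params, theta)
params, final_cost = levenberg_marquardt(lambda p: model(p, theta) - y, [1.5, 15.0, 0.3])
print(params, final_cost)
```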

The results for the model fitting process are shown in Figure 7.

In Figure 7(a,b), the blue squares represent the measured ΔErrel and ΔΣ values and the asterisks represent the theoretical values. Each point in Figure 7(a,b) represents the difference in Errel or Σ between two images captured with different exposure times.

The results shown in these figures demonstrate that the defined models are valid for mathematically characterizing the ΔErrel and ΔΣ parameters; this validity is also supported by the errors of the model fitting process, shown in Figure 7(c,d). Specifically, the fitting errors of the ΔΣ model were lower than those of the ΔErrel model, but in both cases the average relative error over all image differences was lower than 1%.

4. Estimating the Distance between the Camera and the IRED Independently of IRED Radiant Intensity

The measurement alternative is formed by Equations (17) and (18), which are independent of the IRED radiant intensity I0.

Once the model coefficients q and p have been calculated, an expression to estimate the distance can be defined for each model. For example, for the ΔErrel model, the distance is obtained from:

Analogously, for the ΔΣ model:

The coefficients a1,2,3 and c1,2,3,4 in Equations (22) and (24) depend on ΔErrel, ΔF(0, 0) and ΔΣ, which are extracted from the images; on Δt, which is obtained from the camera; and on θ, which is calculated from the estimated ellipse using the method proposed in [17] and explained in Section 2. Therefore, once the coefficients in Equations (22) and (24) have been estimated, Equations (21) and (23) can be solved to obtain the distance between the camera and the IRED.

Each of Equations (21) and (23) provides a distance estimate through its roots. The contribution of each equation could be weighted according to its accuracy in the model fitting process, as proposed in [2]; however, no weighting was applied here. Consequently, Equations (21) and (23) were equated, and the distance can be calculated by solving the following equation:

$$c_{1}D^{3} + (c_{2} - a_{1})D^{2} + (c_{3} - a_{2})D + (c_{4} - a_{3}) = 0 \qquad (25)$$

Mathematically, Equations (21) and (23) share at least one root. Equating them yields the intersection points of both equations, which, as shown in Equation (25), are defined by a cubic equation. Therefore, the intersection of Equations (21) and (23) is obtained by finding the roots of Equation (25).
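A sketch of the initial distance estimate: the cubic of step 8 in Algorithm 1, c1D³ + (c2 − a1)D² + (c3 − a2)D + (c4 − a3) = 0, is solved with `numpy.roots`, and the real root lying inside the calibrated distance range is kept. The range check and the tie-breaking rule (the root nearest the middle of the range) are assumptions, not rules stated in the paper; the coefficient values in the example are hypothetical and chosen so that the only real root is D = 2.

```python
import numpy as np


def initial_distance(a, c, d_min, d_max):
    """Real root of c1 D^3 + (c2 - a1) D^2 + (c3 - a2) D + (c4 - a3) = 0 within [d_min, d_max]."""
    a1, a2, a3 = a
    c1, c2, c3, c4 = c
    roots = np.roots([c1, c2 - a1, c3 - a2, c4 - a3])
    real = roots[np.abs(roots.imag) <= 1e-9 * np.maximum(1.0, np.abs(roots))].real
    inside = real[(real >= d_min) & (real <= d_max)]
    if inside.size == 0:
        raise ValueError("no real root inside the calibrated range")
    # If several roots qualify, keep the one closest to the middle of the range (assumed rule)
    middle = 0.5 * (d_min + d_max)
    return float(inside[np.argmin(np.abs(inside - middle))])


# Hypothetical coefficients: the cubic is (D - 2)(D^2 + 1), whose only real root is 2
print(initial_distance(a=(1.0, 0.0, 0.0), c=(1.0, -1.0, 1.0, -2.0), d_min=0.5, d_max=5.0))
```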

Figure 8 was generated from the data used in the calibration process. It graphically shows that the roots of Equation (25) correspond to the real distance employed to obtain the model coefficients.

Figure 8 shows the behavior of Equation (25) in the range of distances from 1,000 to 3,000 mm for all conditions taken into account in the calibration process.

Each condition was formed by the combination of the values of I0, d, θ and Δt used in the calibration process. For each of the conditions, a set of values for a1,2,3 and c1,2,3,4 coefficients can be obtained, so Equation (25) would be different for each of the conditions used in the calibration process. Specifically, Figure 8 was formed by superposing the entire set of Equation (25) obtained using the calibration data.

Although the data used in the calibration process are formally defined in Table 3 in Section 5, Figure 8 shows that the zeros of Equation (25) lie close to the real distance values used in the calibration process, with a dispersion lower than 2% of the real distance value.

Because two I0-free equations have been defined, a second unknown can be added to form a system of two equations with two unknowns. The goal is thus to calculate the distance between the camera and the IRED and the IRED orientation angle simultaneously. Mathematically, this can be defined as:

Using the system of equations defined in Equation (26) constitutes a more general solution to the problem of estimating the distance between a camera and an IRED, because only information extracted from pixel gray level intensities and camera exposure times is used. Furthermore, by estimating both the distance and the IRED orientation angle from Equation (26), the possibilities offered by this system of equations are exploited more efficiently.
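The simultaneous estimation can be sketched as a damped Newton iteration on the two residuals (modeled minus measured difference), started from the initial distance of Equation (25) and the ellipse-based angle. Because Equation (26) is not reproduced here, the two model functions below are hypothetical stand-ins with the general structure of the I0-free models (a distance factor times a Gaussian in θ); the solver choice, tolerances and numbers are likewise assumptions.

```python
import math

import numpy as np


# Hypothetical stand-ins for the two I0-free models (not the paper's Equations (17) and (18))
def model_e(d, theta):
    return (4.0 / d**2 + 0.02) * math.exp(-0.5 * (theta / 18.0) ** 2)


def model_s(d, theta):
    return (2.5 / d**2) * math.exp(-0.5 * (theta / 30.0) ** 2)


def solve_d_theta(meas_e, meas_s, x0, tol=1e-12, max_iter=50):
    """Damped Newton iteration for residuals [model_e - meas_e, model_s - meas_s] = 0."""
    def f(z):
        return np.array([model_e(z[0], z[1]) - meas_e, model_s(z[0], z[1]) - meas_s])

    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        r = f(x)
        jac = np.empty((2, 2))
        for j in range(2):  # forward-difference Jacobian
            h = 1e-7 * max(1.0, abs(x[j]))
            xp = x.copy()
            xp[j] += h
            jac[:, j] = (f(xp) - r) / h
        step = np.linalg.solve(jac, -r)
        alpha = 1.0  # halve the step until the residual norm decreases
        while alpha > 1e-4 and np.linalg.norm(f(x + alpha * step)) >= np.linalg.norm(r):
            alpha *= 0.5
        x = x + alpha * step
        if np.linalg.norm(alpha * step) < tol * (1.0 + np.linalg.norm(x)):
            break
    return x


# Synthetic measurement at d = 2.3 m, theta = 15 deg; hypothetical initial guesses
# (in practice they would come from Equation (25) and the ellipse method)
meas_e, meas_s = model_e(2.3, 15.0), model_s(2.3, 15.0)
print(solve_d_theta(meas_e, meas_s, x0=(2.2, 13.0)))
```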

The final alternative proposed in this paper uses the optimization stated in Equation (26) and summarized in Algorithm 1.

| Algorithm 1 | Distance Measurement Alternative |
| --- | --- |
| Input: | One image of the IRED captured with a reference exposure time tr, and N images of the IRED captured with different exposure times tn, n = 1, …, N. |
| Output: | Distance between the IRED and the camera d(n) and the IRED orientation angle θ(n). |
| 1: | Estimate the parameters Errel(tr), F(0,0)(tr) and Σ(tr) from the reference image. |
| 2: | for n = 1 → N do |
| 3: | Δt(n) = tn − tr |
| 4: | Estimate the parameters Errel(tn), F(0,0)(tn) and Σ(tn) from the n-th image. |
| 5: | Form the differences ΔErrel(n), ΔF(0,0)(n) and ΔΣ(n) with respect to the reference image. |
| 6: | Estimate the initial IRED orientation angle by the method of the estimated ellipse [17]. |
| 7: | Calculate the coefficients a(n) and c(n) from Equations (22) and (24), respectively. |
| 8: | Obtain the initial distance estimation by solving c1D³ + (c2 − a1)D² + (c3 − a2)D + (c4 − a3) = 0. |
| 9: | Use an optimization method to calculate d(n) and θ(n) simultaneously from Equation (26), |
| 10: | with the initial distance (step 8) and the initial orientation angle (step 6) as initial estimations. |
| 11: | end for |

Another interesting aspect addressed in this paper, which can be inferred from Algorithm 1, is that different exposure times were considered to test the alternative for estimating the distance and the IRED orientation angle. This relates to the analysis in [19], where a differential method to estimate the distance between an IRED and a camera was proposed using the relative accumulated image irradiance. The novelty of that approach resides in the selection of optimum exposure times for the distance measurement process. These optimum exposure times can be determined from bathtub curves, which were obtained from the relative error in the model fitting process. That is why n = 1, …, N exposure times are considered in Algorithm 1: they provide the elements from which the optimum exposure times for the distance estimation algorithm are selected.

The algorithm for estimating the distance and the IRED orientation angle uses several images captured with different exposure times tn and one image captured with a reference exposure time tr to obtain the differences Δt(n), ΔErrel(n), ΔF(0,0)(n) and ΔΣ(n). Using the images captured with exposure times tn, the angles θ(n) are estimated from the estimated ellipse, as proposed in [17]. With the calculated differences and the IRED orientation angle estimated by [17], the coefficients a and c can be calculated, which yields the initial distance estimation. Finally, the initial distance estimation obtained by solving Equation (25) and the initial IRED orientation angle obtained by [17] are used as initial values to solve the system of equations in Equation (26). The resulting x from Equation (26) constitutes the final distance and IRED orientation angle estimation.

5. Experimental Setup, Results and Discussions

The main goal of the experimental tests was to demonstrate that the alternatives defined in Equations (17) and (18) and summarized in Algorithm 1 are independent of the IRED bias current. Here, independence of the bias current means independence of the IRED radiant intensity, which the bias current sets.

The tests were carried out using the measurement station shown in Figure 9.

To guarantee accurate reference distances and IRED orientations, an ad-hoc measurement station was built and controlled from a PC via serial port communication. The measurement station comprised a pan-tilt platform on which the IRED was mounted, which allowed the IRED orientation angles to be changed with a precision of 0.01 degrees. As can be seen in Figure 9(b), the measurement station also allowed the distance between the camera and the pan-tilt platform to be changed with a precision of 0.01 mm.

Figure 9 also shows the camera, the IRED and the power supply to bias the IRED.

It was necessary to calibrate the distance estimation alternative before the distance estimation process could begin. In other words, the model coefficient values had to be calculated before initiating the distance estimation process.

The calibration process was described briefly in Section 3 to demonstrate the validity of the defined model, as shown in Figure 7. The data used to estimate the coefficient values are summarized in Table 3.

By combining the conditions summarized in Table 3, namely four distances, two IRED bias currents, eight IRED orientation angles and five exposure times, a dataset of 4 × 2 × 8 × 5 = 320 images was obtained. From the analysis of these images, 256 parameter differences were obtained (four exposure-time differences for each of the 64 combinations of distance, bias current and orientation angle), which means that 256 equations were defined. As indicated in Section 3, the results of this calibration process are shown in Figure 7.

Once the model coefficients had been calculated, an experiment to estimate the distance between the IRED and the camera was carried out. In this experiment, a reference exposure time tr = 8 ms was used and images were captured with exposure times from tn = 30 to 40 ms in 2 ms steps, so the differences in exposure times are Δtn = 22, 24, …, 32 ms. The distance was varied from 1,500 to 2,900 mm in 20 cm steps, and the IRED orientation angles were fixed at 0, 10, 20 and 30 degrees. The IRED bias currents were set at 475, 500 and 525 mA. For all available Δtn, a distance estimate was obtained using Equation (25). The results are shown in Figure 10. The goal of this experiment was to test the independence of the distance estimation process from I0 under all the considered conditions. It should also be noted that this experiment used a new value of I0 that had not been considered in the calibration process.

Figure 10 superposes the distance estimations obtained for all available Δt and all IRED orientation angles, for each of the three bias currents. The estimated distances were qualitatively similar for the three currents, which indicates that the influence of variations in IRED radiant intensity was considerably reduced. However, the accuracy of the distance estimations depends on the accuracy of the IRED angle estimation and on the exposure time difference used in the measurement process.

To clarify the relationship between the IRED orientation angles and the exposure time differences, the data plotted in Figure 10 are represented in a 3-D space in Figure 11, with the goal of determining the influence of the exposure time difference on the distance estimation process.

The dependency of distance estimation accuracy on the exposure time difference was analyzed by Lázaro et al. [19], who demonstrated that there is an optimum exposure time difference at which the best distance estimation accuracy is achieved, and proposed estimating the optimum measurement conditions from the calibration process. In this work, the optimum exposure time difference was instead estimated by analyzing the distance estimations shown in Figure 10.

Figure 11 thus makes it possible to identify the exposure time difference that yields the best accuracy. The experimental results indicated that the most accurate distance and IRED orientation angle estimations were obtained with Δt = 27 ms, as can be seen in Figure 12.
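The text does not state the numerical criterion used to pick the optimum from Figure 11. One simple possibility, shown here only as a hypothetical sketch, is to group the estimations by exposure time difference and choose the Δt with the lowest mean relative distance error. The function names and the example numbers are illustrative and are not data from the paper.

```python
from statistics import mean

def mean_relative_error(pairs):
    """Mean of |estimated - real| / real over (estimated, real) distance pairs."""
    return mean(abs(est - real) / real for est, real in pairs)

def optimum_delta_t(estimates_by_delta_t):
    """Return the exposure time difference whose estimates have the lowest mean relative error.

    estimates_by_delta_t maps a delta t (ms) to a list of (estimated_mm, real_mm) pairs.
    """
    return min(estimates_by_delta_t,
               key=lambda dt: mean_relative_error(estimates_by_delta_t[dt]))

# Illustrative numbers only; these are not measurements from the paper.
example = {
    22: [(1550.0, 1500.0), (2050.0, 2000.0)],
    27: [(1510.0, 1500.0), (1990.0, 2000.0)],
    32: [(1480.0, 1500.0), (2030.0, 2000.0)],
}
print(optimum_delta_t(example))  # 27
```

Other criteria, such as the maximum error or the spread across orientation angles, would fit into the same `key` function.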

Using the optimum exposure time difference, another experiment was carried out to test the simultaneous estimation of the distance between the IRED and the camera and of the IRED orientation angle, as described in Algorithm 1. This experiment considered camera-to-IRED distances of 1,700, 2,300 and 2,900 mm and IRED orientation angles of 10, 15 and 20 degrees. Unlike in the previous experiment, the IRED was biased with a random current with a mean of 500 mA and a dispersion of 50 mA. For each of the nine d–θ pairs, ten random bias current values were generated and used to bias the emitter and to capture the images from which the distance and the IRED orientation were estimated. In total, 90 d–θ pairs were estimated. The results are shown in Figure 13.
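The bias-current schedule of this experiment can be generated as follows. The text gives only a mean and a dispersion, so drawing the currents from a normal distribution with a 50 mA standard deviation is an assumption of this sketch (a uniform distribution would be another valid reading); the fixed seed simply makes the schedule reproducible.

```python
import random
from collections import Counter
from itertools import product

DISTANCES_MM = [1700, 2300, 2900]
ANGLES_DEG = [10, 15, 20]
DRAWS_PER_PAIR = 10
MEAN_MA = 500.0
SPREAD_MA = 50.0   # assumption: "dispersion" interpreted as a standard deviation

rng = random.Random(2013)   # fixed seed so the schedule can be reproduced

# One (distance, angle, bias current) entry per capture; ten currents per d-theta pair.
schedule = [
    (d, theta, rng.gauss(MEAN_MA, SPREAD_MA))
    for d, theta in product(DISTANCES_MM, ANGLES_DEG)
    for _ in range(DRAWS_PER_PAIR)
]

per_pair = Counter((d, theta) for d, theta, _ in schedule)
print(len(schedule), len(per_pair))  # 90 9
```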

In Figure 13, the blue squares represent the real d–θ pairs and the colored circles represent the estimated d–θ pairs. The (x, y) coordinates of these points are the IRED orientation angle and the distance, respectively. The color of each circle encodes the relative error of the distance estimation.

One aspect that merits emphasis is the accuracy of the distance estimations. In Figure 13, the distance error corresponds to the difference between the y coordinates of the real and estimated points. These errors were smaller, in relative terms, than the differences between the x coordinates, which represent the errors of the IRED orientation angle estimations. Therefore, even with a random IRED bias current, the proposed measurement alternative kept the relative error below 2% for the majority of the distance estimations. Figure 13(b) summarizes the behavior of the relative error for all the estimated distances as a histogram of the relative errors. Of the 90 estimations, more than 50% had a relative error below 2%; indeed, approximately 46 estimations (51% of the total) had a relative error close to 1%.
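The summary behind a histogram such as Figure 13(b) reduces to computing relative errors and counting how many fall below given thresholds. The helper names below are hypothetical and the four distances are made-up illustrative values, not the 90 estimations of the experiment.

```python
def relative_errors(estimated_mm, real_mm):
    """Relative distance errors |estimated - real| / real, element by element."""
    if len(estimated_mm) != len(real_mm):
        raise ValueError("estimated and real lists must have the same length")
    return [abs(e - r) / r for e, r in zip(estimated_mm, real_mm)]

def fraction_below(errors, thresholds=(0.01, 0.02, 0.03)):
    """Fraction of errors strictly below each threshold."""
    n = len(errors)
    return {thr: sum(err < thr for err in errors) / n for thr in thresholds}

# Illustrative values only, not the estimations plotted in Figure 13.
errs = relative_errors([1510.0, 2350.0, 2900.0, 1700.0], [1500.0, 2300.0, 2900.0, 1700.0])
print(fraction_below(errs))  # {0.01: 0.75, 0.02: 0.75, 0.03: 1.0}
```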

However, as shown in Figure 13(a), the IRED orientation angle was not estimated as accurately as the distance in this experiment. In fact, better estimations of the IRED orientation angle would have been obtained using the estimated ellipse method proposed in [17].

Although the IRED orientation angle estimations were less accurate than the distance estimations, the results obtained were qualitatively accurate, especially for the estimated distance. Furthermore, as shown in Figure 13, the worst IRED orientation angle estimations were obtained for angles below 10 degrees. In a practical positioning system, the camera must be mounted so as to provide the widest field of view and cover a larger area; the most common solution is to tilt the camera, so that its optical axis forms an angle with the vertical direction. The IRED, on the other hand, is oriented towards the ceiling, so the angle between its direction of maximum emission and the vertical direction is zero. In this configuration, for most IRED positions the IRED orientation angle would be greater than 10 degrees and could be estimated by the geometrical model.
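The geometric argument can be checked with a minimal sketch under simplifying assumptions that are not taken from the paper: the IRED's direction of maximum emission points straight up, the orientation angle is measured between that axis and the line from the IRED to the camera's optical centre, and the 2.5 m camera height is hypothetical. Under these assumptions the angle depends only on positions (camera tilt affects whether the IRED is in view, not the angle), and it exceeds 10 degrees once the horizontal IRED-to-camera offset is larger than h·tan 10°.

```python
import math

def ired_orientation_angle_deg(ired_xyz, camera_xyz):
    """Angle (degrees) between the vertical (+z) and the line from the IRED to the camera.

    Assumes the IRED's direction of maximum emission points straight up.
    """
    dx, dy, dz = (c - i for c, i in zip(camera_xyz, ired_xyz))
    return math.degrees(math.atan2(math.hypot(dx, dy), dz))

def min_offset_for_angle(height_m, angle_deg):
    """Horizontal IRED-camera offset beyond which the orientation angle exceeds angle_deg."""
    return height_m * math.tan(math.radians(angle_deg))

# Hypothetical geometry: camera 2.5 m above the IRED plane.
print(round(ired_orientation_angle_deg((2.5, 0.0, 0.0), (0.0, 0.0, 2.5)), 6))  # 45.0
print(round(min_offset_for_angle(2.5, 10.0), 3))                                # 0.441
```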

As a final experiment, the consistency of the distance measurement alternative was tested. The measurement procedure summarized in Algorithm 1 was run in a loop of 100 iterations. In each iteration, two images were captured, one with tr = 8 ms and the other with tn = 35 ms (that is, Δt = 27 ms), with a fixed IRED bias current. Figure 14 shows the 100 distance estimations for a distance of 2,700 mm and IRED orientation angles of 0, 10, 20 and 30 degrees. The IRED orientation angles were also estimated, but the analysis focused on distance estimation.

The dispersion of the distance estimations can be calculated from Figure 14. The proposed alternative provides a relative error below 3% in distance estimation, with a dispersion of 15 mm, over the range of distances from 1 m to 3 m.
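The two figures of merit used here, the relative error of the average estimate and the dispersion of the repeated estimates, can be computed as follows. Taking "dispersion" as the sample standard deviation is an assumption of this sketch, and the three repetitions are illustrative stand-ins for the 100 of the experiment.

```python
from statistics import mean, stdev

def consistency_stats(estimates_mm, real_mm):
    """Summarize repeated distance estimations of a single real distance."""
    avg = mean(estimates_mm)
    return {
        "mean_mm": avg,
        "relative_error_of_mean": abs(avg - real_mm) / real_mm,
        # Assumption: "dispersion" taken as the sample standard deviation.
        "dispersion_mm": stdev(estimates_mm),
    }

# Illustrative only: three repetitions instead of the 100 used in the experiment.
print(consistency_stats([2690.0, 2700.0, 2710.0], 2700.0))
```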

As a final comment, one aspect that requires further study is the selection of the quantities involved in the camera–IRED system, especially the IRED bias current and the camera exposure times.

The values used in this paper were selected empirically; automatic selection of these values would therefore improve the calibration and measurement processes.

6. Conclusions and Future Work

In this paper, an IRED radiant-intensity-free model has been proposed to decouple the intensity radiated by the IRED from the distance estimation method using only the information extracted from pixel gray level intensities and a radiometric analysis of the image formation process in the camera.

The camera-to-emitter distance estimation alternatives proposed in [8,10–12] depend on IRED radiant intensity, which is a drawback for any implementation that relies on one of these alternatives alone. As explained earlier, IRED radiant intensity depends on the IRED bias current, which varies with many factors, including temperature, so temperature drifts would be transferred to the distance estimations. Consequently, the I0 parameter must be decoupled from the camera-to-IRED distance estimation alternatives.

The previously proposed alternatives for estimating the distance between an IRED and a camera also assumed that the camera was aligned with the IRED, which limits their applicability. In this paper, by contrast, the effect of the IRED orientation angle was studied and modeled by a Gaussian function.

The dispersion of the Gaussian function used to model the IRED emission pattern was included as an additional unknown in the calibration of each individual camera-to-IRED distance model. The model validation showed a maximum error of 4% for the three individual models proposed in [8,10–12].
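The angular term can be sketched as follows, assuming a zero-mean Gaussian in the orientation angle whose dispersion σ is the extra calibration unknown, entering each model through the (γ1 ϱ(θ) + γ2) factor of the general-form equations. The exact parameterization used in the paper may differ, and the σ and γ values below are hypothetical; fitting σ together with the other coefficients is not shown.

```python
import math

def gaussian_pattern(theta_deg, sigma_deg):
    """Assumed Gaussian emission pattern, equal to 1 on the IRED axis (theta = 0)."""
    return math.exp(-theta_deg ** 2 / (2.0 * sigma_deg ** 2))

def angular_factor(theta_deg, sigma_deg, gamma1, gamma2):
    """The (gamma1 * pattern(theta) + gamma2) factor of the general-form models."""
    return gamma1 * gaussian_pattern(theta_deg, sigma_deg) + gamma2

# Hypothetical values: sigma = 20 degrees, gamma1 = 0.8, gamma2 = 0.2.
print(round(angular_factor(20.0, 20.0, 0.8, 0.2), 3))  # 0.685
```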

Once the effect of the IRED orientation angle had been described mathematically, a method to estimate this angle was implemented using a circular IRED, based on estimating the ellipse formed by the projection of the circular IRED onto the image plane. This method estimated the IRED orientation angle with a maximum error of 2 degrees.
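The core relation behind ellipse-based angle estimation can be sketched under an orthographic (weak-perspective) approximation, which is an assumption here and not necessarily the model used in the paper or in [17]: a circle tilted by θ with respect to the viewing direction projects to an ellipse whose minor-to-major axis ratio is cos θ. Extracting the ellipse axes from the image is not shown.

```python
import math

def orientation_from_ellipse(major_axis_px, minor_axis_px):
    """Tilt angle (degrees) of a circular emitter from its projected ellipse.

    Orthographic approximation: a circle tilted by theta projects to an ellipse
    whose minor/major axis ratio is cos(theta).
    """
    if not 0.0 < minor_axis_px <= major_axis_px:
        raise ValueError("expected 0 < minor <= major")
    return math.degrees(math.acos(minor_axis_px / major_axis_px))

print(round(orientation_from_ellipse(40.0, 20.0), 6))  # 60.0
print(round(math.cos(math.radians(10.0)), 3))          # 0.985
```

The second line illustrates a general property of this relation: all tilts from 0 to 10 degrees map to axis ratios between 1.000 and about 0.985, so small errors in the measured axes produce large angle errors at small tilts.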

However, the main contribution of this paper is the mathematical approach to characterizing the IRED–camera system independently of IRED radiant intensity, by combining the models defined in [8,10–12] into a system of equations.

The procedure to eliminate the effect of IRED radiant intensity uses the F(0, 0) model to obtain an expression for I0, which is then substituted into the Errel and Σ models. This yields two I0-free expressions.
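Written out with the general-form expressions listed at the end of this section, denoting the I0 coefficients of each model by ρ·1 and ρ·2 and introducing K_F only as a shorthand for the I0-independent factors of the F(0, 0) model, the elimination step reads as follows. This is a reconstruction from those general forms, not a copy of the paper's own numbered equations.

```latex
\begin{aligned}
K_F(t, D, \theta) &= (\tau_{F1} t + \tau_{F2})\,(\delta_{F1} D + \delta_{F2})\,\bigl(\gamma_{F1}\varrho_F(\theta) + \gamma_{F2}\bigr) \\
F(0,0) &= K_F(t, D, \theta)\,(\rho_{F1} I_0 + \rho_{F2})
  \;\Longrightarrow\;
  I_0 = \frac{1}{\rho_{F1}}\left[\frac{F(0,0)}{K_F(t, D, \theta)} - \rho_{F2}\right] \\
E_{rrel} &= (\tau_{E1} t + \tau_{E2})\left(\frac{\rho_{E1}}{\rho_{F1}}\left[\frac{F(0,0)}{K_F(t, D, \theta)} - \rho_{F2}\right] + \rho_{E2}\right)(\delta_{E1} D + \delta_{E2})\,\bigl(\gamma_{E1}\varrho_E(\theta) + \gamma_{E2}\bigr) \\
\Sigma &= (\tau_{\Sigma1} t + \tau_{\Sigma2})\left(\frac{\rho_{\Sigma1}}{\rho_{F1}}\left[\frac{F(0,0)}{K_F(t, D, \theta)} - \rho_{F2}\right] + \rho_{\Sigma2}\right)(\delta_{\Sigma1} D^2 + \delta_{\Sigma2} D + \delta_{\Sigma3})\,\bigl(\gamma_{\Sigma1}\varrho_\Sigma(\theta) + \gamma_{\Sigma2}\bigr)
\end{aligned}
```

Both right-hand sides now contain only the measured F(0, 0), the calibrated coefficients, t, D and θ, so I0 no longer appears; D and θ remain as the unknowns.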

In the two I0-free expressions the distance is the main unknown; however, because the IRED orientation angle also appears in them through the emitter pattern term, an optimization scheme was defined to calculate the distance between the IRED and the camera and the IRED orientation angle simultaneously.
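The optimization scheme itself is not detailed in this section, so the following is only a hypothetical sketch of the idea, using an exhaustive grid search as a stand-in for whatever optimizer the authors used. It builds a toy version of the three general-form models with made-up coefficients, assumes D = d⁻² and a Gaussian emitter pattern, inverts F(0, 0) for I0 at each candidate (d, θ), and minimizes the squared relative residuals of the two I0-free expressions. The coefficients are chosen (proportional ρ pairs, shared angular term for Errel and F(0, 0)) so that this toy has a single solution; real calibrated models need not share that structure.

```python
import math
from itertools import product

# Hypothetical toy coefficients, NOT the paper's calibrated values.
COEF = {
    "F": {"tau": (1.0, 0.5), "rho": (1.0, 0.10), "delta": (1.0, 0.05), "gamma": (1.0, 0.0), "sigma": 30.0},
    "E": {"tau": (0.8, 0.2), "rho": (2.0, 0.20), "delta": (1.0, 0.50), "gamma": (1.0, 0.0), "sigma": 30.0},
    "S": {"tau": (0.6, 0.1), "rho": (0.5, 0.05), "delta": (0.3, 1.0, 0.2), "gamma": (1.0, 0.0), "sigma": 15.0},
}

def pattern(theta_deg, sigma_deg):
    """Assumed Gaussian emission pattern."""
    return math.exp(-theta_deg ** 2 / (2.0 * sigma_deg ** 2))

def k_factor(model, t, d_m, theta_deg):
    """Product of every general-form factor except the one containing I0."""
    c = COEF[model]
    big_d = 1.0 / d_m ** 2  # assumption: D stands for d^-2
    tau1, tau2 = c["tau"]
    if model == "S":
        d1, d2, d3 = c["delta"]
        dist = d1 * big_d ** 2 + d2 * big_d + d3
    else:
        d1, d2 = c["delta"]
        dist = d1 * big_d + d2
    g1, g2 = c["gamma"]
    return (tau1 * t + tau2) * dist * (g1 * pattern(theta_deg, c["sigma"]) + g2)

def model_value(model, t, d_m, theta_deg, i0):
    rho1, rho2 = COEF[model]["rho"]
    return k_factor(model, t, d_m, theta_deg) * (rho1 * i0 + rho2)

def i0_from_f(f_meas, t, d_m, theta_deg):
    """Invert the F(0,0) model for I0 at a candidate (d, theta)."""
    rho1, rho2 = COEF["F"]["rho"]
    return (f_meas / k_factor("F", t, d_m, theta_deg) - rho2) / rho1

def cost(meas, t, d_m, theta_deg):
    """Sum of squared relative residuals of the two I0-free expressions."""
    f_meas, e_meas, s_meas = meas
    i0_hat = i0_from_f(f_meas, t, d_m, theta_deg)
    r_e = model_value("E", t, d_m, theta_deg, i0_hat) / e_meas - 1.0
    r_s = model_value("S", t, d_m, theta_deg, i0_hat) / s_meas - 1.0
    return r_e ** 2 + r_s ** 2

def estimate(meas, t):
    """Exhaustive grid search over d in [1, 3] m and theta in [0, 40] degrees."""
    d_grid = [1.0 + 0.01 * k for k in range(201)]
    theta_grid = [0.5 * k for k in range(81)]
    return min(product(d_grid, theta_grid), key=lambda p: cost(meas, t, *p))

# Synthetic measurements at d = 2.3 m, theta = 15 degrees for two different I0 values.
t = 30.0
for i0 in (0.475, 0.525):
    meas = tuple(model_value(m, t, 2.3, 15.0, i0) for m in ("F", "E", "S"))
    print(i0, estimate(meas, t))
```

Both I0 values should yield the same (d, θ) estimate, which is the property the I0-free formulation is meant to provide. The grid search is used only because it is easy to verify; a local optimizer seeded from a coarse grid would be cheaper.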

The I0-free alternative was tested for distance estimation in the range from 1,500 to 2,900 mm with three different IRED bias currents, different IRED orientation angles and different exposure times. The distance estimations were very similar under all the conditions considered in this experiment. Furthermore, the experimental results made it possible to select the optimum exposure time difference, at which the distance estimations were most accurate.

Once the optimum exposure time difference had been selected, another experiment was carried out in which the IRED was biased with a random bias current, in order to verify that the distance estimation method is independent of variations in IRED radiant intensity. With the random bias current, the experimental results showed that the proposed alternative provided a maximum error of 2% in distance estimation. However, the IRED orientation angle estimations were not as accurate as the distance estimations.

As a further validation experiment, the consistency of the distance estimation method was tested for three different distance values over 100 repetitions. The results over the 100 repetitions showed that the maximum error of the average distance estimation was lower than 3% and the maximum dispersion was lower than 15 mm.

Finally, some aspects require further research. Throughout the modeling process, the IRED radiant intensity and the camera exposure times were selected empirically. A quality index based on on-line analysis of the IRED images acquired by the camera should therefore be defined to guide the choice of these values and thus increase the efficiency of the modeling and measurement processes.

Acknowledgments

This research was funded by the project ESPIRA (DPI2009-10143), sponsored by the Spanish Ministry of Science and Technology.

Conflict of Interest

The authors declare no conflict of interest.

References

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Press Syndicate of the University of Cambridge: Cambridge, UK, 2003.

- Fernández, I. Sistema de Posicionamiento Absoluto de un Robot Móvil utilizando Cámaras Externas. Ph.D. Thesis, Universidad de Alcalá, Madrid, Spain, July 2005.

- Toscani, G.; Faugeras, O.D. The Calibration Problem for Stereo. Proceedings of IEEE Conference of Computer Vision and Pattern Recognition, London, UK, 8–11 June 1987; Volume 1, pp. 323–344.

- Xu, G.; Zhang, Z. Epipolar Geometry in Stereo, Motion and Object Recognition. A Unified Approach; Kluwer Academic Publisher: Norwell, MA, USA, 1996.

- Nayar, S.; Watanabe, M.; Noguchi, M. Real-time focus range sensor. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 1186–1198.

- Lázaro, J.L.; Lavest, J.M.; Luna, C.A.; Gardel, A. Sensor for simultaneous high accurate measurement of three-dimensional points. J. Sens. Lett. 2006, 4, 426–432.

- Ens, J.; Lawrence, P. An investigation of methods for determining depth from focus. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 97–108.

- Cano-García, A.; Lázaro, J.L.; Fernández, P.; Esteban, O.; Luna, C.A. A preliminary model for a distance sensor, using a radiometric point of view. Sens. Lett. 2009, 7, 17–23.

- Galilea, J.L.; Cano-García, A.; Esteban, O.; Pompa-Chacón, Y. Camera to emitter distance estimation using pixel grey-levels. Sens. Lett. 2009, 7, 133–142.

- Lázaro, J.L.; Cano, A.E.; Fernández, P.R.; Luna, C.A. Sensor for distance estimation using FFT of images. Sensors 2009, 9, 10434–10446.

- Lázaro, J.L.; Cano, A.E.; Fernández, P.R.; Pompa-Chacón, Y. Sensor for distance measurement using pixel gray-level information. Sensors 2009, 9, 8896–8906.

- Cano-García, A.E.; Lázaro, J.L.; Infante, A.; Fernández, P.; Pompa-Chacón, Y.; Espinoza, F. Using the standard deviation of a region of interest in an image to estimate camera to emitter distance. Sensors 2012, 12, 5687–5704.

- Mitsunaga, T.; Nayar, S.K. Radiometric Self Calibration. Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, Fort Collins, CO, USA, 23–25 June 1999.

- Debevec, P.E.; Malik, J. Recovering High Dynamic Range Radiance Maps from Photographs. Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH '97), Los Angeles, CA, USA, 3–8 August 1997; pp. 369–378.

- McCluney, R. Introduction to Radiometry and Photometry; Artech House: London, UK, 1994.

- Moreno, I.; Sun, C.C. Modeling the radiation pattern of LEDs. Opt. Express 2008, 16, 1808–1819.

- Putz, V.; Zagar, B. Single-Shot Estimation of Camera Position and Orientation Using SVD. Proceedings of the Instrumentation and Measurement Technology Conference (IMTC 2008), San Francisco, CA, USA, 12–15 May 2008; pp. 1914–1919.

- Rashid, M.H. Circuitos Microelectrónicos, Análisis y Diseño; International Thomson Editores: México D.F., Mexico, 1999.

- Lazaro, J.L.; Cano, A.E.; Fernández, P.R.; Domínguez, C.A. Selecting an optimal exposure time for estimating the distance between a camera and an infrared emitter diode using pixel grey-level intensities. Sens. Lett. 2009, 7, 1086–1092.

| Model parameter | f(t) | f(I0) | f(d⁻²) | f(θ) |
|---|---|---|---|---|
| Errel | Linear | Linear | Linear | Linear with a function of the emitter pattern |
| F(0, 0) | Linear | Linear | Linear | Linear with a function of the emitter pattern |
| Σ | Linear | Linear | Quadratic | Linear with a function of the emitter pattern |

| Parameter | Equation in the general form |
|---|---|
| Errel | (τE1 t + τE2) × (ρE1 I0 + ρE2) × (δE1 D + δE2) × (γE1 ϱE(θ) + γE2) |
| F(0, 0) | (τF1 t + τF2) × (ρF1 I0 + ρF2) × (δF1 D + δF2) × (γF1 ϱF(θ) + γF2) |
| Σ | (τΣ1 t + τΣ2) × (ρΣ1 I0 + ρΣ2) × (δΣ1 D² + δΣ2 D + δΣ3) × (γΣ1 ϱΣ(θ) + γΣ2) |

| Calibration data | Values |
|---|---|
| Exposure time [ms] | tr = 8; tn = 30, 32, 34 and 36 |
| IRED bias current [mA] | 475 and 500 |
| Distances [mm] | 1500, 2000, 2500 and 3000 |
| IRED orientation angles [degrees] | 0, 5, 10, 15, 20, 25, 30 and 35 |

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Cano-García, A.E.; Galilea, J.L.L.; Fernández, P.; Infante, A.L.; Pompa-Chacón, Y.; Vázquez, C.A.L. Decoupling Intensity Radiated by the Emitter in Distance Estimation from Camera to IR Emitter. Sensors 2013, 13, 7184-7211. https://doi.org/10.3390/s130607184
