A Smartphone-Based Automatic Diagnosis System for Facial Nerve Palsy

Abstract

1. Introduction

2. Experiment

2.1. Incremental Parallel Cascade of Linear Regression

2.2. Data Acquisition

2.3. Feature Extraction

2.3.1. Local Points-Based Feature Extraction

Asymmetry Index of Forehead Region

- (1)

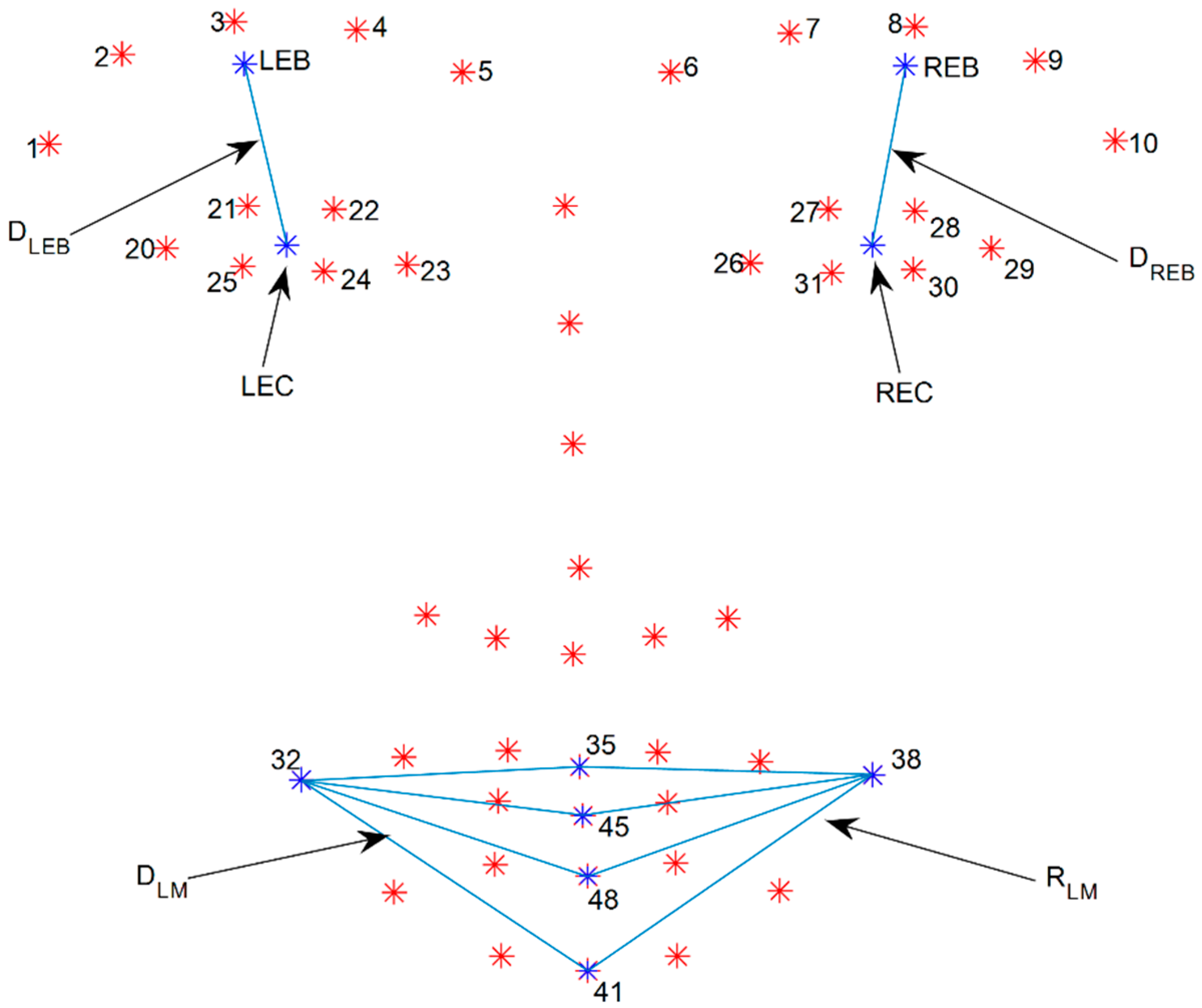

- Calculate the mean point of the left and right eye-brows (LEB and REB) by averaging five points from each eye-brow from number one to five and six to ten as shown in Figure 3:

- (2) Calculate the mean points of the left and right eyes (LEC and REC) by averaging the six points of each eye (numbers 20 to 25 and 26 to 31, respectively), as shown in Figure 3.

- (3) Calculate the distance between the mean point of the eyebrow and that of the eye on each side, as shown in Figure 3.

- (4)

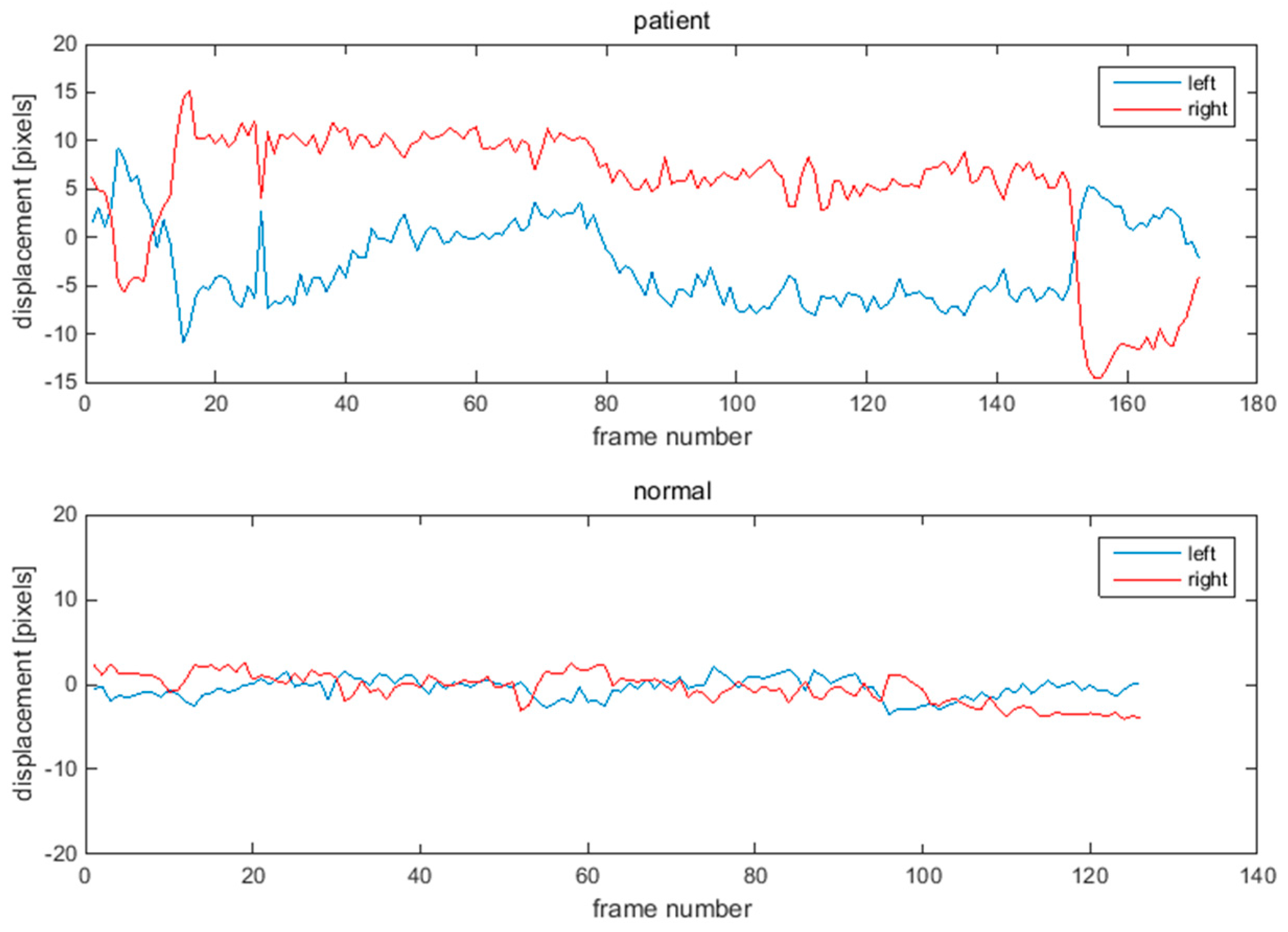

- Calculate displacement on each side by subtracting the mean distance of the resting state from the maximum distance of the raising eye brow movement:

- (5) Calculate the displacement ratio between the left and right sides of the forehead, using the larger of the two displacement values as the denominator; a ratio of 1 therefore indicates symmetric movement.
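The five forehead steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it assumes each frame is a dictionary mapping the 1-based landmark numbers of Figure 3 to (x, y) pixel coordinates and that "distance" means Euclidean distance. The function names and the `make_frame` helper, which builds synthetic frames, are hypothetical.

```python
import math
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float]
Frame = Dict[int, Point]  # assumption: landmark number -> (x, y)


def mean_point(frame: Frame, indices: Iterable[int]) -> Point:
    """Steps 1 and 2: average the coordinates of the listed landmarks."""
    pts = [frame[i] for i in indices]
    return (mean(p[0] for p in pts), mean(p[1] for p in pts))


def eyebrow_eye_distances(frame: Frame) -> Tuple[float, float]:
    """Step 3: Euclidean distance between eyebrow and eye mean points per side."""
    leb = mean_point(frame, range(1, 6))    # points 1-5: left eyebrow
    reb = mean_point(frame, range(6, 11))   # points 6-10: right eyebrow
    lec = mean_point(frame, range(20, 26))  # points 20-25: left eye
    rec = mean_point(frame, range(26, 32))  # points 26-31: right eye
    return math.dist(leb, lec), math.dist(reb, rec)


def displacement(rest: List[float], movement: List[float]) -> float:
    """Step 4: maximum distance during the movement minus mean distance at rest."""
    return max(movement) - mean(rest)


def asymmetry_ratio(disp_left: float, disp_right: float) -> float:
    """Step 5: smaller displacement divided by the larger one (1.0 = symmetric)."""
    larger = max(disp_left, disp_right)
    if larger == 0:
        return 1.0  # assumption: no movement on either side is treated as symmetric
    return min(disp_left, disp_right) / larger


def forehead_asymmetry_index(rest_frames: List[Frame], raise_frames: List[Frame]) -> float:
    rest = [eyebrow_eye_distances(f) for f in rest_frames]
    move = [eyebrow_eye_distances(f) for f in raise_frames]
    disp_left = displacement([d[0] for d in rest], [d[0] for d in move])
    disp_right = displacement([d[1] for d in rest], [d[1] for d in move])
    return asymmetry_ratio(disp_left, disp_right)


def make_frame(left_lift: float, right_lift: float) -> Frame:
    """Hypothetical synthetic frame: eyebrows 10 px from the eyes plus a lift."""
    frame = {i: (0.0, 10.0 + left_lift) for i in range(1, 6)}
    frame.update({i: (30.0, 10.0 + right_lift) for i in range(6, 11)})
    frame.update({i: (0.0, 0.0) for i in range(20, 26)})
    frame.update({i: (30.0, 0.0) for i in range(26, 32)})
    return frame


rest_frames = [make_frame(0.0, 0.0), make_frame(0.0, 0.0)]
raise_frames = [make_frame(4.0, 2.0), make_frame(8.0, 3.0)]
print(forehead_asymmetry_index(rest_frames, raise_frames))  # prints 0.375
```

In this synthetic example the left side moves 8 px and the right side 3 px, so the index is 3/8.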

Asymmetry Index of Mouth Region

- (1) Calculate the mean distance between each mouth corner and the points in the middle of the mouth, as shown in Figure 3.

- (2) Calculate the displacement on each side by subtracting the mean distance in the resting state from the maximum distance during the smile movement.

- (3) Calculate the displacement ratio between the left and right sides of the mouth, using the larger of the two displacement values as the denominator.
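A minimal Python sketch of the three mouth steps follows. Because this section does not give the landmark numbers of the mouth corners or the middle-of-mouth points, the sketch takes their coordinates directly; it also assumes Euclidean distance and reads "mean distance" as the average of the distances from a corner to each middle point. `MouthFrame` and the synthetic coordinates are hypothetical.

```python
import math
from statistics import mean
from typing import List, NamedTuple, Sequence, Tuple

Point = Tuple[float, float]


class MouthFrame(NamedTuple):
    """Hypothetical per-frame container; which landmarks form `middle` is an assumption."""
    left_corner: Point
    right_corner: Point
    middle: Sequence[Point]  # middle-of-mouth points


def corner_distances(frame: MouthFrame) -> Tuple[float, float]:
    """Step 1: mean Euclidean distance from each corner to the middle-of-mouth points."""
    left = mean(math.dist(frame.left_corner, m) for m in frame.middle)
    right = mean(math.dist(frame.right_corner, m) for m in frame.middle)
    return left, right


def mouth_asymmetry_index(rest_frames: List[MouthFrame], smile_frames: List[MouthFrame]) -> float:
    rest = [corner_distances(f) for f in rest_frames]
    smile = [corner_distances(f) for f in smile_frames]
    # Step 2: maximum distance while smiling minus mean distance at rest
    disp_left = max(d[0] for d in smile) - mean(d[0] for d in rest)
    disp_right = max(d[1] for d in smile) - mean(d[1] for d in rest)
    # Step 3: the larger displacement is the denominator
    larger = max(disp_left, disp_right)
    if larger == 0:
        return 1.0  # assumption: no movement on either side is treated as symmetric
    return min(disp_left, disp_right) / larger


middle = [(0.0, -3.0), (0.0, 3.0)]  # synthetic upper and lower lip midpoints
rest_frames = [MouthFrame((-20.0, 0.0), (20.0, 0.0), middle)]
smile_frames = [MouthFrame((-28.0, 0.0), (22.0, 0.0), middle)]
print(round(mouth_asymmetry_index(rest_frames, smile_frames), 3))
```

Here the left corner moves much farther than the right one, so the index falls well below 1.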

2.3.2. Axis-Based Feature Extraction

Asymmetry Index of Forehead Region

- (1) Calculate the mean point of each eyebrow by averaging its five points (numbers 1 to 5 and 6 to 10), as shown in Equation (2).

- (2) From the mean point of each eyebrow, draw a line perpendicular to the horizontal line and find the point of intersection.

- (3) Calculate the distance between the mean point of each eyebrow and its point of intersection, as shown in Figure 4.

- (4) Calculate the displacement on each side by subtracting the mean distance in the resting state from the maximum distance during the eyebrow-raising movement.

- (5) Calculate the displacement ratio between the left and right sides of the forehead, using the larger of the two displacement values as the denominator.
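The axis-based forehead steps can be sketched the same way. This section does not define the horizontal line, so the sketch assumes it passes through the two eye mean points (landmarks 20 to 25 and 26 to 31); that choice, the helper names, and the synthetic frames are hypothetical. The second call rotates every landmark by 15 degrees to show that, under this assumed axis, an in-plane tilt leaves the perpendicular distances unchanged.

```python
import math
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float]
Frame = Dict[int, Point]  # assumption: landmark number -> (x, y)


def mean_point(frame: Frame, indices: Iterable[int]) -> Point:
    pts = [frame[i] for i in indices]
    return (mean(p[0] for p in pts), mean(p[1] for p in pts))


def foot_of_perpendicular(p: Point, a: Point, b: Point) -> Point:
    """Point where the perpendicular from p meets the line through a and b."""
    ux, uy = b[0] - a[0], b[1] - a[1]
    t = ((p[0] - a[0]) * ux + (p[1] - a[1]) * uy) / (ux * ux + uy * uy)
    return (a[0] + t * ux, a[1] + t * uy)


def eyebrow_axis_distances(frame: Frame) -> Tuple[float, float]:
    leb = mean_point(frame, range(1, 6))    # step 1: left eyebrow mean point
    reb = mean_point(frame, range(6, 11))   # step 1: right eyebrow mean point
    # assumption: the horizontal line runs through the two eye mean points
    lec = mean_point(frame, range(20, 26))
    rec = mean_point(frame, range(26, 32))
    # steps 2-3: distance from each eyebrow mean point to its intersection point
    return (math.dist(leb, foot_of_perpendicular(leb, lec, rec)),
            math.dist(reb, foot_of_perpendicular(reb, lec, rec)))


def forehead_axis_index(rest_frames: List[Frame], raise_frames: List[Frame]) -> float:
    rest = [eyebrow_axis_distances(f) for f in rest_frames]
    move = [eyebrow_axis_distances(f) for f in raise_frames]
    # step 4: maximum while raising minus mean at rest, for each side
    disp = [max(d[s] for d in move) - mean(d[s] for d in rest) for s in (0, 1)]
    # step 5: the larger displacement is the denominator
    larger = max(disp)
    if larger == 0:
        return 1.0  # assumption: no movement on either side is treated as symmetric
    return min(disp) / larger


def rotate(frame: Frame, degrees: float) -> Frame:
    """Rotate all landmarks about the origin, mimicking an in-plane head tilt."""
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return {k: (c * x - s * y, s * x + c * y) for k, (x, y) in frame.items()}


def make_frame(left_lift: float, right_lift: float) -> Frame:
    """Hypothetical synthetic frame with both eye mean points on y = 0."""
    frame = {i: (2.0, 10.0 + left_lift) for i in range(1, 6)}
    frame.update({i: (28.0, 10.0 + right_lift) for i in range(6, 11)})
    frame.update({i: (0.0, 0.0) for i in range(20, 26)})
    frame.update({i: (30.0, 0.0) for i in range(26, 32)})
    return frame


rest = [make_frame(0.0, 0.0)]
raised = [make_frame(8.0, 3.0)]
print(forehead_axis_index(rest, raised))  # approximately 0.375
print(forehead_axis_index([rotate(f, 15) for f in rest],
                          [rotate(f, 15) for f in raised]))  # approximately 0.375
```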

Asymmetry Index of Mouth Region

- (1) From each mouth corner, draw a line perpendicular to the vertical line and find the point of intersection.

- (2) Calculate the distance between each mouth corner and its point of intersection, as shown in Figure 4.

- (3) Calculate the displacement on each side by subtracting the mean distance in the resting state from the maximum distance during the smile movement.

- (4) Calculate the displacement ratio between the left and right sides of the mouth, using the larger of the two displacement values as the denominator.
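For the mouth, the sketch below assumes that the vertical line is perpendicular to the line joining the eye mean points and passes through their midpoint. This section does not state how the vertical line is defined, so treat that choice, the `FaceFrame` container, and the coordinates as hypothetical. Under this assumption, the distance in step (2) is the lateral distance of each corner from the facial midline.

```python
import math
from statistics import mean
from typing import List, NamedTuple, Tuple

Point = Tuple[float, float]


class FaceFrame(NamedTuple):
    """Hypothetical container: the eye mean points define the assumed axes."""
    left_eye: Point
    right_eye: Point
    left_corner: Point
    right_corner: Point


def foot_of_perpendicular(p: Point, a: Point, b: Point) -> Point:
    """Point where the perpendicular from p meets the line through a and b."""
    ux, uy = b[0] - a[0], b[1] - a[1]
    t = ((p[0] - a[0]) * ux + (p[1] - a[1]) * uy) / (ux * ux + uy * uy)
    return (a[0] + t * ux, a[1] + t * uy)


def corner_axis_distances(f: FaceFrame) -> Tuple[float, float]:
    # assumption: the vertical line is perpendicular to the eye line and
    # passes through the midpoint between the two eye mean points
    mx = (f.left_eye[0] + f.right_eye[0]) / 2
    my = (f.left_eye[1] + f.right_eye[1]) / 2
    ux, uy = f.right_eye[0] - f.left_eye[0], f.right_eye[1] - f.left_eye[1]
    a, b = (mx, my), (mx - uy, my + ux)  # two points on the vertical line
    # steps 1-2: distance from each corner to its intersection point
    return (math.dist(f.left_corner, foot_of_perpendicular(f.left_corner, a, b)),
            math.dist(f.right_corner, foot_of_perpendicular(f.right_corner, a, b)))


def mouth_axis_index(rest_frames: List[FaceFrame], smile_frames: List[FaceFrame]) -> float:
    rest = [corner_axis_distances(f) for f in rest_frames]
    smile = [corner_axis_distances(f) for f in smile_frames]
    # step 3: maximum while smiling minus mean at rest, for each side
    disp = [max(d[s] for d in smile) - mean(d[s] for d in rest) for s in (0, 1)]
    # step 4: the larger displacement is the denominator
    larger = max(disp)
    if larger == 0:
        return 1.0  # assumption: no movement on either side is treated as symmetric
    return min(disp) / larger


eyes = ((-15.0, 0.0), (15.0, 0.0))
rest = [FaceFrame(*eyes, (-20.0, 40.0), (20.0, 40.0))]
smile = [FaceFrame(*eyes, (-28.0, 38.0), (22.0, 39.0))]
print(mouth_axis_index(rest, smile))  # approximately 0.25
```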

2.4. Subjects

3. Results

| Combination of asymmetry indices | LDA Accuracy (%) | LDA Precision (%) | LDA Recall (%) | SVM (Linear) Accuracy (%) | SVM (Linear) Precision (%) | SVM (Linear) Recall (%) |
|---|---|---|---|---|---|---|
| Forehead_axis + Mouth_axis | 77.8 | 76.9 | 66.7 | 77.8 | 76.9 | 66.7 |
| Forehead_axis + Mouth_region | 66.7 | 46.2 | 54.6 | 63.9 | 46.2 | 50.0 |
| Forehead_region + Mouth_axis | 88.9 | 92.3 | 80.0 | 88.9 | 92.3 | 80.0 |
| Forehead_region + Mouth_region | 75.0 | 85.7 | 63.2 | 77.8 | 84.6 | 64.7 |
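Accuracy, precision, and recall in the table follow the usual confusion-matrix definitions. The sketch below assumes a binary task in which palsy is the positive class (the positive class is not stated in this section) and uses hypothetical labels, not the study's data.

```python
from typing import Sequence, Tuple


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                   positive: int = 1) -> Tuple[float, float, float]:
    """Return (accuracy, precision, recall) as percentages."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = 100.0 * correct / len(pairs)
    # assumption: report 0.0 when the denominator is empty
    precision = 100.0 * tp / (tp + fp) if tp + fp else 0.0
    recall = 100.0 * tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


# Hypothetical labels (1 = palsy, 0 = normal), for illustration only
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0]
print(binary_metrics(y_true, y_pred))  # prints (62.5, 60.0, 75.0)
```

Returning 0.0 when there are no positive predictions (or no positive cases) is a convention chosen for this sketch, not one stated by the authors.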

4. Discussion

4.1. Simulation of Asymmetry Index with Various Head Orientations

4.2. Measurement Error

4.3. Analysis of Eye Region

4.4. Combination of Asymmetry Indices

4.5. Performance Comparison with Conventional Methods

4.6. Limitations of Proposed System

4.7. Future Works

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Kim, H.S.; Kim, S.Y.; Kim, Y.H.; Park, K.S. A Smartphone-Based Automatic Diagnosis System for Facial Nerve Palsy. Sensors 2015, 15, 26756-26768. https://doi.org/10.3390/s151026756