1. Introduction

Recently, various localization technologies for mobile robots have been studied with respect to acquiring accurate environmental information. Typically, mobile robots have self-organized sensors to obtain environmental information. However, such robots are very expensive to manufacture and have complicated body structures. The structural layout of indoor environments is typically a known state; thus, many localization studies have obtained the required information from external sensors installed on a robot. Infrared, ultrasonic, laser range finder, RFID (Radio Frequency Identification), and RADAR (Radio Detecting and Ranging) sensors are commonly used for localization. Hopper et al. [1] presented an active sensing system, which uses infrared emitters and detectors to achieve 5–10 m accuracy. However, this sensor system is not suitable for high-speed applications, as the localization cycle requires about 15 s and always requires line of sight. Ultrasonic sensors use the time-of-flight measurement technique to provide location information [2]. However, ultrasonic sensors require a great deal of infrastructure to be effective and accurate. Laser distance measurement is executed by measuring the time that it takes for laser light to be reflected off a target and returned to the sensor. Since the laser range finder is a very accurate and quick measurement device, it is widely used in many applications. Subramanian et al. [3] and Barawid et al. [4] proposed localization methods based on a laser range finder. The laser range finder was used to acquire environment distance information that can be used to identify and avoid obstacles during navigation. However, this high performance comes at a high hardware cost. Miller et al. [5] presented an indoor localization system based on RFID. RFID-based localization uses RF tags and a reader with an antenna to locate objects, but each tag can only be detected within about 4–6 m. Bahl et al. [6] and Lin et al. [7] introduced the RADAR system, a radio-frequency (RF) based system for locating and tracking users inside buildings. The concept of RADAR is to measure signal strength information at multiple stations positioned to provide overlapping fields of coverage. It combines real measurements with signal propagation modeling to determine object location, thereby enabling location-aware applications. The accuracy of the RADAR system was reported to be 2–3 m.

Recently, visual image location systems have been preferred because they are not easily disturbed by other sensors [2,8,9]. Sungho [10] used workspace landmark features as external reference sources. However, this method has insufficient accuracy and is difficult to install and maintain due to the additional equipment required. Kim et al. [11] proposed augmented reality techniques that achieve an average location recognition success rate of 89%, though the extra cost must be considered. Cheoket et al. [12] developed a method of localization and navigation in wide indoor areas using a vision sensor. Though the set-up cost is lower, this method is not easy to implement if users are unfamiliar with the basic concepts of electronic circuit analysis. Recently, two-camera localization systems have been proposed [13,14]. The concept of these systems is that object distances can be calculated by triangulation from the two different images of the cameras. However, to ensure measurement reliability, the relative position of the two cameras must be kept fixed. In addition, the set-up cost of the experimental environment is high due to the use of two cameras.

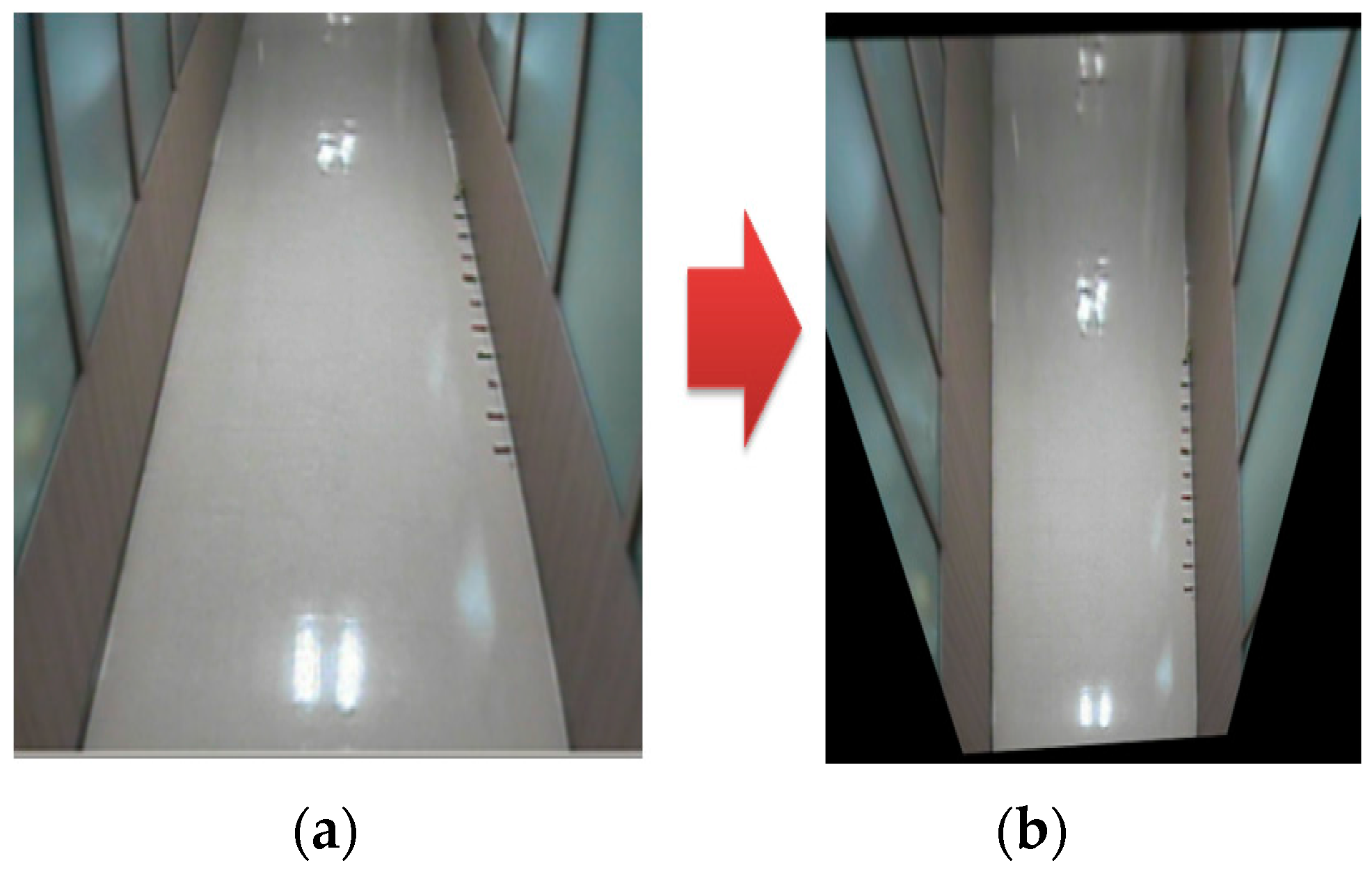

Nowadays, surveillance systems exist in most modern buildings, and cheap cameras are usually installed around these buildings. Indoor surveillance cameras are typically installed without blind areas, and visual data are transferred to a central data server for processing and analysis. If a mobile robot can determine its position using indoor cameras, it would not require an additional sensor for localization, and the approach could be applied to multi-agent mobile robot systems [15,16]. Kuscue et al. [17] and Li et al. [18] proposed a vision-based localization method using a single ceiling-mounted surveillance camera. However, several problems must be addressed before this concept can be realized. First, lens distortion arises from the poor-quality lenses in surveillance cameras, and shadow effects are produced by indoor light sources [19,20]. Second, information about occluding objects cannot be obtained using a single camera. Third, the camera and the localization map are calibrated independently, which is very time-consuming.

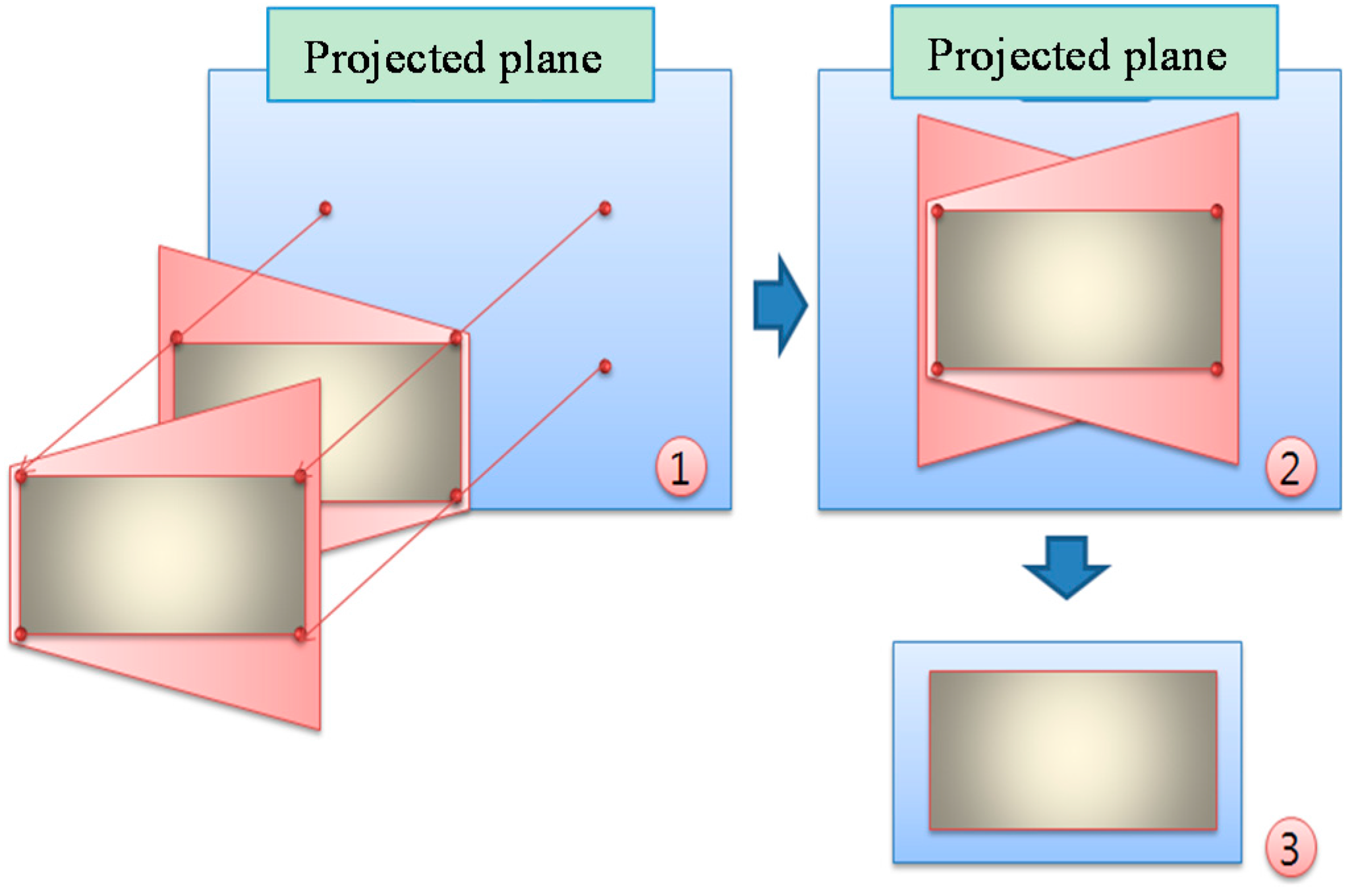

Herein, we propose a localization method for a mobile robot to overcome the abovementioned problems associated with indoor environments. A two-dimensional map containing robot and object position information is constructed using several neighboring surveillance cameras [21]. The technique is based on modeling the four edges of the projected image of each camera's field of coverage, together with an image processing algorithm that finds an object’s center to improve the location estimates of objects of interest. This approach relies on coordinate mapping techniques to identify the robot in the environment using multiple ceiling-mounted cameras. It can be applied for localization in complex indoor environments such as T- and L-shaped environments. In addition, the cameras and the two-dimensional map can be calibrated simultaneously. Through this modeling process, a 2D map is built as an air view, and a fairly accurate location can be dynamically acquired from a scaled grid of the map. The significant advantages of the proposed localization are its minimal cost, simple calibration, and little occlusion: it needs only multiple inexpensive ceiling-mounted cameras that are installed facing each other, communicate wirelessly with the mobile robot, and update its current estimated position. Moreover, we experimentally demonstrate the effectiveness of the proposed method by analyzing the resulting robot movements in response to the location information acquired from the generated map.
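As a rough illustration of the coordinate mapping idea, the sketch below estimates a homography from the four corners of one camera's projected field of coverage and uses it to convert a pixel position into map coordinates. The corner values, the function names `homography_from_corners` and `pixel_to_map`, and the use of NumPy are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def homography_from_corners(src, dst):
    """Estimate the 3x3 homography H (normalized so H[2, 2] = 1) mapping src -> dst.

    src, dst: four (x, y) point pairs, no three of them collinear.
    """
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11*x + h12*y + h13) / (h31*x + h32*y + 1), and likewise for v
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def pixel_to_map(H, pixel):
    """Project an image pixel onto the two-dimensional map."""
    u, v, w = H @ np.array([pixel[0], pixel[1], 1.0])
    return u / w, v / w

# Hypothetical corners of the floor area as seen in a 320 x 240 image (pixels)
pixel_corners = [(60, 40), (260, 40), (310, 230), (10, 230)]
# Hypothetical corners of the same area on the map (cm)
map_corners = [(0, 0), (220, 0), (220, 300), (0, 300)]

H = homography_from_corners(pixel_corners, map_corners)
robot_on_map = pixel_to_map(H, (160, 120))  # map position of an image point
```

With one such matrix per camera, positions detected in neighboring camera images can be expressed in a single shared map frame.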

3. Topology Map Building and Optimal Path

Path planning is required for a robot to move safely without colliding with any object placed on the two-dimensional map (Section 2). Herein, we employ the thinning algorithm [22]. The thinning algorithm repeatedly eliminates the contour of an arbitrary region until only a single-pixel-wide line remains at its center. Thus, the thinning algorithm generates a path by which a robot can avoid obstacles and move safely.
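The contour-peeling idea can be sketched as follows. This excerpt does not say which thinning variant reference [22] describes, so the code uses the well-known Zhang-Suen scheme as a stand-in; the function name and the small test corridor are hypothetical.

```python
import numpy as np

def zhang_suen_thinning(free_space):
    """Thin a binary image (1 = free floor, 0 = obstacle) to a one-pixel-wide skeleton."""
    img = np.pad(np.asarray(free_space, dtype=np.uint8), 1)  # zero border
    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_delete = []
            for r in range(1, img.shape[0] - 1):
                for c in range(1, img.shape[1] - 1):
                    if img[r, c] == 0:
                        continue
                    # Neighbours P2..P9, clockwise starting from north
                    p = [int(img[r - 1, c]), int(img[r - 1, c + 1]),
                         int(img[r, c + 1]), int(img[r + 1, c + 1]),
                         int(img[r + 1, c]), int(img[r + 1, c - 1]),
                         int(img[r, c - 1]), int(img[r - 1, c - 1])]
                    b = sum(p)  # number of non-zero neighbours
                    # number of 0 -> 1 transitions around the pixel
                    a = sum(p[i] == 0 and p[(i + 1) % 8] == 1 for i in range(8))
                    if step == 0:
                        cond = p[0] * p[2] * p[4] == 0 and p[2] * p[4] * p[6] == 0
                    else:
                        cond = p[0] * p[2] * p[6] == 0 and p[0] * p[4] * p[6] == 0
                    if 2 <= b <= 6 and a == 1 and cond:
                        to_delete.append((r, c))
            # Apply deletions only after the whole pass, as the scheme requires
            for r, c in to_delete:
                img[r, c] = 0
            changed = changed or bool(to_delete)
    return img[1:-1, 1:-1]

# Hypothetical corridor: a 3-pixel-wide band of free floor
corridor = np.zeros((5, 9), dtype=np.uint8)
corridor[1:4, 1:8] = 1
skeleton = zhang_suen_thinning(corridor)
```

Applied to the free floor area of the map, the remaining centerline pixels stay as far as possible from the surrounding obstacles, which is why they are a natural candidate for a safe path.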

After generating a moving path with the thinning algorithm, as shown in Figure 11 and Figure 12, a movement indicator is required for the robot. Thus, a topological map is generated to create the path indicator. However, the algorithm can generate a path that is difficult for a robot to move along; thus, information about such paths must be eliminated by checking the area around each node. For the robot to move through nodes, an area around each node that is larger than the robot should be examined to eliminate nodes and edges that the robot cannot traverse. Note that a robot cannot pass if there is an object in the search field around a node or if the search field extends beyond the image boundary.
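The node check described above can be sketched as a window test on an occupancy grid. The grid encoding (non-zero = object), the function name `node_is_traversable`, and the `half_window` parameter are assumptions for illustration; the window should be chosen larger than the robot footprint.

```python
import numpy as np

def node_is_traversable(occupancy, node, half_window):
    """Return True if the square search field around a node is free and inside the image.

    occupancy: 2D array, non-zero where an object occupies the map.
    node: (row, col) of the topology node.
    half_window: half the side length of the search field, in pixels.
    """
    r, c = node
    rows, cols = occupancy.shape
    # The search field must not extend beyond the image boundary
    if (r - half_window < 0 or c - half_window < 0
            or r + half_window >= rows or c + half_window >= cols):
        return False
    window = occupancy[r - half_window:r + half_window + 1,
                       c - half_window:c + half_window + 1]
    # Any object inside the search field blocks the robot
    return not window.any()

# Hypothetical 10 x 10 map with a single occupied cell
grid = np.zeros((10, 10), dtype=np.uint8)
grid[4, 7] = 1
```

Nodes for which the test fails, together with their edges, would be dropped from the topology map before path planning.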

Figure 11.

Example image of objects on a two-dimensional map before applying the thinning algorithm.

Figure 12.

Topology map after applying the thinning algorithm.

Figure 13 shows the image obtained after removing the searched paths that the robot cannot pass. The remaining paths, generated with a topology map by applying the thinning algorithm, are those that the robot can traverse.

Figure 13.

Modified driving path via searching path.

The A* algorithm is a graph search algorithm that calculates an optimal driving path between a given starting point and goal [23]. It uses a heuristic estimate at each node to find the shortest route to the target node with minimal calculation.
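A minimal sketch of A* over a topology map is given below, assuming nodes with known map coordinates, edge costs equal to straight-line distance, and the straight-line distance to the goal as the heuristic. The graph and node names are hypothetical.

```python
import heapq
import math

def a_star(positions, edges, start, goal):
    """Return the shortest node sequence from start to goal, or None if unreachable.

    positions: dict mapping node -> (x, y) map coordinates.
    edges: dict mapping node -> list of neighbouring nodes.
    """
    def dist(a, b):
        (x1, y1), (x2, y2) = positions[a], positions[b]
        return math.hypot(x2 - x1, y2 - y1)

    open_heap = [(dist(start, goal), start)]  # entries are (f = g + h, node)
    g = {start: 0.0}                          # best known cost from start
    parent = {start: None}
    closed = set()
    while open_heap:
        _, node = heapq.heappop(open_heap)
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        if node in closed:
            continue
        closed.add(node)
        for nb in edges.get(node, ()):
            cand = g[node] + dist(node, nb)
            if cand < g.get(nb, math.inf):
                g[nb] = cand
                parent[nb] = node
                heapq.heappush(open_heap, (cand + dist(nb, goal), nb))
    return None

# Hypothetical topology map: two routes from A to C, plus an isolated node Z
positions = {"A": (0, 0), "B": (1, 0), "C": (2, 0), "D": (1, 2), "Z": (5, 5)}
edges = {"A": ["B", "D"], "B": ["A", "C"], "C": ["B", "D"], "D": ["A", "C"]}
path = a_star(positions, edges, "A", "C")
```

Because the straight-line distance never overestimates the remaining path length, the returned route is the shortest one in the graph.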

Figure 14 shows the shortest robot path estimated by the A* algorithm. A robot moves along nodes on the estimated path.

Figure 14.

Shortest robot path by A* algorithm.

4. Experimental Results

We performed a series of experiments to demonstrate the effectiveness of the proposed two-dimensional-map-based localization method using indoor surveillance cameras. The width and length of the floor viewed by the two neighboring cameras were 2.2 and 6 m, respectively. The two-dimensional map obtained by homography represents the area of the floor viewed by the two cameras in an air view. We used a self-developed mobile robot with omnidirectional wheels in the experiment. Each surveillance camera had a resolution of 320 × 240 pixels and three RGB (Red Green Blue) channels.

The accuracy of the two-dimensional map with the proposed method was experimentally obtained. Each position error in Figure 15 has two components in a plane, one along the $x$-axis and one along the $y$-axis. To represent the two error components as a single parameter at each position, we suggest the position error estimation shown in Figure 16. Here, $x_{real}$ and $y_{real}$ denote the real position, and $x_{measur}$ and $y_{measur}$ represent the visually detected object position on the two-dimensional map. $x_e$ and $y_e$ are obtained using Equation (4).

Figure 15.

Position error estimate.

Position error $v_{error}$ is composed of $x_e$ and $y_e$ in Equation (4) and is expressed by Equation (5). The magnitude of position error estimate $v_{error}$ is obtained using Equation (6).

We define the position error estimate $E(x, y)$ as the absolute value of $v_{error}$ using Equation (7).
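Equations (4)–(7) are not reproduced in this excerpt. A minimal sketch of the computation they describe is given below, assuming that the error components are the differences between the real and measured coordinates and that the magnitude is the Euclidean norm; the function name and sample values are hypothetical.

```python
import math

def position_error(real, measured):
    """Position error estimate E for one point, in the units of the inputs.

    real: (x_real, y_real); measured: (x_measur, y_measur).
    """
    x_e = real[0] - measured[0]  # assumed form of the x error component
    y_e = real[1] - measured[1]  # assumed form of the y error component
    return math.hypot(x_e, y_e)  # magnitude of the error vector (x_e, y_e)

# Hypothetical sample: real position (100, 50) cm, detected at (103, 46) cm
e = position_error((100.0, 50.0), (103.0, 46.0))
```

The sign convention of the components does not affect the result, since only the magnitude is used.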

Here, $E(x, y)$ is the error plane of the difference between the real and visually detected object positions. To examine the effectiveness of the proposed error compensation by homography (Section 2), we compared $E(x, y)$ before and after error compensation. The position error estimate before error compensation is shown in Figure 16. The $x$–$y$ plane of Figure 16 is the $x$–$y$ plane of the two-dimensional map, and the $z$-axis gives the value of $E(x, y)$. The maximum and average values of $E(x, y)$ are 11.5 and 6.7 cm, respectively. After error compensation by homography, the maximum and average values of $E(x, y)$ are 7.1 and 2.6 cm, respectively, as shown in Figure 17. The maximum position error was decreased by 38% through the proposed error compensation method. The accuracy of the two-dimensional map obtained by two ceiling surveillance cameras is 7.1 cm, which is sufficient for mobile robot localization.
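The reported reduction follows directly from the maximum values before and after compensation. Applying the same calculation to the reported average values gives the second figure, which is derived here and not stated in the text:

$$
\frac{11.5 - 7.1}{11.5} \approx 0.38, \qquad \frac{6.7 - 2.6}{6.7} \approx 0.61
$$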

Figure 16.

Position error estimate before error compensation.

Figure 17.

Position error estimate after error compensation.

Figure 18 shows two images from the two neighboring surveillance cameras. There are several objects on the floor. A mobile robot was controlled to move from one position to the opposite position using the proposed localization based on the two-dimensional map described in Section 2.2. We used the A* algorithm as the path planning method for the mobile robot. The objects on the floor were detected by homography as the object area in the projected plane, and the robot’s moving path was planned considering the object area in the two-dimensional map. The experimental results of the robot’s path control are shown in Figure 19. The error between the planned and actual movement paths of the robot was within ±5 cm. This suggests that the proposed localization method can be effective for indoor mobile robots.

Figure 18.

Objects in the experimental environment.

Figure 19.

Experimental results of the robot’s path control using the proposed localization method.

To show that the proposed method can be applied to complex indoor environments, an experiment was carried out in a T-shaped indoor environment. As shown in Figure 20a, three surveillance cameras were used to build a two-dimensional map. Figure 20b–d show images from the three cameras.

Figure 20.

(a) Map of our experimental environment; (b) Image of camera 1; (c) Image of camera 2; (d) Image of camera 3.

Figure 21 shows the two-dimensional map using the proposed method. The accuracy of the two-dimensional map by three ceiling surveillance cameras is 7 cm, which is satisfactory for mobile robot localization.

Figure 21.

Experimental results of the two-dimensional map using the proposed localization method.
