Abstract

Environmental perception and information processing are two key steps of active safety for vehicle reversing. Single-sensor environmental perception cannot meet the need for vehicle reversing safety due to its low reliability. In this paper, we present a novel multi-sensor environmental perception method using low-rank representation and a particle filter for vehicle reversing safety. The proposed system consists of four main modules, namely multi-sensor environmental perception, information fusion, target recognition and tracking using low-rank representation and a particle filter, and vehicle reversing speed control. First, the multi-sensor environmental perception module, based on a binocular-camera system and ultrasonic range finders, obtains the distance data for obstacles behind the vehicle when the vehicle is reversing. Second, an information fusion algorithm using an adaptive Kalman filter processes the data obtained by the multi-sensor environmental perception module, which greatly improves the robustness of the sensors. Then the framework of a particle filter and low-rank representation is used to track the main obstacles. The low-rank representation is used to optimize an objective particle template that has the smallest L-1 norm. Finally, the electronic throttle opening and automatic braking are controlled by the proposed vehicle reversing control strategy before any potential collision, making the reversing control safer and more reliable. System simulation and practical testing results demonstrate the validity of the proposed multi-sensor environmental perception method.

1. Introduction

Vehicles have long been widely utilized around the world. Reducing the probability and rate of traffic accidents has always been a focus of research [1,2,3,4]. The growing number of reversing traffic accidents has become a serious social safety problem in recent years. Collision, especially reversing collision due to careless or violent driving, is one of the major causes of traffic accidents [5,6]. Drivers’ abnormal behavior and some minor mistakes may result in many accidents when the vehicle reverses. In 2012, the National Highway Traffic Safety Administration (NHTSA) reported that about 8% of traffic accidents were caused by driving in reverse [7]. In Finland, analysis of data indicated that reversing accidents contributed to approximately 24% of motor vehicle crashes and most of the reversing accidents (70%) took place in parking lots [8]. Statistics also show that reversing accidents are 1.2 times more likely to occur with middle-aged drivers than with young drivers. The main reason for the increase in reversing accidents might be that the rear view is a blind and restricted area [8,9]. Also, in another study by the NHTSA [10], 99% of reversing accidents were due to drivers’ behavior and about 80% of all driving mishaps were due to drivers’ inattention when reversing. Reversing accidents reflect problems in vehicle maneuvering and drivers’ observations. In reversing traffic safety, an obstacle detection and avoidance system plays an important role in preventing reversing collisions. The earlier an obstacle in the rear area is detected, or the better the restricted rear area is viewed, the more chances there are to protect passengers.

To avoid these reversing accidents and to reduce the losses they cause, several emerging technologies have been developed rapidly [11]. Sensors installed at the rear of a vehicle can effectively help watch for potential reverse collisions. Vehicle reversing safety using multi-sensors and pre-collision technology has been extensively developed and implemented. We mainly devote this paper to multi-sensors and key information processing in efforts to enhance safety while reversing.

1.1. Multi-Sensor Environmental Perception for Vehicle Reversing

Pre-collision detection using multi-sensor technology, both moving object detection [1] and the vehicle parking assistant [9,12,13,14], is an important part of a reversing vehicle’s active safety system. These systems use multiple sensors, such as RADAR, LIDAR, or GPS technology and a camera, to perceive the current traffic situation for vehicle collision detection and reversing safety [11]. Another example of multiple sensors, including motion sensors (accelerometer, gyroscope, and magnetometer), wireless signal strength indicators (WiFi, Bluetooth, and Zigbee), and visual sensors (LIDAR and camera), is used in a hidden Markov model (HMM) framework for mobile-device user-positioning [15]. A new integrated vehicle health maintenance system (IVHMS) [16], equipped with gear sensors, engine sensors, and fuel and electrical sensors, is reported. The different kinds of sensors mentioned in the above three papers perceive different information and so provide the whole state of the system. Some information, for example, images of a target and the distance between two objects, can be obtained by different sensors. As described in [17], the authors presented multi-sensors of eight thermal infrared and panchromatic images to gain better results than an individual sensor. All of these efforts aim to improve the performance of environmental perception. The authors of [18] described a probabilistic analysis of dynamic scenes and collision risk assessment to improve driving safety by means of sensor data (LIDAR-based sensors). Among the multiple sensors, stereo vision stands out because it is not limited to plane vision and gives precise measurements. Stereo vision can combine the views from the left and right cameras to obtain a 3D view of an object [19]. Owing to these features, stereo vision can be used to detect object distance and track obstacles and potential collisions for a reversing or parking vehicle [11,13,20,21].

According to [15], multiple sensors might offer erroneous or inconsistent information. So, in order to identify and eliminate spurious data with high accuracy, information from multiple sensors must be processed properly [22]. However, since the different sensors measure different physical phenomena, it is not easy to fuse the information effectively to obtain a better result. One of the major problems in multi-sensor information fusion is that the sensors frequently provide spurious observations that are useless for prediction and modeling. Thus many researchers have attempted to settle this problem by developing fusion systems and a fusion framework [23] based on the information obtained from multi-sensors. In [24], the authors presented a unified sensor fusion strategy based on a modified Bayesian approach that can automatically identify the inconsistency in sensor measurements. Other authors presented a sensor-fusion module with integrated vision, Global Positioning Systems (GPSs), and Geographical Information Systems (GIS) [23]. GPS and GIS provide prior knowledge about the road for the vision module. Beyond positioning systems, information fusion can be used in different fields. A human detection system that can be employed on board for autonomous surveillance was proposed in [25] based on the fusion of two sensor modules, one for a laser and another for visual data. A consensus-like distributed fusion scheme [26] is presented for multiple stationary ground targets by a group of unmanned aerial vehicles with limited sensing and communication capabilities. Some more popular methods for multi-sensor fusion are explored extensively in the literature, including fuzzy logic [27], neural networks [28], genetic algorithms [29], and Bayesian information fusion [30].

1.2. Target Recognition and Tracking of Vehicle Safety

Target recognition and tracking plays an irreplaceable role in vehicle reversing safety. The fundamental problem is how to recognize and track the target in a changing environment while the driver is reversing.

In the process of target recognition and tracking, balancing the competing demands of tracking speed, precision, and robustness [31] is always the focus. For real-time target tracking using MAVs, paper [32] concentrates on the development of a vision-based navigation system. It is shown to be a realistic and cost-effective solution. On the other hand, some authors presented a robust feature matching-based solution to real-time target recognition and tracking [33] under large-scale variation using affordable memory consumption. The results show that the method performs well. Other researchers were devoted to designing an experimental setup for human-robot interaction [34] in a surveillance robot. This is helpful in dangerous or emergency situations, such as earthquakes and fires, for tracking the targeted person in a robust manner indoors and outdoors under different light and dynamic conditions.

Moving object detection by a backup camera mounted on a vehicle was proposed in [1]. The authors presented a procedure using traditional moving object detection methods for relaxing the stationary camera’s restriction, by introducing additional steps before and after the detection. The target application was to use a road vehicle’s rear-view camera systems. Unlike the algorithm in paper [1], the authors in [35] used the frame difference method to recognize a regular moving target and the Camshift algorithm to track a significant moving target. In fact, in the application of target recognition and tracking of a vehicle, there is more than one target that needs to be recognized and tracked. This increases the work burden of the system. In [36], a multi-target tracking algorithm aided by high-resolution range profile (HRRP) was proposed. The problem of multi-target data association was simplified to multiple sub-problems of data association for a single target. Similarly, a preceding vehicle detection and tracking adaptive to illumination variation in night traffic scenes was presented based on relevance analysis in the literature [4]. The test results indicate that the proposed system could detect vehicles quickly, correctly, and robustly in actual traffic conditions with illumination variation, which was helpful for vehicle safety. A performance evaluation of vehicle safety strategies for reversing speed was proposed [5]. In [9], a vision-based top-view transformation model for a vehicle parking assistant was presented. A novel searching algorithm estimates the parameters that are used to transform the coordinates from the source image. Using that approach, it is not necessary to provide any interior and exterior orientation parameters of the camera for a parking assistant. Visual sensor-based road detection for field robot navigation was proposed in [18]. 
The authors presented a hierarchical visual sensor-based method for robust road detection in challenging road scenes. The experimental results show that the proposed method exhibits high robustness. The vision system of a surface moving platform is an important piece of equipment for avoidance, target tracking, and recognition. Paper [37] mainly discussed the feature extraction and recognition methods of multiple targets. That paper proposes an image-based detection and tracking method using adaptive sparse representation. The results show that the recognition rate exceeds 90%, which indicates good performance. In [38], the authors presented a stereo vision-based vehicle detection system on the road using a disparity histogram. Their system can be viewed as three main parts: obstacle detection, obstacle segmentation, and vehicle detection, with a 95.5% average detection rate.

Based on sparse expression, researchers built a new adaptive sparse expression [39] theory system, improved the robustness of the image recognition, and promoted target tracking technology innovation. This system has wide application potential. Some robust visual tracking and vehicle classifications have been proposed in [40,41,42,43].

1.3. Reversing Speed Control for Vehicle Safety

In [44], model predictive control (MPC) was used to compute the spacing-control laws for transitional maneuvers of vehicles. Drivers may adapt to the automatic braking control feature available on adaptive cruise control (ACC) in ways unintended by designers [45]. In [46], an autonomous reverse parking system was presented based on robust path generation and improved sliding mode control for vehicle reversing safety. Their system consists of four key parts: a novel path-planning module; a modified sliding mode controller on the steering wheel; image processing and real-time estimation of the vehicle’s position; and a robust overall control scheme. The authors of [14] presented a novel vehicle speed control method based on driver vigilance detection using EEG and sparse representation. The scheme described in that paper has been implemented and successfully used to reverse the vehicle. In [47], a Bayesian network is used to detect human action to reduce reversing traffic accidents. The authors used Lidar and wheel speed sensors to detect environmental situations. In [48], robust trajectory tracking for a reversing tractor trailer system was proposed. Other studies address vehicle reversing speed control by means of neural networks [49], fuzzy control [50], and human–automation interaction [18,51].

Despite successful utilization of the existing approaches and systems, a variety of factors in vehicle reversing safety systems still challenge researchers. Many studies have been conducted on reversing safety systems, focusing on three main problems: (1) how to find smaller size, higher reliability, and lower cost multi-sensors that are suitable to environmental perception; (2) how to realize target recognition and tracking based on information fusion for different physical phenomena measured by the multi-sensors; (3) how to realize the vehicle reversing speed control strategy based on multi-sensor environmental perception and object tracking for preventing collisions in realistic conditions?

In this paper, we introduce a multi-sensor environmental perception method using low-rank representation and a particle filter for vehicle reversing safety. A multi-sensor environmental perception module based on a binocular-camera system and ultrasonic range finders is used to acquire the distance of an obstacle behind the vehicle. The information fusion algorithm using adaptive Kalman filter is employed to process the data obtained by the binocular vision and ultrasonic sensors. The framework of particle filter and low-rank representation is used to track the main obstacles. After obstacle detection and tracking, the vehicle reversing control strategy takes steps to avoid reversing collisions.

The rest of the paper is organized as follows. In Section 2, we present the general system architecture of our proposed system. Section 3 focuses on multi-sensor environmental perception for vehicle reversing. Target recognition and tracking are developed in Section 4, and vehicle reversing speed control strategies are described in Section 5. Section 6 is devoted to the system simulation and validation. Finally, some conclusions are provided in Section 7.

2. System Architecture

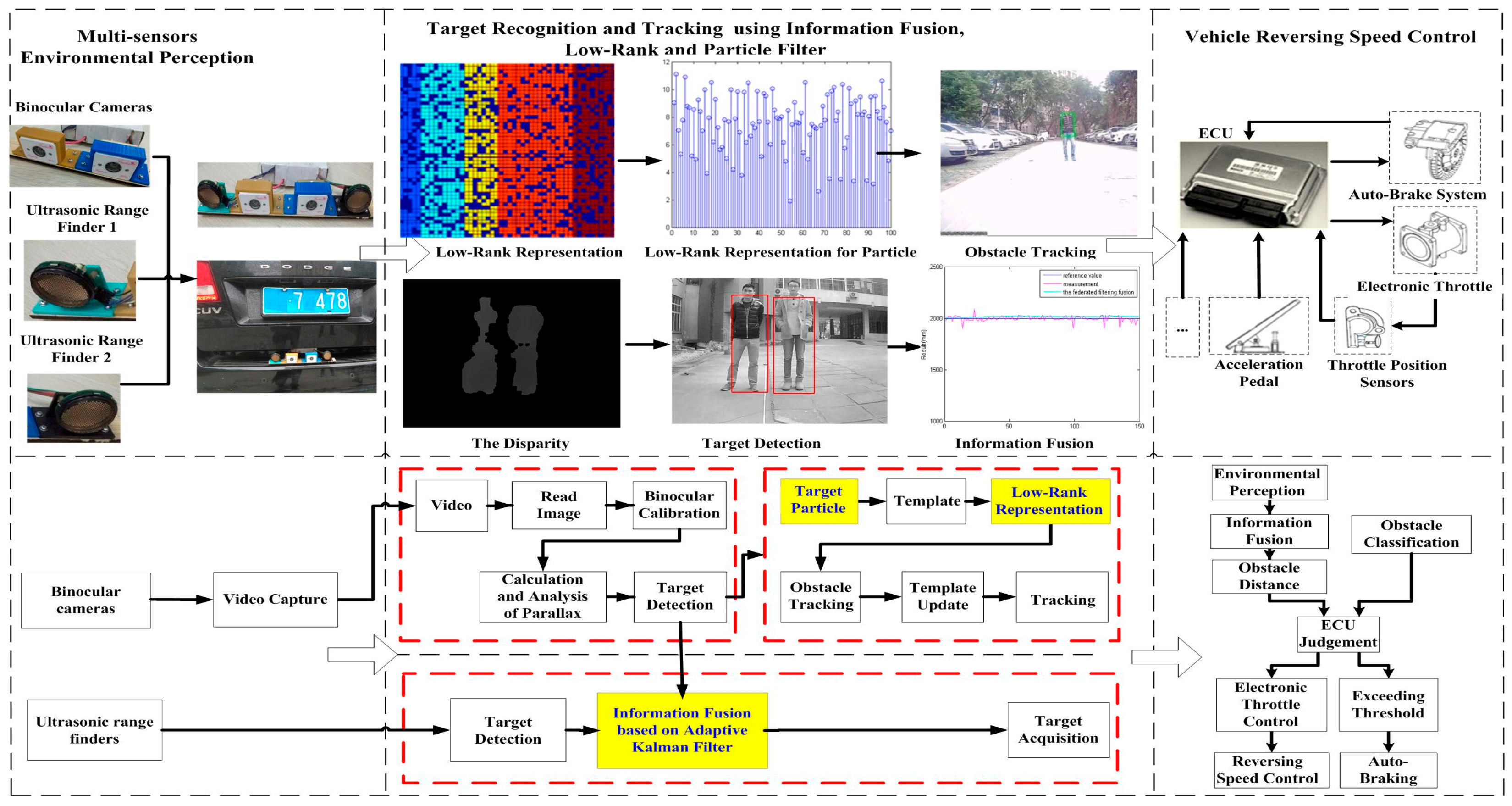

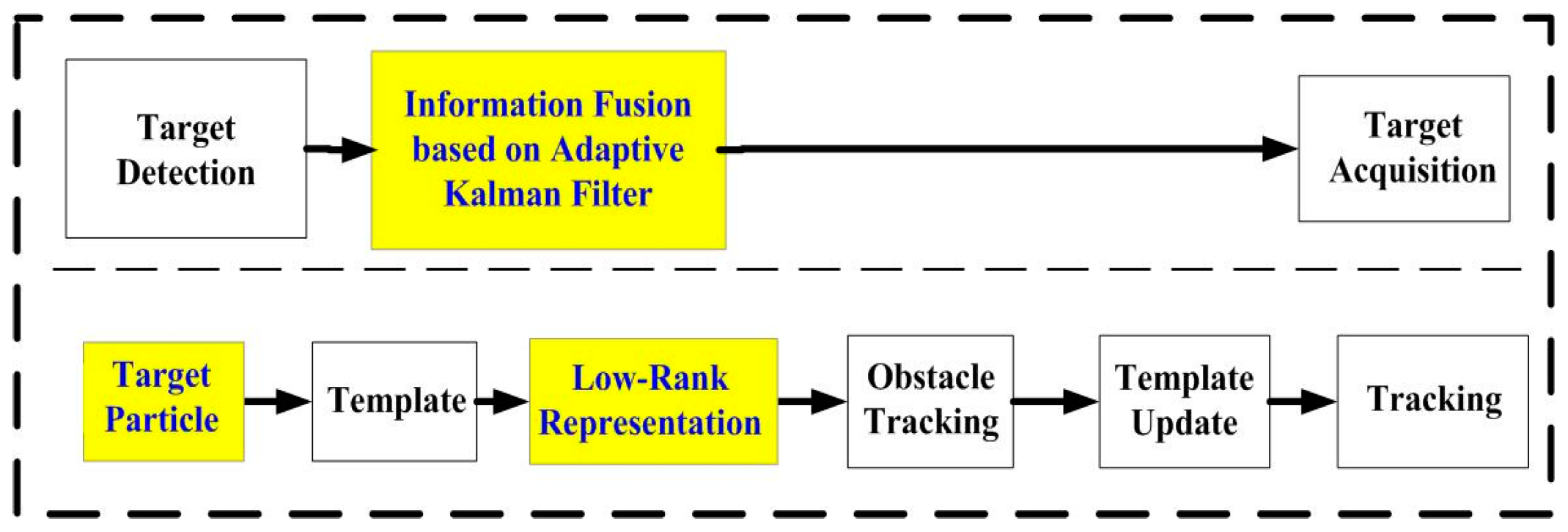

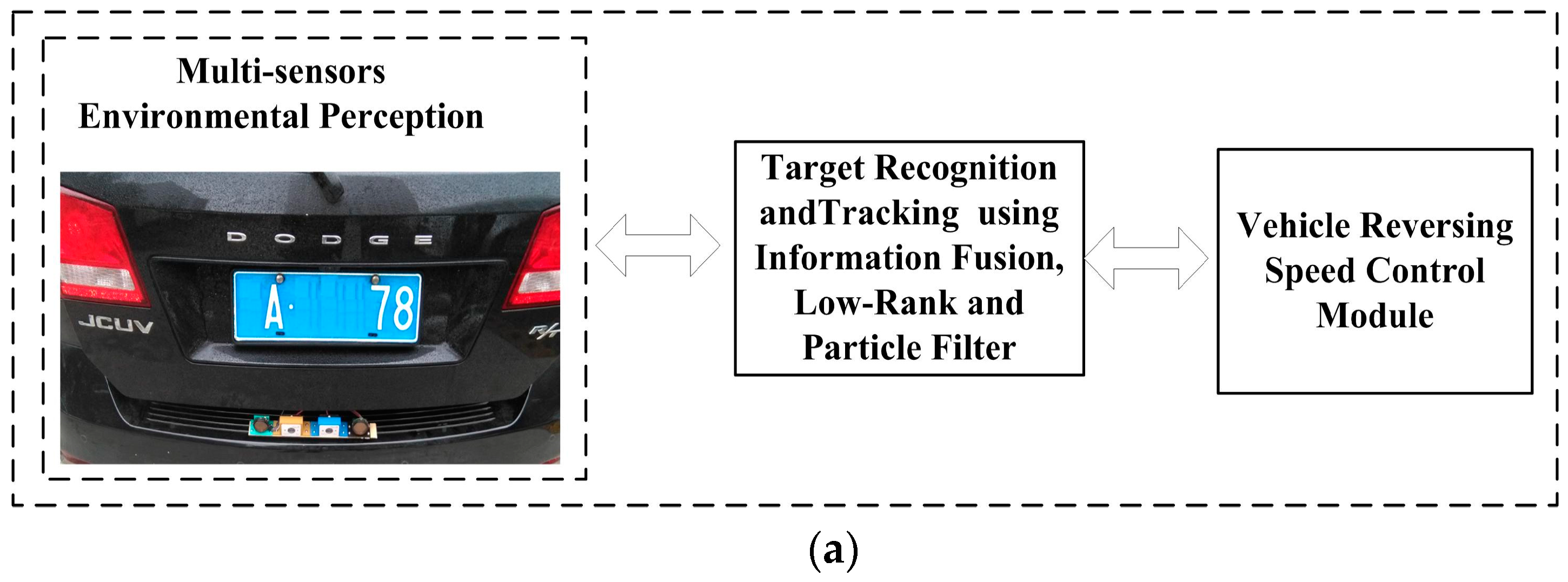

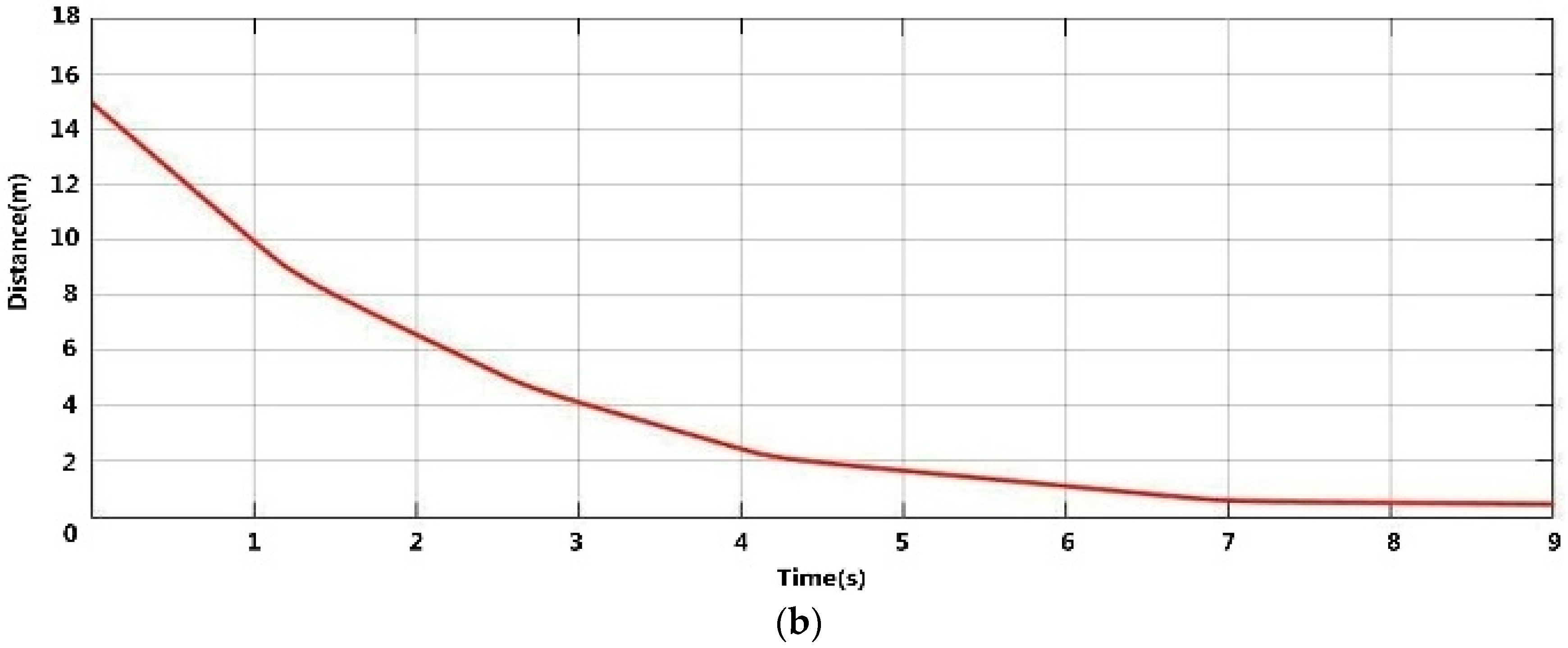

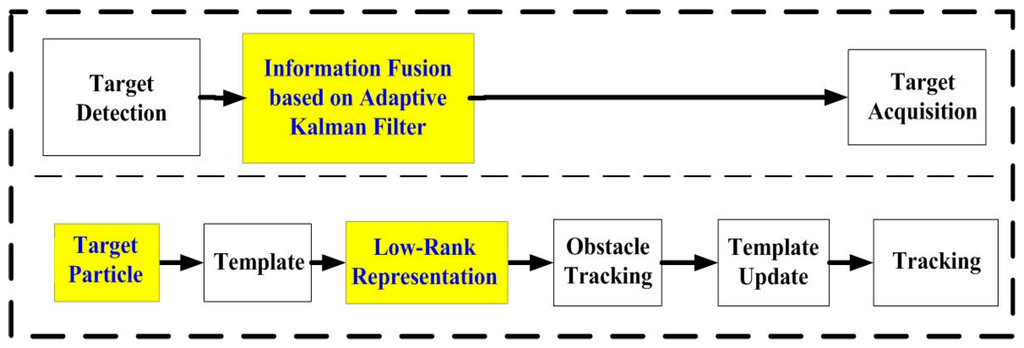

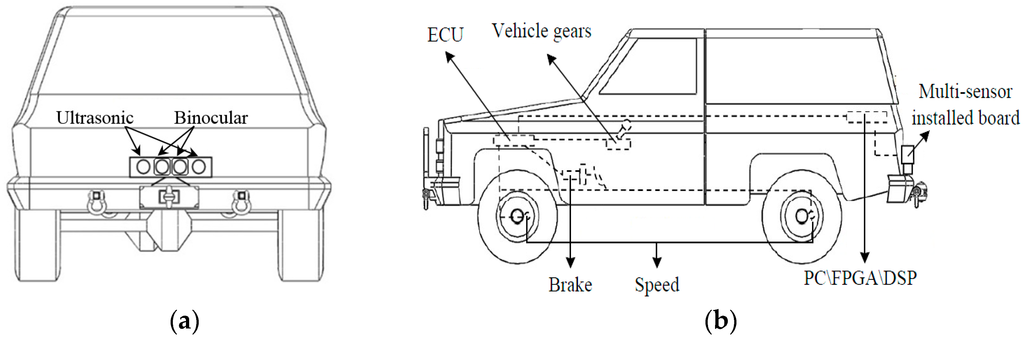

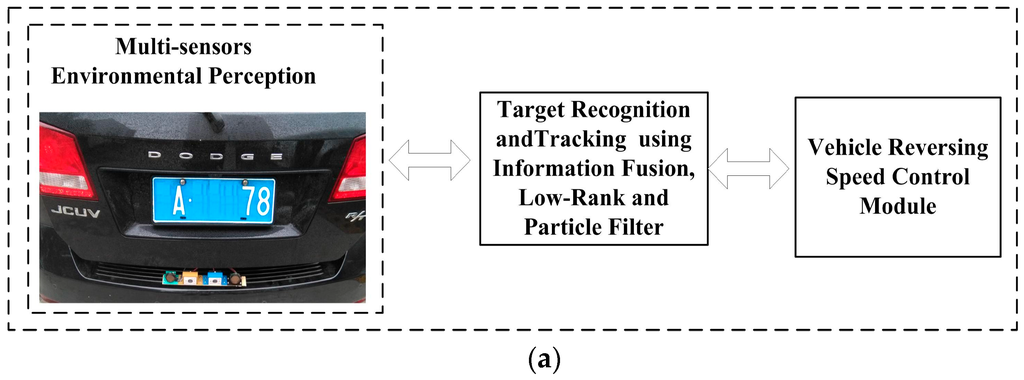

The general architecture of our system, as shown in Figure 1, is made up of multi-sensor environmental perception, target recognition and object tracking, and vehicle reversing speed control strategy.

Figure 1. Flowchart of the proposed system.

In the first step, when a vehicle is reversing, the Electronic Control Unit (ECU) receives the reversing information and automatically activates the multi-sensor environmental perception module to acquire rear-view information. The binocular cameras and two ultrasonic range finders then capture information about the complex reversing environment. From the captured images, we obtain the obstacle’s distance using disparity computation and triangulation. At the same time, the two ultrasonic sensors are used for rear collision intervention, giving the driver distance feedback for obstacles behind the vehicle. The left block of Figure 1 shows the binocular cameras and two ultrasonic sensors.

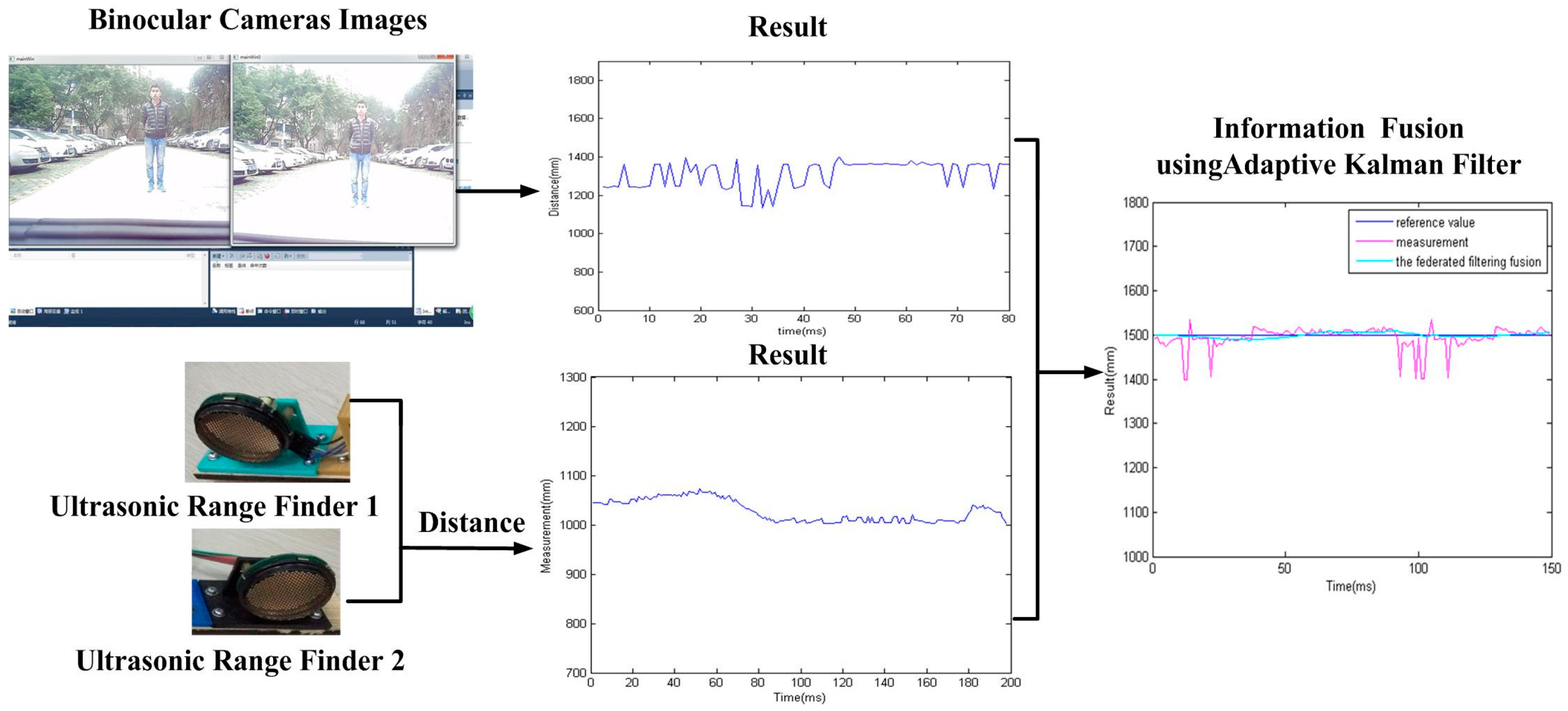

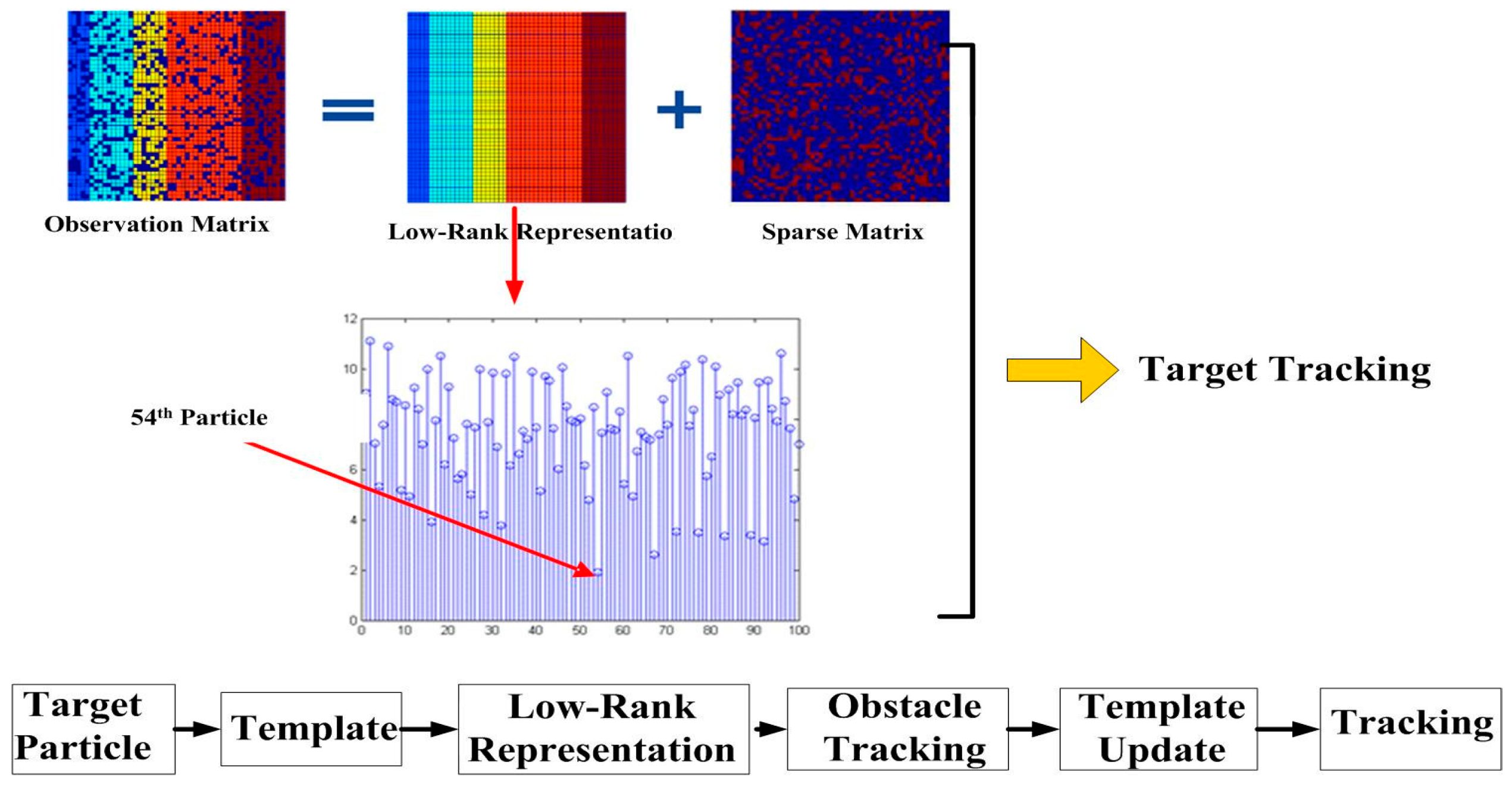

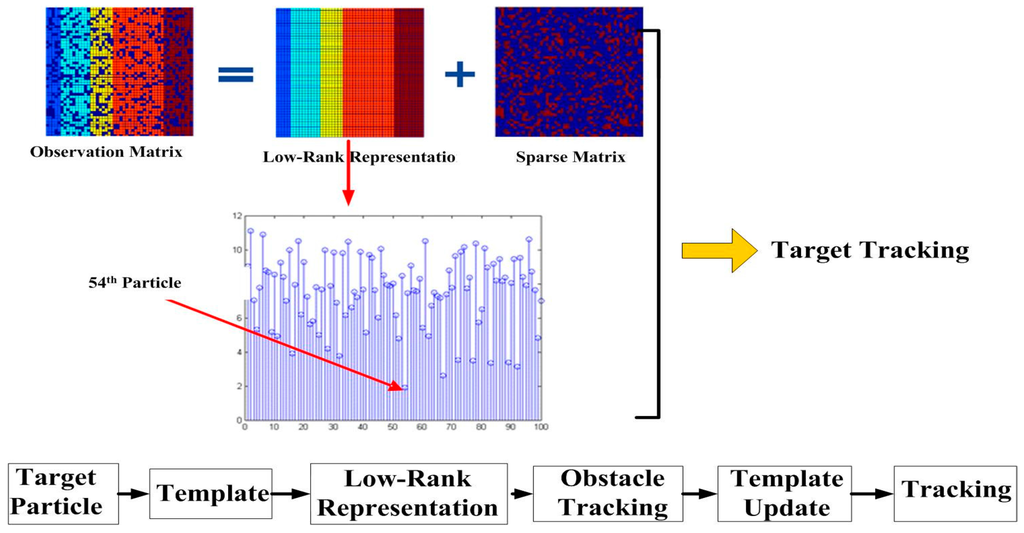

The second step includes the multi-sensor information fusion algorithm for obstacle detection, and target tracking using a particle filter and low-rank representation. As shown in the middle blocks of Figure 1 and in Figure 2, an adaptive Kalman filter is used to process the data from binocular vision and the ultrasonic sensors. The result of multi-sensor information fusion is very important for a vehicle to keep a safe distance from obstacles. A novel framework of a particle filter based on low-rank representation is used to track the main obstacles for vehicle reversing, as shown in the middle block of Figure 1. In this paper, we introduce a low-rank matrix in the particle filter to choose an optimal objective particle template with the smallest L-1 norm. As shown in Figure 3, by combining low-rank representation with each target particle, we eventually choose the particle in the candidate set that has the smallest difference from the target templates. The proposed obstacle tracking algorithm can successfully track the obstacle when the vehicle is reversing.

Figure 2. Process of information fusion.

Figure 3. A novel target tracking algorithm in our proposed approach.

The final step is the vehicle reversing speed control strategy, shown in the right block of Figure 1. After target recognition and tracking, the ECU of the vehicle controls the reversing speed for vehicle safety. Based on the information fusion of multi-sensor environmental perception and obstacle tracking, the ECU judges the safe distance for reversing. If a danger is detected, the ECU controls the speed of the vehicle to avoid a reversing collision.

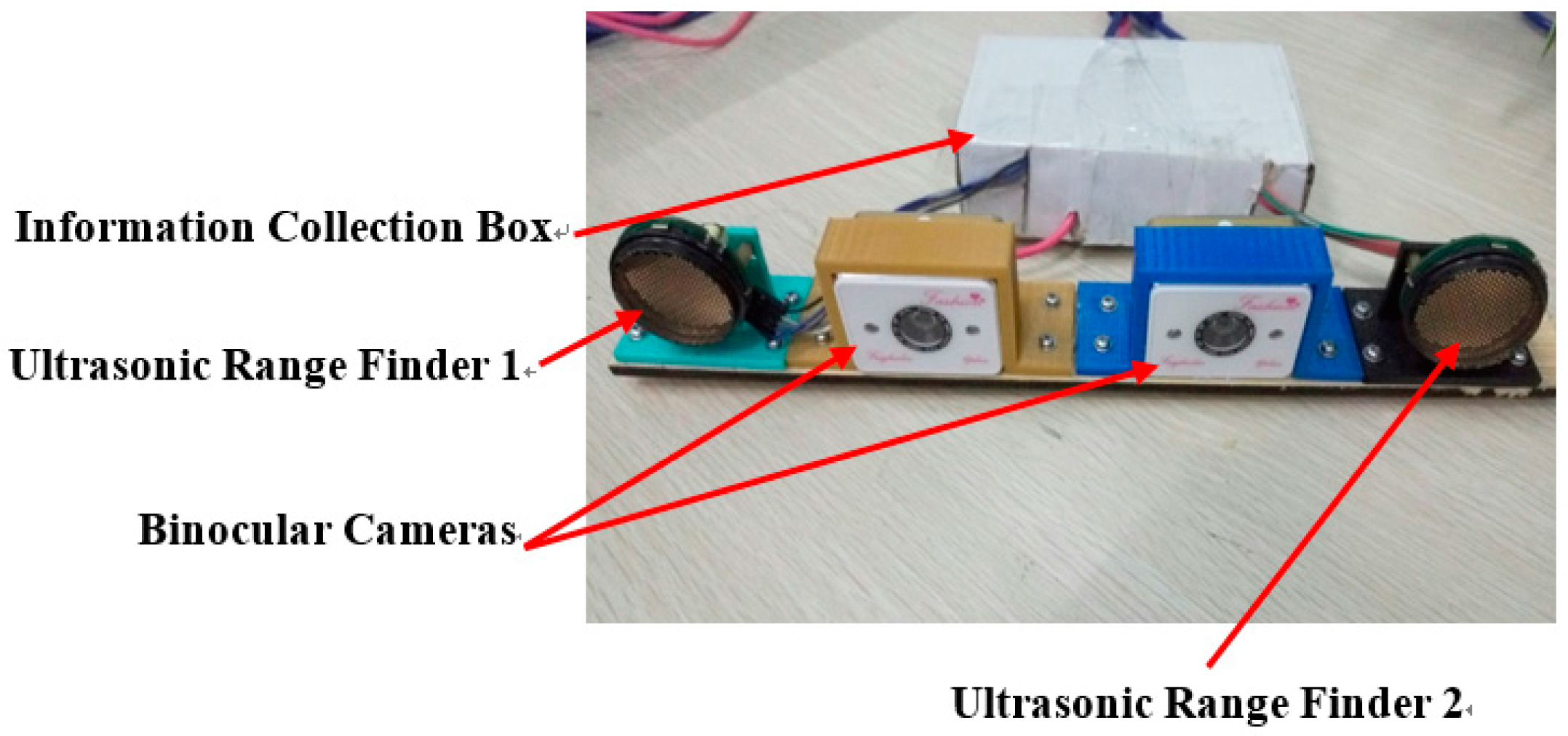

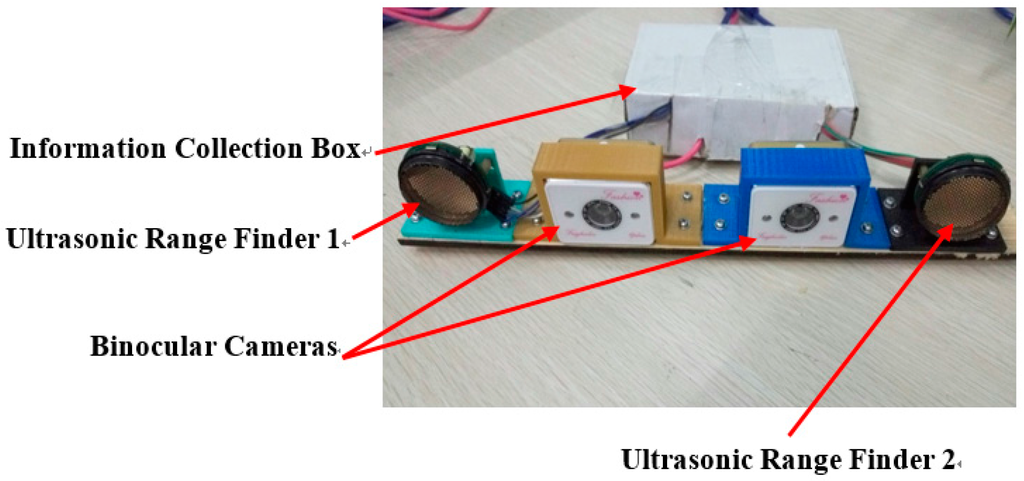

3. Multi-Sensor Environmental Perception

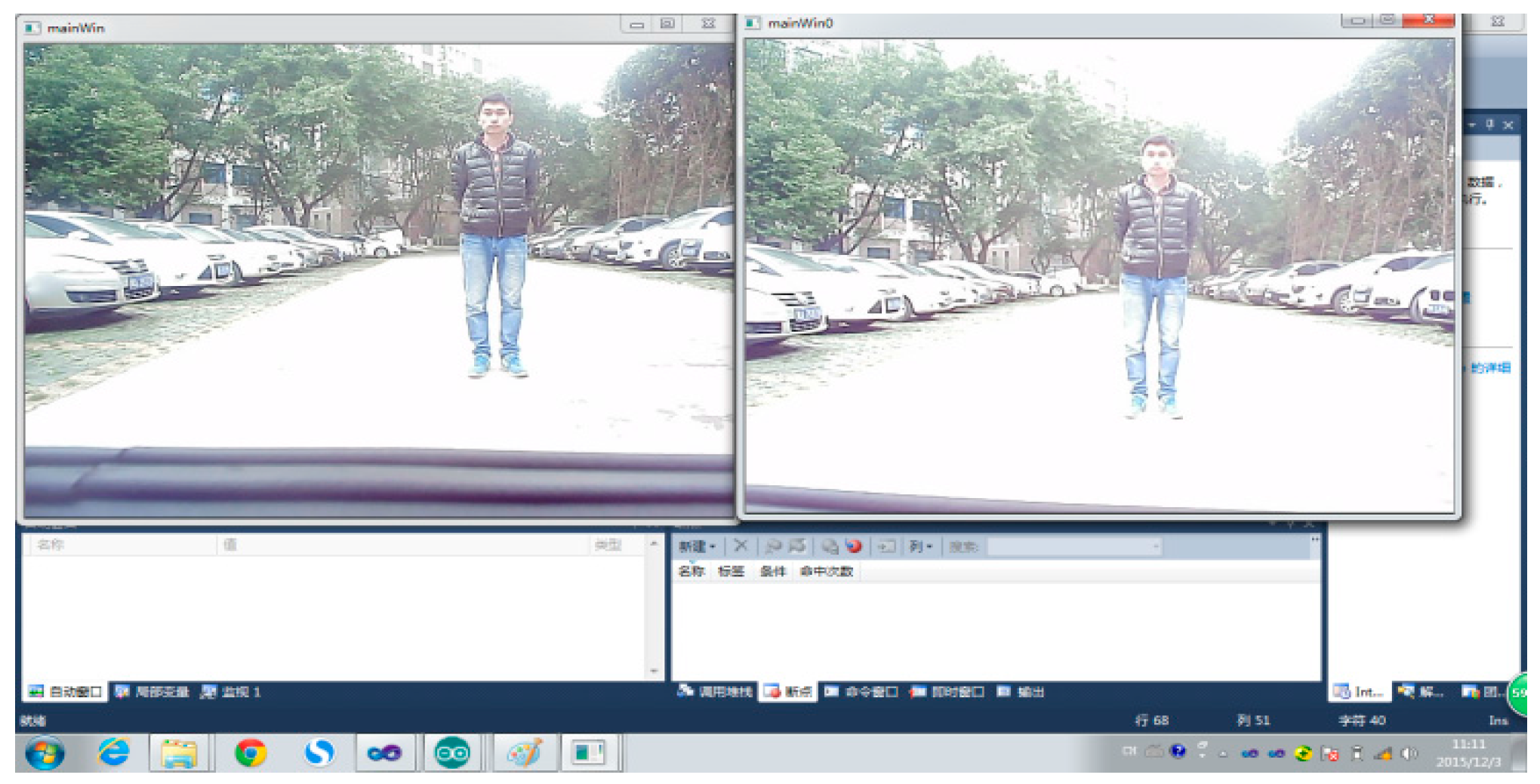

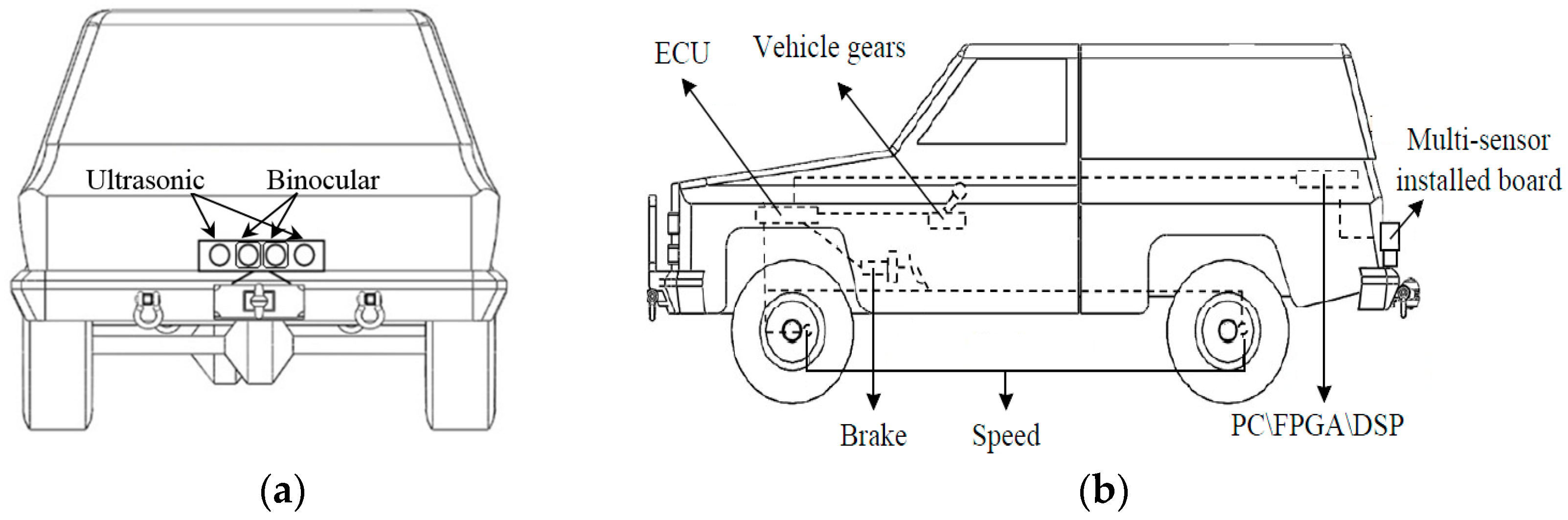

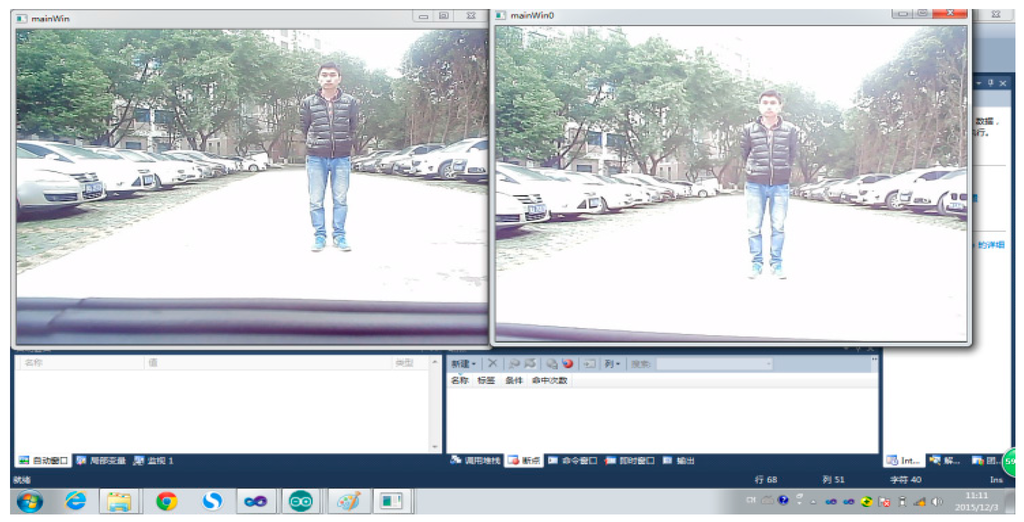

In this section, two types of sensors, ultrasonic range finders and binocular cameras, are used to perceive the environment around a vehicle. Two ultrasonic range finders measure the distance to obstacles, and the binocular cameras capture vision and distance information about the obstacles. We integrate the two sensor types on one board as a vehicle reversing multi-sensor, as shown in Figure 4. The images from the binocular cameras are shown in Figure 5.

Figure 4. Installation board of multi-sensors.

Figure 5. The results of binocular cameras when a vehicle is reversing.

3.1. Obstacle Detection Based on Binocular Cameras

Stereo vision technology is widely used in machine vision. With two images, one from the left and one from the right, the approximate 3D geometry, distance, and position of an object can be obtained. In this paper, videos captured by the binocular cameras in Figure 4 are used not only as visual information for the driver, but also as input to the ECU for vehicle reversing safety.

3.1.1. Binocular Stereo Calibration

In order to get the depth of an obstacle, key parameters are introduced to solve the 3D depth computation of a target for binocular stereo rectification [52] of the binocular cameras. To get these parameters, images are calibrated using methods given in the literature [5]. For binocular stereo rectification, the parameters are defined as follows:

- M: intrinsic matrix, a 3 × 3 matrix containing the camera normalized focal lengths and optical center.

- $f_x$, $f_y$: camera normalized focal lengths.

- $c_x$, $c_y$: camera normalized optical center.

- d: distortion vector, a 5 × 1 vector.

- $k_1$, $k_2$, $k_3$: radial distortion parameters.

- $p_1$, $p_2$: tangential distortion parameters.

- R: rotation matrix, a 3 × 3 matrix made up of three 3 × 1 vectors.

- $r_1$, $r_2$, $r_3$: rotation matrix vectors.

- t: translation vector, a 3 × 1 vector of three translation parameters.

- $t_1$, $t_2$, $t_3$: translation parameters.

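We can find the relation between the real-world plane coordinates (X, Y) and the camera coordinates (x, y) using the above parameters:

$$s \begin{bmatrix} x \\ y \\ 1 \end{bmatrix} = M \begin{bmatrix} r_1 & r_2 & r_3 & t \end{bmatrix} \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix} = M \begin{bmatrix} r_1 & r_2 & t \end{bmatrix} \begin{bmatrix} X \\ Y \\ 1 \end{bmatrix} \qquad (1)$$

where s is a scale ratio and r3 can be removed because the depth Z = 0 on the calibration plane. The coordinates are then corrected for lens distortion using a Taylor series expansion around r = 0:

$$\begin{bmatrix} x_c \\ y_c \end{bmatrix} = \left(1 + k_1 r^2 + k_2 r^4 + k_3 r^6\right) \begin{bmatrix} x_p \\ y_p \end{bmatrix} + \begin{bmatrix} 2 p_1 x_p y_p + p_2 \left(r^2 + 2 x_p^2\right) \\ p_1 \left(r^2 + 2 y_p^2\right) + 2 p_2 x_p y_p \end{bmatrix} \qquad (2)$$

where ($x_p$, $y_p$) is a coordinate before correction, ($x_c$, $y_c$) is the corrected coordinate for stereo rectification, and $r^2 = x_p^2 + y_p^2$. A single camera can be calibrated using Equations (1) and (2).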
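As an illustration of the distortion correction step, the sketch below evaluates the radial and tangential polynomial for one normalized image point. It assumes the standard radial-tangential lens model with three radial and two tangential coefficients; the function name `correct_distortion` and the sample coefficient values are hypothetical and are not taken from the original calibration.

```python
def correct_distortion(x, y, k1, k2, k3, p1, p2):
    """Evaluate the radial-tangential model for a normalized point (x, y).

    Assumed model (not confirmed by the paper): three radial terms
    (k1, k2, k3) and two tangential terms (p1, p2).
    """
    r2 = x * x + y * y                                   # squared radius r^2
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_c = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_c = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_c, y_c


if __name__ == "__main__":
    # Hypothetical coefficients, for illustration only.
    print(correct_distortion(0.5, 0.0, k1=0.1, k2=0.0, k3=0.0, p1=0.0, p2=0.0))
```

With all coefficients set to zero the point is returned unchanged, which is a quick sanity check for any calibration result.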

At the same time, two further parameters, the rotation matrix $R_s$ and the translation vector $T_s$, which align the two cameras, are computed as in Equation (3):

$$P_l = R_s^{T} \left(P_r - T_s\right) \qquad (3)$$

where $P_r$ and $P_l$ are the right and left camera coordinates, respectively. A 3D point P can be projected into the left and right cameras as in Equations (4) and (5) according to the above single camera calibration:

$$P_l = R_l P + T_l \qquad (4)$$

$$P_r = R_r P + T_r \qquad (5)$$

where $R_l$ is the rotation matrix of the left camera and $R_r$ is the rotation matrix of the right camera, and $T_l$ and $T_r$ are the translation vectors of the left and right cameras. $R_s$ and $T_s$ can be calculated using Equations (3)–(5): eliminating P gives $R_s = R_r R_l^{T}$ and $T_s = T_r - R_s T_l$.
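The relation between the two single-camera calibrations and the stereo pair can be checked numerically. The sketch below assumes each camera maps a world point P to its own frame by a rotation followed by a translation, in which case the camera-to-camera rotation is R_r R_l^T and the translation is T_r − R_s T_l. The helper names and the sample poses are hypothetical.

```python
def transpose(A):
    return [list(col) for col in zip(*A)]


def mat_mul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def mat_vec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def stereo_extrinsics(R_l, T_l, R_r, T_r):
    """Return (R_s, T_s) such that P_r = R_s * P_l + T_s.

    Assumes P_l = R_l * P + T_l and P_r = R_r * P + T_r.
    """
    R_s = mat_mul(R_r, transpose(R_l))
    rotated = mat_vec(R_s, T_l)
    T_s = [tr - x for tr, x in zip(T_r, rotated)]
    return R_s, T_s


if __name__ == "__main__":
    # Hypothetical poses: left camera rotated 90 degrees about z, right camera at the origin.
    R_l = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
    T_l = [1, 2, 3]
    R_r = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    T_r = [0, 0, 0]
    print(stereo_extrinsics(R_l, T_l, R_r, T_r))
```

In practice these quantities would come from the calibration routine itself; the sketch only shows that the closed form is consistent with the two projection equations.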

3.1.2. Binocular Stereo Rectification and Stereo Correspondence

When the binocular camera images are obtained, the next steps are to rectify binocular stereo and establish stereo correspondence. Stereo rectification is used to remove the distortions and turn the stereo binocular images into a standard aligned form utilizing the calibration results. This is an important step for calculating the disparity of binocular images for vehicle reversing. Open Source Computer Vision Library (OpenCV) [53] is introduced to rectify the binocular images.

For binocular stereo rectification and stereo correspondence, the parameters are defined as follows:

- : the segment which is used to obtain the minimization of reprojection distortion

- $R_l$ and $R_r$: the rotation matrices

- $M_l$ and $M_r$: intrinsic matrices.

After the corresponding points are found, the disparity can easily be calculated. A disparity optimization algorithm is also used to remove bad matching points.

After stereo rectification and correspondence, we compute the disparity of the binocular images. The disparities of different targets that have a different distance to the binocular cameras usually contain the edge information of the targets, which can be used for separating targets from others. This important characteristic can be used for target recognition and tracking.

The disparity is usually affected by noise from lighting and occlusion. We must take measures to reduce this noise in order to obtain the edge information successfully. In our paper, operations such as dilation, erosion, and image binarization are used to settle this problem. After that, small regions that are noise still remain, so we choose the largest region as the target to track. To obtain the location of the target, we compute its bounding rectangle. The four corner points of this rectangle give the initial location for the particle filter, and the target chosen by binocular stereo vision serves as the particle template.
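The selection of the largest region and its bounding rectangle can be sketched as follows. This is a minimal pure-Python illustration that assumes the disparity map has already been binarized and cleaned by dilation and erosion; it uses 4-connectivity, and the function name `largest_region_bbox` is hypothetical. A production system would more likely rely on an image-processing library.

```python
from collections import deque


def largest_region_bbox(mask):
    """Return (x_min, y_min, x_max, y_max) of the largest 4-connected region of 1s.

    `mask` is a list of rows of 0/1 values; returns None if no pixel is set.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    best_size, best_box = 0, None
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill of one connected region.
            queue = deque([(r, c)])
            seen[r][c] = True
            size, x0, x1, y0, y1 = 0, c, c, r, r
            while queue:
                y, x = queue.popleft()
                size += 1
                x0, x1 = min(x0, x), max(x1, x)
                y0, y1 = min(y0, y), max(y1, y)
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if size > best_size:
                best_size, best_box = size, (x0, y0, x1, y1)
    return best_box


if __name__ == "__main__":
    demo = [
        [1, 0, 0, 0, 0],
        [0, 0, 1, 1, 0],
        [0, 0, 1, 1, 1],
        [0, 0, 0, 0, 0],
    ]
    print(largest_region_bbox(demo))
```

The isolated pixel in the top-left corner plays the role of residual noise and is ignored because its region is smaller.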

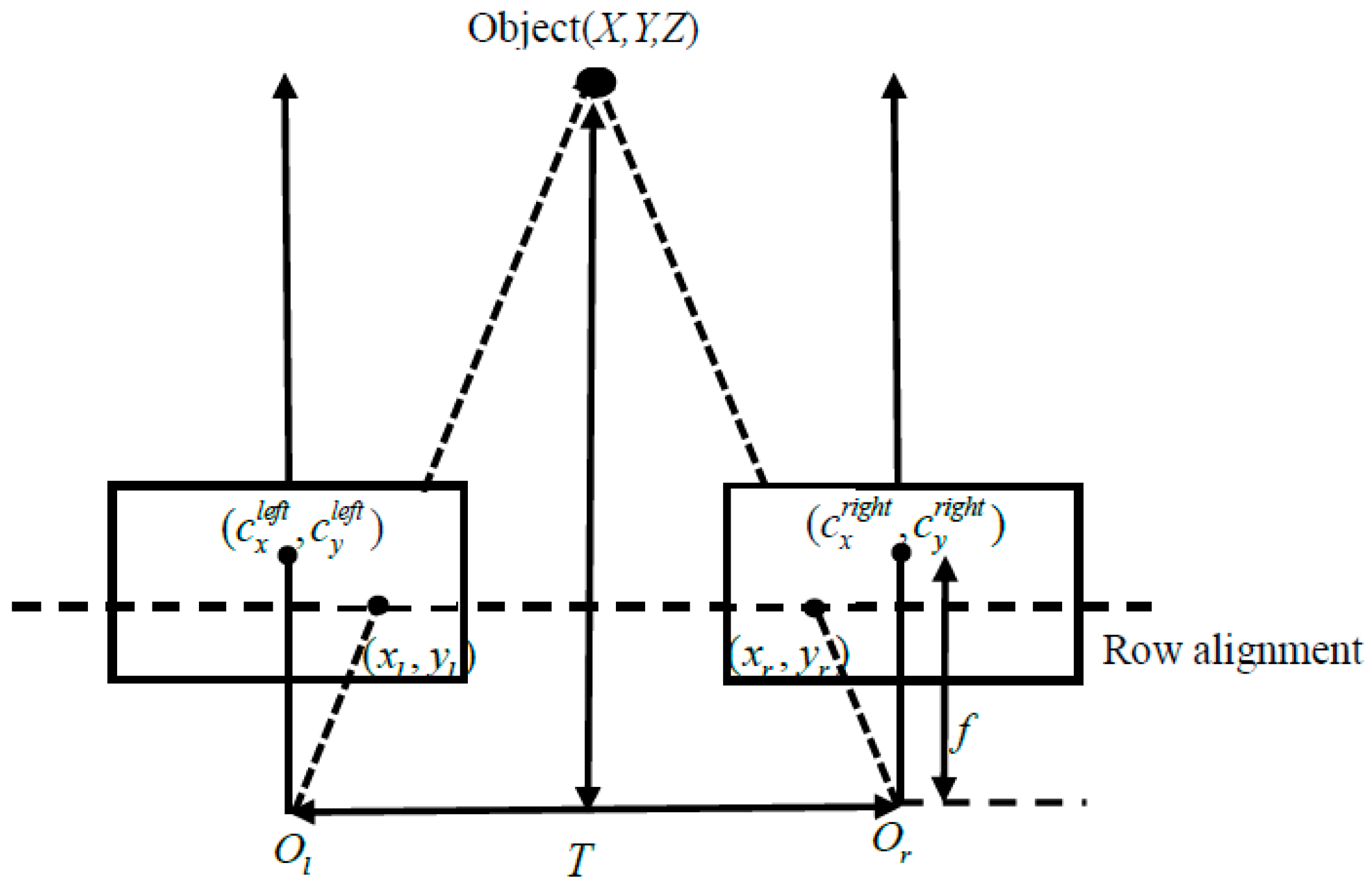

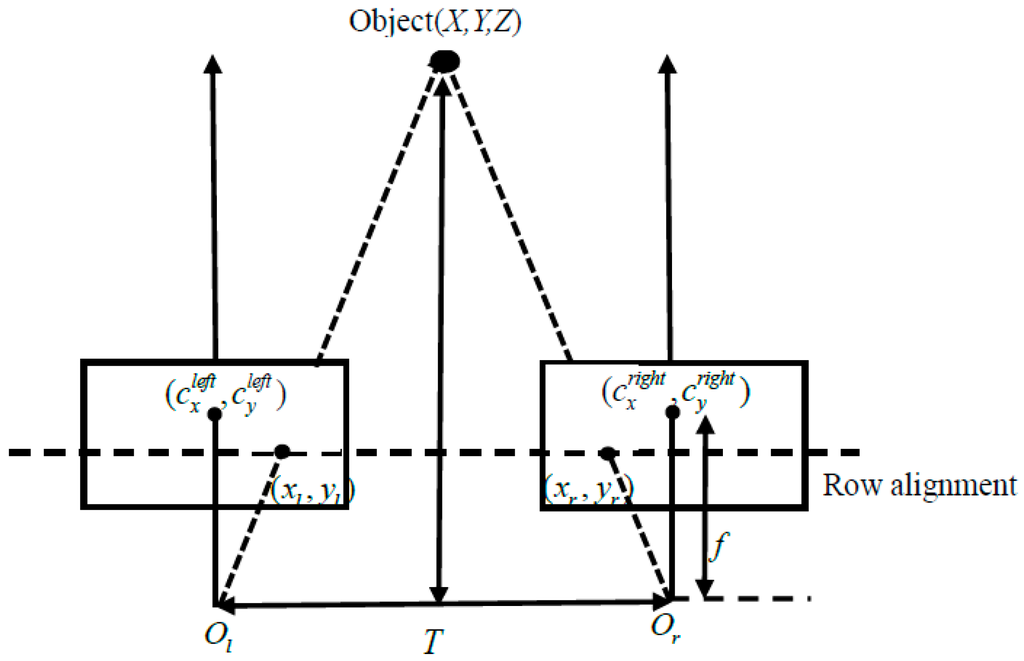

3.1.3. Binocular Triangulation

After binocular stereo rectification and correspondence, binocular triangulation is used to compute the position of a target in 3D space. A binocular stereo model is shown in Figure 6. The principles of binocular triangulation are given in Equations (6)–(8):

where all image coordinates are those corrected through the above steps. In Equation (7), T is the distance between the centers of the binocular cameras. The obstacle’s depth, width, and height can also be obtained from binocular vision for target detection.

Figure 6. Binocular triangulation model.
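For a rectified stereo pair, depth follows from similar triangles as Z = f T / (x_l − x_r), where f is the focal length in pixels, T is the baseline, and x_l − x_r is the disparity. The sketch below assumes this standard relation; the function name and the numeric values are hypothetical and do not come from the experimental setup.

```python
def depth_from_disparity(focal_px, baseline_m, x_left, x_right):
    """Depth of a matched point in a rectified stereo pair, in meters.

    Assumes the standard relation Z = f * T / (x_left - x_right).
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity


if __name__ == "__main__":
    # Hypothetical values: 800 px focal length, 0.10 m baseline, 40 px disparity.
    print(depth_from_disparity(800.0, 0.10, 420.0, 380.0))
```

Because depth is inversely proportional to disparity, distant obstacles produce small disparities and are therefore more sensitive to matching errors.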

3.2. Obstacle Detection Based on Ultrasonic Range Finders

An ultrasonic sensor finds the range of a target by capturing the reflected ultrasonic wave. An ultrasonic wave is a mechanical vibration at a frequency higher than that of audible sound. It is widely used because of its high frequency, short wavelength, low diffraction, and, especially, good directivity. However, the range of this sensor is limited to 0–10 m. In our proposed system, two ultrasonic range finders are installed on the rear board to provide the distance to an obstacle and instantaneous information. The ultrasonic range finder, KS109, is shown in Figure 4.
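The ranging principle can be sketched as a time-of-flight calculation: the pulse travels to the obstacle and back, so the distance is half the echo time multiplied by the speed of sound. The sketch below is a generic illustration and does not reproduce the KS109 interface; the speed of sound (343 m/s, roughly air at 20 °C) and the function name are assumptions, while the 10 m limit follows the range stated above.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at about 20 degrees C
MAX_RANGE_M = 10.0          # sensor range limit stated in the text


def echo_to_distance(echo_time_s):
    """Convert a round-trip echo time to a one-way distance in meters.

    Returns None when the computed distance exceeds the sensor range.
    """
    if echo_time_s < 0:
        raise ValueError("echo time cannot be negative")
    distance = SPEED_OF_SOUND_M_S * echo_time_s / 2.0
    return distance if distance <= MAX_RANGE_M else None


if __name__ == "__main__":
    print(echo_to_distance(0.01))  # echo after 10 ms
```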

4. Target Recognition and Tracking Based on Information Fusion and the Improved Particle Filter

The information fusion algorithm using an adaptive Kalman filter is employed to process the data obtained from binocular vision and ultrasonic sensors of the same obstacle at the same time. It improves the robustness of the sensors. Then the improved particle filter based on low-rank representation tracks the main obstacles. The low-rank representation is used to optimize an objective particle template that has the smallest L-1 norm. This optimization improves the tracking performance. The structure of the proposed algorithm for information fusion and tracking is shown in Figure 7.

Figure 7. Structure of proposed information fusion and tracking algorithm.

4.1. Data Fusion Based on Adaptive Kalman Filter

4.1.1. The Basic Principle and Structure of Adaptive Kalman Filter

In the Kalman filter model, the system is described by a linear stochastic difference equation:

$$X(k) = A X(k-1) + B U(k) + W(k) \qquad (9)$$

where X(k) represents the state of the system at moment k and U(k) is the control quantity for the current state. A and B are the parameters of the system, which vary from system to system. W(k) is the process noise.

The measurement is described by:

$$Z(k) = H X(k) + V(k) \qquad (10)$$

where Z(k) represents the measurement, H is the parameter of the measuring system, and V(k) is the measurement noise. Based on the model of the Kalman filter, the current state can be predicted from the previous state:

$$X(k|k-1) = A X(k-1|k-1) + B U(k) \qquad (11)$$

where X(k|k − 1) is the estimate based on the previous state and X(k − 1|k − 1) is the optimal result of the previous state. After the state is predicted, the covariance is updated using Equation (12). We use P to represent the covariance:

$$P(k|k-1) = A P(k-1|k-1) A^{T} + Q \qquad (12)$$

where covariance P(k|k − 1) corresponds to X(k|k − 1), P(k − 1|k − 1) corresponds to X(k − 1|k − 1), $A^{T}$ is the transpose of A, and Q represents the covariance of the process noise. The optimal value X(k|k) is obtained from the measurement of the system and the predicted value:

$$X(k|k) = X(k|k-1) + Kg(k) \left[ Z(k) - H X(k|k-1) \right] \qquad (13)$$

where Kg is the Kalman gain obtained by

$$Kg(k) = P(k|k-1) H^{T} \left[ H P(k|k-1) H^{T} + R \right]^{-1} \qquad (14)$$

with R the covariance of the measurement noise V(k). In order to keep the filter running, the covariance is updated through

$$P(k|k) = \left[ I - Kg(k) H \right] P(k|k-1) \qquad (15)$$

where I is the identity matrix. Equations (11)–(15) form the basic framework of the Kalman filter.
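The predict-and-update cycle of Equations (11)–(15) can be sketched for the scalar case, where every matrix reduces to a number. This is a minimal illustration assuming the standard Kalman filter form; the function name `kalman_step`, the default noise values, and the sample measurements are hypothetical and not tuned to the sensors used in this work.

```python
def kalman_step(x_prev, p_prev, z, a=1.0, b=0.0, u=0.0, h=1.0, q=1e-3, r=0.05):
    """One scalar Kalman filter cycle: predict, compute gain, update.

    q and r are the process and measurement noise variances (assumed values).
    """
    x_pred = a * x_prev + b * u                 # state prediction
    p_pred = a * p_prev * a + q                 # covariance prediction
    kg = p_pred * h / (h * p_pred * h + r)      # Kalman gain
    x_new = x_pred + kg * (z - h * x_pred)      # state update with the measurement
    p_new = (1.0 - kg * h) * p_pred             # covariance update
    return x_new, p_new


if __name__ == "__main__":
    # Hypothetical noisy distance readings (meters) around 2.0 m.
    x, p = 0.0, 1.0
    for z in [2.1, 1.9, 2.05, 1.98, 2.02]:
        x, p = kalman_step(x, p, z)
    print(x, p)
```

With a constant-distance model (a = 1, no control input), each step pulls the estimate toward the new measurement by a fraction given by the gain, and the covariance shrinks as more readings arrive.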

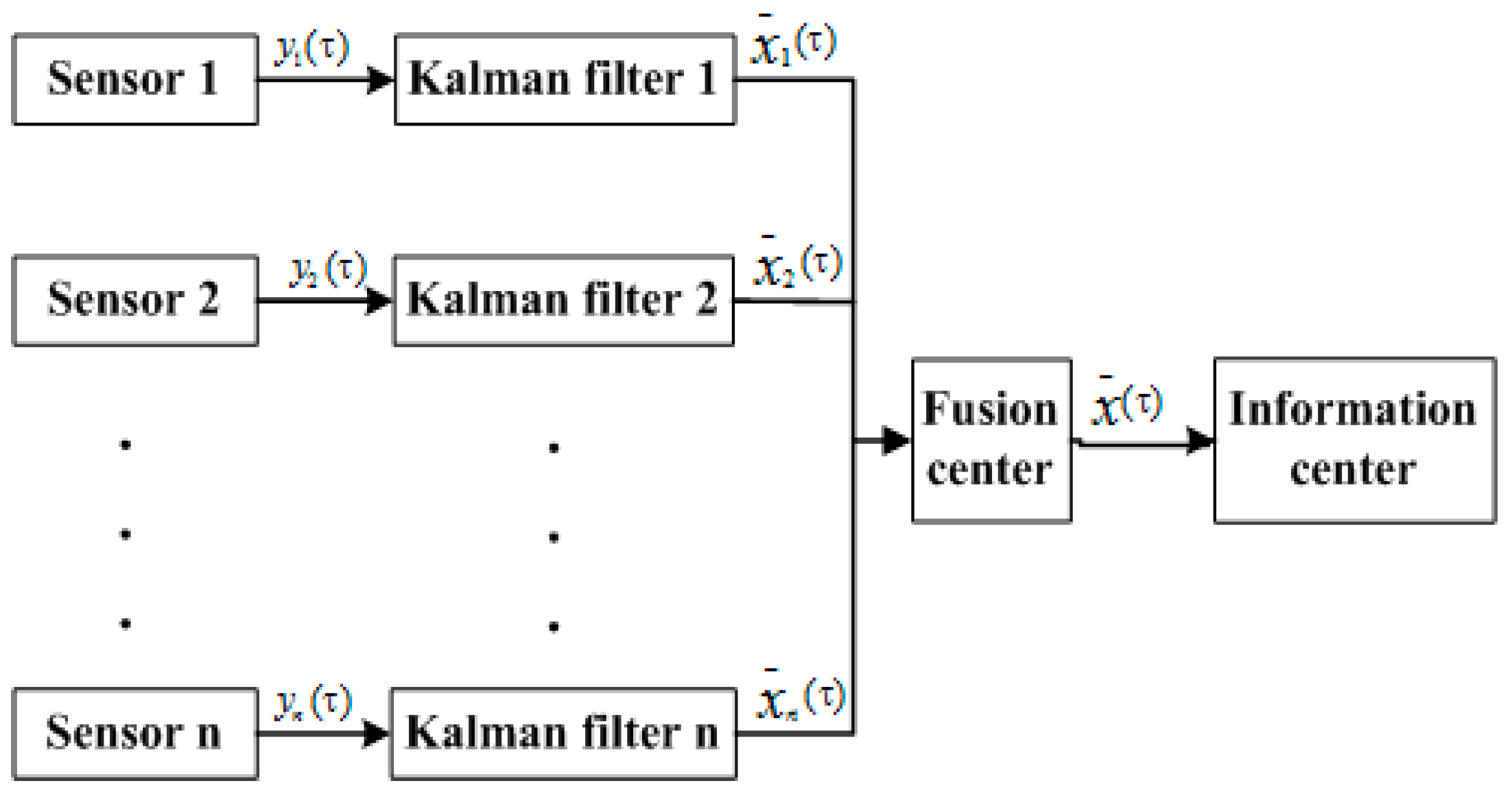

4.1.2. Information Fusion Based on Federated Kalman Filter

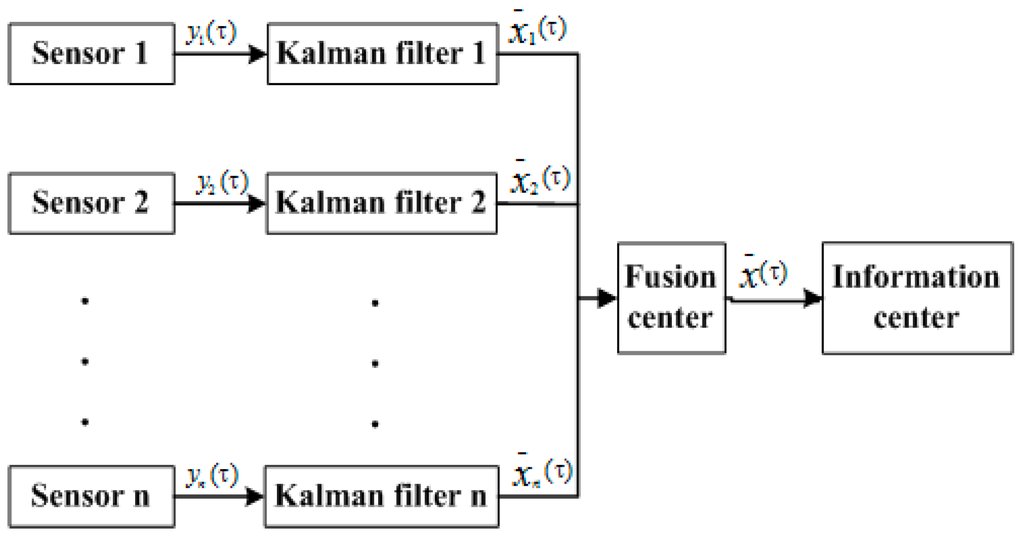

In this paper, a federated Kalman filter is used for multi-sensor information fusion, as in Figure 8. An adaptive federated filter is a decentralized scheme for distributing dynamic information. The dynamic information has two parts: information about the state equation and information about the observation equation.

Figure 8. Federated filter information fusion structure.

As shown in Figure 8, the output $\hat{X}_i$ and covariance $P_i$ of each sub-filter in federated filter fusion are local estimates based on the measurements of that subsystem. The outputs $\hat{X}_g$ and $P_g$, determined by all the subsystems of the filter, are the optimal estimates. Their relationship can be described as follows:

$$P_g^{-1} = \sum_{i=1}^{N} P_i^{-1}, \qquad \hat{X}_g = P_g \sum_{i=1}^{N} P_i^{-1} \hat{X}_i$$

After fusion, the main filter distributes the information back to each sub-filter. The principle of allocation can be described as:

$$\hat{X}_i = \hat{X}_g, \qquad P_i = \beta_i^{-1} P_g, \qquad Q_i = \beta_i^{-1} Q_g$$

The information-sharing parameter $\beta_i$ shown above should meet the following requirement:

$$\sum_{i=1}^{N} \beta_i = 1, \qquad 0 \le \beta_i \le 1$$

In practice, our multi-sensor system uses four physical sensors: the two cameras of the binocular pair and two ultrasonic range finders. The above equations should be adapted according to the state equation and measurement equation of the system.
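The fusion and allocation steps can be sketched for scalar estimates. The sketch assumes the usual information-weighted form of a federated filter, in which the fused inverse covariance is the sum of the local inverse covariances and the fused covariance is handed back to each sub-filter scaled by 1/β_i. The function names and the sample values are hypothetical.

```python
def fuse(local_estimates):
    """Fuse scalar local estimates given as (x_i, p_i) pairs.

    Returns the fused estimate x_g and fused covariance p_g.
    """
    information = sum(1.0 / p for _, p in local_estimates)
    p_g = 1.0 / information
    x_g = p_g * sum(x / p for x, p in local_estimates)
    return x_g, p_g


def share(x_g, p_g, betas):
    """Redistribute the fused result to the sub-filters.

    Each sub-filter receives x_g and the covariance p_g / beta_i;
    the sharing coefficients must sum to one.
    """
    if abs(sum(betas) - 1.0) > 1e-9:
        raise ValueError("information-sharing coefficients must sum to 1")
    return [(x_g, p_g / beta) for beta in betas]


if __name__ == "__main__":
    # Hypothetical distances (m) and variances from the vision and ultrasonic sub-filters.
    x_g, p_g = fuse([(2.0, 1.0), (4.0, 1.0)])
    print(x_g, p_g, share(x_g, p_g, [0.5, 0.5]))
```

A sub-filter with a smaller variance contributes more to the fused estimate, which is how the fusion limits the influence of the less reliable sensor at any given moment.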

4.2. Target Tracking Based on the Modified Particle Filter

4.2.1. Introduction of Particle Filter

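Framework: The particle filter, based on the Monte Carlo method, is widely used in many fields. The posterior state probability is obtained recursively through the following prediction and update steps:

$$p(x_k \mid y_{1:k-1}) = \int p(x_k \mid x_{k-1})\, p(x_{k-1} \mid y_{1:k-1})\, dx_{k-1}$$

$$p(x_k \mid y_{1:k}) = \frac{p(y_k \mid x_k)\, p(x_k \mid y_{1:k-1})}{p(y_k \mid y_{1:k-1})}$$

where $x_k$ is the state variable at time k, $y_k$ is an observation of $x_k$, and $p(y_k \mid y_{1:k-1})$ is a normalizing constant given in Equation (25):

$$p(y_k \mid y_{1:k-1}) = \int p(y_k \mid x_k)\, p(x_k \mid y_{1:k-1})\, dx_k \qquad (25)$$

By drawing N samples $\{x_k^i, i = 1, \ldots, N\}$ at time k from an importance distribution q(x), with weights $\{w_k^i, i = 1, \ldots, N\}$, the weights are approximated as follows:

$$w_k^i \propto w_{k-1}^i\, \frac{p(y_k \mid x_k^i)\, p(x_k^i \mid x_{k-1}^i)}{q(x_k^i \mid x_{k-1}^i, y_k)}$$

The optimal state is then approximated by the particle with the largest weight:

$$\hat{x}_k = \arg\max_{x_k^i} w_k^i$$

Model: The state $x_k$ contains the affine transformation parameters that convert the obstacle region to a fixed-size rectangle. The parameters in $x_k$ are independently drawn from a Gaussian distribution around $x_{k-1}$. The observation $y_k$ is the transformed image of the obstacle region, stretched into a column of constant size by using $x_k$. For details about the particle filter, please refer to the literature [5].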
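A one-dimensional sketch can make the propagate, weight, and select cycle concrete. It assumes the bootstrap choice of importance distribution (particles are drawn from the motion model, so the weight update reduces to multiplying by the likelihood), a Gaussian motion model, and a Gaussian likelihood. The scalar state stands in for the affine parameters, and all names and noise levels are hypothetical.

```python
import math
import random


def particle_filter_step(particles, weights, observation, rng,
                         motion_std=0.2, obs_std=0.5):
    """One bootstrap particle filter step for a scalar state.

    Returns (resampled_particles, uniform_weights, best_particle).
    """
    # Propagate: draw each particle from a Gaussian around its previous state.
    moved = [rng.gauss(p, motion_std) for p in particles]
    # Weight: previous weight times a Gaussian likelihood of the observation.
    raw = [w * math.exp(-0.5 * ((observation - p) / obs_std) ** 2)
           for w, p in zip(weights, moved)]
    total = sum(raw)
    n = len(moved)
    new_weights = [w / total for w in raw] if total > 0 else [1.0 / n] * n
    # Select the particle with the largest weight as the state estimate.
    best = max(range(n), key=lambda i: new_weights[i])
    estimate = moved[best]
    # Resample in proportion to the weights, then reset to uniform weights.
    resampled = rng.choices(moved, weights=new_weights, k=n)
    return resampled, [1.0 / n] * n, estimate


if __name__ == "__main__":
    rng = random.Random(0)
    particles = [rng.uniform(0.0, 5.0) for _ in range(500)]
    weights = [1.0 / 500] * 500
    for observation in [2.0] * 5:
        particles, weights, estimate = particle_filter_step(
            particles, weights, observation, rng)
    print(estimate)
```

In the tracker described here the likelihood would instead be derived from how well each candidate image patch matches the target templates.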

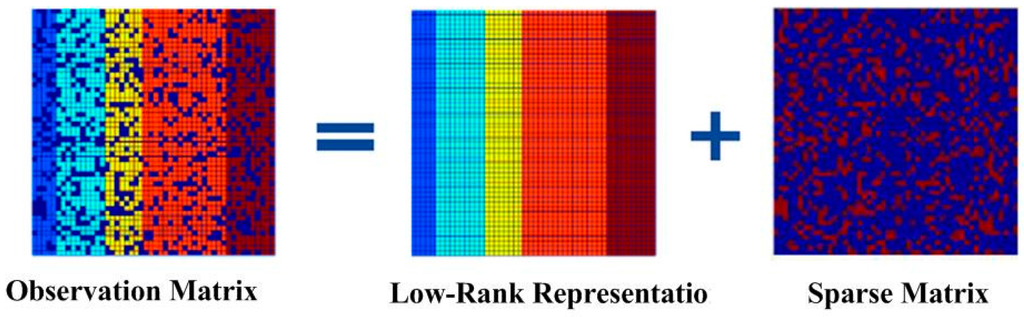

4.2.2. Introduction of Low-Rank Representation and Principal Component Analysis

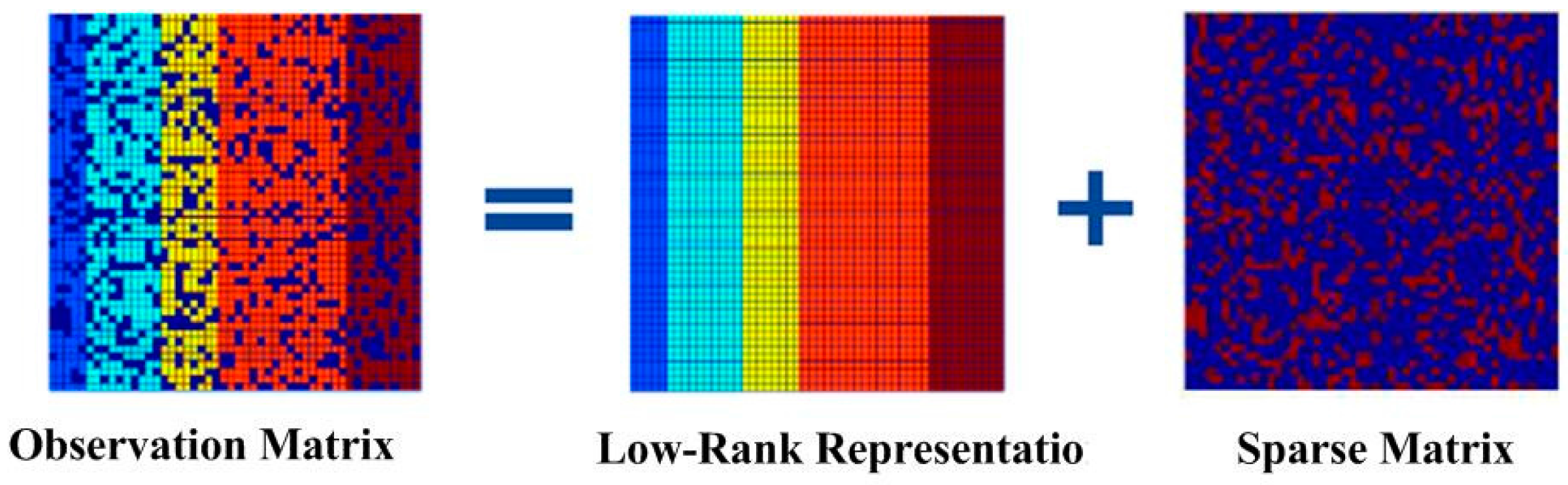

In many practical applications, the given data matrix D is low rank or approximately low rank. To recover the low-rank structure, D is decomposed into two unknown matrices, X and E, such that D = X + E, where X is low rank and E is sparse, as shown in Figure 9:

$$\min_{X, E}\ \operatorname{rank}(X) + \lambda \|E\|_0 \quad \text{s.t.} \quad D = X + E$$

where $\|E\|_0$ is the number of nonzero elements of E. Because this problem is NP-hard, it is relaxed into a convex optimization problem. The formula becomes

$$\min_{X, E}\ \|X\|_* + \lambda \|E\|_1 \quad \text{s.t.} \quad D = X + E$$

where $\|X\|_*$ is the trace norm, the sum of the singular values of the matrix; $\|E\|_1$ is the L1 norm, the sum of the absolute values of the elements; and $\lambda$ is the weight of the formulation. In this way, we convert the problem of minimizing the rank of the matrix into minimizing the trace norm of the matrix.

Figure 9. Sparse and low-rank matrix decomposition.

In mathematical optimization, the Lagrange multiplier method finds the extremum of a multivariate function subject to one or more constraints. The Augmented Lagrange Multiplier (ALM) algorithm also makes use of the multiplier method. The target function is:

$$L(X, E, Y, \mu) = \|X\|_* + \lambda \|E\|_1 + \langle Y, D - X - E \rangle + \frac{\mu}{2} \|D - X - E\|_F^2$$

where Y is the Lagrange multiplier matrix, $\mu > 0$ is the penalty parameter, and $\|\cdot\|_F$ is the Frobenius norm. The iterative process of the algorithm is shown in Algorithm 1.

| Algorithm 1 Algorithm of low rank representation |

| Input |

| Initialization: |

| Procedure |

| While do |

| End while |

| Output X,E |

In each iteration, the main computation is a singular value decomposition (SVD). In order to improve the speed of the system, we use the inexact ALM (IALM) variant in our paper.
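For orientation, the inexact ALM iteration is commonly written as the four updates below. This is the form usually given in the robust PCA literature and is offered as a sketch; it is not claimed to reproduce Algorithm 1 line by line. The iteration index j, the growth factor ρ, the soft-thresholding operator S, and the singular value thresholding operator D_τ are notation assumed here.

```latex
\begin{aligned}
X_{j+1} &= \mathcal{D}_{1/\mu_j}\!\left(D - E_j + \mu_j^{-1} Y_j\right) \\
E_{j+1} &= \mathcal{S}_{\lambda/\mu_j}\!\left(D - X_{j+1} + \mu_j^{-1} Y_j\right) \\
Y_{j+1} &= Y_j + \mu_j \left(D - X_{j+1} - E_{j+1}\right) \\
\mu_{j+1} &= \rho\, \mu_j, \qquad \rho > 1 \\[4pt]
\mathcal{S}_{\tau}(x) &= \operatorname{sign}(x)\, \max\!\left(|x| - \tau,\ 0\right) \quad \text{(applied elementwise)} \\
\mathcal{D}_{\tau}(M) &= U\, \mathcal{S}_{\tau}(\Sigma)\, V^{T}, \qquad M = U \Sigma V^{T} \ \text{(SVD)}
\end{aligned}
```

Each pass needs only one SVD, in the update of X, which is why the inexact variant is faster than solving the inner minimization to convergence at every step.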

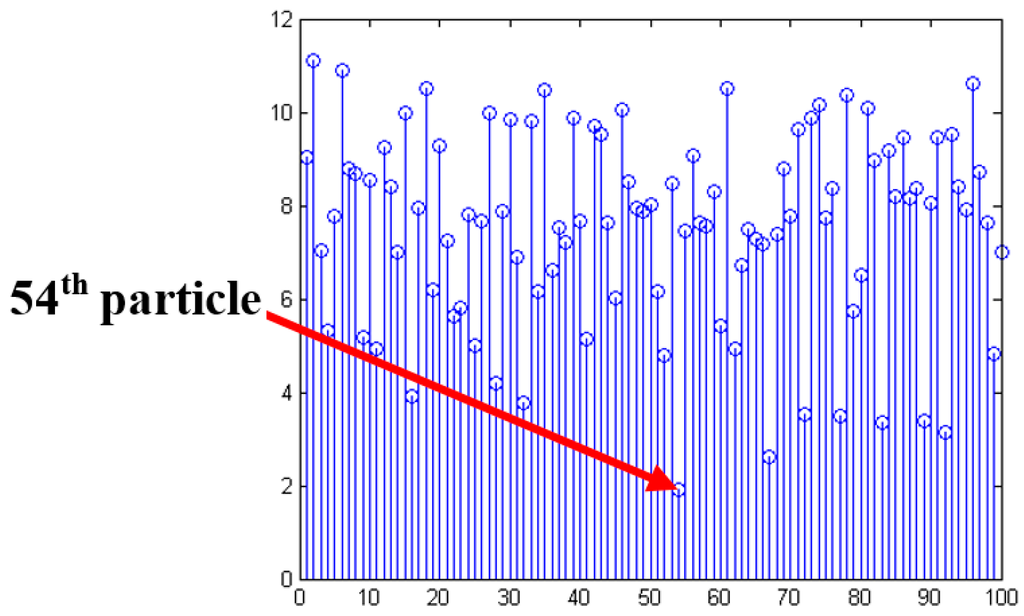

4.2.3. Low Rank Representation for Obstacle Recognition and Tracking

As shown in Figure 7, we introduce low-rank representation into the particle filter to improve the tracking performance.

The target template set $D = [d_1, d_2, \ldots, d_n] \in \mathbb{R}^{m \times n}$ is defined to have n target templates, each of dimension m. The particle filter framework generates k candidate targets, defined as:

$$Y = [y_1, y_2, \ldots, y_k] \in \mathbb{R}^{m \times k}$$

If we combine each particle of the candidate targets with the target templates, one at a time, to form a new matrix $D_i$, we have

$$D_i = [D, y_i], \qquad i = 1, \ldots, k$$

Then, we can make use of principal component analysis:

$$\min_{X, E}\ \|X\|_* + \lambda \|E\|_1 \quad \text{s.t.} \quad D_i = X + E$$

Here X is the low-rank matrix obtained after the computation. It can be considered as the matrix that holds the unchanging data of the target.

Each observation can therefore be decomposed into a low-rank matrix and a noise matrix. For an element of the candidate template set, it will be a zero matrix. Based on the theory described above, Equation (34) can be changed to:

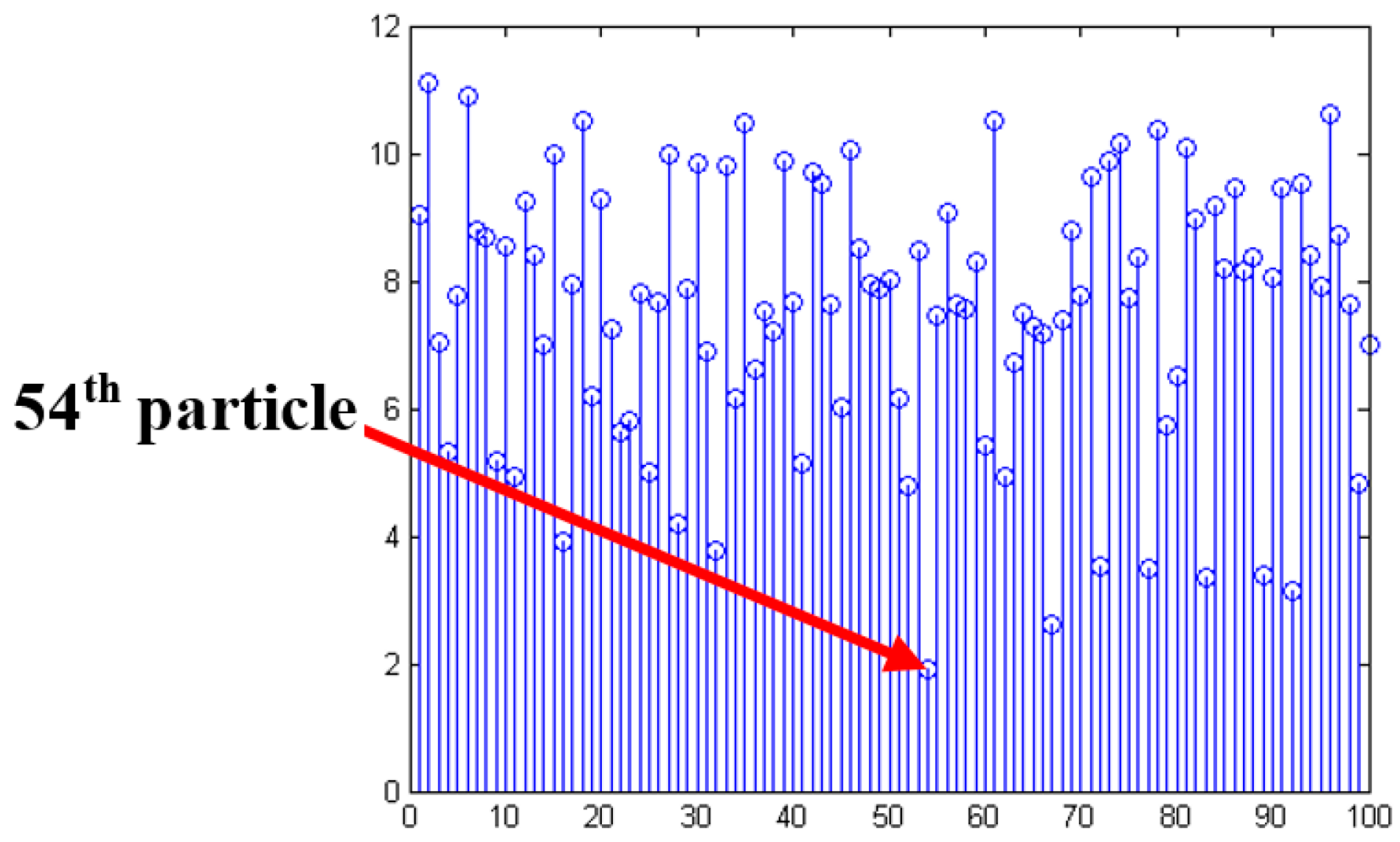

In this case, the first component is a low-rank matrix. However, we mainly concentrate on the noise matrix, because its last column is smaller when the target template set D is closer to the target. We define the last column of the noise matrix as the error vector, which represents the difference between the target of the current frame and the sample set. The optimal objective is to have the smallest L-1 norm. In practice, this error can be regarded as the difference introduced during tracking by occlusion and changes in lighting. We choose the particle in the candidate set that has the smallest difference from the target templates, as shown in Figure 10. The chosen particle, which has the smallest error, is the most desirable particle. The results show that this algorithm can successfully track the target when the vehicle is reversing.

Figure 10.

The smallest of the particles.

5. Vehicle Speed Control Strategy

In this section, we use the vehicle reversing speed control algorithm from [5] to keep the vehicle at a safe speed. This makes the reversing control safer and more reliable. Table 1 shows the strategies of the ECU under different conditions for vehicle reversing safety. The fuzzy rules in [5] and Table 1 are presented to show the feasibility. The system is able to take control of the electronic throttle opening and automatic braking to avoid collisions. The prototype shown in Figure 11 makes reversing control more reliable [5].

Table 1.

Various judgments of ECU under different conditions.

Figure 11.

Vehicle reversing speed control prototype: (a) position of multi-sensors; (b) prototype of the vehicle reversing speed control system.

6. Simulation and Validation

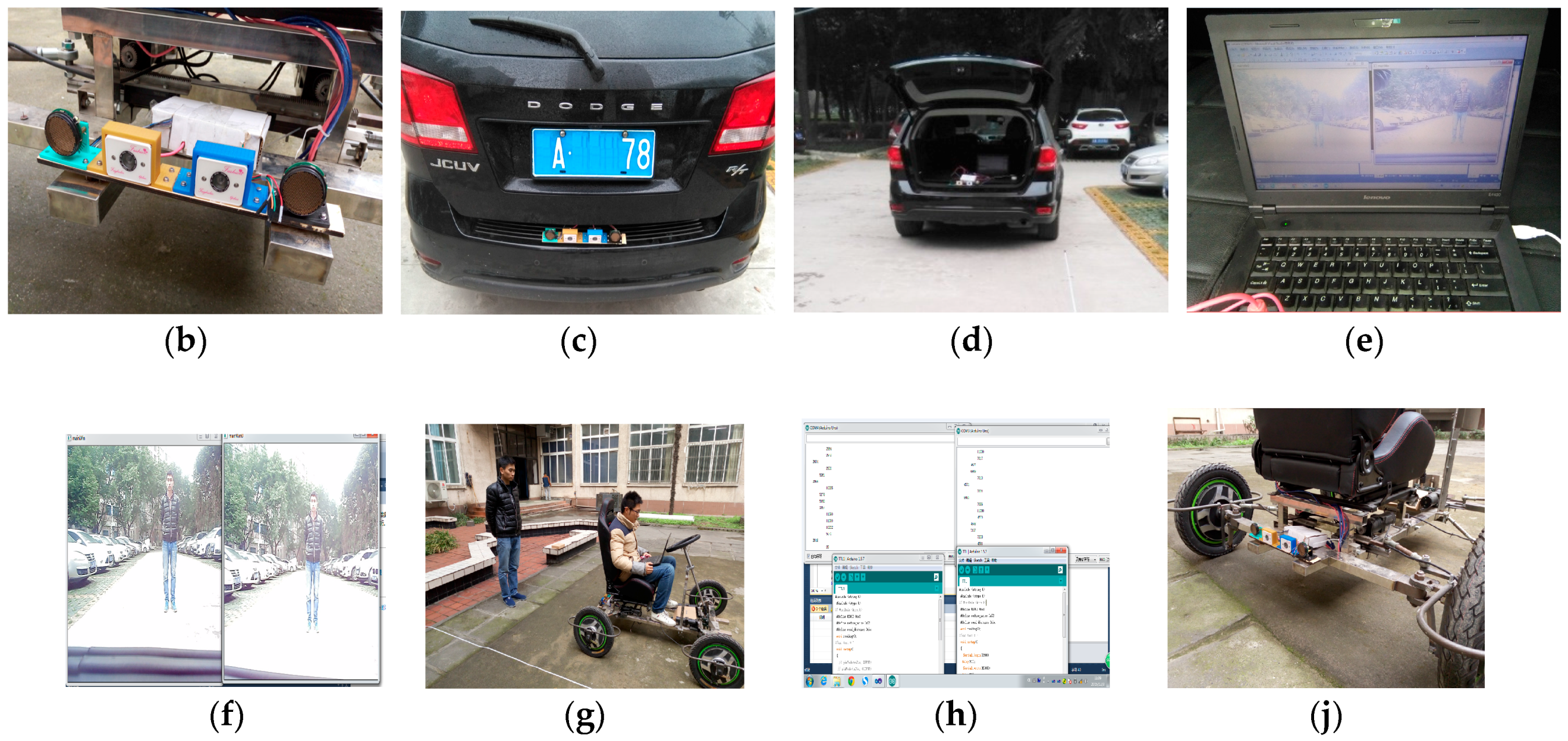

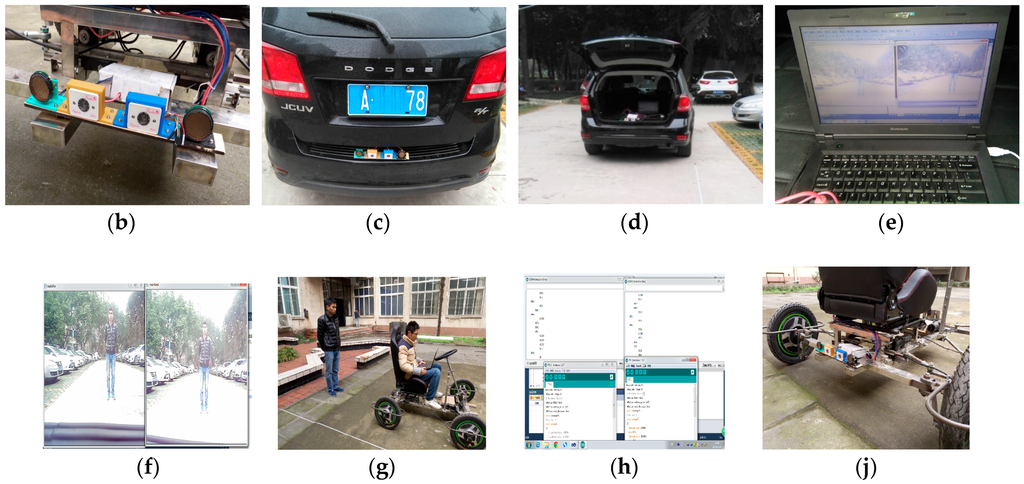

In this section, we describe the experiments carried out to confirm the effectiveness of the system. As shown in Figure 12, a real vehicle reversing experimental environment is used. The ultrasonic sensors work under the control of Arduino chips, and the binocular cameras are operated by a laptop in the Microsoft Visual environment.

Figure 12.

Experimental setup. (a) Experimental prototype; (b) multi-sensor in proposed system; (c) experimental vehicle; (d) test preparation; (e) test preparation; (f) binocular camera images; (g) obstacle detection using ultrasonic range finders; (h) tests using a homemade vehicle; (i) tests using a homemade vehicle.

There are three parts to our experiment, as shown in Figure 11a. The first part is a multi-sensor that perceives the environment of the vehicle. The vision information is obtained from binocular cameras and the distance information from ultrasonic sensors. The second part is target recognition and tracking using information fusion and low rank with particle filter. Simulation and tests about reversing speed control are the third part.

Figure 12 shows the real experimental environment for testing the system. The algorithm was tested on video sequences captured by the binocular cameras shown in Figure 12b, which were manufactured at our lab. The system has been field-tested on the test vehicle in Figure 12c. Figure 12d–g illustrate the experiments on binocular camera images and obstacle detection using ultrasonic range finders. As shown in Figure 12h,i, a homemade experimental vehicle was designed to test our system without traffic risk.

The ultrasonic sensors and binocular cameras work simultaneously. The ultrasonic sensors are first used to detect whether there is an obstacle within 0–10 m. The binocular cameras capture the visual information behind the vehicle at the same time. If an obstacle exists, the system starts to target the obstacle and computes the distance to it based on binocular stereo vision. After that, the initial location of the target is sent to the particle filter. Finally, the system controls the speed based on target tracking and information fusion.

6.1. Experimental Results of Binocular Vision

The distance to an obstacle is measured using the 3D reconstruction of binocular vision, mainly the depth of the obstacle. First, we use a MATLAB toolbox to obtain the parameters of the binocular cameras, which are then imported into Microsoft Visual for the calibration of images captured from the right and left cameras. The results of calibration are shown in Figure 13. We can see that the images are calibrated properly.

Figure 13.

Binocular calibration of our system.

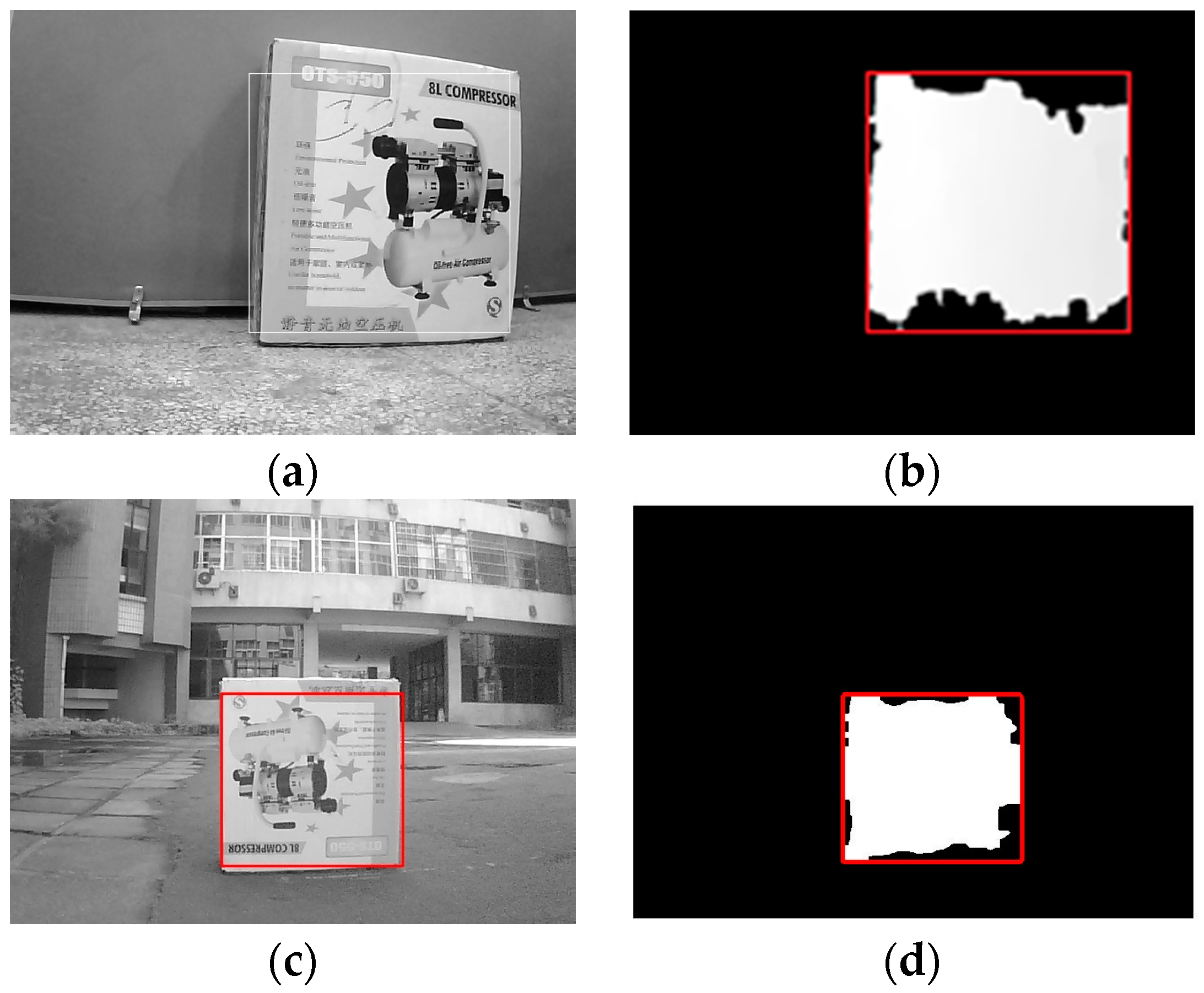

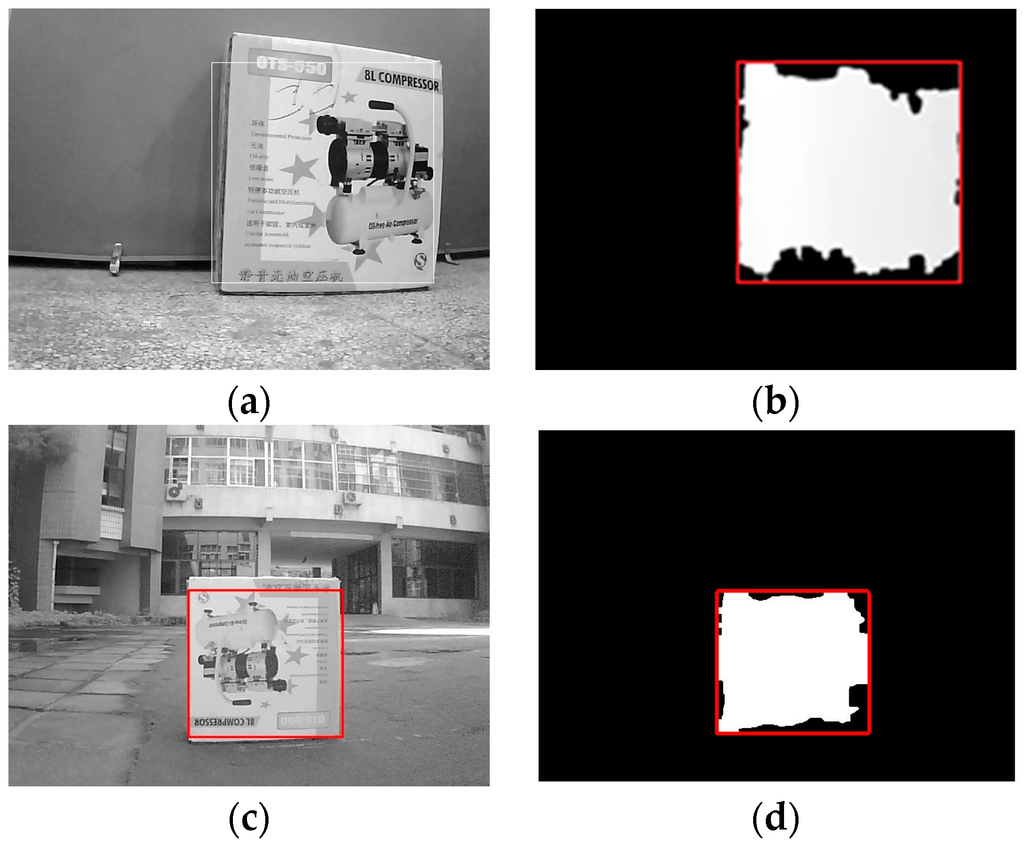

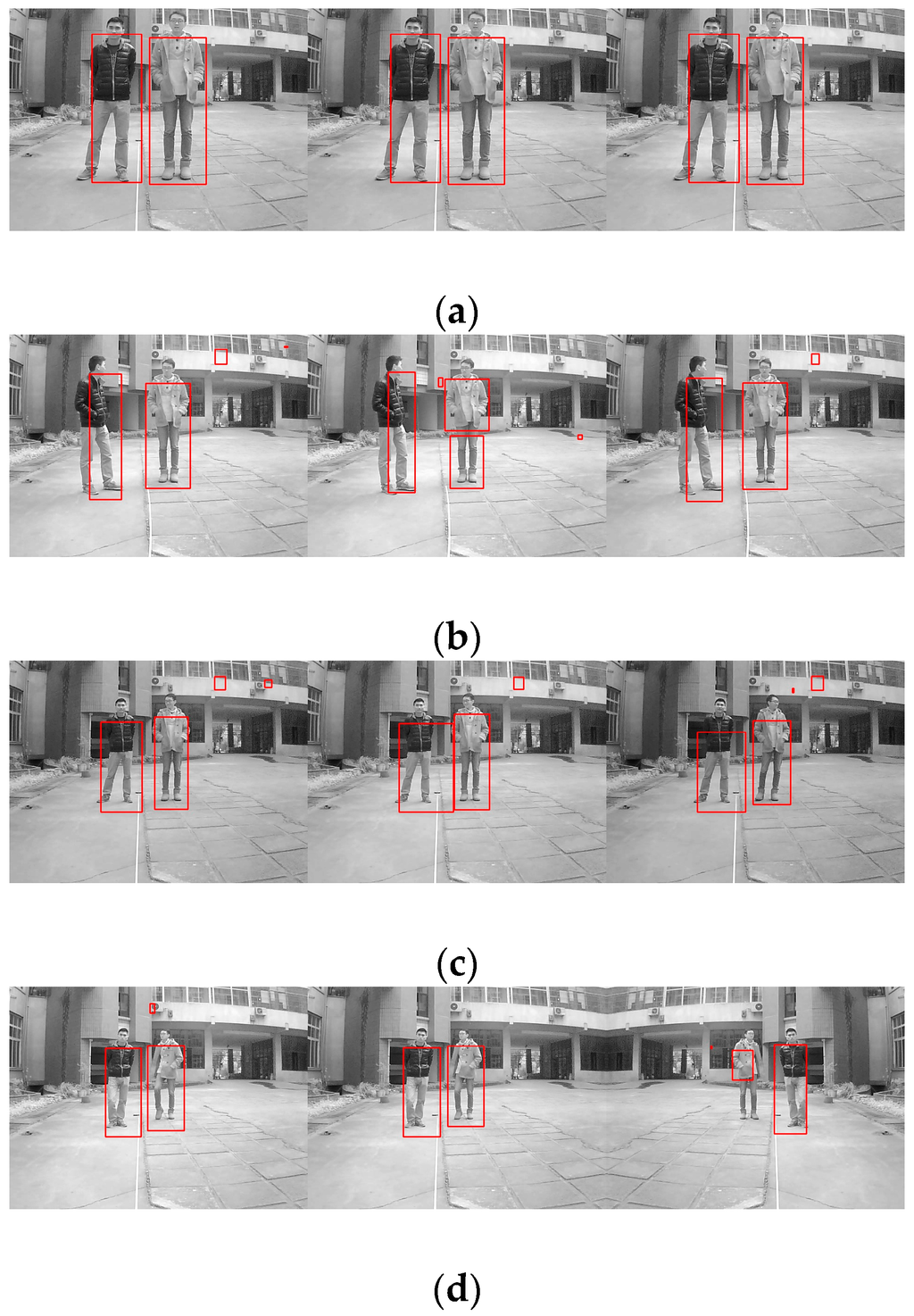

After the calibration process, stereo rectification and stereo correspondence are performed to acquire the disparity of the images. As shown in Figure 14a, the distance to obstacles is obtained from the disparity. In addition, the disparity is used to detect the obstacle. The bounding rectangle corresponds to the target, as shown in Figure 14b, and the objects are successfully detected. Once the disparity of the target is obtained, we can calculate the distance to an obstacle based on Equation (6). The distance to an obstacle based on binocular vision is shown in Figure 15. The obstacle detection results at different distances are shown in Figure 16a–d. In Figure 16a, the obstacle is about 3.5 m away, and the detection results show that it is well detected. Figure 16b,c show the detection results when the obstacle is about 4.0 m and 5.0 m away, respectively; the obstacles are again well detected. We chose the largest region as the target to track. In Figure 16d, the obstacle is about 6.0 m away and is also well detected. This shows the validity of the proposed binocular vision obstacle detection module.
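The conversion from disparity to distance can be illustrated with a short sketch. It assumes the standard relation for a rectified stereo pair, depth = focal length × baseline / disparity, which may differ in notation from Equation (6); the function name and the numeric values (focal length, baseline, disparity) are hypothetical and do not describe the cameras used here.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres of a point seen by a rectified stereo pair.

    Assumes the standard pinhole relation Z = f * B / d, with the focal
    length f and the disparity d in pixels and the baseline B in metres.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical calibration: f = 800 px, B = 0.12 m, measured disparity = 24 px.
distance_m = depth_from_disparity(24.0, focal_length_px=800.0, baseline_m=0.12)
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters more for distant obstacles than for close ones, which is one reason to fuse the stereo estimate with the ultrasonic readings.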

Figure 14.

Results of target grab: (a) target grabbing from the disparity indoor; (b) target detection using images indoor; (c) target grabbing from the disparity outdoor; (d) target detection using images outdoor.

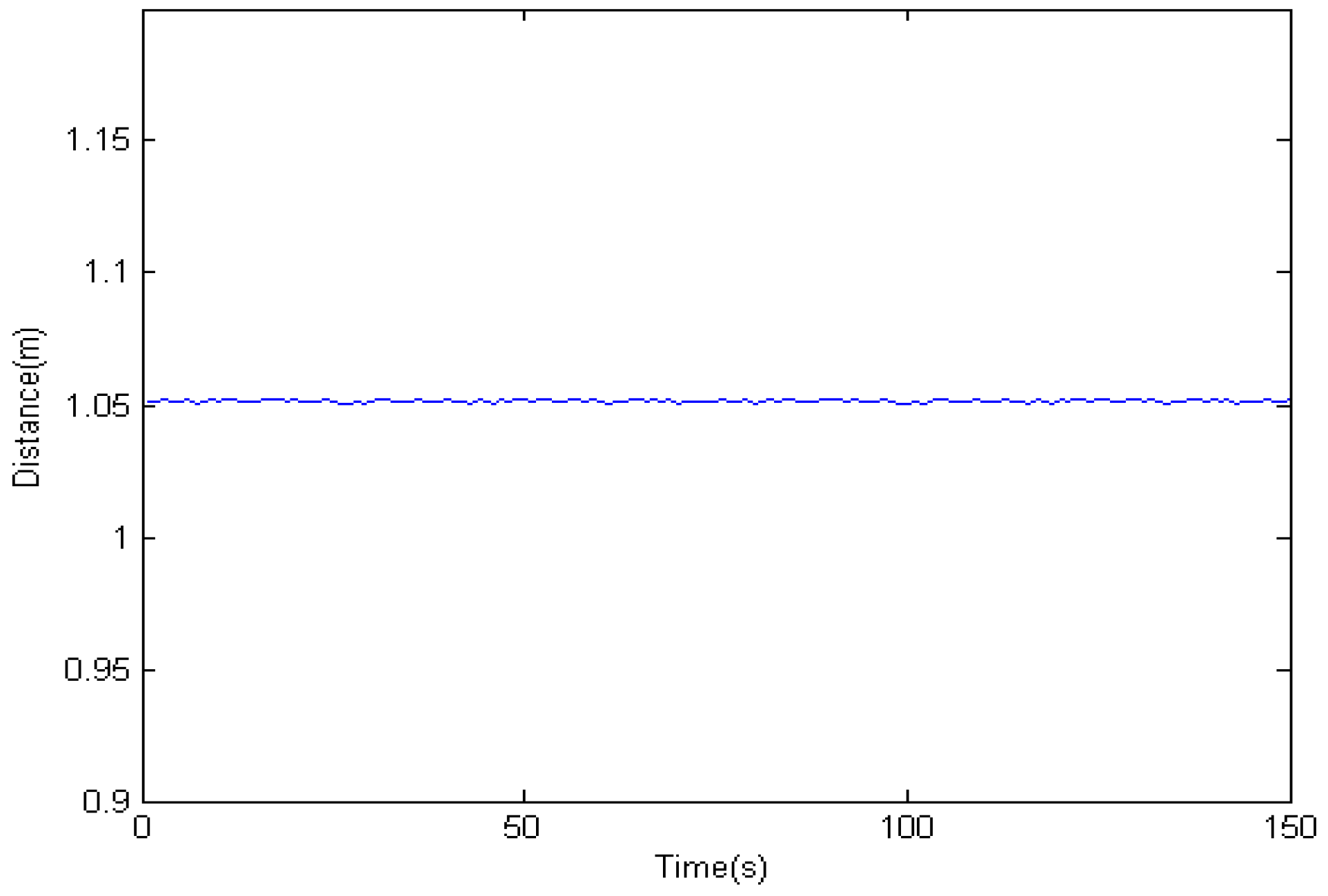

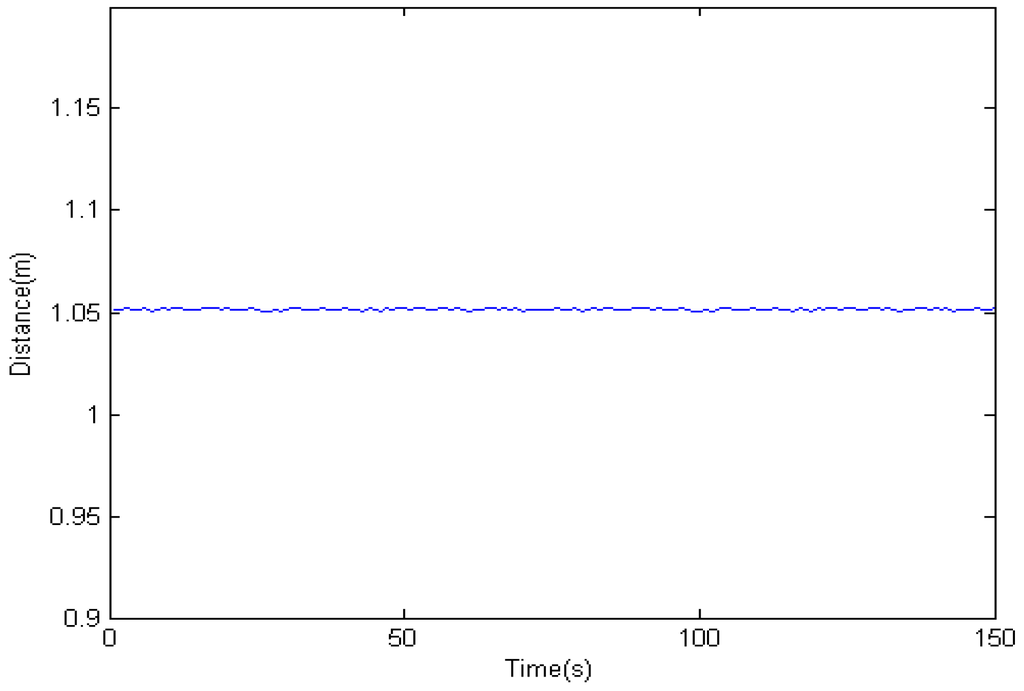

Figure 15.

The distance to an obstacle obtained by binocular vision.

Figure 16.

Obstacle detection results: (a) obstacle detection results at about 3.5 m; (b) obstacle detection results at about 4.0 m; (c) obstacle detection results at about 5.0 m; (d) obstacle detection results at about 6.0 m.

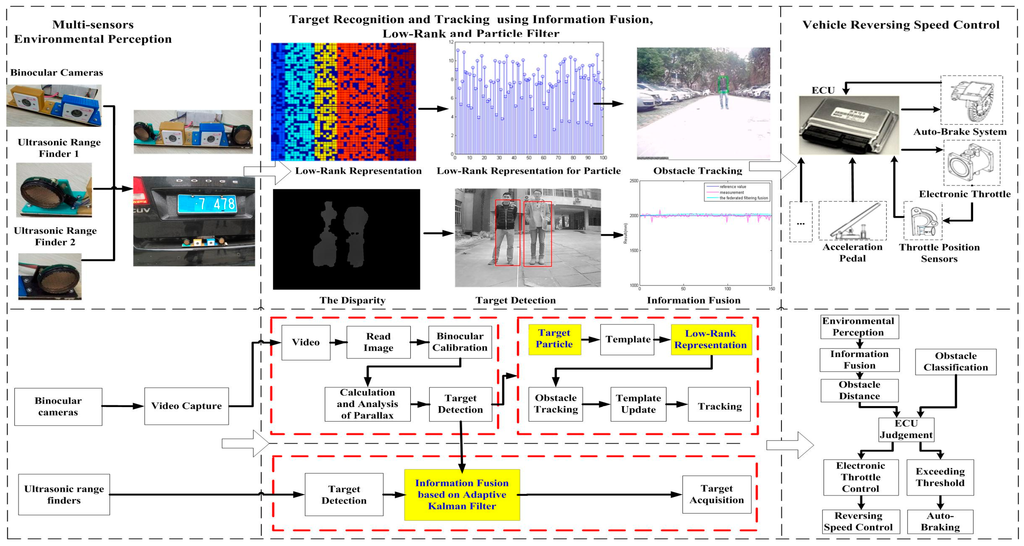

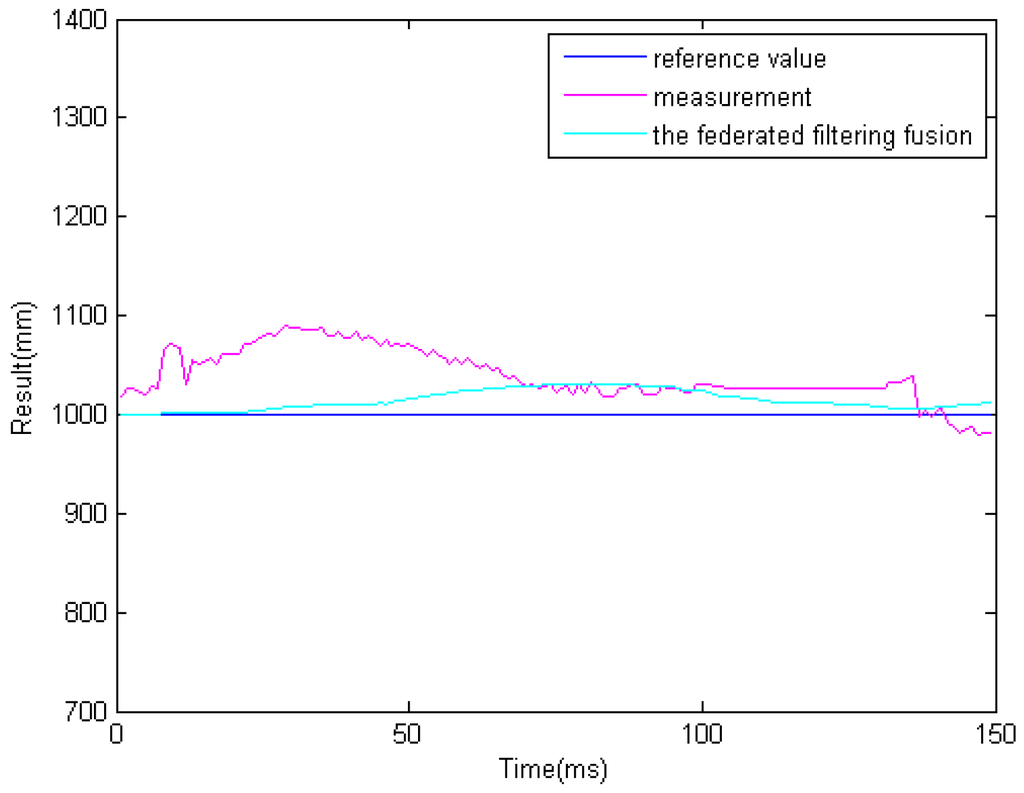

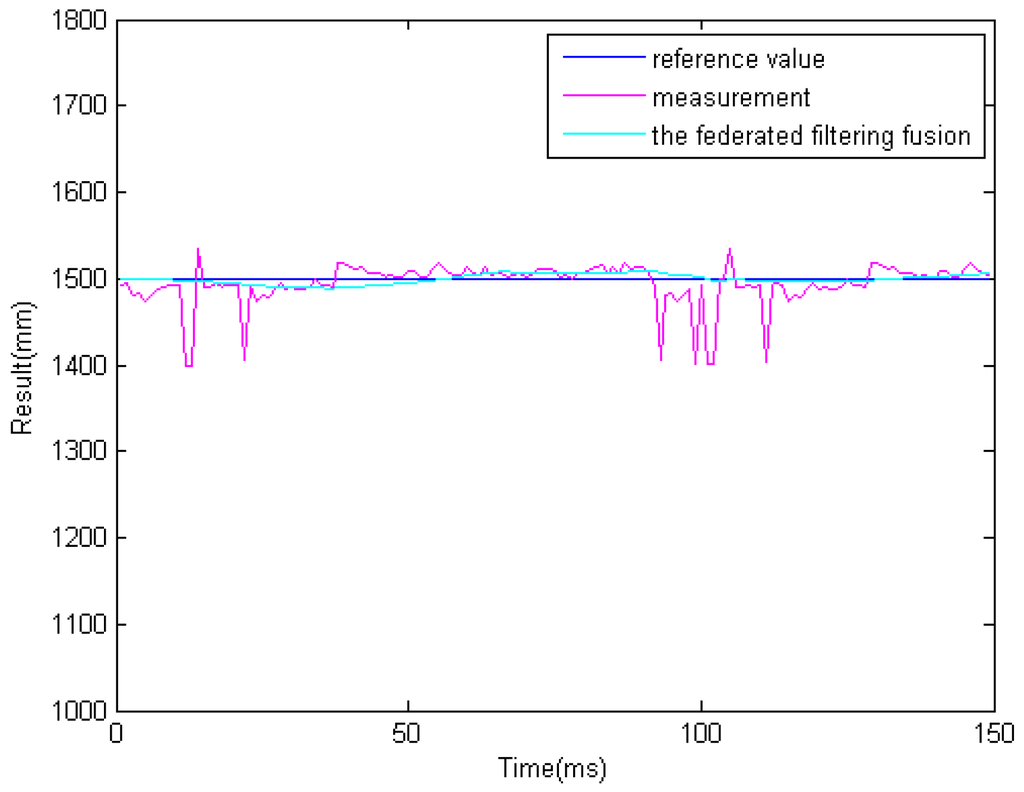

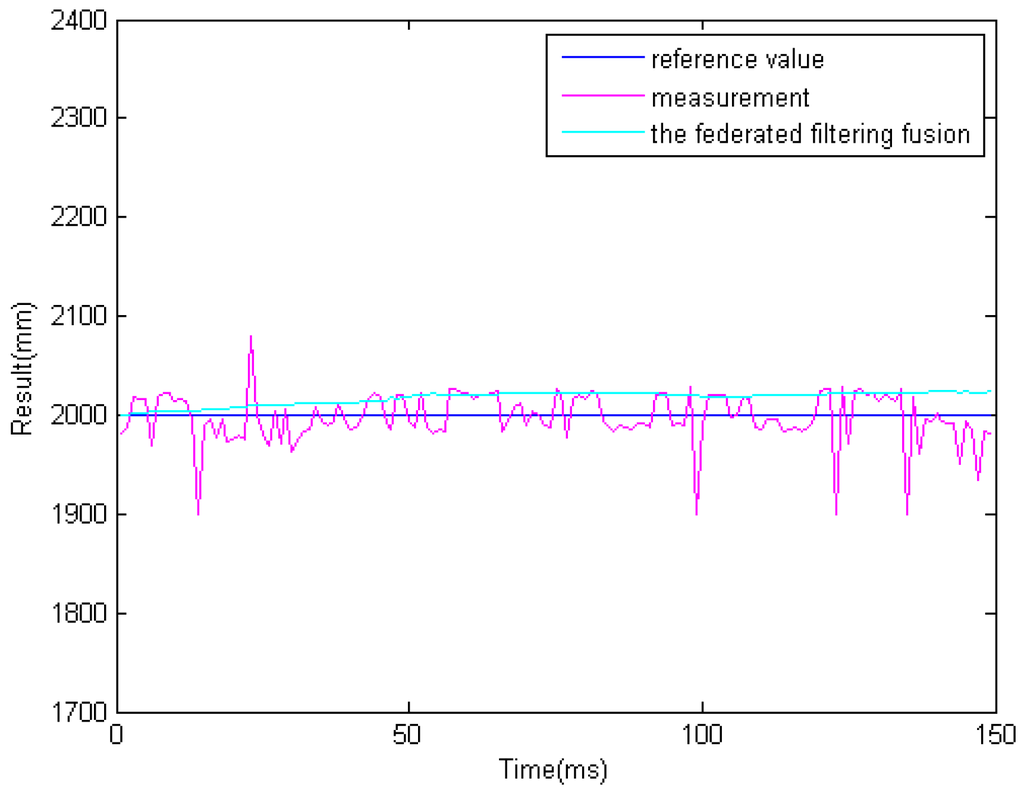

6.2. Information Fusion Based on an Adaptive Kalman Filter

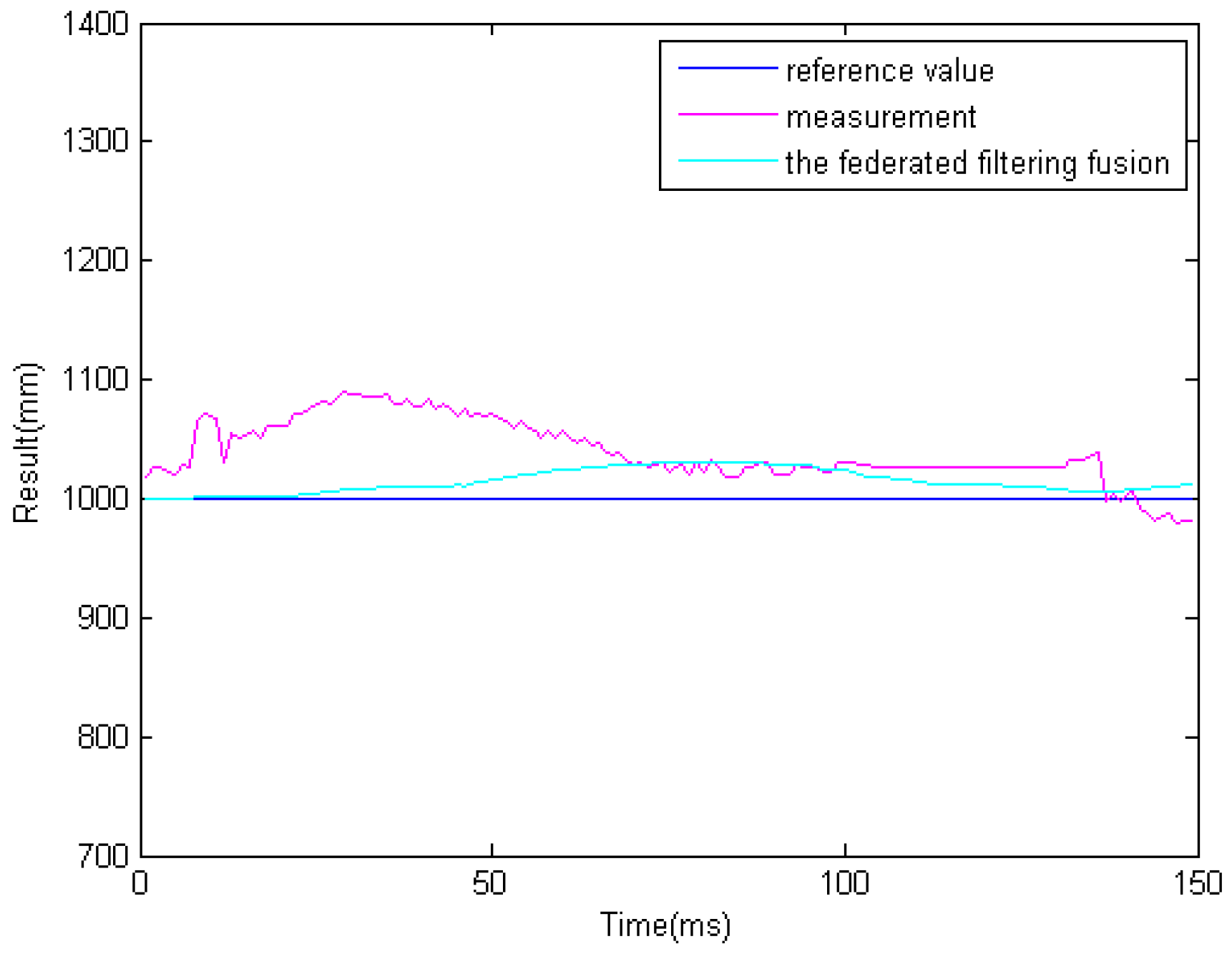

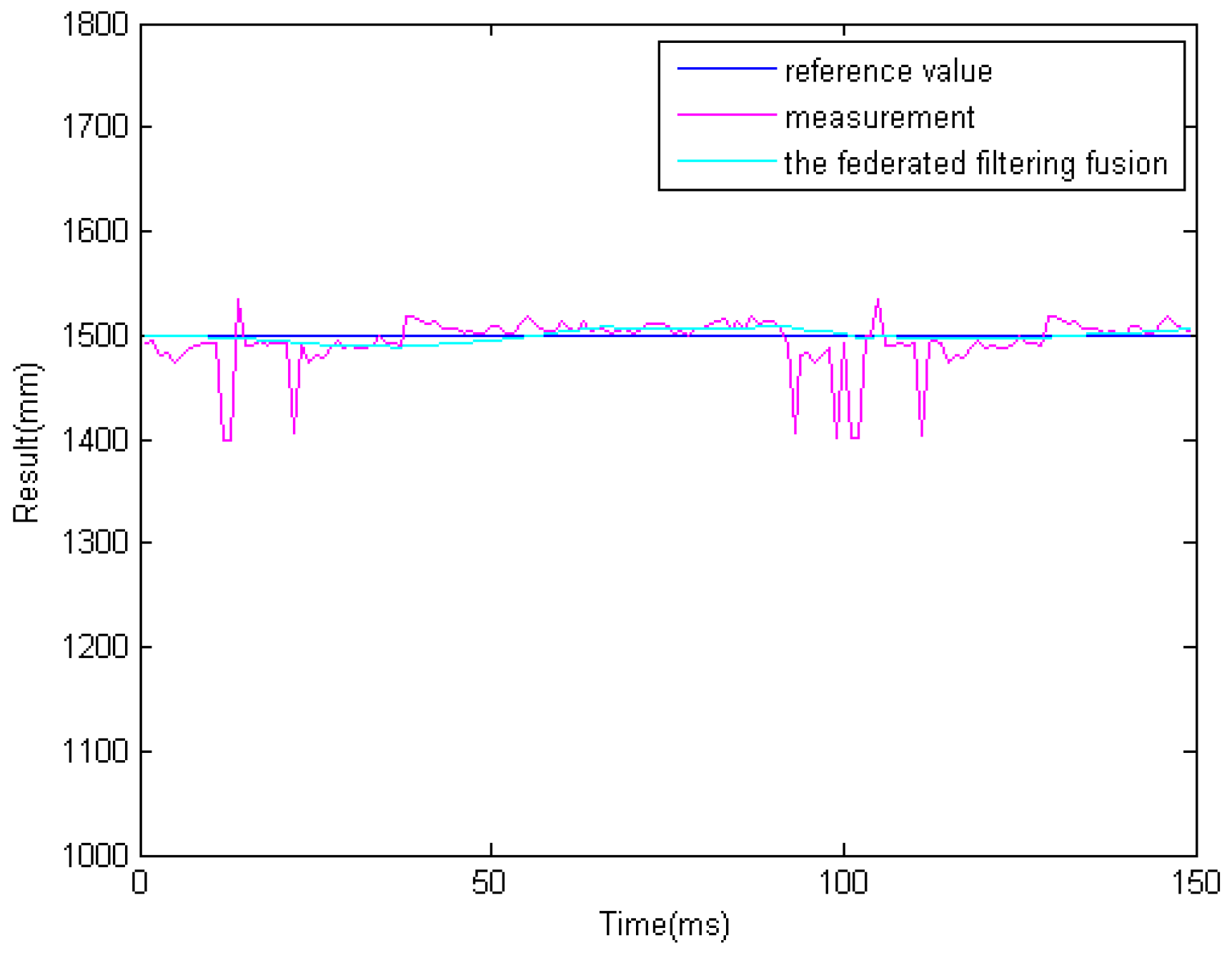

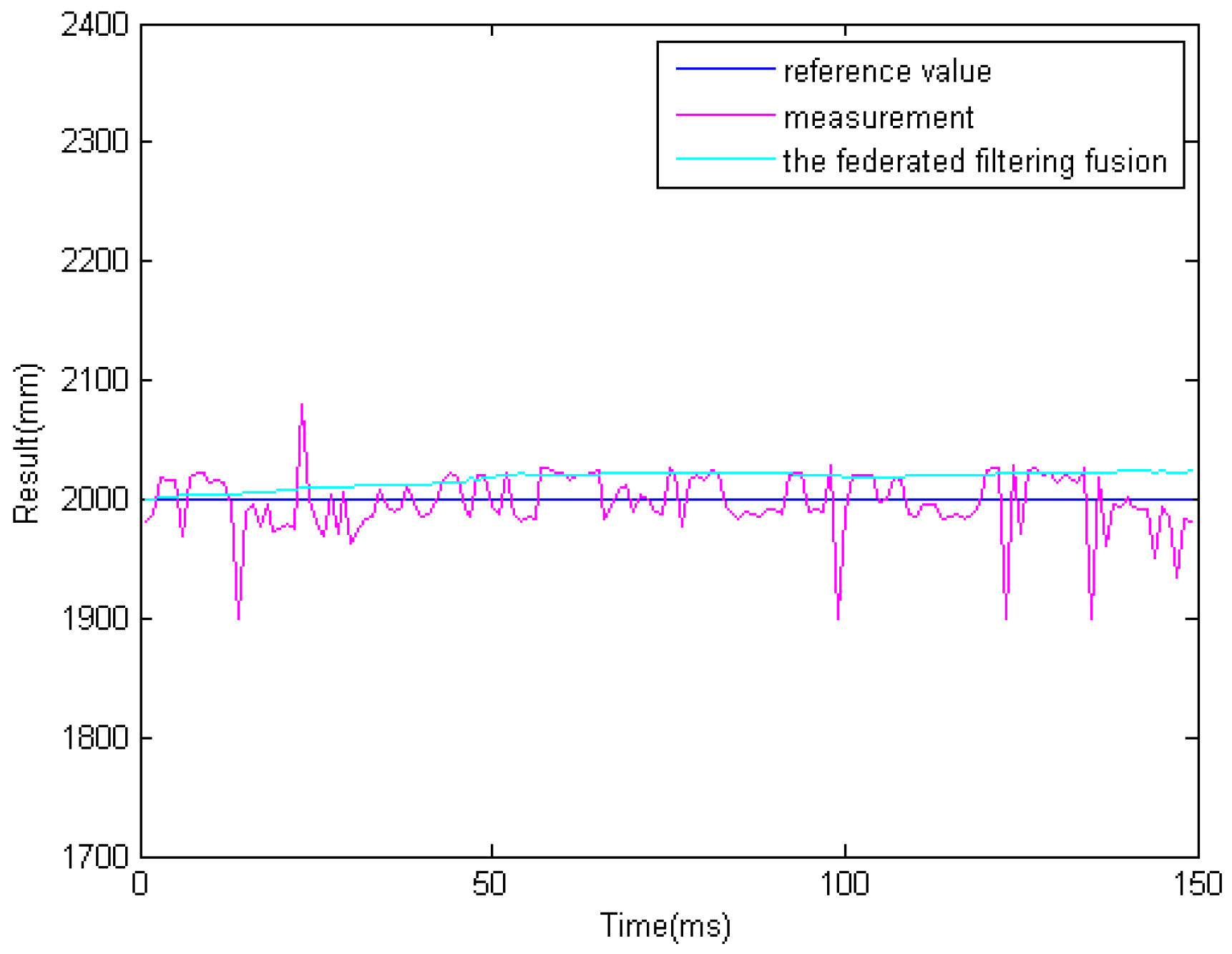

In the previous step, we obtain different results for the same obstacle from the two ultrasonic sensors and the binocular cameras. Because the obstacle data are obtained from four sensors, they must be fused properly. The results of information fusion based on an adaptive Kalman filter using binocular cameras and two ultrasonic sensors are shown in Figure 17, Figure 18 and Figure 19 at 1.0, 1.5, and 2.0 m, respectively. The proposed information fusion algorithm performs better when the system is strongly influenced by the environment or other factors.
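To make the fusion step concrete, the following is a minimal sketch of a scalar adaptive Kalman filter that fuses three distance readings per time step (two ultrasonic readings and one stereo estimate). It is an illustration under stated assumptions, not the filter used in this paper: the random-walk state model, the innovation-based update of each sensor's noise variance, the class name `AdaptiveKalmanFusion`, and all default parameters are choices made for this sketch.

```python
class AdaptiveKalmanFusion:
    """Scalar Kalman filter with innovation-based adaptive sensor variances."""

    def __init__(self, initial_distance, initial_variance=1.0,
                 process_variance=0.01, sensor_variances=(0.05, 0.05, 0.1),
                 forgetting=0.95, min_variance=1e-4):
        self.x = initial_distance          # fused distance estimate (m)
        self.p = initial_variance          # variance of the estimate
        self.q = process_variance          # assumed random-walk process noise
        self.r = list(sensor_variances)    # one noise variance per sensor
        self.alpha = forgetting            # smoothing factor for adapting r
        self.min_r = min_variance          # floor keeps every variance positive

    def step(self, measurements):
        """Fuse one reading per sensor and return the updated estimate."""
        if len(measurements) != len(self.r):
            raise ValueError("expected one measurement per sensor")
        self.p += self.q                   # predict with a random-walk model
        for i, z in enumerate(measurements):
            innovation = z - self.x
            # The innovation variance is p + r, so innovation^2 - p is a rough
            # one-sample estimate of r; it is smoothed with a forgetting factor.
            r_sample = max(innovation ** 2 - self.p, self.min_r)
            self.r[i] = self.alpha * self.r[i] + (1.0 - self.alpha) * r_sample
            gain = self.p / (self.p + self.r[i])
            self.x += gain * innovation
            self.p *= (1.0 - gain)
        return self.x


fusion = AdaptiveKalmanFusion(initial_distance=0.0)
for _ in range(50):
    estimate = fusion.step([2.0, 2.0, 2.0])
```

A sensor whose readings repeatedly disagree with the fused estimate accumulates a larger variance and therefore receives a smaller gain, which is the basic mechanism by which an adaptive filter down-weights a disturbed sensor.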

Figure 17.

The results of information fusion based on an adaptive Kalman filter using binocular cameras and two ultrasonic sensors at 1.0 m.

Figure 18.

The results of information fusion based on an adaptive Kalman filter using binocular cameras and two ultrasonic sensors at 1.5 m.

Figure 19.

The results of information fusion based on an adaptive Kalman filter using binocular cameras and two ultrasonic sensors at 2.0 m.

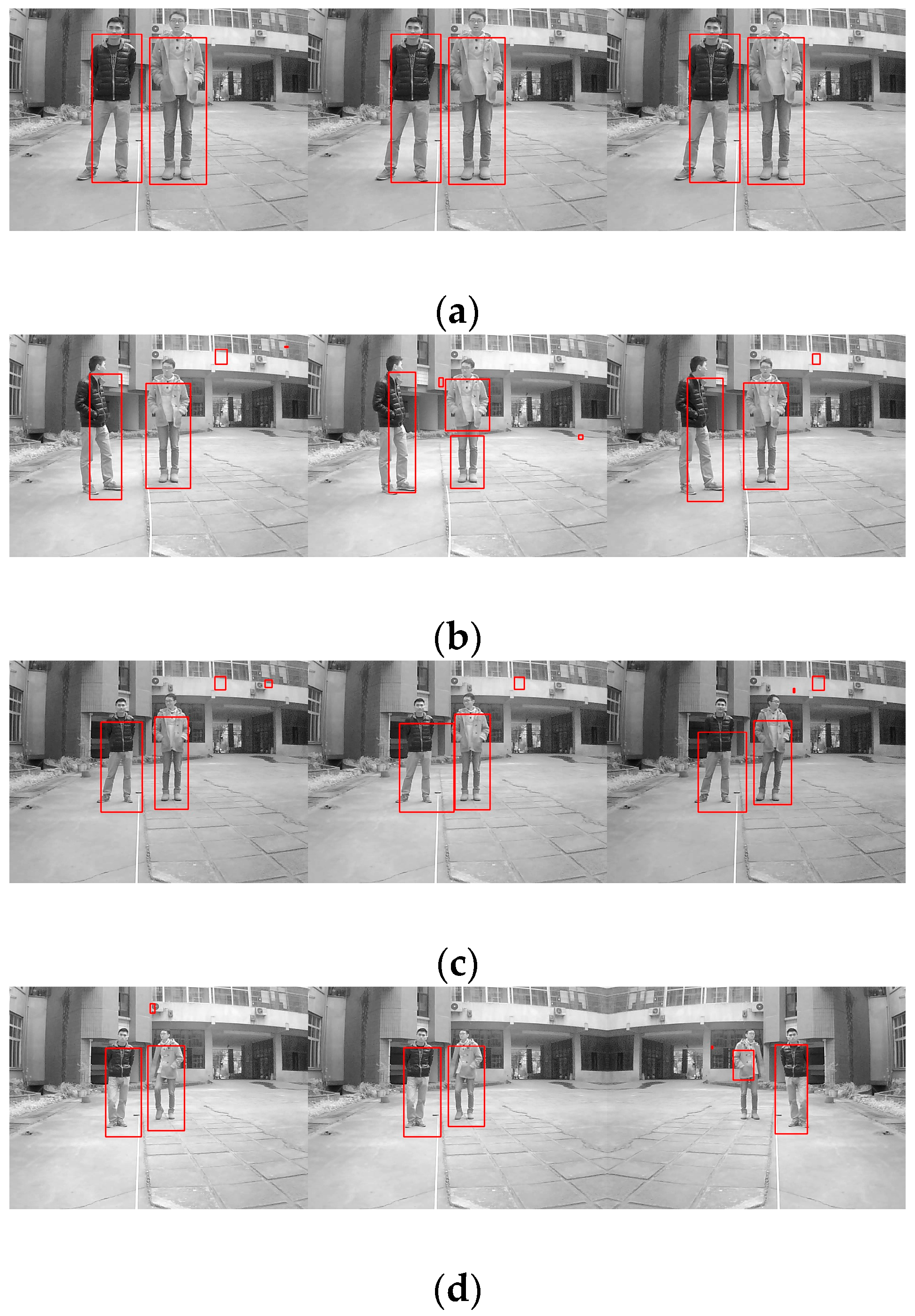

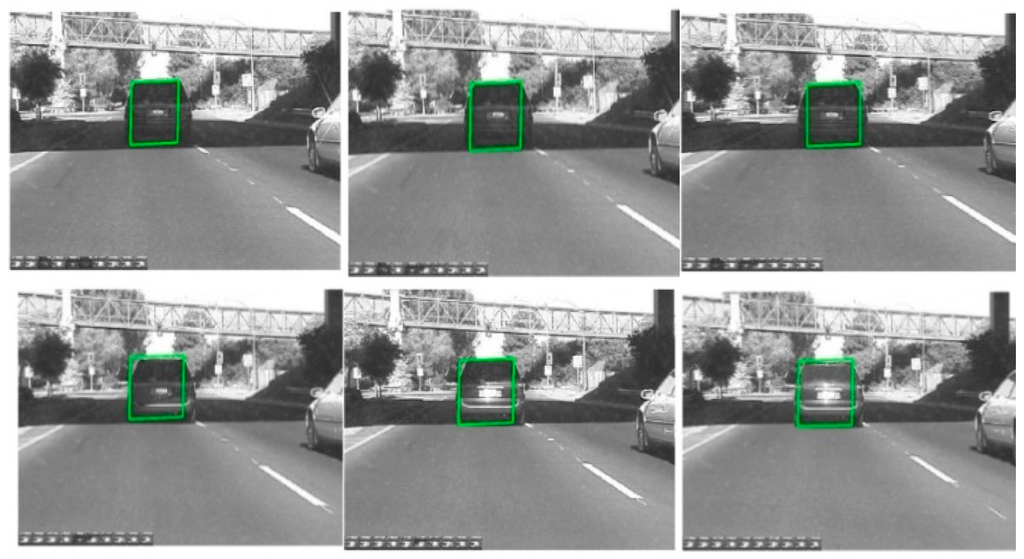

6.3. Target Recognition and Tracking Based on the Modified Particle Filter

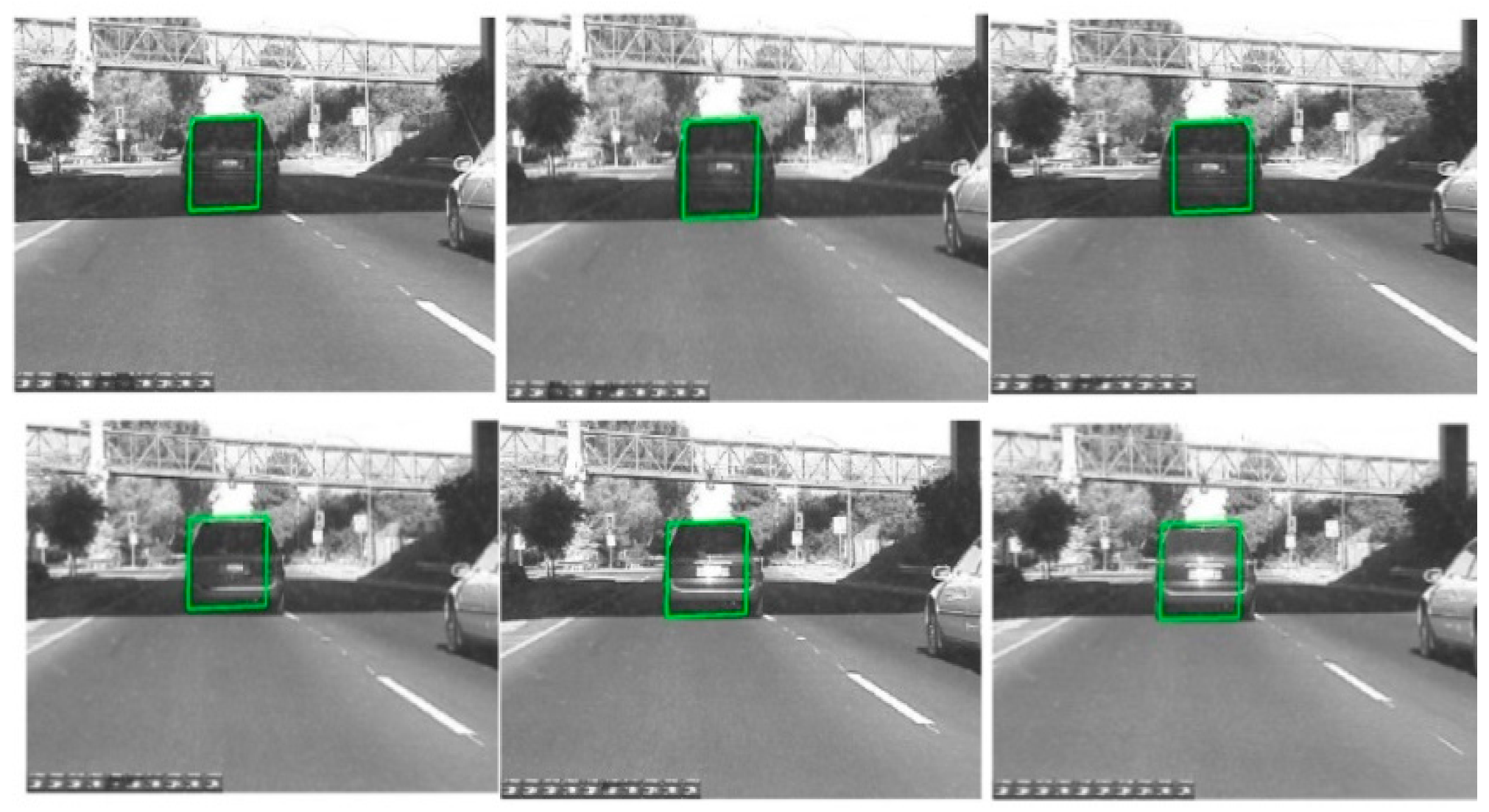

After the information fusion and target detection, the particle filter framework with low-rank representation tracks and identifies obstacles such as human or animal bodies and vehicles, as shown in Figure 20. The proposed algorithm can track objects under various light intensities as well as in the shadows of other objects. In Figure 20, we tried to track and recognize a vehicle, where the target is marked with the green rectangle. It can be seen that even when the vehicle is in the shadows, the target is well tracked and recognized. Figure 21 shows the tracking results for a human based on a particle filter using low-rank representation, where the human is well targeted. Figure 22 shows a contrast experiment in which we tried to track and recognize the man on the left in an object’s shadow. The results show that the selected target is tracked and recognized successfully.

Figure 20.

The results of object tracking based on a particle filter using low-rank representation.

Figure 21.

Tracking results based on a particle filter using low-rank representation.

Figure 22.

Tracking results based on a particle filter using low-rank representation under a sheltered object.

To evaluate the effectiveness of the system, an experiment on the frame rate (frames per second, fps) was carried out on a computer equipped with an Intel i3-4150 processor at 3.5 GHz and 4 GB of memory in the MATLAB 2012a environment. The comparison of fps between our proposed algorithm and the algorithm in [41] is shown in Table 2. Both algorithms were run on the same video, which was taken by other researchers. When the number of frames is set to 30, the result of our proposed algorithm reaches 148.1246, about 7 to 8 times that of the algorithm used by other researchers. As the number of frames increases, the same trend holds. These results indicate that the proposed method performs better in obstacle tracking.

Table 2.

Comparison of the algorithms.

In addition, we measured the tracking rate over 200 frames of video in which the lighting changes considerably, using four videos, some offered by other researchers and some captured by ourselves. Table 3 shows the tracking results. As shown in Table 3, the proposed algorithm also achieves good performance.

Table 3.

Tracking accuracy of the algorithms.

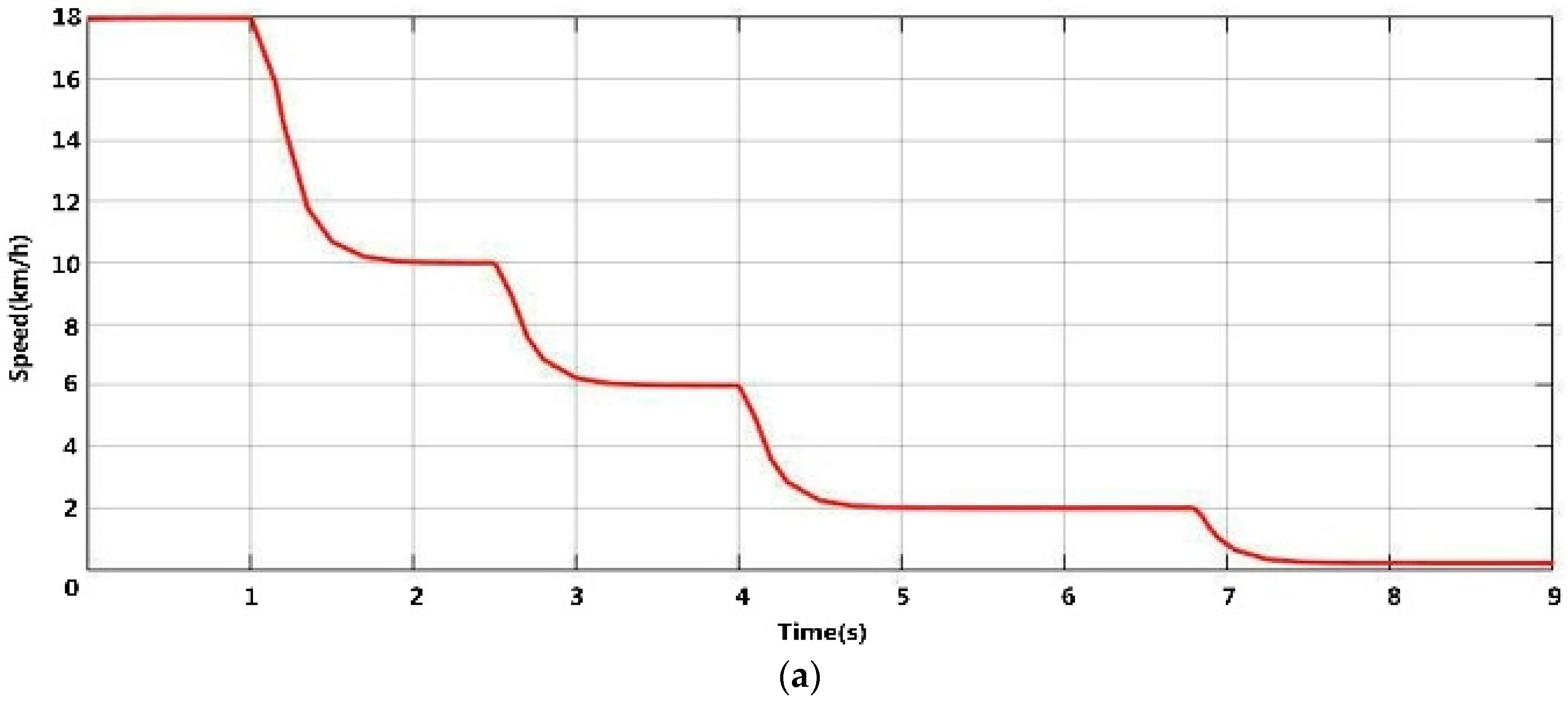

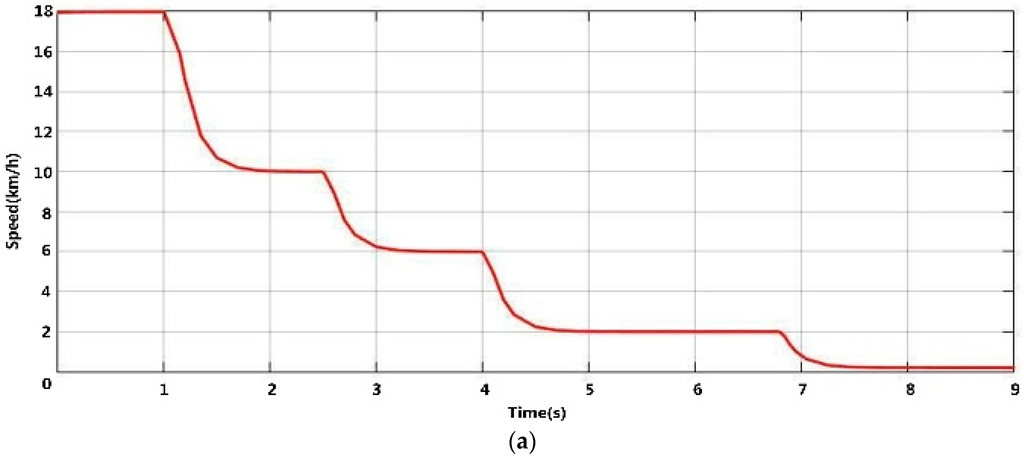

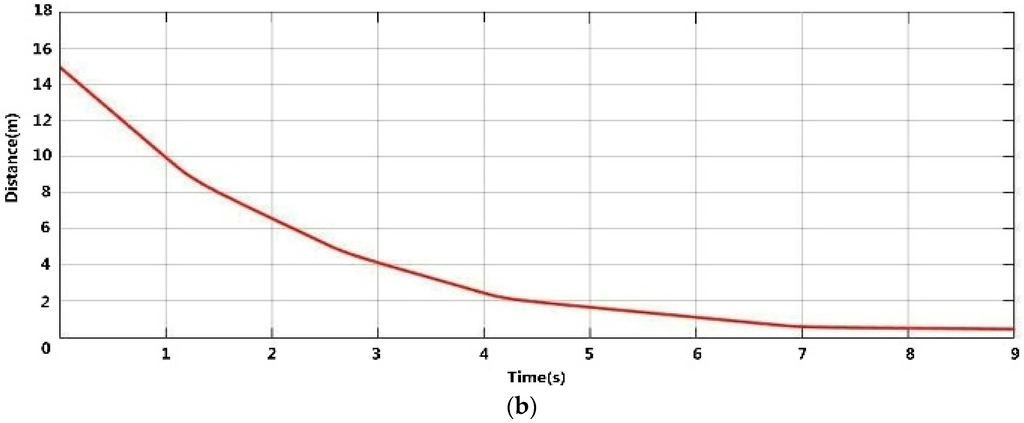

6.4. Experimental Results of Vehicle Speed Control Based on Multi-Sensor Environmental Perception

Figure 23 shows the simulation results of vehicle reversing control according to the rules in Table 1. When the vehicle is reversing, the multi-sensor environmental perception module starts to detect and track rear obstacles to obtain the obstacle’s distance information in real time. In the simulation, a man suddenly appears 15 m behind the vehicle, but the driver keeps reversing the vehicle at 18 km/h without noticing him. To avoid a collision, the speed of the vehicle must be restricted according to the obstacle distance, as Figure 23 shows.

Figure 23.

Simulation results of the proposed vehicle reversing speed control. (a) Relationship between speed and time; (b) Relationship between distance and time.

As shown in Figure 23a,b, when obstacle detection and tracking report that the distance between the vehicle and the man is about 10 m, the vehicle begins to slow down from 18 km/h to 10 km/h. When the distance between the vehicle and the man decreases to 5 m, the vehicle automatically slows down to 6 km/h. When the driver continues to reverse the vehicle to 2.5 m away from the pedestrian, the vehicle speed drops to 2 km/h. Finally, when the distance is less than 0.4 m, the vehicle automatically brakes to a stop to prevent a reversing accident. Thus, with the assistance of the multi-sensor environmental perception using low-rank representation and a particle filter, the driver can operate the automobile more safely and stably and avoid reversing accidents.
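The speed profile in this simulated scenario can be summarized as a simple lookup. The sketch below is a deliberate simplification: it replaces the fuzzy rules of [5] and Table 1 with piecewise-constant caps read off the numbers quoted above, and the names `capped_speed`, `SPEED_CAPS`, and `BRAKE_DISTANCE_M` are hypothetical.

```python
# Distance below which the vehicle brakes to a stop (m), from the simulation text.
BRAKE_DISTANCE_M = 0.4

# (maximum obstacle distance in m, speed cap in km/h), from the simulation text.
SPEED_CAPS = ((2.5, 2.0), (5.0, 6.0), (10.0, 10.0))


def capped_speed(driver_speed_kmh, obstacle_distance_m):
    """Return the reversing speed allowed for a given obstacle distance.

    Piecewise-constant simplification of the control strategy; the actual
    controller is based on fuzzy rules and is not reproduced here.
    """
    if obstacle_distance_m < BRAKE_DISTANCE_M:
        return 0.0
    for max_distance_m, cap_kmh in SPEED_CAPS:
        if obstacle_distance_m <= max_distance_m:
            # Never raise the speed above what the driver requests.
            return min(driver_speed_kmh, cap_kmh)
    return driver_speed_kmh  # obstacle far away: no restriction


# Driver keeps requesting 18 km/h while the obstacle gets closer.
profile = [capped_speed(18.0, d) for d in (15.0, 10.0, 5.0, 2.5, 0.3)]
```

Taking the minimum of the driver's requested speed and the cap means the controller only ever restricts the speed and leaves the driver in control whenever the requested speed is already below the cap.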

7. Conclusions

In this paper, we present a novel multi-sensor environmental perception method using low-rank representation and a particle filter for vehicle reversing safety. The proposed system consists of four main modules, namely multi-sensor environmental perception, target recognition, target tracking, and vehicle reversing speed control. The final system simulation and practical testing results demonstrate the validity of the proposed multi-sensor environmental perception method using low-rank representation and a particle filter for vehicle reversing safety. This system has been tested on a DODGE SUV and a homemade vehicle. The theoretical analysis and practical experiments show that the proposed system not only has better performance in obstacle tracking and recognition, but also enhances the vehicle’s reversing control. The information fusion and particle filter tracking improve the accuracy of measurements and rear object tracking. The effectiveness of our system is one of the key factors for the active safety system; it can help reduce drivers’ workload and fatigue and, accordingly, decrease traffic accidents.

Acknowledgments

This work was supported by the National Natural Science Foundation of China under Grant No. 51175443, and by the Science and Technology Projects of Sichuan under 2015RZ0017 and 2016ZC1139.

Author Contributions

The asterisk indicates the corresponding author, and the first two authors contributed equally to this work. Zutao Zhang and Yanjun Li designed the multi-sensor environmental perception module and the algorithm for information fusion and target recognition and tracking. Fubing Wang, Guanjun Meng, Waleed Salman, Layth Saleem, and Xiaoliang Zhang designed the experimental system and analyzed the data. Chunbai Wang, Guangdi Hu, and Yugang Liu provided valuable insight in preparing this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kim, D.-S.; Kwon, J. Moving Object Detection on a Vehicle Mounted Back-Up Camera. Sensors 2016, 16, 23.

- Hamdani, S.T.A.; Anura, F. The application of a piezo-resistive cardiorespiratory sensor system in an automobile safety belt. Sensors 2015, 15, 7742–7753.

- Zhang, Z.T.; Luo, D.Y.; Rasim, Y.; Li, Y.J.; Meng, G.J.; Xu, J.; Wang, C.B. A Vehicle Active Safety Model: Vehicle Speed Control Based on Driver Vigilance Detection Using Wearable EEG and Sparse Representation. Sensors 2016, 16, 1–25.

- Gao, J.; Wang, J.; Guo, X.; Yu, C.; Sun, X. Preceding Vehicle Detection and Tracking Adaptive to Illumination Variation in Night Traffic Scenes Based on Relevance Analysis. Sensors 2014, 14, 15325–15347.

- Zhang, Z.; Xu, H.; Chao, Z.; Li, X. A Novel Vehicle Reversing Speed Control Based on Obstacle Detection and Sparse Representation. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1321–1334.

- Chu, Y.C.; Huang, N.F. An Efficient Traffic Information Forwarding Solution for Vehicle Safety Communications on Highways. IEEE Trans. Intell. Transp. Syst. 2012, 13, 631–643.

- Traffic safety facts: Research notes. 2012. Available online: www-nrd.nhtsa.dot.gov/Pubs/811552.pdf (accessed on 7 June 2016).

- Sirkku, L.; Esko, K. Has the difference in accident patterns between male and female drivers changed between 1984 and 2000. Accid. Anal. Prev. 2004, 36, 577–584.

- Lin, C.-C.; Wang, M.-S. A vision based top-view transformation model for a vehicle parking assistant. Sensors 2012, 12, 4431–4446.

- New Vehicle Collision Alert (or Detection) System May Help Driving Safe. Available online: www.smartmotorist.com/motorist-news/new-vehicle-collision-alert-system-may-help-driving-safe.html (accessed on 7 June 2016).

- Monwar, M.M.; Kumar, B.V.K.V. Vision-based potential collision detection for reversing vehicle. In Proceedings of the Intelligent Vehicles Symposium (IV), Gold Coast City, Australia, 23–26 June 2013; pp. 81–86.

- He, X.; Aloi, D.N.; Li, J. Probabilistic Multi-Sensor Fusion Based Indoor Positioning System on a Mobile Device. Sensors 2015, 15, 31464–31481.

- Rodger, J.A. Toward reducing failure risk in an integrated vehicle health maintenance system: A fuzzy multi-sensor data fusion Kalman filter approach for IVHMS. Expert Syst. Appl. 2012, 39, 9821–9836.

- Altafini, C.; Speranzon, A.; Wahlberg, B. A feedback control scheme for reversing a truck and trailer vehicle. IEEE Trans. Robot. Autom. 2001, 17, 915–922.

- Fox-Parrish, L.; Jurin, R.R. Multi-Sensor Based Perception Network for Vehicle Driving Assistance. In Proceedings of the 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–6.

- Lu, K.; Li, J.; An, X.; He, H. Vision Sensor-Based Road Detection for Field Robot Navigation. Sensors 2015, 15, 29594–29617.

- Jung, H.-S.; Park, S.-W. Multi-sensor fusion of Landsat 8 thermal infrared (TIR) and panchromatic (PAN) images. Sensors 2014, 14, 24425–24440.

- Laugier, C.; Paromtchik, I.E.; Perrollaz, M.; Yong, M.Y. Probabilistic Analysis of Dynamic Scenes and Collision Risks Assessment to Improve Driving Safety. IEEE Trans. Intell. Transp. Syst. 2011, 3, 4–19.

- Zheng, L.W.; Chang, Y.H.; Li, Z.Z. A study of 3D feature tracking and localization using a stereo vision system. In Proceedings of the 2010 International Computer Symposium, Tainan, Taiwan, 16–18 December 2010; pp. 402–407.

- Morales, J.; Mandow, A.; Martínez, J.L.; Reina, A.J.; García-Cerezo, A. Driver Assistance System for Passive Multi-Trailer Vehicles with Haptic Steering Limitations on the Leading Unit. Sensors 2013, 13, 4485–4498.

- Castillo Aguilar, J.J.; Cabrera Carrillo, J.A.; Guerra Fernández, A.J.; Carabias, A.E. Robust Road Condition Detection System Using In-Vehicle Standard Sensors. Sensors 2015, 15, 32056–32078.

- Liang, Z.; Chaovalitwongse, W.A.; Rodriguez, A.D.; Jeffcoat, D.E.; Grundel, D.A.; O’Neal, J.K. Optimization of spatiotemporal clustering for target tracking from multisensor data. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 2010, 40, 176–188.

- Kumar, M.; Garg, D.P.; Zachery, R.A. A Method for Judicious Fusion of Inconsistent Multiple Sensor Data. IEEE Sens. J. 2007, 7, 723–733.

- Morten, G.; Giovanna, P.; Jensen, A.A.; Karin, S.N.; Martin, S.; Morten, G. Human Detection from a Mobile Robot Using Fusion of Laser and Vision Information. Sensors 2013, 13, 11603–11635.

- Hu, J.; Xie, L.; Lum, K.Y.; Xu, J. Multiagent Information Fusion and Cooperative Control in Target Search. IEEE Trans. Control Syst. Technol. 2013, 21, 1223–1235.

- Bai, L.; Wang, Y. A Sensor Fusion Framework Using Multiple Particle Filters for Video-Based Navigation. IEEE Trans. Intell. Transp. Syst. 2010, 11, 348–358.

- Klir, G.J.; Yuan, B. Fuzzy sets and fuzzy logic: Theory and applications. Int. Encycl. Hum. Geogr. 1995, 13, 283–287.

- Haykin, S.S. Neural Networks: A Comprehensive Foundation; Prentice-Hall: Englewood Cliffs, NJ, USA, 1998.

- Wang, Z.; Leung, K.S.; Wang, J. A genetic algorithm for determining nonadditive set functions in information fusion. Fuzzy Set. Syst. 1999, 102, 463–469.

- Pavlin, G.; Oude, P.D.; Maris, M.; Nunnink, J.; Hood, T. A multi-agent systems approach to distributed bayesian information fusion. Inform. Fusion 2010, 11, 267–282.

- Zhao, X.; Fei, Q.; Geng, Q. Vision Based Ground Target Tracking for Rotor UAV. In Proceedings of the 2013 10th IEEE International Conference on Control and Automation, Hangzhou, China, 12–14 June 2013; pp. 1907–1911.

- Sankarasrinivasan, S.; Balasubramanian, E.; Hsiao, F.Y.; Yang, L.J. Robust Target Tracking Algorithm for MAV Navigation System. In Proceedings of the 2015 International Conference on Industrial Instrumentation and Control, Pune, India, 28–30 May 2015; pp. 269–274.

- Sun, K.; Wang, B.; Hao, Z. Rapid Target Recognition and Tracking under Large Scale Variation Using Semi-Naive Bayesian. In Proceedings of the 29th Chinese Control Conference, Beijing, China, 29–31 July 2010; pp. 2750–2754.

- Sachin, K.; Abhijit, M.; Pavan, C.; Nandi, G.C. Tracking of a Target Person Using Face Recognition by Surveillance Robot. In Proceedings of the International Conference on Communication, Information & Computing Technology, Mumbai, India, 19–20 October 2012; pp. 1–6.

- Yao, S.; Chen, X.; Wang, S.; Jiao, Z.; Wang, Y.; Yu, D. Camshift Algorithm-based Moving Target Recognition and Tracking System. In Proceedings of the 2012 IEEE International Conference on Virtual Environments Human-Computer Interfaces and Measurement Systems (VECIMS), Tianjin, China, 2–4 July 2012; pp. 181–185.

- Jin, B.; Su, T.; Li, Y.; Zhang, L. Multi-target tracking algorithm aided by a high resolution range profile. J. Xidian Univ. 2016, 43, 1–7.

- Ma, Z.; Wen, J.; Hao, L.; Wang, X. Multi-targets Recognition for Surface Moving Platform Vision System Based on Combined Features. In Proceedings of the 2014 IEEE International Conference on Mechatronics and Automation, Tianjin, China, 3–6 August 2014; pp. 1833–1838.

- Lee, C.H.; Lim, Y.C.; Kwon, S.; Lee, J. Stereo vision-based vehicle detection using a road feature and disparity histogram. Opt. Eng. 2011, 50.

- Wang, Y.; Yan, X.; Zhang, W. An Algorithm of Feather and Down Target Detection and Tracking Method Based on Sparse Representation. In Proceedings of the 2015 Seventh International Conference on Measuring Technology and Mechatronics Automation, Nanchang, China, 13–14 June 2015; pp. 87–90.

- Khammari, A.; Nashashibi, F.; Abramson, Y.; Laurgeau, C. Vehicle detection combining gradient analysis and AdaBoost classification. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Vienna, Austria, 13–16 September 2005; pp. 13–16.

- Mei, X.; Ling, H. Robust Visual Tracking and Vehicle Classification via Sparse Representation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2259–2272.

- Mei, X.; Ling, H.; Wu, Y.; Blasch, E.; Bai, L. Minimum error bounded efficient L1 tracker with occlusion detection. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1257–1264.

- Ling, H.; Bai, L.; Blasch, E.; Mei, X. Robust infrared vehicle tracking across target pose change using L1 regularization. In Proceedings of the 13th Information Fusion, Edinburgh, Scotland, 26–29 July 2010; pp. 1–8.

- Bageshwar, V.L.; Garrard, W.L.; Rajamani, R. Model Predictive Control of Transitional Maneuvers for Adaptive Cruise Control Vehicles. IEEE Trans. Veh. Technol. 2004, 53, 1573–1585.

- Xiong, H.; Boyle, L.N. Adaptation to Adaptive Cruise Control: Examination of Automatic and Manual Braking. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1468–1473.

- Du, X.; Tan, K.K. Autonomous Reverse Parking System Based on Robust Path Generation and Improved Sliding Mode Control. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1225–1237.

- Luo, D.Y.; Zhang, Z.T. A Novel Vehicle Speed Control Based on Driver’s Vigilance Detection Using EEG and Sparse Representation. In Proceedings of the Applied Mechanics and Materials, Shanghai, China, 23–24 April 2014; pp. 607–611.

- Mccall, J.C.; Trivedi, M.M. Human Behavior Based Predictive Brake Assistance. In Proceedings of Intelligent Vehicles Symposium, Tokyo, Japan, 13–15 June 2006; pp. 8–12.

- Nguyen, D.; Widrow, B. The truck backer-upper: an example of self-learning in neural networks. In Proceedings of the International Society for Optical Engineering, Los Angeles, CA, USA, 15 January 1989; Volume 2, pp. 357–363.

- Kong, S.G.; Kosko, B. Adaptive fuzzy systems for backing up a truck-and-trailer. IEEE Trans. Neural Netw. 1992, 3, 211–223.

- Hwisoo, E.; Sang, H.L. Human-Automation Interaction Design for Adaptive Cruise Control Systems of Ground Vehicles. Sensors 2015, 15, 13916–13944.

- Bradski, G.; Kaehler, A. Learning OpenCV: Computer Vision with the OpenCV Library; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2008.

- OpenCV, Open Source Computer Vision Library. 2013. Available online: http://opencvlibrary.sourceforge.net (accessed on 7 June 2016).

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).