1. Introduction

Natural ecosystems provide a wide variety of useful resources that enhance human welfare. However, in recent decades, these ecosystem services and their biodiversity have declined [1]. Coastal ecosystems, in particular, are among the most complex, dynamic and productive systems in the world [2]. This creates a need to preserve environmental resources; hence, it is of great importance to develop reliable methodologies applied to new high resolution satellite imagery, so that these environments can be analyzed, conserved and managed in a continuous, reliable and economical way, at suitable spatial, spectral and temporal resolutions. Nevertheless, some processing tasks still need to be improved: for instance, the classification and analysis of land and coastal ecosystems from remote sensing data have known weaknesses, and the resulting maps lack reliability. In particular, monitoring coastal ecosystems from remote sensing imagery is extremely difficult because of the low reflectivity of water-covered areas.

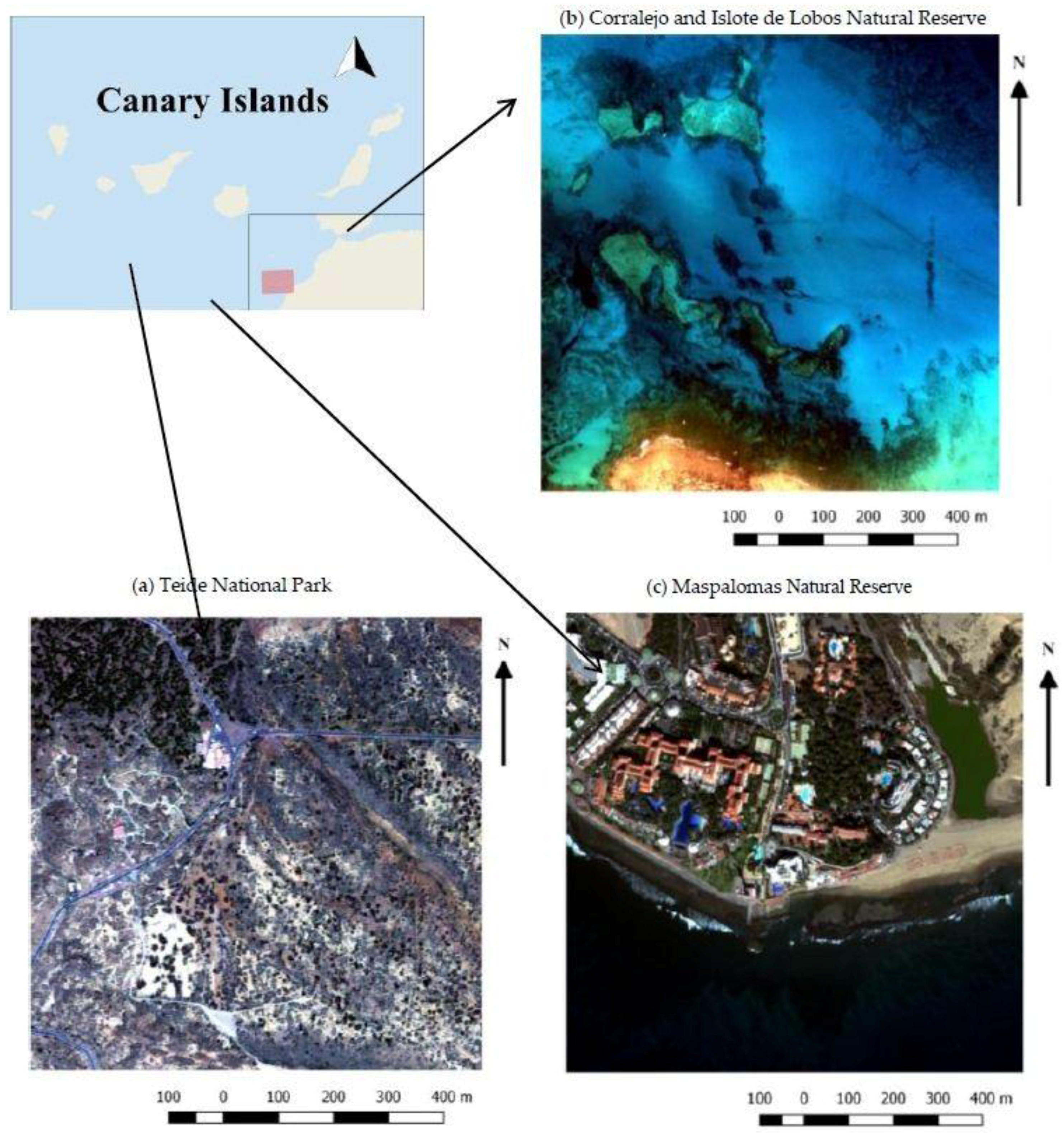

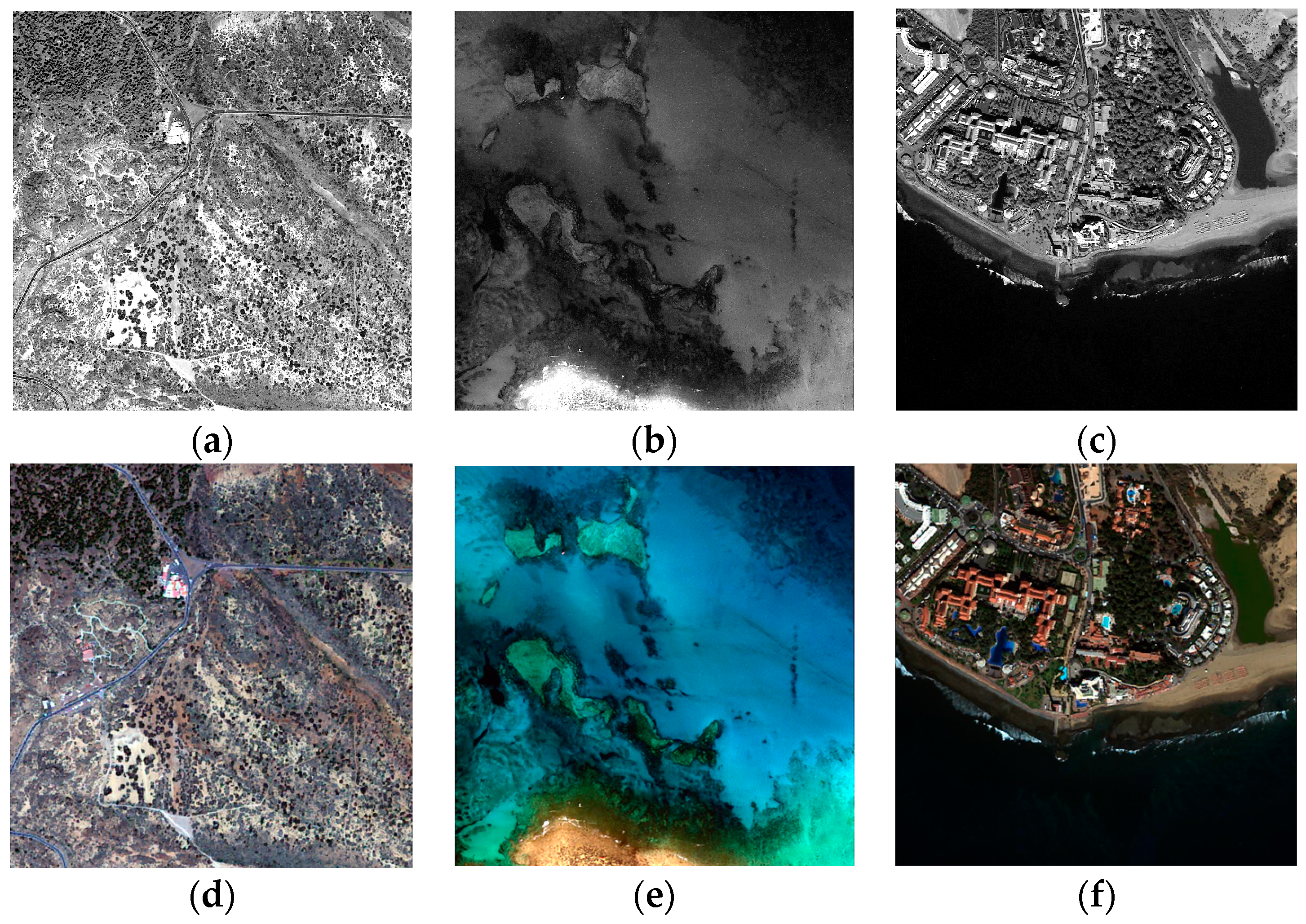

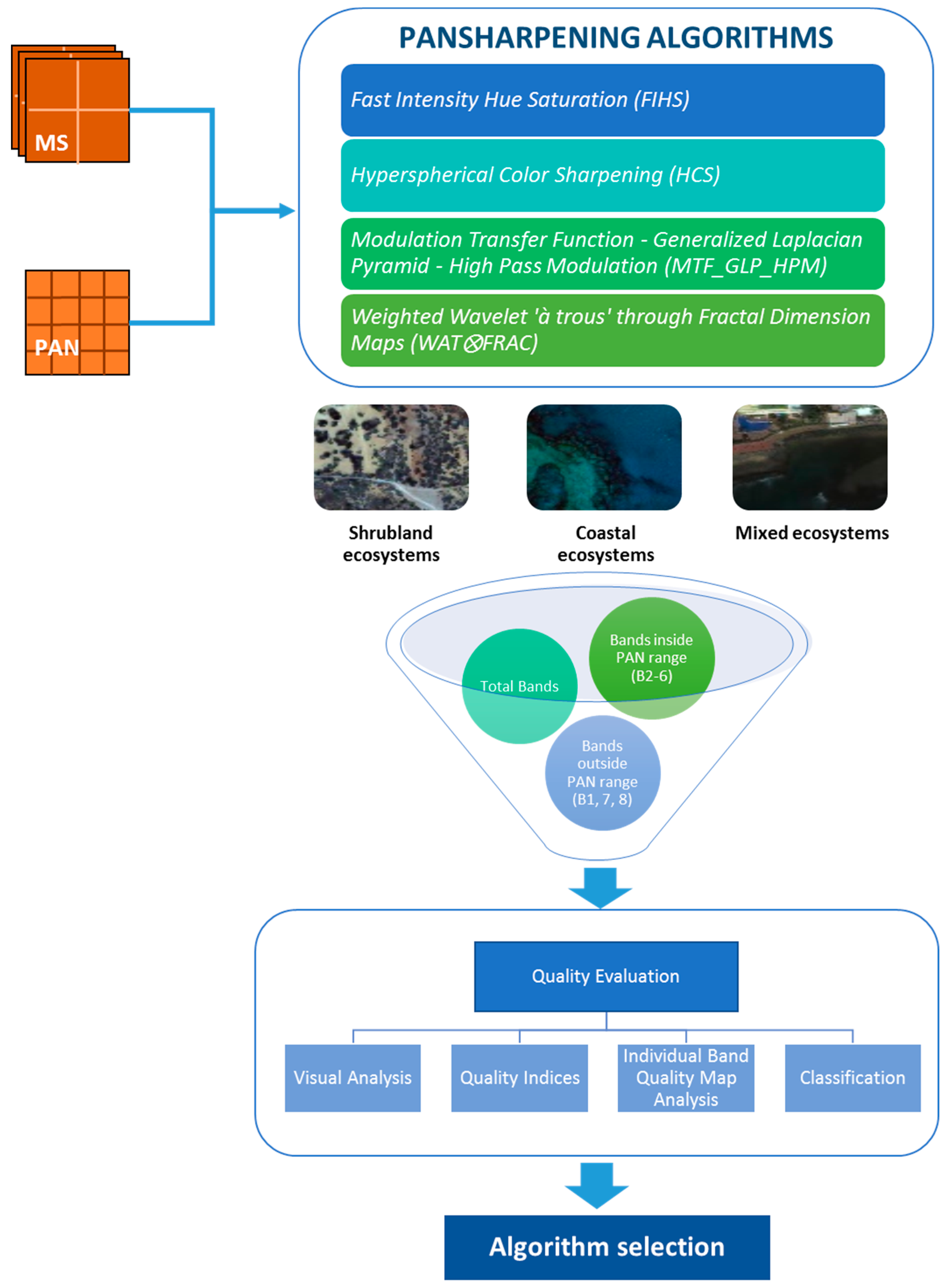

This study is framed within the analysis of both coastal and land ecosystems using very high resolution (VHR) remote sensing imagery, with the aim of obtaining high quality products that allow a comprehensive analysis of natural resources. In this context, remote sensing imagery offers a practical and cost-effective means for environmental management, especially when large areas must be monitored [3] or periodic information is needed. Most VHR optical sensors provide a multispectral (MS) image and a panchromatic (PAN) image, which require a number of corrections and enhancements. Image fusion, or pansharpening, algorithms are important for improving the spatial quality of the available information. Pansharpening can be defined as the process of merging MS and PAN images to create new multispectral images with high spatial resolution [4,5]. This fusion stage is important in the analysis of vulnerable ecosystems such as these, which are mainly characterized by heterogeneous, mixed vegetation with small shrubs in the case of terrestrial ecosystems, and by complex seagrass meadows or algae distributions in shallow water ecosystems.
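Many pansharpening methods can be written in a common detail-injection form. As a hedged sketch in our own notation (not the authors'), let $\widetilde{MS}_k$ be the $k$-th MS band resampled to the PAN grid, $P$ the PAN image, $P_L$ a low-resolution version of $P$ (or a synthetic intensity built from the MS bands), and $g_k$ a band-dependent injection gain:

$$
\widehat{MS}_k = \widetilde{MS}_k + g_k \left( P - P_L \right), \qquad k = 1, \dots, N
$$

Under this reading, component-substitution methods (e.g., IHS, PCA) derive $P_L$ from a weighted combination of the MS bands, whereas multiresolution methods (e.g., HPF, wavelets) obtain it by low-pass filtering $P$.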

Image fusion techniques combine sensor data from different sources with the aim of providing more detailed and reliable information. Extensive research into image fusion techniques in remote sensing started in the 1980s [6,7]. Generally, image fusion can be categorized into three levels: pixel level, feature level and knowledge or decision level [8,9]; pansharpening is performed at the pixel level.

Many pansharpening techniques have appeared in recent decades as a consequence of the launch of very high resolution sensors [10,11,12,13,14]. An ideal pansharpening algorithm should have two main attributes: (i) enhancing spatial resolution; and (ii) minimizing spectral distortion [15]. The simplest pansharpening methods, at the conceptual and computational level, are the intensity-hue-saturation (IHS), principal component analysis (PCA) and Brovey transform (BT) methods. However, these techniques distort the radiometry of the spectral channels after fusion. Newer approaches, such as wavelet transforms and high pass filtering (HPF) [4,8,16,17,18], have been proposed to address these problems of the traditional techniques.
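The Brovey, fast IHS and HPF ideas can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementations evaluated in this study: the MS bands are assumed to be co-registered and already resampled to the PAN grid, the intensity is taken as the unweighted band mean, PAN is not histogram-matched, PCA is omitted for brevity, and the function names (`brovey`, `fast_ihs`, `box_mean`, `hpf`) are hypothetical.

```python
import numpy as np

# Assumed layout: `ms_up` is an (N, H, W) float array with the N MS bands
# already resampled to the PAN grid; `pan` is an (H, W) float array.

def brovey(ms_up, pan, eps=1e-12):
    """Brovey transform: scale every band by PAN / intensity (band mean)."""
    intensity = ms_up.mean(axis=0)
    return ms_up * (pan / (intensity + eps))[None, :, :]

def fast_ihs(ms_up, pan):
    """Fast IHS generalized to N bands: add (PAN - intensity) to every band.
    The intensity is the unweighted band mean here (an assumption)."""
    intensity = ms_up.mean(axis=0)
    return ms_up + (pan - intensity)[None, :, :]

def box_mean(img, size):
    """Mean filter with an odd square window and edge padding."""
    r = size // 2
    padded = np.pad(img, r, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (size, size))
    return windows.mean(axis=(-2, -1))

def hpf(ms_up, pan, size=5):
    """High pass filtering: add PAN minus its low-pass version to every band."""
    detail = pan - box_mean(pan, size)
    return ms_up + detail[None, :, :]

# Tiny synthetic demo (random data, for illustration only).
rng = np.random.default_rng(0)
demo_ms = rng.uniform(1.0, 2.0, size=(8, 16, 16))
demo_pan = rng.uniform(1.0, 2.0, size=(16, 16))
fused_fihs = fast_ihs(demo_ms, demo_pan)
```

By construction, the band mean of the FIHS and Brovey outputs equals the PAN image. This is why both inject spatial detail so directly, and also why they can alter the radiometry of individual bands, which is the drawback noted above.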

Quality evaluation is a fundamental issue for benchmarking and optimizing different pansharpening algorithms [18,19], since the spectral and spatial quality of the fused images must be assessed. Two types of evaluation approaches are commonly used: (1) qualitative analysis (visual assessment); and (2) quantitative analysis (quality indices). Visual analysis is a powerful tool for capturing geometrical aspects [20] and the main color disturbances. According to [10], some visual parameters are necessary for testing the properties of the image, such as the spectral preservation of features, the multispectral synthesis in fused images and the synthesis of images close to actual high resolution images. Quality indices, in turn, measure the spectral and spatial distortion produced by the pansharpening process by comparing each fused image with the reference MS or PAN image. The work in [21] categorized them into three main groups: (i) spectral quality indices, such as the spectral angle mapper (SAM) [22] and the spectral relative dimensionless global error, in French 'erreur relative globale adimensionnelle de synthèse' (ERGAS) [23]; (ii) spatial quality indices, such as the spatial ERGAS [24], the frequency comparison index (FC) [25] and the Zhou index [26]; and (iii) global quality indices, such as the 8-band image quality index (Q8) [27]. In addition, several evaluation techniques require no reference, such as the quality with no reference (QNR) approach [28].
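Several of the indices listed above can be computed directly from a reference image and a fused image. The following NumPy sketch assumes both are `(N, H, W)` float arrays on the same grid (for instance, in a reduced-resolution protocol where the original MS image serves as reference). The function names are hypothetical. Q is computed over the whole band here, whereas it is usually averaged over sliding windows, and neither the hypercomplex Q8 extension nor QNR is implemented.

```python
import numpy as np

# Assumed layout: `ref` and `fused` are (N, H, W) float arrays on the same grid.

def sam_degrees(ref, fused, eps=1e-12):
    """Mean spectral angle (degrees) between per-pixel spectral vectors."""
    dot = (ref * fused).sum(axis=0)
    norms = np.linalg.norm(ref, axis=0) * np.linalg.norm(fused, axis=0)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.degrees(np.arccos(cos)).mean())

def ergas(ref, fused, ratio):
    """ERGAS = 100 * ratio * sqrt(mean_k (RMSE_k / mean_k)^2), where `ratio`
    is the PAN-to-MS pixel-size ratio (e.g. 0.25 for a 1:4 pair)."""
    rmse = np.sqrt(((ref - fused) ** 2).mean(axis=(1, 2)))
    mu = ref.mean(axis=(1, 2))
    return float(100.0 * ratio * np.sqrt(np.mean((rmse / mu) ** 2)))

def q_index(x, y):
    """Universal image quality index (Wang-Bovik Q) over a whole band."""
    x = x.ravel().astype(float)
    y = y.ravel().astype(float)
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cxy = ((x - mx) * (y - my)).mean()
    return float(4 * cxy * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))

# Synthetic reference for illustration only.
rng = np.random.default_rng(1)
demo_ref = rng.uniform(1.0, 2.0, size=(4, 8, 8))
```

SAM is insensitive to a uniform scaling of the spectrum (it only measures angle), while ERGAS penalizes radiometric error band by band; this is one reason the two are usually reported together.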

In this study, the main goal is to assess which pansharpening technique provides the best fused image using WorldView-2 VHR imagery with eight MS bands. Future research will focus on the classification of these vulnerable ecosystems in order to obtain specific products for the management of coastal and land resources, in contrast to several studies devoted solely to assessing and reviewing pansharpening techniques [11,16,20,29,30,31,32,33,34]. Further specific goals of this paper are: (i) the study of the spatial and spectral quality of pansharpened bands covered by and outside the PAN wavelength range; (ii) the analysis of the behavior of pansharpening algorithms in vulnerable natural ecosystems, unlike the majority of previous studies, which apply pansharpening techniques to urban areas or other land cover types; and (iii) a novel assessment of VHR image fusion in shallow coastal waters, bearing in mind the difficulty of pansharpening in these ecosystems. Although other authors, such as [35,36], apply fusion in water areas, they use Landsat imagery rather than VHR imagery. The aim of the last point is to identify which techniques are most suitable for shallow water areas and the improvement they achieve. This information can lead to more accurate seafloor segmentation or mapping of coastal zones [37], enabling studies on the state of conservation of natural resources.

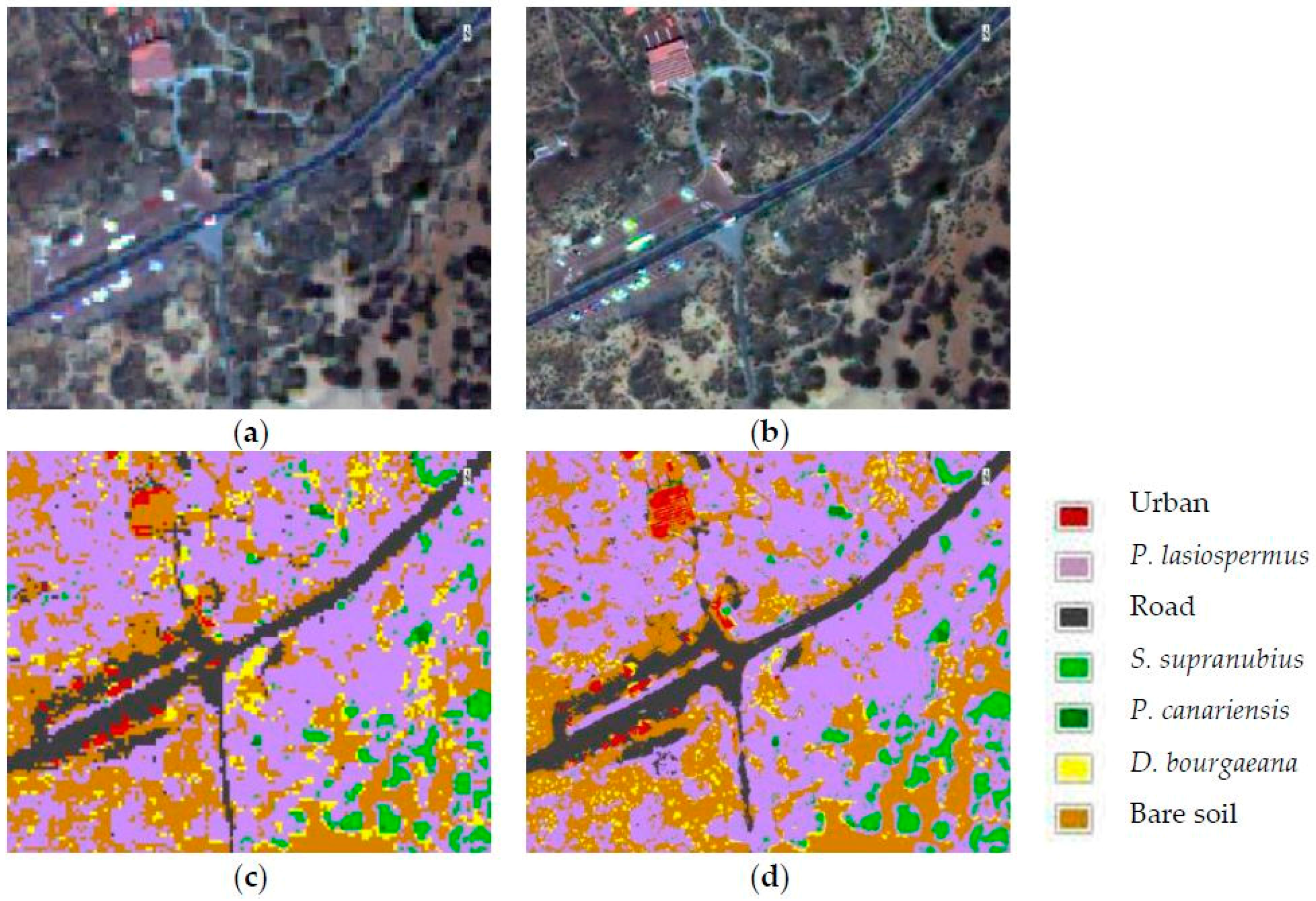

Finally, to strengthen the study, a thematic map of the shrubland area was produced in order to analyze the influence of fusion on the classification results, which provide accurate information for the conservation of natural resources. This study is described in more detail in [38].

The paper is structured as follows. Section 2 describes the study area, the datasets, the image fusion methods used in the analysis and the evaluation methodology. The visual and quantitative evaluation of the different algorithms, as well as the map analysis, are presented in Section 3. Finally, Section 4 provides a critical analysis of the results and summarizes the main outcomes and contributions.

4. Conclusions

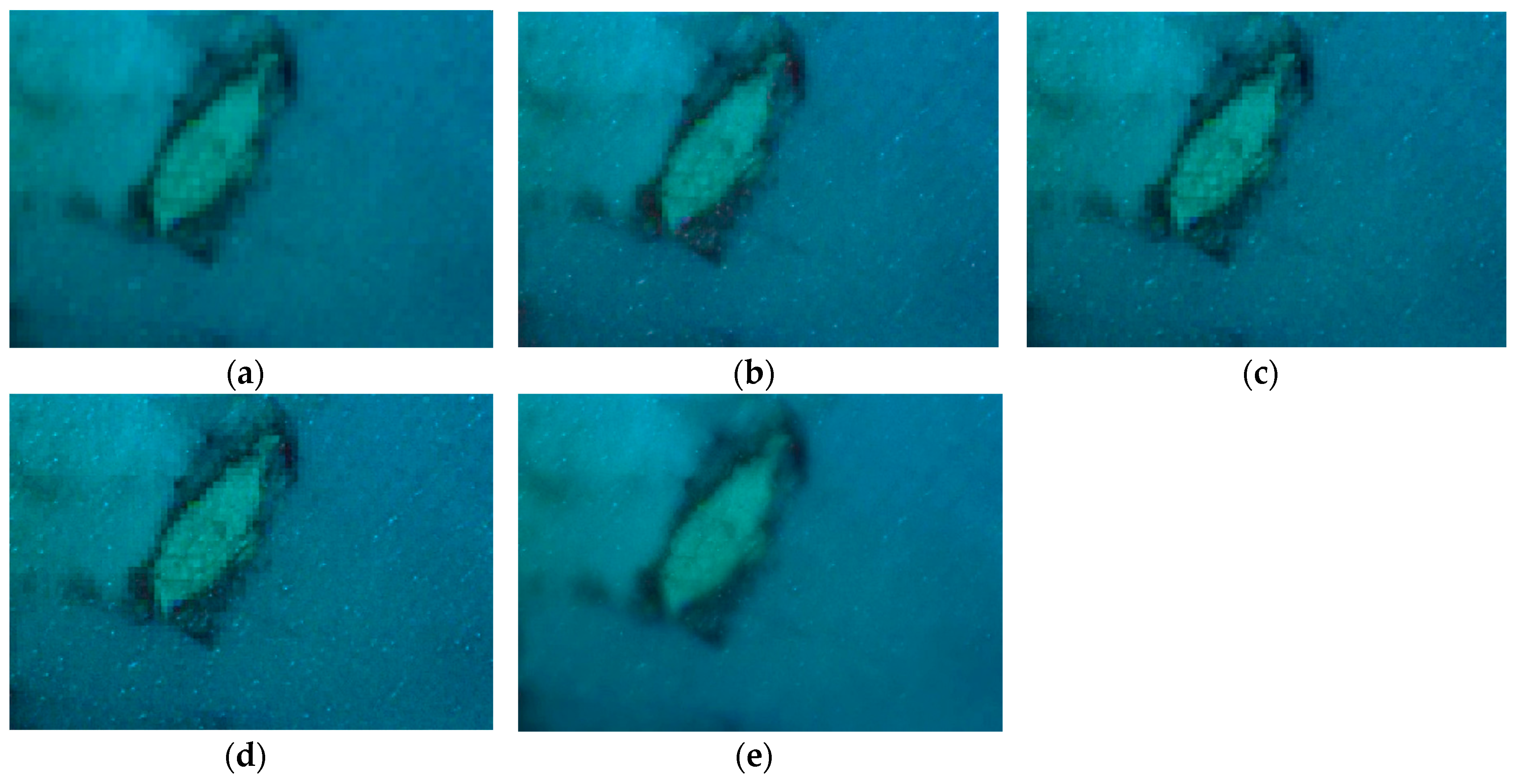

As indicated, the main objective of this work was to select the pansharpening algorithm that provides the image with the best spatial and spectral quality for land and coastal ecosystems. For this reason, the most efficient pansharpening techniques developed in recent years, which aim to achieve the highest spatial resolution of the MS bands while preserving the original spectral information, have been applied and assessed. Since no single pansharpening algorithm exhibited superior performance in all cases, the best techniques have been evaluated in three different types of ecosystems: heterogeneous shrubland ecosystems, coastal systems, and coastal-land transitional zones with inland water and an urban area. Fusion methods have frequently been applied to land and urban areas; however, this work also presents a novel evaluation in shallow water areas using VHR imagery, since fused images could be useful for mapping seabed species such as seagrasses and coral reefs.

After a preliminary assessment of twelve pansharpening techniques, four algorithms were selected for the study. In the literature, four-band sensors (IKONOS, GeoEye, QuickBird, etc.) are mostly used for this kind of study; however, we have sought the best fused image from an eight-band sensor (WorldView-2).

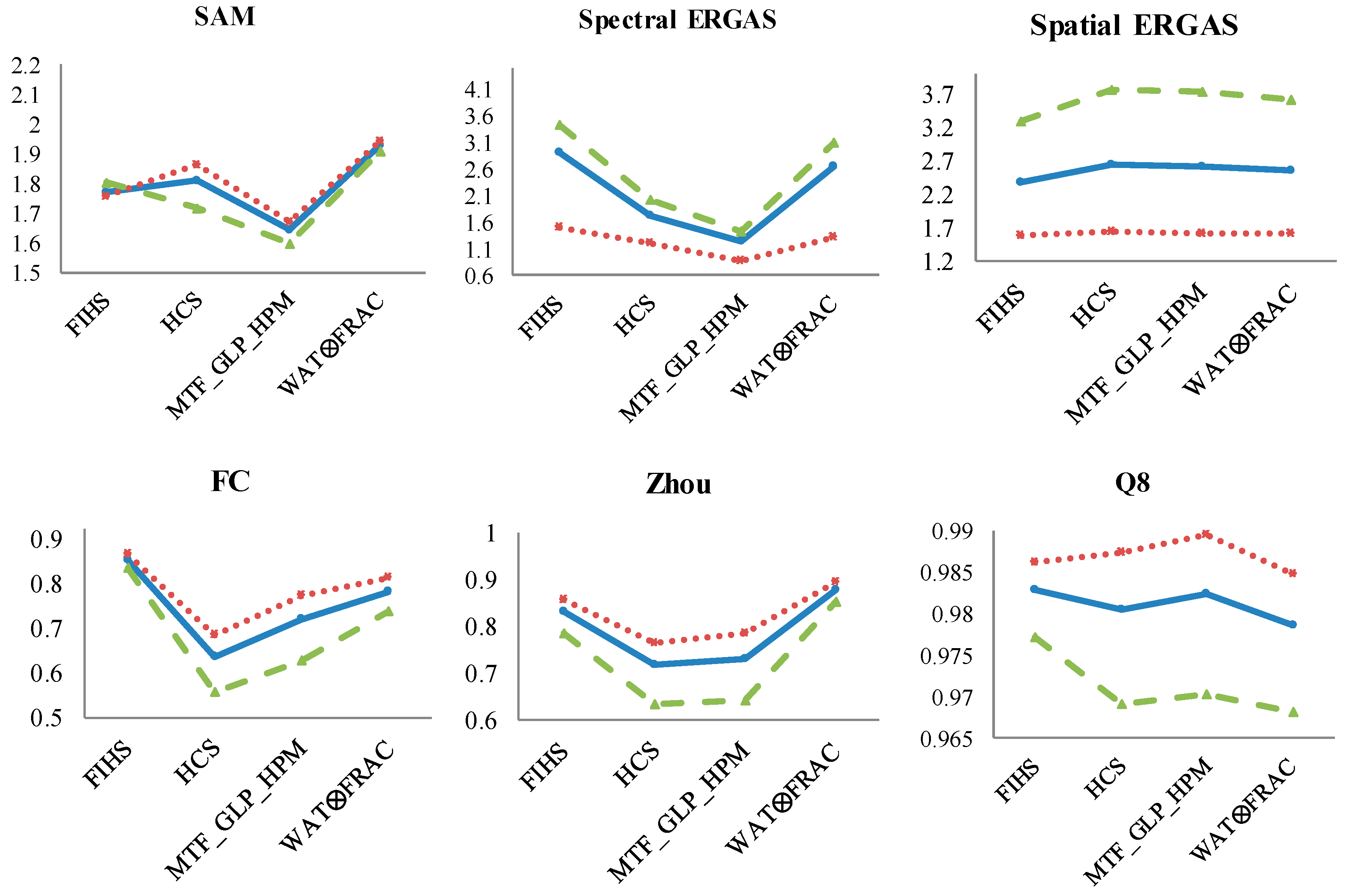

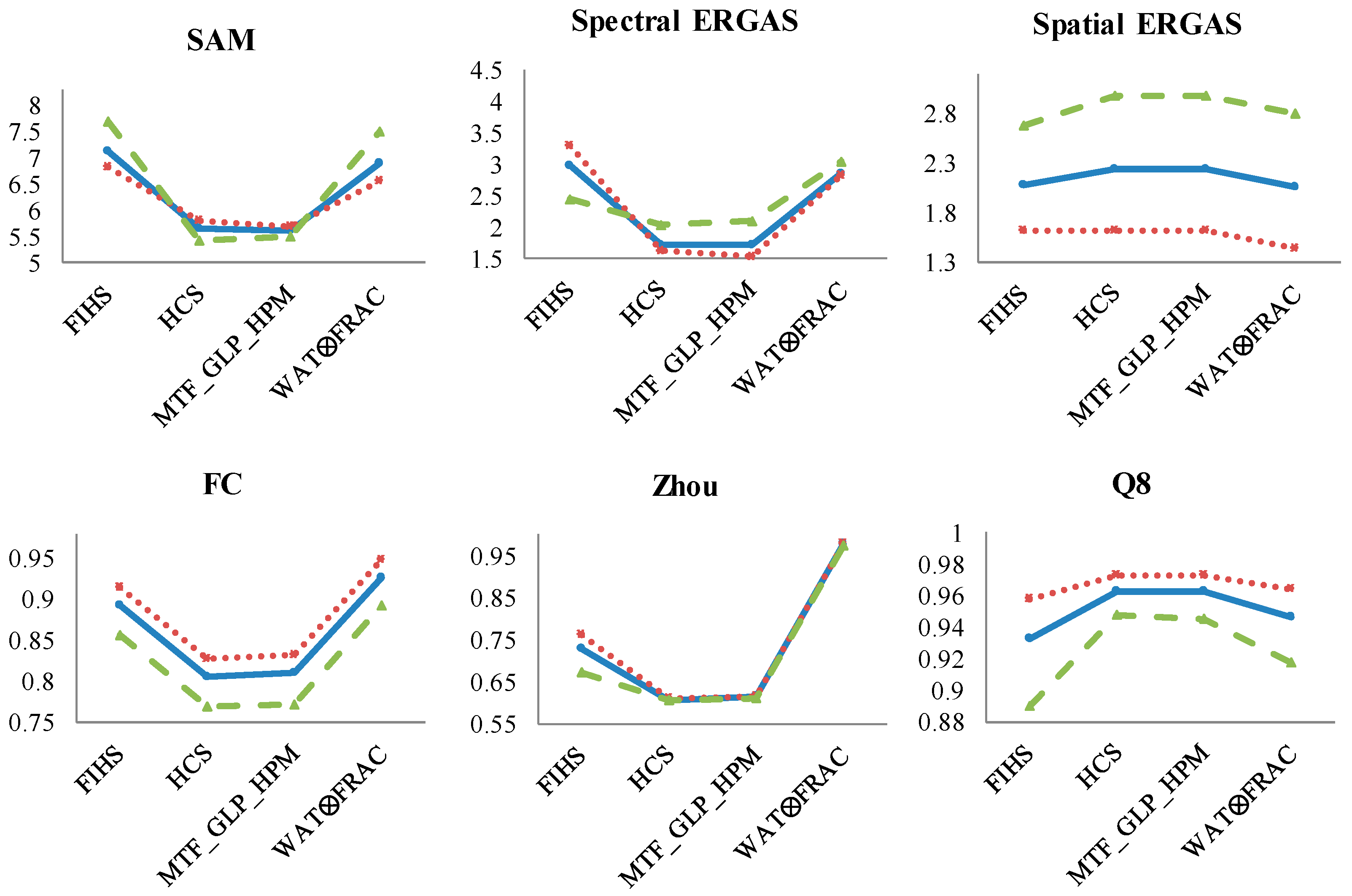

Both a visual evaluation and a quantitative analysis were carried out, the latter using six quality indices at the global, spectral and spatial levels. The best algorithms at the spectral and spatial levels were identified for each type of ecosystem. Finally, the fusion technique offering the best trade-off between spectral and spatial quality was identified using the Borda count method. Thus, we provide guidance to help users choose the most suitable algorithm according to the type of ecosystem and the information to be preserved.
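The Borda count step can be illustrated with a short sketch. The algorithm names and index values below are toy numbers invented for illustration, not results of this study. Each index ranks the n algorithms and awards n-1 points to the best down to 0 to the worst, the direction of "better" is given per index, and ties are broken arbitrarily by sort order (an assumption; the scoring variant used in the study may differ).

```python
def borda(scores, higher_is_better):
    """Aggregate per-index rankings with a Borda count.

    scores: dict index_name -> dict algorithm -> index value.
    higher_is_better: dict index_name -> bool (True if larger values are better).
    Returns a dict algorithm -> points, ordered from best to worst.
    """
    totals = {}
    for index, values in scores.items():
        # Best algorithm first for this index.
        ranked = sorted(values, key=values.get, reverse=higher_is_better[index])
        n = len(ranked)
        for rank, alg in enumerate(ranked):
            totals[alg] = totals.get(alg, 0) + (n - 1 - rank)
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))

# Hypothetical toy input (not data from this study).
demo_scores = {
    "SAM": {"alg_A": 2.1, "alg_B": 1.8, "alg_C": 2.5},     # lower is better
    "ERGAS": {"alg_A": 3.0, "alg_B": 3.4, "alg_C": 2.9},   # lower is better
    "Q": {"alg_A": 0.91, "alg_B": 0.88, "alg_C": 0.85},    # higher is better
}
demo_higher_is_better = {"SAM": False, "ERGAS": False, "Q": True}
print(borda(demo_scores, demo_higher_is_better))
```

Here alg_A wins overall without being best on every index, which mirrors the idea of a compromise between spectral and spatial quality rather than a single-index winner.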

It is interesting to observe that, for land regions, the MTF algorithm performs better at preserving spectral quality, while the weighted wavelet 'à trous' method based on fractal dimension maps (WAT⊗FRAC) yields better results in terms of the spatial detail of the fused imagery. Balancing spectral and spatial quality, the most appropriate pansharpening algorithm for shrubland and mixed ecosystems is WAT⊗FRAC, while FIHS is selected for the coastal ecosystems. Note that, to date, WAT⊗FRAC had only been used in agricultural areas; here we have applied it successfully to vulnerable natural ecosystems. Moreover, we conclude that the more heterogeneous the area to be fused, the smaller the window size should be in WAT⊗FRAC. FIHS provides the best overall fused image in the simplest scenario. Thus, even though there is no remarkable difference in the way the algorithms perform on land and water areas, we conclude that images with low variability, such as a coastal scenario mostly covered by water, require simpler algorithms, whereas more complex and heterogeneous images (i.e., shrubland and mixed ecosystems) need more advanced algorithms to obtain good fused imagery.
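The wavelet component of WAT⊗FRAC rests on the 'à trous' decomposition. As a hedged sketch, the code below implements only the plain additive à trous injection with the B3-spline kernel commonly used for this transform; the fractal-dimension weighting and window-size selection that distinguish WAT⊗FRAC are not reproduced, and all names are hypothetical. Two decomposition levels are assumed by default, matching a 1:4 PAN-to-MS resolution ratio.

```python
import numpy as np

B3 = np.array([1, 4, 6, 4, 1]) / 16.0  # B3-spline kernel commonly used with "à trous"

def _smooth(img, step):
    """Separable B3-spline smoothing with holes ("à trous") of spacing `step`."""
    pad = 2 * step
    out = img.astype(float)
    for axis in (0, 1):
        widths = [(pad, pad) if a == axis else (0, 0) for a in range(2)]
        padded = np.pad(out, widths, mode="edge")
        n = out.shape[axis]
        acc = np.zeros_like(out)
        for i, w in enumerate(B3):
            sl = [slice(None), slice(None)]
            sl[axis] = slice(i * step, i * step + n)  # tap at offset (i - 2) * step
            acc += w * padded[tuple(sl)]
        out = acc
    return out

def atrous_planes(img, levels):
    """Return the wavelet (detail) planes and the final approximation."""
    approx = img.astype(float)
    planes = []
    for j in range(levels):
        smoother = _smooth(approx, 2 ** j)
        planes.append(approx - smoother)
        approx = smoother
    return planes, approx

def additive_atrous_fusion(ms_up, pan, levels=2):
    """Add the PAN detail planes to every resampled MS band (no fractal weighting)."""
    planes, _ = atrous_planes(pan, levels)
    return ms_up + sum(planes)[None, :, :]

# Synthetic demo data (illustration only).
rng = np.random.default_rng(2)
demo_ms = rng.uniform(1.0, 2.0, size=(8, 16, 16))
demo_pan = rng.uniform(1.0, 2.0, size=(16, 16))
```

Because the detail planes telescope, their sum plus the final approximation reconstructs the PAN image exactly; a weighted variant such as WAT⊗FRAC would instead scale the injected planes locally.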

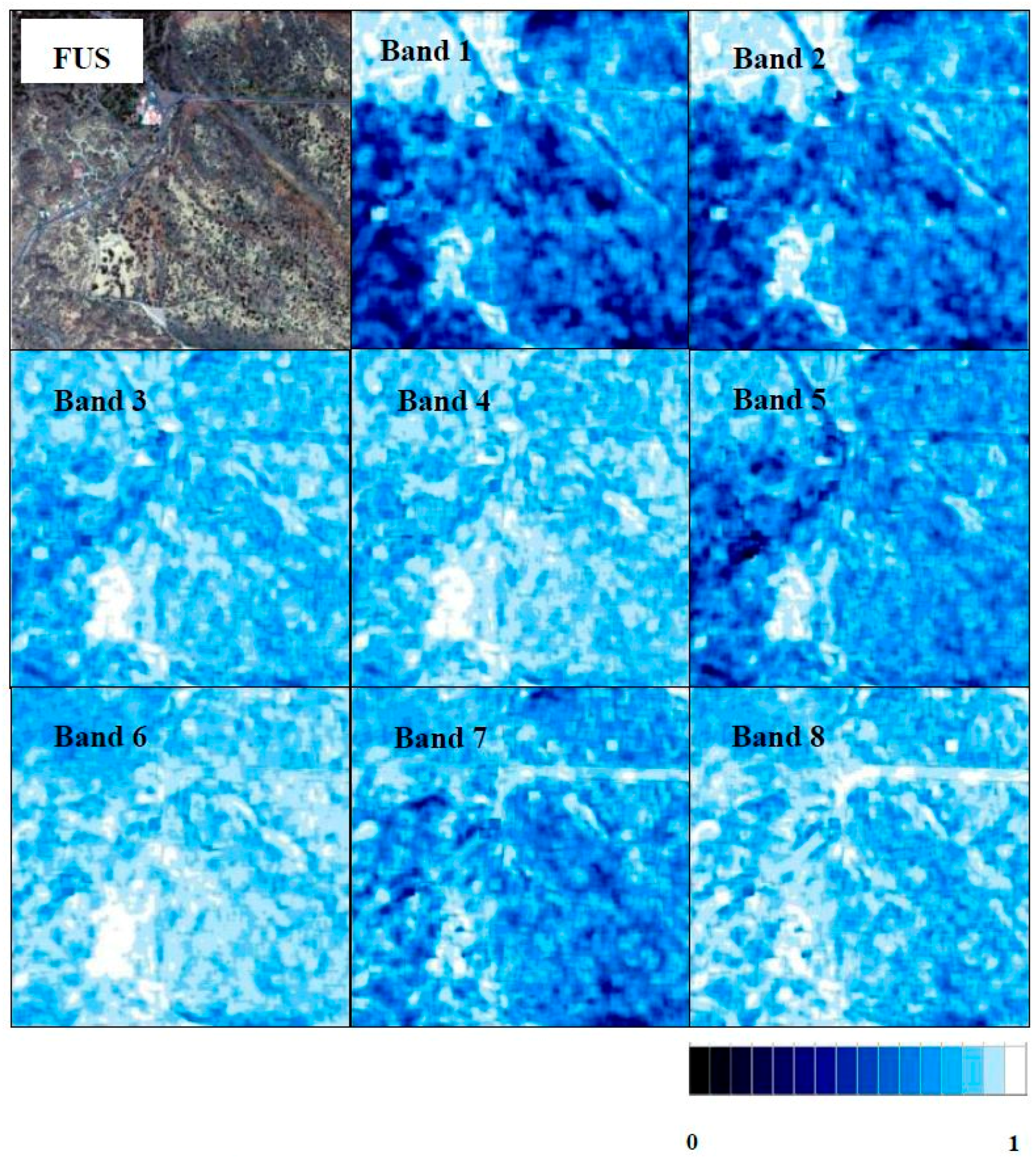

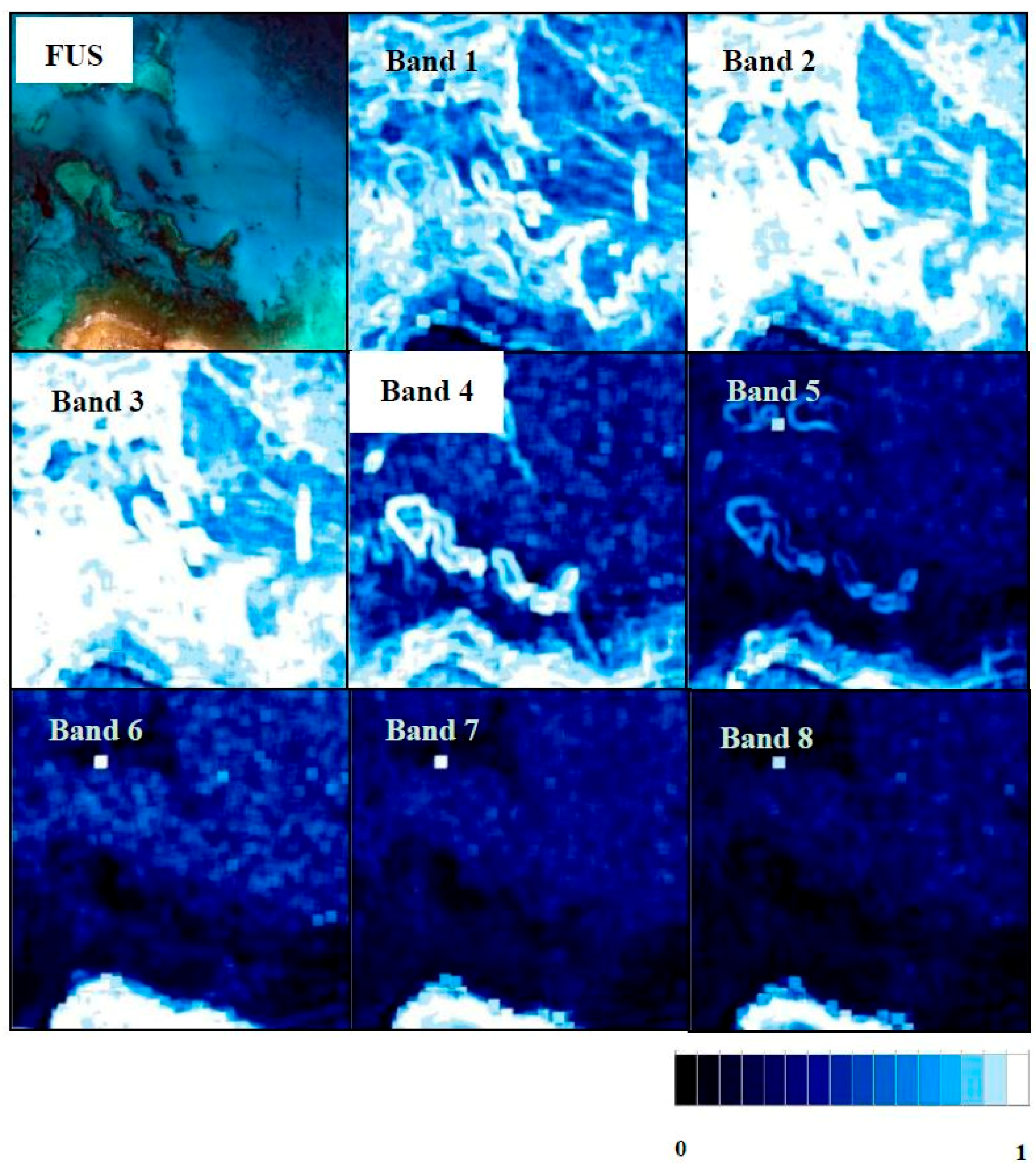

Moreover, we have studied the behavior of each algorithm when applied to the complete set of MS bands and to the bands covered by and outside the PAN range. In general, Bands 2–6 have better spatial and spectral quality, although the quality of the remaining bands is acceptable. The results also show that the same algorithm behaves differently on land and coastal areas: fusion quality tends to be higher on land and lower over bodies of water.
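Which bands count as covered by the PAN range can be checked from the band edges. The nominal WorldView-2 wavelength intervals below are assumed values recalled from the sensor's public specification and should be verified against official documentation; the helper `pan_coverage` is hypothetical.

```python
# Nominal WorldView-2 band edges in nm (assumed values; verify against the
# official sensor specification before relying on them).
PAN_RANGE = (450, 800)
WV2_BANDS = {
    1: ("Coastal", 400, 450),
    2: ("Blue", 450, 510),
    3: ("Green", 510, 580),
    4: ("Yellow", 585, 625),
    5: ("Red", 630, 690),
    6: ("Red Edge", 705, 745),
    7: ("NIR1", 770, 895),
    8: ("NIR2", 860, 1040),
}

def pan_coverage(lo, hi, pan=PAN_RANGE):
    """Fraction of the band interval [lo, hi] that lies inside the PAN range."""
    overlap = max(0.0, min(hi, pan[1]) - max(lo, pan[0]))
    return overlap / (hi - lo)

for band, (name, lo, hi) in WV2_BANDS.items():
    print(band, name, round(pan_coverage(lo, hi), 2))
```

With these assumed edges, Bands 2–6 lie entirely within the PAN range, Band 7 only partially, and Bands 1 and 8 fall outside it.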

Additionally, a local study was carried out to identify the distortion introduced in each individual band by the best fusion algorithm chosen for each scenario. In general, Bands 3–8 attained higher quality in land areas, whereas in water areas the red and near-infrared bands (5, 7 and 8) exhibit high spectral distortion. However, these bands are not usually used in seabed mapping applications because of their low penetration in water.

Finally, it is important to recall the need to obtain the best fused image in the analyzed ecosystems, as they are heterogeneous regions: sparse vegetation mainly made up of small, mixed shrubs with reduced leaf area in the case of shrubland ecosystems, and low reflected radiance in complex and dynamic coastal ecosystems. In this context, thematic maps were obtained with a support vector machine (SVM) classifier from both the original MS image and the WAT⊗FRAC fused image. The results corroborate the superior performance of WAT⊗FRAC for generating accurate thematic maps of the shrubland ecosystem. These results are now being applied to the generation of challenging thematic maps of protected coastal and land areas, and studies of the state of conservation of natural resources will be carried out.