Fusion Based on Visible Light Positioning and Inertial Navigation Using Extended Kalman Filters

Abstract

1. Introduction

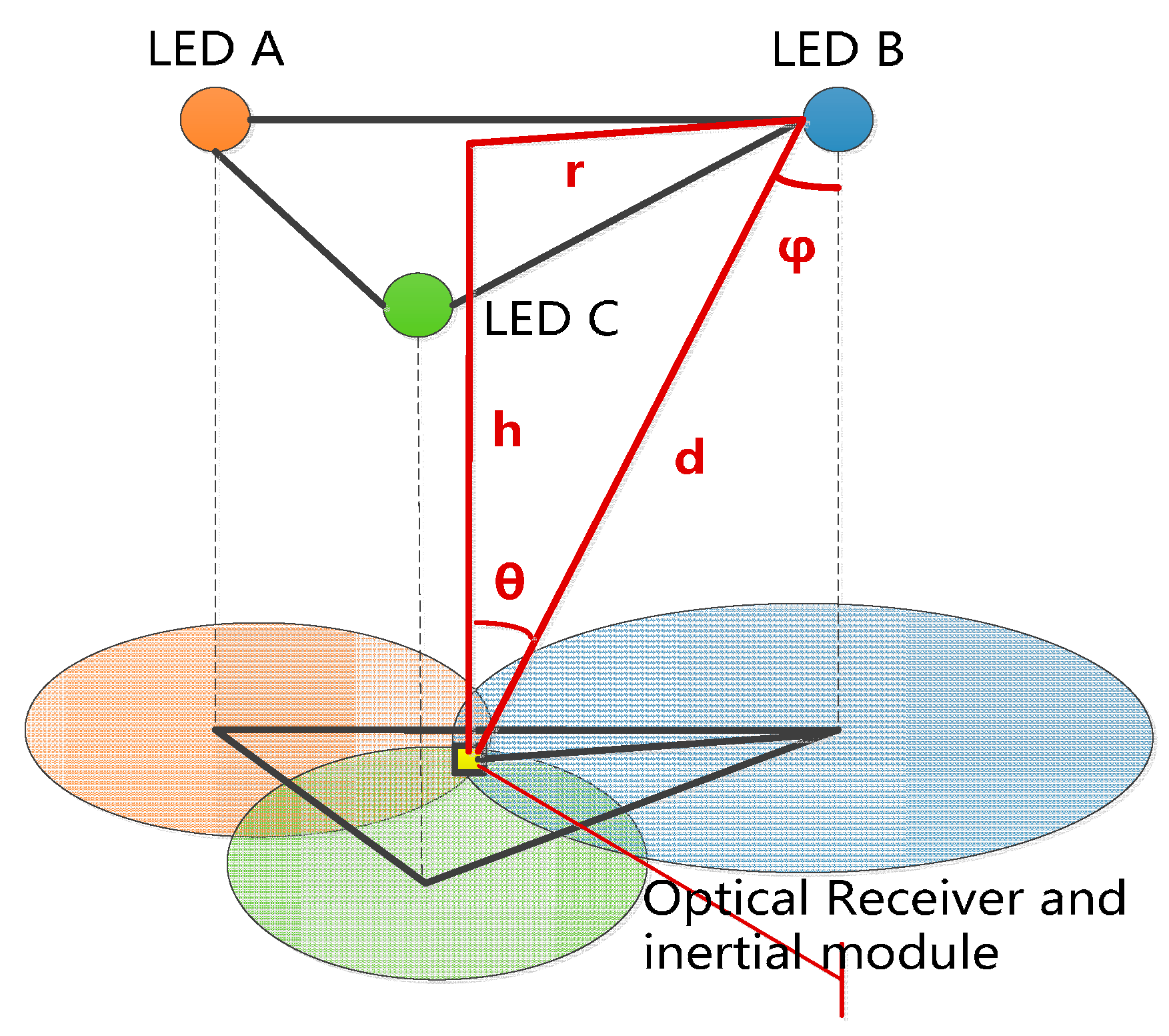

2. System Configuration and Algorithm

2.1. System Design

2.2. VLC Positioning Algorithm
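
A common way to implement VLC positioning, used here only as an illustration and not necessarily the paper's exact method, is received-signal-strength (RSS) ranging under the line-of-sight Lambertian channel model, followed by least-squares trilateration. The sketch assumes that each LED faces straight down, that the photodiode faces straight up (so the irradiance and incidence angles satisfy cos φ = cos ψ = h/d), that every LED is inside the receiver field of view, and that filter and concentrator gains equal 1. The function names and all numbers (LED layout, height, detector area, power) are hypothetical.

```python
import math

def lambertian_order(half_power_semi_angle_deg):
    """Lambertian order m = -ln 2 / ln(cos(Phi_1/2)) of the LED."""
    return -math.log(2) / math.log(math.cos(math.radians(half_power_semi_angle_deg)))

def received_power(p_tx, m, area, height, d):
    """LOS Lambertian model with cos(phi) = cos(psi) = height / d (assumed geometry):
    P_rx = P_tx * (m + 1) * A * h**(m + 1) / (2 * pi * d**(m + 3))."""
    return p_tx * (m + 1) * area * height ** (m + 1) / (2 * math.pi * d ** (m + 3))

def distance_from_rss(p_rx, p_tx, m, area, height):
    """Invert received_power() to get the LED-to-receiver distance d."""
    return ((m + 1) * area * p_tx * height ** (m + 1) / (2 * math.pi * p_rx)) ** (1.0 / (m + 3))

def trilaterate(leds, radii):
    """Least-squares (x, y) from >= 3 LED positions and horizontal ranges.

    Each circle (x - xi)^2 + (y - yi)^2 = ri^2 is differenced against the last
    one, which cancels the quadratic terms and leaves a linear system A p = b.
    """
    xn, yn = leds[-1]
    rn = radii[-1]
    rows, rhs = [], []
    for (xi, yi), ri in zip(leds[:-1], radii[:-1]):
        rows.append((2 * (xn - xi), 2 * (yn - yi)))
        rhs.append(ri ** 2 - rn ** 2 - xi ** 2 + xn ** 2 - yi ** 2 + yn ** 2)
    # Normal equations (A^T A) p = A^T b, solved with Cramer's rule (2 unknowns).
    a11 = sum(r[0] * r[0] for r in rows)
    a12 = sum(r[0] * r[1] for r in rows)
    a22 = sum(r[1] * r[1] for r in rows)
    b1 = sum(r[0] * v for r, v in zip(rows, rhs))
    b2 = sum(r[1] * v for r, v in zip(rows, rhs))
    det = a11 * a22 - a12 * a12
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)

# Usage with hypothetical values: four ceiling LEDs, receiver 2.5 m below them.
leds = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]  # LED (x, y) in m
h, area, p_tx = 2.5, 1e-4, 1.0  # vertical distance (m), PD area (m^2), optical power (W)
m = lambertian_order(60.0)
true_pos = (0.8, 1.1)
radii = []
for lx, ly in leds:
    d_true = math.sqrt((true_pos[0] - lx) ** 2 + (true_pos[1] - ly) ** 2 + h ** 2)
    d_est = distance_from_rss(received_power(p_tx, m, area, h, d_true), p_tx, m, area, h)
    radii.append(math.sqrt(max(d_est ** 2 - h ** 2, 0.0)))  # horizontal range
estimate = trilaterate(leds, radii)
print(estimate)  # noise-free RSS, so this recovers true_pos up to rounding
```

With real measurements the RSS values are noisy and multipath adds bias, so the ranges no longer intersect at one point; the least-squares step then returns the best compromise rather than an exact solution.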

2.3. Inertial Navigation Algorithm
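
For pedestrians, inertial navigation is often realized as pedestrian dead reckoning (PDR): detect steps from the accelerometer, estimate each step length, and advance the position along the current heading. The sketch below is one such scheme under stated assumptions, not the paper's confirmed method: steps are taken as local peaks of the acceleration magnitude above a threshold, step length uses Weinberg's empirical formula L = K (a_max - a_min)^(1/4) with a per-user constant K, and heading is supplied externally (for example from a gyroscope or magnetometer). The threshold, minimum peak spacing, K, sampling rate, and the synthetic signal are all hypothetical.

```python
import math

def detect_steps(acc_norm, threshold=10.5, min_gap=10):
    """Return sample indices of step peaks in the acceleration magnitude (m/s^2).

    A sample counts as a step when it is a local maximum above `threshold`
    and lies at least `min_gap` samples after the previous detected step.
    """
    steps = []
    for i in range(1, len(acc_norm) - 1):
        is_peak = acc_norm[i] >= acc_norm[i - 1] and acc_norm[i] > acc_norm[i + 1]
        if is_peak and acc_norm[i] > threshold and (not steps or i - steps[-1] >= min_gap):
            steps.append(i)
    return steps

def weinberg_step_length(acc_window, k=0.5):
    """Weinberg estimate L = k * (a_max - a_min) ** (1/4); k must be calibrated per user."""
    return k * (max(acc_window) - min(acc_window)) ** 0.25

def pdr_update(x, y, step_length, heading_rad):
    """Advance the 2-D position by one step (heading 0 = +x axis, counter-clockwise)."""
    return x + step_length * math.cos(heading_rad), y + step_length * math.sin(heading_rad)

# Usage with a synthetic signal: hypothetical 50 Hz IMU, walking at 2 steps per second.
fs, f_step = 50, 2.0
acc = [9.81 + 2.0 * math.sin(2 * math.pi * f_step * i / fs) for i in range(100)]
steps = detect_steps(acc)
x, y = 0.0, 0.0
for i in steps:
    window = acc[max(0, i - 12):i + 13]  # about one stride around the peak
    x, y = pdr_update(x, y, weinberg_step_length(window), heading_rad=0.0)
print(steps, round(x, 2), round(y, 2))
```

Because each update adds the errors of the step-length and heading estimates, the PDR position drifts without bound; this drift is the reason for correcting it with absolute VLC fixes.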

2.4. Fusion Position Algorithm Based on the Kalman Filter
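
One possible extended Kalman filter (EKF) formulation for fusing the two sources, shown as an assumed sketch rather than the paper's exact model, uses the state [x, y, θ]. The prediction step is the nonlinear PDR motion model driven by step length L and heading increment Δθ, linearized through its Jacobians. The update step is a VLC position fix, which is linear in the state (H selects x and y). The class name, noise variances, and trajectory below are hypothetical.

```python
import numpy as np

class VlcPdrEkf:
    """EKF with state [x, y, heading].

    predict(): nonlinear PDR motion model driven by (step length, heading increment).
    update():  linear VLC position measurement z = [x, y] + noise.
    """

    def __init__(self, x0, P0, var_step, var_dheading, var_vlc):
        self.x = np.asarray(x0, dtype=float)
        self.P = np.asarray(P0, dtype=float)
        self.Q = np.diag([var_step, var_dheading])  # step length (m^2), heading increment (rad^2)
        self.R = var_vlc * np.eye(2)                # VLC position noise (m^2)
        self.H = np.array([[1.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0]])

    def predict(self, step_length, d_heading):
        px, py, th = self.x
        th_new = th + d_heading
        c, s = np.cos(th_new), np.sin(th_new)
        self.x = np.array([px + step_length * c, py + step_length * s, th_new])
        # Jacobian of the motion model with respect to the state.
        F = np.array([[1.0, 0.0, -step_length * s],
                      [0.0, 1.0, step_length * c],
                      [0.0, 0.0, 1.0]])
        # Jacobian with respect to the control inputs (step length, heading increment).
        G = np.array([[c, -step_length * s],
                      [s, step_length * c],
                      [0.0, 1.0]])
        self.P = F @ self.P @ F.T + G @ self.Q @ G.T

    def update(self, z):
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(3) - K @ self.H) @ self.P

# Usage with made-up numbers: five 0.7 m steps along +x, one noisy VLC fix after each step.
rng = np.random.default_rng(0)
ekf = VlcPdrEkf([0.0, 0.0, 0.0], np.diag([0.1, 0.1, 0.01]),
                var_step=0.01, var_dheading=0.001, var_vlc=0.3 ** 2)
for k in range(1, 6):
    ekf.predict(step_length=0.7, d_heading=0.0)
    ekf.update([0.7 * k + rng.normal(0.0, 0.3), rng.normal(0.0, 0.3)])
    print(k, np.round(ekf.x[:2], 3))
```

In this formulation the inertial prediction carries the position between VLC fixes, while each fix bounds the accumulated PDR drift; the relative size of Q and R decides how strongly the filter trusts each source.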

3. Field Experiment and Results

3.1. Experiment Setup

3.1.1. Structure of the Fusion Positioning System

3.1.2. Experiment Environment

3.2. Experiment Results

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Positioning errors of VLC positioning alone and of the EKF-based VLC/inertial fusion.

| Error | VLC only | EKF fusion |
|---|---|---|
| Maximum | 0.619 m | 0.411 m |
| Average | 0.339 m | 0.145 m |
| Minimum | 0.167 m | 0.137 m |
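
The improvement reported in the table can be expressed as a relative error reduction, (VLC − EKF) / VLC. The short calculation below uses only the values from the table; the dictionary and function names are illustrative.

```python
# Positioning errors (m) from the table: (VLC only, EKF fusion).
errors = {
    "Maximum": (0.619, 0.411),
    "Average": (0.339, 0.145),
    "Minimum": (0.167, 0.137),
}

def relative_reduction(vlc, ekf):
    """Fraction by which the EKF fusion error is smaller than the VLC-only error."""
    return (vlc - ekf) / vlc

for name, (vlc, ekf) in errors.items():
    print(f"{name}: {100 * relative_reduction(vlc, ekf):.1f} % lower with EKF fusion")
# Maximum: 33.6 % lower with EKF fusion
# Average: 57.2 % lower with EKF fusion
# Minimum: 18.0 % lower with EKF fusion
```

The largest gain is in the average error (0.339 m to 0.145 m), while the minimum error changes least, which is consistent with fusion mainly suppressing the larger VLC errors.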

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, Z.; Feng, L.; Yang, A. Fusion Based on Visible Light Positioning and Inertial Navigation Using Extended Kalman Filters. Sensors 2017, 17, 1093. https://doi.org/10.3390/s17051093