Octopus: A Design Methodology for Motion Capture Wearables

Abstract

1. Introduction

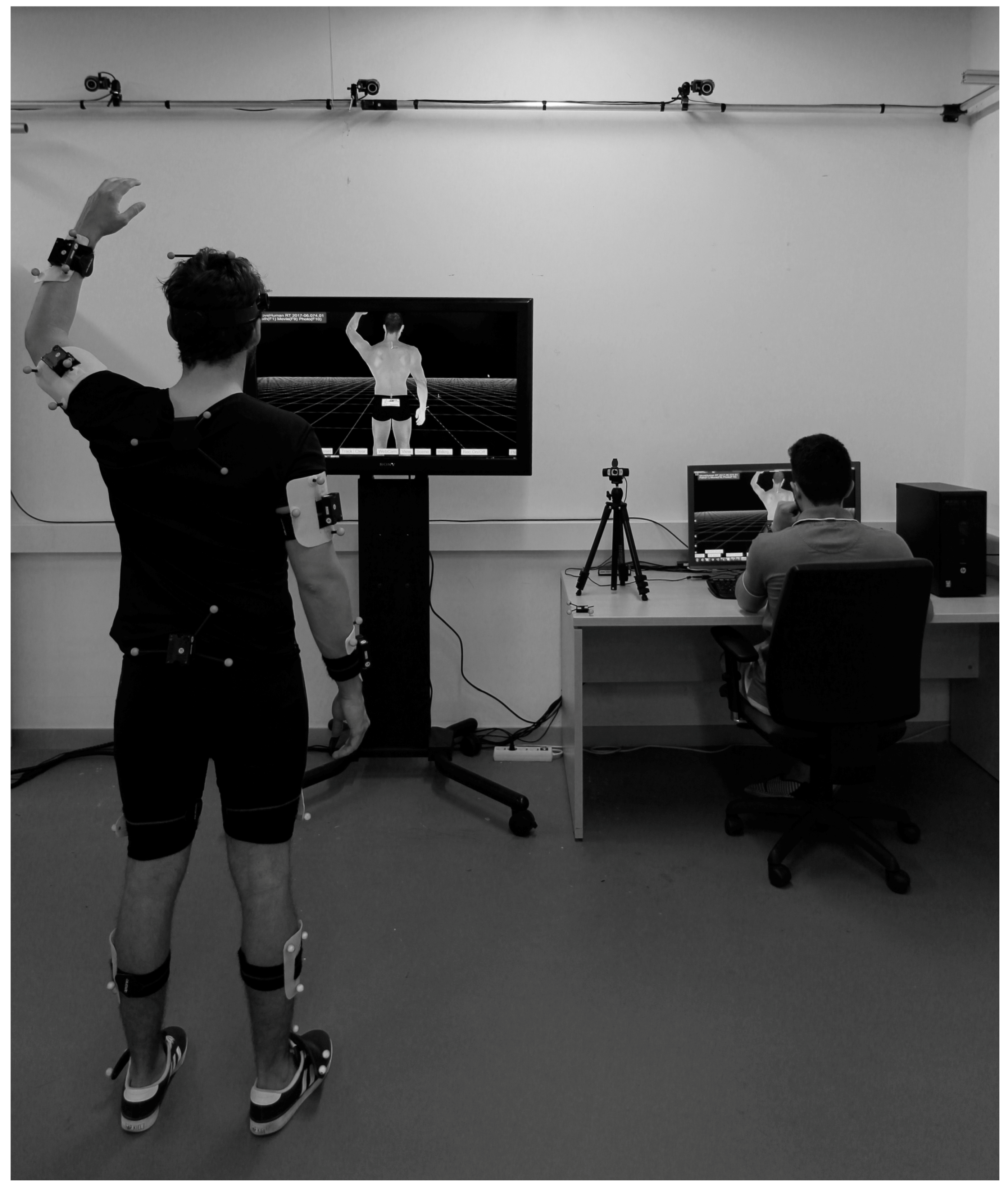

2. Materials and Methods

2.1. Contextual Factors

2.2. User Interaction Factors

2.2.1. User Interface with MoCap-Wearable Device

- Visual feedback: Interaction with LEDs, images, or text. From the actor user's perspective, a convenient point of interaction may be the upper face of the wrist, inspired by wristwatch-style wearables, or other visible areas of the body, such as the legs or arms.

- Aural feedback: Sounds, beeps, instructions, etc.
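The case study at the end of this article (Step 4, device-to-professional interaction) uses one concrete visual-feedback scheme: a green LED while transmitting with no movement recorded, a red LED while recording, and a blue LED once movement has been recorded. The following is a minimal sketch of how that scheme might be encoded as a state function. The names `LedColour` and `led_colour` and the two Boolean inputs are hypothetical, and the sketch assumes the device is already connected and transmitting.

```python
from enum import Enum


class LedColour(Enum):
    # Colour scheme taken from the case study; the enum itself is illustrative.
    GREEN = "transmitting, no movement recorded"
    RED = "recording"
    BLUE = "movement recorded"


def led_colour(recording: bool, movement_recorded: bool) -> LedColour:
    """Map the (hypothetical) device state to the case-study LED colour."""
    if recording:
        return LedColour.RED        # recording takes priority over stored data
    if movement_recorded:
        return LedColour.BLUE       # a recording has been completed and stored
    return LedColour.GREEN          # connected and transmitting, nothing recorded


# Usage: a typical sequence during one recording period.
for state in [(False, False), (True, False), (False, True)]:
    print(led_colour(*state).name)
```

Giving recording priority over stored data means that a new recording always shows red, even if an earlier one is still held on the device.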

2.2.2. User Interface with DPP

2.3. Technological Factors

2.3.1. Choice between Optical and Inertial MoCap Technology

2.3.2. Electronic Components (Building Blocks)

- User interface: Already mentioned in Section 2.2.

- Battery: Wireless MoCap sensors typically have built-in, non-removable batteries that are recharged either in a charging base or by connecting a charger directly to each sensor. According to manufacturers' information, battery life varies between 3 h and 8 h. Battery selection is closely interrelated with the other factors, because it depends on the usage scenario (required battery life), on the consumption of the other components, on the DPP characteristics, and on the interconnection between the different components.

- Storage: Including internal storage removes the need to stay within range of a wireless network, which increases versatility; in exchange, the ability to perform real-time processing is limited. Systems with internal storage, such as Perception Neuron or Stt-Systems [33,51], usually use a secure digital (SD) card.

- Communication: Communication between the devices and the DPP depends on the selected wireless protocols and, consequently, on the DPP chosen to process the data. However, an external communication dongle is sometimes connected to the DPP, which relaxes the requirements on its selection. Typical protocols are Wi-Fi (2.4 or 5 GHz) for local area networks (LANs), Bluetooth for personal area networks (PANs), or other proprietary protocols.

- Intelligence: The devices perform all of the above functions on the basis of raw measurement data and user actions. The quality of the measurements provided by the IMU, and therefore the limits on some applications, depends on the quality of the accelerometers, gyroscopes, and magnetometers and on the quality of the signal processing. IMU measurement ranges vary between ±2 g and ±50 g for accelerometers and between ±150 °/s and ±1000 °/s for gyroscopes [27], although technological progress continues to improve these figures. In signal processing, Kalman filters are widely used [67], and the field remains an active area of research; for example, Dejnabadi et al. (2006) [20] and Favre et al. (2009) [19] have proposed alternative magnetic and drift compensation algorithms that have been shown to be effective.
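The battery, storage, and communication items above are linked by simple arithmetic: battery life is roughly usable capacity divided by average current, and both the radio load and the storage requirement scale with the number of channels, the sample size, and the sample rate. The sketch below makes these relationships explicit. All numbers (a 300 mAh cell, 40 mA average draw, 9 channels of 16-bit samples at 100 Hz, and an 80% usable-capacity derating) are hypothetical, chosen only to fall inside the 3 h to 8 h range quoted above; they are not figures from any manufacturer.

```python
from dataclasses import dataclass


@dataclass
class SensorBudget:
    """Back-of-envelope budget for one wireless IMU node (all values hypothetical)."""
    battery_mah: float        # nominal battery capacity (mAh)
    avg_current_ma: float     # average current drawn by all building blocks (mA)
    channels: int             # e.g. 3-axis accelerometer + gyroscope + magnetometer = 9
    bytes_per_channel: int    # e.g. 16-bit samples = 2 bytes
    sample_rate_hz: float     # output data rate (samples per second)

    def battery_life_h(self, derating: float = 0.8) -> float:
        # Capacity / current, derated because usable capacity is below nominal.
        return self.battery_mah / self.avg_current_ma * derating

    def data_rate_bps(self) -> float:
        # Raw payload in bits per second, ignoring packet headers and timestamps.
        return self.channels * self.bytes_per_channel * 8 * self.sample_rate_hz

    def storage_mb(self, hours: float) -> float:
        # Megabytes needed on internal storage (e.g. an SD card) for one session.
        return self.data_rate_bps() / 8 * hours * 3600 / 1e6


node = SensorBudget(battery_mah=300, avg_current_ma=40, channels=9,
                    bytes_per_channel=2, sample_rate_hz=100.0)
print(node.battery_life_h())       # 6.0 (hours)
print(node.data_rate_bps())        # 14400.0 (bit/s per node)
print(7 * node.data_rate_bps())    # 100800.0 (bit/s for seven nodes)
print(node.storage_mb(hours=6))    # 38.88 (MB per node)
```

Under these assumptions, a seven-sensor layout needs roughly 100.8 kbit/s of raw payload before protocol overhead, which is why the choice of protocol and DPP cannot be made independently of the number of devices and the sample rate.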
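To illustrate what the signal-processing stage does, the following is a deliberately simplified one-dimensional Kalman filter that fuses a gyroscope rate (integrated in the prediction step) with an accelerometer-derived tilt angle (used in the correction step). It is a textbook scalar instance of the technique cited in [67]. It is not the magnetic or drift compensation algorithms of [19,20], nor any commercial sensor-fusion implementation. The class name and the noise parameters `q` and `r` are hypothetical tuning values.

```python
from dataclasses import dataclass


@dataclass
class ScalarTiltKalman:
    """1-D Kalman filter for a tilt angle in degrees (illustrative sketch).

    Prediction: integrate the gyroscope rate.
    Correction: blend in the tilt angle computed from the accelerometer.
    """
    angle: float = 0.0   # state estimate (deg)
    p: float = 1.0       # variance of the estimate (deg^2)
    q: float = 0.01      # process noise added per step (deg^2), hypothetical
    r: float = 4.0       # accelerometer-angle noise (deg^2), hypothetical

    def step(self, gyro_rate: float, accel_angle: float, dt: float) -> float:
        # Predict: propagate the angle with the gyro rate; uncertainty grows.
        self.angle += gyro_rate * dt
        self.p += self.q
        # Update: the Kalman gain weights the accelerometer against the prediction.
        k = self.p / (self.p + self.r)
        self.angle += k * (accel_angle - self.angle)
        self.p *= 1.0 - k
        return self.angle


# Usage: a static segment held at 30 degrees, sampled at 100 Hz for 5 s.
kf = ScalarTiltKalman()
for _ in range(500):
    est = kf.step(gyro_rate=0.0, accel_angle=30.0, dt=0.01)
print(round(est, 2))  # 30.0
```

Integrating the gyroscope alone would turn any bias into unbounded drift; the accelerometer correction bounds that error, at the cost of trusting a measurement that is only valid when the segment is not accelerating. Practical MoCap pipelines estimate full 3D orientation and usually add gyroscope-bias and magnetometer terms.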

2.3.3. Number of Devices and Their Interconnection

2.4. Body Attachment Factors

2.4.1. Device Positioning

2.4.2. Device Attachment Methods

- Fabric fixing supports: Fabric-based attachment uses bands or tapes of different widths, or tight-fitting garments. Elastic tape can be closed or open; open tape is fastened with Velcro, clips, or buckles. The fabric characteristics influence the accuracy of the attachment, perspiration, comfort, and wear resistance. The device can be joined to the fabric with Velcro, pockets, a dedicated housing (plastic base), or the metal snap fasteners used by some wearables [38,50]. In addition, wide Velcro areas on the fabric can be beneficial, because they allow some variability in the attachment point to suit each subject.

- Disposable adhesive fixing supports: This support type groups several kinds of attachment: hypoallergenic double-sided adhesive, which is economical but relatively weak; bandage or kinesio-tape, which adheres strongly but requires some preparation time; and disposable electrodes, which are used in some wearables [43,49,58]. Electrodes can be standard or manufactured specifically for the product; they can use one or several connection points and allow biometric signals to be measured. Note that disposable adhesive supports maximise hygiene, but their cost is higher because material is consumed with each use. In addition, body hair and sweating significantly worsen adhesion.

- Semi-rigid fixing supports: This attachment type is mainly found in wearable devices in which the flexibility of some parts lets the product hold itself in place, wrapping around the body or the garments the subject wears. Examples include the Thalmic Labs product [55], whose elastic zones fit around the arm like a bracelet; the Jolt Sensor wearable [48], which attaches to clothing with flexible flaps; and Alex Posture, Google Glass, Melon Headband, and Thync [35,42,53,59], which use their elasticity to hold onto the head like hair headbands. Although this method does not provide as strong an attachment as the others and may be difficult to apply to all body segments, it should be considered because it avoids problems such as consumable (fungible) costs and hygiene.
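Table 3 turns this comparison into a weighted decision matrix: each method is scored from 1 to 3 on seven criteria, and its Result equals the sum of each score multiplied by the criterion weight W. Recomputing every row confirms this reading; for example, closed elastic tape gives 3·5 + 1·4 + 1·9 + 2·10 + 3·2 + 3·8 + 3·8 = 102. The sketch below reproduces the calculation with the values copied from Table 3. Treating higher scores as better is an assumption, consistent with the case study, which selects the top-ranked kinesio-tape option; the function name is hypothetical.

```python
# Weights (W) from Table 3, in column order: area selection, preparation speed,
# washing, adaptability, fungible restrictions, union distribution, union strength.
WEIGHTS = [5, 4, 9, 10, 2, 8, 8]

# Per-criterion scores from Table 3 (assumed: higher is better).
SCORES = {
    "Closed elastic tape": [3, 1, 1, 2, 3, 3, 3],
    "Open elastic tape": [3, 2, 1, 3, 3, 3, 3],
    "Garment (fixed pockets, clips)": [1, 2, 1, 1, 3, 3, 3],
    "Garment (Velcro areas)": [3, 2, 1, 1, 3, 3, 3],
    "Commercial electrode": [3, 3, 3, 3, 1, 1, 2],
    "Custom electrode": [3, 3, 3, 3, 1, 2, 2],
    "Double-sided tape": [3, 2, 3, 3, 1, 1, 2],
    "Bandage kinesiotape": [3, 1, 3, 3, 1, 3, 3],
    "Semi-rigid (bracelet, flaps)": [2, 3, 3, 2, 3, 3, 1],
}


def weighted_result(scores: list, weights: list = WEIGHTS) -> int:
    """Result R = sum over criteria of W_i * score_i."""
    if len(scores) != len(weights):
        raise ValueError("one score per criterion is required")
    return sum(w * s for w, s in zip(weights, scores))


results = {method: weighted_result(s) for method, s in SCORES.items()}
best = max(results, key=results.get)
print(best, results[best])  # Bandage kinesiotape 126
```

Because the weights are explicit, a design team can re-run the ranking for a different context (for example, raising the weight of washing for a shared clinical device) without redoing the whole table by hand.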

2.5. Physical Property Factors

2.5.1. Shape

2.5.2. Dimensions

2.5.3. Weight

2.5.4. Flexibility

2.5.5. Material

2.5.6. Comfort

2.5.7. Psychological Aspects

2.5.8. Aesthetics

3. Discussion

3.1. Step 1: Design Goal

3.2. Step 2: Context Study

3.3. Step 3: Service Design

3.4. Step 4: User Interaction

3.5. Step 5: Technology

- Decide which technology to use (described in Section 2.3.1): optical, inertial, or other MoCap technologies, depending on the application. One example of the latter is the approach proposed by Shiratori et al. [64], which uses body-mounted cameras.

- Define the main electronics needed. To do so, the electronic building blocks can be drawn up, applying electronic design methodologies aimed at designers or multidisciplinary teams, such as the one proposed by Blanco et al. [66]. Defining the building blocks (Figure 4) is necessary to ensure product viability and to anticipate factors such as the space the electronics will occupy and the associated requirements and restrictions; for example, IMU design requires zones free of ferromagnetic materials and a minimum distance from the human body, so as not to distort the magnetic field and to facilitate radio-frequency communication. For MoCap-wearable devices, the main blocks (Section 2.3.2) are communication (dashed line in the schematic), storage, battery, intelligence, and interface (defined in Step 4). Note that some aspects of the electronics can only be defined once at least the DPP that will process and record the data has been selected (Step 8), because the DPP also influences the communications, functionalities, component size, etc.

- Select the required number of MoCap-wearable devices to be placed on the body and the most appropriate interconnection between them (Section 2.3.3): full body suits, wired independent elements, or wireless devices.
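The decisions in this step can be tracked as a small specification object, which makes explicit the dependency noted above: the communication block cannot be closed until the DPP has been chosen. The following Python sketch is illustrative only. `MoCapWearableSpec`, `open_issues`, and the enumerations are hypothetical names, and the list of required blocks simply mirrors Section 2.3.2.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Technology(Enum):
    OPTICAL = "optical"
    INERTIAL = "inertial"
    OTHER = "other"  # e.g. body-mounted cameras


class Interconnection(Enum):
    FULL_BODY_SUIT = "full body suit"
    WIRED = "wired independent elements"
    WIRELESS = "wireless devices"


# Electronic building blocks listed in Section 2.3.2.
REQUIRED_BLOCKS = ("communication", "storage", "battery", "intelligence", "interface")


@dataclass
class MoCapWearableSpec:
    technology: Technology
    n_devices: int
    interconnection: Interconnection
    dpp: str | None = None                                  # data processing point (Step 8)
    blocks: dict[str, str] = field(default_factory=dict)    # block name -> short description

    def open_issues(self) -> list[str]:
        """Return the Step 5 decisions that are still missing."""
        issues = []
        if self.n_devices < 1:
            issues.append("at least one device is required")
        if self.dpp is None:
            # Communications, functionalities and component size depend on the DPP.
            issues.append("select the DPP before fixing the communication block")
        for block in REQUIRED_BLOCKS:
            if block not in self.blocks:
                issues.append(f"building block not defined: {block}")
        return issues


# Usage: an early draft with two blocks sketched and no DPP chosen yet.
draft = MoCapWearableSpec(
    Technology.INERTIAL, 5, Interconnection.WIRELESS,
    blocks={"communication": "Wi-Fi", "interface": "RGB LED + vibration motor"},
)
for issue in draft.open_issues():
    print(issue)
```

Here `open_issues()` returns four entries: the DPP reminder and the undefined storage, battery, and intelligence blocks. Keeping the checklist as data rather than prose lets a multidisciplinary team see at a glance which blocks are still open.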

3.6. Step 6: Body Attachment

3.7. Step 7: Device Physical Properties

3.8. Step 8: DPP

3.9. Case Study, Methodology Assessment

4. Conclusions

- It is flexible and adaptable. Because it does not impose a closed, rigid scheme that limits creativity, the design team, which is necessarily multidisciplinary, can remove or add factors and elements as each case requires.

- The tool makes the job easier. Thanks to the visual representation in Figure 6 and the proposed steps, it is expected to improve organisation, structuring, and synthesis, to facilitate decision making, and to provide a global view of all the necessary factors.

- The case study showed that the tool makes it easy to generate innovative ideas and to take the main specifications and design problems into account.

- It is a communication tool between professionals or researchers from different disciplines involved in the design team, illustrating the common objectives to be fulfilled, mapping the status of the project, and allowing the assessment and recognition of the contributions of each of the team members.

- In the current context of technological progress, electronics miniaturisation, cost reduction, and the IoT, Octopus can help new products in the MoCap area succeed. MoCap-wearable systems developed with it are expected to be better suited to users and environments, so that MoCap can be used more widely, solving current problems in existing applications and enabling new ones.

- Regarding the search for new applications, treating MoCap as a service appears to be an improvement and an opportunity to meet real needs.

- The study is expected to increase knowledge of optical rigid-body MoCap systems, which, according to Baker [22], had been largely ignored in the literature as of 2006; a further review found that this coverage has not increased in recent years.

- MoCap-wearable devices can be considered products of high or very high complexity in terms of design requirements, so the study can also be extrapolated to other wearables and to other, less complex areas. In fact, the described factors apply not only to advanced technological devices but also to more basic products that need to be placed on the body precisely and comfortably.

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| MoCap | Motion Capture |
| IoT | Internet of Things |
| IMU | Inertial Measurement Unit |
| DPP | Data Processing Point |
| LED | Light-Emitting Diode |
| GUI | Graphical User Interface |
| RMS | Root Mean Square |
| SD | Secure Digital |
| LAN | Local Area Network |
| PAN | Personal Area Network |

References

- Zijlstra, W.; Aminian, K. Mobility Assessment in Older People: New Possibilities and Challenges. Eur. J. Ageing 2007, 4, 3–12.

- Majumder, S.; Mondal, T.; Deen, M.J. Wearable Sensors for Remote Health Monitoring. Sensors 2017, 17, 130.

- Perera, C.; Zaslavsky, A.; Christen, P.; Georgakopoulos, D. Context Aware Computing for the Internet of Things: A Survey. IEEE Commun. Surv. Tutor. 2014, 16, 414–454.

- Knight, J.F.; Deen-Williams, D.; Arvanitis, T.N.; Baber, C.; Sotiriou, S.; Anastopoulou, S.; Gargalakos, M. Assessing the Wearability of Wearable Computers. In Proceedings of the 10th IEEE International Symposium on Wearable Computers, Montreux, Switzerland, 11–14 October 2006; pp. 75–82.

- Gemperle, F.; Kasabach, C.; Stivoric, J.; Bauer, M.; Martin, R. Design for Wearability. In Proceedings of the 2nd International Symposium on Wearable Computers, Digest of Papers, Pittsburgh, PA, USA, 19–20 October 1998; pp. 116–122.

- Skogstad, S.A.; Nymoen, K.; Høvin, M. Comparing Inertial and Optical Mocap Technologies for Synthesis Control. In Proceedings of the International Sound and Music Computing Conference, Padova, Italy, 6–9 July 2011; pp. 421–426.

- Mayagoitia, R.E.; Nene, A.V.; Veltink, P.H. Accelerometer and Rate Gyroscope Measurement of Kinematics: An Inexpensive Alternative to Optical Motion Analysis Systems. J. Biomech. 2002, 35, 537–542.

- Cloete, T.; Scheffer, C. Benchmarking of a Full-Body Inertial Motion Capture System for Clinical Gait Analysis. In Proceedings of the 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 4579–4582.

- Mooney, R.; Corley, G.; Godfrey, A.; Quinlan, L.R.; ÓLaighin, G. Inertial Sensor Technology for Elite Swimming Performance Analysis: A Systematic Review. Sensors 2016, 16, 18.

- Cooper, G.; Sheret, I.; McMillian, L.; Siliverdis, K.; Sha, N.; Hodgins, D.; Kenney, L.; Howard, D. Inertial Sensor-Based Knee Flexion/Extension Angle Estimation. J. Biomech. 2009, 42, 2678–2685.

- Roetenberg, D.; Luinge, H.; Slycke, P. Xsens MVN: Full 6DOF Human Motion Tracking using Miniature Inertial Sensors. Available online: https://www.researchgate.net/profile/Per_Slycke/publication/239920367_Xsens_MVN_Full_6DOF_human_motion_tracking_using_miniature_inertial_sensors/links/0f31752f1f60c20b18000000/Xsens-MVN-Full-6DOF-human-motion-tracking-using-miniature-inertial-sensors.pdf (accessed on 14 August 2017).

- Kok, M.; Hol, J.D.; Schön, T.B. An Optimization-Based Approach to Human Body Motion Capture Using Inertial Sensors. IFAC Proc. Vol. 2014, 47, 79–85.

- Riaz, Q.; Tao, G.; Krüger, B.; Weber, A. Motion Reconstruction using very Few Accelerometers and Ground Contacts. Graph. Model. 2015, 79, 23–38.

- NaturalPoint—Optical Tracking Solutions. Available online: https://www.naturalpoint.com/ (accessed on 20 June 2017).

- Trivisio-Inertial Motion Tracking. Available online: https://www.trivisio.com/inertial-motion-tracking (accessed on 20 June 2017).

- Ahmad, N.; Ghazilla, R.A.R.; Khairi, N.M.; Kasi, V. Reviews on various Inertial Measurement Unit (IMU) Sensor Applications. Int. J. Signal Proc. Syst. 2013, 1, 256–262.

- Cloete, T.; Scheffer, C. Repeatability of an Off-the-Shelf, Full Body Inertial Motion Capture System during Clinical Gait Analysis. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 5125–5128.

- Bellusci, G.; Roetenberg, D.; Dijkstra, F.; Luinge, H.; Slycke, P. Xsens MVN Motiongrid: Drift-Free Human Motion Tracking using Tightly Coupled Ultra-Wideband and Miniature Inertial Sensors. Available online: http://www.dwintech.com/MVN_MotionGrid_White_Paper.pdf (accessed on 14 August 2017).

- Favre, J.; Aissaoui, R.; Jolles, B.; De Guise, J.; Aminian, K. Functional Calibration Procedure for 3D Knee Joint Angle Description using Inertial Sensors. J. Biomech. 2009, 42, 2330–2335.

- Dejnabadi, H.; Jolles, B.M.; Casanova, E.; Fua, P.; Aminian, K. Estimation and Visualization of Sagittal Kinematics of Lower Limbs Orientation using Body-Fixed Sensors. IEEE Trans. Biomed. Eng. 2006, 53, 1385–1393.

- Vlasic, D.; Adelsberger, R.; Vannucci, G.; Barnwell, J.; Gross, M.; Matusik, W.; Popović, J. Practical Motion Capture in Everyday Surroundings. ACM Trans. Graph. (TOG) 2007, 26.

- Baker, R. Gait Analysis Methods in Rehabilitation. J. NeuroEng. Rehabil. 2006, 3, 4.

- Cappozzo, A.; Catani, F.; Leardini, A.; Benedetti, M.; Della Croce, U. Position and Orientation in Space of Bones during Movement: Experimental Artefacts. Clin. Biomech. 1996, 11, 90–100.

- Benoit, D.L.; Ramsey, D.K.; Lamontagne, M.; Xu, L.; Wretenberg, P.; Renström, P. Effect of Skin Movement Artifact on Knee Kinematics during Gait and Cutting Motions Measured in Vivo. Gait Posture 2006, 24, 152–164.

- Haratian, R.; Twycross-Lewis, R.; Timotijevic, T.; Phillips, C. Toward Flexibility in Sensor Placement for Motion Capture Systems: A Signal Processing Approach. IEEE Sens. J. 2014, 14, 701–709.

- Andreoni, G.; Standoli, C.E.; Perego, P. Defining Requirements and Related Methods for Designing Sensorized Garments. Sensors 2016, 16, 769.

- Yang, S.; Li, Q. Inertial Sensor-Based Methods in Walking Speed Estimation: A Systematic Review. Sensors 2012, 12, 6102–6116.

- Sabatini, A.M.; Martelloni, C.; Scapellato, S.; Cavallo, F. Assessment of Walking Features from Foot Inertial Sensing. IEEE Trans. Biomed. Eng. 2005, 52, 486–494.

- Taetz, B.; Bleser, G.; Miezal, M. Towards Self-Calibrating Inertial Body Motion Capture. In Proceedings of the 19th International Conference on Information Fusion (FUSION), Heidelberg, Germany, 5–8 July 2016; pp. 1751–1759.

- Müller, P.; Bégin, M.; Schauer, T.; Seel, T. Alignment-Free, Self-Calibrating Elbow Angles Measurement using Inertial Sensors. IEEE J. Biomed. Health Inform. 2017, 21, 312–319.

- Yu, B.; Bao, T.; Zhang, D.; Carender, W.; Sienko, K.H.; Shull, P.B. Determining Inertial Measurement Unit Placement for Estimating Human Trunk Sway while Standing, Walking and Running. In Proceedings of the 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 4651–4654.

- Noraxon-3D Motion Capture Sensors. Available online: http://www.webcitation.org/6rMGpRqTm (accessed on 20 June 2017).

- Perception Neuron-MoCap System. Available online: https://neuronmocap.com/ (accessed on 20 June 2017).

- My Swing-Golf Motion Capture Service. Available online: http://www.webcitation.org/6rMHJKUtu (accessed on 20 June 2017).

- Alex Posture. Available online: http://www.webcitation.org/6rMHl1we4 (accessed on 20 June 2017).

- Araig-Sensory Feedback Suit for Video Game. Available online: http://www.webcitation.org/6rMHwg3Hu (accessed on 20 June 2017).

- LEO Fitness Intelligence. Available online: http://www.webcitation.org/6rMI2Yhj5 (accessed on 20 June 2017).

- Notch-Smart Motion Capture for Mobile Devices. Available online: http://www.webcitation.org/6rMI4aW6g (accessed on 20 June 2017).

- Rokoko-Motion Capture System-Smartsuit Pro. Available online: http://www.webcitation.org/6rMIBBlnf (accessed on 20 June 2017).

- Vicon-Motion Capture Systems. Available online: http://www.webcitation.org/6rMINb14O (accessed on 20 June 2017).

- Run3D-3D Gait Analysis. Available online: http://www.webcitation.org/6rMIX3thp (accessed on 20 June 2017).

- Google Glass. Available online: http://www.webcitation.org/6rMIaF0iB (accessed on 20 June 2017).

- MC10-Wearable Healthcare Technology & Devices. Available online: http://www.webcitation.org/6rMIvMYAG (accessed on 20 June 2017).

- Quell-Wearable Pain Relief That’s 100% Drug Free. Available online: http://www.webcitation.org/6rMJ27WzR (accessed on 20 June 2017).

- Perception Legacy-Motion Capture System. Available online: http://www.webcitation.org/6rMJRSnZb (accessed on 20 June 2017).

- Shadow-Motion Capture System. Available online: http://www.webcitation.org/6rMJWrKgp (accessed on 20 June 2017).

- The Imaginarium Studios-Performance Capture Studio and Production Company. Available online: http://www.webcitation.org/6rMJbEqrb (accessed on 20 June 2017).

- Jolt Sensor-A Wearable head Impact Sensor for Youth Athletes. Available online: http://www.webcitation.org/6rMJmWx9e (accessed on 20 June 2017).

- SenseON-A Clinically Accurate Heart Rate Monitor. Available online: http://www.webcitation.org/6rMJsFsGa (accessed on 20 June 2017).

- Sensoria Fitness-Garments Monitor Heart Rate with Embedded Technology for the Most Effective Workout. Available online: http://www.webcitation.org/6rMJxDGEo (accessed on 20 June 2017).

- Stt Systems. Available online: http://www.webcitation.org/6rMKMGBto (accessed on 20 June 2017).

- Technaid-Motion Capture System. Available online: http://www.webcitation.org/6rMKQqNHf (accessed on 20 June 2017).

- Melon-A Headband and Mobile App to Measure Your Focus. Available online: http://www.webcitation.org/6rMKxkcY0 (accessed on 20 June 2017).

- Teslasuit-Full Body Haptic VR Suit. Available online: http://www.webcitation.org/6rML4RSOd (accessed on 20 June 2017).

- Myo Gesture Control Armband-Wearable Technology by Thalmic Labs. Available online: http://www.webcitation.org/6rMLGM7sy (accessed on 20 June 2017).

- Xsens-3D Motion Tracking. Available online: http://www.webcitation.org/6rEoDr7rq (accessed on 15 June 2017).

- Reebok CheckLight. Available online: http://www.webcitation.org/6rMLTqeWx (accessed on 20 June 2017).

- Upright-the World Leader in Connected Posture Trainers. Available online: http://www.webcitation.org/6rMLZ4Sic (accessed on 20 June 2017).

- Thync Relax. Available online: http://www.webcitation.org/6rMLoUfF1 (accessed on 20 June 2017).

- Motti, V.G.; Caine, K. Human Factors Considerations in the Design of Wearable Devices. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2014, 58, 1820–1824.

- Spagnolli, A.; Guardigli, E.; Orso, V.; Varotto, A.; Gamberini, L. Measuring User Acceptance of Wearable Symbiotic Devices: Validation Study Across Application Scenarios. In Proceedings of the International Workshop on Symbiotic Interaction, Helsinki, Finland, 30–31 October 2014; pp. 87–98.

- Shneiderman, B. Designing the User Interface: Strategies for Effective Human-Computer Interaction; Pearson Education: London, UK, 2010.

- Mckee, M.G. Biofeedback: An Overview in the Context of Heart-Brain Medicine. Clevel. Clin. J. Med. 2008, 75, 31–34.

- Shiratori, T.; Park, H.S.; Sigal, L.; Sheikh, Y.; Hodgins, J.K. Motion Capture from Body-Mounted Cameras. ACM Trans Graph. (TOG) 2011, 30.

- Thewlis, D.; Bishop, C.; Daniell, N.; Paul, G. Next Generation Low-Cost Motion Capture Systems can Provide Comparable Spatial Accuracy to High-End Systems. J. Appl. Biomech. 2013, 29, 112–117.

- Blanco, T.; Casas, R.; Manchado-Pérez, E.; Asensio, Á.; López-Pérez, J.M. From the Islands of Knowledge to a Shared Understanding: Interdisciplinarity and Technology Literacy for Innovation in Smart Electronic Product Design. Int. J. Technol. Des. Educ. 2017, 27, 329–362.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Basic Eng. 1960, 82, 35–45.

- Von Marcard, T.; Rosenhahn, B.; Black, M.J.; Pons-Moll, G. Sparse Inertial Poser: Automatic 3D Human Pose Estimation from Sparse IMUs. Comput. Graph. Forum 2017, 369, 349–360.

- Kalkum, E.; Van Drongelen, S.; Mussler, J.; Wolf, S.I.; Kuni, B. A Marker Placement Laser Device for Improving Repeatability in 3D-Foot Motion Analysis. Gait Posture 2016, 44, 227–230.

- Knight, J.F.; Baber, C.; Schwirtz, A.; Bristow, H.W. The Comfort Assessment of Wearable Computers. ISWC 2002, 2, 65–74.

- Sonderegger, A. Smart Garments—The Issue of Usability and Aesthetics. In Proceedings of the 2013 ACM Conference on Pervasive and Ubiquitous Computing Adjunct Publication, Zurich, Switzerland, 8–12 September 2013; pp. 385–392.

- Lilien, G.L.; Morrison, P.D.; Searls, K.; Sonnack, M.; Hippel, E.V. Performance Assessment of the Lead User Idea-Generation Process for New Product Development. Manag. Sci. 2002, 48, 1042–1059.

- Maguire, M. Methods to Support Human-Centred Design. Int. J. Hum.-Comput. Stud. 2001, 55, 587–634.

- Abras, C.; Maloney-Krichmar, D.; Preece, J. User-Centered Design. In Encyclopedia of Human-Computer Interaction; Bainbridge, W., Ed.; Sage Publications: Thousand Oaks, CA, USA, 2004; Volume 37, pp. 445–456.

- Tassi, R. Service Design Tools: Communication Methods Supporting Design Processes. Ph.D. Thesis, Politecnico di Milano, Milano, Italy, October 2008.

- Stickdorn, M.; Schneider, J.; Andrews, K.; Lawrence, A. This is Service Design Thinking: Basics, Tools, Cases; Wiley: Hoboken, NJ, USA, 2011.

- Shostack, G.L. Designing Services That Deliver. Harv. Bus. Rev. 1984, 62, 133–139.

- Blanco, T.; Berbegal, A.; Blasco, R.; Casas, R. Xassess: Crossdisciplinary Framework in User-Centred Design of Assistive Products. J. Eng. Des. 2016, 27, 636–664.

| Full Body Systems MoCap | | | | Wearable Products | | |
|---|---|---|---|---|---|---|
| Wireless Inertial Products | Wired Inertial Products ¹ | Optical Products | Services | Head | Chest | Extremities |
| Noraxon [32] | Perception Neuron [33] | Natural point [14] | MySwing [34] | Alex Posture [35] | Araig [36] | LEO Fitness Intelligence [37] |
| Notch [38] | Rokoko studios [39] | Vicon [40] | Run3D [41] | Google Glass [42] | MC10 [43] | Quell relief [44] |
| Perception Legacy [45] | Shadow [46] | | Imaginarium Studios [47] | Jolt Sensor [48] | SenseOn [49] | Sensoria fitness [50] |
| Stt-Systems [51] | Technaid [52] | | | Melon Headband [53] | Tesla Suit [54] | Thalmic Labs [55] |
| Trivisio [15] | Xsens (suit) [56] | | | Reebok checklight [57] | UpRight [58] | |
| Xsens [56] | | | | Thync [59] | | |

| Environment | Application | Professional User | Actor User |
|---|---|---|---|
| Medicine | Diagnosis | Doctor | Patient |
| | Rehabilitation | Doctor, Physiotherapist | Patient |
| | Forensic | Forensic Doctor | Injured (may be uncooperative) |
| Sports | Performance | Coach, Physiotherapist | Athlete |
| | Rehabilitation | Coach, Physiotherapist | Athlete |
| Animation/Simulation | Professional simulation | Coach, Technician, Others | Athlete, Military, Others |
| | Video game | Player | Player |
| | Cinema/theatre | Director, Technician, Others | Performer |
| Research | Laboratory | Developer, Researcher | Unknown |

| Attachment Method | Area Selection | Preparation Speed | Washing | Adaptability | Fungible Restrictions | Union Distribution | Union Strength | Result (R) |
|---|---|---|---|---|---|---|---|---|
| Weight (W) | (5)¹ | (4)¹ | (9)¹ | (10)¹ | (2)¹ | (8)¹ | (8)¹ | - |
| Closed elastic tape ² | 3 | 1 | 1 | 2 | 3 | 3 | 3 | (102)¹ |
| Open elastic tape ² | 3 | 2 | 1 | 3 | 3 | 3 | 3 | (116)¹ |
| Garment (fixed pockets, clips) ² | 1 | 2 | 1 | 1 | 3 | 3 | 3 | (86)¹ |
| Garment (Velcro areas) ² | 3 | 2 | 1 | 1 | 3 | 3 | 3 | (96)¹ |
| Commercial electrode ³ | 3 | 3 | 3 | 3 | 1 | 1 | 2 | (110)¹ |
| Custom electrode ³ | 3 | 3 | 3 | 3 | 1 | 2 | 2 | (118)¹ |
| Double-sided tape ³ | 3 | 2 | 3 | 3 | 1 | 1 | 2 | (106)¹ |
| Bandage kinesiotape ³ | 3 | 1 | 3 | 3 | 1 | 3 | 3 | (126)¹ |
| Semi-rigid (bracelet, flaps) ⁴ | 2 | 3 | 3 | 2 | 3 | 3 | 1 | (107)¹ |

| Step 1: Design Goal |
|---|
| Shoulder rehabilitation service for elderly people in private clinics. |
| Step 2: Context Study |
| Professional User Target: |
| Occupation: Physiotherapist. Age: 35. Technology level: Medium–High. |
| Verbatim: ‘I am a person who wants to improve and learn every day in my work. I have little time since I attend about six patients a day in 45-min sessions’. |
| Actor User Target (patient): |
| Occupation: Retired. Age: 78. Technology level: Low. |
| Verbatim: ‘At my age, I appreciate tranquillity and patience; it makes me feel safer’. |
| Environmental Characteristics: |
| Indoor, bright, hygienic, and clean. Furniture: work tables, chairs, and stretchers. |
| Step 3: Service Design |
| Step 4: User Interaction |
| DPP–Professional Interaction: |
| Start and pause motion recording (optionally, remotely). |
| Set the number of exercise repetitions and the threshold used to decide whether a movement is correct for rehabilitation purposes in the session. |
| Observe the results. |
| DPP–Patient Interaction: Display an avatar that moves in real time according to the patient’s movement. Display a superimposed avatar that plays the motions pre-recorded during the professional’s mobilisation. Use gamification techniques to help the patient follow the target movement. |
| Device–Professional Interaction: During recording, the user interacts with the devices through the visual sense: green LED, transmitting with no movement recorded; red LED, recording; blue LED, movement recorded. |
| Device–Patient Interaction: During the repetitive-movement task, the patient receives haptic feedback (vibration) indicating breaks, repeated failures, phase changes, etc. |
| Step 5: Technology |
| Technology Selection: IMU devices are used. No special lighting or space requirements are necessary, and there are no marker occlusion problems during the physiotherapist’s manipulation. |
| Building Blocks: Shown in Figure 7. |
| Number of Sensors: Five if a single upper extremity is examined; seven if both are. |
| Connection: The sensors are wireless and connect to the DPP via Wi-Fi. |
| Step 6: Body Attachment |
| Placement: Sacrum, vertebra D2, upper area of the head, outer side of the arm just above the elbow, and forearm on the upper face of the wrist. |
| Attachment Method: Device on the skin, attached by pre-cut kinesio-tape bands with a hole through which the device protrudes, leaving the LED visible. (If there is excessive hair, it is removed; the area is cleaned with disinfectant.) The choice of this attachment method is justified in the Table 3 example. |
| Step 7: Device Physical Properties |
| Shape: Oval, with rounded edges and wings in the skin contact area where the tape is fixed. |
| Dimensions: 4 × 3 cm. |
| Weight: 40 g per sensor. |
| Housing material: Rigid, humidity-resistant plastic, painted with a silicone-textured finish for easy sanitising. |
| Colour: White, to blend with the environment and convey appropriate values. |
| Step 8: DPP |
| Elements: Computer, Wi-Fi dongle for communications, and an external monitor. |
| Basic software operation: Two modes, configuration for the professional user and display for the patient user. It calculates hits and misses with respect to the target movement and displays them in real time. At the end of the session, it connects to the Internet to store the data in the cloud. |
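In the case study, Step 4 lets the professional set the number of repetitions and a correctness threshold, and Step 8 states that the software counts hits and misses against the target movement. The source does not specify the comparison metric, so the sketch below assumes one: each repetition is a joint-angle trajectory already resampled to the same length as the physiotherapist’s pre-recorded target, and a repetition counts as a hit when its root-mean-square (RMS) error is within the threshold. The function names, the resampling assumption, and the example angles are all hypothetical.

```python
import math


def rms_error(trial: list, target: list) -> float:
    """RMS difference (degrees) between two equally sampled angle trajectories."""
    if len(trial) != len(target):
        raise ValueError("trajectories must be resampled to the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(trial, target)) / len(target))


def score_session(repetitions: list, target: list,
                  threshold_deg: float, n_expected: int) -> dict:
    """Count hits/misses against the target (assumed RMS-threshold criterion)."""
    hits = sum(1 for rep in repetitions if rms_error(rep, target) <= threshold_deg)
    return {
        "hits": hits,
        "misses": len(repetitions) - hits,
        "remaining": max(n_expected - len(repetitions), 0),
    }


# Usage: a hypothetical shoulder-flexion target and two patient repetitions.
target = [0, 30, 60, 90, 60, 30, 0]
good = [a + 2 for a in target]    # 2 degrees off throughout
poor = [a + 15 for a in target]   # 15 degrees off throughout
print(score_session([good, poor], target, threshold_deg=5.0, n_expected=10))
# {'hits': 1, 'misses': 1, 'remaining': 8}
```

An RMS criterion penalises sustained deviations over the whole movement rather than a single peak angle; a clinic might prefer range-of-motion or peak-angle criteria instead, which would only change `rms_error`.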

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Marin, J.; Blanco, T.; Marin, J.J. Octopus: A Design Methodology for Motion Capture Wearables. Sensors 2017, 17, 1875. https://doi.org/10.3390/s17081875