Event-Based Sensing and Control for Remote Robot Guidance: An Experimental Case

Abstract

1. Introduction

1.1. Review of Event-Based Control in Robotics

1.2. Review of Event-Based Estimation and Sensing in Robotics

1.3. Objectives

- Integration of event-based sensing and control techniques for nonlinear trajectory tracking of a non-holonomic robot.

- Requesting measurements based on the estimation error covariance matrix, and correcting the predicted state while taking the PC-sensor communication delay into account.

- A control triggering mechanism based on the state estimate, which is recalculated whenever a new measurement becomes available.

- Implementation of the event-based state estimator (EBSE) and event-based Lyapunov controller (EBLC) algorithms on a PC wirelessly linked to a robot node and a camera node, and experimental validation of the global strategy.
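As a rough illustration of the second objective, the sketch below requests a measurement whenever the trace of the predicted covariance exceeds a threshold. The linear model `F`, the noise matrices `Q` and `R`, the trace-based condition and the threshold value are all assumptions chosen for the example, not the triggering rule used in the paper, and the communication delay is ignored here.

```python
import numpy as np


def propagate_covariance(P, F, Q):
    """One prediction step of the error covariance: P <- F P F^T + Q."""
    return F @ P @ F.T + Q


def should_request_measurement(P, threshold):
    """Hypothetical trigger: ask the sensor for data when trace(P) is too large."""
    return np.trace(P) > threshold


def correct_covariance(P, H, R):
    """Standard Kalman covariance update for a measurement with noise covariance R."""
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    return (np.eye(P.shape[0]) - K @ H) @ P


def run(steps=50, threshold=0.05):
    F = np.eye(3)            # assumed linearised pose model (x, y, heading)
    Q = 0.004 * np.eye(3)    # assumed process noise added at every step
    H = np.eye(3)            # camera assumed to observe the full pose
    R = 0.001 * np.eye(3)    # assumed measurement noise
    P = 0.001 * np.eye(3)    # assumed initial uncertainty
    request_steps = []
    for k in range(steps):
        P = propagate_covariance(P, F, Q)
        if should_request_measurement(P, threshold):
            request_steps.append(k)
            P = correct_covariance(P, H, R)
    return request_steps, P


request_steps, final_P = run()
print(f"{len(request_steps)} measurement requests in 50 steps: {request_steps}")
```

With these made-up numbers the uncertainty needs several prediction steps to reach the threshold, so the sensor is queried only on a fraction of the steps instead of on every one.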

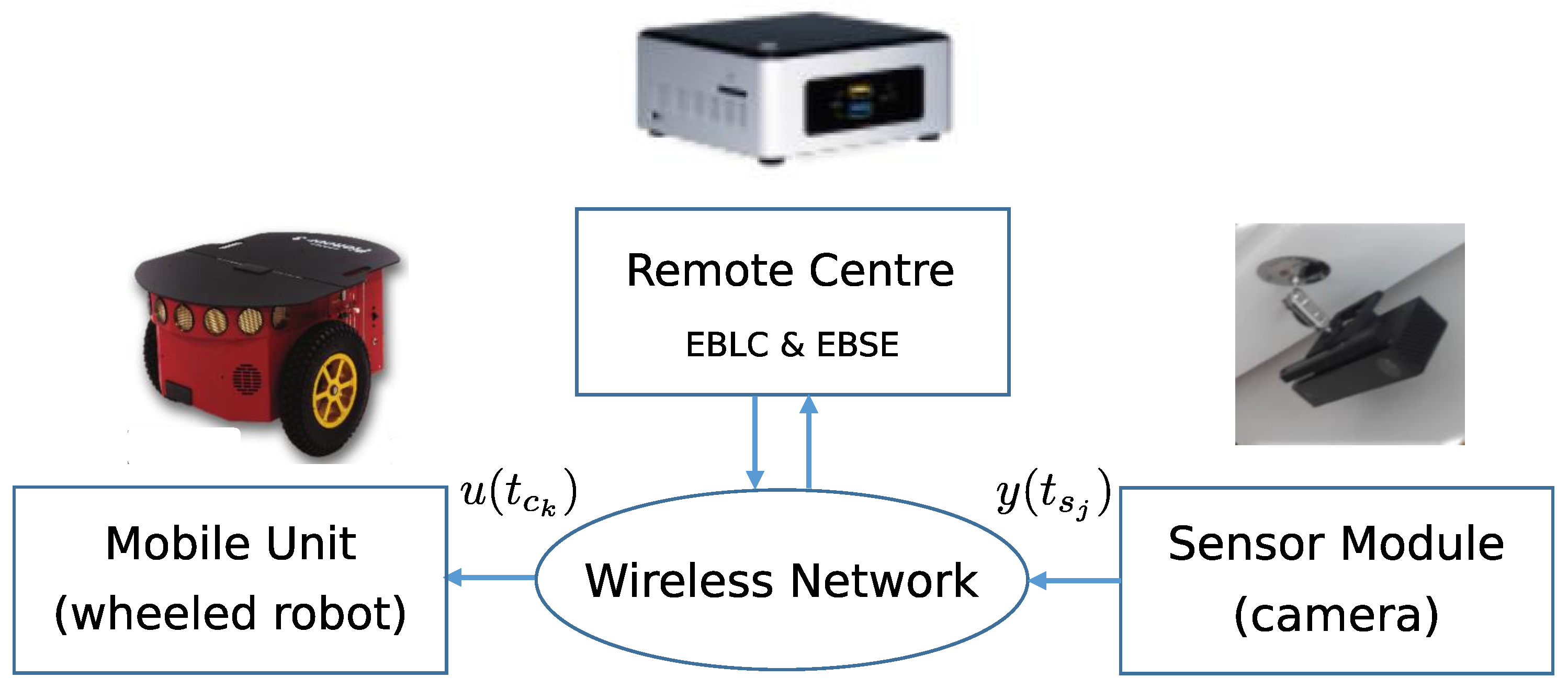

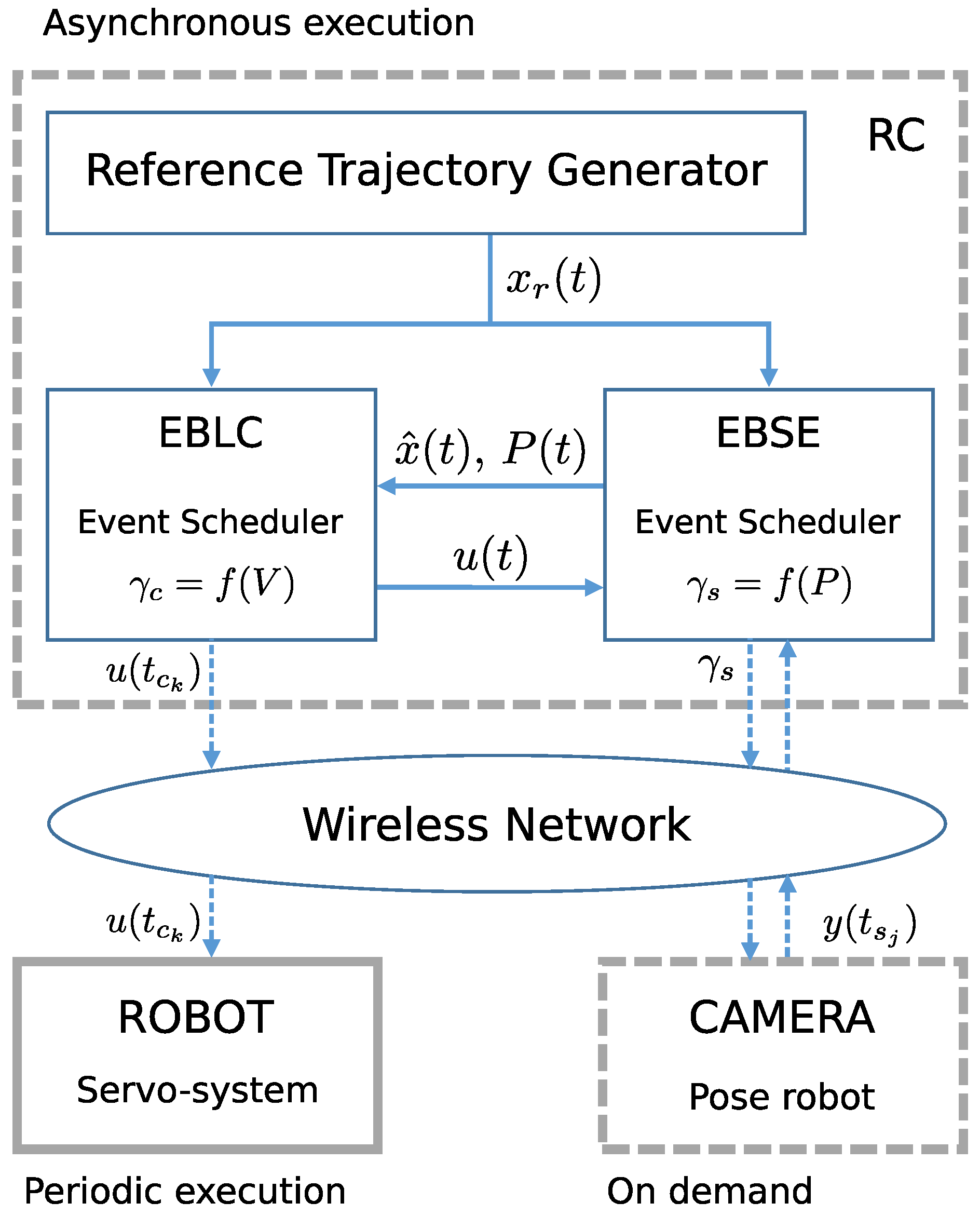

2. Problem Statement

- Implementation of an aperiodic, nonlinear control law that generates linear and angular speed commands while guaranteeing system stability. Lyapunov equations were designed for this purpose.

- Generation of control events using current information on the estimated state vector.

- Provision of a state prediction for every time step. Because the plant model is nonlinear, this task is performed using the unscented transformation. The prediction is then corrected every time a requested measurement is received.

- Requesting measurements so that information is obtained from the sensor at the correct time instants and the estimation error covariance matrix remains below predefined threshold conditions [24].

- Updating the prediction each time a measurement is received, according to the acquisition time and the time elapsed until the measurement arrives, which is due to image processing and communication delays.
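The prediction task listed above can be sketched with a plain unscented transformation applied to unicycle kinematics. This is a minimal sketch under stated assumptions: the Euler-discretised unicycle model, the symmetric sigma-point set with `kappa = 0`, the additive noise `Q` and all function names are illustrative choices, not the formulation used by the authors, and heading angles are averaged without wrapping.

```python
import numpy as np


def unicycle_step(state, v, w, dt):
    """Euler step of unicycle kinematics; state = (x, y, theta)."""
    x, y, theta = state
    return np.array([x + v * dt * np.cos(theta),
                     y + v * dt * np.sin(theta),
                     theta + w * dt])


def sigma_points(mean, cov, kappa=0.0):
    """Symmetric set of 2n + 1 sigma points and their weights."""
    n = mean.size
    root = np.linalg.cholesky((n + kappa) * cov)  # columns are the offsets
    points = [mean]
    for i in range(n):
        points.append(mean + root[:, i])
        points.append(mean - root[:, i])
    weights = np.full(2 * n + 1, 1.0 / (2.0 * (n + kappa)))
    weights[0] = kappa / (n + kappa)
    return np.array(points), weights


def ut_predict(mean, cov, v, w, dt, Q):
    """Propagate mean and covariance through the nonlinear model."""
    points, weights = sigma_points(mean, cov)
    propagated = np.array([unicycle_step(p, v, w, dt) for p in points])
    new_mean = weights @ propagated
    diff = propagated - new_mean
    new_cov = (weights[:, None] * diff).T @ diff + Q
    return new_mean, new_cov


mean0 = np.zeros(3)
cov0 = 0.01 * np.eye(3)
mean1, cov1 = ut_predict(mean0, cov0, v=1.0, w=0.0, dt=0.1, Q=np.zeros((3, 3)))
print(mean1)
print(cov1)
```

A delayed measurement could then be handled by applying the correction to the prediction stored for the acquisition instant and re-running `ut_predict` up to the current time; that step is left out of the sketch.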

Robot Model

3. Event-Based Control Solution

3.1. Notation

3.2. Lyapunov Based Controller for Nonlinear Trajectory Tracking

3.3. Event-Based Lyapunov Control

1. The function , with .

2. The functions in (11) are such that are Lipschitz continuous in the compact working set .

4. Event-Based State Estimation

4.1. Sensor Event Triggering

4.2. Control Design Dependent on State Estimation

5. Implementation

6. Experimental Tests

6.1. Set-Up

6.2. Results

7. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CDF | Cumulative distribution function |
| EBLC | Event-based Lyapunov controller |
| EBSE | Event-based state estimator |
| NCS | Networked control system |
| NTP | Network time protocol |
| PTP | Precision time protocol |
| RMS | Root mean square |
| UKF | Unscented Kalman filter |
| WSN | Wireless sensor network |

References

1. Hespanha, J.P. A Survey of Recent Results in Networked Control Systems. Proc. IEEE 2007, 95, 138–162.

2. Yang, S.H.; Cao, Y. Networked Control Systems and Wireless Sensor Networks: Theories and Applications. Int. J. Syst. Sci. 2008, 39, 1041–1044.

3. Gupta, R.A.; Chow, M.Y. Networked Control System: Overview and Research Trends. IEEE Trans. Ind. Electron. 2010, 57, 2527–2535.

4. Zhang, S.; Zhang, H. A review of wireless sensor networks and its applications. In Proceedings of the 2012 IEEE International Conference on Automation and Logistics, Zhengzhou, China, 15–17 August 2012; pp. 386–389.

5. Pawlowski, A.; Guzmán, J.L.; Rodríguez, F.; Berenguel, M.; Sánchez, J.; Dormido, S. The influence of event-based sampling techniques on data transmission and control performance. In Proceedings of the 2009 IEEE Conference on Emerging Technologies Factory Automation, Palma de Mallorca, Spain, 22–25 September 2009; pp. 1–8.

6. Cloosterman, M.B.G.; van de Wouw, N.; Heemels, W.P.M.H.; Nijmeijer, H. Stability of Networked Control Systems With Uncertain Time-Varying Delays. IEEE Trans. Autom. Control 2009, 54, 1575–1580.

7. Luan, X.; Shi, P.; Liu, F. Stabilization of Networked Control Systems With Random Delays. IEEE Trans. Ind. Electron. 2011, 58, 4323–4330.

8. Cuenca, A.; García, P.; Albertos, P.; Salt, J. A Non-Uniform Predictor-Observer for a Networked Control System. Int. J. Control Autom. Syst. 2011, 9, 1194–1202.

9. Li, H.; Shi, Y. Network-Based Predictive Control for Constrained Nonlinear Systems with Two-Channel Packet Dropouts. IEEE Trans. Ind. Electron. 2014, 61, 1574–1582.

10. Zhao, Y.B.; Liu, G.P.; Rees, D. Actively Compensating for Data Packet Disorder in Networked Control Systems. IEEE Trans. Circuits Syst. II Express Briefs 2010, 57, 913–917.

11. Casanova, V.; Salt, J.; Cuenca, A.; Piza, R. Networked Control Systems: Control structures with bandwidth limitations. Int. J. Syst. Control Commun. 2009, 1, 267–296.

12. Ojha, U.; Chow, M.Y. Realization and validation of Delay Tolerant Behavior Control based Adaptive Bandwidth Allocation for networked control system. In Proceedings of the 2010 IEEE International Symposium on Industrial Electronics, Bari, Italy, 4–7 July 2010; pp. 2853–2858.

13. Miskowicz, M. Event-Based Control and Signal Processing; CRC Press: Boca Raton, FL, USA, 2016.

14. Postoyan, R.; Bragagnolo, M.; Galbrun, E.; Daafouz, J.; Nesic, D.; Castellan, E. Nonlinear event-triggered tracking control of a mobile robot: Design, analysis and experimental results. In Proceedings of the 9th IFAC Symposium on Nonlinear Control Systems, Toulouse, France, 4–6 September 2013; pp. 318–323.

15. Guinaldo, M.; Fábregas, E.; Farias, G.; Dormido, S.; Chaos, D.; Moreno, J.S. A Mobile Robots Experimental Environment with Event-Based Wireless Communication. Sensors 2013, 13, 9396–9413.

16. Colledanchise, M.; Dimarogonas, D.V.; Ögren, P. Robot navigation under uncertainties using event based sampling. In Proceedings of the 53rd IEEE Conference on Decision and Control (CDC), Los Angeles, CA, USA, 15–17 December 2014; pp. 1438–1445.

17. Villarreal-Cervantes, M.G.; Guerrero-Castellanos, J.F.; Ramírez-Martínez, S.; Sánchez-Santana, J.P. Stabilization of a (3,0) mobile robot by means of an event-triggered control. ISA Trans. 2015, 58, 605–613.

18. Trimpe, S.; Buchli, J. Event-based estimation and control for remote robot operation with reduced communication. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5018–5025.

19. Socas, R.; Dormido, S.; Dormido, R.; Fabregas, E. Event-Based Control Strategy for Mobile Robots in Wireless Environments. Sensors 2015, 15, 30076–30092.

20. Cuenca, Á.; Castillo, A.; García, P.; Torres, A.; Sanz, R. Periodic event-triggered dual-rate control for a networked control system. In Proceedings of the Second International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP), Krakow, Poland, 13–15 June 2016; pp. 1–8.

21. Santos, C.; Mazo, M., Jr.; Espinosa, F. Adaptive self-triggered control of a remotely operated P3-DX robot: Simulation and experimentation. Robot. Auton. Syst. 2014, 62, 847–854.

22. Trimpe, S.; D’Andrea, R. An Experimental Demonstration of a Distributed and Event-based State Estimation Algorithm. In Proceedings of the 18th IFAC World Congress, Milano, Italy, 28 August–2 September 2011.

23. Trimpe, S.; D’Andrea, R. Event-Based State Estimation With Variance-Based Triggering. IEEE Trans. Autom. Control 2014, 59, 3266–3281.

24. Martínez-Rey, M.; Espinosa, F.; Gardel, A.; Santos, C. On-Board Event-Based State Estimation for Trajectory Approaching and Tracking of a Vehicle. Sensors 2015, 15, 14569–14590.

25. Shi, D.; Elliott, R.J.; Chen, T. Event-based state estimation of a discrete-state hidden Markov model through a reliable communication channel. In Proceedings of the 34th Chinese Control Conference (CCC), Hangzhou, China, 28–30 July 2015; pp. 4673–4678.

26. Santos, C.; Espinosa, F.; Santiso, E.; Mazo, M. Aperiodic Linear Networked Control Considering Variable Channel Delays: Application to Robots Coordination. Sensors 2015, 15, 12454–12473.

27. Eqtami, A.; Heshmati-alamdari, S.; Dimarogonas, D.; Kyriakopoulos, K. Self-triggered Model Predictive Control for nonholonomic systems. In Proceedings of the 2013 European Control Conference (ECC), Zurich, Switzerland, 17–19 July 2013; pp. 638–643.

28. Brockett, R.W. Asymptotic stability and feedback stabilization. In Differential Geometric Control Theory; Birkhauser: Boston, MA, USA, 1983; pp. 181–191.

29. Wang, Z.; Liu, Y. Visual regulation of a nonholonomic wheeled mobile robot with two points using Lyapunov functions. In Proceedings of the 2010 International Conference on Mechatronics and Automation (ICMA), Xi’an, China, 4–7 August 2010; pp. 1603–1608.

30. Amoozgar, M.; Zhang, Y. Trajectory tracking of Wheeled Mobile Robots: A kinematical approach. In Proceedings of the 2012 IEEE/ASME International Conference on Mechatronics and Embedded Systems and Applications (MESA), Suzhou, China, 8–10 July 2012; pp. 275–280.

31. Khalil, H. Nonlinear Systems; Prentice Hall: Upper Saddle River, NJ, USA, 2002.

32. Kurzweil, J. On the inversion of Lyapunov’s second theorem on stability of motion. Czechoslov. Math. J. 1956, 81, 217–259.

33. Tiberi, U.; Johansson, K. A simple self-triggered sampler for perturbed nonlinear systems. Nonlinear Anal. Hybrid Syst. 2013, 10, 126–140.

34. Lamnabhi-Lagarrigue, F.; Loria, A.; Panteley, E.; Laghrouche, S. Taming Heterogeneity and Complexity of Embedded Control; Wiley-ISTE: Hoboken, NJ, USA, 2007.

35. Martínez-Rey, M.; Espinosa, F.; Gardel, A.; Santos, C.; Santiso, E. Mobile robot guidance using adaptive event-based pose estimation and camera sensor. In Proceedings of the Second International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP), Krakow, Poland, 13–15 June 2016.

36. Atassi, A.N.; Khalil, H.K. A separation principle for the stabilization of a class of nonlinear systems. IEEE Trans. Autom. Control 1999, 44, 1672–1687.

37. Hammami, M.A.; Jerbi, H. Separation principle for nonlinear systems: A bilinear approach. Int. J. Appl. Math. Comput. Sci. 2001, 11, 481–492.

38. Damak, H.; Ellouze, I.; Hammami, M. A separation principle of time-varying nonlinear dynamical systems. Differ. Equ. Control Process. 2013, 2013, 36–49.

39. Heemels, W.P.M.H.; Johansson, K.H.; Tabuada, P. An introduction to event-triggered and self-triggered control. In Proceedings of the 2012 Annual 51st IEEE Conference on Decision and Control (CDC), Maui, HI, USA, 10–13 December 2012; pp. 3270–3285.

40. Tanwani, A.; Teel, A.; Prieur, C. On using norm estimators for event-triggered control with dynamic output feedback. In Proceedings of the 54th IEEE Conference on Decision and Control (CDC), Osaka, Japan, 15–18 December 2015; pp. 5500–5505.

41. Pioneer P3-DX. Mapping and navigation robot. Available online: http://www.mobilerobots.com/ResearchRobots/PioneerP3DX.aspx (accessed on 5 September 2017).

42. Espinosa, F.; Salazar, M.; Pizarro, D.; Valdes, F. Electronics Proposal for Telerobotics Operation of P3-DX Units. In Remote and Telerobotics; Mollet, N., Ed.; InTech: Hong Kong, China, 2010; Chapter 1.

43. Intel NUC Kit NUC5i3RYH Product Brief. Available online: https://www.intel.com/content/www/us/en/nuc/nuc-kit-nuc5i3ryh-brief.html (accessed on 5 September 2017).

44. Kinect hardware. Available online: https://developer.microsoft.com/en-us/windows/kinect/hardware (accessed on 5 September 2017).

45. Wang, J.; Olson, E. AprilTag 2: Efficient and robust fiducial detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016.

46. Wan, E.A.; van der Merwe, R. The Unscented Kalman Filter. In Kalman Filtering and Neural Networks; Haykin, S., Ed.; John Wiley & Sons: New York, NY, USA, 2001; Chapter 7; pp. 221–280.

| Strategy | Control updates (mean) | Control updates (st. dev.) | Sensor updates (mean) | Sensor updates (st. dev.) | D, mm RMS (mean) | D, mm RMS (st. dev.) | L, mm RMS (mean) | L, mm RMS (st. dev.) |
|---|---|---|---|---|---|---|---|---|
| Periodic (fastest) | 6428 | – | 454 | – | 7.77 | 0.46 | 37.05 | 1.78 |
| Periodic () | 429 | – | 429 | – | 7.69 | 0.22 | 38.89 | 1.09 |
| Periodic () | 92 | – | 92 | – | 9.17 | 0.55 | 63.97 | 14.57 |
| Event based | 94.17 | 8.97 | 83 | 5.8 | 10.21 | 0.98 | 40.11 | 7.68 |
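To put the table in perspective, the short calculation below compares the event-based row against the fastest periodic row, using only the mean values reported above. The helper `reduction_percent` is an illustrative name introduced here, not something taken from the paper.

```python
def reduction_percent(baseline, value):
    """Relative saving of `value` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - value) / baseline


# Mean number of updates taken from the results table.
periodic_fastest = {"control": 6428, "sensor": 454}
event_based = {"control": 94.17, "sensor": 83}

control_saving = reduction_percent(periodic_fastest["control"], event_based["control"])
sensor_saving = reduction_percent(periodic_fastest["sensor"], event_based["sensor"])

print(f"Control updates saved: {control_saving:.1f}%")
print(f"Sensor updates saved:  {sensor_saving:.1f}%")
```

The event-based strategy therefore uses roughly 98.5% fewer control updates and 81.7% fewer sensor updates than the fastest periodic case, while the mean L error rises from 37.05 to 40.11 mm RMS and the mean D error from 7.77 to 10.21 mm RMS.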

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Santos, C.; Martínez-Rey, M.; Espinosa, F.; Gardel, A.; Santiso, E. Event-Based Sensing and Control for Remote Robot Guidance: An Experimental Case. Sensors 2017, 17, 2034. https://doi.org/10.3390/s17092034
