Measurement and Calibration of Plant-Height from Fixed-Wing UAV Images

Abstract

1. Introduction

2. Materials and Methods

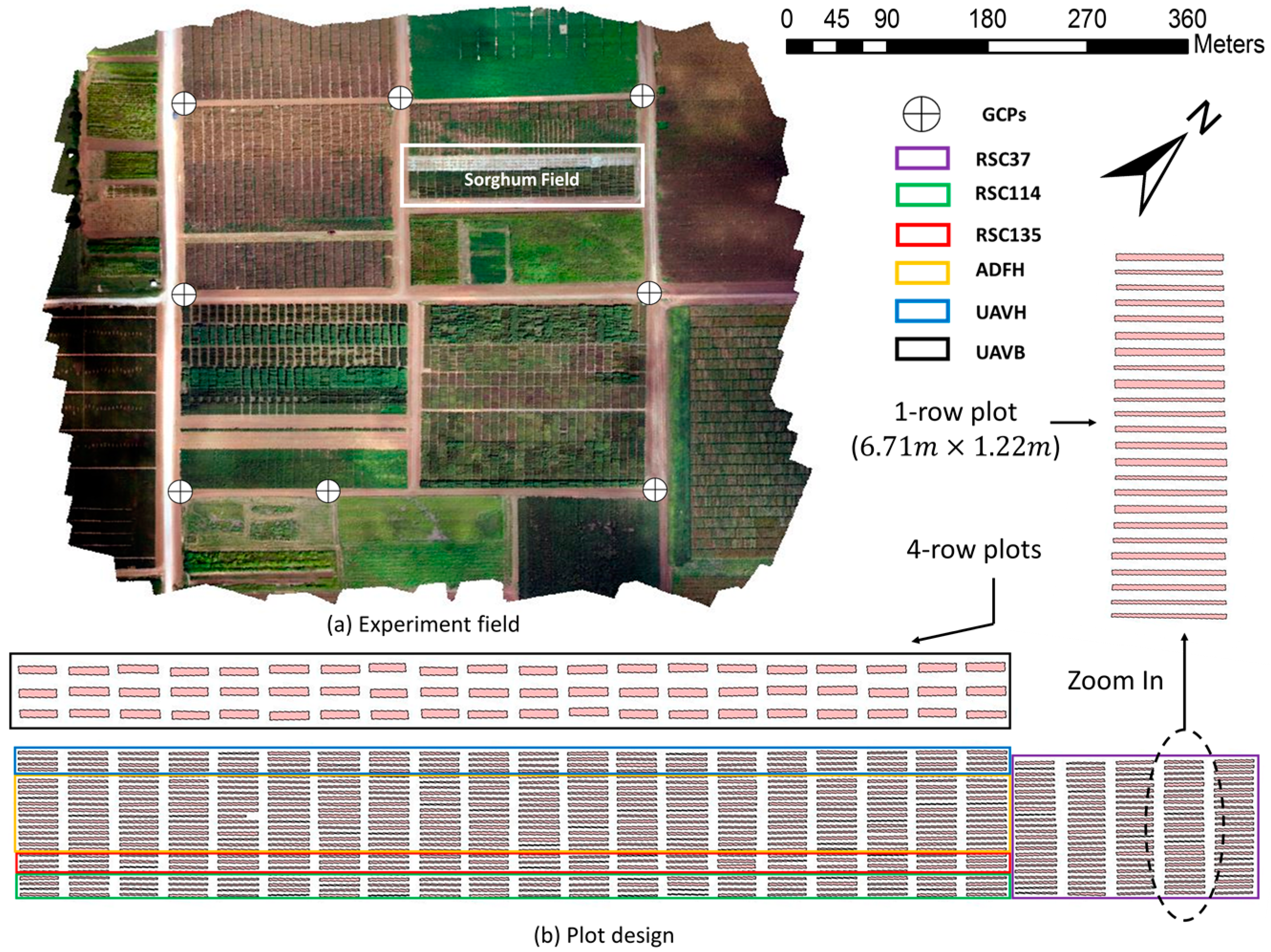

2.1. Trial Plots

2.1.1. Experimental Setup

2.1.2. Ground-Truth Measurements of Plant Height

2.2. Image Data Acquisition

2.2.1. UAV Platform

2.2.2. Sensor

2.2.3. Flight Control

2.2.4. Flight Procedures

2.3. Ground Control Points (GCPs)

2.3.1. Structure

2.3.2. Uses

2.4. Image Data Processing

2.4.1. UAV SfM Method

2.4.2. Crop Height Analysis

2.4.3. Comparison with Ground-Truth Measurements

2.5. Image Quality Assessment

3. Results

3.1. Plant Height Estimation with Fixed-Wing UAV

3.1.1. SfM Model Accuracy and Trends in Ground-Truth and Estimated Plant-Height Data

3.1.2. Accuracy Assessment of SfM Plant-Height Estimates

3.2. Plant Height Accuracy Improvement with Height Calibration

3.3. Plant Height Accuracy Correlation with Image Quality

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Flight Date | Ground-Truth Date | Days Difference | Number of Images | Number of Plots | Wind Speed (m/s) |
|---|---|---|---|---|---|
| 05/24 | 05/26 | 2 | 231 | 700 | 4.4 |
| 05/30 | 05/31 | 1 | 242 | 700 | 2.2 |
| 06/16 | 06/16 | 0 | 242 | 700 | 6.2 |
| 06/29 | 07/03 | 4 | 233 | 700 | 4.0 |
| 07/25 | 07/27 | 2 | 240 | 610 | 7.2 |
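The Days Difference column is the calendar gap between each flight and its ground-truth survey. A minimal check is sketched below; the field-season year (2017) is an assumption not stated in the table, but none of the intervals crosses February, so the year does not change the counts.

```python
from datetime import date

# (month, day) pairs copied from the flight table: (flight, ground truth).
# YEAR is an assumption; it only matters for intervals spanning February.
YEAR = 2017
PAIRS = [
    ((5, 24), (5, 26)),
    ((5, 30), (5, 31)),
    ((6, 16), (6, 16)),
    ((6, 29), (7, 3)),
    ((7, 25), (7, 27)),
]


def days_between(flight, ground_truth, year=YEAR):
    """Return the number of calendar days from the flight to the ground-truth date."""
    return (date(year, *ground_truth) - date(year, *flight)).days


if __name__ == "__main__":
    for flight, gt in PAIRS:
        print(f"{flight[0]:02d}/{flight[1]:02d} -> {gt[0]:02d}/{gt[1]:02d}: "
              f"{days_between(flight, gt)} day(s)")
```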

| Items | Specifications |
|---|---|
| Wingspan | 1.22 m |
| Maximum weight | 2 kg |
| Material | EPP foam, carbon fiber tubes, coroplast |
| Battery | 6200 mAh, lithium polymer |
| Flight planning software | Mission Planner |
| Endurance | 40 min |
| Minimum air speed | 16 m/s |
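Because a fixed-wing platform cannot slow below its minimum air speed (16 m/s), forward motion during each exposure smears the image on the ground by roughly speed × exposure time; dividing by the ground sampling distance (GSD) expresses that smear in pixels. The sketch below assumes ground speed equals air speed (it ignores wind, which ranged from 2.2 to 7.2 m/s across flights), and the exposure (1/2000 s) and GSD (2.5 cm/pixel) are hypothetical values chosen for illustration, not settings reported here.

```python
def motion_blur_pixels(ground_speed_m_s, exposure_s, gsd_m):
    """Forward-motion blur in pixels: ground distance travelled during the
    exposure divided by the ground sampling distance (metres per pixel)."""
    if exposure_s <= 0 or gsd_m <= 0:
        raise ValueError("exposure and GSD must be positive")
    return ground_speed_m_s * exposure_s / gsd_m


MIN_AIR_SPEED = 16.0  # m/s, from the platform table
EXPOSURE = 1 / 2000   # s, hypothetical shutter speed
GSD = 0.025           # m/pixel, hypothetical

if __name__ == "__main__":
    blur = motion_blur_pixels(MIN_AIR_SPEED, EXPOSURE, GSD)
    print(f"blur at minimum air speed: {blur:.2f} px")
```

A tailwind raises ground speed above air speed and increases the blur proportionally, which is one reason image quality may vary between flights.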

| Items | Descriptions | Specifications |
|---|---|---|
| Sensor | Sensor | APS-C type (23.5 × 15.6 mm) |
| | Number of pixels | 24.3 MP |
| | Image sensor aspect ratio | 3:2 |
| Exposure | ISO sensitivity | ISO 100-25600 |
| Shutter | Shutter speed | 1/4000 to 30 s |
| | Flash sync. speed | 1/160 s |
| Lens | Focal length | 16 mm |
| | Aperture range | F22 to F2.8 |
| Size and Weight | Dimensions (W × H × L) | 4.72 × 2.63 × 1.78 in |
| | Weight (with battery) | 0.34 kg |
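The sensor table is enough to relate flight altitude to ground sampling distance (GSD) for a nadir image: GSD = sensor width × altitude / (focal length × image width in pixels). The sketch below approximates the image width from the 24.3 MP count and 3:2 aspect ratio (the exact pixel dimensions are not listed), and the 100 m altitude is a hypothetical value.

```python
import math

SENSOR_WIDTH_MM = 23.5   # APS-C width, from the sensor table
SENSOR_HEIGHT_MM = 15.6
FOCAL_LENGTH_MM = 16.0
MEGAPIXELS = 24.3
ASPECT = 3 / 2           # width : height


def image_width_px(megapixels=MEGAPIXELS, aspect=ASPECT):
    """Approximate image width in pixels from pixel count and aspect ratio."""
    return math.sqrt(megapixels * 1e6 * aspect)


def gsd_m(altitude_m, width_px=None):
    """Ground sampling distance (metres per pixel) for a nadir image."""
    width_px = width_px or image_width_px()
    return (SENSOR_WIDTH_MM * altitude_m) / (FOCAL_LENGTH_MM * width_px)


def footprint_m(altitude_m):
    """Ground footprint (across-track, along-track) of one image, in metres."""
    scale = altitude_m / FOCAL_LENGTH_MM  # metres on the ground per mm on the sensor
    return SENSOR_WIDTH_MM * scale, SENSOR_HEIGHT_MM * scale


if __name__ == "__main__":
    altitude = 100.0  # hypothetical flight altitude in metres
    across, along = footprint_m(altitude)
    print(f"GSD ~ {gsd_m(altitude) * 100:.2f} cm/pixel; "
          f"footprint ~ {across:.1f} m x {along:.1f} m")
```

At the hypothetical 100 m altitude this gives roughly 2.4 cm per pixel; GSD scales linearly with altitude, so halving the altitude halves it.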

| Items | Specifications |
|---|---|
| Processor | 32-bit ARM Cortex M4 core with FPU |
| | 168 MHz/256 KB RAM/2 MB Flash |
| | 32-bit failsafe co-processor |
| Sensors | MPU6000 as main accel and gyro |
| | ST Micro 14-bit accelerometer/compass (magnetometer) |
| | ST Micro 16-bit gyroscope |
| Dimensions (W × H × L) | 2.0 × 0.6 × 3.2 in |
| Weight | 3.8 g |

| Items | Descriptions | Values |
|---|---|---|
| Alignment | Accuracy | High |
| | Adaptive camera model fitting | Yes |
| Dense point cloud | Quality | High |
| | Depth filtering | Mild |
| DEM | Model resolution | About 5.52 cm/pixel |
| | Source data | Dense cloud |
| Orthomosaic | Coordinate system | WGS 84/UTM zone 14N |
| | Blending mode | Mosaic |
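A DEM built from the dense cloud describes the top of the canopy. A common way to turn it into plant height, sketched below as an assumption rather than the exact procedure used here, is to subtract a bare-ground elevation model cell by cell and then summarize each plot with a high percentile so that isolated noisy cells do not dominate. The 95th percentile, the rectangular plot window, and the sample elevations are all hypothetical.

```python
import math


def canopy_height(dsm, dtm):
    """Cell-by-cell canopy height model: surface elevation minus ground
    elevation, clipped at zero (small negatives are treated as noise)."""
    return [[max(s - g, 0.0) for s, g in zip(srow, grow)]
            for srow, grow in zip(dsm, dtm)]


def percentile(values, q):
    """Percentile (0 <= q <= 100) by linear interpolation between ranks."""
    vals = sorted(values)
    if not vals:
        raise ValueError("no values")
    pos = (len(vals) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return vals[lo] + (vals[hi] - vals[lo]) * (pos - lo)


def plot_height(chm, rows, cols, q=95.0):
    """Height of one plot: the q-th percentile of the canopy-height cells
    inside a rectangular window given as row and column ranges."""
    cells = [chm[r][c] for r in rows for c in cols]
    return percentile(cells, q)


if __name__ == "__main__":
    # Hypothetical 2 x 2 window of elevations (m above datum).
    dsm = [[101.2, 101.5], [101.4, 100.1]]
    dtm = [[100.0, 100.0], [100.0, 100.0]]
    chm = canopy_height(dsm, dtm)
    print(f"plot height ~ {plot_height(chm, range(2), range(2)):.2f} m")
```

Using a percentile below 100 trades a slight underestimate of the tallest plants for robustness against spurious high points in the dense cloud.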

| Flight Date | X RMSE (cm) | Y RMSE (cm) | Z RMSE (cm) |
|---|---|---|---|
| 05/24 | 2.52 | 1.72 | 1.88 |
| 05/30 | 2.23 | 2.12 | 0.96 |
| 06/16 | 2.29 | 1.96 | 1.83 |
| 06/29 | 1.83 | 3.09 | 2.22 |
| 07/25 | 1.87 | 2.55 | 2.18 |
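Each entry above is a root-mean-square error, the square root of the mean squared residual at the GCPs along one axis. When all three axes are evaluated over the same set of points, the per-axis values can be combined into horizontal (XY) and total (XYZ) RMSE by summing their squares, because the mean of a sum of squared residuals equals the sum of the per-axis means. The sketch below applies that identity to the tabulated values; the combined figures are derived here, not reported in the table.

```python
import math

# Per-axis GCP RMSE in cm, copied from the table above.
GCP_RMSE_CM = {
    "05/24": (2.52, 1.72, 1.88),
    "05/30": (2.23, 2.12, 0.96),
    "06/16": (2.29, 1.96, 1.83),
    "06/29": (1.83, 3.09, 2.22),
    "07/25": (1.87, 2.55, 2.18),
}


def rmse(residuals):
    """Root-mean-square error of a sequence of residuals."""
    if not residuals:
        raise ValueError("no residuals")
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def combined_rmse(x, y, z):
    """Horizontal (XY) and total (XYZ) RMSE from per-axis RMSE values,
    valid when every axis was evaluated on the same GCPs."""
    return math.hypot(x, y), math.sqrt(x * x + y * y + z * z)


if __name__ == "__main__":
    for flight, (x, y, z) in GCP_RMSE_CM.items():
        xy, xyz = combined_rmse(x, y, z)
        print(f"{flight}: XY {xy:.2f} cm, XYZ {xyz:.2f} cm")
```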

| Date | Uncalibrated RMSE (m) | Calibrated RMSE (m) | RMSE Improvement | R² | Relative RMSE |
|---|---|---|---|---|---|
| 05/24 | 0.23 | 0.19 | 21.3% | 0.81 | 20.4% |
| 05/30 | 0.09 | 0.07 | 29.2% | 0.83 | 6.1% |
| 06/16 | 0.21 | 0.18 | 17.7% | 0.73 | 12.0% |
| 06/29 | 0.14 | 0.12 | 17.4% | 0.85 | 8.0% |
| 07/25 | 0.29 | 0.26 | 12.8% | 0.63 | 16.2% |
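The metrics in this table can be computed from paired SfM estimates and ground-truth heights. The sketch below assumes a simple linear height calibration (ground truth ≈ a × estimate + b, fitted by ordinary least squares), takes R² as the squared Pearson correlation, defines relative RMSE as RMSE divided by the mean measured height, and expresses improvement relative to the uncalibrated RMSE. These definitions are assumptions for illustration; the table itself does not state them, and the sample heights are hypothetical.

```python
import math


def fit_linear(x, y):
    """Ordinary least-squares fit y ~ a*x + b; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx
    return a, my - a * mx


def rmse(pred, obs):
    """Root-mean-square difference between predictions and observations."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs))


def r_squared(pred, obs):
    """Squared Pearson correlation (undefined if either series is constant)."""
    n = len(obs)
    mp, mo = sum(pred) / n, sum(obs) / n
    cov = sum((p - mp) * (o - mo) for p, o in zip(pred, obs))
    vp = sum((p - mp) ** 2 for p in pred)
    vo = sum((o - mo) ** 2 for o in obs)
    return cov * cov / (vp * vo)


def relative_rmse_pct(pred, obs):
    """RMSE as a percentage of the mean observed height."""
    return 100.0 * rmse(pred, obs) / (sum(obs) / len(obs))


def improvement_pct(rmse_before, rmse_after):
    """Percentage reduction in RMSE relative to the uncalibrated value."""
    return 100.0 * (rmse_before - rmse_after) / rmse_before


if __name__ == "__main__":
    est = [0.80, 1.00, 1.20, 1.40]  # hypothetical SfM heights (m)
    obs = [1.00, 1.20, 1.40, 1.60]  # hypothetical ground-truth heights (m)
    a, b = fit_linear(est, obs)
    cal = [a * e + b for e in est]
    before, after = rmse(est, obs), rmse(cal, obs)
    print(f"a={a:.3f}, b={b:.3f}, RMSE {before:.3f} -> {after:.3f} m, "
          f"R2={r_squared(est, obs):.2f}")
```

In practice the calibration coefficients should be fitted on reference plots kept separate from those used to report accuracy; fitting and evaluating on the same plots, as in this toy example, overstates the improvement.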

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Han, X.; Thomasson, J.A.; Bagnall, G.C.; Pugh, N.A.; Horne, D.W.; Rooney, W.L.; Jung, J.; Chang, A.; Malambo, L.; Popescu, S.C.; Gates, I.T.; Cope, D.A. Measurement and Calibration of Plant-Height from Fixed-Wing UAV Images. Sensors 2018, 18, 4092. https://doi.org/10.3390/s18124092