Abstract

In this paper, the non-ideal factors, including spatial noise and temporal noise, are analyzed and suppressed in a high-speed spike-based image sensor that combines a high-speed sequential scanning readout format with interspike time intervals to represent the scene information. In this imager, spatial noise arises from device mismatch, which causes photo response non-uniformity (PRNU), and from the non-uniformity of the dark current. PRNU is suppressed by multiplying by a measured coefficient matrix, and the non-uniformity of the dark current is suppressed by correcting the interspike time interval with the time interval measured in the dark. The temporal noise consists of shot noise and thermal noise, and its influence can be eliminated when the image is restored from the spike frequency. The experimental results show that, with the spike frequency method, the calibration algorithm reduces the standard deviation of the image from 18.4792 to 0.5683 under uniform bright light and from 1.5812 to 0.4516 under relatively uniform dark conditions. With the interspike time interval method, because of time mismatch and temporal noise, the standard deviation of the image changes from 27.4252 to 27.4977 under uniform bright light after calibration, while under uniform dark conditions it decreases from 2.361 to 0.3678.

1. Introduction

High-speed target recognition, a branch of visual technology, aims to identify fast-moving objects in the field of view, for instance, in vehicle tracking [1]. In recent years, as research on target recognition has deepened, enabling machines to capture high-speed moving objects has attracted increasing attention. To meet these needs, high-speed image sensors have developed rapidly. They can be classified into two types. The first is the continuous image sensor [2,3,4], whose frame rate is relatively low; in applications where the frame rate needs to reach kfps to Mfps, this type of imager is no longer applicable. The second is the burst image sensor, whose frame rate can be extremely high [5,6]. It satisfies the high-speed demand but sacrifices the ability of continuous perception: the number of consecutive frames is no more than 100. Besides, an extremely high frame rate brings huge power consumption and a large data volume, which have become the bottleneck for the development of this type of sensor. In summary, research on high-speed sensors has focused on increasing the detection speed while keeping the output data rate and power consumption low.

In the past decade, as biological vision research has deepened, biomimetic silicon retinas have developed rapidly based on silicon technology. A silicon retina-based image sensor named the Dynamic Vision Sensor (DVS) [7,8] has attracted increasing attention in recent years. In this sensor, changes in light intensity are converted into a series of spikes, or events. Each pixel detects only the variation of the light, and no spike is generated if the light intensity is constant. Therefore, much of the static background and redundant information that a conventional high-speed image sensor outputs is eliminated at the pixel level when the scene information is received. In this way, the amount of data and the power consumption are greatly reduced. In addition, an asynchronous communication protocol called Address Event Representation (AER) [9,10] is adopted in this sensor. It eliminates the concept of frame rate by using a handshake protocol as the communication bridge, so the sensor can continuously capture fast-moving objects at an extremely low data rate. The sensor in reference [11] is able to capture objects rotating at 10,000 revolutions per second. However, because an arbiter is employed to resolve conflicts when multiple pixels issue output requests at the same time, the event rate has a ceiling set by the output bandwidth. In reference [12], the maximum bandwidth is 50,000,000 events per second (eps) at a resolution of 240 × 180. With violent changes in light intensity or with a larger pixel array, this maximum bandwidth is easily reached. Meanwhile, a large number of pixels are blind while waiting for the readout command, so subsequent scenes are missed.

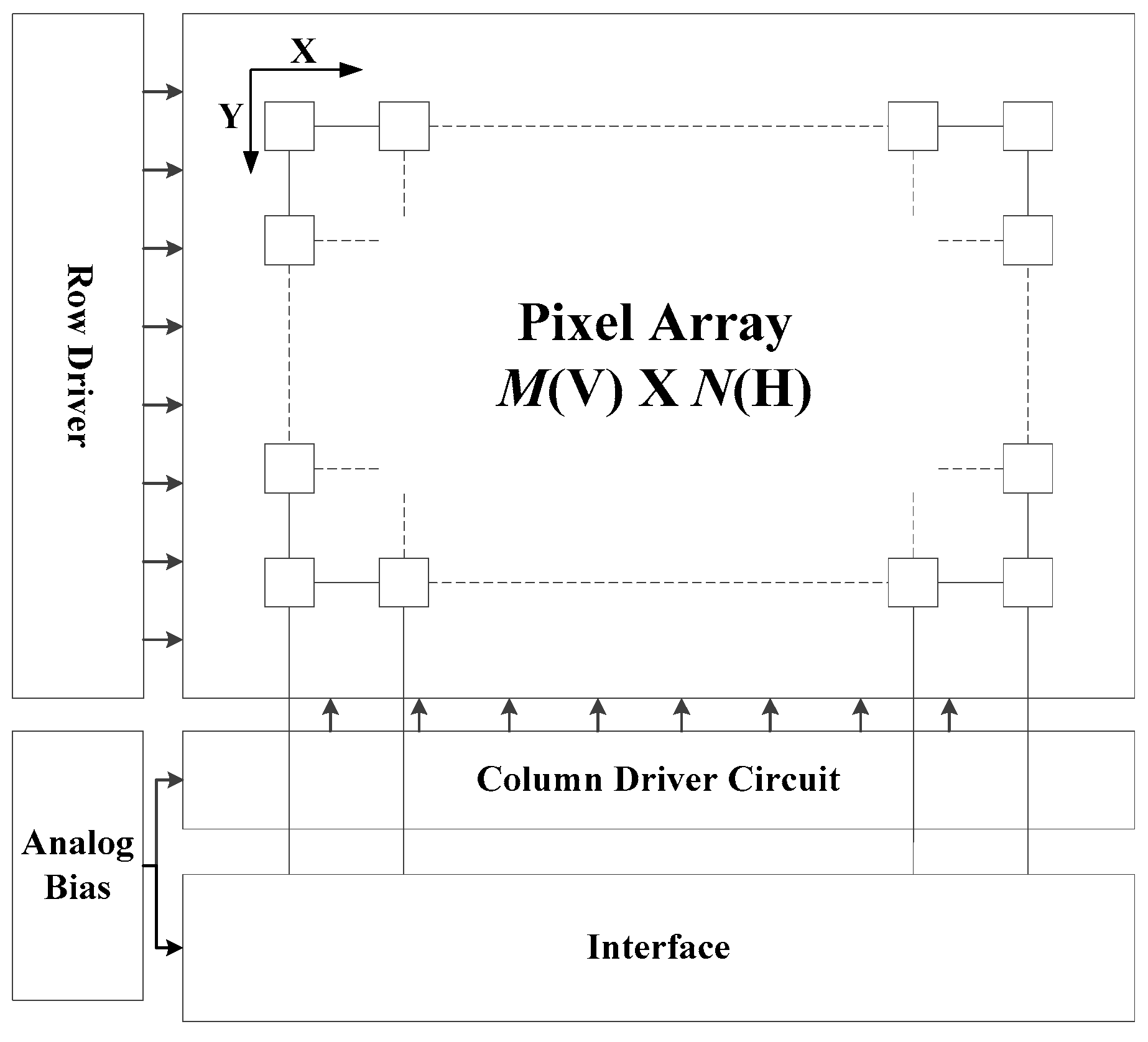

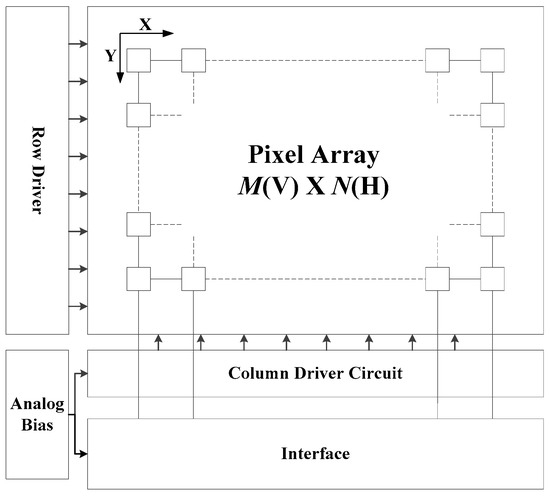

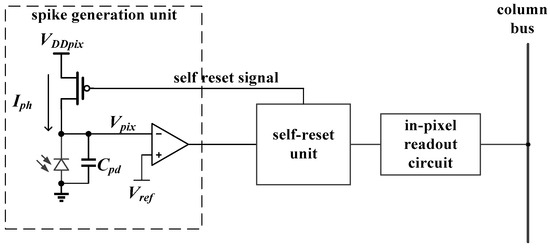

Unlike the DVS, another approach, which uses the interspike time interval to represent the light intensity, has been reported in [9,13]. The image sensor in this paper uses a traditional pulse frequency modulation (PFM) pixel to generate spikes and eliminates the multi-bit memory, which effectively improves its high-speed detection capability. Because the light information is encoded in the interval between two adjacent spikes, the data volume is greatly reduced. Besides, the high-speed sequential scanning format enables the image sensor to capture high-speed moving objects even in a complex scene. Based on these two considerations, an imager whose pixel structure combines the interspike time interval representation with synchronous scanning readout is shown in Figure 1.

Figure 1.

The structure of the spike-based image sensor. M is the number of rows and N is the number of columns of the pixel array.

In this paper, the mechanism and influence of the non-ideal factors of a spike-based image sensor are discussed. Because the pixel perceives the scene information continuously in the time domain, the influence of photo response non-uniformity (PRNU) cannot be eliminated by the time-domain correlated double sampling method [14,15]. Instead, based on the characteristics of PRNU, a measured coefficient matrix is used to suppress its effect. The non-uniformity of the dark current introduces a coefficient that depends on the light intensity, and it can be corrected by means of the time interval measured in the dark. The temporal noise is randomly distributed in the time domain, but its influence can be eliminated by averaging during imaging, at the cost of the high-speed detection capability of the imager. The experimental results show that, when the image is restored from the spike frequency, the non-uniformity caused by the non-ideal factors is well suppressed in both the uniform light test and the scene test. However, when the image is restored from the interspike time interval, the correction performs poorly in bright regions but effectively in dark regions.

This paper is organized as follows: the working principle and detailed modules of the spike-based pixel structure are described in Section 2. In Section 3, the non-ideal factors introduced by spatial noise and temporal noise and their impacts on the system are discussed, and correction methods are proposed for the spatial noise. Experimental results are given in Section 4, and Section 5 concludes the paper.

2. Pixel Architecture

2.1. Spike-Based Pixel Structure and Working Principle

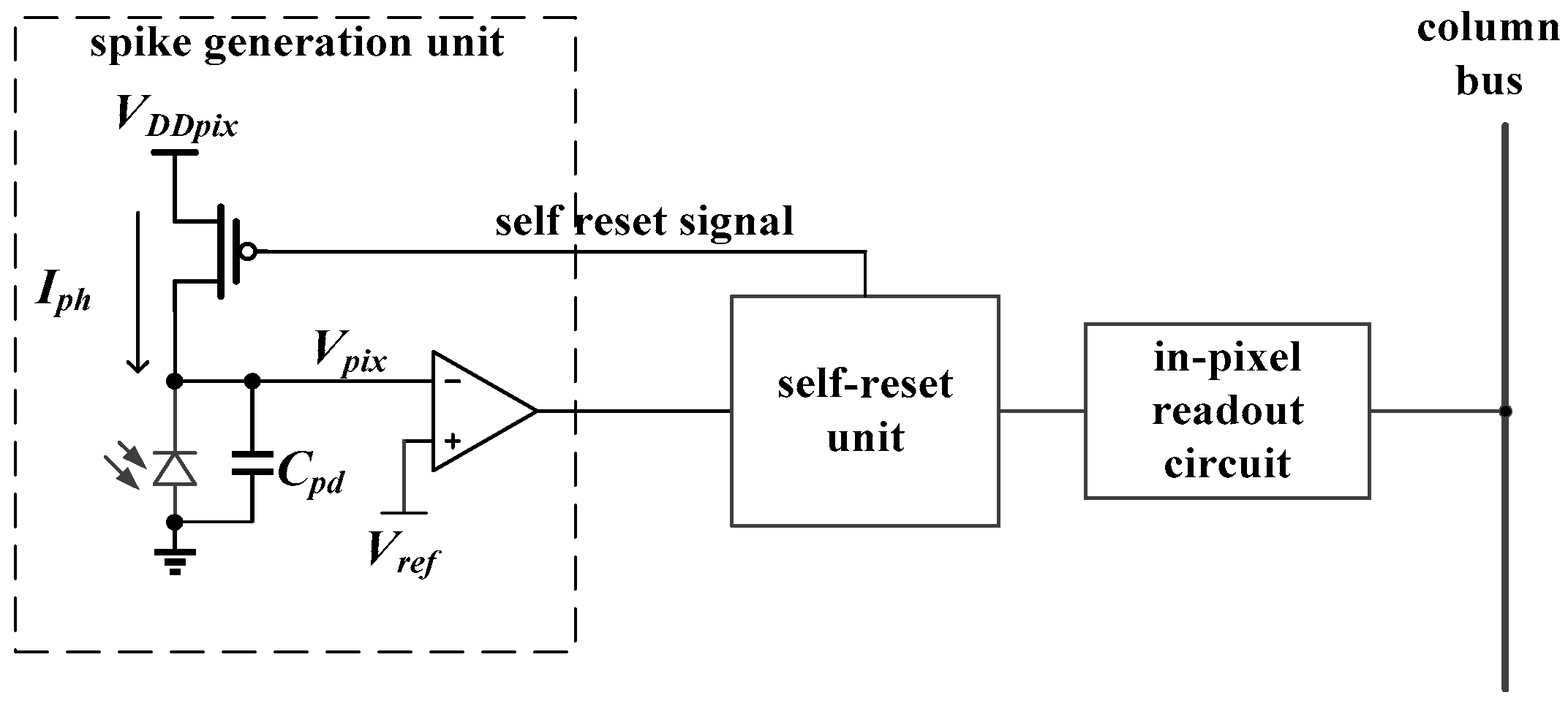

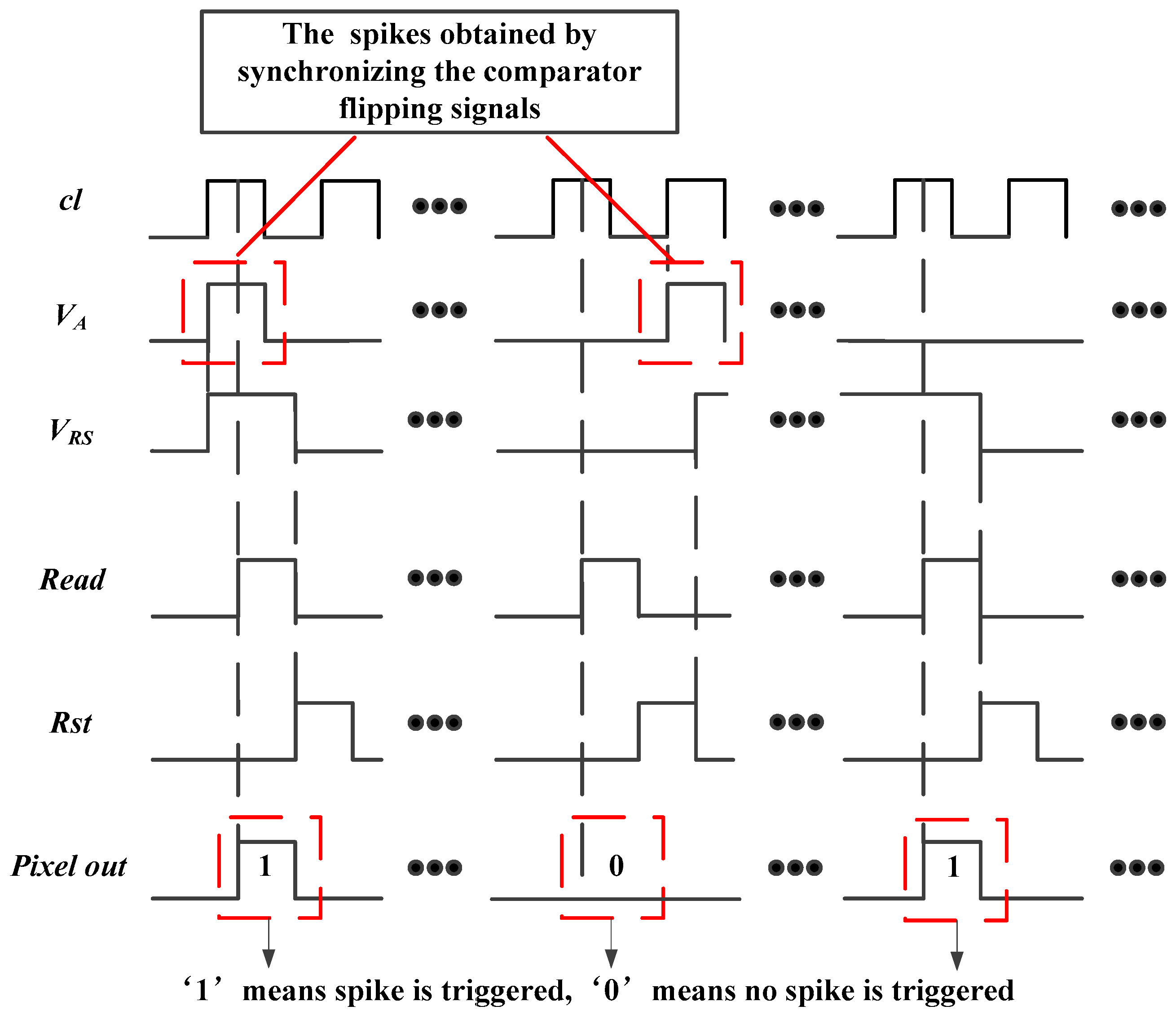

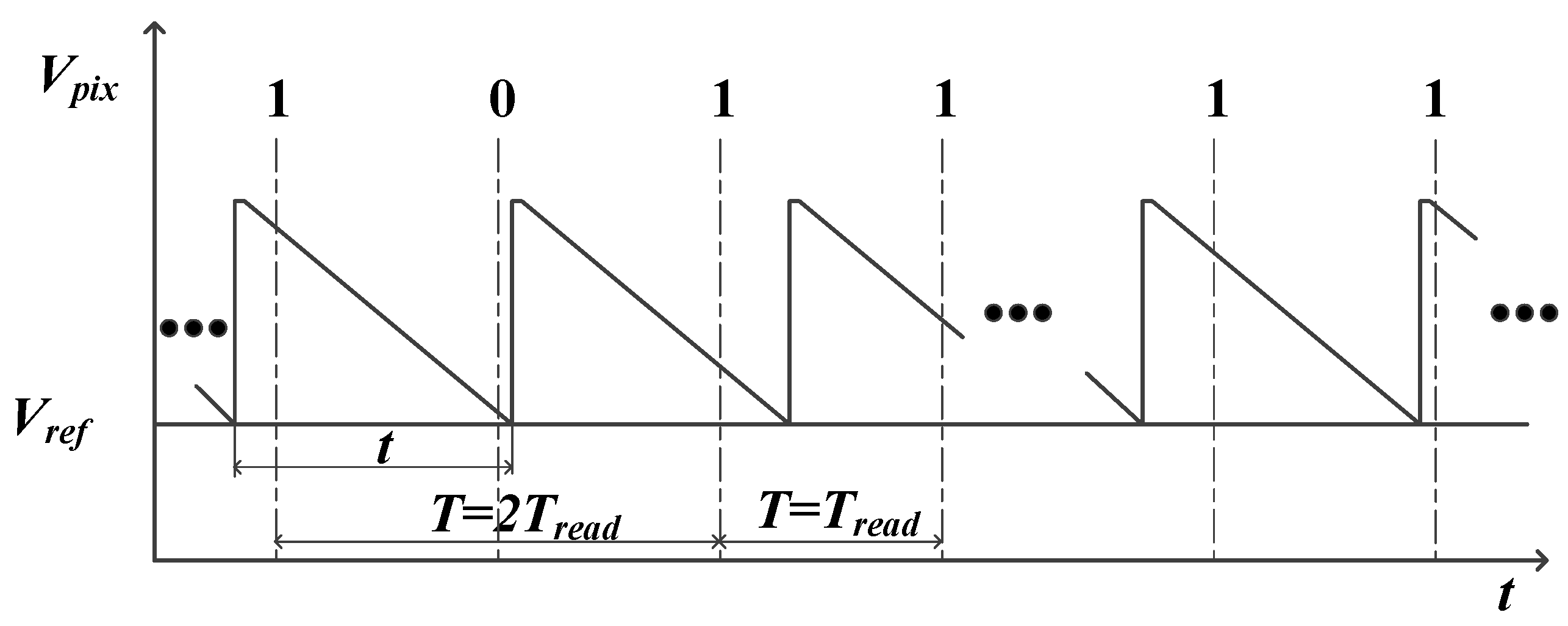

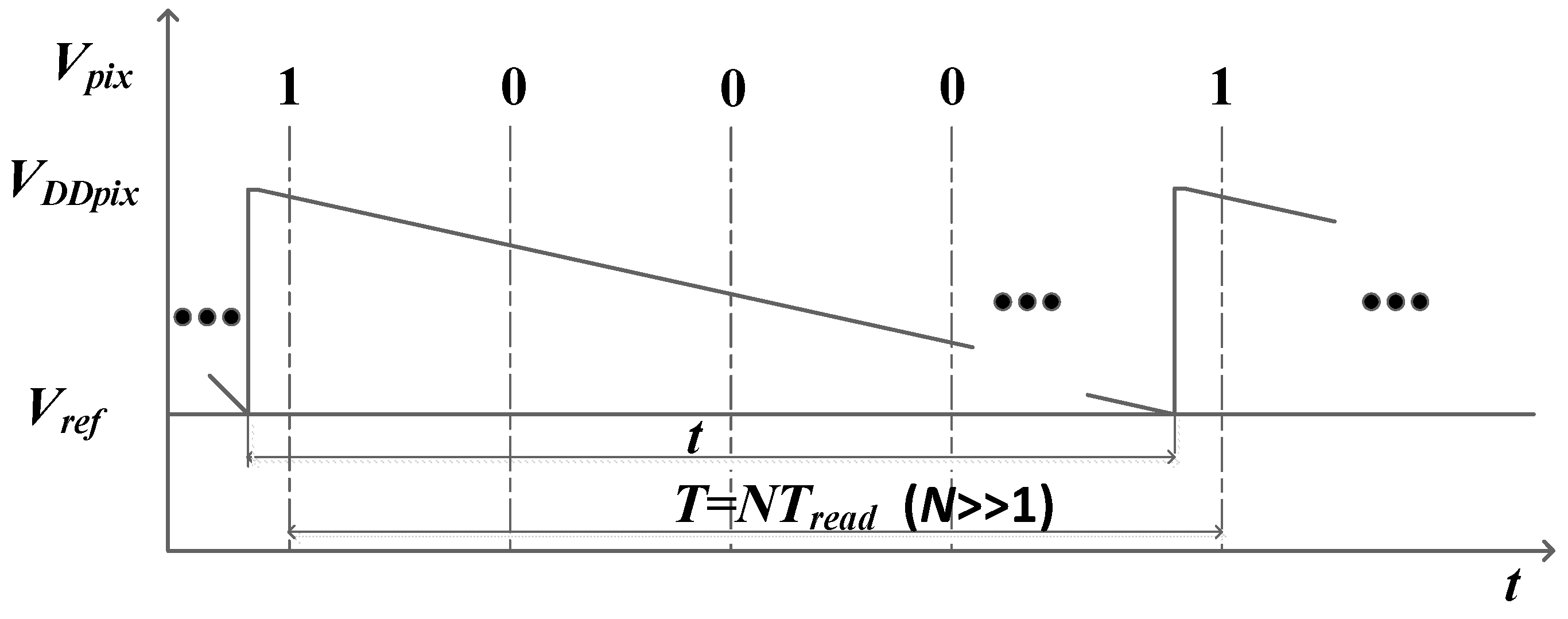

Figure 2 shows the pixel structure and its working principle. As shown in Figure 2a, the pixel consists of three parts: a spike generation unit, a self-reset unit, and an in-pixel readout circuit. Figure 2b illustrates the operating principle with three states: integration, reset, and readout. In the integration state, the photodiode in the spike generation unit converts the photocurrent, Iph, into the photodiode voltage, Vpix. The voltage at the Vpix node drops as photoelectrons are collected. When it reaches the reference voltage, Vref, the comparator output flips. Once the self-reset unit detects the flip signal, the pixel enters the reset state. After a brief reset time, the self-reset unit generates a low-level spike, which resets the photodiode, and a new integration stage begins. At the same time, the spike is stored in the in-pixel readout circuit. In the readout state, shown by the dashed line in Figure 2b, the stored spike is sequentially scanned and transmitted to the column bus. There are two possible results: “0” means that no spike was triggered within a readout cycle, and “1” means that a spike was triggered. Once the information has been read out by the high-speed sequential scan, the in-pixel readout circuit is cleared to receive the next round of signals. In this way, the pixel can detect the light intensity of the scene continuously without information loss.

Figure 2.

(a) The pixel structure and (b) working principle. VDDpix is the supply voltage of the photodiode and Cpd is the capacitance of the photodiode.

According to this working principle, Iph is inversely proportional to the discharging time, t, and the relationship can be expressed as follows:

$$t = \frac{C_{pd}\,(V_{DDpix} - V_{ref})}{I_{ph}} \tag{1}$$
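To make the relation t = Cpd(VDDpix − Vref)/Iph concrete, the following Python sketch models one self-resetting pixel under constant illumination and lists the instants at which spikes are generated. It is a simplified behavioral illustration, not the authors' model: the function names are hypothetical, the reset time is treated as an optional fixed delay, and the capacitance, voltages, and photocurrents used below are illustrative values, not chip parameters.

```python
def discharge_time(i_ph: float, c_pd: float, v_dd: float, v_ref: float) -> float:
    """Eq. (1): time for Iph to discharge Cpd from VDDpix down to Vref (seconds)."""
    if i_ph <= 0:
        raise ValueError("photocurrent must be positive")
    return c_pd * (v_dd - v_ref) / i_ph


def spike_times(i_ph: float, c_pd: float, v_dd: float, v_ref: float,
                duration: float, t_reset: float = 0.0) -> list[float]:
    """Spike instants of an ideal integrate -> compare -> reset pixel.

    t_reset is a hypothetical fixed reset duration inserted after each spike.
    """
    t = discharge_time(i_ph, c_pd, v_dd, v_ref)
    times = []
    s = t                      # first comparator flip after one full discharge
    while s <= duration:
        times.append(s)
        s += t + t_reset       # reset, then integrate again
    return times
```

For example, with the illustrative values Cpd = 20 fF, VDDpix − Vref = 1.0 V, and Iph = 1 nA, `discharge_time` gives about 20 μs, and halving Iph doubles it, which is the inverse proportionality stated above.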

2.2. Detailed Pixel Structure and Timing Diagram

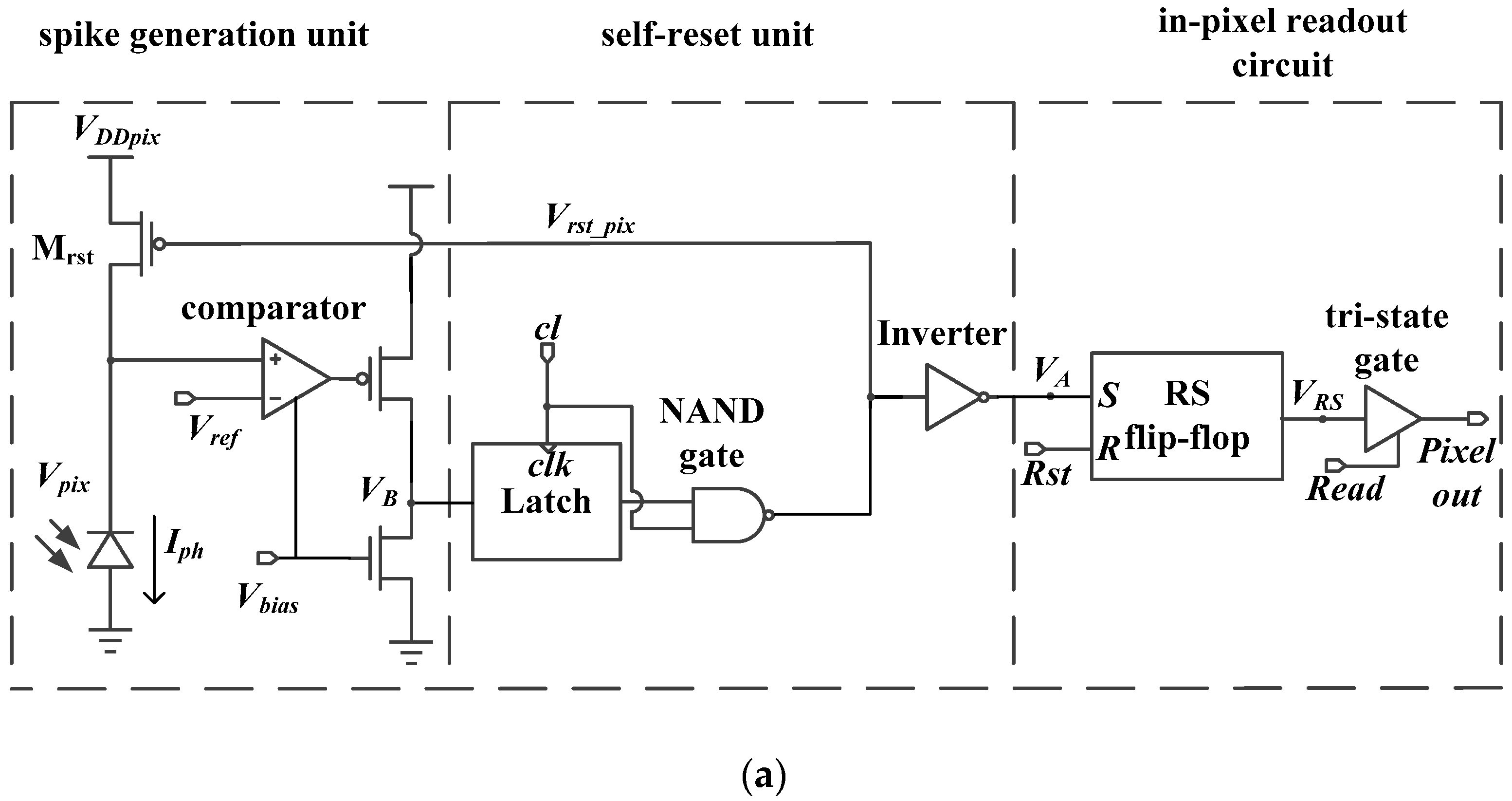

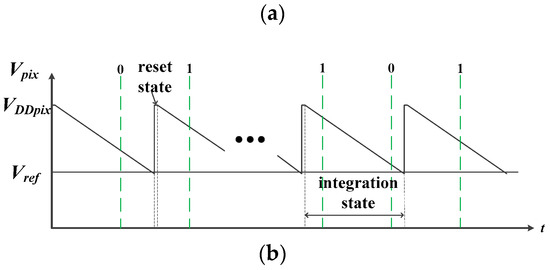

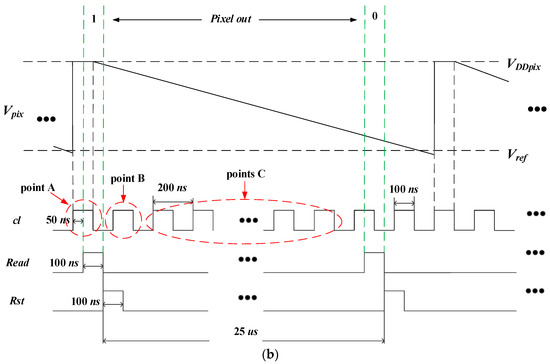

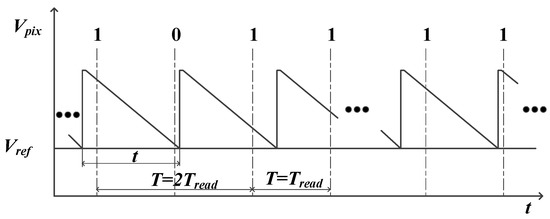

The detailed pixel structure and timing diagram are given in Figure 3. As shown in Figure 3a, the self-reset unit consists of a Latch, a not–and (NAND) gate, and an Inverter. When the Latch control signal, cl, is low, the Latch is transparent to the input data; it is locked when cl is high. The in-pixel readout circuit is composed of a reset-set (RS) flip-flop and a tri-state gate. When the Inverter output signal, VA, is high, the output of the RS flip-flop is set to “1” and is held until the row reset signal, Rst, is enabled. Once the row scan readout signal, Read, is enabled, the tri-state gate transmits the data stored in the RS flip-flop to the column bus. Figure 3b shows the timing diagram; all three signals are shared by the pixels of one row. The array has 250 (V) × 400 (H) pixels and the row scanning time is 100 ns, so both the period of signal Read (the frame period, Tread, of the pixel) and that of signal Rst are 250 × 100 ns = 25 μs. The period of the Latch control signal cl is 200 ns.
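The timing figures above follow from simple arithmetic, sketched below in Python with illustrative constant names. The 4 Gb/s raw output rate is derived here from the stated array size and row time (one bit per pixel per frame, before any formatting or protocol overhead); it is not a figure quoted by the paper.

```python
ROWS, COLS = 250, 400      # pixel array, from the text
ROW_SCAN_NS = 100          # time to scan one row
CL_PERIOD_NS = 200         # period of the Latch control signal cl


def frame_period_ns(rows: int = ROWS, row_scan_ns: int = ROW_SCAN_NS) -> int:
    """Tread: every row is read once per frame, so Read and Rst repeat at this period."""
    return rows * row_scan_ns


def raw_bit_rate_bps(rows: int = ROWS, cols: int = COLS,
                     row_scan_ns: int = ROW_SCAN_NS) -> float:
    """Bits per second on the column buses: one bit per pixel per frame."""
    return rows * cols / (frame_period_ns(rows, row_scan_ns) * 1e-9)


T_READ_NS = frame_period_ns()                      # 25 000 ns = 25 us
CL_CYCLES_PER_FRAME = T_READ_NS // CL_PERIOD_NS    # 125 cl periods per Tread
```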

Figure 3.

(a) Detailed structure and (b) timing diagram of the spike-based pixel.

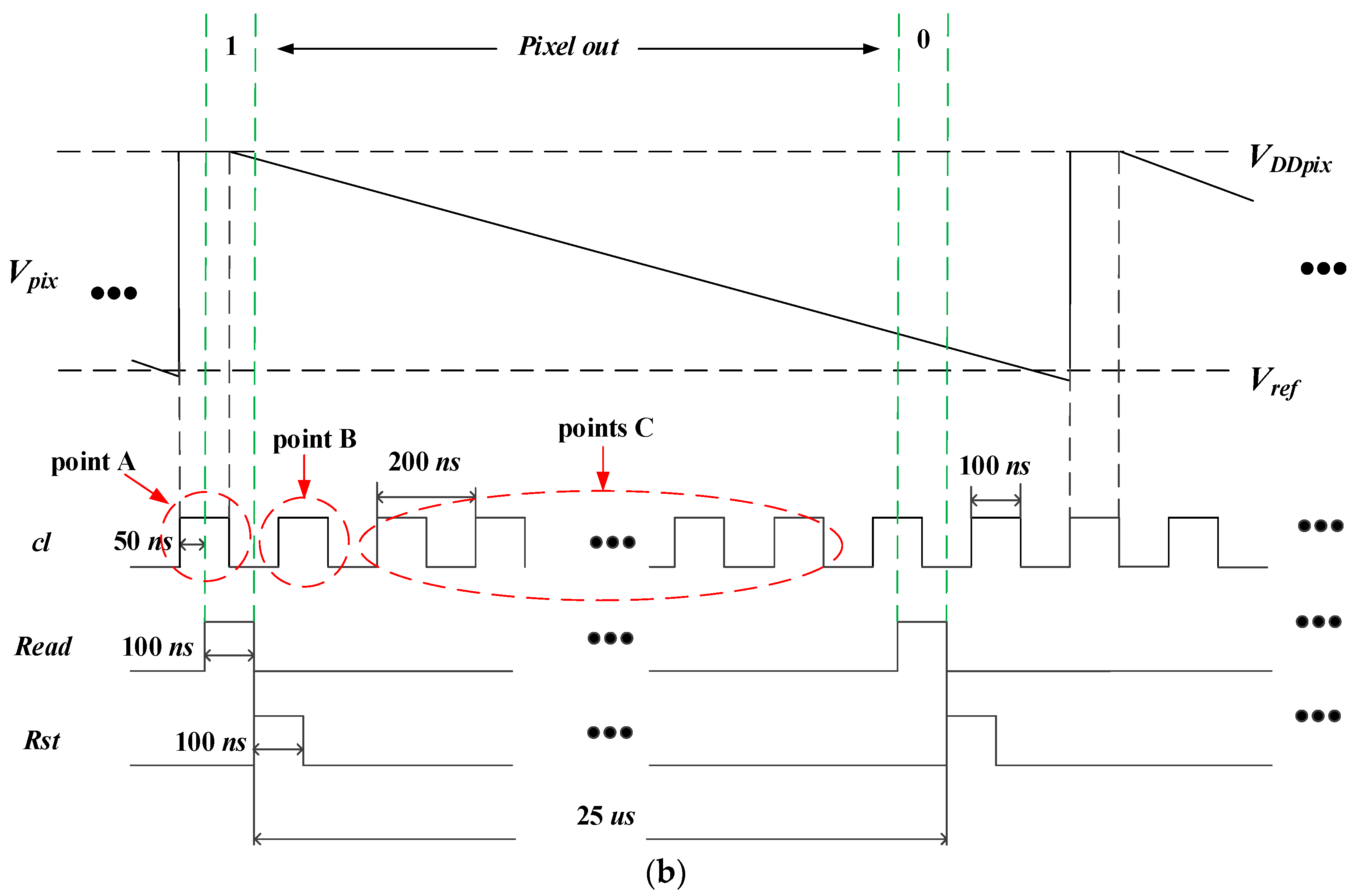

2.3. Synchronous Operation of Comparator Flipping Signal

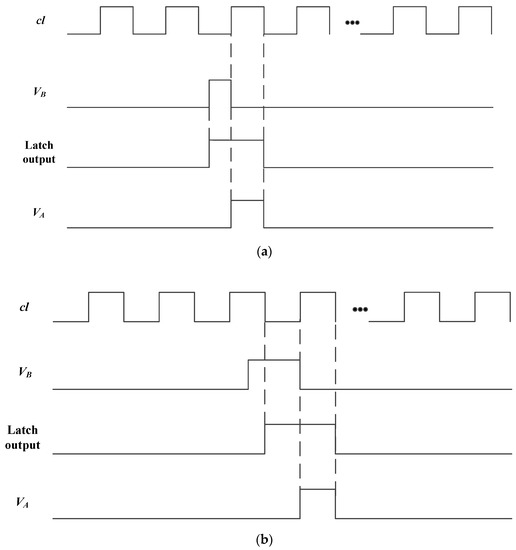

The Latch and NAND gate play a significant role in synchronizing the output of the comparator with cl. Figure 4 shows the two cases of the synchronization process: in Figure 4a,b, the rising edge of the comparator output, VB, falls on the low level and the high level of cl, respectively. As Figure 4 shows, the spike of VA is produced only during the high level of cl, so the flipping signal of the comparator is synchronized to cl. When the spike appears at point A of cl in Figure 3b, it triggers the RS flip-flop, and 50 ns later the output of the RS flip-flop, VRS, is read out by Read. At point B, the RS flip-flop is held by Rst for 50 ns; the spike can still trigger the RS flip-flop after another 50 ns, but VRS is not read out until the next enabled level of Read arrives. At point C, VRS is likewise read out when the next enabled level of Read arrives.
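The synchronization rule can be sketched as a small timing model in Python. This is an illustration under stated assumptions, not the gate-level circuit: it assumes that cl has a 50% duty cycle and is low during the first half of each 200 ns period, and that VA behaves as the logical AND of the Latch output and cl. The exact wiring of the NAND gate and Inverter is not detailed in the text, and the function name is hypothetical.

```python
CL_PERIOD_NS = 200


def va_pulse_start(vb_rise_ns: int, period: int = CL_PERIOD_NS) -> int:
    """Start time of the cl-high window in which VA pulses after VB rises.

    Assumed model: cl is low on [kP, kP + P/2) and high on [kP + P/2, (k+1)P);
    the Latch passes VB while cl is low and holds while cl is high;
    VA = latch_output AND cl.
    """
    half = period // 2
    k, phase = divmod(vb_rise_ns, period)
    if phase < half:
        # Case (a): VB rises while cl is low, so the latch output rises at once
        # and VA goes high at the next rising edge of cl.
        return k * period + half
    # Case (b): VB rises while cl is high, so the latch is locked; the edge is
    # captured when cl falls and VA pulses in the following high phase.
    return (k + 1) * period + half
```

Under this model, the delay from the VB edge to the VA pulse is always greater than zero and at most one cl period (200 ns), which is negligible compared with Tread = 25 μs.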

Figure 4.

The rising edge of comparator output, VB, that falls on the (a) low level and (b) high level of cl.

In summary, the timing structure in Figure 3b ensures that every generated spike can be stored and read out. However, the pixel completes only one spike readout even if two or more spikes are triggered in one readout cycle, because the RS flip-flop is set and latched when the first spike arrives and does not react to subsequent spikes. Therefore, the time resolution of the image sensor is 25 μs, and the maximum detectable light intensity of the structure is determined by Tread.
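Combining this one-spike-per-cycle limit with Eq. (1) gives the upper end of the detectable range. The following is a sketch that neglects the reset and synchronization delays: any pixel with t ≤ Tread reads “1” in every cycle, so intensities above this bound cannot be distinguished.

```latex
t_{\min} = T_{read} = 25\ \mu\mathrm{s}
\quad\Longrightarrow\quad
I_{ph,\max} = \frac{C_{pd}\,(V_{DDpix} - V_{ref})}{T_{read}},
\qquad
f_{spike,\max} = \frac{1}{T_{read}} = 40\,000\ \mathrm{spikes/s}
```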

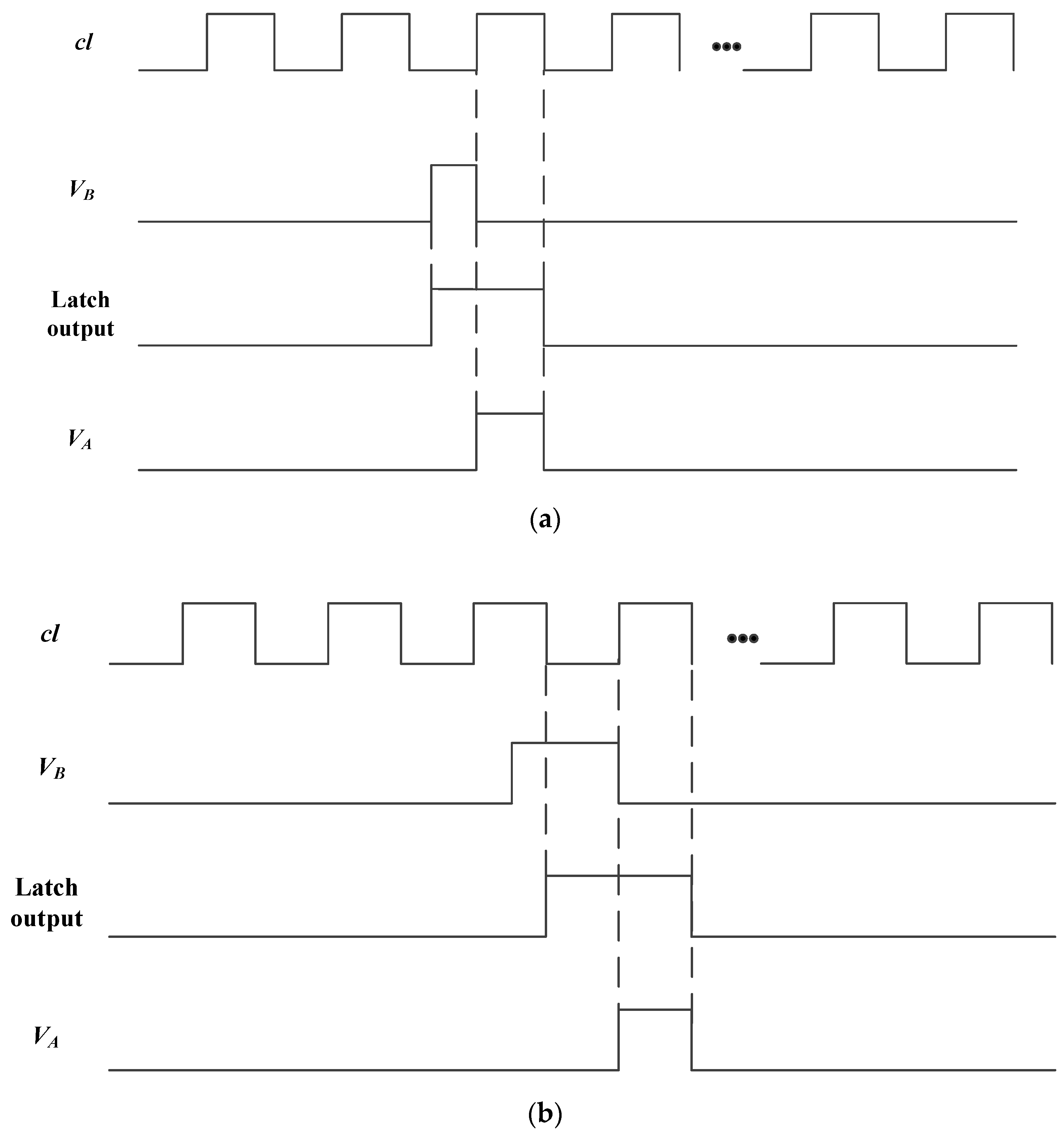

2.4. The Storage and Readout Process of the Generated Spike

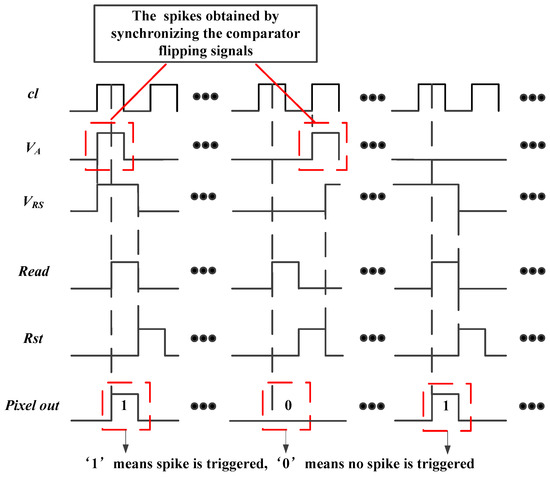

The in-pixel readout circuit stores and outputs the generated spike. Its two components, the RS flip-flop and the tri-state gate, serve as the 1-bit memory and as the switch that connects the pixel to the bus, respectively. An example of the storage and readout process of two generated spikes is given in Figure 5.

Figure 5.

The storage and readout process of a generated spike.

Because the generated spike has to be stored in the pixel to match the synchronous readout process, the spike timing observed on the column bus is always an integer multiple of the readout cycle, Tread; that is, the resolution of the spike time interval is 25 μs. We define the time interval between two successive “1”s observed on the column bus as the interspike time interval, T, and obtain:

In (2), INV is the integer function. Note that the interspike time interval is not equal to t. Besides, the position at which the pixel completes the reset operation also affects the interspike time interval. Figure 6 illustrates the operation of a pixel under bright uniform light, where t is greater than Tread and less than 2Tread. The first interspike time interval read out from the column is 2Tread, while the second one is Tread. Thus, a time mismatch of uncertain value is introduced when T is used in place of t. Alternatively, t can be obtained by counting the number of spikes during a period of time, as in a PFM image sensor; however, this sacrifices the ability of the imager to detect high-speed objects.
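The time mismatch can be reproduced with a short Python sketch of the synchronous readout. It assumes integer nanosecond timestamps, periodic spikes, and that a spike belongs to the readout cycle ((n − 1)Tread, nTread]; these boundary conventions and all function names are assumptions for illustration, and the latch and reset delays of Section 2.3 are ignored.

```python
def readout_bits(spike_times_ns: list[int], t_read_ns: int, n_cycles: int) -> list[int]:
    """Binary output of one pixel: cycle n (1-based) reads 1 if a spike fell in ((n-1)*Tread, n*Tread]."""
    bits = [0] * n_cycles
    for s in spike_times_ns:
        n = -(-s // t_read_ns)              # ceiling division for positive integers
        if 1 <= n <= n_cycles:
            bits[n - 1] = 1                 # extra spikes in the same cycle are lost
    return bits


def interspike_intervals(bits: list[int], t_read_ns: int) -> list[int]:
    """Interspike time intervals T between successive 1s on the column bus (ns)."""
    ones = [i for i, b in enumerate(bits) if b]
    return [(b - a) * t_read_ns for a, b in zip(ones, ones[1:])]


def period_from_count(bits: list[int], t_read_ns: int) -> float:
    """Spike-frequency estimate of t: observation window divided by spike count."""
    count = sum(bits)
    return len(bits) * t_read_ns / count if count else float("inf")
```

With t = 37.5 μs (between Tread and 2Tread, as in Figure 6) and 12 readout cycles, the observed T alternates between 25 μs and 50 μs and never equals t, whereas counting the 8 spikes in the 300 μs window recovers t = 37.5 μs exactly, at the cost of a much longer observation.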

Figure 6.

The relationship between the discharging time and interspike time interval.

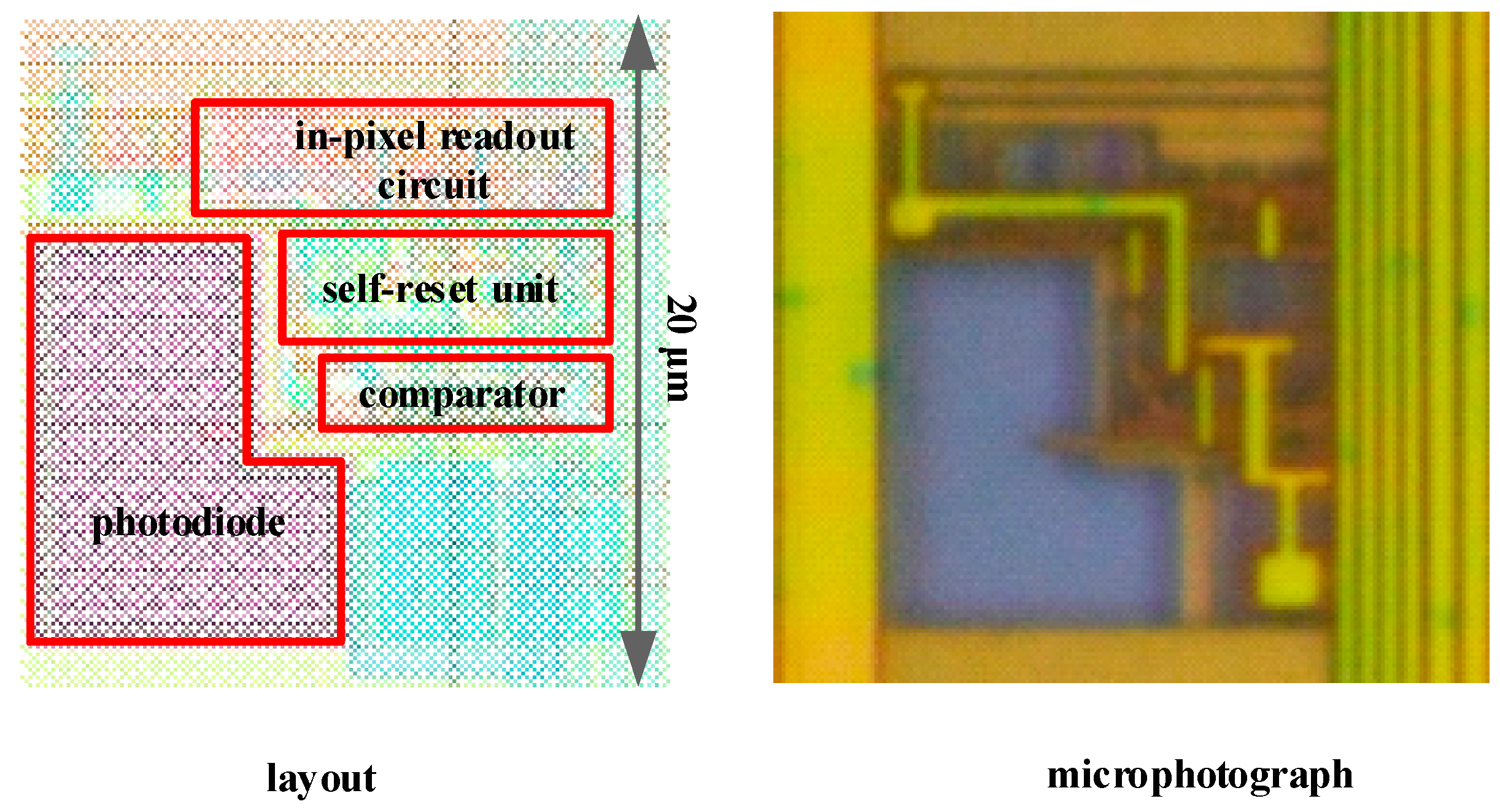

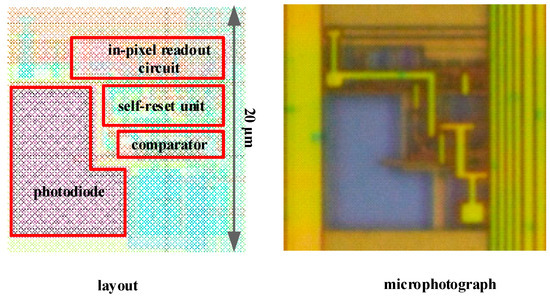

2.5. Pixel Layout

The chip has been implemented in a standard 0.11 μm 1-poly 3-metal process. Figure 7 shows the layout and microphotograph of the pixel with the main circuit parts. The square pixel covers 400 μm² of silicon area and its fill factor is 13.75%.
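The area figures can be cross-checked with a few lines of Python arithmetic. The pitch and the photosensitive area follow directly from the stated numbers; the array dimensions are a derived estimate that covers the pixel array only, excluding peripheral circuits, and the variable names are illustrative.

```python
import math

PIXEL_AREA_UM2 = 400.0   # square pixel area, from the text
FILL_FACTOR = 0.1375     # 13.75 %
ROWS, COLS = 250, 400    # array size

pitch_um = math.sqrt(PIXEL_AREA_UM2)                 # 20 um pixel pitch
photosensitive_um2 = PIXEL_AREA_UM2 * FILL_FACTOR    # about 55 um^2 of photodiode
array_mm = (ROWS * pitch_um / 1000, COLS * pitch_um / 1000)  # pixel array only, in mm
```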

Figure 7.

Layout and microphotograph of the spike-based pixel.

3. Noise Analysis and Suppression Methods

The noise sources of this spike-based image sensor fall into two kinds: spatial noise, mainly caused by device mismatch and dark current non-uniformity, and temporal noise, namely shot noise and thermal noise. This section introduces their sources and their impact on t.

3.1. Analysis of Spatial Noise

3.1.1. Analysis of Photo Response Non-Uniformity

In a physical realization, PRNU is dominated by device mismatch between different pixels. Its main sources are the mismatch of the capacitance at the photodiode node, of the comparator, and of the reset transistor (Mrst) in Figure 3a. Capacitance mismatch introduces a coefficient into (1), which can be expressed as:

$$t' = \frac{(C_{pd} + \Delta C_{pd})(V_{DDpix} - V_{ref})}{I_{ph}} = \lambda t \tag{3}$$

where t’ is the discharging time with capacitance mismatch, ΔCpd is the deviation of the photodiode capacitance from its nominal value Cpd, and λ = 1 + ΔCpd/Cpd is the coefficient introduced by capacitance mismatch.

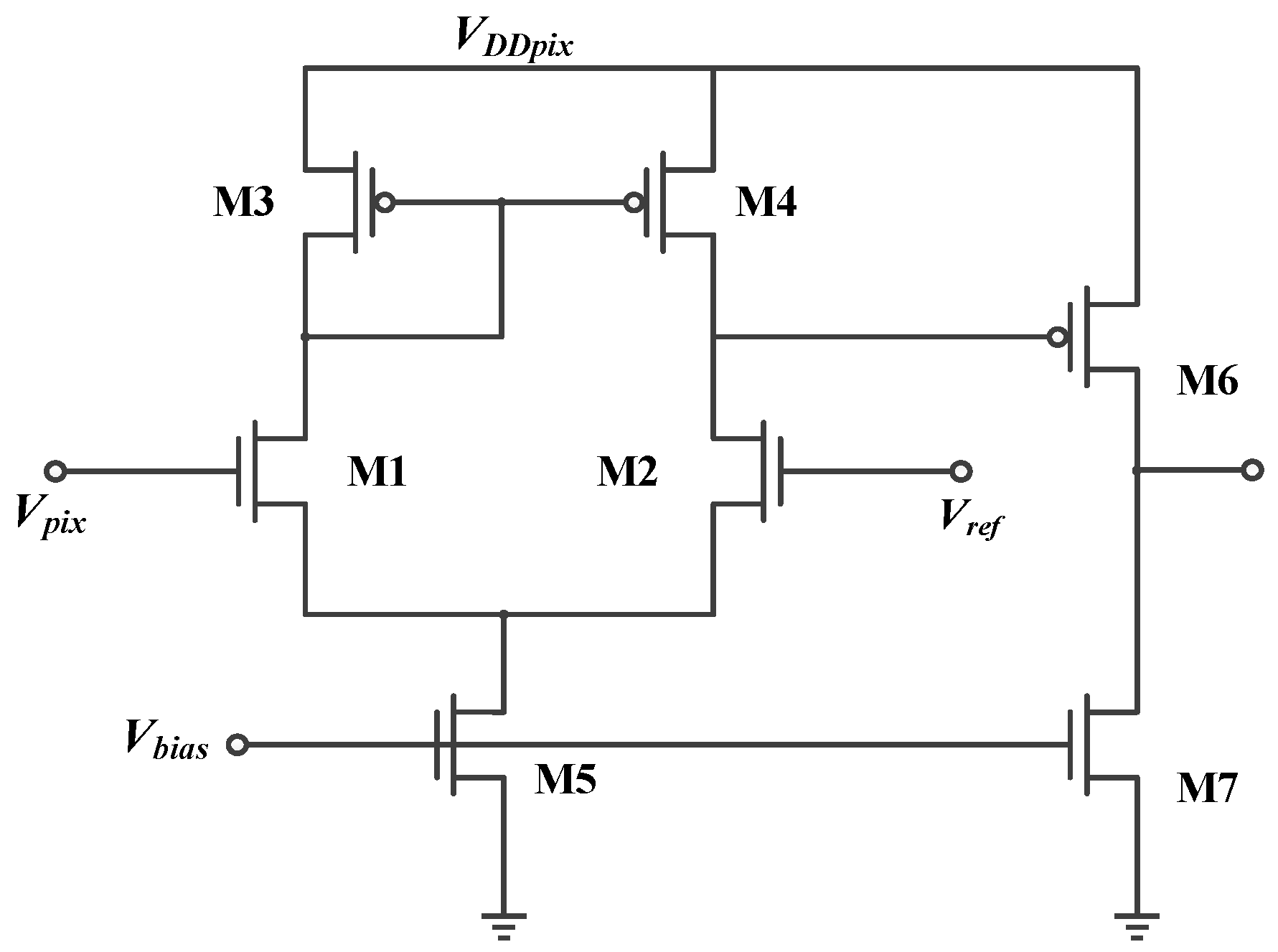

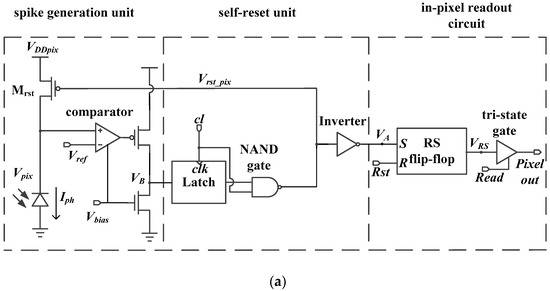

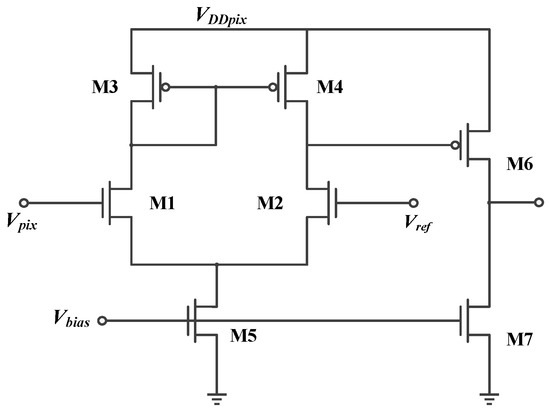

The in-pixel comparator shown in Figure 8 is used to trigger the spike. It must draw a small current to reduce power consumption. Besides, to reduce the noise introduced into the pixel, the transconductance of M1 and M2 must be increased and that of M3 and M4 reduced, which results in M1 and M2 operating in the subthreshold region and M3 and M4 operating in the saturation region. In the subthreshold region, the drain current is given by:

where μ is the electron mobility, Cd is the gate oxide capacitance per unit area, VT is the thermal voltage, and n is the subthreshold slope. Combining (4) with the analysis of noise in [16,17], the offset voltage, VOS, can be expressed as:

where gmi is the transconductance of the ith transistor, and Δ indicates the variation of the corresponding parameter. VOS depends on the mismatch between M1 and M2 and the mismatch between M3 and M4 in Figure 8. A smaller comparator is desirable to reduce the size of the image sensor; however, mismatch becomes more severe as the comparator shrinks, so VOS increases according to (5).

Figure 8.

The structure of the in-pixel comparator.

The mismatch of the reset transistor causes a deviation of the reset voltage at the photodiode node, which also introduces an offset voltage. The total offset voltage, VOS,tot, comprising the comparator mismatch and the reset transistor mismatch, introduces a coefficient into (1), which can be expressed as:

$$t'' = \frac{C_{pd}\,(V_{DDpix} - V_{ref} + V_{OS,tot})}{I_{ph}} = \phi t \tag{6}$$

where ϕ = 1 + VOS,tot/(VDDpix − Vref) is the coefficient introduced by VOS,tot, and t″ is the discharging time with VOS,tot.

In summary, considering the effects of both capacitance mismatch and offset voltage, this paper proposes a coefficient, θ, to represent their total effect in (1):

$$t_{mis} = \frac{(C_{pd} + \Delta C_{pd})(V_{DDpix} - V_{ref} + V_{OS,tot})}{I_{ph}} = \lambda\phi\, t = \theta t \tag{7}$$

where tmis is the discharging time with both the capacitance mismatch and VOS,tot. The coefficient θ makes it possible to suppress the effect of PRNU.

3.1.2. Analysis of Non-Uniformity of Dark Current

Variation among the photodiodes also leads to non-uniformity of the dark current, Idark, which is particularly prominent at low light intensity. It introduces a coefficient that depends on the light intensity:

$$t''' = \frac{C_{pd}\,(V_{DDpix} - V_{ref})}{I_{ph} + I_{dark}} = \frac{t}{\Psi_{iph}} \tag{8}$$

where t‴ is the discharging time including the dark current, Idark is the dark current, and Ψiph = 1 + Idark/Iph is the coefficient introduced by the dark current.
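The light dependence of Ψiph is what makes dark current a low-light problem. The Python sketch below evaluates Ψiph = 1 + Idark/Iph for a 1 pA dark current, a value chosen from the 0.74 to 1.82 pA range used in the simulations of Section 4.1; the two photocurrents and the function names are illustrative.

```python
def psi(i_ph: float, i_dark: float) -> float:
    """Dark-current coefficient of Eq. (8): Psi = 1 + Idark / Iph."""
    return 1.0 + i_dark / i_ph


def relative_time_error(i_ph: float, i_dark: float) -> float:
    """Fractional shortening of the discharging time: (t - t''') / t = 1 - 1/Psi."""
    return 1.0 - 1.0 / psi(i_ph, i_dark)


I_DARK = 1e-12                                   # 1 pA, illustrative
bright = relative_time_error(1e-9, I_DARK)       # about 0.1 % at 1 nA
dark = relative_time_error(10e-12, I_DARK)       # about 9.1 % at 10 pA
```

The same dark current that is negligible at 1 nA shortens the discharging time by roughly 9% at 10 pA, which is why the correction matters most in dark regions.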

3.2. Analysis of Temporal Noise

Shot noise is caused by the random generation of carriers in the PN junction. It depends on the photocurrent, dark current, integration time, and capacitance at the PD node. The randomly generated electron–hole pairs caused by the photocurrent result in photon shot noise, while thermal generation in the depletion region causes the shot noise of the dark current. It can be expressed as:

where K is the Boltzmann constant and Ta is the absolute temperature. Combining (9) with (1) and the influence of the dark current, the shot noise can be expressed as:

It can be seen from (10) that, when VDDpix is constant, the effect of the shot noise is independent of Iph, Idark, and t, and depends only on Vref. The instantaneous values of the shot noise and the thermal noise are unpredictable. However, their influence can be eliminated by time integration, at the cost of limiting the speed of the image sensor.
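The trade-off between temporal noise and speed can be illustrated with a Monte Carlo sketch in Python. It assumes, purely for illustration, that temporal noise adds independent Gaussian jitter to each discharging time; the mean time, jitter, and function names are hypothetical. Averaging n intervals shrinks the spread roughly as 1/√n, but requires an observation n times as long.

```python
import random
import statistics


def jittered_times(t_mean: float, sigma: float, n: int, rng: random.Random) -> list[float]:
    """n discharging times with additive Gaussian temporal noise (assumed model)."""
    return [rng.gauss(t_mean, sigma) for _ in range(n)]


def estimate_spread(t_mean: float, sigma: float, n_avg: int,
                    trials: int = 2000, seed: int = 0) -> float:
    """Std. dev. of the average of n_avg noisy times, over many repeated trials."""
    rng = random.Random(seed)
    means = [statistics.fmean(jittered_times(t_mean, sigma, n_avg, rng))
             for _ in range(trials)]
    return statistics.stdev(means)
```

With t = 40 μs and a 2 μs jitter (both illustrative), a single interval scatters by about 2 μs, while the average of 16 intervals scatters by about 0.5 μs.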

3.3. Methods of Suppressing the Non-Uniformity

3.3.1. Method of Suppressing the Photo Response Non-Uniformity

Because the image sensor operates continuously, the effect of mismatch accumulates over time and can be measured by time integration, so a measured coefficient matrix can be used to suppress the PRNU. The procedure is as follows: the chip is irradiated with uniform light producing a photocurrent Iph1, and the number of spikes of each pixel, Ni,j, is counted within a fixed time, Ttotal (the influence of temporal noise is eliminated by this long-term counting). The spike count of one randomly chosen pixel, which is regarded as having no mismatch, is taken as the reference value, N, and the coefficient matrix, θi,j, is obtained by dividing the reference value by the spike count of each pixel.

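For the reference pixel, the relationship between N and Ttotal can be written as:

$$N = \frac{I_{ph1}\,T_{total}}{C_{pdr}\,(V_{DDpix} - V_{refr})} \tag{11}$$

where Cpdr is the capacitance at the photodiode node of the reference pixel, and VDDpix − Vrefr is the voltage swing that the reference pixel must discharge. For the other pixels, due to device mismatch (strictly, the mismatch between each pixel and the reference pixel), we similarly obtain:

$$N_{i,j} = \frac{I_{ph1}\,T_{total}}{C_{pd,i,j}\,(V_{DDpix} - V_{refr,i,j})} \tag{12}$$

where Cpd,i,j is the capacitance of the pixel at (i,j), and VDDpix − Vrefr,i,j is the voltage swing that the pixel at (i,j) must discharge. Dividing (11) by (12) gives the coefficient matrix:

$$\theta_{i,j} = \frac{N}{N_{i,j}} = \frac{C_{pd,i,j}\,(V_{DDpix} - V_{refr,i,j})}{C_{pdr}\,(V_{DDpix} - V_{refr})} \tag{13}$$

In this way, the PRNU can be eliminated by dividing the discharging time of each pixel by θi,j, or equivalently by multiplying its spike count by θi,j.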
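The calibration of (11) to (13) reduces to element-wise arithmetic on spike-count matrices. The Python sketch below uses plain nested lists; the function names are hypothetical, and the reference pixel defaults to the first row and first column, as in the camera experiment of Section 4.2.

```python
def prnu_coefficients(flat_counts, ref=(0, 0)):
    """theta[i][j] = N / N[i][j] from a flat-field spike-count matrix (Eq. 13)."""
    n_ref = flat_counts[ref[0]][ref[1]]
    theta = []
    for row in flat_counts:
        if any(n <= 0 for n in row):
            raise ValueError("every pixel needs at least one spike in the flat field")
        theta.append([n_ref / n for n in row])
    return theta


def correct_counts(counts, theta):
    """Multiply each spike count by theta[i][j] (same as dividing t_mis by theta)."""
    return [[n * th for n, th in zip(row, th_row)]
            for row, th_row in zip(counts, theta)]
```

For example, flat-field counts of [[100, 95], [105, 100]] give θ values that map a later uniform scene recorded as [[200, 190], [210, 200]] back to 200 counts at every pixel.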

3.3.2. Method of Suppressing the Non-Uniformity of Dark Current

The dark current depends only weakly on the photodiode voltage in this image sensor, so for ease of analysis Idark is assumed to be constant. Under no-light conditions, the discharging time, tdark, can be expressed as:

$$t_{dark} = \frac{C_{pd}\,(V_{DDpix} - V_{ref})}{I_{dark}} \tag{14}$$

Dividing (14) by (8) gives the ratio of the dark current to the photocurrent:

$$\frac{I_{dark}}{I_{ph}} = \frac{t'''}{t_{dark} - t'''} \tag{15}$$

Then Ψiph = tdark/(tdark − t‴), and the relationship between t and t‴ can be expressed as:

$$t = \Psi_{iph}\, t''' = \frac{t_{dark}\, t'''}{t_{dark} - t'''} \tag{16}$$

According to (16), the discharging time can be corrected by measuring the matrix of dark-current time intervals, tdark,i,j.
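Eq. (16) can be applied per pixel once tdark,i,j is known. The Python sketch below uses hypothetical function names; the values in the example are chosen in arbitrary units so that Iph = 4·Idark for one pixel and Iph = 8·Idark for another. Note that (16) is equivalent to 1/t = 1/t‴ − 1/tdark, so in the spike-count domain the same correction amounts to subtracting the dark spike rate.

```python
def correct_dark(t_meas: float, t_dark: float) -> float:
    """Eq. (16): t = t_dark * t''' / (t_dark - t''') for one pixel."""
    if not 0 < t_meas < t_dark:
        raise ValueError("need 0 < t''' < t_dark")
    return t_dark * t_meas / (t_dark - t_meas)


def correct_dark_matrix(t_meas, t_dark):
    """Apply Eq. (16) element-wise with the measured t_dark[i][j] matrix."""
    return [[correct_dark(tm, td) for tm, td in zip(r_m, r_d)]
            for r_m, r_d in zip(t_meas, t_dark)]
```

With a charge of 1 unit, Iph = 4, and Idark = 1 (or 0.5 for the second pixel), the measured times are 0.2 and 1/4.5, the dark times are 1.0 and 2.0, and both pixels are corrected to the same ideal t = 0.25.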

4. Experimental Results

4.1. Verification Based on Behavior-Level Model

Following the expressions and working principles in this paper, a behavior-level model of the image sensor with a size of 250 (V) × 400 (H) is established; the ideal model is described in our previous work [18]. Table 1 gives the parameters of the model. The simulated exposure time of the whole experiment is set to 7000 μs. Non-ideal factors are then added to this ideal model: the mismatch of Cpd is ±5%, the influence of VOS,tot introduced by the mismatch of the comparator and the reset transistor is ±5%, and the dark current ranges from 0.74 pA to 1.82 pA. These non-ideal factor matrices are randomly distributed across the array.
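A Python sketch of how such non-ideal matrices might be generated and applied is given below. The text states only the ranges; the uniform distributions, the fixed seed, the combination t = λϕ·Cpd(VDDpix − Vref)/(Iph + Idark) of (7) and (8), and all names are assumptions.

```python
import random


def nonideal_maps(rows=250, cols=400, seed=0):
    """Per-pixel lambda (Cpd), phi (VOS,tot) and Idark maps, drawn uniformly."""
    rng = random.Random(seed)

    def draw(lo, hi):
        return [[rng.uniform(lo, hi) for _ in range(cols)] for _ in range(rows)]

    lam = draw(0.95, 1.05)              # Cpd mismatch of +/-5 %
    phi = draw(0.95, 1.05)              # VOS,tot influence of +/-5 %
    i_dark = draw(0.74e-12, 1.82e-12)   # dark current range in amperes
    return lam, phi, i_dark


def nonideal_time(i_ph, lam, phi, i_dark, q_nominal):
    """Discharging time with mismatch and dark current; q_nominal = Cpd*(VDDpix - Vref)."""
    return lam * phi * q_nominal / (i_ph + i_dark)
```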

Table 1.

Model parameter list.

4.1.1. Evaluating Indexes

The objective evaluation indexes include the Peak Signal to Noise Ratio (PSNR) and Structural Similarity Index (SSIM). These two indexes are used to evaluate the effectiveness of the calibration algorithm. The indexes are given by:

where Framesize is the size of the picture, and R and F are the gray values of the reference picture and the evaluated picture, respectively.

where x and y are the pictures to be compared; γ is the average value of a picture; σ is the standard deviation of a picture, which is given in (21); L is the range of the gray value, which is 255; and k1 and k2 are constants, equal to 0.01 and 0.03, respectively.

where xi is the gray value of each pixel and x̄ is the average gray value of all the pixels.
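The three indexes can be computed with the Python sketch below. Two details are assumptions: the standard deviation uses the population normalization (division by the number of pixels), and SSIM is evaluated once over the whole image, with the covariance term of the standard SSIM definition, rather than over local windows. The function names are illustrative.

```python
import math


def _flatten(img):
    return [float(v) for row in img for v in row]


def psnr(ref, test, peak=255.0):
    """PSNR in dB between a reference image R and an evaluated image F."""
    r, f = _flatten(ref), _flatten(test)
    mse = sum((a - b) ** 2 for a, b in zip(r, f)) / len(r)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


def std(img):
    """Standard deviation of the gray values (population form, assumed)."""
    x = _flatten(img)
    mean = sum(x) / len(x)
    return math.sqrt(sum((v - mean) ** 2 for v in x) / len(x))


def ssim_global(x_img, y_img, L=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM over the whole image, c1 = (k1*L)^2, c2 = (k2*L)^2."""
    x, y = _flatten(x_img), _flatten(y_img)
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    c1, c2 = (k1 * L) ** 2, (k2 * L) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
```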

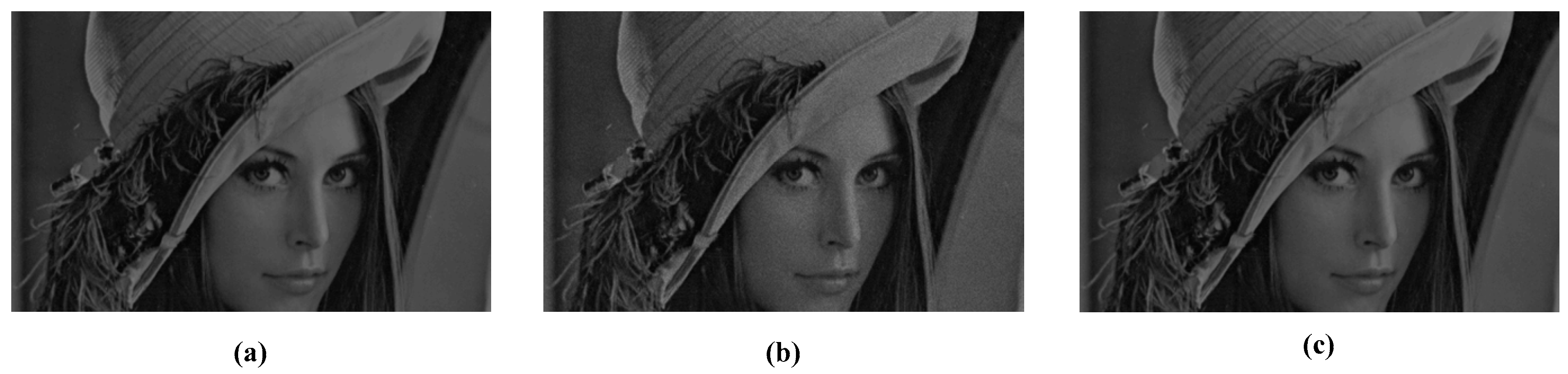

4.1.2. Simulation Results Based on the Spike Frequency

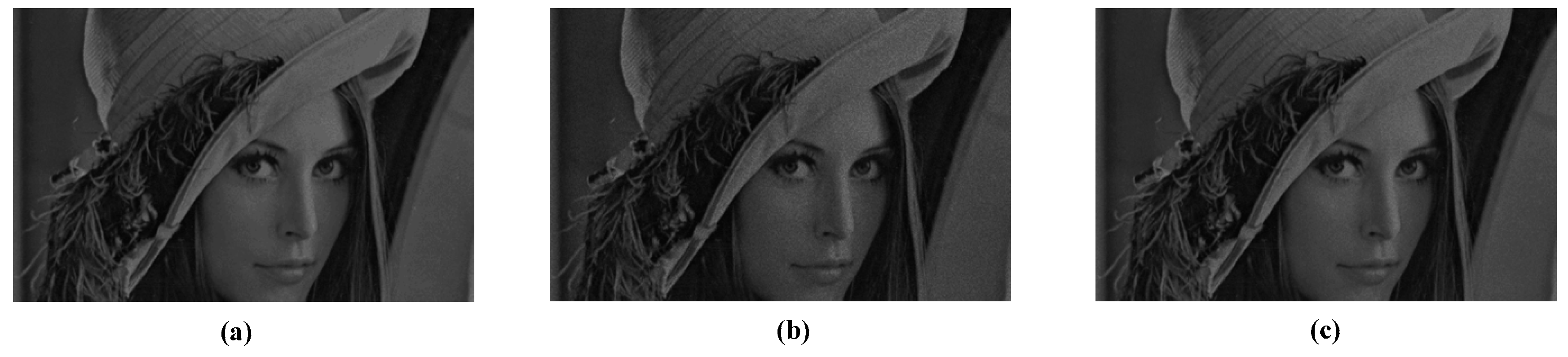

In this paper, the Lena image is used as the input of the sensor. To reduce the effect of the synchronous readout on the discharging time, the image is restored from the spike frequency: the outputs of 255 readout cycles are summed to give the gray value of each pixel. Figure 9 shows the images reconstructed by this method. Figure 9a shows the image without non-ideal factors, Figure 9b the image with non-ideal factors, and Figure 9c the image after suppression by the proposed calibration algorithm. Table 2 gives the results of the evaluation indexes, which show that the non-ideal factors are suppressed.
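The spike-frequency reconstruction amounts to a per-pixel sum over binary frames. Because each pixel contributes at most one “1” per readout cycle, summing 255 cycles yields a gray value in the 0 to 255 range. The Python sketch below uses nested lists of 0/1 values and a hypothetical function name.

```python
def gray_from_spike_frequency(frames):
    """Sum a stack of binary readout frames pixel by pixel (255 frames -> 0..255)."""
    rows, cols = len(frames[0]), len(frames[0][0])
    gray = [[0] * cols for _ in range(rows)]
    for frame in frames:
        for i, row in enumerate(frame):
            for j, bit in enumerate(row):
                gray[i][j] += bit
    return gray
```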

Figure 9.

Comparison of images without and with suppression. (a) Original image. (b) The image with non-ideal factors. (c) The image suppressed by the calibration algorithm.

Table 2.

Comparison of objective evaluation indexes of the images in Figure 9.

4.1.3. Simulation Results Based on the Interspike Time Interval

In high-speed applications, restoring images from the spike frequency limits the speed of the image sensor, so it is desirable to restore images from the interspike time interval, using the method in [17]. Images reconstructed by the interspike time interval method are shown in Figure 10, and Table 3 gives the results of the evaluation indexes. According to the two indexes, the calibration effectively suppresses the non-ideal factors.
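The mapping from T to a gray value is not given here (the method is in [17]), so the Python sketch below uses a hypothetical mapping, gray = 255·Tread/T, chosen only so that T = Tread matches the full scale of the 255-cycle spike-frequency image.

```python
def gray_from_interval(t_interval_ns: int, t_read_ns: int = 25_000,
                       full_scale: int = 255) -> int:
    """Hypothetical mapping: gray is inversely proportional to T, T = Tread -> full scale."""
    if t_interval_ns <= 0:
        raise ValueError("interval must be positive")
    return min(full_scale, round(full_scale * t_read_ns / t_interval_ns))
```

Under this assumed mapping, a pixel with t = 37.5 μs, whose T alternates between Tread and 2Tread, flickers between 255 and 128 instead of the value of 170 that t itself would give. This is the time mismatch of Figure 6 as it appears in the image.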

Figure 10.

Comparison of images without and with suppression. (a) Original image. (b) The image with non-ideal factors. (c) The image suppressed by the calibration algorithm.

Table 3.

Comparison of objective evaluation indexes of the images in Figure 10.

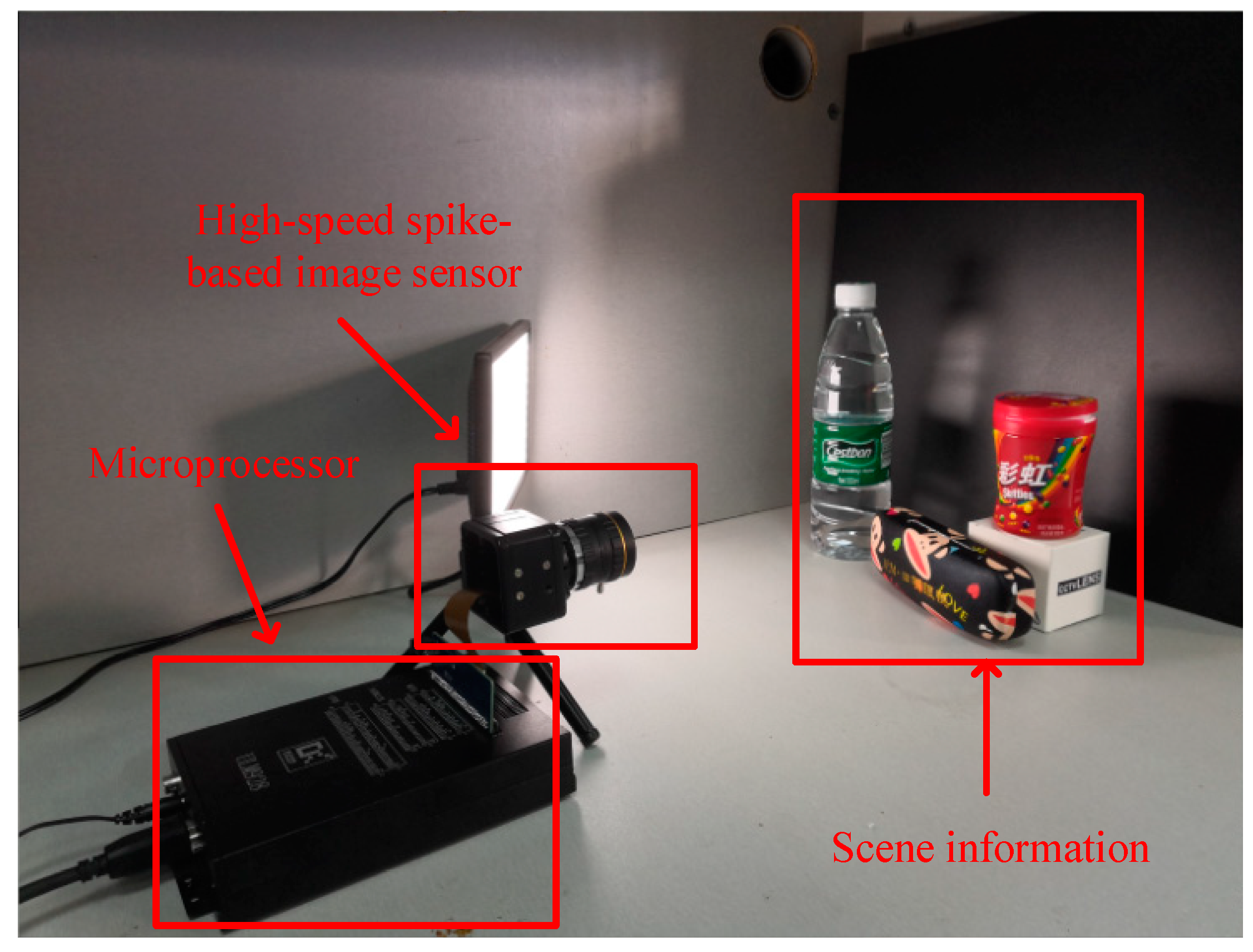

4.2. Verification Based on Camera

To verify the effectiveness of the proposed methods, a spike-based Complementary Metal Oxide Semiconductor (CMOS) imaging system is built. As shown in Figure 11, the system consists of a high-speed spike-based image sensor, which collects the scene information, and a microprocessor, which receives the data and transmits them to the computer. Table 4 summarizes the imager’s main specifications and key measurement results.

Figure 11.

Photograph of the spike-based imaging system.

Table 4.

Summary sensor characteristics.

To obtain the coefficient matrix, θi,j, introduced by device mismatch in (13), the spikes are counted for 40 ms under a light intensity of 400 lux. The pixel in the first row and first column is taken as the reference pixel, and the matrix tdark,i,j is obtained by long-term counting under no-light conditions. This completes the preparation for non-uniformity correction.
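The calibration window fixes how finely θi,j can be resolved. At most one spike is recorded per pixel per 25 μs readout cycle, so a 40 ms window holds at most 1600 counts, and a pixel with N counts resolves θi,j to roughly one part in N. The arithmetic is sketched below in Python; the names are illustrative.

```python
T_READ_NS = 25_000           # readout cycle (Section 2.2)
WINDOW_NS = 40_000_000       # 40 ms calibration window


def max_counts(window_ns: int = WINDOW_NS, t_read_ns: int = T_READ_NS) -> int:
    """Upper bound on the spike count: one '1' per readout cycle."""
    return window_ns // t_read_ns


def count_resolution(n_counts: int) -> float:
    """Relative step of a count-based estimate: one count out of n."""
    if n_counts <= 0:
        raise ValueError("need at least one count")
    return 1.0 / n_counts
```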

4.2.1. Evaluation Indexes

In this paper, the experimental results are evaluated by subjective and objective indexes. The subjective indexes are the visual quality of the image and the mean curve [19]; the objective index is the standard deviation.

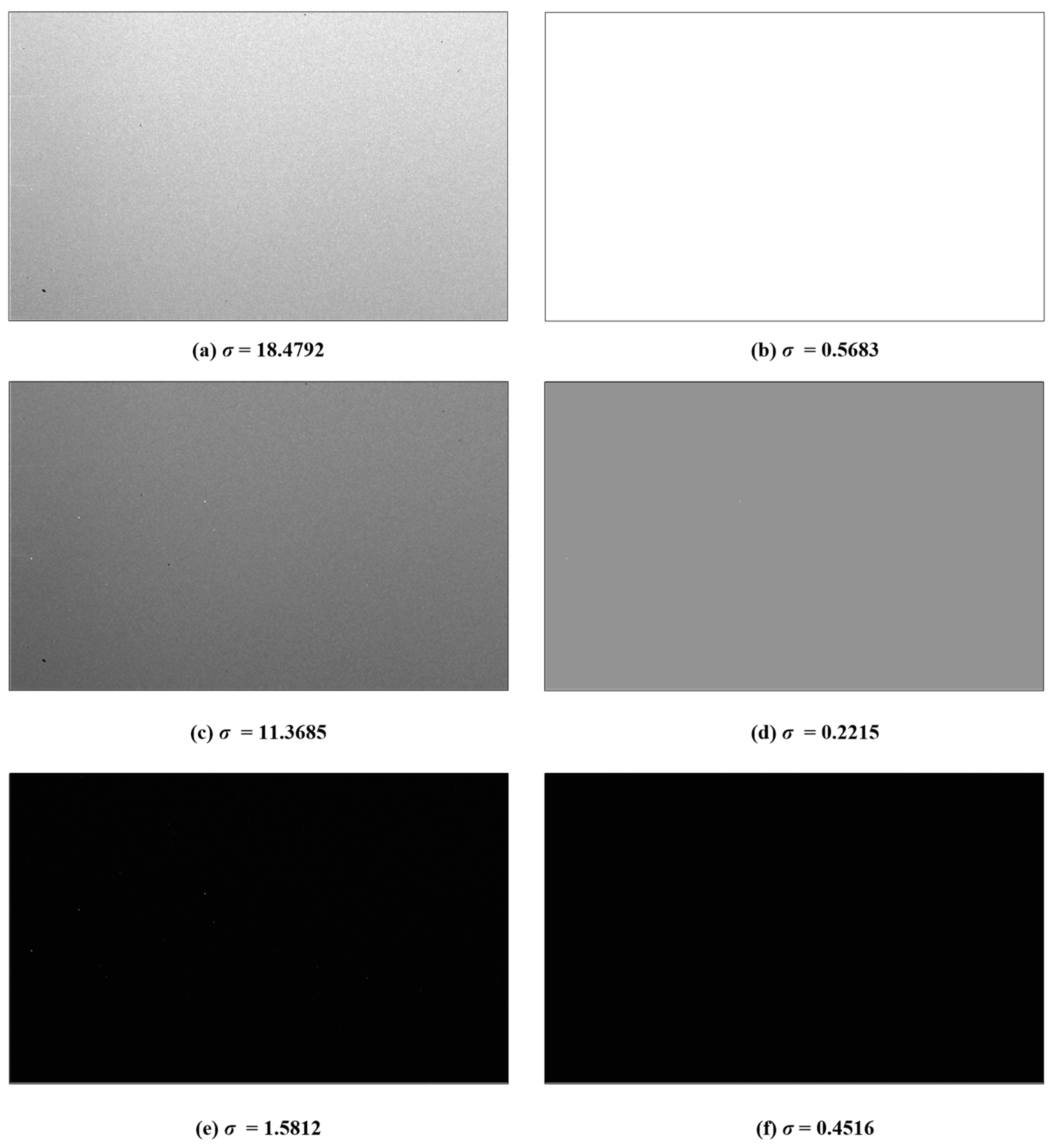

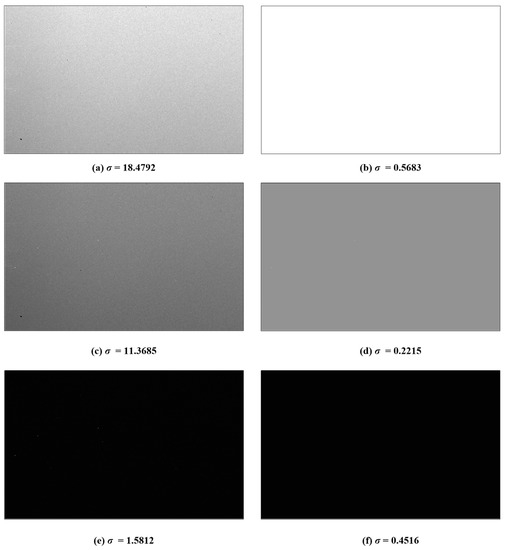

4.2.2. Non-uniformity Correction for Images Based on Spike Frequency

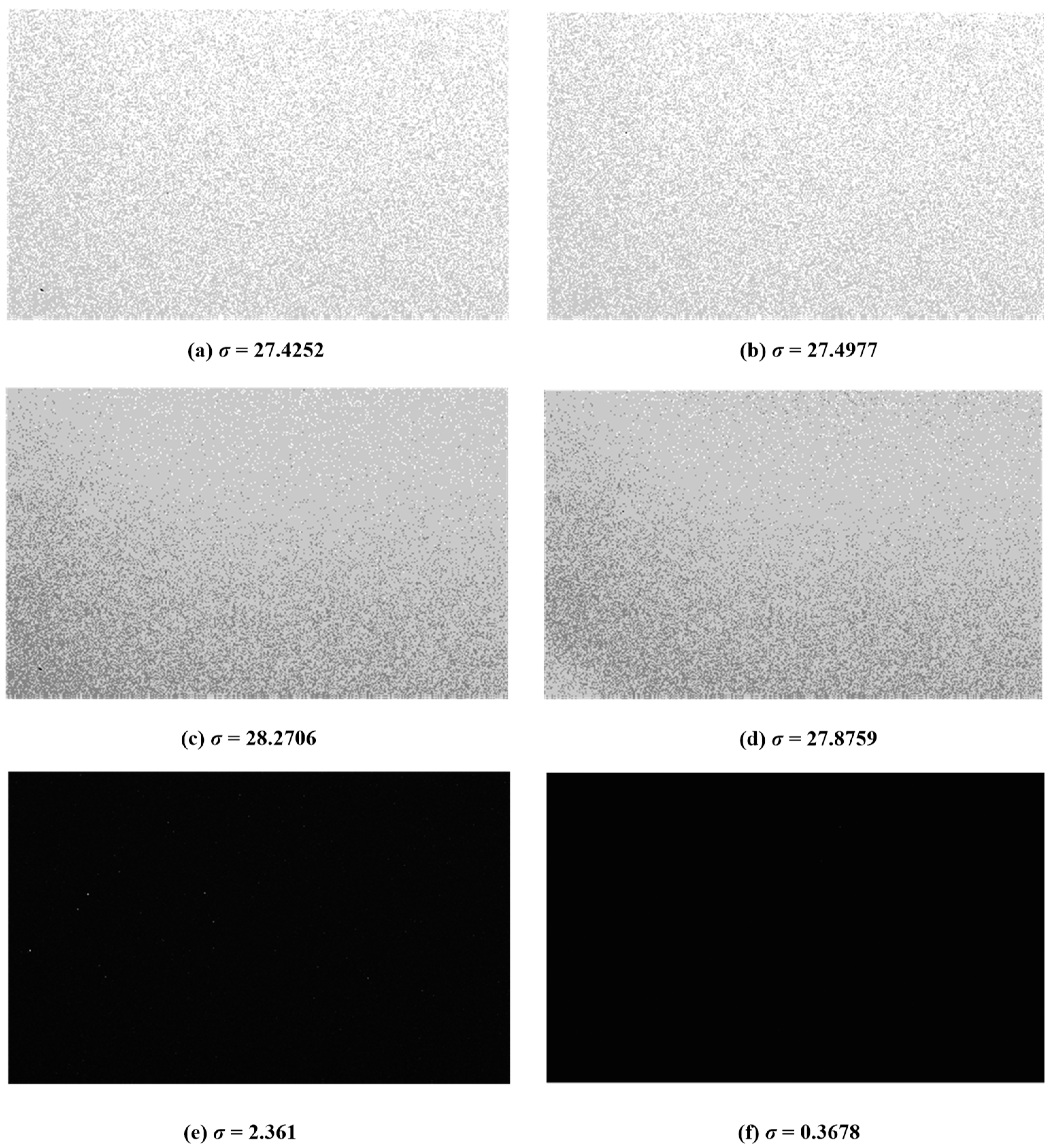

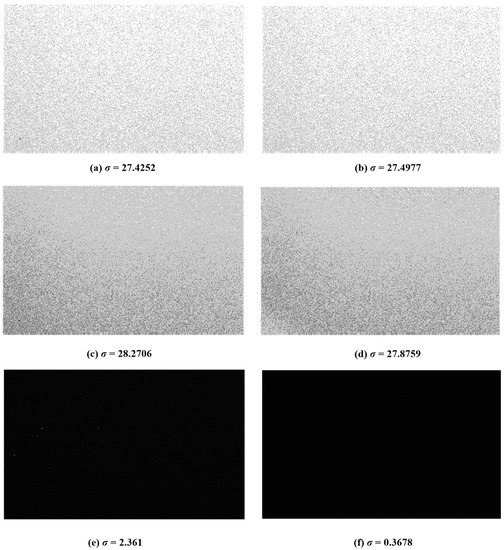

Figure 12 shows the original images and the images suppressed by the proposed methods. The images in Figure 12a,c,e are captured at a resolution of 250 (V) × 400 (H) under different levels of uniform illumination. The standard deviation, σ, of each image is used to evaluate the non-uniformity under uniform illumination. The results show that the higher the light intensity, the better the suppression.

Figure 12.

The original images are captured under the (a) high, (c) moderate, and (e) low uniform light intensity. The suppressed results are given corresponding to (b) high, (d) moderate, and (f) low light intensity, respectively. The standard deviation of each image is shown at the bottom.

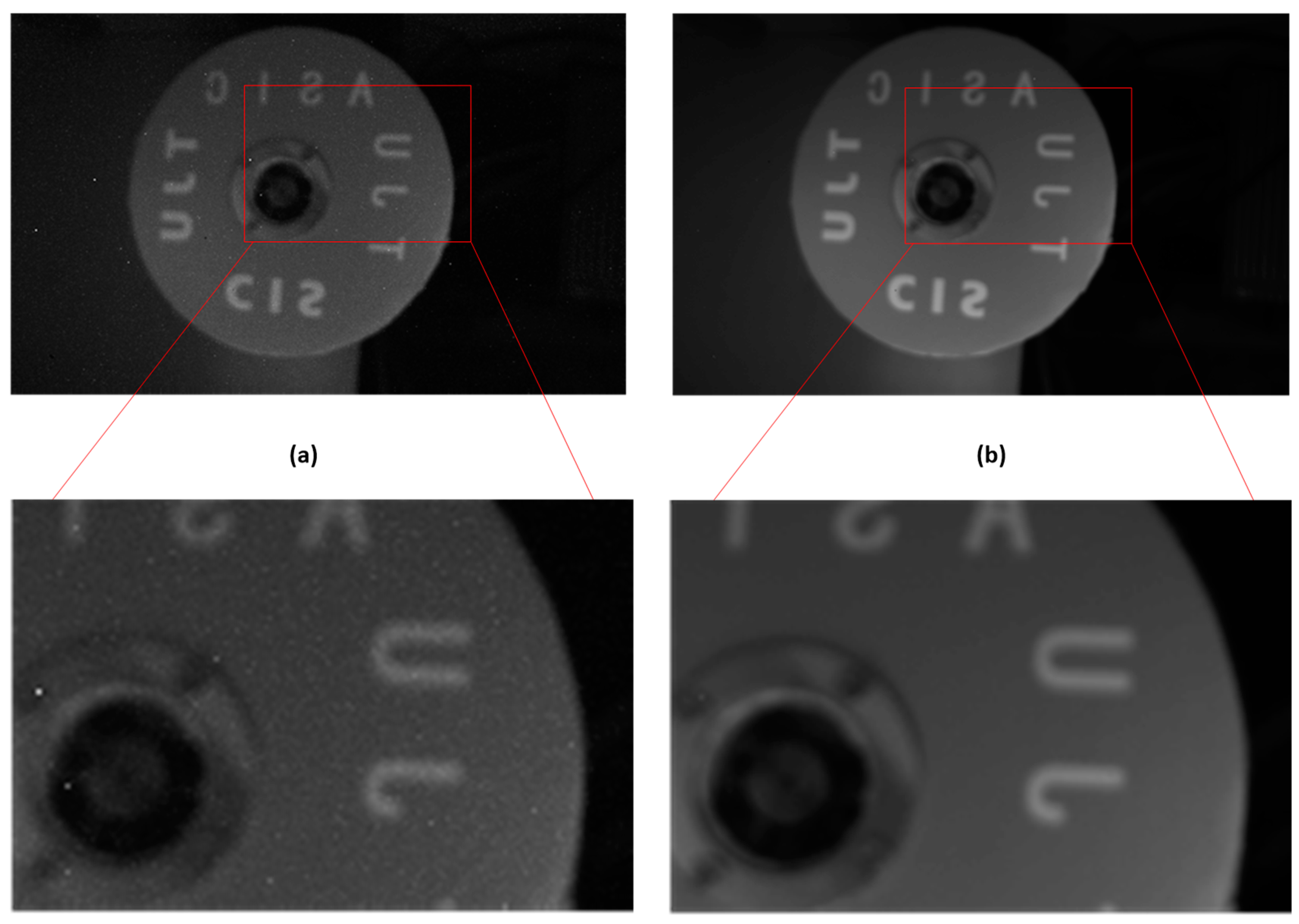

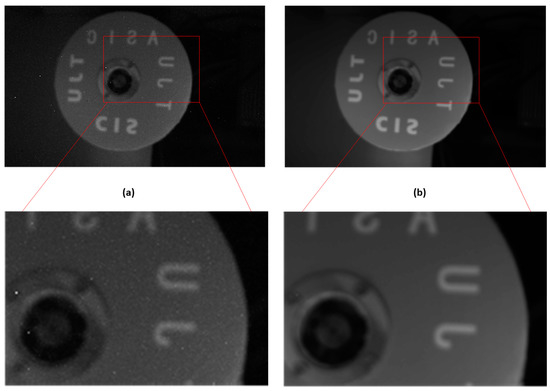

To verify the applicability of the calibration algorithm across light intensities, an image of a turntable was captured. Figure 13a shows the image recovered from the spike frequency, and Figure 13b shows the suppressed image. As these two images show, the influences of PRNU and the dark current are effectively suppressed and the image becomes much smoother.

Figure 13.

The original scene graph captured by the imager is shown in (a), and (b) gives the suppressed results.

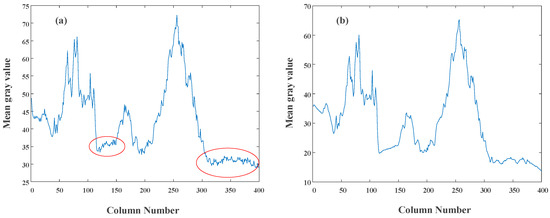

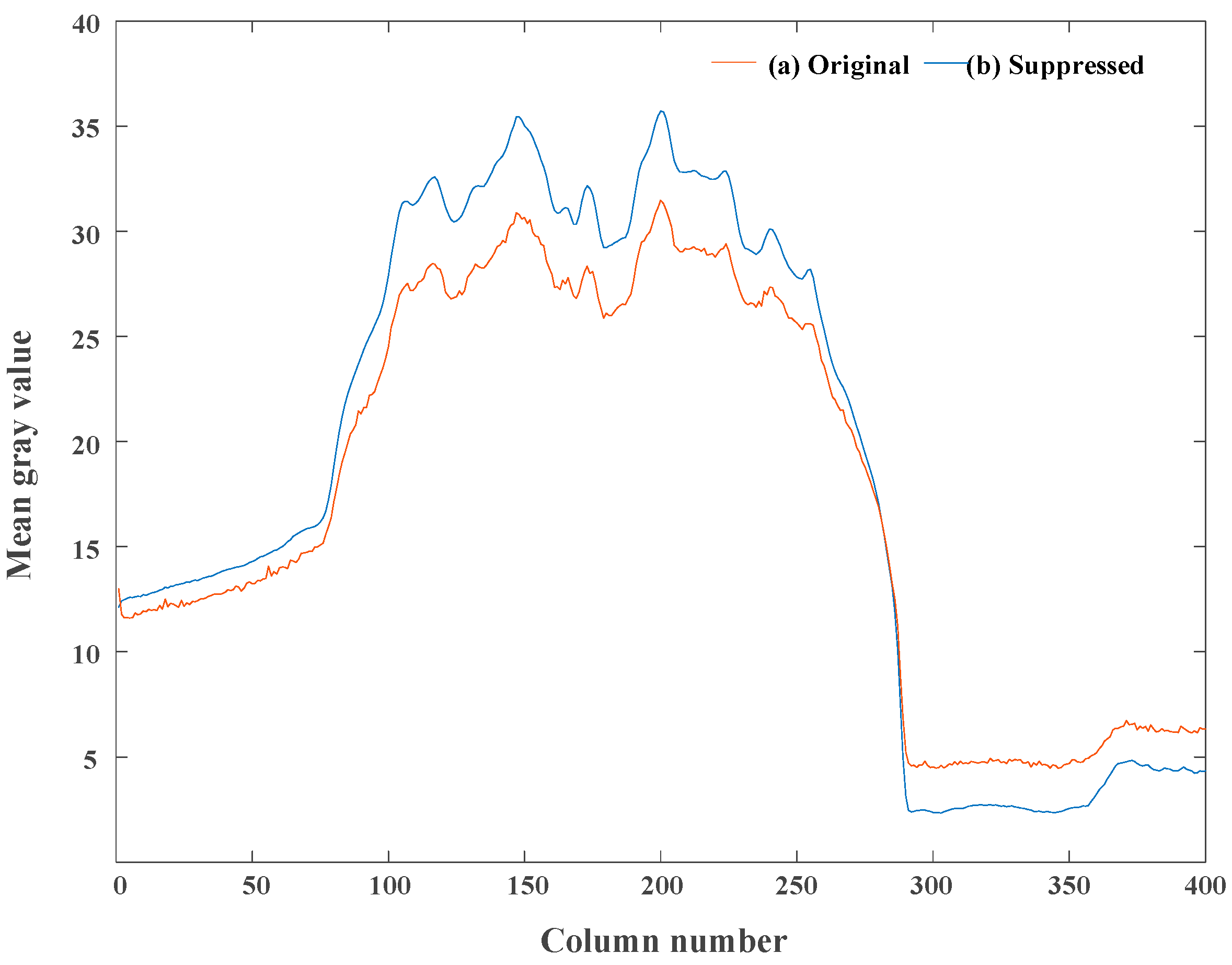

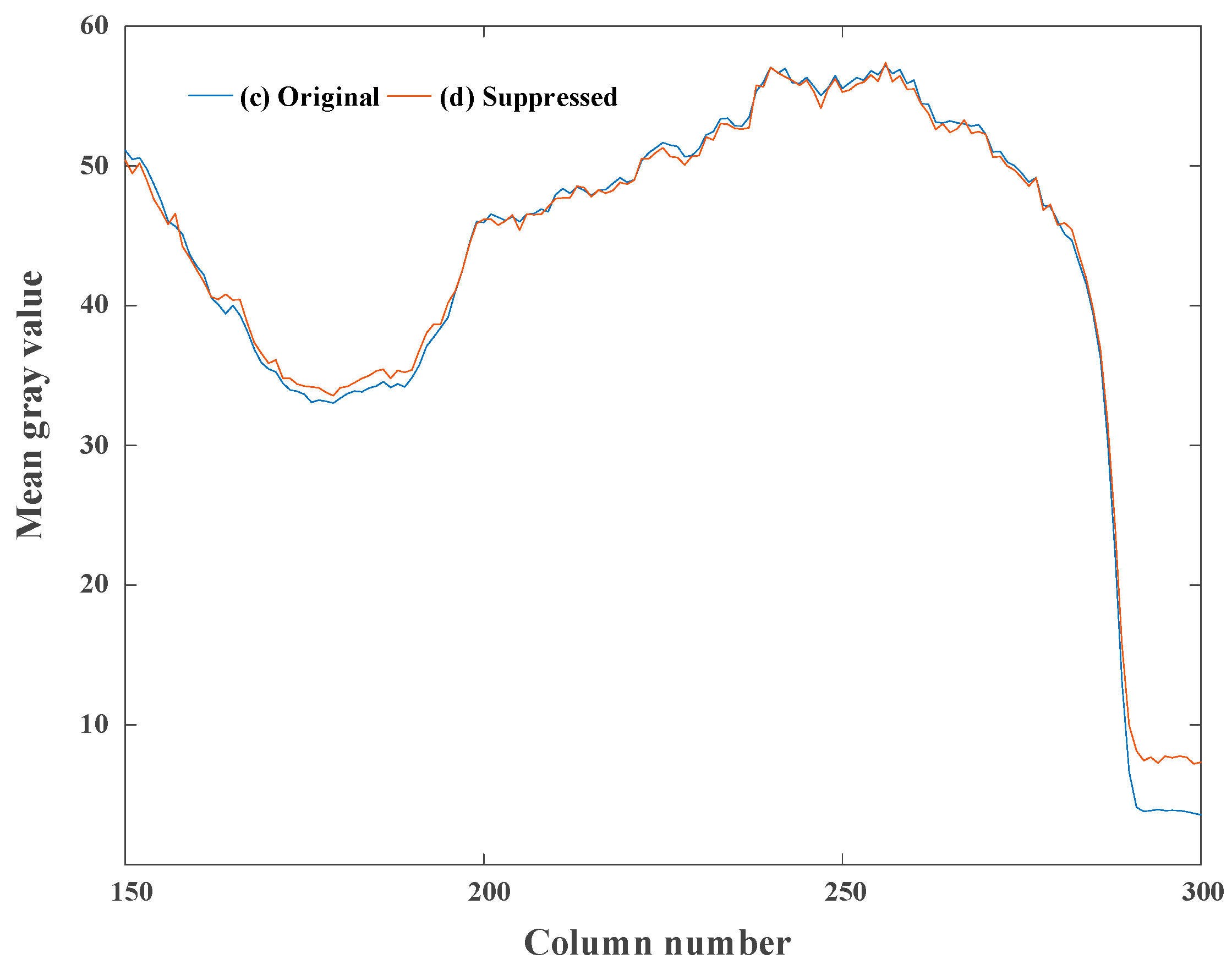

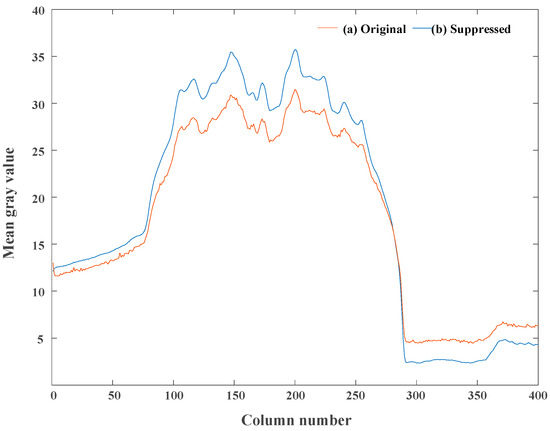

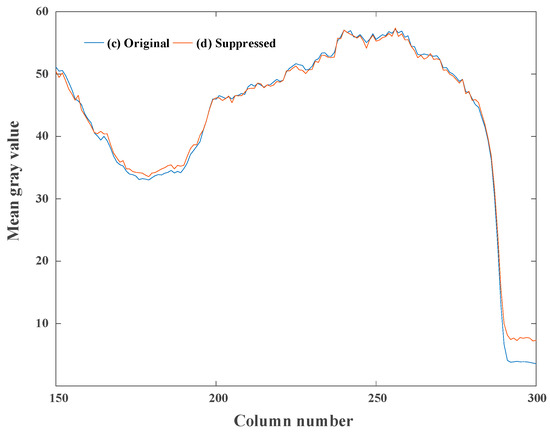

The comparison of the column-mean curve of the image in Figure 13 is shown in Figure 14, where the original curve corresponds to the original image and the suppressed curve corresponds to the image suppressed by the proposed calibration algorithm. After correcting the non-ideal factors, the curve becomes smooth. Besides, the form and trend of the suppressed curve are consistent with the original curve, which demonstrates that the proposed correction algorithm can suppress the non-ideal factors while preserving the original information of the image.
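A column-mean curve averages each column of the image, so column-to-column non-uniformity shows up as ripple in the curve. The Python sketch below computes the curve; the `roughness` score is a hypothetical helper added for illustration, since the text judges smoothness visually.

```python
def column_mean_curve(img):
    """Mean gray value of each column of a 2-D image given as nested lists."""
    rows = len(img)
    return [sum(row[j] for row in img) / rows for j in range(len(img[0]))]


def roughness(curve):
    """Mean absolute difference between neighbouring columns (hypothetical metric)."""
    return sum(abs(b - a) for a, b in zip(curve, curve[1:])) / (len(curve) - 1)
```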

Figure 14.

The comparison of column-mean curves of the test images in Figure 13. (a) Original curve and (b) suppressed curve.

4.2.3. Non-Uniformity Correction for Images Based on Interspike Time Interval

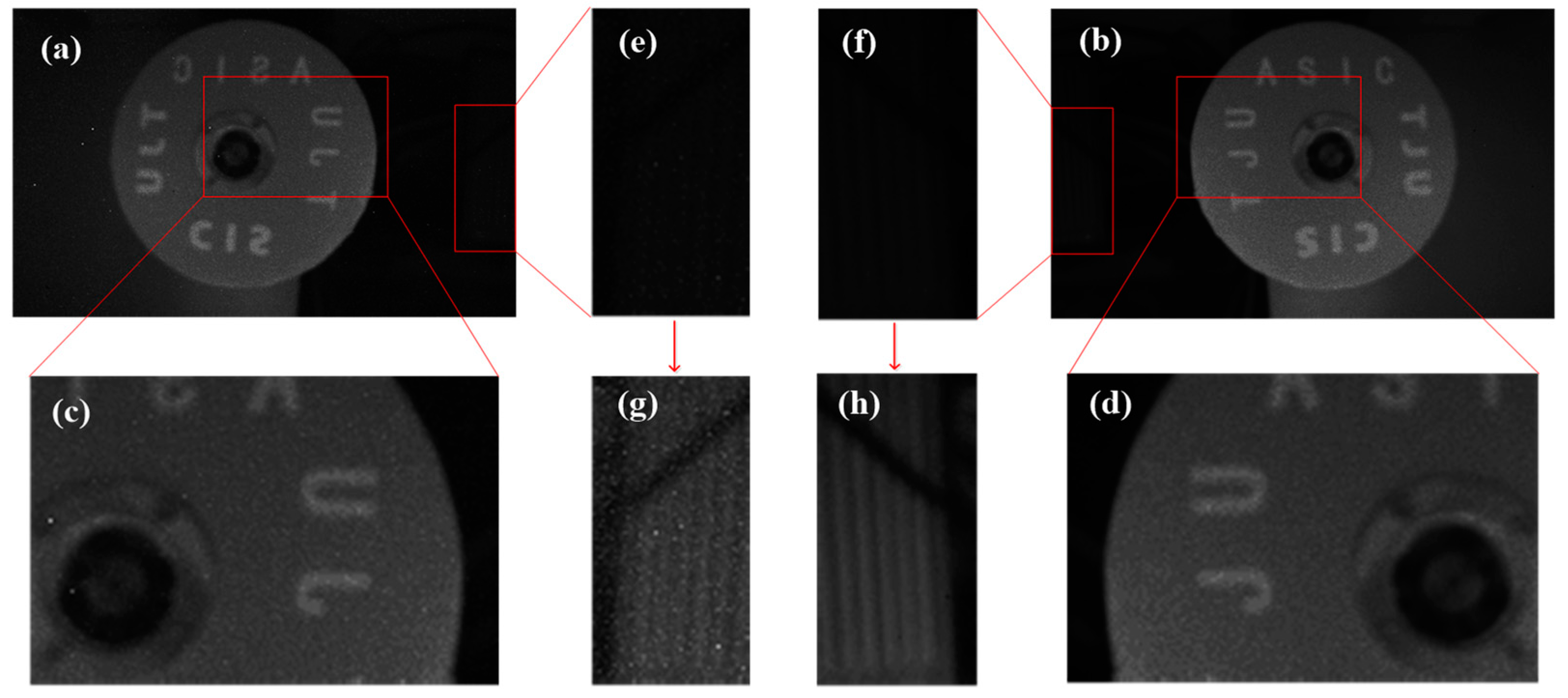

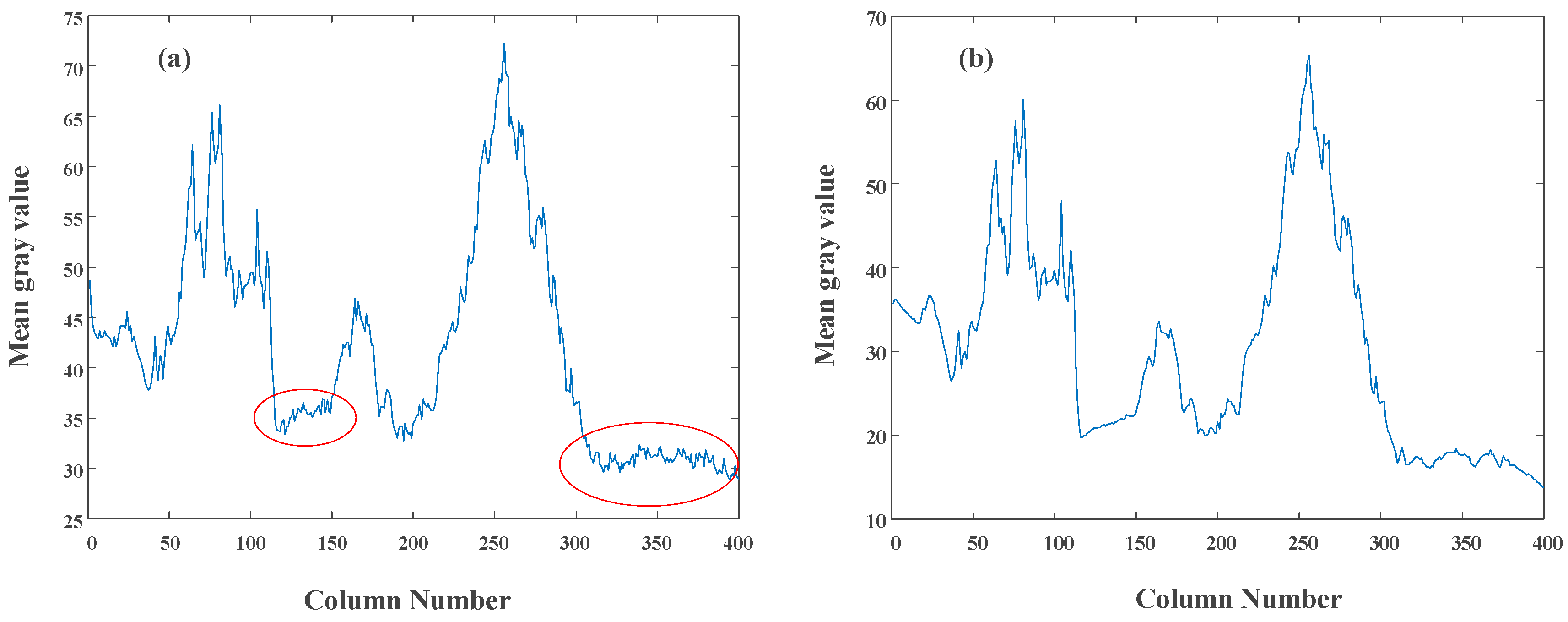

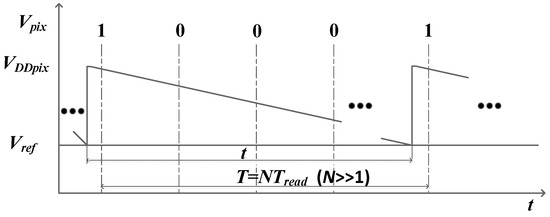

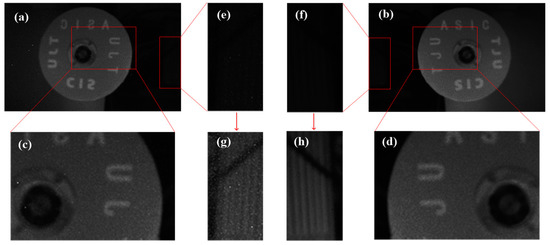

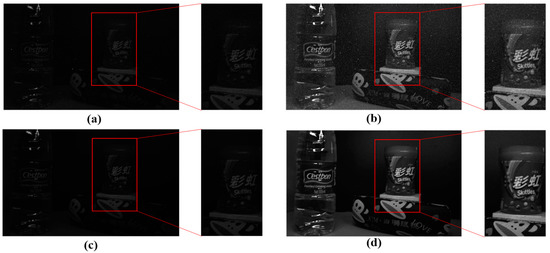

Using the data collected for Figure 12, the images recovered and suppressed under different uniform light intensities are shown in Figure 15. The results show that the non-ideal factors are suppressed more effectively in the dark portion than in the bright portion. The reason is as follows: according to the analysis of Figure 6, the synchronous readout introduces a time mismatch. In the bright portion of the image, the time mismatch and the temporal noise have a larger influence on t, which leads to a poor correction effect. In the dark portion, by contrast, the influence of the temporal noise is reduced and t is long compared with the time mismatch, so t ≈ T and the calibration algorithm performs effectively. Figure 16 describes the relationship between t and T under dark conditions. Figure 17a gives the original image recovered from the interspike time interval, and Figure 17b shows the suppressed image. To make the correction effect visible, the gray values of the dark portion are magnified in Figure 17e,f. Figure 18 shows the column-mean curves of the images in Figure 17c,d. According to Figure 18, the smoothness of the curve in the bright portion is almost unchanged after suppression because of the time mismatch.

Figure 15.

The original images are recovered by the interspike time interval under the (a) high, (c) moderate, and (e) low uniform light intensity. The suppressed results are given corresponding to (b) high, (d) moderate, and (f) low light intensity, respectively.

Figure 16.

The influence of time mismatch under dark conditions.

Figure 17.

The original image recovered from the interspike time interval is shown in (a), and (b) gives the suppressed results. The gray values of the dark portion in (e) and (f) are magnified four times for display in (g) and (h).

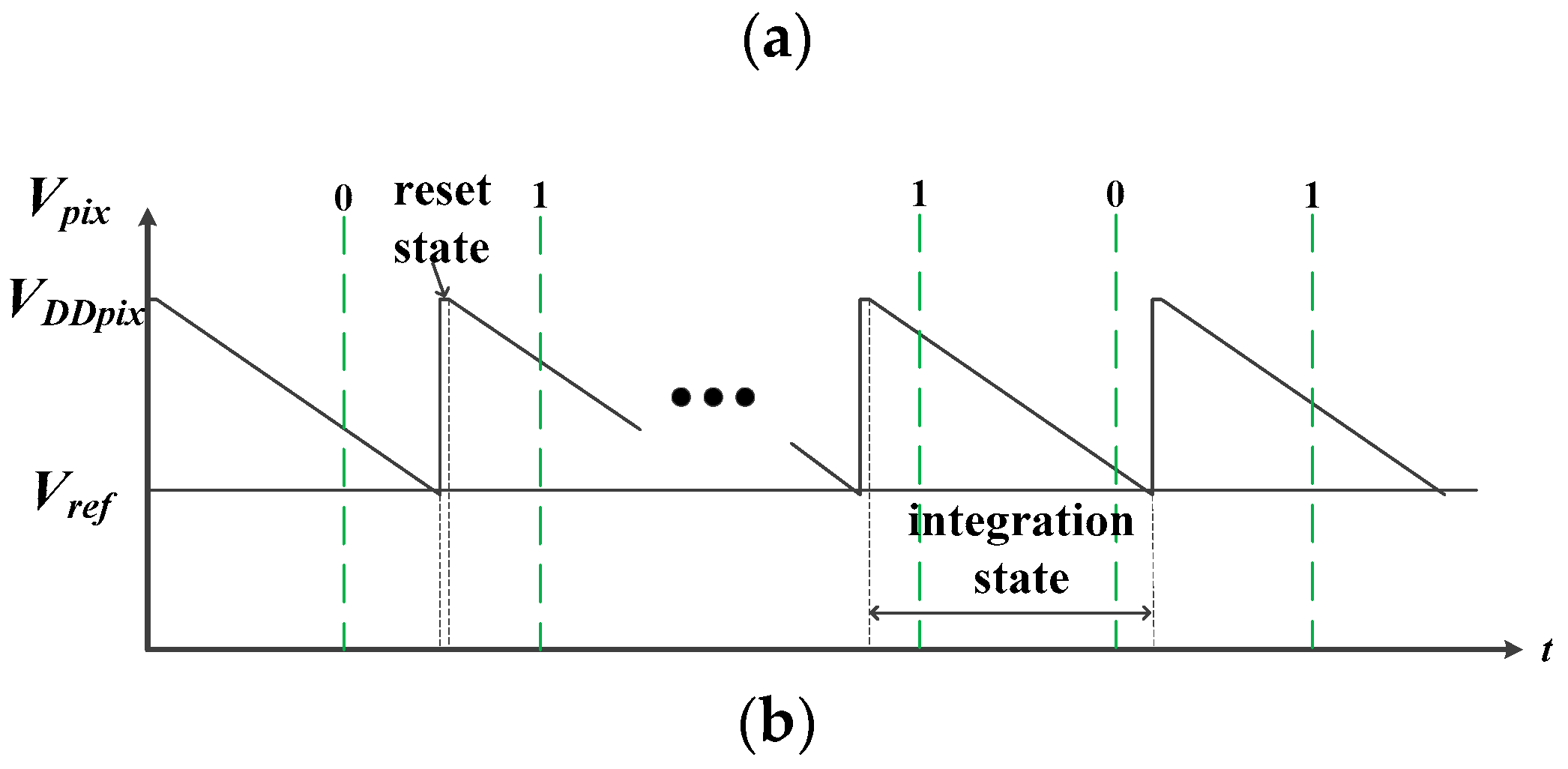

Figure 18.

The comparison of column-mean curves of the test images in Figure 17. (c) Original curve and (d) suppressed curve.

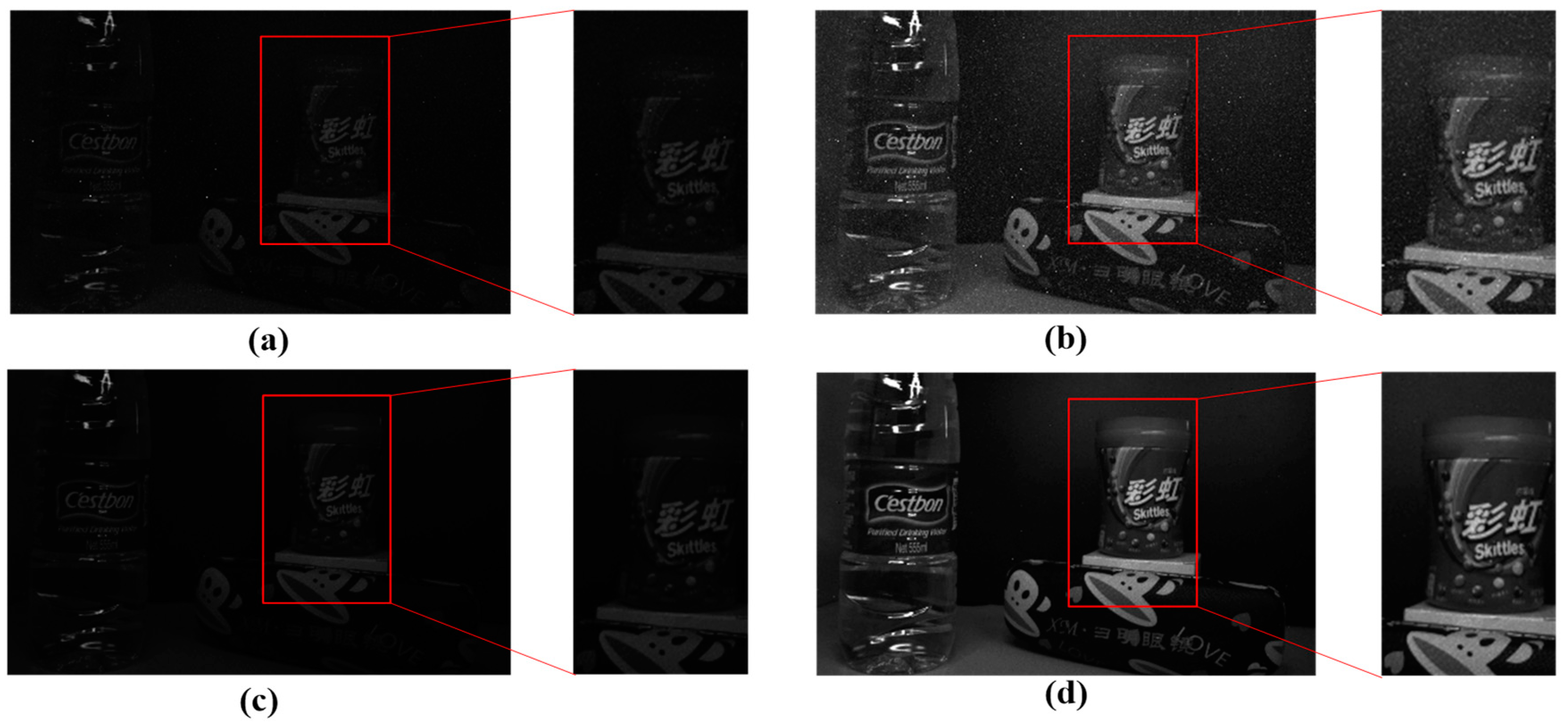

To verify the influence of the time mismatch resulting from the synchronous readout, the image shown in Figure 19 is captured under dark conditions. To make the image visible, its gray values are magnified as well. Figure 20 shows the column-mean curves of the images in Figure 19b,d. The original curve fluctuates in the regions marked with ellipses, while the suppressed curve is smooth. The results illustrate the suppression of non-ideal factors under dark conditions.

Figure 19.

The image restored from the interspike time interval under dark conditions is shown in (a), and (c) gives the result suppressed by the calibration algorithm. (b) and (d) are the enhanced results corresponding to (a) and (c), respectively, with the gray values of the whole image magnified four times for display.

5. Conclusions

In this paper, the non-ideal factors of the spike-based pixel structure and their influences in the time domain have been analyzed, and different correction coefficients have been proposed to suppress their effects. To verify the analysis and the calibration algorithm, a system based on this 250 (V) × 400 (H) pixel imager has been built. Experimental results from both uniform light and scene tests show that the proposed calibration algorithm effectively suppresses the non-uniformity when the image is recovered from the spike frequency. Recovering the image from the interspike time interval is desirable in high-speed applications; however, the correction results for scene images show that the shorter the discharging time, the less effective the calibration algorithm, because of the time mismatch and temporal noise.

Further research will address PRNU elimination in the spike-based image sensor by hardware, and photodiode optimization to reduce the effect of the dark current.

Author Contributions

K.N., Z.G. and Y.W. conceived the ideas and innovations; Y.W., K.N. and Z.G. performed the design, simulations and test; J.X. and J.G. provided supervision and guidance in this work; Y.W. wrote the paper.

Funding

This work is supported by the National Natural Science Foundation of China (61434004, 61604107), Tianjin artificial intelligence science and technology project (17ZXRGGX00040), and Tianjin Research Program of Application Foundation and Advanced Technology (17JCYBJC16000).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Noda, A.; Hirano, M.; Yamakawa, Y.; Ishikawa, M. A networked high-speed vision system for vehicle tracking. In Proceedings of the IEEE Sensors Applications Symposium, Queenstown, New Zealand, 18–20 February 2014; pp. 343–348.

2. Chae, Y.; Cheon, J.; Lim, S.; Kwon, M.; Yoo, K.; Jung, W.; Lee, D.; Ham, S.; Han, G. A 2.1 M Pixels, 120 Frame/s CMOS image sensor with column-parallel Δ∑ADC architecture. IEEE J. Solid-State Circuits 2011, 46, 236–247.

3. Toyama, T.; Mishina, K.; Tsuchiya, H.; Ichikawa, T.; Iwaki, H.; Gendai, Y.; Murakami, H.; Takamiya, K.; Shiroshita, H.; Muramatsu, Y.; et al. A 17.7 Mpixel 120 fps CMOS image sensor with 34.8 Gb/s. In Proceedings of the IEEE International Solid-State Circuits Conference Digest of Technical Papers, San Francisco, CA, USA, 20–24 February 2011; pp. 420–421.

4. Wakabayashi, H.; Yamaguchi, K.; Okano, M.; Kuramochi, S.; Kumagai, O.; Sakane, S.; Ito, M.; Hatano, M.; Kikuchi, M.; Yamagata, Y.; et al. A 1/2.3-inch 10.3 Mpixel 50 frame/s Back-Illuminated CMOS image sensor. In Proceedings of the IEEE International Solid-State Circuits Conference Digest of Technical Papers, San Francisco, CA, USA, 7–11 February 2010; pp. 410–411.

5. Etoh, T.G.; Poggemann, D.; Kreider, G.; Mutoh, H.; Theuwissen, A.; Ruckelshausen, A.; Kondo, Y.; Maruno, H.; Takubo, K.; Soya, H.; et al. An image sensor which captures 100 consecutive frames at 1 000 000 frames/s. IEEE Trans. Electron. Devices 2003, 50, 144–151.

6. Etoh, T.G.; Nguyen, D.H.; Dao, S.V.T.; Vo, C.L.; Tanaka, M.; Takehara, K.; Okinaka, T.; Kuijk, H.V.; Klassens, W.; Bosiers, J.; et al. A 16 Mfps 165 kpixel backside-illuminated CCD. In Proceedings of the IEEE International Solid-State Circuits Conference Digest of Technical Papers, San Francisco, CA, USA, 20–24 February 2011; pp. 406–407.

7. Mallik, U.; Clap, M.; Choi, E.; Cauwenberghs, G.; Etienne-Cummings, R. Temporal change threshold detection imager. In Proceedings of the IEEE International Solid-State Circuits Conference Digest of Technical Papers, San Francisco, CA, USA, 10 February 2005; pp. 362–603.

8. Moeys, D.P.; Corradi, F.; Li, C.; Bamford, S.A.; Longinotti, L.; Voigt, F.F.; Delbruck, T. A Sensitive Dynamic and Active Pixel Vision Sensor for Color or Neural Imaging Applications. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 1–14.

9. Culurciello, E.; Etienne-Cummings, R.; Boahen, K.A. A biomorphic digital image sensor. IEEE J. Solid-State Circuits 2003, 38, 281–294.

10. Posch, C.; Matolin, D.; Wohlgenannt, R.; Maier, T.; Litzenberger, M. A Microbolometer Asynchronous Dynamic Vision Sensor for LWIR. IEEE Sens. J. 2009, 9, 654–664.

11. Lenero-Bardallo, J.A.; Serrano-Gotarredona, T.; Linares-Barranco, B. A 3.6 μs Latency Asynchronous Frame-Free Event-Driven Dynamic-Vision-Sensor. IEEE J. Solid-State Circuits 2011, 46, 1443–1455.

12. Brandli, C.; Berner, R.; Yang, M.; Liu, S.C.; Delbruck, T. A 240 × 180 130 dB 3 µs Latency Global Shutter Spatiotemporal Vision Sensor. IEEE J. Solid-State Circuits 2014, 49, 2333–2341.

13. Bermak, A. VLSI implementation of a neuromorphic spiking pixel and investigation of various focal-plane excitation schemes. Int. J. Rob. Autom. 2004, 19, 197–205.

14. Matolin, D.; Posch, C.; Wohlgenannt, R. True correlated double sampling and comparator design for time-based image sensors. In Proceedings of the IEEE International Symposium on Circuits and Systems, Taipei, Taiwan, 24–27 May 2009; pp. 1269–1272.

15. Kim, D.; Bae, J.; Song, M. A high speed CMOS image sensor with a novel digital correlated double sampling and a differential difference amplifier. Sensors 2015, 15, 5081–5095.

16. Razavi, B. Chapter 7. Noise. In Design of Analog CMOS Integrated Circuits; McGraw-Hill: New York, NY, USA, 2001.

17. Razavi, B. Chapter 13. Nonlinearity and Mismatch. In Design of Analog CMOS Integrated Circuits; McGraw-Hill: New York, NY, USA, 2001.

18. Xu, J.; Yang, Z.; Gao, Z.; Zheng, W.; Ma, J. A method of biomimetic visual perception and image reconstruction based on pulse sequence of events. IEEE Sens. J. 2018, in press.

19. Liu, Z.; Xu, J.; Wang, X.; Nie, K.; Jin, W. A Fixed-Pattern Noise Correction Method Based on Gray Value Compensation for TDI CMOS Image Sensor. Sensors 2015, 15, 23496–23513.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).