1. Introduction

The ability to monitor subcutaneous blood flow in living tissues has been an area of intensive biomedical research for decades [1,2,3]. Until recently, laser Doppler and laser speckle imaging were the only viable non-contact techniques for mapping capillary blood flow [4]. Imaging photoplethysmography (iPPG) has since been used to demonstrate the feasibility of remote blood perfusion imaging as an inexpensive alternative method where tissue surface is illuminated by ambient [5] or artificial light [6,7,8], and modulated backscattered light is captured by an image sensor, typically a digital camera. However, the extracted iPPG waveforms have been found to be sensitive to optical distortions, in particular body motion, which is able to instantly change the amount of backscattered and stray light hitting the camera sensor [9,10]. Pioneering work on iPPG systems often still operates under the condition of a motionless subject [11,12] or at least very limited natural motion [13] during video recording, which tempers its acceptance and wide uptake in real-time clinical applications.

The main disadvantage of motion-uncompensated perfusion mapping is the loss of spatial resolution caused by the need to average over a relatively large block of pixels (varying with the application and the severity of motion) in order to overcome a poor signal-to-noise ratio (SNR). Focusing on the same skin area during the whole cardiac cycle is paramount in obtaining a high-resolution perfusion image of a rich capillary bed in body areas such as the face, palms, and fingertips, as otherwise neighboring regions would overlap in a single map and create undesirable blurring. Therefore, motion compensation becomes a crucial pre-processing step.

Progress in understanding subject motion and its compensation has been reported by applying a chrominance-based approach to color camera video frames [14,15]. Independent and principal component analyses (ICA and PCA) have been utilized to retrieve a motion-robust signal by returning a linear combination of the three color channels, assuming that the unknown cardiac-related pulsatile signal is a priori periodic, while the motion-related distortion is non-periodic [13,16]. A simple yet effective method of motion suppression is through object tracking by image registration [17], which has shown its usefulness as a pre-processing stage before more advanced signal computation. Image registration is the process of aligning two or more images (usually called a template and targets) using a mathematical transformation in such a way that mutually registered images include overlapping scenes of the same features. Typical applications include remote environmental monitoring, motion stabilization in video cameras, multi-modal imaging of internal organs and tumors, and quality control on production lines. The rationale for utilizing image registration is to eliminate, or minimize, in-image motion of the tissue under examination, in advance of extracting PPG signals and constructing perfusion maps. However, the application of optical image stabilization by frame registration, and its impact on iPPG signal quality, has rarely been investigated systematically in the peer-reviewed literature.

One motivation for this study, amongst others, is the lack of a standardized framework for assessing motion compensation schemes due to the variability of parameters, such as optical components selection, sensor resolution, distance to the target surface, field of view and an object’s motion amplitude, to name a few. Contemporary studies usually focus on discussing only some of these aspects, while each of them presents a unique challenge, and contribution, to the recovered iPPG waveform and derived vital signs. Therefore, the influence of each of these factors on the overall iPPG signal quality is difficult to quantify. Moreover, the fact that the iPPG peak–peak amplitude contributes as little as 0.6% of the total extracted signal [18] minimizes the ability to identify whether registered noise is caused by signal processing artifacts, variation in light uniformity, or the object’s motion vector with respect to a light source. Typical utilization of adaptive filters to isolate these noise components in the frequency domain also becomes impossible when the acquired motion is in-band with the cardiac activity.

The aim of this study is to understand how various parameters influence iPPG signal quality, starting from an object that mimics the shape of a human subject but does not exhibit any time-varying pulsatile fluctuations associated with cardiac and respiratory functions. During the first stage, a high accuracy replica of a human palm is cast, and various noise sources are measured to establish a signal baseline when the object is static. In the second stage, low amplitude motion is modeled by exposing the prosthetic palm to a controlled displacement w.r.t. the optical system, by placing the cast on a movable platform. Four image registration algorithms are then applied to the collected video sets to assess their contribution to motion suppression in the extracted spatially averaged signals. The final stage is dedicated to carrying over the knowledge obtained in the previous steps and confirming preliminary findings with controlled live tissue experiments. Finally, a conclusion is drawn on the systematic approach and on the suitability of optical image registration as a pre-processing step in iPPG waveform extraction.

2. Materials and Methods

2.1. Palm Mold Preparation

In order to compare various approaches to optical motion compensation, there needs to be a framework invariant to changes other than motion-induced ones during the experiment. It is anticipated that, if live skin tissue is used for image recording and the simulation of relative motion, it would be hard to identify the exact cause and to justify whether any changes in the morphology and quality of the extracted iPPG signal are to be attributed to the natural fluctuation in the vital signs over a short period, to the signal noise due to the variations in diffused backscattered and specular light, or to the quality of the motion registration algorithms.

Therefore, a new approach is proposed by creating a human palm model and replicating its physical attributes, such as its shape, curvature, protruding superficial blood vessels, and features such as wrinkles and birthmarks. Since the artificial model does not exhibit any cardiac pulse-related light backscattering and reflection, the effects of motion compensation on the extracted signal can be more closely evaluated.

The palm model was produced by lifecasting, where a three-dimensional copy was created replicating very small details including wrinkles, fingerprints, and pores with a high level of detail (Figure 1). The lifecasting process was approved by the Ethics Committee at Loughborough University, UK, and a participating subject signed a consent form prior to the procedure.

There are variations in the lifecasting process performed, depending on the body part being replicated, the level of detail required, and the reusability of the mold. The outline of the process used here is described below:

Model preparation: A thin layer of hypoallergenic release agent was applied to an untreated skin surface to facilitate palm release and minimize adhesion to skin and hair.

Mold application: To achieve a high level of detail, a mixture of non-toxic hypoallergenic alginate (Polycraft chromatic alginate, MB Fibreglass, UK) and water was poured into a tall plastic container followed by the slow insertion of the palm to avoid trapping air bubbles.

Casting: High strength and tear resistant silicone was used as a casting material (Polycraft RTV condensation cure silicone rubber, MB Fibreglass, UK). A pigment (Polycraft dark flesh silicone pigment, MB Fibreglass, UK) was added to mimic Groups II and III of the skin classification system developed by Fitzpatrick [19]. The temperature was controlled to 20–24 °C to avoid premature setting.

Curing: The mold was left curing for a period of 48 h at room temperature of 25–27 °C. Small trapped air bubbles resulted in visible surface imperfections, but the measured diameter of such pores was less than 0.3 mm.

2.2. Image Registration Model

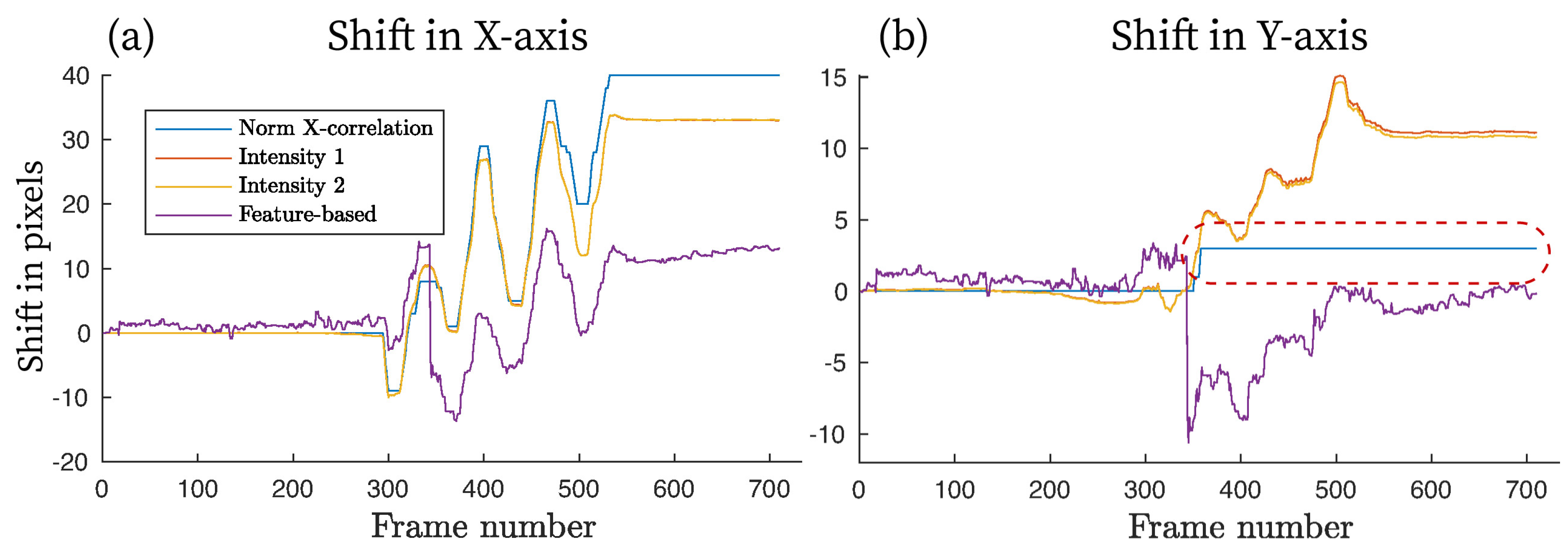

The influence of motion on iPPG signal extraction is best illustrated by Figure 2a. When an object is subjected to a motion vector, its relative position within a captured camera frame also changes. If a selected region of interest (ROI), used to spatially average pixel values within its boundaries, is expected to be at one location in Frame A, then this ROI drifts to occupy a different location in Frame B (see Figure 2a). This effect has two implications: (1) each camera sensor pixel focuses on a different tissue area during the object’s motion, resulting in iPPG observation inconsistency; (2) the underlying structure of skin tissue is expected to be spatially heterogeneous, so the intensity of the backscattered light and the morphology of the iPPG signal vary as the object moves w.r.t. the sensor, resulting in abrupt signal noise. Therefore, there is a need for tracking to focus on the same tissue area when extracting iPPG signals from the captured frames.

Optical image registration is often utilized to accurately work out frame-to-frame drift and refocus the ROI so as to consistently overlay the same tissue area. Registration methods are typically divided into feature-based and intensity-based groups. The former group aims to establish a relationship between some distinct pixels (features) in the images, such as corners, lines, or color differences, while the latter group is based on finding and comparing intensity patterns using various correlation metrics. The complexity of the registration process and the choice of the appropriate method depend largely on the type of geometric deformation present in the target images, its amplitude and frequency, as well as the number of resolution levels involved. A combination of geometric transformation functions is then applied to these feature or intensity patterns, pair-wise, in order to establish a motion vector and refocus the ROI (Figure 2b).

2.2.1. Feature-Based Registration

Feature-based registration relies on the fact that images tend to have distinctive elements (features) associated with a pixel cluster that is different to its immediate neighbors in color or intensity. High quality features allow localization of the correspondences within images regardless of any change in the illumination level, view point, or partial occlusion. Two fundamental building blocks required for a high accuracy image registration include a feature detector and a feature descriptor.

Feature detection is a step responsible for identifying local corners, sharp edges, and blobs by utilizing intensity variation and gradient approaches. Selected features should be distinctive and exhibit a significantly different pixel-value gradient compared to other neighboring pixels (Figure 2b). The corresponding features should also demonstrate a uniquely assigned location and remain locally invariant, which ensures accurate registration even if the viewing angle has changed.

A feature descriptor is a compact vector representation of a particular local neighborhood of pixels. This step establishes an accurate relationship between the target and reference images which remains stable in the presence of noise, image degradation, and changes in scale or orientation, while properly discriminating among other feature pairs. The choice of a descriptor is likely to be application-specific. Images containing high amounts of distortion benefit from computationally intensive local gradient-based descriptors, such as KAZE [20] and SURF (Speeded Up Robust Features) [21]. Binary descriptors like FREAK (Fast Retina Keypoint) [22] and BRISK (Binary Robust Invariant Scalable Keypoints) [23], which rely on pairs of local intensity differences encoded into a binary vector, are generally faster but less accurate than gradient-based descriptors.

To address a wide range of potential distortions due to the palm motion, the SURF feature detector and descriptor were selected (denoted as Feature-based in this paper). Palm skin surfaces, examined under visible light, do not generally exhibit many sharp corners or high contrast lines, making utilization of FREAK and FAST (Features from Accelerated Segment Test) [24] detectors less suitable. Therefore, the use of a feature descriptor aimed at detecting regions that differ in brightness or color compared to surrounding regions is justified at the cost of increased computational load.

2.2.2. Intensity-Based Registration

Intensity-based registration algorithms align images based on their relative intensity patterns. A specific performance measure called a similarity metric is computed and iteratively optimized, thus producing the best possible alignment results for a given set of images and geometrical transformations (see Figure 3). This optimization problem may be expressed as

$$\hat{\boldsymbol{\mu}} = \arg\min_{\boldsymbol{\mu}} \; S\big(I_R,\; T(I_T; \boldsymbol{\mu})\big), \tag{1}$$

where $T(I_T; \boldsymbol{\mu})$ is an intensity transformation, mapping the target image $I_T$ with transformation parameter vector $\boldsymbol{\mu}$, and $S$ is the similarity metric between the reference image $I_R$ and the transformed target, and is used with the goal of finding $\hat{\boldsymbol{\mu}}$, which minimizes the difference between the two. The most common similarity metrics include the sum of absolute differences [25] or the mean squared error. A simple implementation of Equation (1) to an image sequence $\{I_k\}$ compares a translation $I_k(\mathbf{x} + \mathbf{d})$ of the frame $I_k$, with displacement test vector $\mathbf{d}$ pixels, to the preceding frame $I_{k-1}$ using a sum of absolute differences (SAD) similarity approach across the ROI of frame $I_{k-1}$. One obtains a “best fit” displacement vector as the translation that minimizes the SAD metric, giving an estimate of the motion vector as

$$\hat{\mathbf{d}}_k = \arg\min_{\mathbf{d}} \sum_{\mathbf{x} \in \mathrm{ROI}} \big| I_k(\mathbf{x} + \mathbf{d}) - I_{k-1}(\mathbf{x}) \big|. \tag{2}$$

The minimization here is performed over some specified pixel range appropriate to the type of motion involved. This particular form of Equation (1) is commonly seen, for example, in the compression of MPEG data prior to video transmission. In the general case, the optimizer block governs how the search for an ultimate image alignment should be conducted. The optimization problem is often tackled by an iterative strategy to achieve a minimal error between the registered images. Optimizers available in the literature include gradient descent [26,27], advanced adaptive stochastic gradient descent [28], simultaneous perturbation [29,30], Quasi-Newton [27,31], and nonlinear conjugate gradient [32,33] methods.
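As a minimal sketch of the SAD translation search described in this section, the following pure-Python function tests every integer displacement within a small search range and keeps the one with the lowest sum of absolute differences over the ROI. The function name `sad_motion_vector`, the `(top, left, height, width)` ROI convention, and the exhaustive search strategy are illustrative assumptions, not the implementation used in this study.

```python
def sad_motion_vector(prev, curr, roi, search=2):
    """Estimate an integer-pixel translation by exhaustive SAD search.

    prev, curr : 2-D lists (rows of grey levels) of identical size.
    roi        : (top, left, height, width) of the ROI in `prev` (assumed layout).
    search     : largest displacement, in pixels, tested along each axis.
    Returns ((dy, dx), sad) for the displacement minimizing
    sum |curr[y + dy][x + dx] - prev[y][x]| over the ROI.
    """
    top, left, h, w = roi
    rows, cols = len(prev), len(prev[0])
    best, best_sad = (0, 0), float("inf")
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidates that would push the shifted ROI outside the frame.
            if (top + dy < 0 or left + dx < 0
                    or top + h + dy > rows or left + w + dx > cols):
                continue
            sad = 0
            for y in range(top, top + h):
                for x in range(left, left + w):
                    sad += abs(curr[y + dy][x + dx] - prev[y][x])
            if sad < best_sad:
                best, best_sad = (dy, dx), sad
    return best, best_sad
```

The cost of this brute-force search grows with the square of the search range, which is one reason iterative optimizers and multi-resolution schemes are usually preferred for larger or sub-pixel motion.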

The biggest drawback encountered in many existing metrics is the effect of photometric distortions, such as changes in brightness and contrast [34]. Assumptions that pixel values are independent of each other and that the brightness stays constant, or exhibits spatially stationary changes, are only valid in specific cases, so the selected technique should be able to account for illumination changes. Two intensity-based registration methods are evaluated in this paper: one based on a mean square error metric and a regular step gradient descent optimizer (denoted by Intensity 1), together with a more advanced one based on the enhanced correlation coefficient (ECC) [35] (denoted by Intensity 2).

2.2.3. Frame Registration

Motion was estimated between each pair of neighboring frames, i.e., frames 1 and 2, 2 and 3, 3 and 4, etc. This method is called frame-by-frame in this paper. The offset of any given frame w.r.t. the first image is the cumulative sum of the preceding frame-to-frame offsets.
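The cumulative-sum step can be sketched as follows, assuming each pairwise estimate is stored as a `(dy, dx)` tuple; the function name `cumulative_offsets` and this data layout are hypothetical.

```python
from itertools import accumulate


def cumulative_offsets(pairwise):
    """Convert frame-to-frame offsets into offsets relative to the first frame.

    pairwise : list of (dy, dx) tuples, one per pair of neighboring frames.
    Returns one (dy, dx) per frame, starting with (0, 0) for the first frame.
    """
    ys = accumulate((dy for dy, _ in pairwise), initial=0)
    xs = accumulate((dx for _, dx in pairwise), initial=0)
    return list(zip(ys, xs))
```

Because each pairwise estimate carries its own error, a cumulative sum also accumulates those errors, so the offset of late frames is generally less certain than that of early ones.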

A normalized cross-correlation (denoted by Norm X-correlation or NCC) was also used as a simple and computationally inexpensive registration method, of the kind commonly employed by researchers, to serve as a comparison for the more advanced motion compensation schemes. Intensity- and feature-based registration was performed at multiple resolution levels, where the first coarse alignment was obtained using down-scaled versions of the input images to remove the local optima and reduce computational load. The algorithm entered the next, more detailed level once the optimizer converged to a suitable solution, down to a sub-pixel level for a more precise registration, using a linear interpolation scheme.
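For illustration, a zero-mean normalized cross-correlation score between two equally sized patches can be sketched as below. The function name `ncc` and the choice to return 0.0 for a flat patch are assumptions; the sketch only computes the similarity score and omits the search over candidate displacements.

```python
import math


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equally sized 2-D patches.

    Returns a value in [-1, 1]; 1 means the patches match up to a positive
    gain and an offset in brightness.
    """
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    num = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    den = math.sqrt(sum((x - ma) ** 2 for x in fa) * sum((y - mb) ** 2 for y in fb))
    # A flat patch has no structure to correlate; report 0 (assumed convention).
    return num / den if den else 0.0
```

Subtracting the patch means and dividing by the patch energies makes the score insensitive to uniform brightness and contrast changes between frames.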

It should be noted that the image registration schemes can suffer from convergence issues, where the search for the optimal solution stops prematurely due to the presence of local optima. The usual sources of misconvergence include a step size being set at too large a value to minimize computational time, the number of resolution layers being too small to detect finer motion, the registered images not having enough overlap to establish corresponding feature pairs or intensity regions, and the algorithm not being able to detect features to accurately estimate the geometrical transformation. As a result, the incorrectly estimated pixel offset can lead to zero (no motion detected) or start oscillating (excessive motion and inability to track the object correctly).

2.3. Hardware Setup

This study consists of two parts: (a) a comparison of image registration methods using a silicone palm model and (b) an evaluation of the selected registration methods using live subjects.

Since the quality of iPPG depends on complex light-tissue interactions, the amplitude and morphology of a signal could be influenced and modulated by instability of the incident light, so the ability to control a light source in a reproducible manner is vital. The effects of variations in instrumentation equipment have not been fully evaluated in the literature, thus making iPPG system behavior less certain, and its potential applications less attractive to the practical community.

In order to create a stabilized light flux, an illuminator comprised of individual high-power LEDs was constructed based on the principles and results reported in previous work [36]. In short, three high-power light emitting diodes (LEDs) (LXML Series, Philips, Andover, MA, USA) emitting at 530 nm with a typical half-power bandwidth of 20 nm were mounted on an aluminum substrate of high thermal conductivity to divert excess heat generated by the semiconductors (see Figure 4a). When operating at full power the system was capable of delivering 470 lm of luminous flux. Current flow stability is achieved by designing a constant-current LED driver capable of varying the forward current using a 12-bit digital-analog converter (DAC) in order to achieve different levels of light intensity.

The selected LEDs were manufactured with a dome-shaped micro lens to provide a 120° field of view (FOV). Placed at about 50 cm above the surface, the illuminator could produce a fairly uniform but dispersed flux, resulting in a dimmer ROI and additional stray reflection from nearby objects and surfaces. A 25° FOV polycarbonate collimating lens (Carclo Optics, Aylesbury, UK) with 91% optical transmission was placed in front of the LED assembly to concentrate available flux on a smaller ROI. An additional 220-grit ground glass diffuser (Edmund Optics, Barrington, NJ, USA) with 75% transmission at 530 nm was placed in front of the collimating lens to diffuse light and even out non-uniformity caused by the circular lens.

A non-contact sensor comprised of an sCMOS monochrome camera (Orca Flash V2, Hamamatsu Co., Hamamatsu City, Japan) with an effective resolution of 2048 × 2048 pixels was coupled with a set of prime 50, 85, and 100 mm lenses (Planar T ZF-IR, Zeiss, Oberkochen, Germany) to provide several image magnification options and achieve higher system resolution. The camera was controlled from a workstation via a Camera Link interface and triggered by a control unit (CU) at 50 frames per second (fps). A contact PPG probe (cPPG) based on an integrated optical sensor (SFH 7050, OSRAM, Munich, Germany) sampled at 200 Hz was also placed on each subject’s finger to act as a reference point.

2.4. Experimental Protocol

The performance of image registration algorithms was evaluated on 10 subjects (aged 21–45) at the Photonics Engineering Research Group, Loughborough University, UK. These subjects belonged to Groups II and III of the Fitzpatrick skin classification system [19]. The experimental protocol was approved by the Ethics Committee at Loughborough University, UK, and all subjects signed a consent form prior to the experiment.

Each subject was asked to rest his/her palm on a support approximately 20 cm below heart level in a seated position. The support consisted of two thin and slightly oiled silicone sheets with low friction between them but with high friction between the skin surface and the sheet. The bottom sheet was placed inside a redesigned rectangular lid with 20 mm high borders around its perimeter, fixed to a table surface, so the palm was constricted to move by no more than ±15 mm in any direction (Figure 4b). Subjects were instructed to relax and move their palms freely without concentrating on the amplitude or frequency and were also asked to limit their hand supination and pronation. A contact PPG (cPPG) probe was strapped to the middle finger to act as a reference signal. The camera and lens assembly were positioned above the palm on an adjustable arm. The lens was focused and centered on the area of the highest LED illumination level verified by a real-time histogram on PC software. The image frames were captured during four separate sequences each lasting 12–15 s.

The goal of this study is to minimize small artificially induced motion similar to what a generic patient with tremors, respiratory-related movement, or involuntary muscular contraction can experience in a hospital environment during a body part scanning. Cases involving significant motion, such as in head or palm rotation or tilting, are unlikely to benefit significantly from the application of image registration, as different, previously unexposed, skin regions may be recorded by the camera.

2.5. Signal Processing

Figure 5 shows the framework utilized in this study. The video frames and the contact reference signal were processed offline using Matlab (Mathworks, USA) pipelines and algorithm implementations. Image scaling was performed on the raw frames to simulate the effects of system resolution, or an altered distance between the camera and an object, on the image registration and extracted iPPG signals.

For a fixed sensor-lens pairing, an increase in distance between an object and the optical system would result in a single pixel covering a wider area on the object’s surface, making the image less resolved and potentially causing smaller details to disappear (Figure 6a,b). The region covered by a single pixel may also be altered by changing the focal length of a lens (provided the focus is maintained); a shorter length and a wider viewing angle could result in a larger area covered by a unit pixel.
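The dependence of the per-pixel footprint on distance and focal length can be illustrated with the thin-lens magnification m = f / (d − f). The sketch below is an idealized approximation; the function name `pixel_footprint_mm` and the numerical values in the usage note (pixel pitch and working distance) are hypothetical and are not taken from this study.

```python
def pixel_footprint_mm(pixel_pitch_um, focal_length_mm, distance_mm):
    """Side length, in mm, of the object area imaged by one pixel.

    Assumes an ideal thin lens focused on an object at `distance_mm`
    (measured from the lens), so the magnification is m = f / (d - f).
    """
    if distance_mm <= focal_length_mm:
        raise ValueError("object must be farther away than the focal length")
    magnification = focal_length_mm / (distance_mm - focal_length_mm)
    return (pixel_pitch_um / 1000.0) / magnification
```

With an assumed 6.5 µm pixel pitch and a 550 mm object distance, a 50 mm lens gives a footprint of about 0.065 mm per pixel, while a 100 mm lens at the same distance gives a smaller footprint; moving the object farther away enlarges it.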

The resolution is normally defined as an ability of a given imaging system to reproduce individual object details (Figure 6). An increase in the pixel count, usually referred to as a higher pixel resolution, causes each sensor element to cover a smaller object area, provided all other factors remain constant. Consequently, finer palm surface details are easier to resolve and capture by individual pixels as the image resolution increases (Figure 6b,c). Our hypothesis here is that a higher system resolution, and a more detailed image, should facilitate better frame registration and track smaller motion distortions by providing better resolved anchor points for an algorithm to use.

To simulate a reduced system resolution, the frames were captured at the camera’s maximum allowed pixel resolution (2048 × 2048 pixels) with the lens set at a distance to cover the whole palm, resulting in 74 pixels/cm. The image was then progressively rescaled offline from the original size down to smaller sizes using linear interpolation methods.
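A minimal sketch of this rescaling step is given below, assuming plain bilinear interpolation without any anti-aliasing pre-filter; the exact resizing routine and its settings are not specified here, so `resize_bilinear` and `scaled_density` are hypothetical helpers.

```python
def scaled_density(full_density_px_per_cm, scale):
    """System resolution (pixels/cm) after rescaling the frame by `scale`."""
    return full_density_px_per_cm * scale


def resize_bilinear(img, scale):
    """Rescale a 2-D list of grey levels by `scale` using bilinear interpolation."""
    rows, cols = len(img), len(img[0])
    out_rows = max(1, round(rows * scale))
    out_cols = max(1, round(cols * scale))
    out = []
    for i in range(out_rows):
        # Map the centre of the output pixel back into source coordinates.
        y = min(max((i + 0.5) / scale - 0.5, 0.0), rows - 1.0)
        y0 = int(y)
        y1 = min(y0 + 1, rows - 1)
        fy = y - y0
        row = []
        for j in range(out_cols):
            x = min(max((j + 0.5) / scale - 0.5, 0.0), cols - 1.0)
            x0 = int(x)
            x1 = min(x0 + 1, cols - 1)
            fx = x - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out
```

For example, `scaled_density(74, 0.2)` gives 14.8 pixels/cm, the lowest resolution considered in the Discussion.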

2.5.1. Signal Formation

All pixels within the selected ROI were spatially averaged, once successfully registered. This procedure was repeated for all frames in the data set resulting in a time-varying signal. The video sequences obtained by filming the silicone palm do not exhibit any periodic cardiac-induced variations, so its zero-mean temporal signal should ideally be zero. The video series capturing live tissue, however, include a slowly-varying quasi-DC signal, while the cardiac cycles could vary in their amplitudes. The effects of applying optional normalization methods, such as division by its lowpass-filtered component, polynomial fit, or normalization with a Gaussian distribution are discussed in Section 3.2.1.
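The signal formation step can be sketched as follows. The ROI layout `(top, left, height, width)`, the use of a centered moving average as the low-pass estimate of the quasi-DC component, and all function names are assumptions made for illustration; pixel intensities are assumed to be strictly positive so that the division is defined.

```python
def roi_mean(frame, roi):
    """Spatial average of all pixels inside roi = (top, left, height, width)."""
    top, left, h, w = roi
    total = sum(sum(frame[y][left:left + w]) for y in range(top, top + h))
    return total / (h * w)


def extract_signal(frames, rois):
    """One spatially averaged sample per frame, using that frame's registered ROI."""
    return [roi_mean(frame, roi) for frame, roi in zip(frames, rois)]


def moving_average(signal, window):
    """Centered moving average; the window shrinks near the edges."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        segment = signal[max(0, i - half):i + half + 1]
        out.append(sum(segment) / len(segment))
    return out


def normalize_by_dc(signal, window):
    """Divide by the low-pass (quasi-DC) component and remove the unit offset."""
    dc = moving_average(signal, window)
    return [s / d - 1.0 for s, d in zip(signal, dc)]
```

Passing a different ROI for each frame is what ties this step to registration: the ROI follows the estimated motion so that the same tissue area is averaged throughout the sequence.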

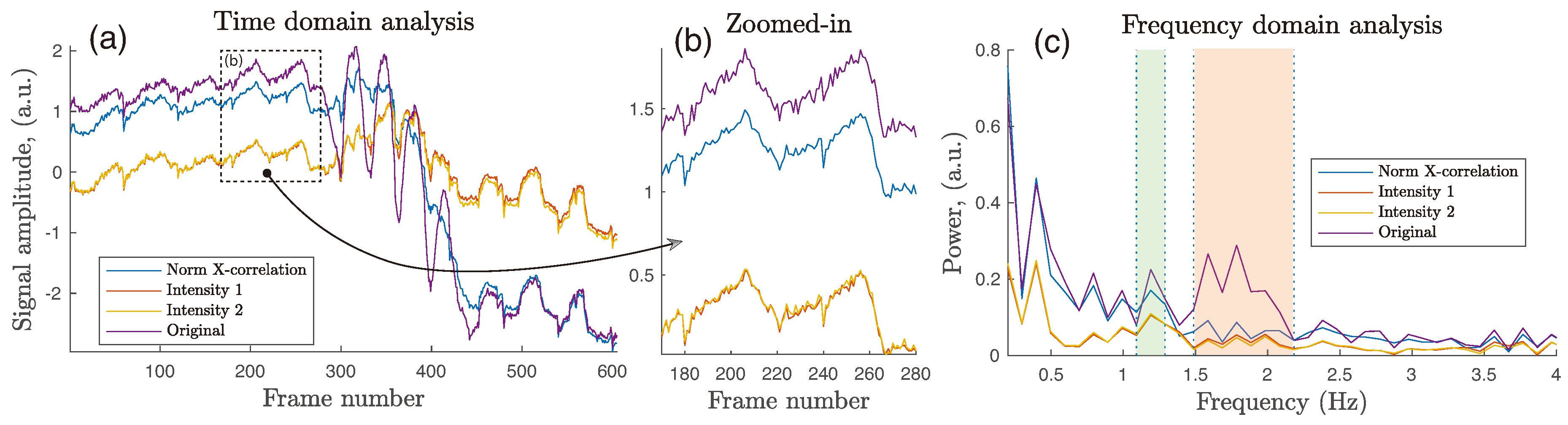

2.5.2. PPG Analysis

To evaluate the effect of motion compensation on signal composition, the extracted signal was analyzed in the time and frequency domains from five data sets. Separate methods were applied to the silicone palm and live tissue samples. The absolute noise floor was established using a signal derived from the silicone palm with no induced motion. In ideal conditions, the spatially averaged signal should be DC-stable, with any deviation indicating the presence of noise in either the optical imaging or the light source.

For live tissue signals, the evaluation criterion included noise component analysis in the frequency domain, adapted from [37]. Ideally, a PPG signal has a clear and distinct peak with sharp roll-offs around its fundamental pulse frequency, followed by a small number of harmonic components. These frequency components would be attributed to a clean PPG signal. The signal-to-noise ratio (SNR) was calculated to act as a quality metric, where the signal component was estimated from the spectral energy within ±0.1 Hz of the fundamental heart rate frequency identified and located by the reference contact (cPPG) signal, and the noise was estimated as the remaining spectral energy in the 0.5–7 Hz range.
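A minimal sketch of this SNR estimate is shown below, using a direct discrete Fourier transform so that it depends only on the standard library. Expressing the ratio in decibels, the function names, and the handling of empty bands are assumptions; `f0` stands for the fundamental frequency located from the reference signal.

```python
import cmath
import math


def power_spectrum(signal, fs):
    """One-sided power spectrum of the mean-removed signal via a direct DFT."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]
    freqs, power = [], []
    for k in range(n // 2 + 1):
        acc = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(acc) ** 2)
    return freqs, power


def snr_db(signal, fs, f0, half_width=0.1, band=(0.5, 7.0)):
    """Energy within f0 +/- half_width over the remaining energy in `band`, in dB."""
    freqs, power = power_spectrum(signal, fs)
    sig = noise = 0.0
    for f, p in zip(freqs, power):
        if not band[0] <= f <= band[1]:
            continue
        if abs(f - f0) <= half_width:
            sig += p
        else:
            noise += p
    if noise == 0.0:
        return float("inf")
    if sig == 0.0:
        return float("-inf")
    return 10.0 * math.log10(sig / noise)
```

For a 10 s recording at 50 fps the frequency resolution is 0.1 Hz, so the ±0.1 Hz signal window spans roughly three spectral bins around the fundamental.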

A heart rate (HR) was extracted in the frequency domain by obtaining a fast Fourier transform (FFT) of a normalized PPG signal. The position of the frequency component with the highest amplitude was located, which corresponded to the heart rate with the conversion factor of 1 Hz = 60 BPM. The cPPG signal was assumed to be less susceptible to motion artifacts and less noisy due to its firm contact with the fingertip, so it was selected as a ground truth signal for the current experiments.
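The peak-picking step can be sketched as below. A direct DFT stands in for the FFT to keep the example free of external libraries, and restricting the search to the 0.5–7 Hz band is an assumption borrowed from the SNR definition; the function name `heart_rate_bpm` is hypothetical.

```python
import cmath
import math


def heart_rate_bpm(signal, fs, band=(0.5, 7.0)):
    """Heart rate from the strongest spectral component inside `band`.

    signal : normalized PPG samples; fs : sampling rate in Hz.
    Returns the peak frequency converted with 1 Hz = 60 BPM.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]
    best_f, best_amp = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        f = k * fs / n
        if not band[0] <= f <= band[1]:
            continue
        amp = abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        if amp > best_amp:
            best_f, best_amp = f, amp
    return 60.0 * best_f
```

The estimate is quantized to the spectral bin spacing fs / n, so a 10 s window resolves the heart rate in steps of 6 BPM unless the spectrum is interpolated or zero-padded.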

4. Discussion

In this study, the initial noise floor assessment was performed on a silicone cast of a human palm. The inclusion of this stage can be avoided by applying image registration techniques directly to a live tissue dataset. However, the initial algorithm and optical setup assessment shows clear benefits. Firstly, this approach helps to establish a performance baseline for each image registration algorithm, since the backscattered light and stray reflections do not contain intensity fluctuations related to blood volume variations during a cardiac or respiratory cycle. An iPPG signal extracted from live tissue, in contrast to a static cast, could contain a complex combination of a low-amplitude oscillating component due to the propagating cardiac pulse-wave, some noise inherited from the instability and transients in the optical system, as well as any noise residue left from or introduced by image stabilization. Our previous research concluded that even a very stable tissue sample can result in an iPPG waveform effectively buried in wide-spectrum noise. In a tissue sample subjected to moderate motion, the iPPG signal quality is a priori unknown, and one can only guess whether poor quality stems from an initially poor and noisy iPPG signal or from a badly tuned image registration process. Secondly, although live skin opto-physiological properties are not maintained when targeting a silicone mold, the prosthesis inherited the shape, surface roughness, and inhomogeneity of real skin. Therefore, the effects of palm motion w.r.t. the camera system can be modeled effectively, regardless of the dye pigment or the wavelength of the illuminator used.

An empirically established system resolution of 74 pixels/cm of tissue surface, projected onto the camera sensor, is suggested as being sufficient to detect and rectify small amplitude motion, similar to that which might be experienced in a hospital environment by a generic patient with tremor, respiratory-related movement, or involuntary muscular contraction, during a body part scanning. Decreasing the system resolution from 74 to 14.8 pixels/cm (one fifth of the full resolution) affects the extracted signals in a nonlinear fashion, with peak–peak fluctuations and pixel standard deviation rising from 0.42 to 0.85 and from 0.09 to 0.22, respectively (Table 2). Although the requirements of the optimal system resolution depend on the expected motion amplitude, the observed benefit of a higher resolution would be suitable for those applications demanding the lowest possible noise within the extracted signals, as well as those featuring finer object details, such as iPPG perfusion mapping. The gain obtained with such high resolution, however, comes at the cost of an increased computational load at the image registration stage.

The speed and accuracy of intensity-based registration depend on a number of parameters, including the number of registration levels and the choice of optimizer, the similarity metric, and the frame interpolation method. Generally, only a small number of search steps is required before reliable convergence is achieved, and additional registration enhancement can be expected as parameters are tuned further. The choice of image interpolation scheme may also influence the amount of noise, and consequently the deviation from an ideal result. The number of image registration levels should be estimated from the minimal motion amplitude and the available system resolution. Observing that sub-pixel leveling involves further interpolation, and potentially the creation of additional noise, we recommend that minimal sub-pixel registration leveling is used together with a higher system resolution optical setup, with a single camera pixel covering an area equal to (or smaller than) the average frame-to-frame object displacement.

Depending on the image size, and the complexity of object’s geometrical transformation during the course of the video sequence, the computational requirement for the whole-frame global registration may be too great for real-time motion stabilization and data analysis. Consequently, it may only be possible for registration of a smaller image segment, or region of interest (ROI), during the extraction of vital signs. The size of such an ROI plays an important role in determining the quality of motion compensation and the final extracted iPPG signal. Following an iterative search, an ROI of roughly cm, measured on the palm surface, is the smallest recommended area compatible with achieving stable and repeatable results from the motion compensation algorithm. It is important to observe that these findings were obtained on human palm surfaces with relatively high surface homogeneity, and when viewed under green light; other body areas, such as the face, might render different results due to more well-defined local features, and skin imperfections, capable of acting as control points for better frame tracking.

The ultimate goal of an iPPG system, including any signal-enhancement algorithms such as image stabilization, is to run and display results in real time, which will accelerate its acceptance and wide uptake in clinical applications. The Matlab software used here for signal analysis can carry significant overhead, depending on the underlying code and the optimization paths selected by its developers, which limits our ability to process data online. The major performance bottleneck identified is that a single frame pair is processed at a time, taking on average 2.5–3 s on a generic consumer-grade 2.6 GHz processor. For real-time or near real-time analysis the requirement is far more stringent, at around 10–30 ms per image pair depending on the frame rate. One workaround is to use parallel distributed computation, commonly found in graphics accelerators (GPUs). This approach would allow not only the processing of 30–100 image pairs per second in parallel (provided ~1 s of video frames has already been buffered), but also the assignment of multiple cores to the same frame pair to achieve a faster search for either control points or intensity regions. As similar problems are encountered in MPEG motion estimation, a number of fast optimization algorithms have been developed [42] to evaluate the matching necessary in Equation (2), and it is very likely that these can be adapted to the present context. Finally, application-specific integrated circuits (ASICs) can yield a substantial performance improvement through job-specific hardware and firmware, without the unnecessary overhead found in general-purpose solutions. In general, ASIC systems are developed only once a particular algorithm or method has been fully developed, tuned, and made ready for field deployment, but a number of MPEG ICs running SAD and other optimization algorithms have already been designed for both ASIC and FPGA platforms (see [42] and references therein).
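To make the matching step concrete, the following is a minimal sketch of an exhaustive integer-pixel block search that minimizes the sum of absolute differences (SAD), the cost named above in connection with MPEG motion estimation. It is an illustration under stated assumptions rather than the implementation used in this study: the function names, the toy frames, and the search range are hypothetical, the cost is assumed to be plain SAD rather than the exact form of Equation (2), and sub-pixel refinement is omitted.

```python
def sad(ref, cur, top, left, dy, dx, size):
    """Sum of absolute differences between a reference block and a shifted block."""
    total = 0
    for r in range(size):
        for c in range(size):
            total += abs(ref[top + r][left + c] - cur[top + dy + r][left + dx + c])
    return total


def best_shift(ref, cur, top, left, size, search):
    """Exhaustive integer-pixel search for the shift (dy, dx) that minimizes SAD."""
    rows, cols = len(cur), len(cur[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidate blocks that would fall outside the current frame.
            if not (0 <= top + dy and top + dy + size <= rows):
                continue
            if not (0 <= left + dx and left + dx + size <= cols):
                continue
            cost = sad(ref, cur, top, left, dy, dx, size)
            if best is None or cost < best[0]:
                best = (cost, dy, dx)
    return best  # (cost, dy, dx)


# Toy data (hypothetical): a synthetic 12x12 frame and a copy of it
# displaced by 2 pixels down and 1 pixel to the right.
ref_frame = [[(7 * r + 13 * c + r * c) % 31 for c in range(12)] for r in range(12)]
cur_frame = [[ref_frame[(r - 2) % 12][(c - 1) % 12] for c in range(12)]
             for r in range(12)]

cost, dy, dx = best_shift(ref_frame, cur_frame, top=4, left=4, size=4, search=3)
print(cost, dy, dx)
```

Every candidate shift is evaluated independently of the others, which is why this kind of search maps naturally onto the multi-core assignment described above. The fast algorithms cited in [42] reduce the number of candidates visited; the sketch does not attempt this.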

Signal normalization by the temporal quasi-DC value is considered an alternative to optical image registration. Such normalization mainly eliminates low-frequency distortion, but it shows little success in removing in-band distortion (Figure 14). Image registration followed by a normalization stage is therefore proposed, in order to suppress the iPPG signal noise caused by fluctuations in light uniformity, which are not compensated by frame registration.
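The normalization stage can be sketched as follows. This is a minimal illustration that assumes the quasi-DC value is estimated with a centered moving average; the window length, the frame rate, the synthetic trace, and the function names are all assumptions made for the example and are not taken from this study.

```python
import math


def moving_average(x, window):
    """Centered moving average; the window shrinks near the ends of the trace."""
    half = window // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


def normalize_by_quasi_dc(x, window):
    """Return the AC/DC trace (x - DC) / DC, with DC the slow moving average."""
    dc = moving_average(x, window)
    return [(xi - di) / di for xi, di in zip(x, dc)]


# Synthetic ROI trace (hypothetical): slow illumination drift plus a
# 1.2 Hz pulsatile component, sampled at an assumed 30 frames per second.
fs = 30
raw = [100 + 0.2 * i + 0.5 * math.sin(2 * math.pi * 1.2 * i / fs)
       for i in range(300)]
ac_dc = normalize_by_quasi_dc(raw, window=fs)
```

Because the output is a ratio, it is unchanged when the whole trace is scaled by a constant gain, which is what removes slow illumination changes. Motion-induced fluctuations that fall inside the cardiac band pass through the moving average largely untouched, consistent with the limitation noted above.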

A known restriction of this study is its limited ability to track the hand during supination or pronation. A large geometrical transformation, such as tilting, is unlikely to benefit significantly from image registration, since previously unexposed skin regions may come into the view of the imaging system. The influence of the frequency of the induced motion on the estimated object transformation has also not been evaluated in this project, and further research into this topic is advisable.

5. Conclusions

Due to the variability in optical system setups, a straightforward quantitative cross-comparison of motion compensation methods applied to iPPG signals remains difficult. The noise floor of an iPPG signal extracted from a static ROI may amount to at least 0.16% of its DC value and reach around a quarter of the available signal headroom, ultimately masking some of the desired cardiac-related fluctuations. It seems sensible for further research in this area to establish such a noise floor level using an inanimate object placed adjacent to the imaged body part, and we would encourage groups to quote such values, together with the AC/DC ratio, when reporting iPPG signal quality.
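One way such figures might be reported is sketched below. The sketch assumes that both the noise floor and the AC component are expressed as the peak-to-peak fluctuation of a trace relative to its mean (DC) value; this definition, the function name, and the sample values are assumptions for illustration. The numbers are not measured data and were chosen only to mirror the 0.16% and one-quarter figures quoted above.

```python
from statistics import mean


def fluctuation_percent_of_dc(trace):
    """Peak-to-peak fluctuation of a trace as a percentage of its mean (DC) value."""
    dc = mean(trace)
    return 100.0 * (max(trace) - min(trace)) / dc


# Hypothetical mean-intensity traces, in camera counts.
static_target = [199.84, 200.16, 200.0, 200.0]   # inanimate reference object
skin_roi = [199.36, 200.64, 200.0, 200.0]        # skin region of interest

noise_floor = fluctuation_percent_of_dc(static_target)   # noise floor, % of DC
ac_dc = fluctuation_percent_of_dc(skin_roi)              # AC/DC ratio, %
headroom_fraction = noise_floor / ac_dc                  # share of signal taken by noise

print(f"noise floor {noise_floor:.2f}% of DC, AC/DC {ac_dc:.2f}%, "
      f"noise occupies {headroom_fraction:.0%} of the signal")
```

Quoting the reference-object value alongside the AC/DC ratio lets a reader judge how much of the reported pulsatile amplitude could be explained by system noise alone.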

This study has led to a number of recommendations in this area, including for the minimum system resolution and the ROI size, against which it will be useful to benchmark future work. The results also show that frame registration reduces motion-induced in-band fluctuations, which might otherwise be mistaken for cardiac-related ones, and that it outperforms the simple method of signal normalization previously used to account for small non-cardiac oscillations. The study also finds that intensity-based methods are particularly effective in suppressing small motion of the human palm, up to ±15 mm, when illuminated by green light. Areas as small as 1.5 cm can be effectively stabilized with sub-pixel accuracy, thus enabling high-detail local PPG mapping.

Although the conclusions reached might not hold in all circumstances (for example, for a different measurement site, illumination wavelength, or motion frequency), the study suggests that integrating intensity-based frame registration into an iPPG framework can facilitate filtering of both low- and high-frequency noise, while also significantly improving the cardiac-related spectrum of extracted iPPG signals.